Deep Learning Approach for Wildland Fire Recognition Using RGB and Thermal Infrared Aerial Image

Abstract

1. Introduction

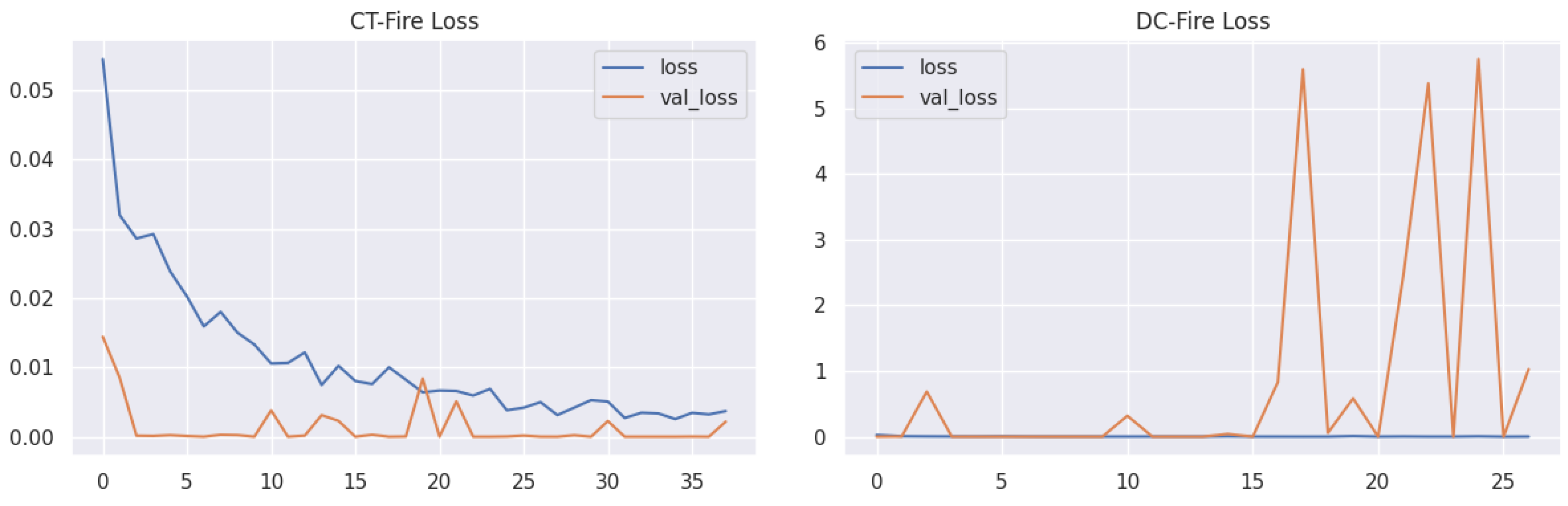

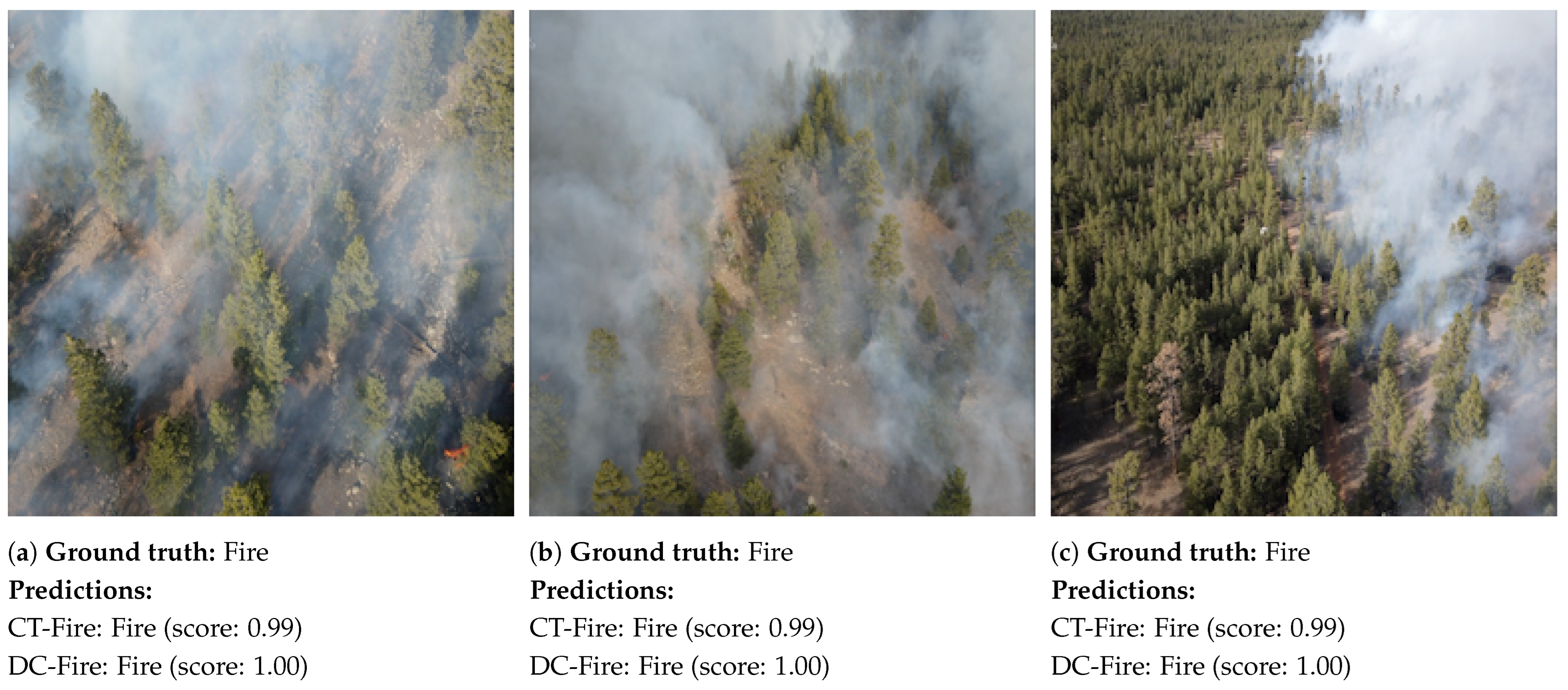

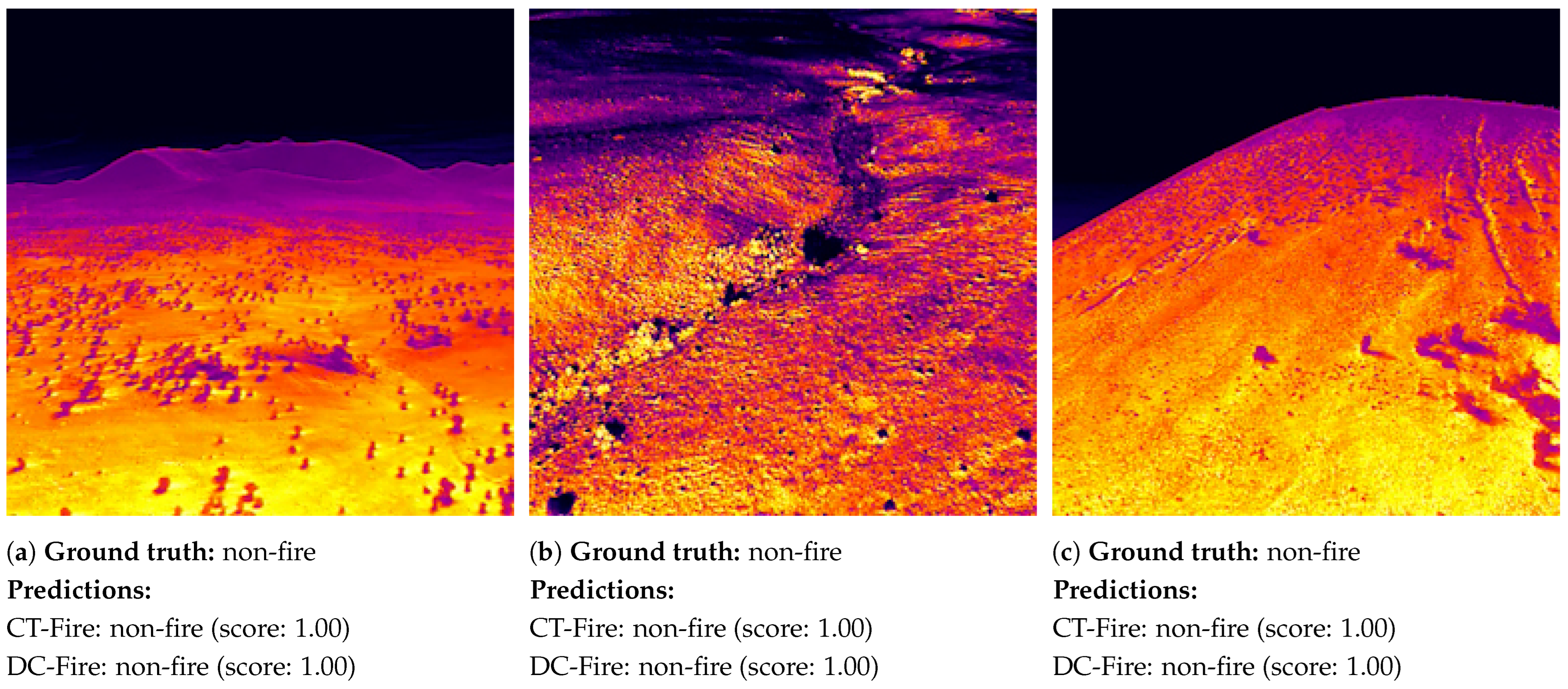

- Two DL methods, DC-Fire and CT-Fire, were adopted to recognize smoke and fire in both IR and visible aerial images, with the aim of improving wildland fire/smoke classification performance.

- DC-Fire and CT-Fire showed promising performance and coped with several known challenges: background complexity, small wildland fire areas, image quality, and the variability of wildfires in size, shape, and intensity, with flame lengths typically ranging from 0.25 to 0.75 m and occasionally reaching 5 to 10 m.

- Both methods showed fast inference (0.013 s for DC-Fire and 0.024 s for CT-Fire), which supports their use for early detection of wildland smoke and fires during both day and night, a capability that is crucial for reliable fire management strategies.
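To put the processing-speed claim in concrete terms, the inference times listed in the results table of this paper (0.013 s for DC-Fire and 0.024 s for CT-Fire) can be converted into throughput. The sketch below assumes that each reported time is the latency for a single image, which the table header does not state explicitly; the function and variable names are illustrative only.

```python
# Convert a reported inference time into an approximate throughput.
# Assumption: the reported time is the latency for one image.

def images_per_second(seconds_per_image: float) -> float:
    """Return how many images are processed per second at a given latency."""
    if seconds_per_image <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / seconds_per_image

# Inference times copied from the results table of this paper.
reported_latency_s = {"DC-Fire": 0.013, "CT-Fire": 0.024}

throughput = {name: images_per_second(t) for name, t in reported_latency_s.items()}

for name, rate in throughput.items():
    print(f"{name}: about {rate:.1f} images/s")
```

Under that assumption, both rates are above the 24 to 30 frames per second of common video streams, although real throughput depends on the hardware, batch size, and image resolution used.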

2. Related Works

| Ref. | Methodology | Object Detected | Dataset | Image Type | Results (%) |
|---|---|---|---|---|---|
| [34] | IRCNN and SVM | Flame | Private: 5300 fire images and 6100 non-fire images | IR | F1-score = 98.70 |
| [36] | C_CNN | Flame | SKLFS: 5000 images | IR | Accuracy = 95.30 |
| [33] | VGG-16 | Smoke, Flame | FLAME2: 25,434 smoke/fire images, 13,700 non-smoke/non-fire images and 14,317 fire/non-smoke images | IR | Accuracy = 97.29 |
| [33] | MobileNet v2 | Smoke, Flame | FLAME2: 50,868 smoke/fire images, 27,400 non-smoke/non-fire images and 28,634 fire/non-smoke images | Both | Accuracy = 99.81 |
| [52] | FireXnet | Smoke, Flame | Kaggle, DT-Fire, and FLAME2: 950 images for each class of fire, smoke, thermal fire, and non-fire | Both | F1-score = 98.42 |
| [37] | Xception | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | Accuracy = 76.23 |
| [40] | Ensemble learning | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | F1-score = 84.77 |
| [33] | VGG-16 | Smoke, Flame | FLAME2: 25,434 smoke/fire images, 13,700 non-smoke/non-fire images and 14,317 fire/non-smoke images | Visible | Accuracy = 99.91 |
| [41] | Two-channel CNN | Smoke, Flame | Private: 7000 fire images and 7000 non-fire images | Visible | Accuracy = 98.52 |
| [42] | Reduce-VGGNet | Flame | FLAME: 900 fire images and 1000 non-fire images | Visible | Accuracy = 91.20 |
| [44] | X-MobileNet | Flame | Private, Kaggle, and FLAME: 5313 fire images and 617 non-fire images | Visible | F1-score = 98.89 |
| [46] | Dual-channel CNN | Smoke, Flame | Private: 7000 fire images and 7000 non-fire images | Visible | Accuracy = 98.90 |
| [47] | Hybrid DL | Flame | FLAME: 30,155 fire images and 17,855 non-fire images; DeepFire: 760 fire images and 760 non-fire images | Visible | F1-score = 97.12; F1-score = 95.54 |
| [49] | EfficientNet-B0 with attention | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | Accuracy = 92.02 |
| [51] | AlexNet | Flame, Smoke | Private: 582 fire images, 373 smoke images, and 737 non-fire images | Visible | Accuracy = 89.64 |
| [27] | DC-Fire | Flame, Smoke | FLAME2: 53,451 images | IR | F1-score = 100.00 |
| [28] | CT-Fire | Flame | FLAME: 47,992 aerial images; CorsicanFire, DeepFire, FIRE: 3900 ground images; both aerial and ground images: 51,892 images | Visible | Accuracy = 87.77; Accuracy = 99.62; Accuracy = 85.29 |
| [57] | BoucaNet | Smoke | USTC_SmokeRS: 6225 images | Visible | Accuracy = 93.67 |
| [60] | BoucaNet | Flame | SWIFT, Fire, DeepFire, Corsican: 28,910 images | Visible | Accuracy = 93.21 |
| [61] | EdgeFireSmoke++ | Flame | Private: 49,452 images | Visible | Accuracy = 95.41 |
| [63] | DenseNet121 | Flame | FLAME: 30,155 fire images and 17,855 non-fire images | Visible | Accuracy = 99.32 |
| [65] | TeutongNet | Flame | DeepFire: 1900 images | Visible | Accuracy = 98.68 |
| [66] | AFFD-FDL | Flame | Private: 1710 images | Visible | Accuracy = 98.60 |
| [67] | Two-stage method | Smoke | Public: 9472 smoke/fire images and 9406 non-smoke/-fire images | Visible | Accuracy = 96.10 |
| [68] | SegNet | Flame | Private: 10,242 images | Visible | Accuracy = 98.18 |
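The table above compares methods by accuracy and F1-score. For reference, the standard binary-classification definitions of these metrics are given below in terms of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). These are the textbook forms; the cited works may use averaged variants (for example, macro or support-weighted averages over classes), which the table does not specify.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{F1-score}  &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{align}
```

Because the F1-score is the harmonic mean of precision and recall, it penalizes a model that does well on only one of the two, which makes it more informative than accuracy on imbalanced fire/non-fire datasets.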

3. Materials and Methods

3.1. Proposed Methods

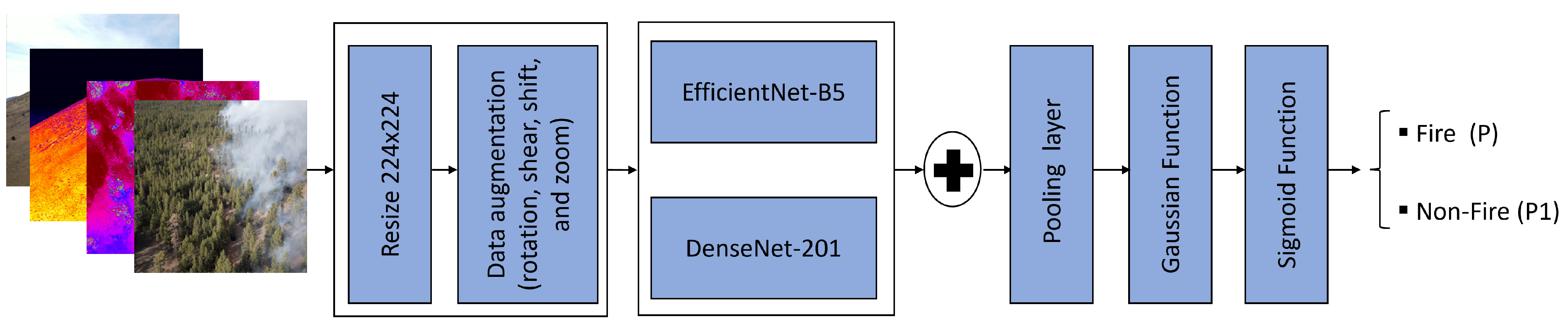

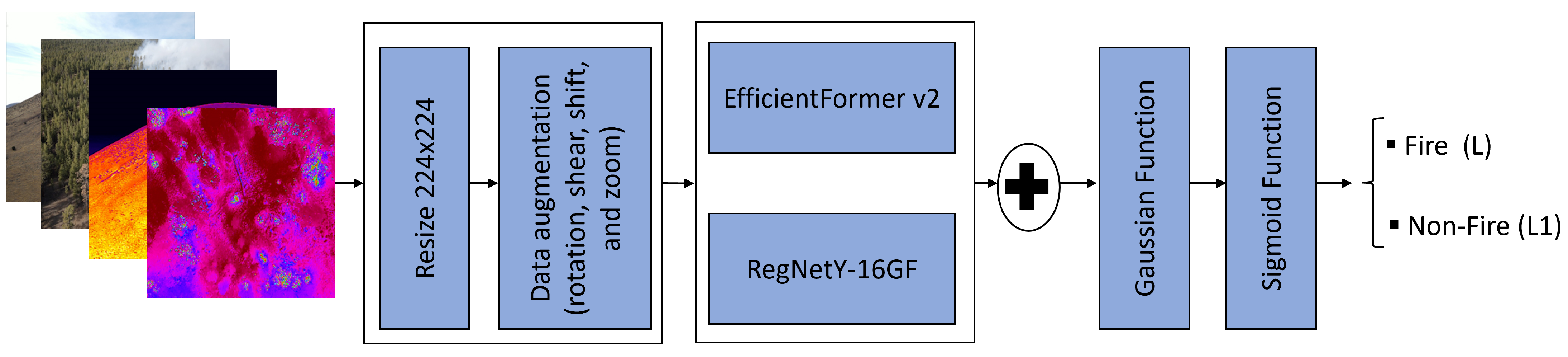

3.1.1. DC-Fire Method

3.1.2. CT-Fire Method

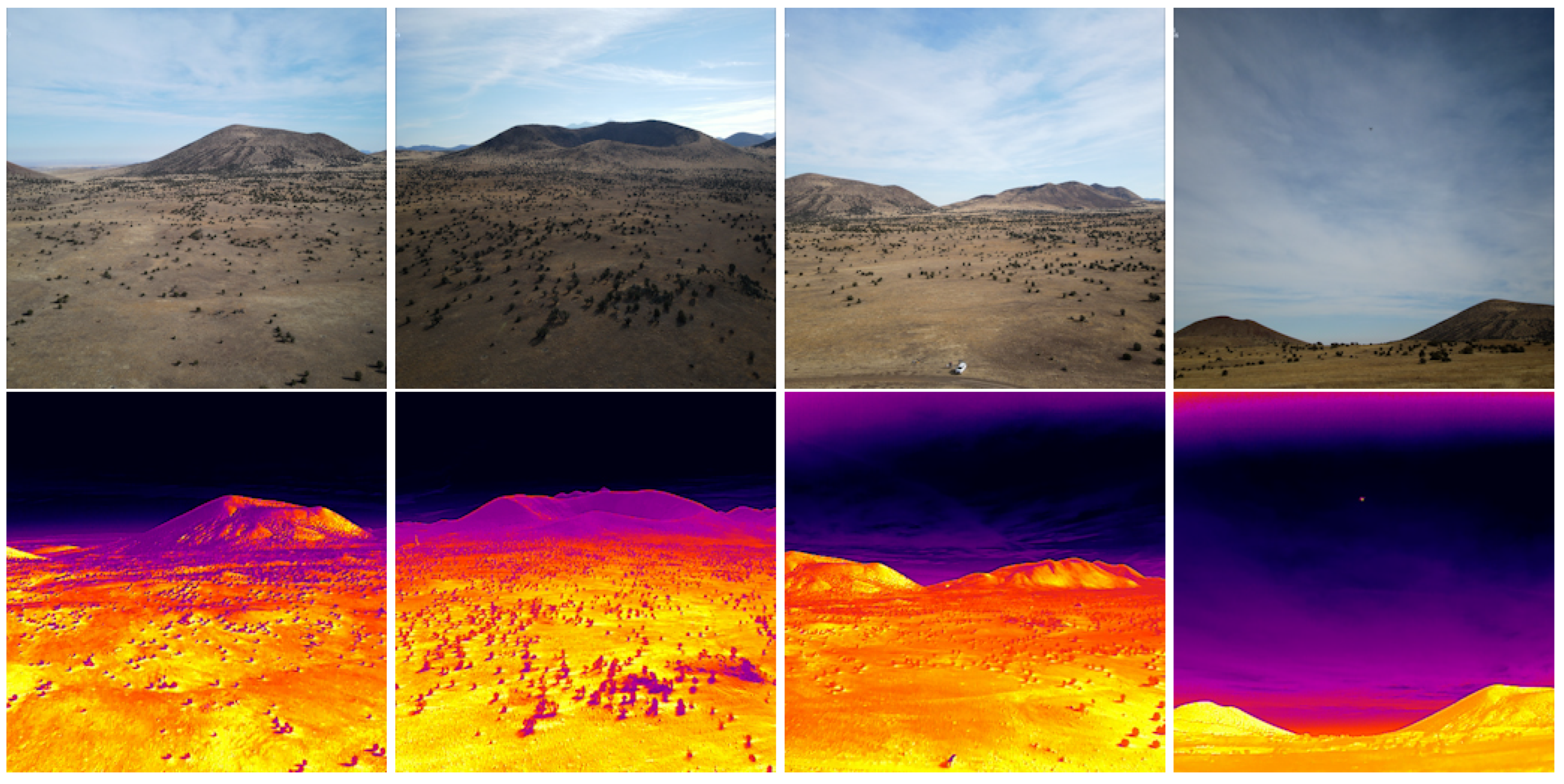

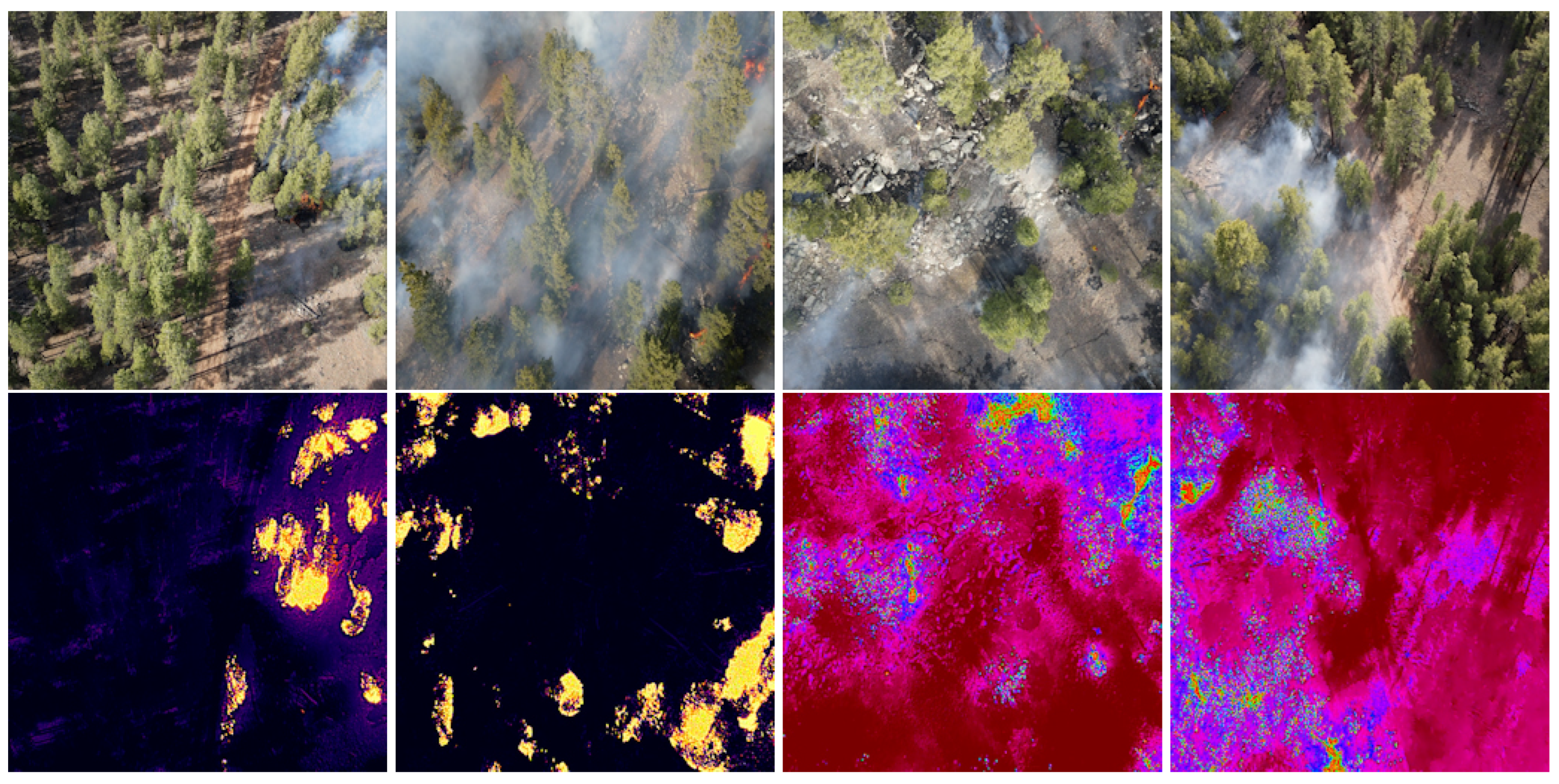

3.2. Dataset

3.3. Implementation Details

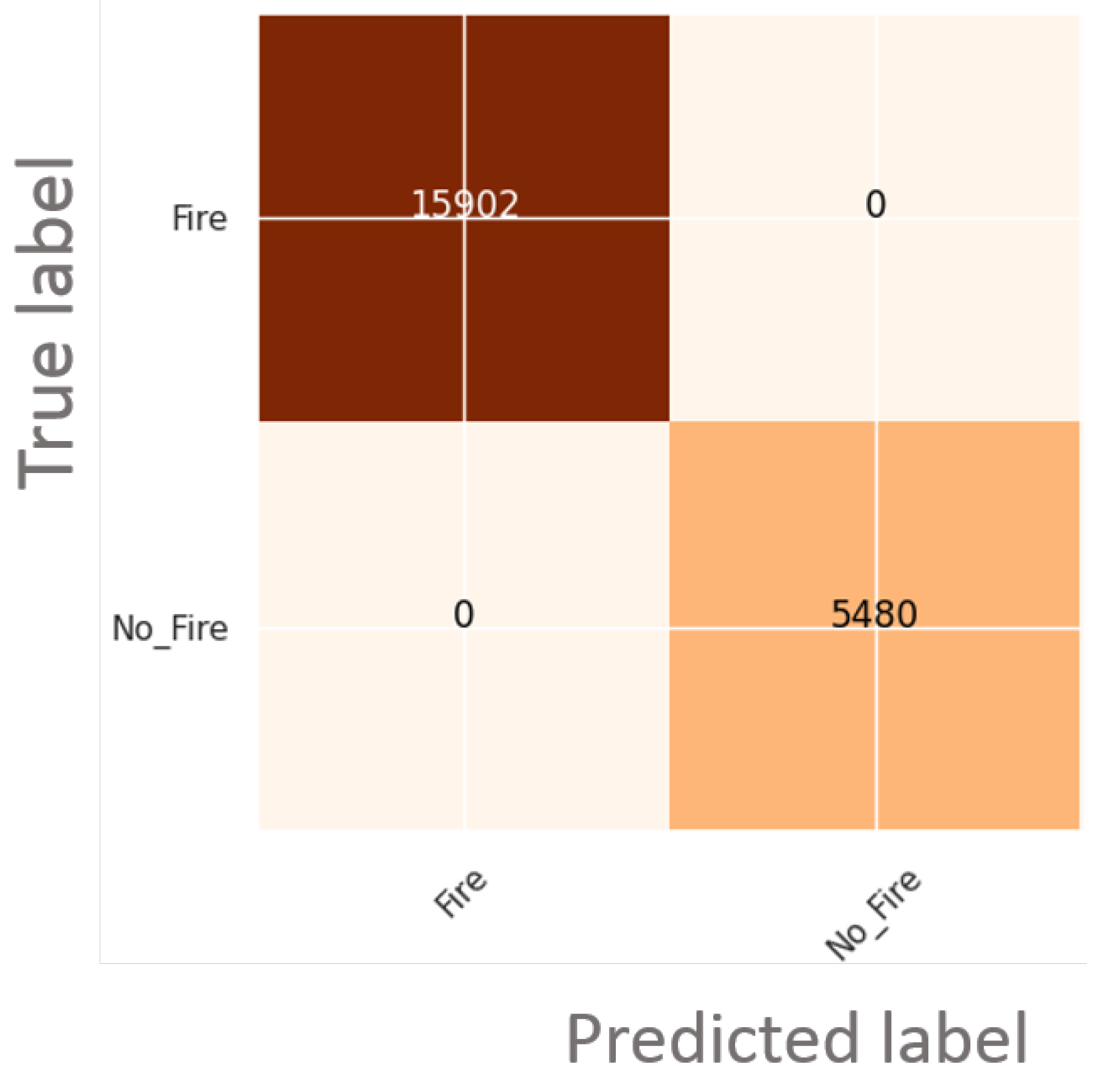

4. Experiments and Results

5. Discussion

5.1. Results Analysis

5.2. Ethical Issues

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| DL | Deep Learning |
| IR | Infrared |
| CNN | Convolutional Neural Network |
| ReLU | Rectified Linear Unit |
| SKLFS | State Key Laboratory of Fire Science |
| CBAM | Convolutional Block Attention Module |
| SHAP | SHapley Additive exPlanation |
| ACNet | Customized Attention Connected Network |
| BCE | Binary Cross-Entropy Loss |
| GRU | Gated Recurrent Unit |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| HOG | Histogram of Oriented Gradients |
| UAV | Unmanned Aerial Vehicle |
| BO | Bayesian Optimization |
| ANN | Artificial Neural Network |
| IRCNN | Infrared Convolutional Neural Network |

References

1. European Commission. 2022 Was the Second-Worst Year for Wildfires. Available online: https://ec.europa.eu/commission/presscorner/detail/en/ip_23_5951 (accessed on 20 May 2024).

2. European Commission. Wildfires in the Mediterranean. Available online: https://joint-research-centre.ec.europa.eu/jrc-news-and-updates/wildfires-mediterranean-monitoring-impact-helping-response-2023-07-28_en (accessed on 20 May 2024).

3. Government of Canada. Forest Fires. Available online: https://natural-resources.canada.ca/our-natural-resources/forests/wildland-fires-insects-disturbances/forest-fires/13143 (accessed on 20 May 2024).

4. Shingler, B.; Bruce, G. Five Charts to Help Understand Canada’s Record Breaking Wildfire Season. Available online: https://www.cbc.ca/news/climate/wildfire-season-2023-wrap-1.6999005 (accessed on 20 May 2024).

5. Anshul, G.; Abhishek, S.; Ashok, K.; Kishor, K.; Sayantani, L.; Kamal, K.; Vishal, S.; Anuj, K.; Chandra, M.S. Fire Sensing Technologies: A Review. IEEE Sensors J. 2019, 19, 3191–3202.

6. Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Remote Sensing: Classification, Detection, and Segmentation. Remote Sens. 2023, 15, 1821.

7. Khan, F.; Xu, Z.; Sun, J.; Khan, F.M.; Ahmed, A.; Zhao, Y. Recent Advances in Sensors for Fire Detection. Sensors 2022, 22, 3310.

8. Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Using Satellite Remote Sensing Data: Detection, Mapping, and Prediction. Fire 2023, 6, 192.

9. Toulouse, T.; Rossi, L.; Celik, T.; Akhloufi, M.A. Automatic Fire Pixel Detection using Image Processing: A Comparative Analysis of Rule-based and Machine Learning-based Methods. Signal Image Video Process. 2016, 10, 647–654.

10. Martin, M.; Peter, K.; Ivan, K.; Allen, T. Optical Flow Estimation for Flame Detection in Videos. IEEE Trans. Image Process. 2013, 22, 2786–2797.

11. Kosmas, D.; Panagiotis, B.; Nikos, G. Spatio-Temporal Flame Modeling and Dynamic Texture Analysis for Automatic Video-Based Fire Detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351.

12. Ishag, M.M.A.; Honge, R. Forest Fire Detection and Identification Using Image Processing and SVM. J. Inf. Process. Syst. 2019, 15, 159–168.

13. Ko, B.; Cheong, K.H.; Nam, J.Y. Early Fire Detection Algorithm Based on Irregular Patterns of Flames and Hierarchical Bayesian Networks. Fire Saf. J. 2010, 45, 262–270.

14. David, V.H.; Peter, V.; Wilfried, P.; Kristof, T. Fire Detection in Color Images Using Markov Random Fields. In Proceedings of the Advanced Concepts for Intelligent Vision Systems, Sydney, Australia, 13–16 December 2010; pp. 88–97.

15. Fahad, M. Deep Learning Technique for Recognition of Deep Fake Videos. In Proceedings of the IEEE IAS Global Conference on Emerging Technologies (GlobConET), London, UK, 19–21 May 2023; pp. 1–4.

16. Ur Rehman, A.; Belhaouari, S.B.; Kabir, M.A.; Khan, A. On the Use of Deep Learning for Video Classification. Appl. Sci. 2023, 13, 2007.

17. Rana, M.; Bhushan, M. Machine Learning and Deep Learning Approach for Medical Image Analysis: Diagnosis to Detection. Multimed. Tools Appl. 2023, 82, 26731–26769.

18. Zhao, Y.; Wang, X.; Che, T.; Bao, G.; Li, S. Multi-task Deep Learning for Medical Image Computing and Analysis: A Review. Comput. Biol. Med. 2023, 153, 106496.

19. Jasdeep, S.; Subrahmanyam, M.; Raju, K.G.S. Multi Domain Learning for Motion Magnification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13914–13923.

20. Yu, C.; Bi, X.; Fan, Y. Deep Learning for Fluid Velocity Field Estimation: A Review. Ocean Eng. 2023, 271, 113693.

21. Harsh, R.; Lavish, B.; Kartik, S.; Tejan, K.; Varun, J.; Venkatesh, B.R. NoisyTwins: Class-Consistent and Diverse Image Generation Through StyleGANs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 5987–5996.

22. Dhar, T.; Dey, N.; Borra, S.; Sherratt, R.S. Challenges of Deep Learning in Medical Image Analysis—Improving Explainability and Trust. IEEE Trans. Technol. Soc. 2023, 4, 68–75.

23. Xu, T.-X.; Guo, Y.-C.; Lai, Y.-K.; Zhang, S.-H. CXTrack: Improving 3D Point Cloud Tracking With Contextual Information. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 1084–1093.

24. Ibrahim, N.; Darlis, A.R.; Kusumoputro, B. Performance Analysis of YOLO-Deep SORT on Thermal Video-Based Online Multi-Object Tracking. In Proceedings of the IEEE 13th International Conference on Consumer Electronics—Berlin (ICCE-Berlin), Berlin, Germany, 3–5 September 2023; pp. 1–6.

25. Saleh, A.; Zulkifley, M.A.; Harun, H.H.; Gaudreault, F.; Davison, I.; Spraggon, M. Forest Fire Surveillance Systems: A Review of Deep Learning Methods. Heliyon 2024, 10, e23127.

26. Gonçalves, L.A.O.; Ghali, R.; Akhloufi, M.A. YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images. Fire 2024, 7, 140.

27. Ghali, R.; Akhloufi, M.A. DC-Fire: A Deep Convolutional Neural Network for Wildland Fire Recognition on Aerial Infrared Images. In Proceedings of the fourth Quantitative Infrared Thermography Asian Conference (QIRT-Asia 2023), Abu Dhabi, United Arab Emirates, 30 October–3 November 2023; pp. 1–6.

28. Ghali, R.; Akhloufi, M.A. CT-Fire: A CNN-Transformer for Wildfire Classification on Ground and Aerial images. Int. J. Remote Sens. 2023, 44, 7390–7415.

29. Gao, H.; Zhuang, L.; Laurens, v.d.M.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

30. Mingxing, T.; Quoc, L. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

31. Ilija, R.; Prateek, K.R.; Ross, G.; Kaiming, H.; Piotr, D. Designing Network Design Spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10428–10436.

32. Yanyu, L.; Ju, H.; Yang, W.; Georgios, E.; Kamyar, S.; Yanzhi, W.; Sergey, T.; Jian, R. Rethinking Vision Transformers for MobileNet Size and Speed. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 16889–16900.

33. Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland Fire Detection and Monitoring Using a Drone-Collected RGB/IR Image Dataset. IEEE Access 2022, 10, 121301–121317.

34. Wang, K.; Zhang, Y.; Jinjun, W.; Zhang, Q.; Bing, C.; Dongcai, L. Fire Detection in Infrared Video Surveillance Based on Convolutional Neural Network and SVM. In Proceedings of the IEEE 3rd International Conference on Signal and Image Processing (ICSIP), Shenzhen, China, 13–15 July 2018; pp. 162–167.

35. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

36. Deng, L.; Chen, Q.; He, Y.; Sui, X.; Liu, Q.; Hu, L. Fire Detection with Infrared Images using Cascaded Neural Network. J. Algorithms Comput. Technol. 2019, 13, 1748302619895433.

37. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial Imagery Pile Burn Detection Using Deep Learning: The FLAME Dataset. Comput. Netw. 2021, 193, 108001.

38. Francois, C. Xception: Deep Learning With Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

39. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.; Blasch, E. The FLAME Dataset: Aerial Imagery Pile Burn Detection using Drones (UAVs). IEEE Dataport 2020.

40. Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep Learning and Transformer Approaches for UAV-Based Wildfire Detection and Segmentation. Sensors 2022, 22, 1977.

41. Guo, Y.Q.; Chen, G.; Yi-Na, W.; Xiu-Mei, Z.; Zhao-Dong, X. Wildfire Identification Based on an Improved Two-Channel Convolutional Neural Network. Forests 2022, 13, 1302.

42. Wang, L.; Zhang, H.; Zhang, Y.; Hu, K.; An, K. A Deep Learning-Based Experiment on Forest Wildfire Detection in Machine Vision Course. IEEE Access 2023, 11, 32671–32681.

43. Karen, S.; Andrew, Z. Very Deep Convolutional Networks for Large-scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

44. Anupama, N.; Prabha, S.; Senthilkumar, M.; Sumathi, R.; Tag, E.E. Forest Fire Identification in UAV Imagery Using X-MobileNet. Electronics 2023, 12, 733.

45. Mark, S.; Andrew, H.; Menglong, Z.; Andrey, Z.; Liang-Chieh, C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

46. Zhang, Z.; Guo, Y.; Chen, G.; Xu, Z. Wildfire Detection via a Dual-Channel CNN with Multi-Level Feature Fusion. Forests 2023, 14, 1499.

47. Islam, A.M.; Binta, M.F.; Rayhan, A.M.; Jafar, A.I.; Rahmat, U.J.; Salekul, I.; Swakkhar, S.; Muzahidul, I.A.K.M. An Attention-Guided Deep-Learning-Based Network with Bayesian Optimization for Forest Fire Classification and Localization. Forests 2023, 14, 2080.

48. Ali, K.; Bilal, H.; Somaiya, K.; Ramsha, A.; Adnan, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

49. Aral, R.A.; Zalluhoglu, C.; Sezer, E.A. Lightweight and Attention-based CNN Architecture for Wildfire Detection using UAV Vision Data. Int. J. Remote Sens. 2023, 44, 5768–5787.

50. Sanghyun, W.; Jongchan, P.; Joon-Young, L.; So, K.I. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 3–19.

51. Kumar, J.S.; Khan, M.; Jilani, S.A.K.; Rodrigues, J.J.P.C. Automated Fire Extinguishing System Using a Deep Learning Based Framework. Mathematics 2023, 11, 608.

52. Khubab, A.; Shahbaz, K.M.; Fawad, A.; Maha, D.; Wadii, B.; Abdulwahab, A.; Mohammad, A.; Alshehri, M.S.; Yasin, G.Y.; Jawad, A. FireXnet: An explainable AI-based Tailored Deep Learning Model for Wildfire Detection on Resource-constrained Devices. Fire Ecol. 2023, 19, 54.

53. Dincer, B. Wildfire Detection Image Data. Available online: https://www.kaggle.com/datasets/brsdincer/wildfire-detection-image-data (accessed on 20 May 2024).

54. Pedro, V.d.V.; Lisboa, A.C.; Barbosa, A.V. An Automatic Fire Detection System Based on Deep Convolutional Neural Networks for Low-power, Resource-constrained Devices. Neural Comput. Appl. 2022, 34, 15349–15368.

55. Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer Vision for Wildfire Research: An Evolving Image Dataset for Processing and Analysis. Fire Saf. J. 2017, 92, 188–194.

56. Saied, A. FIRE Dataset. Available online: https://www.kaggle.com/datasets/phylake1337/fire-dataset?select=fire_dataset%2C+06.11.2021 (accessed on 20 May 2024).

57. Ghali, R.; Akhloufi, M.A. BoucaNet: A CNN-Transformer for Smoke Recognition on Remote Sensing Satellite Images. Fire 2023, 6, 455.

58. Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10096–10106.

59. Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.

60. Fernando, L.; Ghali, R.; Akhloufi, M.A. SWIFT: Simulated Wildfire Images for Fast Training Dataset. Remote Sens. 2024, 16, 1627.

61. Almeida, J.S.; Jagatheesaperumal, S.K.; Nogueira, F.G.; de Albuquerque, V.H.C. EdgeFireSmoke++: A Novel lightweight Algorithm for Real-time Forest Fire Detection and Visualization using Internet of Things-human Machine Interface. Expert Syst. Appl. 2023, 221, 119747.

62. Almeida, J.S.; Huang, C.; Nogueira, F.G.; Bhatia, S.; de Albuquerque, V.H.C. EdgeFireSmoke: A Novel Lightweight CNN Model for Real-Time Video Fire–Smoke Detection. IEEE Trans. Ind. Informatics 2022, 18, 7889–7898.

63. Reis, H.C.; Turk, V. Detection of Forest Fire using Deep Convolutional Neural Networks with Transfer Learning Approach. Appl. Soft Comput. 2023, 143, 110362.

64. Habbib, A.M.; Khidhir, A.M. Transfer Learning Based Fire Recognition. Int. J. Tech. Phys. Probl. Eng. (IJTPE) 2023, 15, 86–92.

65. Idroes, G.M.; Maulana, A.; Suhendra, R.; Lala, A.; Karma, T.; Kusumo, F.; Hewindati, Y.T.; Noviandy, T.R. TeutongNet: A Fine-Tuned Deep Learning Model for Improved Forest Fire Detection. Leuser J. Environ. Stud. 2023, 1, 1–8.

66. Al Duhayyim, M.; Eltahir, M.M.; Omer Ali, O.A.; Albraikan, A.A.; Al-Wesabi, F.N.; Hilal, A.M.; Hamza, M.A.; Rizwanullah, M. Fusion-Based Deep Learning Model for Automated Forest Fire Detection. Comput. Mater. Contin. 2023, 77, 1355–1371.

67. Guo, N.; Liu, J.; Di, K.; Gu, K.; Qiao, J. A hybrid Attention Model Based on First-order Statistical Features for Smoke Recognition. Sci. China Technol. Sci. 2024, 67, 809–822.

68. Jonnalagadda, A.V.; Hashim, H.A. SegNet: A segmented Deep Learning Based Convolutional Neural Network Approach for Drones Wildfire Detection. Remote Sens. Appl. Soc. Environ. 2024, 34, 101181.

69. Pramod, S.; Avinash, M. Introduction to TensorFlow 2.0. In Learn TensorFlow 2.0: Implement Machine Learning and Deep Learning Models with Python; Apress: Berkeley, CA, USA, 2020; pp. 1–24.

70. Al-Dabbagh, A.M.; Ilyas, M. Uni-temporal Sentinel-2 Imagery for Wildfire Detection Using Deep Learning Semantic Segmentation Models. Geomat. Nat. Hazards Risk 2023, 14, 2196370.

71. Saining, X.; Ross, G.; Piotr, D.; Zhuowen, T.; Kaiming, H. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

72. Ze, L.; Han, H.; Yutong, L.; Zhuliang, Y.; Zhenda, X.; Yixuan, W.; Jia, N.; Yue, C.; Zheng, Z.; Li, D.; et al. Swin Transformer v2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

| Data | Fire IR Images | No-Fire IR Images | Fire RGB Images | No-Fire RGB Images | Total |
|---|---|---|---|---|---|
| Training set | 25,440 | 8768 | 25,440 | 8768 | 68,416 |
| Validation set | 6360 | 2192 | 6360 | 2192 | 17,104 |
| Testing set | 7951 | 2740 | 7951 | 2740 | 21,382 |
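As a quick consistency check on the split table above, the following sketch recomputes the per-split totals, each split's share of the whole dataset, and the fraction of fire images in each split. The counts are copied from the table; the variable names are illustrative, and the percentages are derived here rather than quoted from the paper.

```python
# Image counts per split, copied from the dataset table above.
splits = {
    "training":   {"fire_ir": 25440, "no_fire_ir": 8768, "fire_rgb": 25440, "no_fire_rgb": 8768},
    "validation": {"fire_ir": 6360,  "no_fire_ir": 2192, "fire_rgb": 6360,  "no_fire_rgb": 2192},
    "testing":    {"fire_ir": 7951,  "no_fire_ir": 2740, "fire_rgb": 7951,  "no_fire_rgb": 2740},
}

# Row totals and the overall number of images.
totals = {name: sum(counts.values()) for name, counts in splits.items()}
grand_total = sum(totals.values())

# Share of the full dataset held by each split.
share_of_dataset = {name: total / grand_total for name, total in totals.items()}

# Fraction of fire images (IR plus RGB) within each split.
fire_fraction = {
    name: (counts["fire_ir"] + counts["fire_rgb"]) / totals[name]
    for name, counts in splits.items()
}

for name in splits:
    print(f"{name}: {totals[name]} images, "
          f"{share_of_dataset[name]:.1%} of the dataset, "
          f"{fire_fraction[name]:.1%} fire")
```

The recomputed totals match the table's Total column, the split works out to roughly 64/16/20, and about 74% of the images in every split are fire images. With that class imbalance, accuracy alone can look high for a model that favors the fire class, so precision, recall, and F1-score are worth reading alongside it.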

| Models | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Inference Time (s) |
|---|---|---|---|---|---|
| LeNet5 [33] | 98.32 | 98.35 | 98.58 | 98.45 | — |
| Xception [33] | 98.60 | 98.76 | 98.52 | 98.63 | — |
| MobileNet v2 [33] | 99.81 | 99.78 | 99.87 | 99.82 | — |
| ResNet-18 [33] | 99.44 | 99.46 | 99.56 | 99.50 | — |
| Swin Transformer v2 | 74.37 | 55.31 | 74.37 | 63.44 | 0.015 |
| ResNeXt-50 | 74.70 | 70.38 | 74.70 | 66.02 | 0.004 |
| DC-Fire | 100 | 100 | 100 | 100 | 0.013 |
| CT-Fire | 100 | 100 | 100 | 100 | 0.024 |
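In the Swin Transformer v2 and ResNeXt-50 rows, recall equals accuracy. That is what happens when per-class scores are averaged with weights proportional to class support, because support-weighted recall reduces algebraically to overall accuracy. The sketch below computes support-weighted precision, recall, and F1-score from a confusion matrix. The weighted-averaging convention is an assumption (this text does not state which averaging the table uses), and the example input is a hypothetical classifier that labels every image as fire, evaluated on the per-modality test counts from the dataset table (7951 fire, 2740 no-fire).

```python
def weighted_metrics(confusion):
    """Support-weighted metrics from a square confusion matrix.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n_classes))

    precision = recall = f1 = 0.0
    for k in range(n_classes):
        support = sum(confusion[k])                          # true samples of class k
        predicted = sum(confusion[i][k] for i in range(n_classes))
        tp = confusion[k][k]
        # Undefined ratios (no predictions or no samples) are taken as 0.
        p_k = tp / predicted if predicted else 0.0
        r_k = tp / support if support else 0.0
        f_k = 2 * p_k * r_k / (p_k + r_k) if (p_k + r_k) else 0.0
        weight = support / total
        precision += weight * p_k
        recall += weight * r_k
        f1 += weight * f_k

    return {"accuracy": correct / total, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical classifier that predicts "fire" for every test image.
all_fire = [
    [7951, 0],  # true fire:    all predicted fire
    [2740, 0],  # true no-fire: all predicted fire
]

result = {name: round(100 * value, 2)
          for name, value in weighted_metrics(all_fire).items()}
print(result)
```

For this hypothetical input the four values come out as 74.37, 55.31, 74.37, and 63.44, which agree to two decimals with the Swin Transformer v2 row. This is an arithmetic observation only: it is consistent with, but does not establish, that model assigning nearly all test images to the fire class.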


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ghali, R.; Akhloufi, M.A. Deep Learning Approach for Wildland Fire Recognition Using RGB and Thermal Infrared Aerial Image. Fire 2024, 7, 343. https://doi.org/10.3390/fire7100343
