Abstract

With the advancement of society and rapid urbanization, there is an escalating need for effective fire detection systems. This study aims to improve the effectiveness and reliability of fire detection in complex settings by refining the You Only Look Once version 5 (YOLOv5) algorithm and introducing an algorithm based on fire characteristics. First, the Convolutional Block Attention Module (CBAM) is introduced to steer the model towards salient features, thereby improving detection precision. Second, a multi-scale feature fusion network employing the Adaptive Spatial Feature Fusion (ASFF) module is adopted to combine feature information from different scales, which improves the model’s understanding of image content and makes detection more robust. Third, refining the loss function and adding a larger detection head further strengthen the model’s ability to detect small targets. Experimental results show that the refined YOLOv5 algorithm improves accuracy by 8% and 8.2% on the standard and small-target datasets, respectively. To verify the practical viability of the refined YOLOv5 algorithm, this study also introduces a temperature-based flame detection algorithm. With both algorithms combined and deployed, the final experiments show that the integrated algorithm not only improves accuracy but also reaches 57 frames per second, meeting the requirements for practical deployment.

1. Introduction

Fires can manifest unexpectedly and spread rapidly, often resulting in substantial damage. Traditional fire detection methodologies predominantly rely on sensor networks and alarm systems, which, while efficacious to a certain extent, exhibit limitations in effectively identifying fires within expansive and intricate environments. To augment the efficacy and precision of fire detection, there has been a surge of interest in video image-based fire detection in recent years.

Video image-based fire detection endeavors to discern flames, smoke, and associated alterations by scrutinizing video and image datasets, furnishing supplementary insights to facilitate early fire detection and response strategies. As a pivotal component of computer vision, object detection technology assumes a paramount role in the realm of video image-based fire detection.

With the advancement of deep learning technology, the inclusion of the Convolutional Neural Network (CNN) [1] has changed the structure of object detection models. The You Only Look Once (YOLO) series of models and others such as the Single Shot Multi Box Detector (SSD) [2] and the Faster Region-based Convolutional Neural Network (Faster R-CNN) [3] have further improved object detection performance, but improvements are still needed when dealing with early fire images.

Fire detection remains an active research area. For example, Sun and colleagues proposed a lightweight real-time fire and smoke detection system based on CNN models, utilizing the lightweight Squeeze and Excitation-GhostNet (SE-GhostNet) as the backbone network and employing a decoupled head to separately predict the category and location of fire or smoke [4]. They conducted experiments on the Smoke and Fire dataset and achieved excellent results. Almeida and his team designed the EdgeFireSmoke++ algorithm, a novel lightweight algorithm for real-time forest fire detection and visualization, achieving a detection accuracy of 95.41% on a selected forest fire dataset [5]. Similarly, Talaat and colleagues proposed a smart fire detection system (SFDS) based on You Only Look Once version 8 (YOLOv8), leveraging the advantages of deep learning for real-time detection of fire-specific features [6].

Despite the commendable performance of these models in terms of accuracy, speed, and other metrics, they are not without limitations. For instance, as previously mentioned, the EdgeFireSmoke++ algorithm proposed by Jefferson S. Almeida et al. [5] operates at a modest rate of only 33 frames per second in real-world deployments, suggesting room for enhancement. Additionally, many algorithms still require refinement in their ability to accurately identify small target objects. For instance, while the YOLOv5 model utilized in this study demonstrates strong real-time performance and overall capability, it may encounter challenges during the training phase, leading to instances of false positives and false negatives, as illustrated in Figure 1.

Figure 1.

Misreporting of YOLOv5.

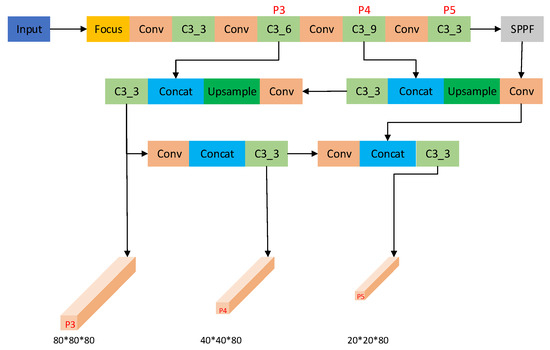

The network structure of YOLOv5 is illustrated in Figure 2.

Figure 2.

YOLOv5 model.

This paper addresses these issues through a series of proposed solutions. First, it compares several ways of embedding an attention mechanism to identify the most effective one. Second, to handle the variability of smoke images, it introduces the Adaptive Spatial Feature Fusion (ASFF) module. Third, to reduce false positives and false negatives and improve the prediction accuracy of bounding boxes, it replaces the Complete Intersection over Union (CIOU) loss function with Efficient Intersection over Union (EIOU). Fourth, to address the challenge of detecting small targets, it enhances the YOLOv5 detection head. Finally, to make the improved algorithm more robust in practical applications and to exploit the capabilities of the acquisition devices, a temperature-based threshold detection algorithm is introduced in the final deployment phase of the experiment. Collectively, these solutions represent the research contributions of this paper, aimed at overcoming challenges in the field of fire detection.

2. Improvements to YOLOv5

2.1. Incorporating Attention Mechanism

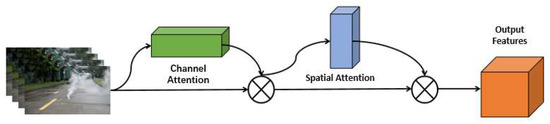

In smoke image detection, several challenges persist, notably inadequate attention to the information within feature maps, non-uniform spatial distribution of information, and limited robustness of the feature representation. These challenges impede the accurate detection and precise localization of smoke targets, particularly when smoke disperses in complex patterns or the targets are small. To improve the model’s performance on small targets, this study introduces the Convolutional Block Attention Module (CBAM) [7]. CBAM improves the model’s performance with only a negligible increase in its parameter count. By applying attention weights along both the channel and spatial dimensions of the input features, CBAM helps the model focus on target-rich regions and learn more discriminative feature representations. This, in turn, translates into improved object detection results. The structure of CBAM is shown in Figure 3.

Figure 3.

CBAM module.

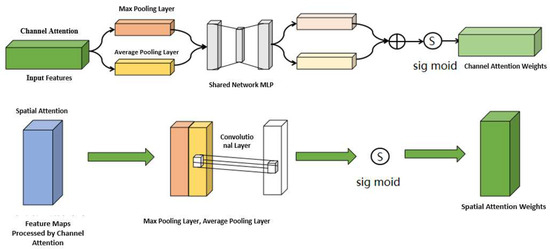

The incorporation of the channel attention mechanism empowers the network to autonomously discern the significance of individual channels, thereby enhancing its ability to extract pivotal information from the feature maps. Through channel attention, the network calculates the importance of each channel, accentuating crucial channels while attenuating less relevant ones. Consequently, the model directs its focus towards the most informative feature channels, thereby enriching the depth of feature representation. Additionally, the integration of the spatial attention mechanism enables the network to concentrate on specific regions within the feature map, facilitating a more nuanced understanding of spatial importance. Spatial attention computes the significance of each pixel position, emphasizing critical pixel positions while mitigating the influence of less substantial ones. This approach aids the model in comprehending the spatial structure and contextual nuances inherent in the image. This is depicted in Figure 4.

Figure 4.

Two types of attention module.
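The two attention steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in this study: it follows the pooling convention of the original CBAM design [7] but uses average pooling only, and it replaces the convolution in the spatial branch with a single scalar `scale` and `bias`. The weight matrices `w1` and `w2` and all function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(fmap, w1, w2):
    """Reweight each channel of fmap (C x H x W nested lists).

    w1 (R x C) and w2 (C x R) are the weights of a small two-layer
    bottleneck; both are hypothetical parameters for this sketch.
    """
    # Squeeze: one average value per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excite: FC -> ReLU -> FC -> sigmoid gives one weight per channel.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    weights = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    return [[[weights[c] * v for v in row] for row in ch] for c, ch in enumerate(fmap)]


def spatial_attention(fmap, scale=1.0, bias=0.0):
    """Reweight each pixel position of fmap.

    A scalar scale and bias stand in for the convolution used by CBAM
    (an assumption made to keep the sketch short).
    """
    channels, height, width = len(fmap), len(fmap[0]), len(fmap[0][0])
    # Average across channels: one value per pixel position.
    mean_map = [[sum(fmap[c][i][j] for c in range(channels)) / channels
                 for j in range(width)] for i in range(height)]
    weights = [[sigmoid(scale * m + bias) for m in row] for row in mean_map]
    return [[[weights[i][j] * fmap[c][i][j] for j in range(width)]
             for i in range(height)] for c in range(channels)]


def cbam(fmap, w1, w2, scale=1.0, bias=0.0):
    """Channel attention followed by spatial attention."""
    return spatial_attention(channel_attention(fmap, w1, w2), scale, bias)
```

With all weights set to zero, every sigmoid evaluates to 0.5, so each attention step simply halves the input; this makes the reweighting easy to check by hand before substituting learned weights.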

The channel attention mechanism learns a weight for each channel. Given an input feature map $X$, the output of the channel attention mechanism is computed as follows. First, the average feature map over all channels is computed:

$$F_c = \frac{1}{C}\sum_{i=1}^{C} X_i \tag{1}$$

where $C$ represents the number of channels, $X_i$ represents the feature map of the $i$-th channel, and $F_c$ represents the averaged output of the channel attention mechanism. In the subsequent step, the weights for each channel are calculated using fully connected layers and activation functions:

$$M_c = \sigma\left(\mathrm{FC}_2\left(\mathrm{relu}\left(\mathrm{FC}_1(F_c)\right)\right)\right) \tag{2}$$

In this context, $\mathrm{FC}_1$ and $\mathrm{FC}_2$ denote fully connected layers, relu denotes the rectified linear unit activation function, and $\sigma$ represents the sigmoid activation function.

Subsequently, the output of the channel attention is derived by multiplying the weights with the input feature map $X$:

$$Y_c = M_c \otimes X \tag{3}$$

The spatial attention mechanism determines the weights assigned to each pixel position. For an input feature map $X$, the averaged output of the spatial attention mechanism is:

$$F_s = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} X_{i,j} \tag{4}$$

$H$ and $W$ denote the dimensions of the feature map, and the pixel located at the $i$-th row and $j$-th column is represented as $X_{i,j}$. The output $F_s$ is the average value across all pixel positions. Subsequently, the weights for each pixel position are computed using fully connected layers and activation functions:

$$M_s = \sigma\left(\mathrm{FC}_2\left(\mathrm{relu}\left(\mathrm{FC}_1(F_s)\right)\right)\right) \tag{5}$$

Afterwards, the output of the spatial attention is obtained by multiplying the weights with the input feature map $X$:

$$Y_s = M_s \otimes X \tag{6}$$

To assess the detection performance of the CBAM module at different embedding positions, three configurations were compared. In the first, denoted YOLOv5 + CBAM1, the module is integrated into all C3_3 modules. This allows essential channel information to be captured more precisely, which strengthens the representation of image features and improves detection precision. In the second, denoted YOLOv5 + CBAM2, the module is added after the SPPF module as well as in all C3_3 modules. Placing CBAM after the SPPF module introduces additional attention in the intermediate stages of the model, which helps capture features across multiple scales and improves the model’s grasp of global information. In the third, denoted YOLOv5 + CBAM3, the module is integrated into the SPPF module and the C3_3 modules of the Neck network. This placement combines the outputs of the SPPF module and the Neck network, refining the overall feature representation of the model. Comparative experiments among the three configurations indicate that YOLOv5 + CBAM3 yields the largest improvement in model performance. The third integration method is therefore adopted in this study, with the results presented in Table 1.

Table 1.

Comparison of Different Embedding Positions of CBAM in YOLOv5 Model.

In Table 1, DATA1 denotes the smoke target dataset curated for this study, while DATA2 represents the dataset containing small target smoke samples collected for this research endeavor. The primary evaluation metric employed in the experiments detailed in Table 1 is Average Precision (AP). Average Precision (AP) serves as a benchmark for assessing the efficacy of object detection algorithms across varying confidence thresholds. Specifically, AP is computed by determining the Area Under Curve (AUC) of the Precision and Recall curve at different confidence thresholds. A higher AP value indicates superior performance of the model across diverse detection thresholds, facilitating accurate identification and localization of targets.

As shown in Table 1, the differences among the three embedding positions of the CBAM module are modest, but the third method performs best on both datasets. Therefore, in this experiment, the CBAM module is integrated into the SPPF module and the C3_3 modules of the Neck network.

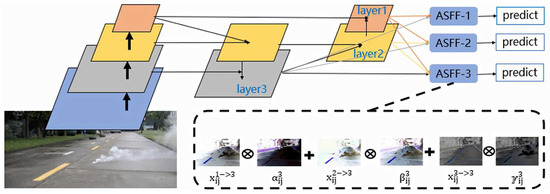

2.2. Adaptive Spatial Feature Fusion Module

To improve the performance of the model in object detection tasks, this paper introduces the Adaptive Spatial Feature Fusion (ASFF) module [8], which is used for multi-scale feature fusion to address the challenge of recognizing small smoke targets.

The main objective of the ASFF module is to address the issue of feature fusion from different scales in object detection tasks. During smoke detection, the distribution and scale of smoke may vary due to the complexity of the environment. Therefore, an effective method is needed to integrate features of various scales to enhance detection performance. The ASFF module dynamically adjusts the contribution of feature maps by introducing adaptive weights, thereby better capturing information from smoke targets.

The design inspiration for ASFF stems from a deep consideration of feature fusion in object detection. In complex scenes, there is a substantial difference in information density between targets and backgrounds, making it difficult for traditional feature fusion methods to balance this difference effectively. ASFF adjusts the weight distribution of feature maps adaptively, allowing the model to learn the relationship between targets and backgrounds more flexibly.

In other words, ASFF integrates feature maps from various network layers, but it differs from traditional methods by incorporating a series of dynamic weight factors that self-adjust based on the importance of each feature map. This adaptability enables the model to flexibly adjust the weight distribution of features based on factors such as target size, location, and background complexity, thus better adapting to various complex scenes.

The core advantage of the ASFF module lies in providing a highly flexible feature fusion mechanism and enabling the model to automatically learn the trade-off between targets and backgrounds during training. This provides the model with stronger adaptability, especially achieving substantial performance improvements in small target detection and complex backgrounds. Figure 5 illustrates the integration process of the ASFF module in the YOLOv5 model, including feature extraction maps from each layer.

Figure 5.

Application of ASFF in Models.

The essence of ASFF is to learn adaptively the spatial weights with which feature maps at different scales are combined. Its implementation consists of two key steps: intra-scale scaling and adaptive fusion. In the intra-scale scaling process, this paper denotes the features at the resolution of level $m$ of YOLOv5 as $x^m$. The feature maps of the other levels $n$ ($n \neq m$) need to be resized so that they match the size of $x^m$.

For upsampling, a 1 × 1 convolutional layer first reduces the number of channels of the feature map to the number required by the m-th level, after which the resolution is increased. For downsampling by a factor of 1/2, a single 3 × 3 convolutional layer with a stride of 2 adjusts both the number of channels and the resolution. For downsampling by a factor of 1/4, a max-pooling layer with a stride of 2 is added before the stride-2 convolution.

The adaptive fusion stage uses the method described in Equation (7) for feature fusion.

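$$y_{ij}^{m} = \alpha_{ij}^{m} \cdot x_{ij}^{1 \to m} + \beta_{ij}^{m} \cdot x_{ij}^{2 \to m} + \gamma_{ij}^{m} \cdot x_{ij}^{3 \to m} \tag{7}$$

In this formula, $x_{ij}^{k \to m}$ represents the feature vector at position (i, j) of the feature map of the k-th level after it has been resized to the m-th level, while $y_{ij}^{m}$ is the (i, j)-th vector of the fused output feature map across channels. The weights $\alpha_{ij}^{m}$, $\beta_{ij}^{m}$, and $\gamma_{ij}^{m}$ need to satisfy the constraint $\alpha_{ij}^{m} + \beta_{ij}^{m} + \gamma_{ij}^{m} = 1$, and their values range from 0 to 1. The calculation method for these weights is defined by Equation (8), shown here for $\alpha_{ij}^{m}$; $\beta_{ij}^{m}$ and $\gamma_{ij}^{m}$ are obtained analogously.

$$\alpha_{ij}^{m} = \frac{e^{\lambda_{\alpha_{ij}}^{m}}}{e^{\lambda_{\alpha_{ij}}^{m}} + e^{\lambda_{\beta_{ij}}^{m}} + e^{\lambda_{\gamma_{ij}}^{m}}} \tag{8}$$

The parameters $\alpha_{ij}^{m}$, $\beta_{ij}^{m}$, and $\gamma_{ij}^{m}$ are thus defined using the softmax function, with $\lambda_{\alpha_{ij}}^{m}$, $\lambda_{\beta_{ij}}^{m}$, and $\lambda_{\gamma_{ij}}^{m}$ as control parameters, respectively.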
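The adaptive fusion step can be illustrated with a short sketch for a single spatial position. It assumes the three feature vectors have already been resized to the same level, and it treats the three control parameters as given numbers; in a trained network they would be learned. The function names are hypothetical.

```python
import math


def asff_weights(lam_alpha, lam_beta, lam_gamma):
    """Softmax over the three control parameters at one spatial position."""
    # Subtracting the maximum keeps exp() numerically stable.
    peak = max(lam_alpha, lam_beta, lam_gamma)
    exps = [math.exp(v - peak) for v in (lam_alpha, lam_beta, lam_gamma)]
    total = sum(exps)
    return tuple(e / total for e in exps)


def asff_fuse(x1, x2, x3, lambdas):
    """Fuse three feature vectors that were resized to the same level.

    x1, x2, x3: feature vectors (lists of floats) at one position (i, j).
    lambdas: the three control parameters at that position (hypothetical
    values in this sketch).
    """
    alpha, beta, gamma = asff_weights(*lambdas)
    return [alpha * a + beta * b + gamma * c for a, b, c in zip(x1, x2, x3)]
```

Because the weights come from a softmax, they always lie between 0 and 1 and sum to 1, so the fused vector is a convex combination of the three inputs. Equal control parameters reduce the fusion to a plain average, while one dominant parameter lets a single scale take over at that position.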

2.3. Loss Function Improvement

Loss functions play a crucial role in deep learning: they guide model training through backpropagation and provide a measure of model performance. The robustness of small object detection in the YOLOv5 model needs improvement, which requires a loss function that measures the difference between target and predicted boxes more precisely and thereby guides training more effectively. Complete Intersection over Union (CIOU) is insensitive to incomplete bounding boxes, which can make it difficult for the model to accurately locate and detect small objects, especially in complex backgrounds. Additionally, the computational complexity of CIOU may increase the overhead of the training process, which could substantially impact applications requiring high real-time performance. Given these issues, the CIOU loss function was replaced with the Efficient Intersection over Union (EIOU) loss function. The computational formula for CIOU is as follows:

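$$L_{CIOU} = 1 - IOU + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v$$

In this context, $b$ and $b^{gt}$ represent the centroids of the predicted box $B$ and the ground truth box $B^{gt}$, respectively, and $\rho(\cdot)$ is the Euclidean distance between them, which penalizes the offset of the centroids. $c$ denotes the diagonal length of the minimum bounding box enclosing $B$ and $B^{gt}$. $v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}$ measures the consistency of the aspect ratios of the two boxes, while $\alpha = \frac{v}{(1 - IOU) + v}$ is a positive balancing parameter that sets the weight of this aspect-ratio penalty.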

EIOU is an extension of CIOU, incorporating additional terms to comprehensively consider the similarity between bounding boxes. The mathematical expression for EIOU is as follows:

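$$L_{EIOU} = 1 - IOU + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \frac{\rho^{2}(w, w^{gt})}{c_{w}^{2}} + \frac{\rho^{2}(h, h^{gt})}{c_{h}^{2}}$$

In this context, $c_{w}$ and $c_{h}$ represent the width and height of the minimum bounding box enclosing $B^{gt}$ and $B$, and $c$ is its diagonal length. $b$, $w$, and $h$ are the centroid, width, and height of the predicted box, the superscript $gt$ marks the corresponding quantities of the ground truth box, and $\rho(\cdot)$ is the Euclidean distance.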

The advantage of EIOU in object detection lies in penalizing the width and height differences between the predicted and ground truth boxes directly, in addition to their overlap and centroid distance, which enhances the precise localization and detection of targets in complex scenes. Compared to CIOU, EIOU demonstrates higher robustness in detecting small smoke targets in complex scenes, allowing the boundaries of smoke targets to be captured more accurately. Therefore, it performs better in situations involving blurriness, incompleteness, or occlusion.
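To make the difference between the two losses concrete, the following sketch computes both for axis-aligned boxes given as `(x1, y1, x2, y2)`. It uses the commonly published forms of CIOU and EIOU and assumes boxes with positive width and height; the function names are hypothetical and this is not the training code of this study.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def _enclosing_terms(pred, gt):
    """Return the normalized center distance and the enclosing box width and height."""
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    return (dx * dx + dy * dy) / (cw * cw + ch * ch), cw, ch


def ciou_loss(pred, gt):
    """CIOU: IoU term + center distance + aspect-ratio consistency."""
    overlap = iou(pred, gt)
    center, _, _ = _enclosing_terms(pred, gt)
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    alpha = v / ((1 - overlap) + v) if v > 0 else 0.0
    return 1 - overlap + center + alpha * v


def eiou_loss(pred, gt):
    """EIOU: IoU term + center distance + separate width and height penalties."""
    overlap = iou(pred, gt)
    center, cw, ch = _enclosing_terms(pred, gt)
    dw = (pred[2] - pred[0]) - (gt[2] - gt[0])
    dh = (pred[3] - pred[1]) - (gt[3] - gt[1])
    return 1 - overlap + center + (dw * dw) / (cw * cw) + (dh * dh) / (ch * ch)
```

A useful case to try is a prediction that shares the center and aspect ratio of the ground truth but has the wrong size, for example `(0, 0, 4, 4)` against `(1, 1, 3, 3)`. The aspect-ratio term of CIOU vanishes there, whereas EIOU still penalizes the width and height errors, which is the behavior the text above attributes to it.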

2.4. Detection Head Improvement

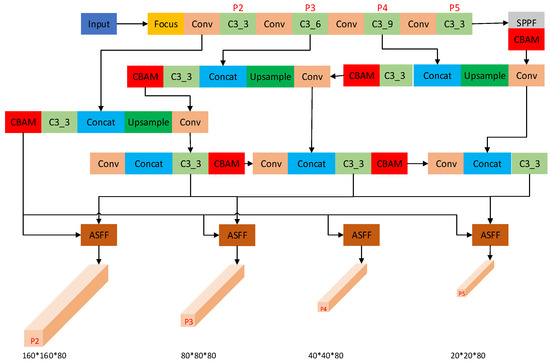

YOLOv5 stands as a cornerstone model in object detection, boasting three detection heads, each tailored to process specific scales of images. For instance, when presented with a 640 × 640 input image, the downsampling operation generates feature maps of sizes 80 × 80, 40 × 40, and 20 × 20, respectively. As a general principle, with increased downsampling, the receptive field expands, yet the challenge of detecting small objects concurrently escalates. The default detection head of YOLOv5 follows a conventional design, comprising a sequence of convolutional and pooling layers aimed at extracting target position and class information from the feature maps. Although this default detection head showcases commendable performance across most scenarios, it falls short in terms of a sufficiently expansive receptive field to effectively manage small objects or intricate backgrounds. Here, the term “receptive field” denotes the portion of the input image visible to a node within the neural network. In the context of small objects, inadequate receptive field coverage within the detection head translates to an inability to capture requisite contextual information, thereby resulting in misidentification or complete oversight of the target. Consequently, this paper advocates for the integration of larger detection heads, as illustrated in Figure 6.

Figure 6.

Improved YOLOv5 model.

Adding a detection head that operates on a 160 × 160 feature map (a downsampling factor of 4 for a 640 × 640 input) brings substantial advantages to this study because it has a sufficient receptive field, allowing it to attend to more regions of the input image and making it easier to discover the features of small target objects. This helps to better capture information about small targets, especially when they are located within complex backgrounds. Larger detection heads are typically able to extract more complex and high-level features, which are crucial for the localization and detection of small objects, and they help to capture the details and shape information of the target more accurately.
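The feature map sizes quoted above follow directly from the downsampling factors. The short sketch below reproduces that arithmetic; the stride values 8, 16, and 32 are inferred from the 80, 40, and 20 grids stated for a 640 × 640 input, and the stride of 4 for the added head is inferred from its 160 × 160 grid. The function name is hypothetical.

```python
def head_grid_sizes(input_size=640, strides=(4, 8, 16, 32)):
    """Side length of the feature map seen by each detection head."""
    return [input_size // stride for stride in strides]


def cells_per_head(input_size=640, strides=(4, 8, 16, 32)):
    """Number of grid cells per head; each cell covers stride x stride input pixels."""
    return [size * size for size in head_grid_sizes(input_size, strides)]
```

For a 640 × 640 input, the added head works on four times as many grid cells as the finest default head, so each cell covers a 4 × 4 pixel patch instead of 8 × 8.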

3. Experiment and Analysis of Improved YOLOv5 Algorithm

3.1. Experiment Preparation

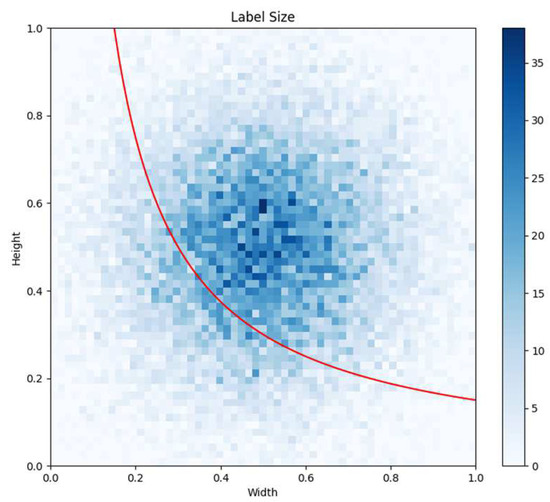

The dataset used in this experiment includes the VSD dataset [9] as well as experimental fire data provided by the Institute of Public Safety Research at Tsinghua University in Hefei. From these datasets, a total of 22,414 smoke images from various environments were selected and named DATA1. To evaluate the efficiency of the algorithm in detecting small smoke targets, all data were uniformly resized, and a two-dimensional histogram was plotted based on the respective Width and Height proportions. The small target dataset for this study selected data where smoke targets occupy less than 15% of the image area, represented by the data below the red line in Figure 7. This dataset is named DATA2 and includes 6781 images, where the red line is defined as $w \times h = 0.15$, with $w$ and $h$ the width and height of the target as proportions of the image width and height.
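The selection rule for the small target dataset can be sketched as a simple filter on normalized box sizes. The label layout (a mapping from image name to a list of width and height pairs) and the rule that every box in an image must be small are assumptions made for illustration; the source only states the 15% area criterion.

```python
def is_small_target(width, height, max_area_ratio=0.15):
    """width and height are box sizes normalized to [0, 1] by the image size."""
    return width * height < max_area_ratio


def select_small_target_images(labels, max_area_ratio=0.15):
    """Return the names of images whose boxes are all small targets.

    labels: dict mapping image name -> list of (width, height) pairs
    (a hypothetical layout for this sketch).
    """
    return [name for name, boxes in labels.items()
            if boxes and all(is_small_target(w, h, max_area_ratio) for w, h in boxes)]
```

For instance, a box covering 20% of the image width and 30% of its height occupies 6% of the area and is kept, while a box covering half of each dimension occupies 25% and is excluded.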

Figure 7.

Label Size.

In this study, the experimental system utilized Ubuntu 16.04.6 as the operating system. The environment configuration included CUDA Version 10.2, CUDNN 8.6.0, and PyTorch 1.9. The server configuration comprised an Intel i5-11600K processor, 16 GB of RAM, and an RTX 3060 Ti graphics card with 6 GB of VRAM. All models were run on the GPU, with 150 epochs to ensure complete convergence of the experimental data. The batch size was set to 8, and other parameters were kept at their default settings.

3.2. Experimental Evaluation Metrics

This study employed the following important evaluation metrics to measure the performance of the model in small object detection and complex environments. Firstly, precision (P) and recall (R) were used. Additionally, to better represent the accuracy and speed of the model, average precision (AP) and Frames Per Second (FPS) were introduced. Precision (P) measures the accuracy of the model’s positive predictions. Recall (R) reflects the comprehensiveness of the model’s coverage of positive samples, particularly in small object detection, where improving recall can better capture targets. AP, as one of the common metrics for object detection evaluation, helps assess the detection performance of the model for each class. Furthermore, this study also focuses on FPS, a critical performance indicator in practical applications. A high FPS indicates that the model can run quickly in real-time or high-speed scenarios, which is crucial for various applications. The formulas are as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\,dR$$

TP (True Positive) refers to samples correctly classified as positive, FP (False Positive) refers to samples incorrectly classified as positive, FN (False Negative) refers to samples incorrectly classified as negative, and TN (True Negative) refers to samples correctly classified as negative.
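As an illustration of how these metrics are computed in practice, the sketch below derives precision, recall, and AP from a ranked list of detections. The AP routine uses all-point interpolation of the precision-recall curve, which is one common convention and an assumption here; the source does not state which interpolation was used. Function names and the input layout are hypothetical.

```python
def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(detections, num_gt):
    """Area under the precision-recall curve.

    detections: list of (confidence, is_true_positive) pairs.
    num_gt: total number of ground truth boxes.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    points = []  # (recall, precision) after each detection, by descending confidence
    for _, hit in ranked:
        if hit:
            tp += 1
        else:
            fp += 1
        points.append((recall(tp, num_gt - tp), precision(tp, fp)))
    # Make precision non-increasing in recall (all-point interpolation).
    for k in range(len(points) - 2, -1, -1):
        points[k] = (points[k][0], max(points[k][1], points[k + 1][1]))
    ap, prev_recall = 0.0, 0.0
    for r, p in points:
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

Lowering the confidence threshold walks down the ranked list, which raises recall and usually lowers precision; AP summarizes that trade-off in a single number.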

3.3. Ablation Study

This study aims to evaluate the impact of improvement methods on the performance of the YOLOv5 model and to confirm the effectiveness of all improvement measures. For this purpose, five ablation experiments were designed, all conducted under the same environment and training conditions. The experimental results are shown in Table 2.

Table 2.

Ablation test results (AP, FPS).

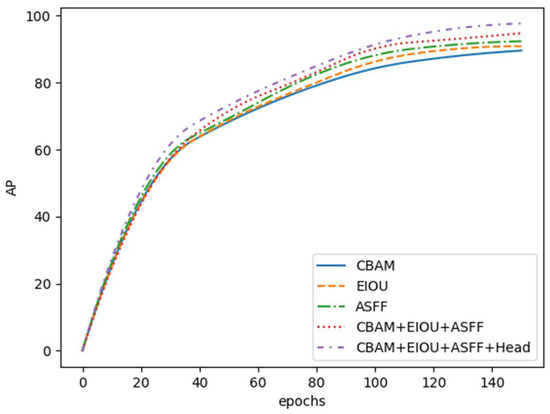

The results in Table 2 show that the modules introduced in this study substantially affect the performance of the YOLOv5 model in detecting small smoke targets. First, when CBAM and EIOU were introduced individually, FPS remained almost unchanged while accuracy improved substantially. This indicates that CBAM and EIOU can enhance the smoke detection accuracy of the model without compromising real-time performance. The convergence curves of each model are shown in Figure 8.

Figure 8.

Ablation experiment curve.

Furthermore, when the ASFF module was introduced alone, although there was a slight decrease in FPS, a substantial improvement in model accuracy was observed on both datasets (DATA1 and DATA2). The advantage of the ASFF module lies in its ability to effectively integrate features of various scales, thereby enhancing the detection performance of micro-smoke targets.

When the CBAM, EIOU, and ASFF modules were introduced simultaneously, the model’s accuracy further improved, with increases of 5.2% and 6.9% on the two datasets, respectively. This suggests that these three modules complement each other and work synergistically to substantially improve smoke detection performance. Although there was a slight decrease in FPS, this performance improvement makes the model more feasible for applications in complex scenarios.

Finally, improvements were made to the detection heads, further enhancing the model’s accuracy. Compared to the previous introduction of the three modules, the final improvement solution resulted in AP increases of 2.9% and 1.7% on the two datasets, respectively, and an accuracy improvement of 8.1% and 8.6% compared to the YOLOv5 model.

In summary, this study has made substantial progress in smoke detection accuracy while meeting real-time requirements. By introducing CBAM, EIOU, ASFF, and detection head improvements, this study has successfully improved the robustness and accuracy of the smoke detection model in complex scenarios.

3.4. Comparative Experiment

To validate the differences between the experimental results of this study and other object detection algorithms, experiments were conducted with different object detection models under the same datasets and environment, as shown in Table 3.

Table 3.

Comparison of different object detection models.

Firstly, on the DATA1 dataset, compared to traditional Faster R-CNN and RetinaNet, the improved methods in this study achieved accuracy increases of 1.6% and 5.3%, respectively. This indicates that the model proposed in this study exhibits superior performance in smoke detection tasks in complex scenarios, enabling more accurate detection and localization of smoke targets.

On the DATA2 dataset, the improved methods achieved even more substantial accuracy improvements, reaching 6.9% and 12.9%, respectively. This suggests that the model proposed in this study shows strong potential in detecting small smoke targets, effectively handling smaller-sized smoke targets, and improving detection accuracy.

These results support the effectiveness of the proposed improvement methods, especially in complex scenarios and for small smoke targets. The improved model offers higher precision and feasibility for fire smoke detection and is expected to play an important role in practical applications. Some of the smoke detection results are shown in Figure 9 and Figure 10. Figure 10 demonstrates how the improved model effectively reduces the previously observed false positives and false negatives.

Figure 9.

Smoke detection images from DATA1.

Figure 10.

Smoke detection images from DATA2.

4. Feasibility Verification of Improved YOLOv5 Algorithm Deployment

4.1. Temperature-Based Threshold Detection Algorithm

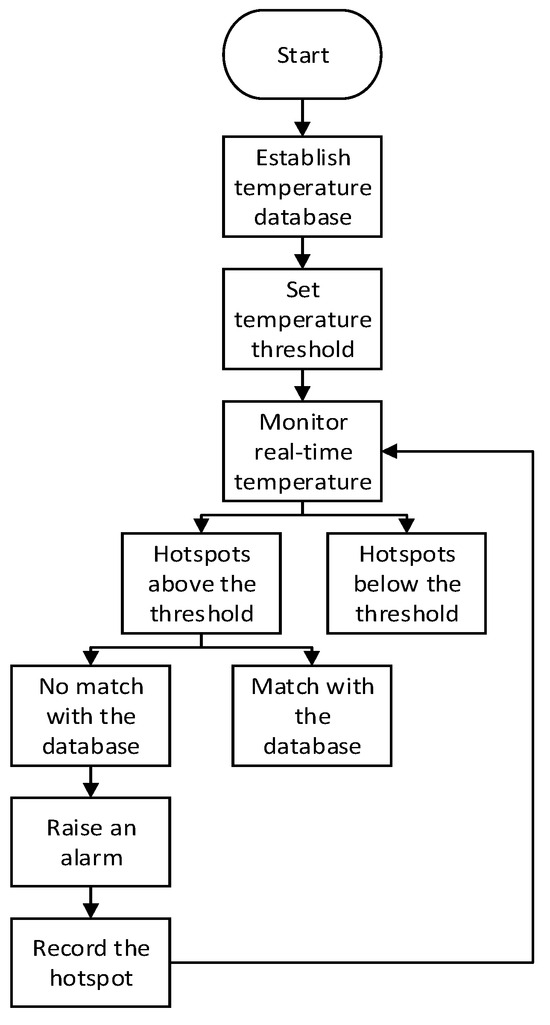

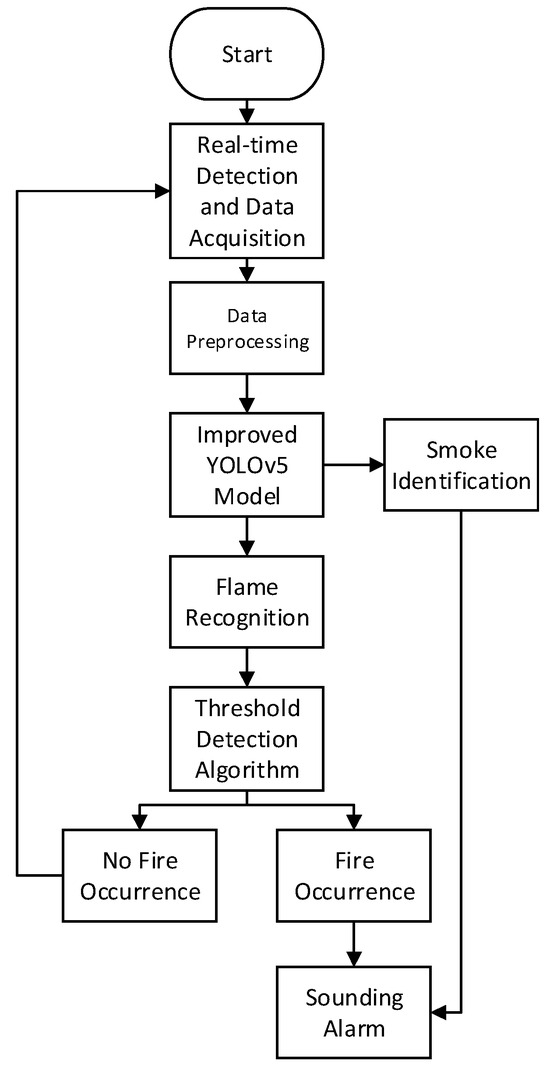

The threshold-based hotspot detection algorithm is a simple and intuitive method suitable for quickly detecting temperature anomalies. Its flowchart is shown in Figure 11.

Figure 11.

Threshold Detection Algorithm Flow.

- Establish a local temperature database: Firstly, process the temperature data collected in the target area to establish a temperature model. Secondly, within a certain time period, record the coordinates, range, temperature, and other data of fire source points that appear above the threshold temperature.

- Analyze the established temperature model and set the threshold value according to specific requirements. The threshold is likely to vary depending on the scene.

- Compare the collected temperature data with the threshold: If the temperature is higher than the high-temperature threshold, it is marked as an abnormal hotspot.

- Compare the detected abnormal hotspots with the fire source points recorded in the database: If the coordinates, range, temperature, and other data of the abnormal hotspot match the data of existing fire source points within a certain time period, no alarm is issued, and the lifespan of the fire source point is updated. If the abnormal hotspot does not match successfully, an alarm is issued, and the new fire source point is recorded in the local temperature database. This ensures that the model does not continuously issue alarm messages for normal sources of fire, such as candles or oil lamps, and the lifespan concept also ensures the robustness of the algorithm.

- Update the local temperature database: Update all fire source points in the database and delete the information of fire source points with insufficient lifespan.
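The steps above can be sketched as a small class that keeps the local temperature database in memory. This is an illustrative simplification, not the deployed implementation: hotspots are matched on coordinates only (the text also mentions range and temperature), the lifespan is counted in frames, and the default values of `threshold`, `match_radius`, and `lifespan` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FireSource:
    x: float
    y: float
    temperature: float
    lifespan: int  # remaining frames before the record is dropped


class HotspotDetector:
    """Threshold detection with a local database of known fire sources."""

    def __init__(self, threshold=150.0, match_radius=10.0, lifespan=30):
        self.threshold = threshold
        self.match_radius = match_radius
        self.lifespan = lifespan
        self.database = []

    def _match(self, x, y):
        for source in self.database:
            if abs(source.x - x) <= self.match_radius and abs(source.y - y) <= self.match_radius:
                return source
        return None

    def process_frame(self, readings):
        """readings: list of (x, y, temperature). Returns hotspots that raise an alarm."""
        alarms = []
        # Age every known source; matched ones are refreshed below.
        for source in self.database:
            source.lifespan -= 1
        for x, y, temp in readings:
            if temp <= self.threshold:
                continue  # not above the high-temperature threshold
            known = self._match(x, y)
            if known is not None:
                known.lifespan = self.lifespan  # known source: refresh, no alarm
                known.temperature = temp
            else:
                alarms.append((x, y, temp))  # new hotspot: alarm and record it
                self.database.append(FireSource(x, y, temp, self.lifespan))
        # Delete sources whose lifespan has run out.
        self.database = [s for s in self.database if s.lifespan > 0]
        return alarms
```

In this sketch a candle that stays in place raises one alarm when it first appears and none afterwards, because each later frame matches it to its database record and refreshes the lifespan. Once it has been absent for `lifespan` frames the record is deleted, so a later hotspot at the same position is treated as new.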

The setting of the threshold may be influenced by factors such as environmental changes and sensor errors, requiring repeated adjustment and optimization. However, the temperature-based setting makes the algorithm simpler, more intuitive, and easier to implement and understand.

4.2. Algorithm Integration

During the software integration phase of the experimental deployment, integrating YOLOv5 with the temperature-based threshold detection algorithm is a crucial step to ensure the collaborative operation of the system. The purpose of this integration is to comprehensively monitor and accurately identify fire scenes by linking various algorithms in series. The detailed description of the entire integration process is shown in Figure 12.

Figure 12.

Algorithm Integration.

- Real-time Data Collection from Cameras: Data within the experimental monitoring and collection scene are captured. By using a thermal surveillance camera, the target scene is monitored in real-time. This thermal surveillance camera efficiently captures temperature matrix data around the fire source while also collecting real-time video surveillance data.

- Real-time Data Decryption and Preprocessing: Since the collected fire videos may need to be decrypted, this step ensures the integrity of the data. This step involves decrypting the data and preprocessing the raw data to make them suitable for subsequent algorithmic inputs.

- Improved YOLOv5 Model: The decrypted and preprocessed data are transmitted to the improved YOLOv5 model for target detection. If smoke is identified, an alarm is triggered directly; if a flame is identified, the algorithm continues to the next step to prevent false alarms.

- Threshold Detection Algorithm: This threshold detection algorithm judges whether there are hotspot anomalies based on preset thresholds. This step helps reduce false alarm rates and reduces interference from normal fire sources within the scene.

- Result Output: The results are returned, indicating the accurate positions of targets such as fire sources and smoke, along with judgments on the presence of fire sources. The output of this integrated system can be used for real-time fire monitoring, early warning, and taking corresponding response measures.
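The decision logic of the integrated pipeline can be summarized in a single function. The sketch below assumes the detector output has been reduced to a set of class names and that the threshold algorithm returns a list of new abnormal hotspots; both input formats, the class names `"smoke"` and `"fire"`, and the function name are hypothetical.

```python
def fuse_detections(yolo_labels, hotspot_alarms):
    """Decide whether to raise an alarm for one frame.

    yolo_labels: set of class names reported by the detector.
    hotspot_alarms: new abnormal hotspots reported by the threshold algorithm.
    Returns (alarm, reason).
    """
    if "smoke" in yolo_labels:
        # Smoke triggers the alarm directly.
        return True, "smoke detected"
    if "fire" in yolo_labels:
        # A flame alone is not enough: it must coincide with a new hotspot.
        if hotspot_alarms:
            return True, "flame confirmed by new hotspot"
        return False, "flame not confirmed by a new hotspot"
    return False, "no target"
```

Chaining the two algorithms in this order means the temperature check only has to run when a flame is reported, and a visible flame from a known source such as a candle does not raise an alarm on its own.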

4.3. Feasibility Verification Experimental Results

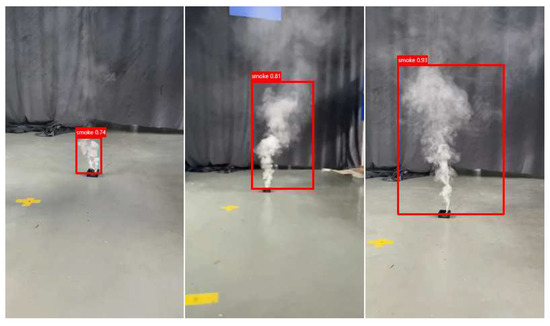

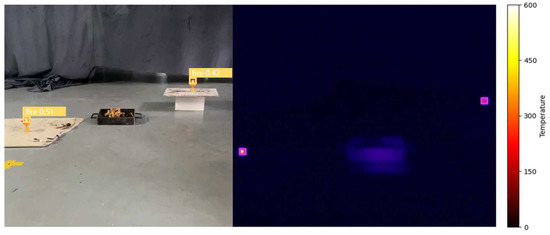

For the feasibility validation of deploying the improved YOLOv5 model, this study employed simulated experiments. In the first stage, smoke targets were simulated using burning smoke cakes. As shown in Figure 13, early-stage fire smoke targets were relatively small, but the improved YOLOv5 was still able to detect them.

Figure 13.

Displaying smoke recognition results based on the YOLOv5 model.

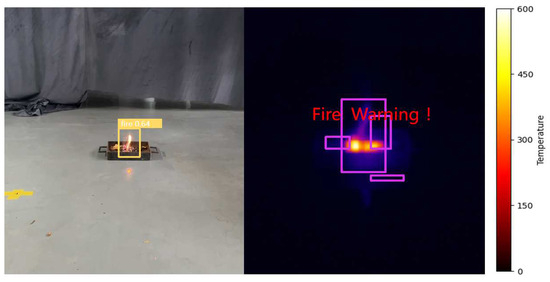

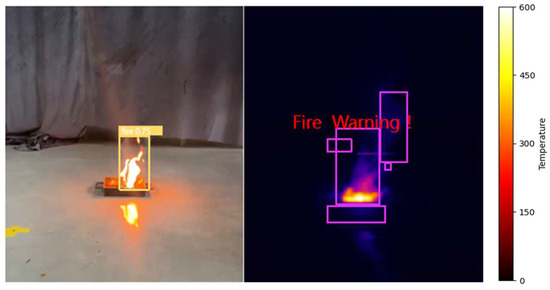

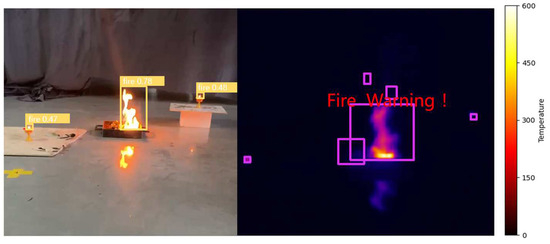

In the second stage, the spread of flames was simulated using tobacco soaked in liquid fuel. As shown in Figure 14, although the initial fire source was small, it was clearly visible in the thermal image captured by the thermal surveillance camera. The target box on the left side of the image marks the flame recognized by the improved YOLOv5, while the target box on the right side, in the heat map, marks the abnormal hot spot identified by the temperature-based threshold detection algorithm introduced in this study. As shown in Figure 15, as the fire gradually expanded, the final algorithm developed in this study continued to perform well in both target recognition and flame feature extraction.

Figure 14.

Fire source detected.

Figure 15.

Fire expanding.

During combustion, airflow carries away partially burned remnants that retain some residual heat. Therefore, in addition to the box around the main fire source, the final thermal image contains some small additional annotation boxes. These small annotation boxes do not affect the experimental results.
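
To illustrate how a main hot-spot box and several smaller boxes can arise from one temperature matrix, the sketch below thresholds the matrix and draws one bounding box per 4-connected hot region, largest region first. It is a hedged example only: the function name `hotspot_boxes`, the 4-connectivity rule, and the threshold value are assumptions, and the study does not state how its annotation boxes are computed.

```python
from collections import deque
from typing import List, Sequence, Tuple

# (row_min, col_min, row_max, col_max), all inclusive
Box = Tuple[int, int, int, int]


def hotspot_boxes(temps: Sequence[Sequence[float]], threshold: float) -> List[Box]:
    """Bounding boxes of 4-connected regions at or above threshold, largest first."""
    rows = len(temps)
    cols = len(temps[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    regions = []  # list of (cell_count, box)
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or temps[r][c] < threshold:
                continue
            # Breadth-first search over one connected hot region.
            seen[r][c] = True
            queue = deque([(r, c)])
            r0, c0, r1, c1, count = r, c, r, c, 0
            while queue:
                y, x = queue.popleft()
                count += 1
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and temps[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append((count, (r0, c0, r1, c1)))
    regions.sort(key=lambda item: item[0], reverse=True)
    return [box for _, box in regions]


# Toy matrix: one 2x2 fire source and one isolated warm remnant.
grid = [
    [20, 20, 20, 20, 20],
    [20, 300, 310, 20, 20],
    [20, 305, 320, 20, 120],
    [20, 20, 20, 20, 20],
]
print(hotspot_boxes(grid, threshold=100.0))  # [(1, 1, 2, 2), (2, 4, 2, 4)]
```

In this toy matrix the first box corresponds to the main fire source and the second to a single warm cell, analogous to the small additional boxes caused by hot remnants.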

In the third stage, building on the second stage, burning candles were introduced as interference sources. As shown in Figure 16, although the candle flames were recognized as fire targets by the algorithm, the fire detection system did not issue an alarm because of the correction applied by the flame feature algorithm. In Figure 16, the two red boxes mark the candle targets. When a new fire source appeared and spread, as shown in Figure 17, the fire detection system successfully identified the fire and issued an alarm.

Figure 16.

Introducing burning candles.

Figure 17.

New fire source emerges.

The experiment was divided into three groups. The first group, named HK, used the fire alarm mode built into the camera without any additional algorithms. The second group, named HK-YOLO, added the improved YOLOv5 algorithm to the configuration of the first group. The third group, named HK-YOLO-TEMP, used the final algorithm, which combines the improved YOLOv5 with the threshold detection algorithm.

Data collection lasted for 10 min, with two frames extracted per second for detection, resulting in a total of 1200 sets of experimental data. The final experimental results are as follows (Table 4).
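
The sample count follows directly from the collection time and the extraction rate. The short sketch below reproduces that arithmetic; the function name `sample_times` and the assumption that frames are extracted at evenly spaced timestamps are illustrative and are not stated in this study.

```python
from typing import List


def sample_times(duration_s: int, samples_per_second: int) -> List[float]:
    """Evenly spaced timestamps (in seconds) at which frames are extracted."""
    step = 1.0 / samples_per_second
    return [i * step for i in range(duration_s * samples_per_second)]


# 10 minutes of collection at two frames per second.
times = sample_times(duration_s=10 * 60, samples_per_second=2)
print(len(times))  # 1200
```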

Table 4.

Test experiment results.

As shown in Table 4, the numbers of false positives for the HK, HK-YOLO, and HK-YOLO-TEMP methods are 131, 78, and 17, respectively, and the corresponding detection accuracies are 86.6%, 95.6%, and 98.5%. The HK-YOLO-TEMP method runs at a frame rate of 57 frames per second. These experimental results demonstrate that the improved YOLOv5 algorithm meets the deployment requirements, improving accuracy while ensuring timely responsiveness.
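
A frame rate of this kind can be measured by timing the complete processing loop. The following is a minimal sketch of one way to do so, assuming a hypothetical `process_frame` callable that stands in for the full detection pipeline; it does not reproduce the measurement procedure used in this study.

```python
import time
from typing import Callable, Iterable


def measure_fps(process_frame: Callable[[object], object],
                frames: Iterable[object]) -> float:
    """Average number of frames processed per second over the whole sequence."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


def dummy_pipeline(frame: object) -> int:
    """Stand-in workload; a real pipeline would run detection and the threshold check."""
    return sum(range(1000))


print(f"{measure_fps(dummy_pipeline, range(200)):.1f} frames per second")
```

At 57 frames per second, the average time available per frame is about 17.5 ms (1000 ms / 57).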

5. Conclusions

This study conducted an in-depth exploration and optimization of the YOLOv5 algorithm for the early detection of small smoke targets in complex environments. The research outcomes are summarized as follows:

- The impact of attention mechanisms on smoke feature extraction accuracy was investigated. Experimental comparisons showed that embedding the CBAM module into the C3_3 module of the SPPF module and the Neck network yielded the most substantial improvement, increasing precision by 2% on the standard dataset and by 1.4% on the small target dataset.

- The traditional YOLOv5 network structure was improved by incorporating CBAM and ASFF modules, as well as enhancing loss functions and detection modules. These enhancements led to an 8.1% increase in Average Precision (AP) on the standard dataset DATA1 and an 8.6% increase on the small target dataset DATA2.

- The improved YOLOv5 algorithm was combined with a temperature-based threshold detection algorithm, achieving a frame rate of 57 frames per second during actual deployment. The experimental results showed high accuracy and reliability, confirming both the improved precision of the algorithm and the feasibility of deployment.

These experimental results demonstrate improved early fire smoke detection and warning capabilities, as well as substantial improvements in the algorithm’s robustness.

Author Contributions

Conceptualization, J.W.; Software, Y.T.; Validation, Y.T.; Data curation, J.R.; Writing—original draft, Y.T.; Writing—review & editing, Y.H.; Visualization, Y.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Plan Project of the Fire and Rescue Department, Ministry of Emergency Management, grant number 2020XFCX30, and the Science and Technology Plan Project of the National Fire and Rescue Administration, grant number 2023XFCX22.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are not publicly available due to commercial confidentiality, as they contain information that could compromise the privacy of research participants.

Acknowledgments

The authors express deep gratitude for the support of Anhui Construction Engineering Group Co., Ltd. and Anhui Jianke Construction Supervision Co., Ltd.

Conflicts of Interest

The authors declare no conflicts of interest.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).