Camera-Based Lane Detection—Can Yellow Road Markings Facilitate Automated Driving in Snow?

Abstract

1. Introduction

2. Background

2.1. Image-Based Lane Detection

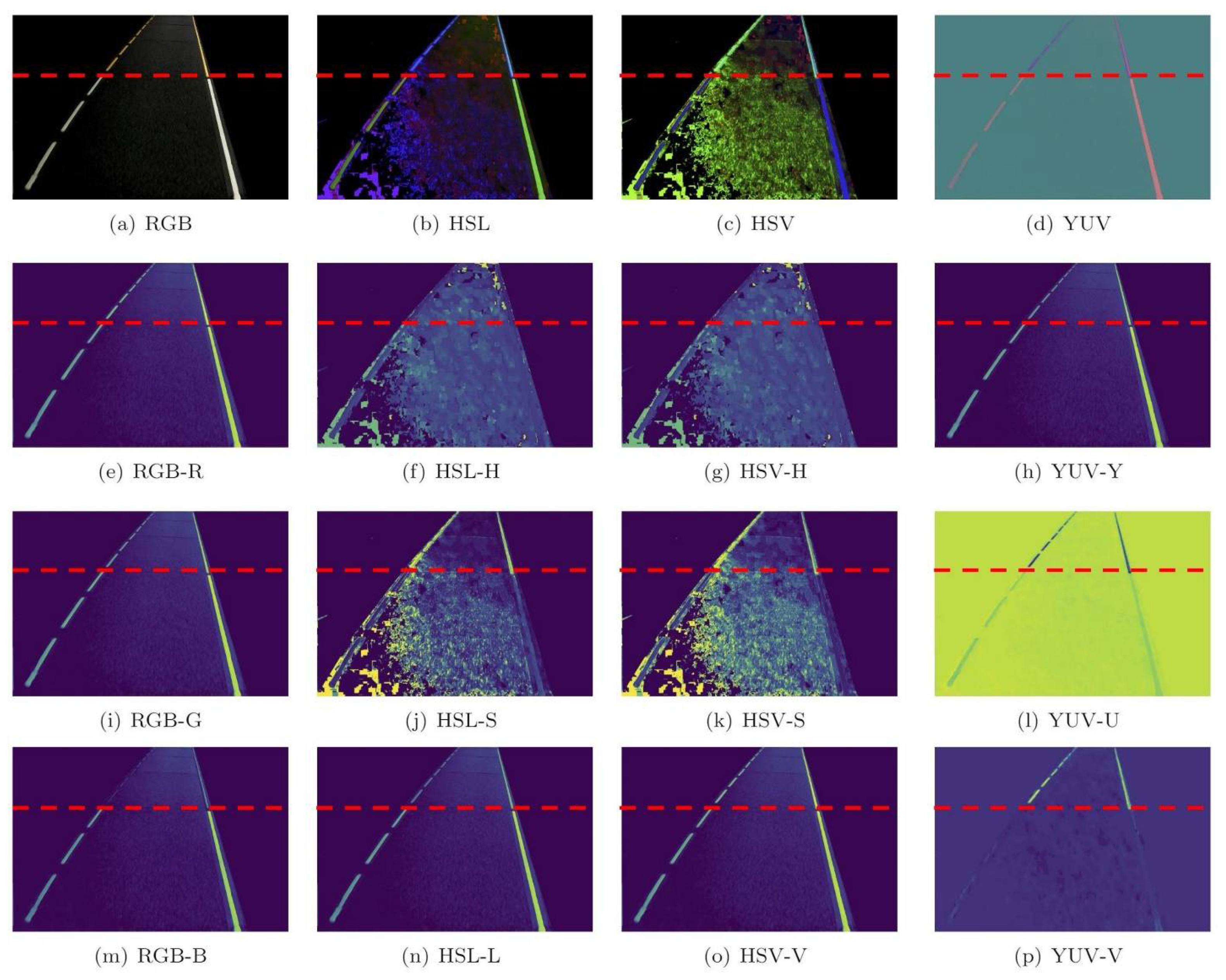

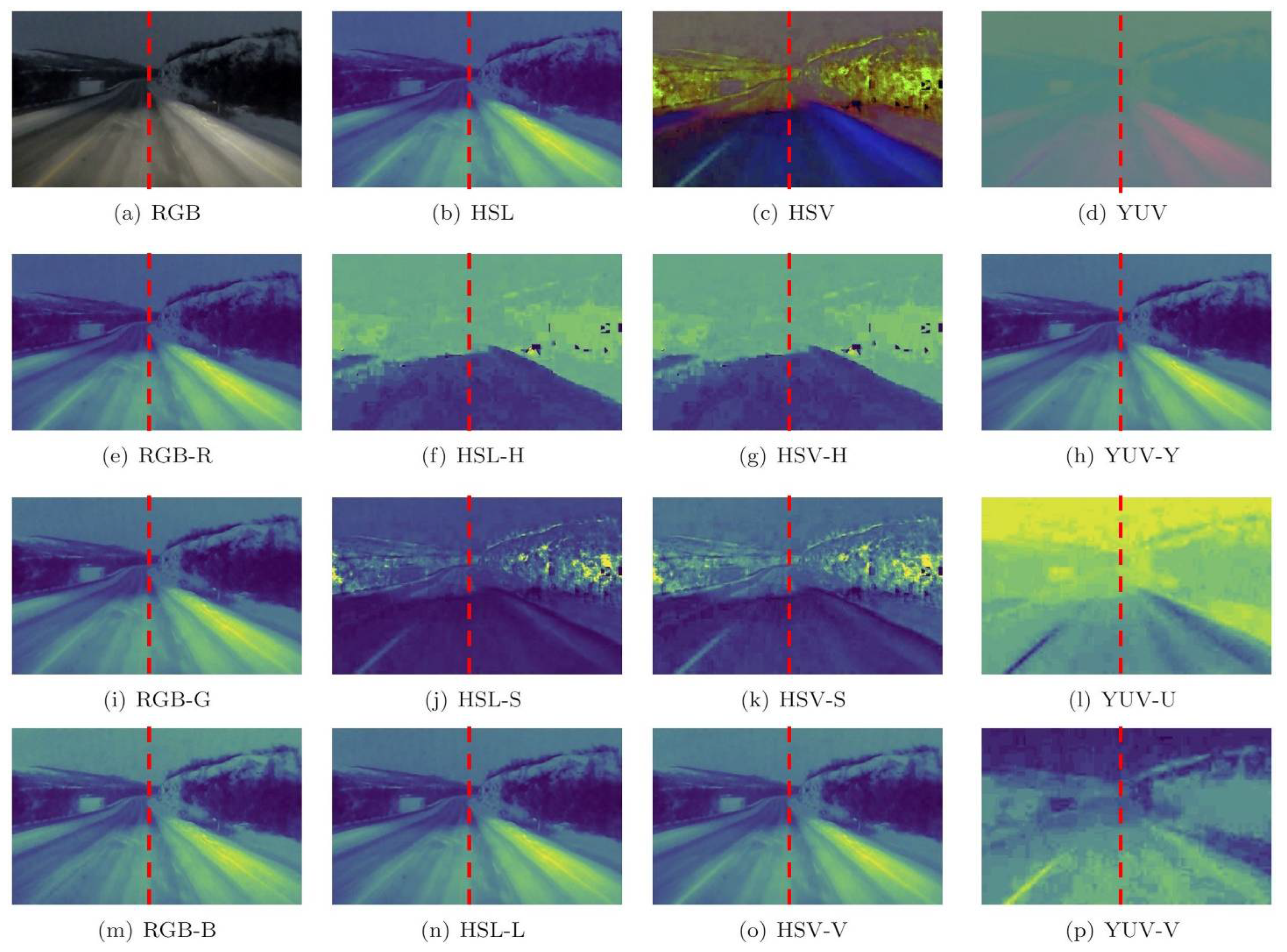

2.2. Color Spaces

3. Materials and Methods

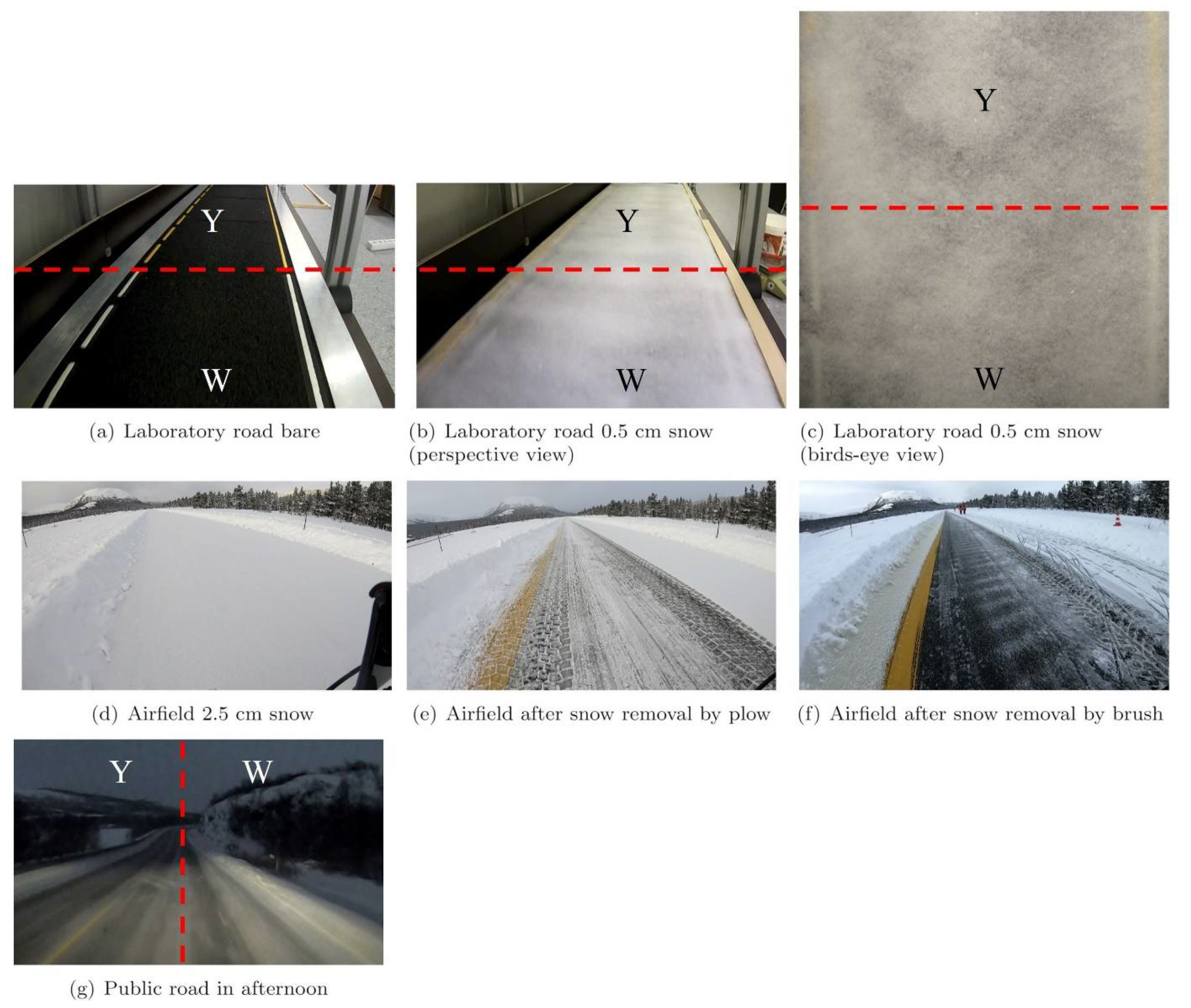

3.1. Image Capture Procedure

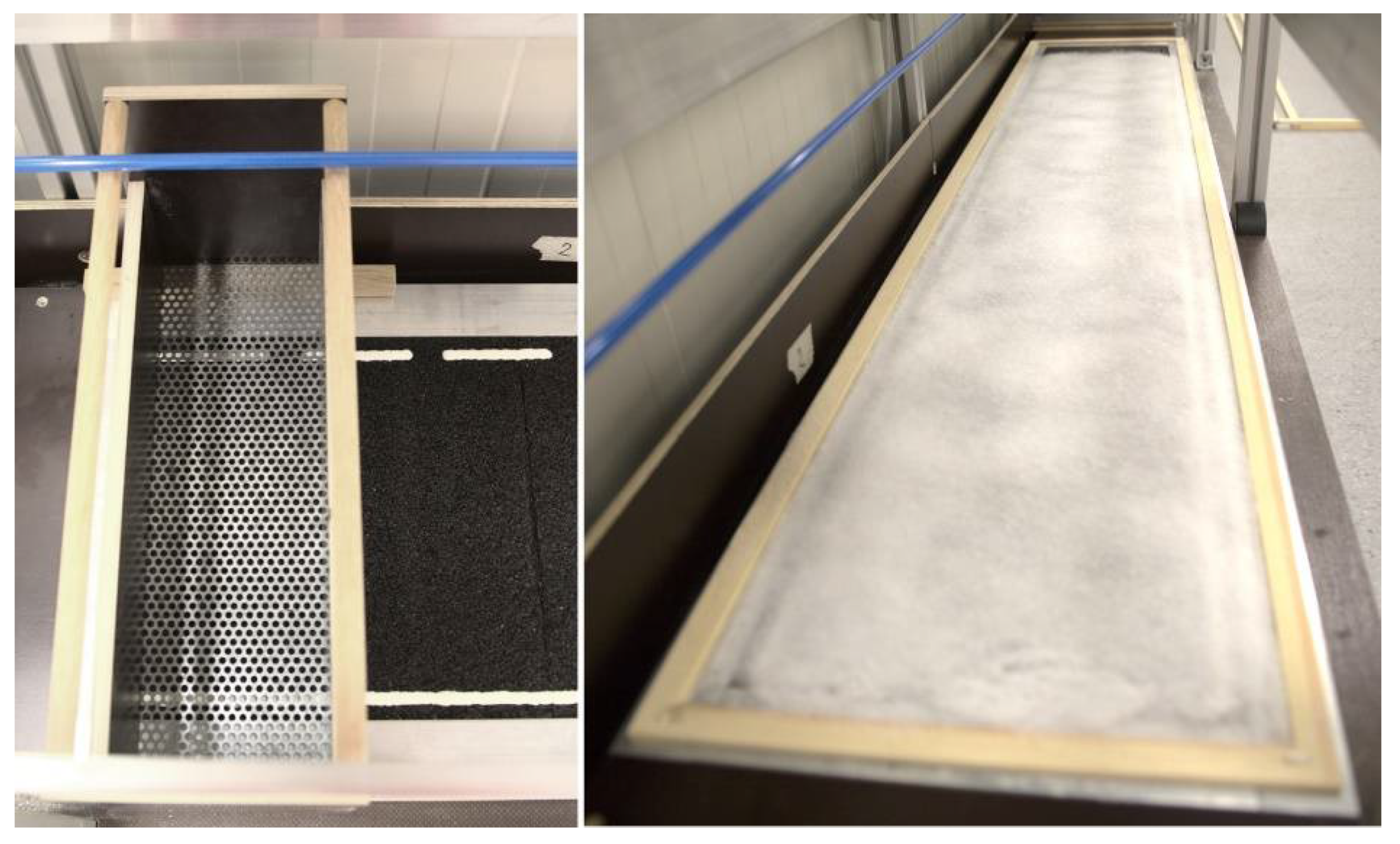

3.1.1. Laboratory Image Capture

Laboratory Snow Production and Application

3.1.2. Airfield Test Track Image Capture

3.1.3. Public Road Image Capture

3.2. Lane Detection Procedure

4. Results

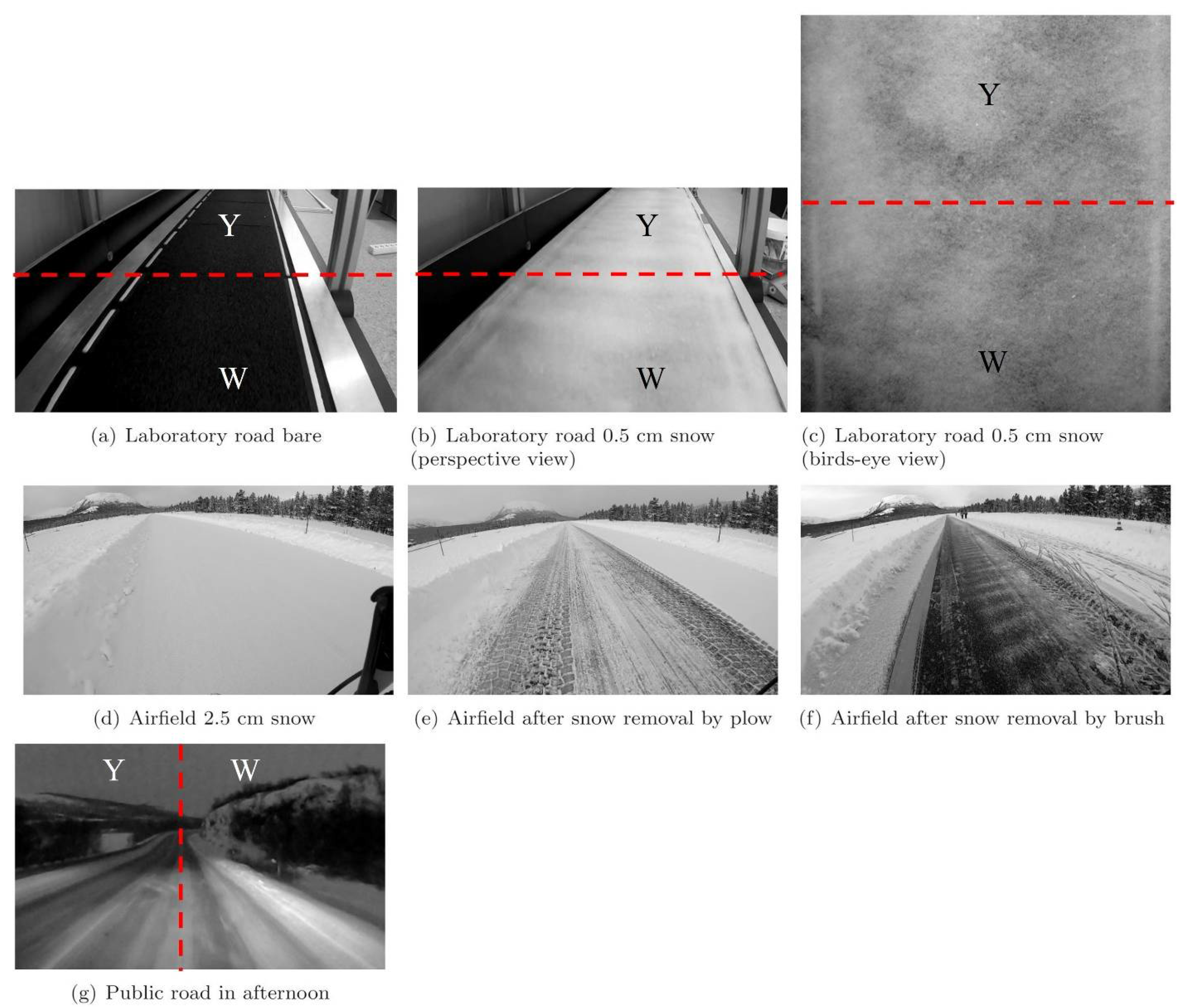

4.1. Grayscale Representation

4.2. Color Space Representation

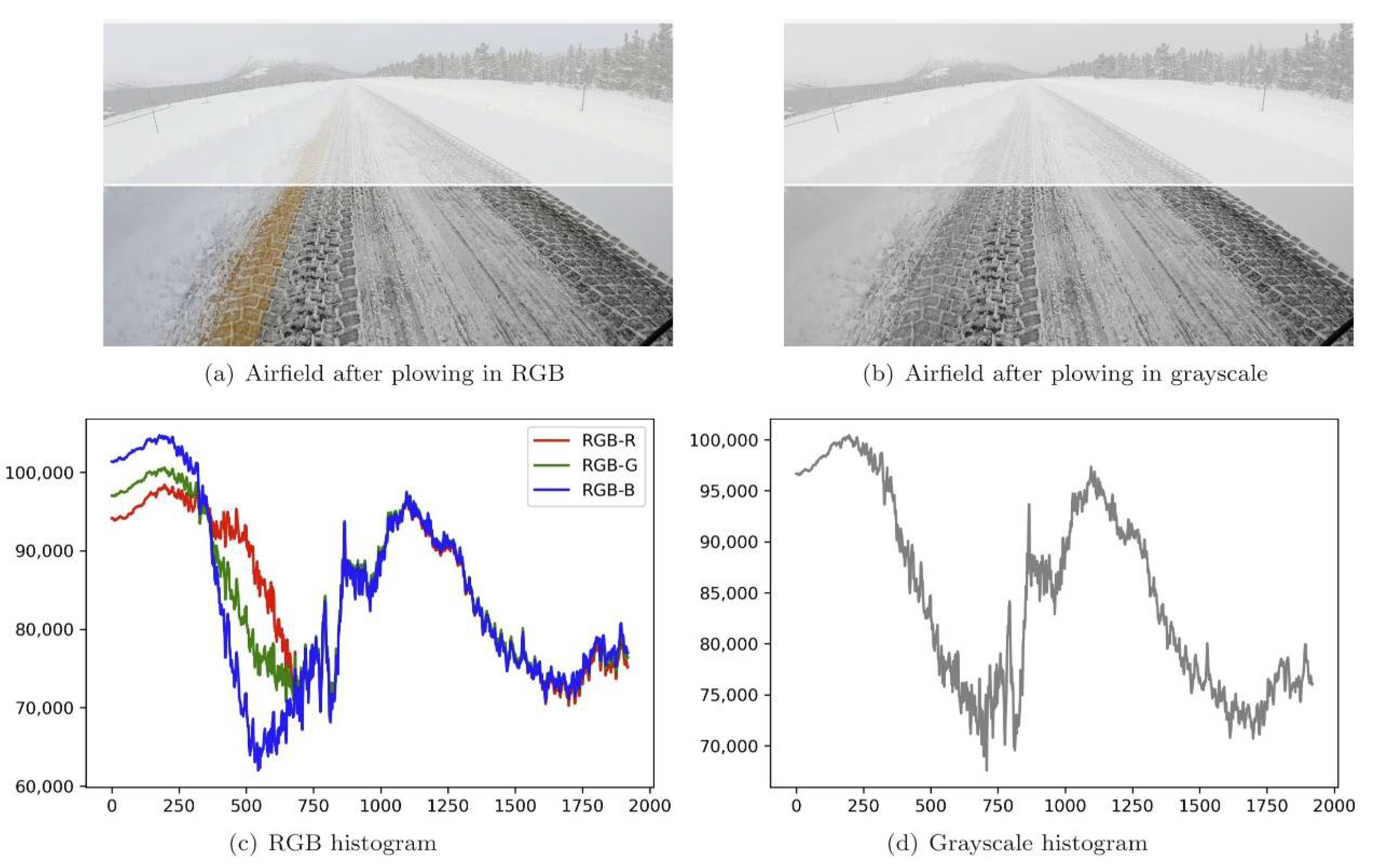

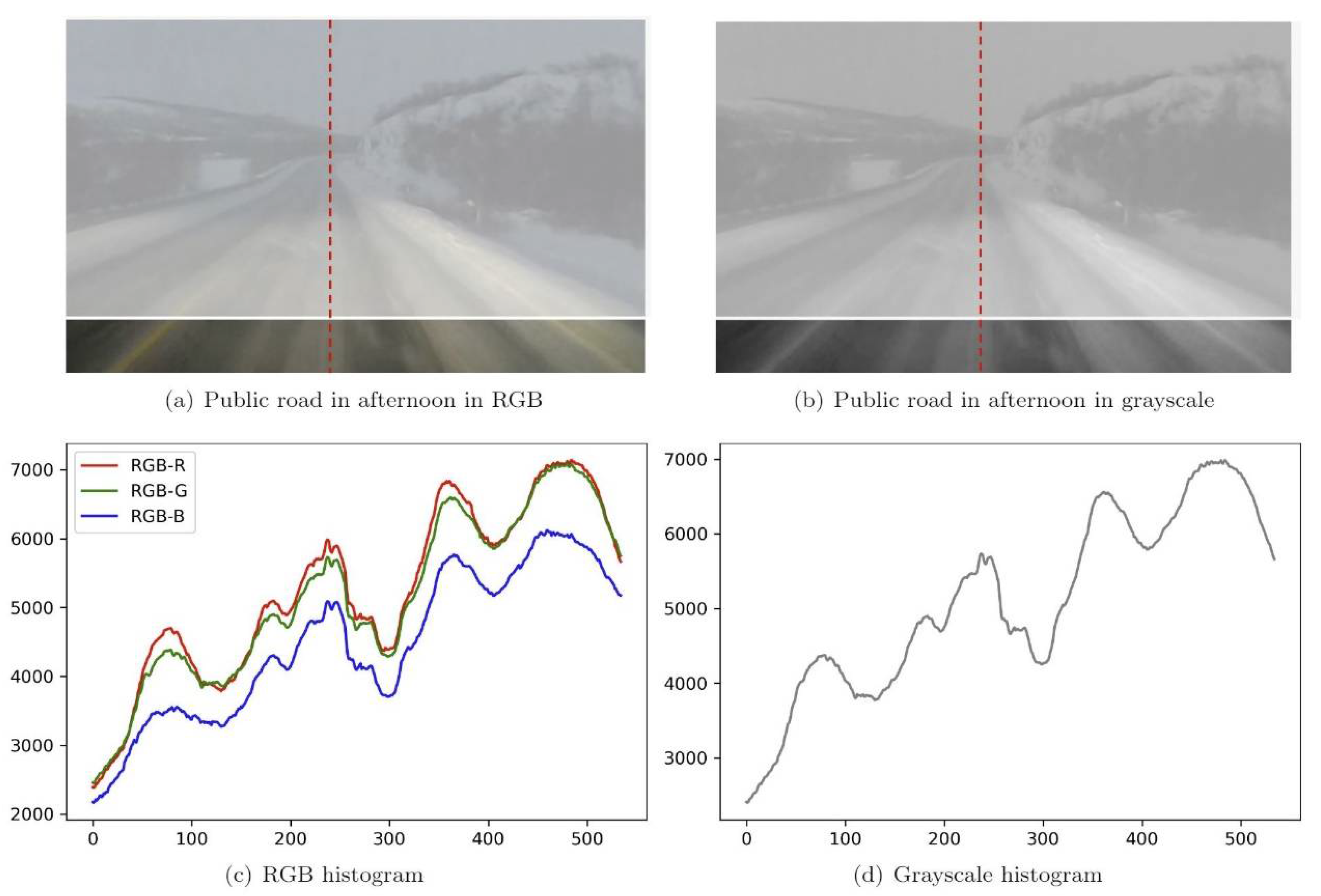

4.3. Histograms of Pixel Values

4.3.1. The Laboratory Road with a 0.5 cm Layer of Snow (Bird’s-Eye View)

4.3.2. The Plowed Airfield

4.3.3. The Brushed Airfield

4.3.4. The Public Road in the Afternoon

5. Discussion

6. Conclusions

- A more comprehensive investigation of the effect of snow depth on camera-based lane detection;

- The effectiveness of different winter maintenance approaches, including the effect of salting on the visibility of road markings in snowy conditions;

- The effect of different camera characteristics and the position of the camera on the accuracy of automated lane detection;

- How different types of road markings, e.g., color and thickness, affect camera-based lane detection;

- How different types of road surfaces, e.g., color and texture, affect camera-based lane detection.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| ACC | Adaptive Cruise Control |

| ADAS | Advanced Driver Assistance Systems |

| ADS | Automated Driving Systems |

| LoG | Laplacian of Gaussian |

| LDW | Lane Departure Warning |

| RGB | Red, Green and Blue (color space) |

| HSL | Hue, Saturation and Lightness (color space) |

| HSL-H | H channel of the HSL color space |

| HSL-S | S channel of the HSL color space |

| HSL-L | L channel of the HSL color space |

| HSV | Hue, Saturation and Value (color space) |

| HSV-H | H channel of the HSV color space |

| HSV-S | S channel of the HSV color space |

| HSV-V | V channel of the HSV color space |

| YUV | Luminance independent of color, blue luminance, red luminance (color space) |

| YUV-Y | Y channel of the YUV color space |

| YUV-U | U channel of the YUV color space |

| YUV-V | V channel of the YUV color space |
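The channel abbreviations above can be made concrete with a small sketch. The code below splits a single 8-bit RGB pixel into the HSL, HSV and YUV channels listed in the table. It is an illustration only: the function name `channels`, the two sample pixel values and the BT.601-style YUV weights are assumptions made for this example, not values or conversions taken from the study.

```python
import colorsys


def channels(r, g, b):
    """Split one 8-bit RGB pixel into the abbreviated channels, each as a float.

    Hypothetical helper for illustration; not the conversion used in the study.
    """
    rf, gf, bf = r / 255.0, g / 255.0, b / 255.0

    # colorsys returns hue, lightness, saturation in that order (HLS, not HSL).
    hsl_h, hsl_l, hsl_s = colorsys.rgb_to_hls(rf, gf, bf)
    hsv_h, hsv_s, hsv_v = colorsys.rgb_to_hsv(rf, gf, bf)

    # Assumed BT.601-style weights; other YUV variants use different constants.
    yuv_y = 0.299 * rf + 0.587 * gf + 0.114 * bf
    yuv_u = 0.492 * (bf - yuv_y)
    yuv_v = 0.877 * (rf - yuv_y)

    return {
        "RGB-R": rf, "RGB-G": gf, "RGB-B": bf,
        "HSL-H": hsl_h, "HSL-S": hsl_s, "HSL-L": hsl_l,
        "HSV-H": hsv_h, "HSV-S": hsv_s, "HSV-V": hsv_v,
        "YUV-Y": yuv_y, "YUV-U": yuv_u, "YUV-V": yuv_v,
    }


# Illustrative pixel values only (not measured from any image in the study).
white = channels(255, 255, 255)
yellow = channels(255, 200, 0)

for name in ("HSL-S", "HSV-S", "YUV-Y", "YUV-U"):
    print(f"{name}: white={white[name]:.3f} yellow={yellow[name]:.3f}")
```

In this sketch a pure white pixel has zero saturation in both HSL and HSV, while the sample yellow pixel is fully saturated and has a negative YUV-U value, which is the kind of channel-level separation that the per-channel comparisons in the Results headings refer to.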


| Case | Description | White markings | Yellow markings | Camera |
|---|---|---|---|---|
| a | Laboratory, 1:10 road model, bare road | Yes | Yes | GoPro Hero7 |
| b | Laboratory, 1:10 road model, 0.5 cm snow, rear-view mirror perspective | Yes | Yes | GoPro Hero7 |
| c | Laboratory, 1:10 road model, 0.5 cm snow, bird’s-eye perspective | Yes | Yes | Canon EOS 5D |
| d | Airfield strip, 2.5 cm snow | No | Yes | GoPro Hero7 |
| e | Airfield strip, plowed | No | Yes | GoPro Hero7 |
| f | Airfield strip, brushed | No | Yes | GoPro Hero7 |
| g | Public road in the afternoon (low ambient light) | Yes | Yes | GoPro Hero7 |

[Image tables: the cases (Lab 0.5 cm snow white, Lab 0.5 cm snow yellow, Airfield plowed, Airfield brushed, Public road white, Public road yellow) shown in each representation: Gray, RGB-R, RGB-G, RGB-B, HSL-H, HSL-S, HSL-L, HSV-H, HSV-S, HSV-V, YUV-Y, YUV-U, YUV-V.]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Storsæter, A.D.; Pitera, K.; McCormack, E. Camera-Based Lane Detection—Can Yellow Road Markings Facilitate Automated Driving in Snow? Vehicles 2021, 3, 661-690. https://doi.org/10.3390/vehicles3040040
