An Intelligent Baby Monitor with Automatic Sleeping Posture Detection and Notification

Abstract

1. Introduction

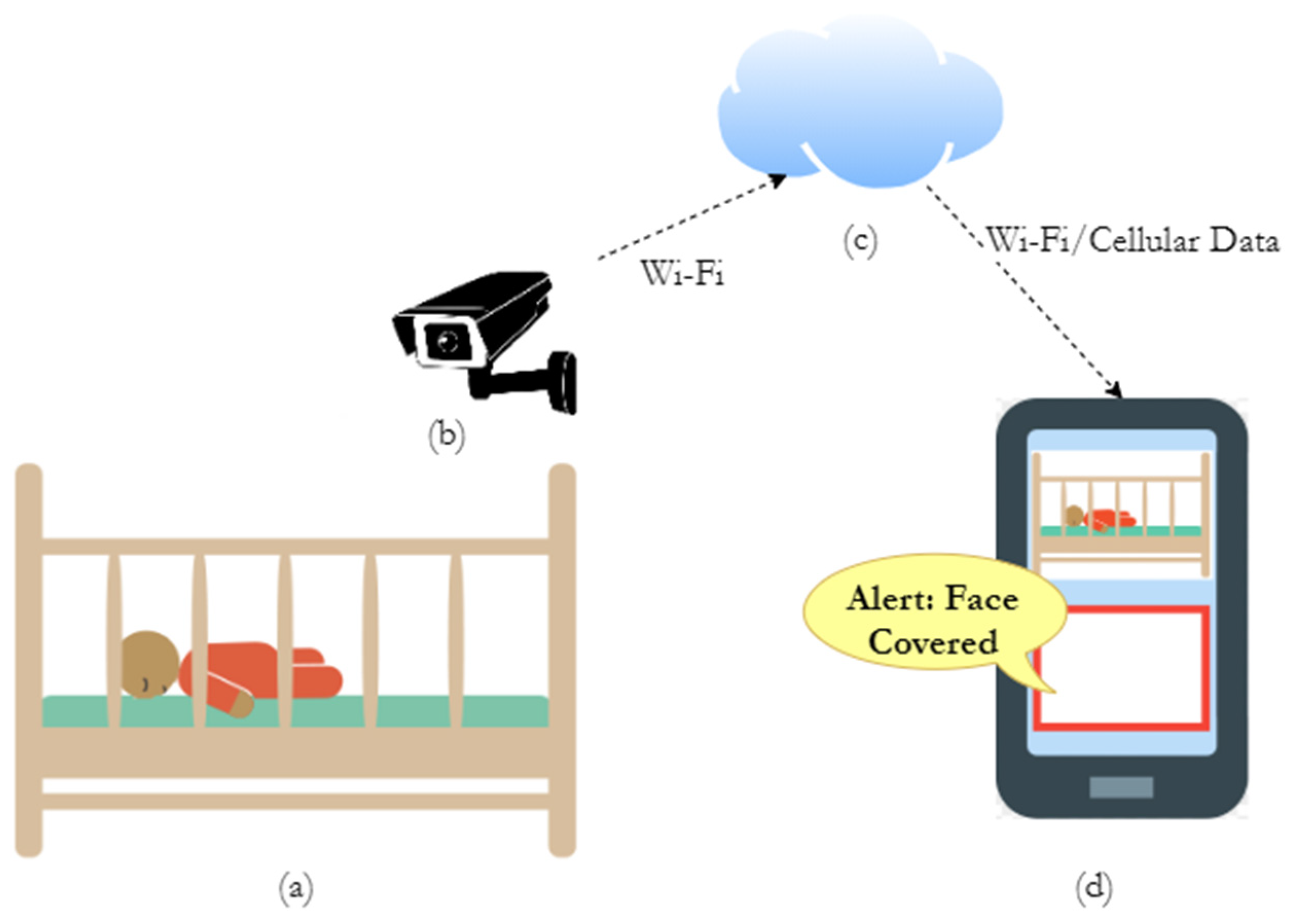

- In 2018, in the USA, about 1300 babies died of sudden infant death syndrome (SIDS), about 1300 deaths were due to unknown causes, and about 800 deaths were caused by accidental suffocation and strangulation in bed [1]. Babies who sleep on their stomachs are at higher risk of SIDS, because this position can reduce how much air they breathe. The American Academy of Pediatrics recommends that babies sleep only on their backs through the first year [2], since back sleeping improves airflow. To reduce the risk of SIDS further, the baby's face should stay uncovered, and body temperature should be kept at an appropriate level [3]. The proposed baby monitor automatically detects these harmful postures and notifies the caregiver, which can help reduce the risk of SIDS.

- Babies, especially those four months or older, move frequently during sleep and can kick off their blankets [4]. The proposed system alerts the caregiver when the baby is moving frequently or when the blanket has been removed, which helps keep the baby warm.

- Babies may wake up in the middle of the night because of hunger or pain, or just to play with a parent. There is an increasing call in the medical community to pay attention to parents when they say their babies do not sleep [5]. The smart baby monitor detects whether the baby's eyes are open and sends an alert, so the parents know when the baby is awake even if he or she is not crying.

- When a baby sleeps in a different room, caregivers need to check on the baby at regular intervals. Parents lose an average of six months' sleep during the first 24 months of their child's life. Approximately 10% of parents manage to get only 2.5 h of continuous sleep each night, and over 60% of parents with babies younger than 24 months get no more than 3.25 h of sleep each night. A lack of sleep can affect the quality of work and driving; create mental health problems, such as anxiety disorders and depression; and cause physical health problems, such as obesity, high blood pressure, diabetes, and heart disease [6]. The proposed smart device automatically detects situations that require the caregiver's attention and generates alerts. This reduces the stress of checking on the baby at regular intervals and helps the caregiver sleep better.

- The proposed baby monitor can send video and alerts over the Internet even when the parent or caregiver is outside the home Wi-Fi network. The parent or caregiver can therefore monitor the baby with a smartphone while at work, at the grocery store, in the park, and so on.

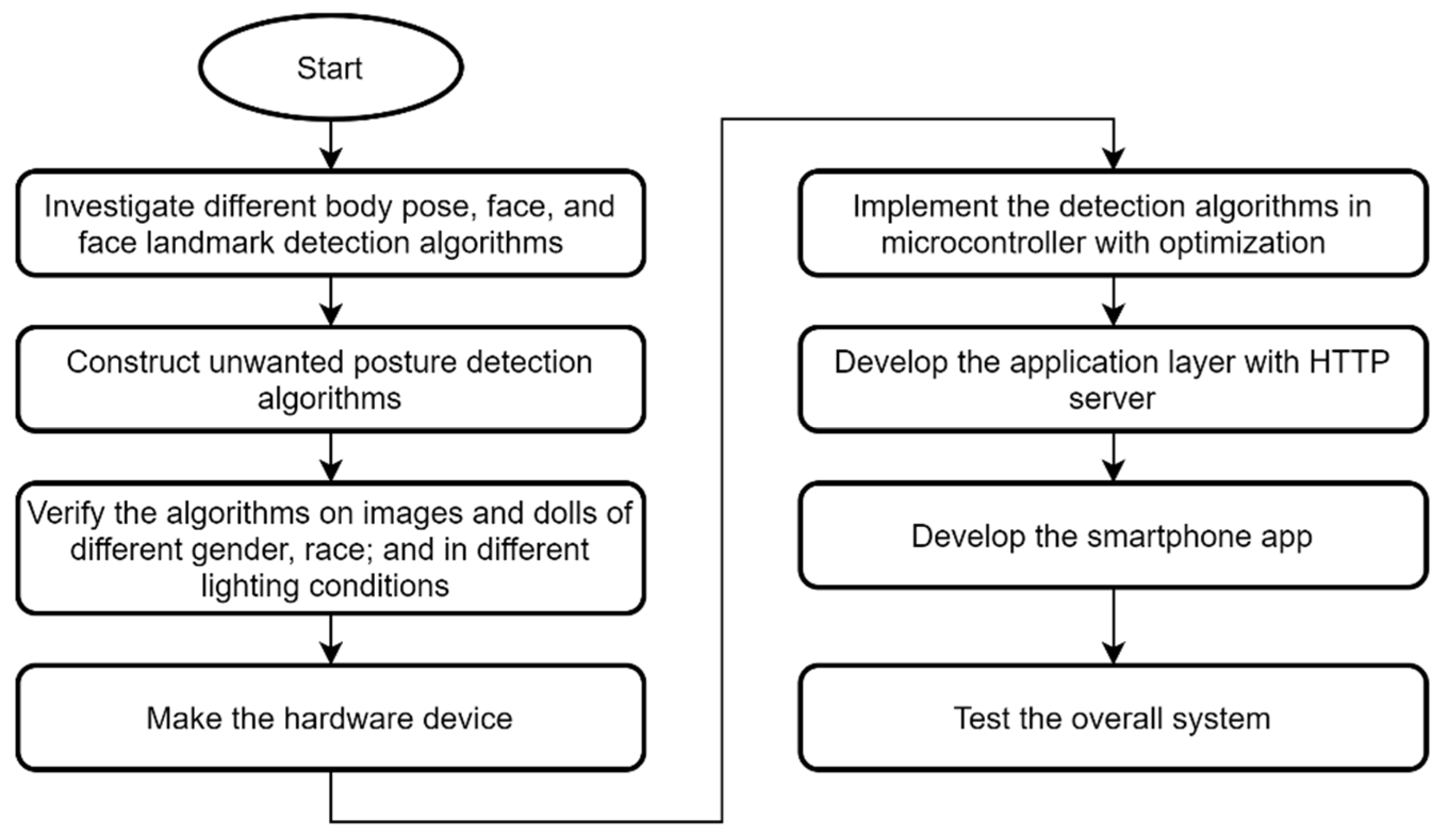

2. Materials and Methods

2.1. Detection Algorithms

2.1.1. Detection of Face Covered and Blanket Removed
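
One way to express the face-covered and blanket-removed checks described in the Introduction is as simple rules over detected body keypoints. The sketch below is a minimal illustration, not the author's implementation: it assumes a pose estimator (for example trt_pose [21]) returns keypoints named as in the COCO keypoint format [25], each with a confidence score. The `classify_posture` function, the keypoint groupings, and the 0.3 confidence threshold are all hypothetical.

```python
from typing import Dict, Mapping, Tuple

# Facial keypoints in the COCO 17-keypoint layout.
FACE_KEYPOINTS = ("nose", "left_eye", "right_eye", "left_ear", "right_ear")
# Hypothetical choice: lower-body keypoints that a blanket would normally hide.
LOWER_BODY_KEYPOINTS = (
    "left_hip", "right_hip", "left_knee", "right_knee", "left_ankle", "right_ankle",
)


def classify_posture(
    keypoints: Mapping[str, Tuple[float, float, float]],
    min_conf: float = 0.3,  # hypothetical visibility threshold
) -> Dict[str, bool]:
    """Derive alert flags from detected keypoints.

    `keypoints` maps a COCO keypoint name to (x, y, confidence). A keypoint
    counts as visible when its confidence is at least `min_conf`; keypoints
    missing from the mapping count as not visible.
    """
    visible = {name for name, (_, _, conf) in keypoints.items() if conf >= min_conf}
    # Face covered: none of the facial keypoints can be seen.
    face_covered = not any(name in visible for name in FACE_KEYPOINTS)
    # Blanket removed: at least one lower-body keypoint can be seen.
    blanket_removed = any(name in visible for name in LOWER_BODY_KEYPOINTS)
    return {"face_covered": face_covered, "blanket_removed": blanket_removed}


# Usage: nose clearly visible, hip too faint to count, so neither alert fires.
print(classify_posture({"nose": (120.0, 80.0, 0.92), "left_hip": (130.0, 200.0, 0.10)}))
# -> {'face_covered': False, 'blanket_removed': False}
```

With rules this simple, an empty crib (no keypoints at all) would also read as a covered face, so a practical system would likely first confirm that a baby is in view.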

2.1.2. Frequent Moving Detection
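
The frequent-moving alert described in the Introduction can be thought of as two stages: a per-frame motion test, and a count of motion-positive frames over a recent time window. The per-frame test could follow the OpenCV frame-differencing recipe in the cited tutorials (Gaussian blur, thresholding, dilation, and contour detection [27–32]); the sketch below covers only the second stage. It is not presented as the author's implementation, and the class name and the `window_s` and `min_events` parameters are hypothetical.

```python
from collections import deque


class FrequentMovementDetector:
    """Flag 'frequent moving' when many recent frames contain motion.

    window_s is the look-back period in seconds; min_events is how many
    motion-positive frames inside that period trigger an alert. Both values
    are hypothetical and would need tuning on real footage.
    """

    def __init__(self, window_s: float = 10.0, min_events: int = 20) -> None:
        self.window_s = window_s
        self.min_events = min_events
        self._events: deque = deque()  # timestamps of motion-positive frames

    def update(self, timestamp: float, motion: bool) -> bool:
        """Record one frame's motion result and return True if an alert is due."""
        if motion:
            self._events.append(timestamp)
        # Drop motion events that are older than the look-back window.
        while self._events and timestamp - self._events[0] > self.window_s:
            self._events.popleft()
        return len(self._events) >= self.min_events


# Usage: three motion frames within 5 s reach the threshold of 3.
detector = FrequentMovementDetector(window_s=5.0, min_events=3)
for t in (0.0, 1.0, 2.0):
    alert = detector.update(t, motion=True)
print(alert)  # True
```

Counting events over a time window, rather than reacting to any single frame with motion, keeps ordinary twitches from triggering an alert while still catching sustained restlessness.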

2.1.3. Awake Detection
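
The Introduction states that the monitor decides whether the baby is awake by checking whether the eyes are open. A common way to turn facial landmarks into an open/closed decision is the eye aspect ratio (EAR), computed from the six landmarks around each eye in the 68-point iBUG annotation scheme [37] that the dlib shape predictor [35] produces. The sketch below uses EAR as an illustrative, swapped-in technique: the source does not confirm it is the author's method, and the function names and the 0.2 threshold are assumptions.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Compute EAR from six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid, and p5
    and p6 lie on the lower lid (the 68-point iBUG ordering).
    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it falls toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def eyes_open(landmarks: Sequence[Point], threshold: float = 0.2) -> bool:
    """Return True when the mean EAR of both eyes exceeds `threshold` (hypothetical value).

    `landmarks` holds the 68 (x, y) facial points; 0-based indices 36-41 and
    42-47 are the two eyes.
    """
    ear = (eye_aspect_ratio(landmarks[36:42]) + eye_aspect_ratio(landmarks[42:48])) / 2.0
    return ear > threshold


# Usage with synthetic landmarks: both eyes wide open (EAR = 4/6, about 0.67).
open_eye = [(0.0, 0.0), (1.0, 1.0), (2.0, 1.0), (3.0, 0.0), (2.0, -1.0), (1.0, -1.0)]
landmarks = [(0.0, 0.0)] * 36 + open_eye + open_eye + [(0.0, 0.0)] * 20
print(eyes_open(landmarks))  # True
```

A deployed awake alert would probably also require the eyes to stay open across several consecutive frames, so that one misdetected frame does not notify the caregiver; that frame count would be another tunable parameter.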

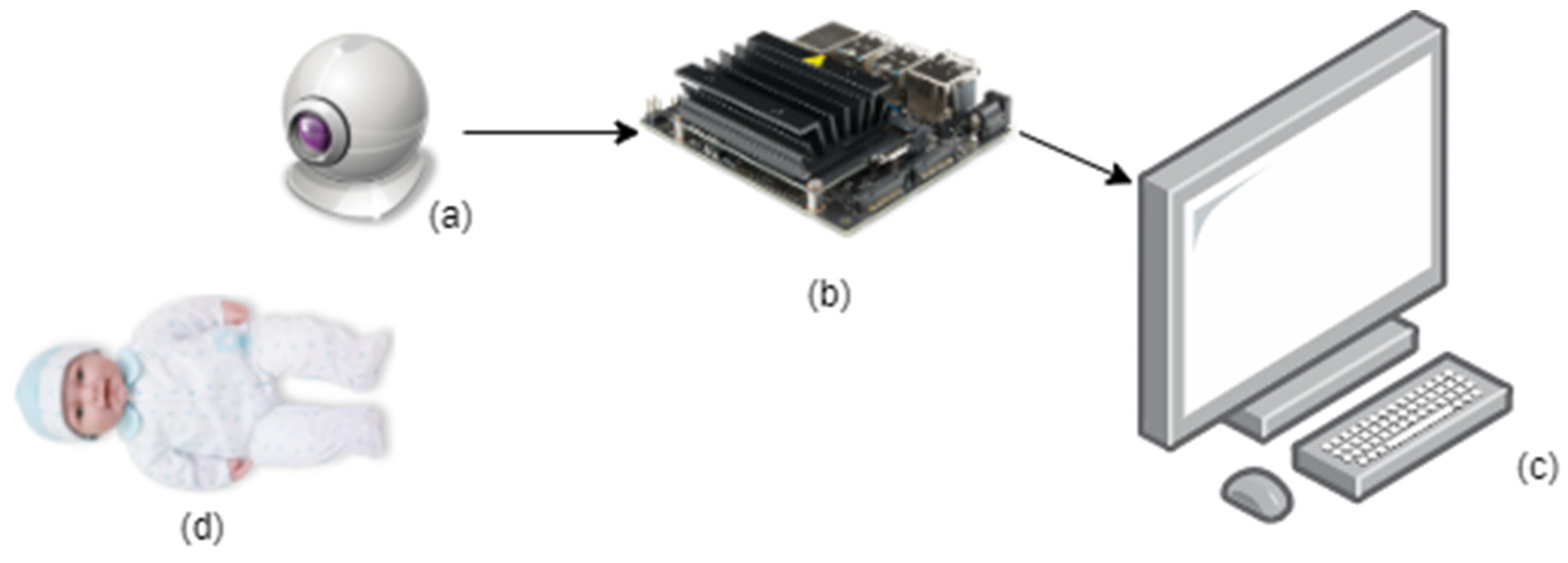

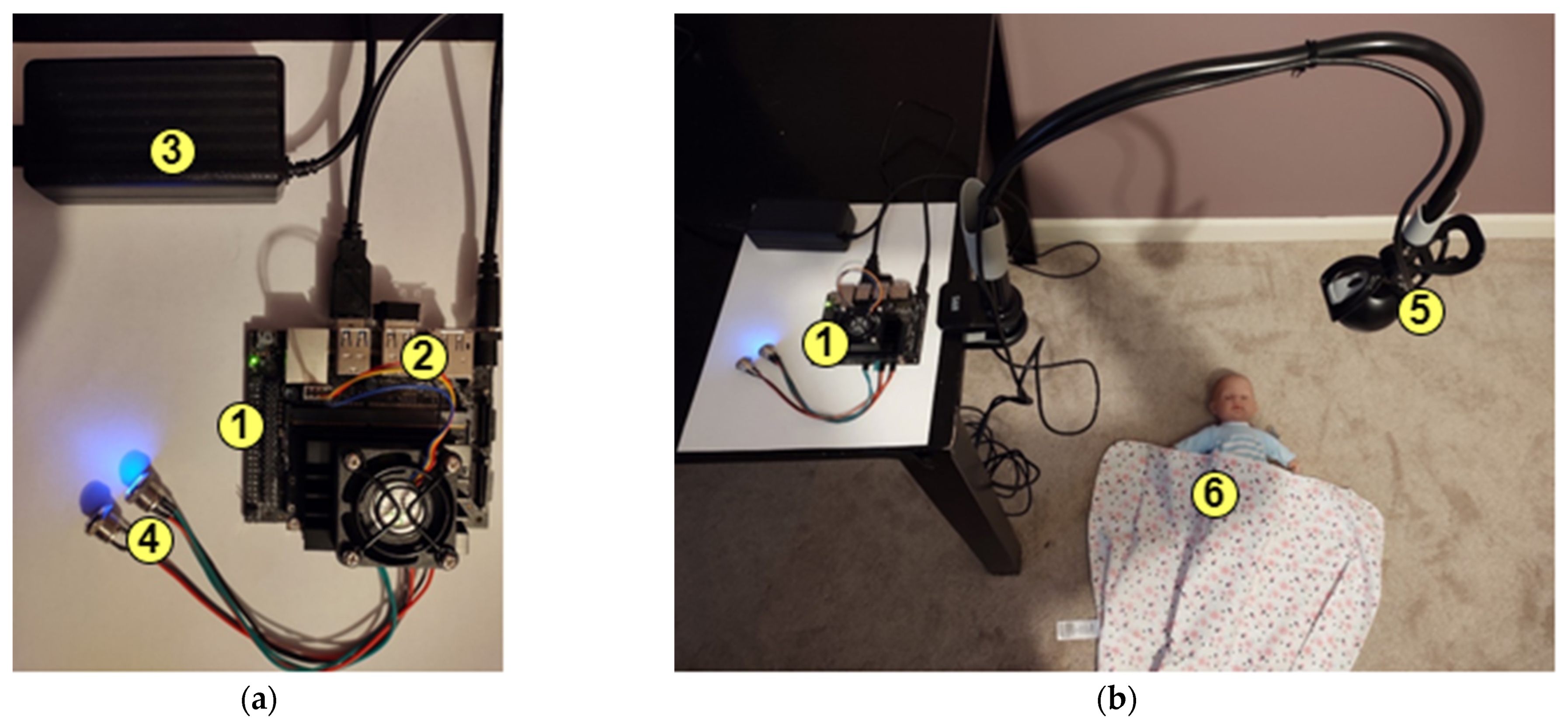

2.2. Prototype Development

2.2.1. Smart Baby Monitor Device

2.2.2. Smartphone App

3. Results

3.1. Detection Algorithm Results

3.2. Prototype Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- U.S. Department of Health & Human Services. Sudden Unexpected Infant Death and Sudden Infant Death Syndrome. Available online: https://www.cdc.gov/sids/data.htm/ (accessed on 22 February 2021).

- The Bump. Is It Okay for Babies to Sleep on Their Stomach? Available online: https://www.thebump.com/a/baby-sleeps-on-stomach/ (accessed on 22 February 2021).

- Illinois Department of Public Health. SIDS Fact Sheet. Available online: http://www.idph.state.il.us/sids/sids_factsheet.htm (accessed on 22 February 2021).

- How Can I Keep My Baby Warm at Night? Available online: https://www.babycenter.in/x542042/how-can-i-keep-my-baby-warm-at-night (accessed on 4 March 2021).

- 5 Reasons Why Your Newborn Isn’t Sleeping at Night. Available online: https://www.healthline.com/health/parenting/newborn-not-sleeping (accessed on 4 March 2021).

- Nordqvist, C. New Parents Have 6 Months Sleep Deficit During First 24 Months of Baby’s Life. Medical News Today. 25 July 2010. Available online: https://www.medicalnewstoday.com/articles/195821.php/ (accessed on 22 February 2021).

- Belyh, A. The Future of Human Work is Imagination, Creativity, and Strategy. CLEVERISM. 25 September 2019. Available online: https://www.cleverism.com/future-of-human-work-is-imagination-creativity-and-strategy/ (accessed on 22 February 2021).

- Janssen, C.P.; Donker, S.F.; Brumby, D.P.; Kun, A.L. History and future of human-automation interaction. Int. J. Hum. Comput. Stud. 2019, 131, 99–107.

- MOTOROLA. MBP36XL Baby Monitor. Available online: https://www.motorola.com/us/motorola-mbp36xl-2-5-portable-video-baby-monitor-with-2-cameras/p (accessed on 3 June 2021).

- Infant Optics. DXR-8 Video Baby Monitor. Available online: https://www.infantoptics.com/dxr-8/ (accessed on 3 June 2021).

- Nanit Pro Smart Baby Monitor. Available online: https://www.nanit.com/products/nanit-pro-complete-monitoring-system?mount=wall-mount (accessed on 3 June 2021).

- Lollipop Baby Monitor with True Crying Detection. Available online: https://www.lollipop.camera/ (accessed on 22 February 2021).

- Cubo Ai Smart Baby Monitor. Available online: https://us.getcubo.com (accessed on 1 June 2021).

- Day and Night Vision USB Camera. Available online: https://www.amazon.com/gp/product/B00VFLWOC0 (accessed on 3 March 2021).

- Jetson Nano Developer Kit. Available online: https://developer.nvidia.com/embedded/jetson-nano-developer-kit (accessed on 3 March 2021).

- 15” Realistic Soft Body Baby Doll with Open/Close Eyes. Available online: https://www.amazon.com/gp/product/B00OMVPX0K (accessed on 3 March 2021).

- Asian 20-Inch Large Soft Body Baby Doll. Available online: https://www.amazon.com/JC-Toys-Asian-Baby-20-inch/dp/B074N42T3S (accessed on 3 March 2021).

- African American 20-Inch Large Soft Body Baby Doll. Available online: https://www.amazon.com/gp/product/B01MS9SY16 (accessed on 3 March 2021).

- Caucasian 20-Inch Large Soft Body Baby Doll. Available online: https://www.amazon.com/JC-Toys-Baby-20-inch-Soft/dp/B074JL7MYM (accessed on 3 March 2021).

- Hispanic 20-Inch Large Soft Body Baby Doll. Available online: https://www.amazon.com/JC-Toys-Hispanic-20-inch-Purple/dp/B074N4C6J7 (accessed on 3 March 2021).

- NVIDIA AI IOT TRT Pose. Available online: https://github.com/NVIDIA-AI-IOT/trt_pose (accessed on 8 March 2021).

- Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Cao, Z.; Simon, T.; Wei, S.; Sheikh, Y. Realtime Multi-person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1302–1310.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- COCO Dataset. Available online: https://cocodataset.org (accessed on 8 March 2021).

- Faber, M. How to Analyze the COCO Dataset for Pose Estimation. Available online: https://towardsdatascience.com/how-to-analyze-the-coco-dataset-for-pose-estimation-7296e2ffb12e (accessed on 8 March 2021).

- Rosebrock. Basic Motion Detection and Tracking with Python and OpenCV. Available online: https://www.pyimagesearch.com/2015/05/25/basic-motion-detection-and-tracking-with-python-and-opencv/ (accessed on 18 March 2021).

- Opencv-Motion-Detector. Available online: https://github.com/methylDragon/opencv-motion-detector (accessed on 18 March 2021).

- Gedraite, E.S.; Hadad, M. Investigation on the effect of a Gaussian Blur in image filtering and segmentation. In Proceedings of the ELMAR-2011, Zadar, Croatia, 14–16 September 2011; pp. 393–396.

- Image Thresholding. Available online: https://docs.opencv.org/master/d7/d4d/tutorial_py_thresholding.html (accessed on 18 March 2021).

- Dilation. Available online: https://docs.opencv.org/3.4/db/df6/tutorial_erosion_dilatation.html (accessed on 18 March 2021).

- Contours. Available online: https://docs.opencv.org/master/d4/d73/tutorial_py_contours_begin.html (accessed on 18 March 2021).

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint Face Detection and Alignment Using Multitask Cascaded Convolutional Networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Dlib Shape Predictor. Available online: http://dlib.net/imaging.html#shape_predictor (accessed on 1 April 2021).

- Rosebrock. Facial Landmarks with Dlib, OpenCV, and Python. Available online: https://www.pyimagesearch.com/2017/04/03/facial-landmarks-dlib-opencv-python (accessed on 1 April 2021).

- Facial Point Annotations. Available online: https://ibug.doc.ic.ac.uk/resources/facial-point-annotations (accessed on 1 April 2021).

- Edimax 2-in-1 WiFi and Bluetooth 4.0 Adapter. Available online: https://www.sparkfun.com/products/15449 (accessed on 7 April 2021).

- Flask Server. Available online: https://flask.palletsprojects.com/en/1.1.x (accessed on 7 April 2021).

- Yatritrivedi; Fitzpatrick, J. How to Forward Ports on Your Router. Available online: https://www.howtogeek.com/66214/how-to-forward-ports-on-your-router/ (accessed on 7 April 2021).

- Thread-Based Parallelism. Available online: https://docs.python.org/3/library/threading.html (accessed on 7 April 2021).

- NVIDIA-TensorRT. Available online: https://developer.nvidia.com/tensorrt (accessed on 1 March 2021).

- TensorRT Demos. Available online: https://github.com/jkjung-avt/tensorrt_demos (accessed on 1 March 2021).

- Firebase Cloud Messaging. Available online: https://firebase.google.com/docs/cloud-messaging (accessed on 7 April 2021).

- WebView. Available online: https://developer.android.com/guide/webapps/webview (accessed on 13 April 2021).

- Jetson’s Tegrastats Utility. Available online: https://docs.nvidia.com/jetson/l4t/index.html#page/Tegra%20Linux%20Driver%20Package%20Development%20Guide/AppendixTegraStats.html (accessed on 21 April 2021).

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001.

- Viola, P.; Jones, M. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Lienhart, R.; Maydt, J. An extended set of Haar-like features for rapid object detection. In Proceedings of the International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002.

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A Survey on Deep Transfer Learning. In Artificial Neural Networks and Machine Learning—ICANN 2018; Kůrková, V., Manolopoulos, Y., Hammer, B., Iliadis, L., Maglogiannis, I., Eds.; ICANN 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11141.

| Video Streaming | Detection | Latency (s) |
|---|---|---|
| Yes | Body parts (for face covered and blanket removed) | 0.1096 |
| Yes | Moving | 0.0001 |
| Yes | Awake | 0.0699 |
| Yes | All (Body parts + Moving + Awake) | 0.1821 |
| No | Body parts (for face covered and blanket removed) | 0.1091 |
| No | Moving | 0.0001 |
| No | Awake | 0.6820 |
| No | All (Body parts + Moving + Awake) | 0.1807 |

| Work | Motorola [9] | Infant Optics [10] | Nanit [11] | Lollipop [12] | Cubo Ai [13] | Proposed |
|---|---|---|---|---|---|---|
| Live Video | Yes | Yes | Yes | Yes | Yes | Yes |
| Boundary Cross Detection | No | No | No | Yes | Yes | No |
| Cry Detection | No | No | No | Yes | Yes | No |
| Breathing Monitoring | No | No | Yes | No | No | No |
| Face Covered Detection | No | No | No | No | Yes | Yes |
| Blanket Removed Detection | No | No | No | No | No | Yes |
| Frequent Moving Detection | No | No | Yes | No | No | Yes |
| Awake Detection from Eye | No | No | No | No | No | Yes |


© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, T. An Intelligent Baby Monitor with Automatic Sleeping Posture Detection and Notification. AI 2021, 2, 290-306. https://doi.org/10.3390/ai2020018
