Journal Description

AI

AI is an international, peer-reviewed, open access journal on artificial intelligence (AI), including broad aspects of cognition and reasoning, perception and planning, machine learning, intelligent robotics, and applications of AI, published monthly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within ESCI (Web of Science), Scopus, EBSCO, and other databases.

- Journal Rank: JCR - Q1 (Computer Science, Interdisciplinary Applications) / CiteScore - Q2 (Artificial Intelligence)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 20.7 days after submission; acceptance to publication is undertaken in 3.9 days (median values for papers published in this journal in the first half of 2025).

- Recognition of Reviewers: APC discount vouchers, optional signed peer review, and reviewer names published annually in the journal.

- Journal Cluster of Artificial Intelligence: AI, AI in Medicine, Algorithms, BDCC, MAKE, MTI, Stats, Virtual Worlds and Computers.

Impact Factor: 5.0 (2024); 5-Year Impact Factor: 4.6 (2024)

Latest Articles

An Adaptative Wavelet Time–Frequency Transform with Mamba Network for OFDM Automatic Modulation Classification

AI 2025, 6(12), 323; https://doi.org/10.3390/ai6120323 - 9 Dec 2025

Abstract


Background: With the development of wireless communication technologies, the rapid advancement of 5G and 6G communication systems has created an urgent demand for low latency and high data rates. Orthogonal Frequency Division Multiplexing (OFDM) communication using high-order digital modulation has become a key technology owing to its high reliability, high data rate, and low latency, and has been widely applied in various fields. As a component of cognitive radios, automatic modulation classification (AMC) plays an important role in remote sensing and electromagnetic spectrum sensing. However, current complex channel conditions introduce issues such as low signal-to-noise ratio (SNR), Doppler frequency shift, and multipath propagation. Methods: Together with the inherently indistinct characteristics of high-order modulation, these issues make it difficult for AMC to handle OFDM and high-order digital modulation. Existing methods are mainly based on either a purely model-driven or a purely data-driven approach. The Adaptive Wavelet Mamba Network (AWMN) proposed in this paper aims to combine model-driven adaptive wavelet transform feature extraction with the Mamba deep learning architecture. A module based on the lifting wavelet scheme captures discriminative time–frequency features using learnable operations, while a Mamba network built on the State Space Model (SSM) captures long-term temporal dependencies. The network thus combines model-driven and data-driven methods. Results: Tests on public datasets and a custom-built, real-time received OFDM dataset show that AWMN achieves higher accuracies, reaching 62.39%, 64.50%, and 74.95% on the public Rml2016(a) and Rml2016(b) datasets and our formulated EVAS dataset, respectively, while maintaining a compact parameter size of 0.44M. Conclusions: These results highlight its potential for improving the automatic modulation classification of high-order OFDM modulation in 5G/6G systems.
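The lifting wavelet scheme mentioned in this abstract factors a wavelet transform into split, predict, and update steps. The sketch below is a minimal illustration that uses the fixed Haar predict and update operators. The abstract states that AWMN learns these operations instead, so the fixed coefficients here are an assumption, and the function names `haar_lift` and `haar_unlift` are hypothetical.

```python
def haar_lift(x):
    """One level of the Haar lifting scheme: split, predict, update."""
    if len(x) % 2:
        raise ValueError("signal length must be even")
    even, odd = x[0::2], x[1::2]  # split into even and odd samples
    # Predict: estimate each odd sample from its even neighbour and keep the residual.
    detail = [o - e for e, o in zip(even, odd)]
    # Update: adjust even samples so the approximation preserves the local mean.
    approx = [e + d / 2 for e, d in zip(even, detail)]
    return approx, detail


def haar_unlift(approx, detail):
    """Undo the lifting steps in reverse order for perfect reconstruction."""
    even = [a - d / 2 for a, d in zip(approx, detail)]
    odd = [d + e for d, e in zip(detail, even)]
    out = []
    for e, o in zip(even, odd):
        out.extend([e, o])  # re-interleave even and odd samples
    return out


signal = [2.0, 4.0, 6.0, 8.0]
approx, detail = haar_lift(signal)
print(approx, detail)                # [3.0, 7.0] [2.0, 2.0]
print(haar_unlift(approx, detail))   # [2.0, 4.0, 6.0, 8.0]
```

Because each lifting step is undone exactly by its inverse, the transform stays invertible whatever predict and update functions are used; this is what allows those steps to be replaced by trainable operators.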


(This article belongs to the Topic AI-Driven Wireless Channel Modeling and Signal Processing)

Open Access Review

Artificial Intelligence in Medical Education: A Narrative Review

by

Mateusz Michalczak, Wiktoria Zgoda, Jakub Michalczak, Anna Żądło, Ameen Nasser and Tomasz Tokarek

AI 2025, 6(12), 322; https://doi.org/10.3390/ai6120322 - 8 Dec 2025

Abstract

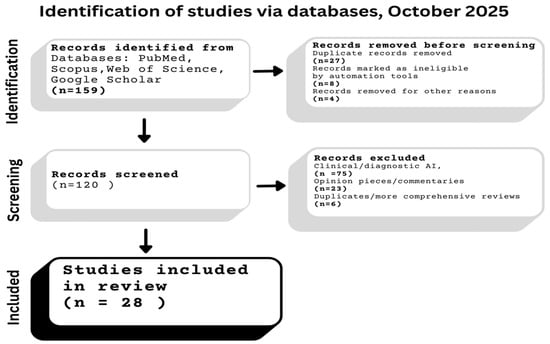


Background: Artificial intelligence (AI) is increasingly shaping medical education through adaptive learning systems, simulations, and large language models. These tools can enhance knowledge retention, clinical reasoning, and feedback, while raising concerns related to equity, bias, and institutional readiness. Methods: This narrative review examined AI applications in medical and health-profession education. A structured search of PubMed, Scopus, and Web of Science (2010–October 2025), supplemented by grey literature, identified empirical studies, reviews, and policy documents addressing AI-supported instruction, simulation, communication, procedural skills, assessment, or faculty development. Non-educational clinical AI studies were excluded. Results: AI facilitates personalized and interactive learning, improving clinical reasoning, communication practice, and simulation-based training. However, linguistic bias in natural language processing (NLP) tools may disadvantage non-native English speakers, and limited digital infrastructure hinders adoption in rural or low-resource settings. When designed inclusively, AI can amplify accessibility for learners with disabilities. Faculty and students commonly report low confidence and infrequent use of AI tools, yet most support structured training to build competence. Conclusions: AI can shift medical education toward more adaptive, learner-centered models. Effective adoption requires addressing bias, ensuring equitable access, strengthening infrastructure, and supporting faculty development. Clear governance policies are essential for safe and ethical integration.


(This article belongs to the Special Issue Development of Artificial Intelligence and Computational Thinking: Future Directions, Opportunities, and Challenges)


Open Access Article

Integrating Convolutional Neural Networks with a Firefly Algorithm for Enhanced Digital Image Forensics

by

Abed Al Raoof Bsoul and Yazan Alshboul

AI 2025, 6(12), 321; https://doi.org/10.3390/ai6120321 - 8 Dec 2025

Abstract

►▼

Show Figures

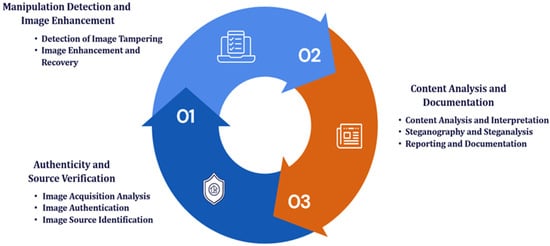

Digital images play an increasingly central role in journalism, legal investigations, and cybersecurity. However, modern editing tools make image manipulation difficult to detect with traditional forensic methods. This research addresses the challenge of improving the accuracy and stability of deep-learning-based forgery detection by

[...] Read more.

Digital images play an increasingly central role in journalism, legal investigations, and cybersecurity. However, modern editing tools make image manipulation difficult to detect with traditional forensic methods. This research addresses the challenge of improving the accuracy and stability of deep-learning-based forgery detection by developing a convolutional neural network enhanced through automated hyperparameter optimisation. The framework integrates a Firefly-based search strategy to optimise key network settings such as learning rate, filter size, depth, dropout, and batch configuration, reducing reliance on manual tuning and the risk of suboptimal model performance. The model is trained and evaluated on a large raster dataset of tampered and authentic images, as well as a custom vector-based dataset containing manipulations involving geometric distortion, object removal, and gradient editing. The Firefly-optimised model achieves higher accuracy, faster convergence, and improved robustness than baseline networks and traditional machine-learning classifiers. Cross-domain evaluation demonstrates that these gains extend across both raster and vector image types, even when vector files are rasterised for deep-learning analysis. The findings highlight the value of metaheuristic optimisation for enhancing the reliability of deep forensic systems and underscore the potential of combining deep learning with nature-inspired search methods to support more trustworthy image authentication in real-world environments.
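The abstract describes a Firefly-based search over CNN hyperparameters. The sketch below shows only the core firefly update, on a toy continuous cost function: each firefly moves toward every brighter (lower-cost) one with attractiveness β0·exp(−γr²), plus a decaying random step. The objective, all parameter values, and the name `firefly_minimize` are assumptions. Tuning real settings such as depth or batch size would additionally require mapping continuous positions to discrete choices and training a network for every cost evaluation.

```python
import math
import random


def firefly_minimize(cost, dim, bounds, n_fireflies=15, n_iter=50,
                     beta0=1.0, gamma=0.01, alpha=0.5, alpha_decay=0.97, seed=0):
    """Minimal firefly algorithm: dimmer fireflies move toward brighter ones."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_fireflies)]
    fit = [cost(p) for p in pos]  # lower cost = brighter firefly
    best_i = min(range(n_fireflies), key=fit.__getitem__)
    best_x, best_f = list(pos[best_i]), fit[best_i]
    for _ in range(n_iter):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if fit[j] < fit[i]:  # j is brighter, so i is attracted to j
                    r2 = sum((a - b) ** 2 for a, b in zip(pos[i], pos[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attraction fades with distance
                    pos[i] = [
                        min(hi, max(lo, a + beta * (b - a) + alpha * (rng.random() - 0.5)))
                        for a, b in zip(pos[i], pos[j])
                    ]
                    fit[i] = cost(pos[i])
                    if fit[i] < best_f:  # track the best position seen so far
                        best_x, best_f = list(pos[i]), fit[i]
        # In this simplified version the current brightest firefly stays put.
        alpha *= alpha_decay  # shrink the random walk over time
    return best_x, best_f


def sphere(p):
    """Toy cost with its minimum (0) at the origin; a stand-in for validation loss."""
    return sum(v * v for v in p)


best_x, best_f = firefly_minimize(sphere, dim=2, bounds=(-5.0, 5.0))
print(best_x, best_f)
```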


Open Access Article

DERI1000: A New Benchmark for Dataset Explainability Readiness

by

Andrej Pisarcik, Robert Hudec and Roberta Hlavata

AI 2025, 6(12), 320; https://doi.org/10.3390/ai6120320 - 8 Dec 2025

Abstract


Deep learning models are increasingly evaluated not only for predictive accuracy but also for their robustness, interpretability, and data quality dependencies. However, current benchmarks largely isolate these dimensions, lacking a unified evaluation protocol that integrates data-centric and model-centric properties. To bridge the gap between data quality assessment and eXplainable Artificial Intelligence (XAI), we introduce DERI1000 (the Dataset Explainability Readiness Index), a benchmark that quantifies how suitable and well-prepared a dataset is for explainable and trustworthy deep learning. DERI1000 combines eleven measurable factors (sharpness, noise artifacts, exposure, resolution, duplicates, diversity, separation, imbalance, label noise proxy, XAI overlay, and XAI stability) into a single normalized score calibrated around a reference baseline of 1000. Using five MedMNIST datasets (PathMNIST, ChestMNIST, BloodMNIST, OCTMNIST, OrganCMNIST) and five convolutional neural architectures (DenseNet121, ResNet50, ResNet18, VGG16, EfficientNet-B0), we fitted factor weights through multi-dataset impact analysis. The results indicate that imbalance (0.3319), label noise proxy (0.2161), and separation (0.1377) are the dominant contributors to explainability readiness. Experiments demonstrate that DERI1000 effectively distinguishes models with superficially high accuracy (ACC) but poor interpretability or robustness. The framework thereby enables cross-domain, reproducible evaluation of model performance and data quality under unified metrics. We conclude that DERI1000 provides a scalable, interpretable, and extensible foundation for benchmarking deep learning systems across both data-centric and explainability-driven dimensions.
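The abstract does not give the DERI1000 formula. One plausible reading of "a single normalized score calibrated around a reference baseline of 1000" is a weighted factor sum rescaled so that a reference dataset scores exactly 1000. The sketch below follows that reading. It is a hypothetical illustration, not the authors' method: only the three weights reported above are used, the remaining eight factors are omitted, and the factor values and the name `readiness_score` are invented for the example.

```python
# Hypothetical sketch: only these three weights appear in the abstract; the other
# eight factors, their weights, and the exact normalization are not given there.
WEIGHTS = {"imbalance": 0.3319, "label_noise_proxy": 0.2161, "separation": 0.1377}


def readiness_score(factors, reference, weights=WEIGHTS, baseline=1000.0):
    """Weighted factor sum, scaled so the reference dataset scores exactly `baseline`.

    Factor values are assumed to lie in [0, 1], with higher meaning "more ready"
    (e.g. an imbalance factor of 1.0 would mean perfectly balanced classes).
    """
    def weighted(f):
        return sum(w * f[name] for name, w in weights.items())
    return baseline * weighted(factors) / weighted(reference)


reference = {"imbalance": 0.8, "label_noise_proxy": 0.9, "separation": 0.7}
skewed = {"imbalance": 0.4, "label_noise_proxy": 0.9, "separation": 0.7}
print(readiness_score(reference, reference))  # 1000.0
print(readiness_score(skewed, reference))     # below 1000: the heavily weighted imbalance factor dropped
```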


(This article belongs to the Special Issue Explainable and Trustworthy AI in Health and Biology: Enabling Transparent and Actionable Decision-Making)


Open Access Article

A Hybrid Type-2 Fuzzy Double DQN with Adaptive Reward Shaping for Stable Reinforcement Learning

by

Hadi Mohammadian KhalafAnsar, Jaime Rohten and Jafar Keighobadi

AI 2025, 6(12), 319; https://doi.org/10.3390/ai6120319 - 6 Dec 2025

Abstract

►▼

Show Figures

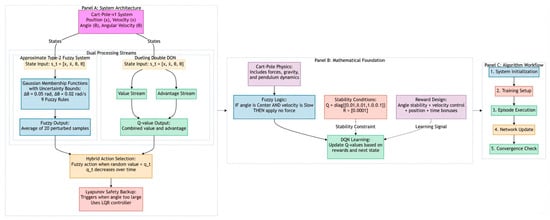

Objectives: This paper presents an innovative control framework for the classical Cart–Pole problem. Methods: The proposed framework combines Interval Type-2 Fuzzy Logic, the Dueling Double DQN deep reinforcement learning algorithm, and adaptive reward shaping techniques. Specifically, fuzzy logic acts as an a priori

[...] Read more.

Objectives: This paper presents an innovative control framework for the classical Cart–Pole problem. Methods: The proposed framework combines Interval Type-2 Fuzzy Logic, the Dueling Double DQN deep reinforcement learning algorithm, and adaptive reward shaping techniques. Specifically, fuzzy logic acts as an a priori knowledge layer that incorporates measurement uncertainty in both angle and angular velocity, allowing the controller to generate adaptive actions dynamically. Simultaneously, the deep Q-network learns the optimal policy. To ensure stability, the Double DQN mechanism alleviates the overestimation bias commonly observed in value-based reinforcement learning. Convergence is accelerated through a multi-component reward shaping function that prioritizes angle stability and survival. Results: In training, the method stabilizes rapidly; it achieves a 100% success rate by episode 20 and maintains consistently high rewards (650–700) throughout training. Whereas Standard DQN and other baselines take 100+ episodes to become reliable, our method converges in about 20 episodes (4–5 times faster). Compared with advanced baselines such as C51 and PER, the proposed method achieves about 15–20% better final performance. We also found that PPO and QR-DQN surprisingly struggle on this task, highlighting the need for stability mechanisms. Conclusions: The proposed approach provides a practical solution that balances exploration with safety through the integration of fuzzy logic and deep reinforcement learning. This rapid convergence is particularly important for real-world applications where data collection is expensive, as stable performance is reached much faster than with existing methods and without requiring complex theoretical guarantees.
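The overestimation fix attributed to Double DQN above comes from how the bootstrap target is formed. Standard DQN takes a max over the target network's own noisy estimates, which biases the target upward. Double DQN lets the online network choose the next action and the target network score it. The sketch below contrasts the two targets on made-up Q-values for Cart–Pole's two actions. It deliberately omits the dueling heads, the fuzzy layer, and the reward shaping described in the abstract, and the function names are illustrative.

```python
def dqn_target(reward, next_q_target, gamma=0.99, done=False):
    """Standard DQN target: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQN target: the online network selects the action, the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best_action]


# Two Cart-Pole actions (push left, push right); Q-values are made up for illustration.
q_online_next = [1.0, 2.0]
q_target_next = [3.0, 1.5]
print(dqn_target(1.0, q_target_next, gamma=0.9))                        # about 3.7
print(double_dqn_target(1.0, q_online_next, q_target_next, gamma=0.9))  # about 2.35
```

When the two networks disagree about which action is best, the decoupled target is no larger than the max-based one, which is the source of the reduced overestimation.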


Open Access Article

Improved Productivity Using Deep Learning-Assisted Major Coronal Curve Measurement on Scoliosis Radiographs

by

Xi Zhen Low, Mohammad Shaheryar Furqan, Kian Wei Ng, Andrew Makmur, Desmond Shi Wei Lim, Tricia Kuah, Aric Lee, You Jun Lee, Ren Wei Liu, Shilin Wang, Hui Wen Natalie Tan, Si Jian Hui, Xinyi Lim, Dexter Seow, Yiong Huak Chan, Premila Hirubalan, Lakshmi Kumar, Jiong Hao Jonathan Tan, Leok-Lim Lau and James Thomas Patrick Decourcy Hallinan

AI 2025, 6(12), 318; https://doi.org/10.3390/ai6120318 - 5 Dec 2025

Abstract


Background: Deep learning models have the potential to enable fast and consistent interpretation of scoliosis radiographs. This study aims to assess the impact of deep learning assistance on the speed and accuracy of clinicians in measuring major coronal curves on scoliosis radiographs. Methods: We used a deep learning model (Context Axial Reverse Attention Network, or CaraNet) to assist in measuring Cobb’s angles on scoliosis radiographs in a simulated clinical setting. Four trainee radiologists with no prior experience and four trainee orthopedists with four to six months of prior experience analyzed the radiographs retrospectively, both with and without deep learning assistance, with a six-week washout period between sessions. We recorded the interpretation time and mean angle differences, with a consultant spine surgeon providing the reference standard. The dataset consisted of 640 radiographs from 640 scoliosis patients aged 10–18 years; we divided the dataset into 75% for training, 16% for validation, and 9% for testing. Results: Deep learning assistance yielded non-statistically significant improvements in mean accuracy of 0.32 degrees for trainee orthopedists (95% CI −1.4 to 0.8, p > 0.05) and 0.43 degrees for trainee radiologists (95% CI −1.6 to 0.8, p > 0.05), and was non-inferior across all readers. Mean interpretation time decreased by 13.25 s for trainee radiologists but increased by 3.85 s for trainee orthopedists (p = 0.005). Conclusions: Deep learning assistance for measuring Cobb’s angles was as accurate as unaided interpretation and slightly improved measurement accuracy. It increased the interpretation speed of trainee radiologists but slightly slowed trainee orthopedists, suggesting that its effect on speed depended on prior experience.
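The abstract does not say how CaraNet's output is turned into an angle. As a hedged illustration only, suppose a model has localized the upper endplate of the upper-end vertebra and the lower endplate of the lower-end vertebra as line segments. The Cobb angle is then the angle between those two lines. The helper names are hypothetical, and the sketch assumes each endplate is tilted less than 90° from horizontal. In image coordinates the y-axis points down, which flips the sign of both inclinations but not the size of their difference.

```python
import math


def inclination_deg(p1, p2):
    """Inclination of the line through p1 and p2, folded into (-90, 90] degrees."""
    angle = math.degrees(math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    if angle > 90:
        angle -= 180
    elif angle <= -90:
        angle += 180
    return angle  # point order no longer matters after folding


def cobb_angle(upper_endplate, lower_endplate):
    """Angle between two endplate lines, each given as a pair of (x, y) points."""
    return abs(inclination_deg(*upper_endplate) - inclination_deg(*lower_endplate))


# Hypothetical endplates tilted +10 and -15 degrees from horizontal.
upper = ((0.0, 0.0), (10.0, 10.0 * math.tan(math.radians(10))))
lower = ((0.0, 0.0), (10.0, -10.0 * math.tan(math.radians(15))))
print(round(cobb_angle(upper, lower), 6))  # 25.0
```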


(This article belongs to the Special Issue AI-Driven Innovations in Medical Computer Engineering and Healthcare)


Open Access Article

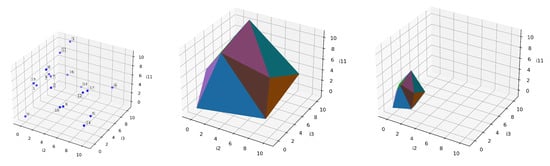

Deep Learning for Unsupervised 3D Shape Representation with Superquadrics

by

Mahmoud Eltaher and Michael Breuß

AI 2025, 6(12), 317; https://doi.org/10.3390/ai6120317 - 4 Dec 2025

Abstract


The representation of 3D shapes from point clouds remains a fundamental challenge in computer vision. A common approach decomposes 3D objects into interpretable geometric primitives, enabling compact, structured, and efficient representations. Building upon prior frameworks, this study introduces an enhanced unsupervised deep learning approach for 3D shape representation using superquadrics. The proposed framework fits a set of superquadric primitives to 3D objects through a fully integrated, differentiable pipeline that enables efficient optimization and parameter learning, directly extracting geometric structure from 3D point clouds without requiring ground-truth segmentation labels. This work introduces three key advancements that substantially improve representation quality, interpretability, and evaluation rigor: (1) a uniform sampling strategy that enhances training stability compared with the random sampling used in earlier models; (2) an overlapping loss that penalizes intersections between primitives, reducing redundancy and improving reconstruction coherence; and (3) a novel evaluation framework comprising Primitive Accuracy, Structural Accuracy, and Overlapping Percentage metrics. This new metric design shifts from point-based to structure-aware assessment, enabling fairer and more interpretable comparison across primitive-based models. Comprehensive evaluations on benchmark 3D shape datasets demonstrate that the proposed modifications yield coherent, compact, and semantically consistent shape representations, establishing a robust foundation for interpretable and quantitative evaluation in primitive-based 3D reconstruction.
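Superquadric primitives are commonly described by an inside-outside function of a point's coordinates in the primitive's local frame. The sketch below uses the standard form with semi-axes a1, a2, a3 and shape exponents e1, e2; whether the paper uses exactly this parameterization is an assumption. The `overlap_fraction` helper is a hypothetical reading of the "Overlapping Percentage" idea, not the authors' metric. It ignores rotation and counts the sample points that lie inside two or more translated primitives.

```python
def superquadric_F(x, y, z, a1, a2, a3, e1, e2):
    """Standard superquadric inside-outside function in the primitive's local frame.

    F < 1 inside, F == 1 on the surface, F > 1 outside.
    """
    xy = (abs(x / a1) ** (2 / e2) + abs(y / a2) ** (2 / e2)) ** (e2 / e1)
    return xy + abs(z / a3) ** (2 / e1)


def overlap_fraction(points, primitives):
    """Hypothetical overlap measure: share of points inside two or more primitives.

    Each primitive is (center, (a1, a2, a3, e1, e2)); rotation is ignored for brevity.
    """
    def n_inside(p):
        return sum(
            superquadric_F(p[0] - c[0], p[1] - c[1], p[2] - c[2], *shape) < 1
            for c, shape in primitives
        )
    return sum(n_inside(p) >= 2 for p in points) / len(points)


sphere = (1.0, 1.0, 1.0, 1.0, 1.0)  # e1 = e2 = 1 gives an ellipsoid (here a unit sphere)
boxy = (1.0, 1.0, 1.0, 0.1, 0.1)    # small exponents approach a cube
print(superquadric_F(0.9, 0.9, 0.9, *sphere))  # > 1: outside the sphere
print(superquadric_F(0.9, 0.9, 0.9, *boxy))    # < 1: inside the near-cube
```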


(This article belongs to the Section AI Systems: Theory and Applications)


Open Access Article

A Comparative Analysis of Federated Learning for Multi-Class Breast Cancer Classification in Ultrasound Imaging

by

Marwa Ali Elshenawy, Noha S. Tawfik, Nada Hamada, Rania Kadry, Salema Fayed and Noha Ghatwary

AI 2025, 6(12), 316; https://doi.org/10.3390/ai6120316 - 4 Dec 2025

Abstract


Breast cancer is the second leading cause of cancer-related mortality among women. Early detection enables timely treatment, improving survival outcomes. This paper presents a comparative evaluation of federated learning (FL) frameworks for multiclass breast cancer classification using ultrasound images drawn from three datasets: BUSI, BUS-UCLM, and BCMID, which include 600, 38, and 323 patients, respectively. Five state-of-the-art networks were tested, with MobileNet, ResNet, and InceptionNet identified as the most effective for FL deployment. Two aggregation strategies, FedAvg and FedProx, were assessed under varying levels of data heterogeneity in two- and three-client settings. Experimental results indicate that the FL models outperformed local and centralized training, avoiding the adverse impacts of data isolation and domain shift. In the two-client federations, FL achieved up to 8% higher accuracy and almost 6% higher macro-F1 scores on average than local and centralized training. FedProx on MobileNet maintained stable performance in the three-client federation, with the best average accuracy of 73.31% and a macro-F1 of 67.3% despite stronger heterogeneity. These results suggest that the proposed multiclass model has the potential to support clinical workflows by assisting in automated risk stratification. If deployed, such a system could allow radiologists to prioritize high-risk patients more effectively. The findings emphasize the potential of federated learning as a scalable, privacy-preserving infrastructure for collaborative medical imaging and breast cancer diagnosis.
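FedAvg and FedProx, the two aggregation strategies compared above, differ mainly in the client-side objective. FedAvg averages client parameters weighted by local sample counts. FedProx keeps the same averaging but adds a proximal term to each client's local loss. The sketch below shows both on flattened parameter lists. Real models would use framework tensors, and the client sizes and `mu` value are illustrative rather than taken from the paper.

```python
def fedavg(client_weights, client_sizes):
    """FedAvg: average client parameter vectors, weighted by each client's sample count."""
    total = sum(client_sizes)
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(len(client_weights[0]))
    ]


def fedprox_penalty(local_weights, global_weights, mu):
    """FedProx proximal term (mu / 2) * ||w - w_global||^2, added to each client's local loss."""
    return mu / 2 * sum((w - g) ** 2 for w, g in zip(local_weights, global_weights))


# Two hypothetical clients with 100 and 300 local samples.
global_w = fedavg([[1.0, 2.0], [3.0, 4.0]], [100, 300])
print(global_w)  # [2.5, 3.5]
print(fedprox_penalty([1.0, 2.0], global_w, mu=0.1))
```

With `mu = 0` the penalty vanishes and the FedProx local objective reduces to that of FedAvg. A larger `mu` keeps heterogeneous clients closer to the global model, which is why FedProx is usually preferred when client data distributions diverge.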


(This article belongs to the Special Issue Artificial Intelligence for Future Healthcare: Advancement, Impact, and Prospect in the Field of Cancer)


Open Access Article

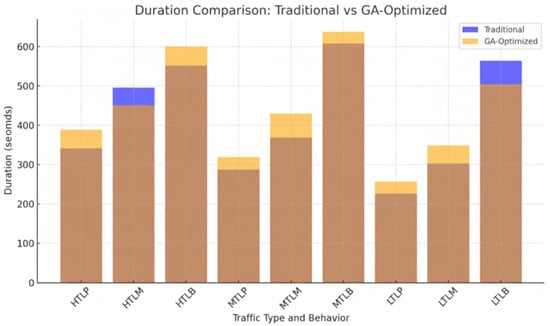

Optimizing Urban Travel Time Using Genetic Algorithms for Intelligent Transportation Systems

by

Suhail Odeh, Murad Al Rajab, Mahmoud Obaid, Rafik Lasri and Djemel Ziou

AI 2025, 6(12), 315; https://doi.org/10.3390/ai6120315 - 4 Dec 2025

Abstract


Urban congestion increases travel times, fuel consumption, and greenhouse-gas emissions. In this regard, we conduct a systematic study of a Genetic Algorithm (GA) for real-time routing in an urban scenario in Bethlehem City, based on a SUMO microsimulation calibrated with real field data. Our work makes four main contributions: (i) a reproducible GA framework for dynamic routing with explicit constraints and an adaptive termination criterion; (ii) a weight-sensitivity study of a multi-term fitness function combining travel time, waiting time, and, optionally, fuel usage; (iii) an edge-assisted distributed architecture on roadside units (RSUs) supported by cloud services; and (iv) a refined dataset description and experimental protocol with a planned statistical analysis. Empirical evidence from the Bethlehem case study shows a consistent decline in total travel time under high-congestion scenarios. Waiting times vary across scenarios, reflecting the trade-offs in the fitness weighting scheme. We acknowledge some limitations, including the manual resolution of data and the inherent gap between simulation and the real world, and propose a roadmap towards a pilot deployment that addresses these issues. Rather than proposing a new GA variant, we present a deployment-oriented framework: an edge-assisted GA with explicit protocols and a latency envelope, and a reproducible multi-objective tuning procedure validated on a city-scale network under severe congestion.
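The abstract does not specify the GA's encoding or operators. As a hedged illustration only, the sketch below assigns each vehicle one of three alternative routes and minimizes a weighted multi-term fitness: total travel time plus a delay-over-free-flow term standing in for waiting time, with the optional fuel term left out. It uses tournament selection, uniform crossover, per-gene mutation, and elitism. The route data, the weights `w_travel`/`w_delay`, and the use of the standard BPR volume-delay curve in place of a SUMO simulation are all assumptions.

```python
import random

# Hypothetical toy network (not from the paper): free-flow times (s) and capacities
# (vehicles) of three alternative routes between one origin and one destination.
FREE_TIME = [300.0, 360.0, 420.0]
CAPACITY = [40.0, 30.0, 30.0]


def route_time(route, load):
    """Congested travel time from the BPR volume-delay curve t0 * (1 + 0.15 * (v/c)^4)."""
    return FREE_TIME[route] * (1 + 0.15 * (load / CAPACITY[route]) ** 4)


def fitness(plan, w_travel=0.7, w_delay=0.3):
    """Weighted multi-term cost to minimize; plan[i] is the route index of vehicle i."""
    loads = [plan.count(r) for r in range(len(FREE_TIME))]
    times = [route_time(r, loads[r]) for r in plan]
    travel = sum(times)
    delay = sum(t - FREE_TIME[r] for t, r in zip(times, plan))  # stand-in for waiting time
    return w_travel * travel + w_delay * delay


def genetic_route_assignment(n_vehicles=60, pop_size=30, generations=60,
                             mutation_rate=0.05, seed=1):
    rng = random.Random(seed)
    n_routes = len(FREE_TIME)
    # Seed with the naive plan (everyone on the fastest free-flow route) plus random plans.
    pop = [[0] * n_vehicles]
    pop += [[rng.randrange(n_routes) for _ in range(n_vehicles)]
            for _ in range(pop_size - 1)]

    def tournament():
        a, b = rng.sample(pop, 2)  # binary tournament selection on the current population
        return a if fitness(a) <= fitness(b) else b

    best = min(pop, key=fitness)
    for _ in range(generations):
        children = [best]  # elitism: the best plan so far always survives
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            child = [g1 if rng.random() < 0.5 else g2 for g1, g2 in zip(p1, p2)]  # uniform crossover
            child = [rng.randrange(n_routes) if rng.random() < mutation_rate else g
                     for g in child]  # per-gene mutation
            children.append(child)
        pop = children
        best = min(pop, key=fitness)
    return best, fitness(best)


plan, cost = genetic_route_assignment()
print([plan.count(r) for r in range(len(FREE_TIME))], round(cost, 1))
```

Because of elitism, the best fitness never worsens from one generation to the next. Changing the ratio of `w_travel` to `w_delay` is the kind of weight sweep the sensitivity study in contribution (ii) refers to.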


(This article belongs to the Section AI Systems: Theory and Applications)

►▼

Show Figures

Figure 1

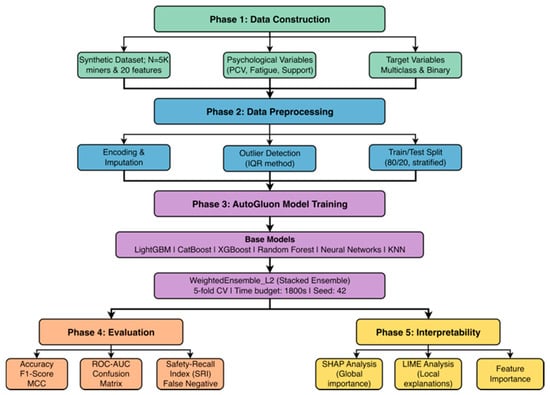

Open Access Feature Paper Article

Interpretable AutoML for Predicting Unsafe Miner Behaviors via Psychological-Contract Signals

by

Yong Yan and Jizu Li

AI 2025, 6(12), 314; https://doi.org/10.3390/ai6120314 - 3 Dec 2025

Abstract


Occupational safety in high-risk sectors, such as mining, depends heavily on understanding and predicting workers’ behavioural risks. However, existing approaches often overlook the psychological dimension of safety, particularly how psychological-contract violations (PCV) between miners and their organizations contribute to unsafe behavior, and they rarely leverage interpretable artificial intelligence. This study bridges that gap by developing an explainable AutoML framework that integrates AutoGluon, SHAP, and LIME to classify miners’ safety behaviors using psychological and organizational indicators. An empirically calibrated synthetic dataset of 5000 miner profiles (20 features) was used to train multiclass (Safe, Moderate, and Unsafe) and binary (Safe and Unsafe) classifiers. The WeightedEnsemble_L2 model achieved the best performance, with 97.6% accuracy (multiclass) and 98.3% accuracy (binary). Across tasks, Post-Intervention Score, Fatigue Level, and Supervisor Support consistently emerge as high-impact features. SHAP summarizes global importance patterns, while LIME provides per-case rationale, enabling auditable, actionable guidance for safety managers. We outline ethics and deployment considerations (human-in-the-loop review, transparency, bias checks) and discuss transfer to real-world logs as future work. Results suggest that interpretable AutoML can bridge behavioural safety theory and operational decision-making by producing high-accuracy predictions with transparent attributions, informing targeted interventions to reduce unsafe behaviours in high-risk mining contexts.


(This article belongs to the Topic Theories, Techniques, and Real-World Applications for Advancing Explainable AI)


Open Access Article

The Artificial Intelligence Quotient (AIQ): Measuring Machine Intelligence Based on Multi-Domain Complexity and Similarity

by

Christopher Pereyda and Lawrence Holder

AI 2025, 6(12), 313; https://doi.org/10.3390/ai6120313 - 1 Dec 2025

Abstract


The development of AI systems and benchmarks has been increasing rapidly, yet comparatively little attention has been paid to the domains used to evaluate these systems. Most benchmarks introduce bias by focusing on a particular type of domain or by combining different domains without considering their relative complexity or similarity. We propose the Artificial Intelligence Quotient (AIQ) framework as a means of measuring the similarity and complexity of domains in order to remove these biases and assess the scope of intelligent capabilities evaluated by a benchmark composed of multiple domains. These measures are evaluated with several intuitive experiments using simple domains with known complexities and similarities. We construct test suites using the AIQ framework and evaluate them using known AI systems to validate that AIQ-based benchmarks capture an agent’s intelligence.

Full article

Figure 1

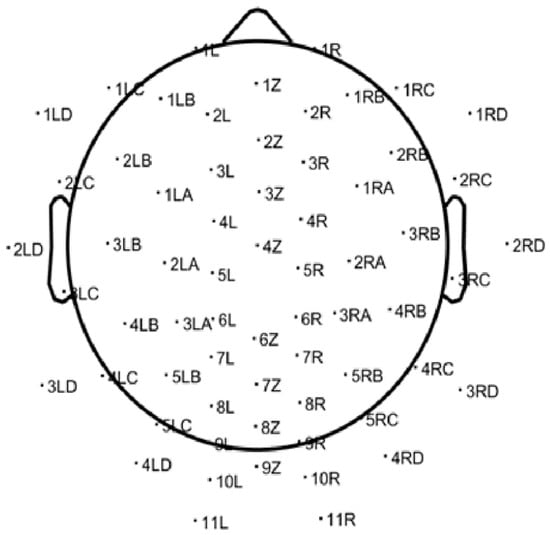

Open Access Article

Optimizing EEG ICA Decomposition with Machine Learning: A CNN-Based Alternative to EEGLAB for Fast and Scalable Brain Activity Analysis

by

Nuphar Avital, Tal Gelkop, Danil Brenner and Dror Malka

AI 2025, 6(12), 312; https://doi.org/10.3390/ai6120312 - 28 Nov 2025

Abstract


Electroencephalography (EEG) provides excellent temporal resolution for brain activity analysis but limited spatial resolution at the sensors, making source unmixing essential. Our objective is to enable accurate brain activity analysis from EEG by providing a fast, calibration-free alternative to independent component analysis (ICA) that preserves ICA-like component interpretability for real-time and large-scale use. We introduce a convolutional neural network (CNN) that estimates ICA-like component activations and scalp topographies directly from short, preprocessed EEG epochs, enabling real-time and large-scale analysis. EEG data were acquired from 44 participants during a 40-min lecture on image processing and preprocessed using standard EEGLAB procedures. The CNN was trained to estimate ICA-like components and evaluated against ICA using waveform morphology, spectral characteristics, and scalp topographies. We term the approach “adaptive” because, at test time, it is calibration-free and remains robust to user/session variability, device/montage perturbations, and within-session drift via per-epoch normalization and automated channel quality masking. No online weight updates are performed; robustness arises from these inference-time mechanisms and multi-subject training. The proposed method achieved an average F1-score of 94.9%, precision of 92.9%, recall of 97.2%, and overall accuracy of 93.2%. Moreover, mean processing time per subject was reduced from 332.73 s with ICA to 4.86 s using the CNN, a ~68× improvement. While our primary endpoint is ICA-like decomposition fidelity (waveform, spectral, and scalp-map agreement), the clean/artifact classification metrics are reported only as a downstream utility check confirming that the CNN-ICA outputs remain practically useful for routine quality control. These results show that CNN-based EEG decomposition provides a practical and accurate alternative to ICA, delivering substantial computational gains while preserving signal fidelity and making ICA-like decomposition feasible for real-time and large-scale brain activity analysis in clinical, educational, and research contexts.
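The ~68× figure in this abstract can be checked directly from the two reported mean processing times per subject:

```latex
\text{speedup} = \frac{t_{\text{ICA}}}{t_{\text{CNN}}}
             = \frac{332.73\ \text{s}}{4.86\ \text{s}} \approx 68.5
```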

Full article

Figure 1

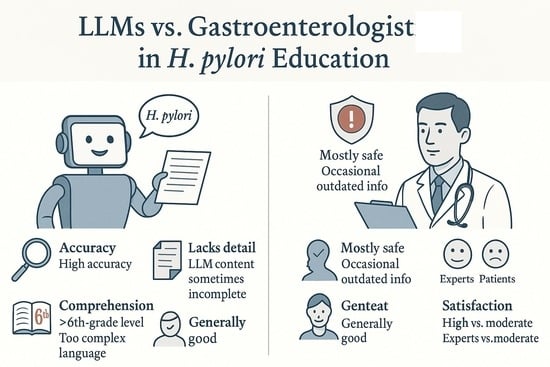

Open Access Review

Educational Materials for Helicobacter pylori Infection: A Comparative Evaluation of Large Language Models Versus Human Experts

by

Giulia Ortu, Elettra Merola, Giovanni Mario Pes and Maria Pina Dore

AI 2025, 6(12), 311; https://doi.org/10.3390/ai6120311 - 28 Nov 2025

Abstract


Helicobacter pylori infects about half of the global population and is a major cause of peptic ulcer disease and gastric cancer. Improving patient education can increase screening participation, enhance treatment adherence, and help reduce gastric cancer incidence. Recently, large language models (LLMs) such as ChatGPT, Gemini, and DeepSeek-R1 have been explored as tools for producing patient-facing educational materials; however, their performance compared to expert gastroenterologists remains under evaluation. This narrative review analyzed seven peer-reviewed studies (2024–2025) assessing LLMs’ ability to answer H. pylori-related questions or generate educational content, evaluated against physician- and patient-rated benchmarks across six domains: accuracy, completeness, readability, comprehension, safety, and user satisfaction. LLMs demonstrated high accuracy, with mean accuracies typically ranging from approximately 77% to 95% across different models and studies, and with most models achieving values above 90%, comparable to or exceeding that of general gastroenterologists and approaching senior specialist levels. However, their responses were often judged as incomplete, described as “correct but insufficient.” Readability exceeded the recommended sixth-grade level, though comprehension remained acceptable. Occasional inaccuracies in treatment advice raised safety concerns. Experts and medical trainees rated LLM outputs positively, while patients found them less clear and helpful. Overall, LLMs demonstrate strong potential to provide accurate and scalable H. pylori education for patients; however, heterogeneity between LLM versions (e.g., GPT-3.5, GPT-4, GPT-4o, and various proprietary or open-source architectures) and prompting strategies results in variable performance across studies. Enhancing completeness, simplifying language, and ensuring clinical safety are key to their effective integration into gastroenterology patient education.


Open Access Article

Persona, Break Glass, Name Plan, Jam (PBNJ): A New AI Workflow for Planning and Problem Solving

by

Laurie Faith, Tiffanie Zaugg, Nicole Stolys, Madeline Szabo, Fatemeh Haghi, Charles Badlis and Simon Lefever Olmedo

AI 2025, 6(12), 310; https://doi.org/10.3390/ai6120310 - 28 Nov 2025

Abstract


This self-study presents Persona, Break Glass, Name Plan, Jam (PBNJ), a human-centered workflow for using generative AI to support differentiated lesson planning and problem solving. Although differentiated instruction (DI) is widely endorsed, early-career teachers often lack the time and capacity to implement it consistently. Through four iterative cycles of collaborative self-study, seven educator-researchers examined how they used AI for lesson planning, identified key challenges, and refined their approach. When applied, the PBNJ sequence—set a persona, use a ‘break glass’ starter prompt, name a preliminary plan, and iteratively ‘jam’ with the AI—improved teacher confidence, yielded more feasible lesson plans, and supported professional learning. We discuss implications for problem solving beyond educational contexts and the potential for use with young learners.


(This article belongs to the Special Issue Development of Artificial Intelligence and Computational Thinking: Future Directions, Opportunities, and Challenges)

Open Access Article

Decentralizing AI Economics for Poverty Alleviation: Web3 Social Innovation Systems in the Global South

by

Igor Calzada

AI 2025, 6(12), 309; https://doi.org/10.3390/ai6120309 - 27 Nov 2025

Abstract


Artificial Intelligence (AI) is increasingly framed as a driver of economic transformation, yet its capacity to alleviate poverty in the Global South remains contested. This article introduces the notion of AI Economics—the political economy of value creation, extraction, and redistribution in AI systems—to interrogate how innovation agendas intersect with structural inequalities. This article examines how Social Innovation (SI) systems, when coupled with decentralized Web3 technologies such as blockchain, Decentralized Autonomous Organizations (DAOs), and data cooperatives, may challenge data monopolies, redistribute economic gains, and support inclusive development. Drawing on Action Research (AR) conducted during the AI4SI International Summer School in Donostia-San Sebastián, this article compares two contrasting ecosystems: (i) the Established AI4SI Ecosystem, marked by centralized governance and uneven benefits, and (ii) the Decentralized Web3 Emerging Ecosystem, which promotes community-driven innovation, data sovereignty, and alternative economic models. Findings underscore AI’s dual economic role: while it can expand digital justice, service provision, and empowerment, it also risks reinforcing dependency and inequality where infrastructures and governance remain weak. This article concludes that embedding AI Economics in context-sensitive, decentralized social innovation systems—aligned with ethical governance and the SDGs—is essential for realizing AI’s promise of poverty alleviation in the Global South.


Open Access Article

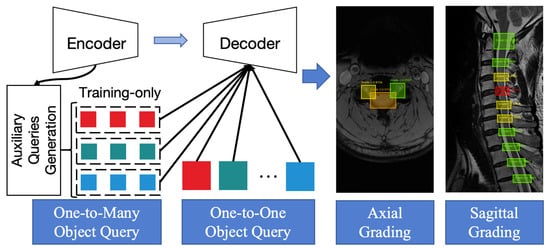

Transformer-Based Deep Learning for Multiplanar Cervical Spine MRI Interpretation: Comparison with Spine Surgeons and Radiologists

by

Aric Lee, Junran Wu, Changshuo Liu, Andrew Makmur, Yong Han Ting, You Jun Lee, Wilson Ong, Tricia Kuah, Juncheng Huang, Shuliang Ge, Alex Quok An Teo, Joey Chan Yiing Beh, Desmond Shi Wei Lim, Xi Zhen Low, Ee Chin Teo, Qai Ven Yap, Shuxun Lin, Jonathan Jiong Hao Tan, Naresh Kumar, Beng Chin Ooi, Swee Tian Quek and James Thomas Patrick Decourcy Hallinan


AI 2025, 6(12), 308; https://doi.org/10.3390/ai6120308 - 27 Nov 2025

Abstract


Background: Degenerative cervical spondylosis (DCS) is a common and potentially debilitating condition, with surgery indicated in selected patients. Deep learning models (DLMs) can improve consistency in grading DCS neural stenosis on magnetic resonance imaging (MRI), though existing models focus on axial images, and comparisons are mostly limited to radiologists. Methods: We developed an enhanced transformer-based DLM that trains on sagittal images and optimizes axial and foraminal classification using a maximized dataset. DLM training utilized 648 scans, with internal testing on 75 scans and external testing on an independent 75-scan dataset. Performance of the DLM, spine surgeons, and radiologists of varying subspecialities/seniority was compared against a consensus reference standard. Results: On internal testing, the DLM achieved high agreement for all-class classification: axial spinal canal κ = 0.80 (95% CI: 0.72–0.82), sagittal spinal canal κ = 0.83 (95% CI: 0.81–0.85), and neural foramina κ = 0.81 (95% CI: 0.77–0.84). In comparison, human readers demonstrated lower levels of agreement (κ = 0.60–0.80). External testing showed modestly degraded model performance (κ = 0.68–0.77). Conclusions: These results demonstrate the utility of transformer-based DLMs in multiplanar MRI interpretation, surpassing spine surgeons and radiologists on internal testing and highlighting their potential for real-world clinical adoption.
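The agreement figures above are Cohen's κ values. As a minimal sketch of how such a statistic is computed, the Python function below implements unweighted Cohen's κ for two readers' categorical grades: observed agreement, corrected for the agreement expected by chance from each reader's grade frequencies. The abstract does not say whether its κ is weighted (stenosis grades are ordinal, so a weighted variant is plausible), and the grade labels and example data below are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length sequences of categorical grades."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    # Observed agreement: share of cases where both readers assigned the same grade.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each reader's marginal grade frequencies, summed over grades.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[g] * freq_b[g] for g in freq_a) / (n * n)
    if p_e == 1.0:
        # Both readers used one identical grade throughout; kappa is undefined, report full agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical stenosis grades from one reader and the consensus reference.
reader = ["normal", "mild", "moderate", "severe", "mild", "normal"]
reference = ["normal", "mild", "moderate", "moderate", "mild", "mild"]
print(round(cohens_kappa(reader, reference), 3))  # 0.538
```

Here the readers agree on 4 of 6 cases (p_o ≈ 0.667), but chance alone would produce about 0.278 agreement, so κ falls to roughly 0.54, which is why κ is a stricter measure than raw percentage agreement.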

Full article

Figure 1

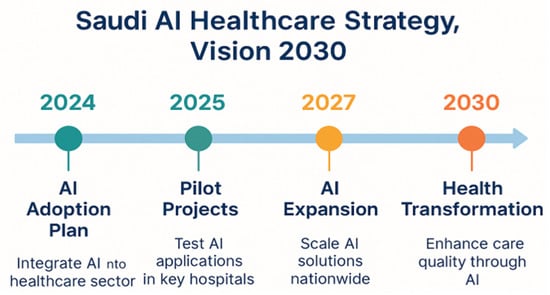

Open Access Review

Ethical Integration of AI in Healthcare Project Management: Islamic and Cultural Perspectives

by

Hazem Mathker S. Alotaibi, Wamadeva Balachandran and Ziad Hunaiti

AI 2025, 6(12), 307; https://doi.org/10.3390/ai6120307 - 26 Nov 2025

Abstract


Artificial intelligence is reshaping healthcare project management in Saudi Arabia, yet most deployments lack culturally grounded ethics. This paper synthesises global AI-ethics guidance and Islamic bioethics, then proposes a maqāṣid-al-sharīʿah-aligned conceptual framework for ANN-based decision support. Ethical signals derived from the preservation of life, dignity, justice, faith, and intellect are embedded as logic-gate filters on ANN outputs. The framework specifies a dual-metric evaluation that reports predictive performance (e.g., accuracy, MAE, AUC) alongside ethical compliance, with auditable thresholds for fairness (δ = 0.1) and confidence (α = 0.8) calibrated through stakeholder workshops. It incorporates a co-design protocol with clinicians, patients, Islamic scholars, and policymakers to ensure cultural and clinical legitimacy. Unlike UNESCO and EU frameworks, which remain principle-oriented, this study introduces a measurable dual-layer assessment that combines technical accuracy with ethical compliance, supported by audit artefacts such as model cards, traceability logs, and human override records. The framework yields technically efficient and Shariah-compliant recommendations and sets a roadmap for empirical pilots under Vision 2030. The paper moves beyond a general review by formalising an Islamic-values-driven conceptual framework that operationalises ethical constraints inside ANN–DSS pipelines and defines auditable compliance metrics. This paper combines a critical review of AI in healthcare project management with the development of a maqāṣid-aligned conceptual framework, thereby bridging systematic synthesis with an implementable proposal for ethical AI.
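The framework embeds ethical signals as logic-gate filters on ANN outputs, with auditable thresholds for fairness (δ = 0.1) and confidence (α = 0.8). The sketch below illustrates one way such a gate might work. It assumes that fairness is measured as the largest gap in positive-recommendation rates across patient groups and that confidence is the ANN's output probability; both interpretations, and the names `Recommendation` and `ethical_gate`, are illustrative assumptions rather than the paper's specification. A recommendation that fails any filter is routed to human review, in the spirit of the human override records the framework mentions.

```python
from dataclasses import dataclass, field

# Threshold values as reported in the abstract; how they are applied below is an assumption.
FAIRNESS_DELTA = 0.1    # max tolerated gap in positive-recommendation rate across groups
CONFIDENCE_ALPHA = 0.8  # min ANN output probability for an automatic recommendation


@dataclass
class Recommendation:
    option: str                      # hypothetical label for the ANN's suggested action
    confidence: float                # ANN output probability for that option
    group_rates: dict[str, float] = field(default_factory=dict)  # hypothetical per-group rates


def ethical_gate(rec, delta=FAIRNESS_DELTA, alpha=CONFIDENCE_ALPHA):
    """Pass a recommendation only if every filter holds; otherwise route it to a human."""
    reasons = []
    if rec.confidence < alpha:
        reasons.append(f"confidence {rec.confidence:.2f} below alpha={alpha}")
    rates = list(rec.group_rates.values())
    gap = max(rates) - min(rates) if rates else 0.0
    if gap > delta:
        reasons.append(f"fairness gap {gap:.2f} exceeds delta={delta}")
    # Logical AND of all filters: any single failure sends the case to human review.
    decision = "accept" if not reasons else "human_review"
    return decision, reasons


decision, reasons = ethical_gate(
    Recommendation("approve_project", 0.91, {"group_a": 0.62, "group_b": 0.58})
)
print(decision, reasons)
```

Returning the list of failed checks alongside the decision gives an auditable trace of why a case was escalated, which is the kind of artefact a traceability log could record.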


Open Access Article

AI-Driven Threat Hunting in Enterprise Networks Using Hybrid CNN-LSTM Models for Anomaly Detection

by

Mark Kamande, Kwame Assa-Agyei, Frederick Edem Junior Broni, Tawfik Al-Hadhrami and Ibrahim Aqeel

AI 2025, 6(12), 306; https://doi.org/10.3390/ai6120306 - 26 Nov 2025

Abstract


Objectives: This study aims to present an AI-driven threat-hunting framework that automates both hypothesis generation and hypothesis validation through a hybrid deep learning model that combines Convolutional Neural Networks (CNN) with Long Short-Term Memory (LSTM) networks. The objective is to operationalize proactive threat hunting by embedding anomaly detection within a structured workflow, improving detection performance, reducing analyst workload, and strengthening overall security posture. Methods: The framework begins with automated hypothesis generation, in which the model analyzes network flows, telemetry data, and logs sourced from IoT/IIoT devices, Windows/Linux systems, and interconnected environments represented in the TON_IoT dataset. Deviations from baseline behavior are detected as potential threat indicators, and hypotheses are prioritized according to anomaly confidence scores derived from output probabilities. Validation is conducted through iterative classification, where CNN-extracted spatial features and LSTM-captured temporal features are jointly used to confirm or refute hypotheses, minimizing manual data pivoting and contextual enrichment. Principal Component Analysis (PCA) and Recursive Feature Elimination with Random Forest (RFE-RF) are employed to extract and rank features based on predictive importance. Results: The hybrid model, trained on the TON_IoT dataset, achieved strong performance metrics: 99.60% accuracy, 99.71% precision, 99.32% recall, an AUC of 99%, and a 99.58% F1-score. These results outperform baseline models such as Random Forest and Autoencoder. By integrating spatial and temporal feature extraction, the model effectively identifies anomalies with minimal false positives and false negatives, while the automation of the hypothesis lifecycle significantly reduces analyst workload. Conclusions: Automating threat-hunting processes through hybrid deep learning shifts organizations from reactive to proactive defense. The proposed framework improves threat visibility, accelerates response times, and enhances overall security posture. The findings offer valuable insights for researchers, practitioners, and policymakers seeking to advance AI adoption in threat intelligence and enterprise security.
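In this framework, hypotheses are prioritized by anomaly confidence scores derived from the model's output probabilities. A minimal sketch of that ranking step follows, assuming the hybrid CNN-LSTM has already produced a malicious-class probability for each network flow. The threshold of 0.5, the `top_k` cut-off, and the names `Hypothesis` and `prioritize_hypotheses` are hypothetical and not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    flow_id: str
    score: float  # anomaly confidence: the classifier's malicious-class probability


def prioritize_hypotheses(flow_probs, threshold=0.5, top_k=None):
    """Turn per-flow malicious-class probabilities into a ranked hypothesis queue.

    flow_probs maps a flow identifier to the probability emitted by the model's
    output layer. threshold and top_k are illustrative knobs, not study values.
    """
    # Only flows whose score clears the threshold become hypotheses.
    hyps = [Hypothesis(fid, p) for fid, p in flow_probs.items() if p >= threshold]
    # Highest-confidence deviations first, so analysts validate the likeliest threats first.
    hyps.sort(key=lambda h: h.score, reverse=True)
    # Slicing with None keeps the whole list.
    return hyps[:top_k]


queue = prioritize_hypotheses({"flow-17": 0.97, "flow-03": 0.41, "flow-88": 0.76})
print([(h.flow_id, h.score) for h in queue])  # [('flow-17', 0.97), ('flow-88', 0.76)]
```

Each queued hypothesis would then go to the validation stage; raising the threshold trades recall for a shorter analyst queue.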


(This article belongs to the Topic Artificial Intelligence and Machine Learning in Cyber–Physical Systems)

►▼

Show Figures

Figure 1

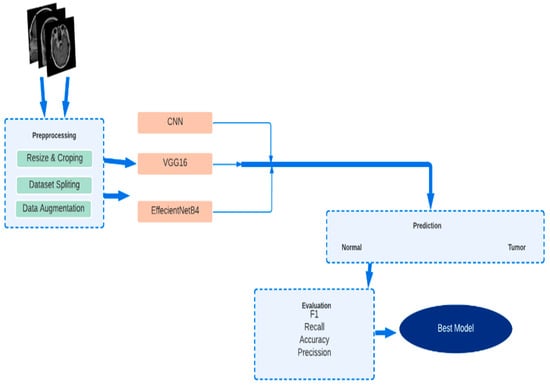

Open Access Article

Enhancing Brain Tumor Detection from MRI-Based Images Through Deep Transfer Learning Models

by

Awad Bin Naeem, Biswaranjan Senapati and Abdelhamid Zaidi

AI 2025, 6(12), 305; https://doi.org/10.3390/ai6120305 - 26 Nov 2025

Abstract


Brain tumors are abnormal tissue growths characterized by uncontrolled and rapid cell proliferation. Early detection of brain tumors is critical for improving patient outcomes, and magnetic resonance imaging (MRI) has become the most widely used modality for diagnosis due to its superior image quality and non-invasive nature. Deep learning, a subset of artificial intelligence, has revolutionized automated medical image analysis by enabling highly accurate and efficient classification tasks. The objective of this study is to develop a robust and effective brain tumor detection system using MRI images through transfer learning. A diagnostic framework is constructed based on convolutional neural networks (CNN), integrating both a custom sequential CNN model and pretrained architectures, namely VGG16 and EfficientNetB4, trained on the ImageNet dataset. Prior to model training, image preprocessing techniques are applied to enhance feature extraction and overall model performance. This research addresses the common challenge of limited MRI datasets by combining EfficientNetB4 with targeted preprocessing, data augmentation, and an appropriate optimizer selection strategy. The proposed methodology significantly reduces overfitting, improves classification accuracy on small datasets, and remains computationally efficient. Unlike previous studies that focus solely on CNN or VGG16 architectures, this work systematically compares multiple transfer learning models and demonstrates the superiority of EfficientNetB4. Experimental results on the Br35H dataset show that EfficientNetB4, combined with the ADAM optimizer, achieves outstanding performance with an accuracy of 99.66%, precision of 99.68%, and an F1-score of 100%. The findings confirm that integrating EfficientNetB4 with dataset-specific preprocessing and transfer learning provides a highly accurate and cost-effective solution for brain tumor classification, facilitating rapid and reliable medical diagnosis.
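F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R), so it reaches 100% only when precision and recall are both 100%. The short Python sketch below computes it from the two rates; with precision fixed at the reported 99.68%, even perfect recall yields an F1 of about 99.84%, which is useful context when reading the metrics above. The function name `f1_score` is chosen here for illustration and is not tied to any particular library.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0  # conventionally defined as 0 when both rates are 0
    return 2 * precision * recall / (precision + recall)


# Best case for the reported precision: assume recall is perfect.
best_case = f1_score(0.9968, 1.0)
print(f"{best_case:.2%}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, F1 always lies between precision and recall, which makes it a quick consistency check on reported classification metrics.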


(This article belongs to the Special Issue AI in Bio and Healthcare Informatics)


Open Access Article

A Synergistic Multi-Agent Framework for Resilient and Traceable Operational Scheduling from Unstructured Knowledge

by

Luca Cirillo, Marco Gotelli, Marina Massei, Xhulia Sina and Vittorio Solina

AI 2025, 6(12), 304; https://doi.org/10.3390/ai6120304 - 25 Nov 2025

Abstract


In capital-intensive industries, operational knowledge is often trapped in unstructured technical manuals, creating a barrier to efficient and reliable maintenance planning. This work addresses the need for an integrated system that can automate knowledge extraction and generate optimized, resilient operational plans. A synergistic multi-agent framework is introduced that transforms unstructured documents into a structured knowledge base using a self-validating pipeline. This validated knowledge feeds a scheduling engine that combines multi-objective optimization with discrete-event simulation to generate robust, capacity-aware plans. The framework was validated on a complex maritime case study. The system successfully constructed a high-fidelity knowledge base from unstructured manuals, and the scheduling engine produced a viable, capacity-aware operational plan for 118 interventions. The optimized plan respected all daily (6) and weekly (28) task limits, executing 64 tasks on their nominal date, bringing 8 forward, and deferring 46 by an average of only 2.0 days (95th percentile 4.8 days) to smooth the workload and avoid bottlenecks. An interactive user interface with a chatbot and planning calendar provides verifiable “plan-to-page” traceability, demonstrating a novel, end-to-end synthesis of document intelligence, agentic AI, and simulation to unlock strategic value from legacy documentation in high-stakes environments.
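The reported plan respects a daily cap of 6 tasks and a weekly cap of 28 by bringing some interventions forward and deferring others. The study combines multi-objective optimization with discrete-event simulation; the sketch below swaps in a much simpler greedy heuristic purely to illustrate capacity-aware placement. Each task goes on its nominal day if both caps allow it, otherwise on the nearest feasible day, trying deferral before bringing it forward. The fixed 7-day week boundaries, the `max_shift` window, and the function names are assumptions, not details from the paper.

```python
from collections import defaultdict

DAILY_LIMIT = 6    # tasks per day (limit reported in the case study)
WEEKLY_LIMIT = 28  # tasks per week (limit reported in the case study)


def candidate_offsets(max_shift):
    """Yield 0, +1, -1, +2, -2, ...: the nominal day first, then the nearest days."""
    yield 0
    for k in range(1, max_shift + 1):
        yield k   # defer by k days
        yield -k  # bring forward by k days


def schedule(nominal_days, max_shift=7, daily=DAILY_LIMIT, weekly=WEEKLY_LIMIT):
    """Greedily place each task on the nearest day that satisfies both caps.

    nominal_days maps task_id -> nominal day index (0 = first day of the plan);
    weeks are assumed to be consecutive 7-day blocks starting at day 0.
    Returns task_id -> assigned day, or raises if no day within max_shift fits.
    """
    per_day = defaultdict(int)
    per_week = defaultdict(int)
    plan = {}
    # Tasks with earlier nominal dates claim capacity first.
    for task, nominal in sorted(nominal_days.items(), key=lambda kv: kv[1]):
        for off in candidate_offsets(max_shift):
            day = nominal + off
            if day < 0:
                continue  # cannot schedule before the plan starts
            if per_day[day] < daily and per_week[day // 7] < weekly:
                plan[task] = day
                per_day[day] += 1
                per_week[day // 7] += 1
                break
        else:
            raise ValueError(f"no feasible day for {task} within {max_shift} days")
    return plan
```

For example, eight tasks all due on day 2 yield six placements on day 2 and two deferrals to day 3. A greedy pass like this is easy to audit, but it ignores the trade-offs (such as balancing early execution against deferral) that a multi-objective formulation is designed to weigh.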


(This article belongs to the Special Issue AI for Industrial Operation and Maintenance: Recognition Challenges with Limited Data Condition)



Topics

Topic in

Electronics, Eng, Future Internet, Information, Sensors, Sustainability, AI

Artificial Intelligence and Machine Learning in Cyber–Physical Systems

Topic Editors: Wei Wang, Junxin Chen, Jinyu Tian

Deadline: 31 December 2025

Topic in

AI, Computers, Electronics, Information, MAKE, Signals

Recent Advances in Label Distribution Learning

Topic Editors: Xin Geng, Ning Xu, Liangxiao Jiang

Deadline: 31 January 2026

Topic in

AI, Buildings, Electronics, Symmetry, Smart Cities, Urban Science, Automation

Application of Smart Technologies in Buildings

Topic Editors: Yin Zhang, Limei Peng, Ming Tao

Deadline: 28 February 2026

Topic in

AI, Computers, Education Sciences, Societies, Future Internet, Technologies

AI Trends in Teacher and Student Training

Topic Editors: José Fernández-Cerero, Marta Montenegro-Rueda

Deadline: 11 March 2026


Special Issues

Special Issue in

AI

Assisted Living of the Elderly: Recent Advances, Systems, and Frameworks

Guest Editor: Nirmalya Thakur

Deadline: 31 December 2025

Special Issue in

AI

When Trust Meets Intelligence: The Intersection Between Blockchain, Artificial Intelligence and Internet of Things

Guest Editors: Layth Sliman, Amine Dhraief, Hachemi Nabil Dellys

Deadline: 31 December 2025

Special Issue in

AI

Artificial Intelligence in Biomedical Engineering: Challenges and Developments

Guest Editor: Ioannis Kakkos

Deadline: 31 December 2025

Special Issue in

AI

Artificial Intelligence for Future Healthcare: Advancement, Impact, and Prospect in the Field of Cancer

Guest Editor: Arka Bhowmik

Deadline: 31 December 2025