Machine-Learning-Based Prediction Modelling in Primary Care: State-of-the-Art Review

Abstract

1. Introduction

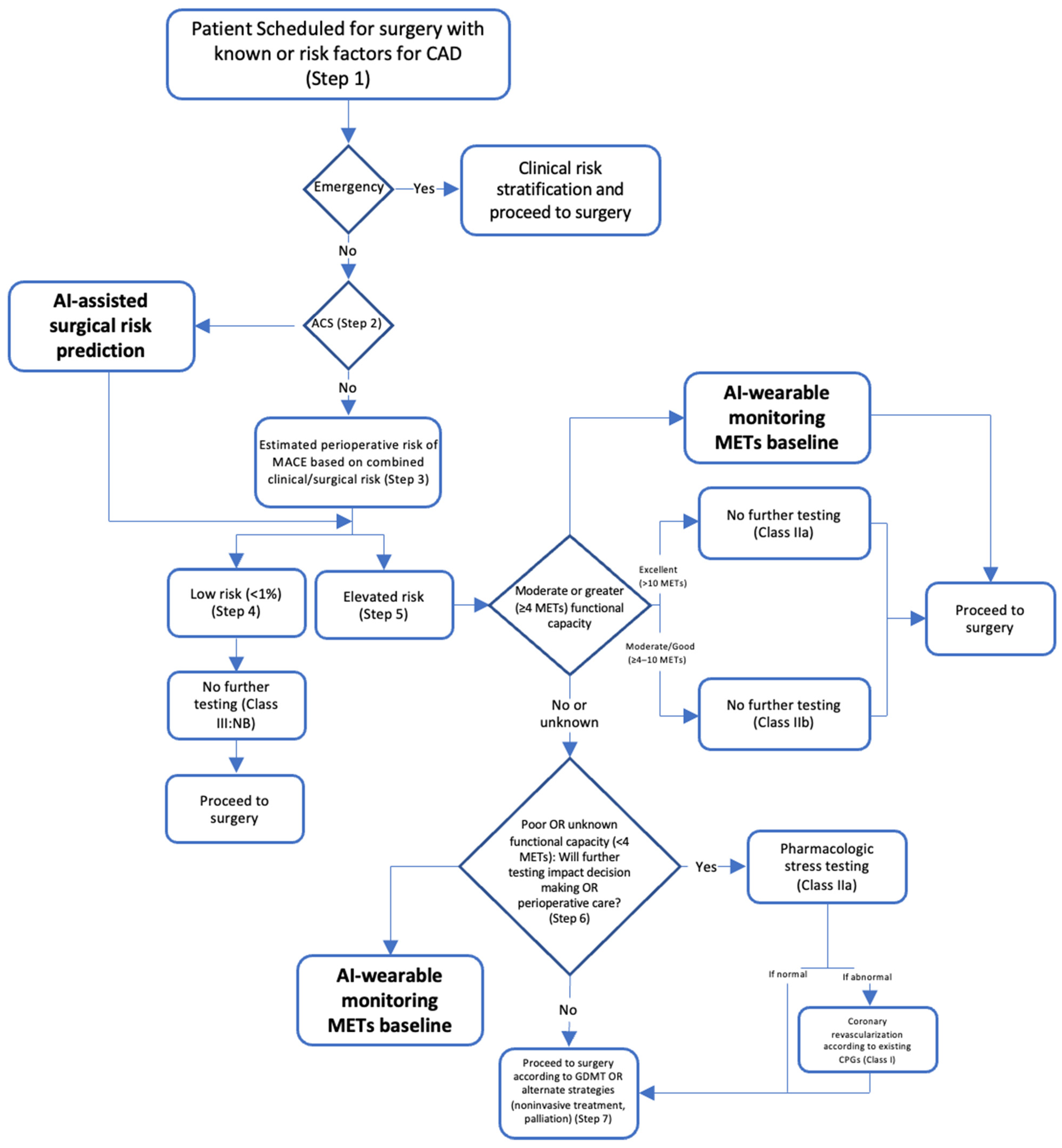

2. Pre-Operative Care

2.1. Post-Operative Complications

3. Screening

3.1. Hypertension

3.2. Hypercholesterolemia

3.3. Cardiovascular Disease

3.4. Eye Disorders and Diseases

3.5. Diabetes

3.6. Cancer

3.7. Human Immunodeficiency Virus and Sexually Transmitted Diseases

3.8. Obstructive Sleep Apnea Syndrome

3.9. Osteoporosis

3.10. Chronic Conditions

3.11. Detecting COVID-19 and Influenza

3.12. Detecting Atrial Fibrillation

4. Limitations

5. Implementing AI in Primary Care

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Name | Abbreviation |
|---|---|
| Acute kidney injury | AKI |
| Adaptive boosting | ADA |
| Age-related macular degeneration | AMD |
| Artificial intelligence | AI |
| Atherosclerotic cardiovascular disease | ASCVD |
| Atrial fibrillation | AF |
| Blood pressure | BP |
| Chronic kidney disease | CKD |
| Chronic obstructive pulmonary disease | COPD |
| Convolutional neural network | CNN |
| Coronary artery calcium score | CACS |
| Coronary artery disease | CAD |
| Decision tree | DT |
| Deep learning | DL |
| Deep neural network | DNN |
| Deep vein thrombosis | DVT |
| Diabetes mellitus | DM |
| Electronic health records | EHR |
| Extreme gradient boosting | XGB |
| Familial hypercholesterolemia | FH |
| Generative adversarial network | GAN |
| Gradient boosting | GB |
| Gradient boosting tree | GBT |
| Heart failure | HF |
| Human immunodeficiency virus | HIV |
| K-nearest neighbors | KNN |
| Logistic regression | LR |
| Low-density lipoprotein | LDL |
| Machine learning | ML |
| Neural network | NN |
| Obstructive sleep apnea syndrome | OSAS |
| Photoplethysmogram | PPG |
| Pre-exposure prophylaxis | PrEP |
| Pulmonary embolism | PE |
| Pulmonary hypertension | PH |
| Random forest | RF |
| Support vector machine | SVM |
| Urinary tract infection | UTI |

| Trial or Registry | N | Aim | Inclusion Criteria | Exclusion Criteria | Status |

|---|---|---|---|---|---|

| NCT05166122 | 1600 | Use AI to screen for diabetic retinopathy | >18 years, screened for diabetic retinopathy, with diabetes, can take retina pictures | Part of community hospital with ophthalmologist, previously diagnosed with some retinal conditions, laser retinal treatment, has other eye diseases | Recruiting |

| NCT05286034 | 4000 | AI ChatBot to improve women participation in cervical cancer screening program | 30–65, did not perform pap smear in last 4 years, living in deprived clusters | Outside age group, had pap smear in last 3 years, had hysterectomy including cervix, pregnant beyond 6 months, already scheduled screening appointment | Not yet recruiting |

| NCT04551287 | 16,164 | Cervical cancer AI screening for cytopathological diagnosis | 25–65 years old, availably of confirmed diagnosis results of cytological exam | Unsatisfactory samples of cytological exam, women diagnosed with other malignant tumors | Completed |

| NCT05435872 | 2000 | AI for gastrointestinal endoscopy screening | Patients received gastroscopy and colonoscopy, endoscopic exam with AI can be accepted | Patients refusing to participate, patients with intolerance or contraindications to endoscopic exams | Recruiting |

| NCT05697601 | 2905 | Finding predictors of ovarian and endometrial cancer for AI screening tool | Women with gynecological symptoms, women underwent routine gynecological exam | Unable to undergo serial gynecological exam | Recruiting |

| NCT04838756 | 100,000 | AI for mammography screening | Women eligible for population-based mammography screening | None | Active, not recruiting |

| NCT05452993 | 330 | AI screening for diabetic retinopathy | Adult patients with diabetes, ongoing diabetes treatment, regular pharmacy customer, informed consent | Unable to read, write, or give consent, refusing to share results with general practitioner | Not yet recruiting |

| NCT04778670 | 55,579 | AI for large-scale breast screening | Participants in regular population-based breast cancer | Incomplete exam, breast implant, complete mastectomy, participant in surveillance program | Active, not recruiting |

| NCT05139797 | 300 | AI-guided echo screening of rare diseases | Patients with high suspicion for cardiac amyloidosis by AI | Patients that decline to be seen at specialty clinic, patients that passed away | Recruiting |

| NCT05139940 | 2432 | AI-enabled TB screening in Zambia | 18 years or older with known HIV status | Individuals that do not meet inclusion criteria | Recruiting |

| NCT04743479 | 5000 | AI screening of pancreatic cancer | Subject can provide informed consent, detailed questionnaire filled, and subject has one of several listed conditions | Subject has been diagnosed with pancreatic cancer or other malignant tumors in past 5 years, subject contraindicates MRI or CT, subjects is in another clinical trial | Recruiting |

| NCT04949776 | 27,000 | AI for breast cancer screening | 50–69 years old, women studied in the program in the set period and for the first time | Unable to give consent, breast prostheses, symptoms or signs of suspected breast cancer | Recruiting |

| NCT05587452 | 950 | AI screening for colorectal cancer | Informed consent, provide blood samples, diagnosed with colorectal cancer or colorectal adenoma | Pregnant or breastfeeding, diagnosed with another cancer, selective exclusions for colorectal cancer and healthy people | Recruiting |

| NCT05456126 | 125 | AI for infant motor screening | Mothers older than 20, no history of recreational drugs, married or live with fathers. Specific criteria for term and preterm infants | None | Recruiting |

| NCT05024591 | 32,714 | AI for breast cancer screening | Eligible for national screening, provides consent | History or current breast cancer, currently pregnant or plans to become pregnant, history of breast surgery, has mammography for diagnostic purposes | Recruiting |

| NCT04732208 | 410 | AI screening of diabetic retinopathy using smartphone camera | Over 18 years, informed consent, established cases of DM, subjects dilated for ophthalmic evaluation | Acute vision loss, contraindicated for fundus imaging, treated for retinopathy, other retinal pathologies, at risk of acute angle closure glaucoma | Completed |

| NCT05311046 | 2400 | AI screening for pediatric sepsis | 3 months–17 years of age, diagnosed with sepsis, blood sample collection | Participating in outside interventions, parents or LARs that do not speak English or Spanish, pregnancy | Recruiting |

| NCT05391659 | 1200 | AI screening for diabetic retinopathy | Diagnosed with DM, >18 years old, informed consent, fluent in written and oral Dutch | History of diabetic retinopathy or diabetic macular edema treatment, contraindicated for imaging by fundus imaging | Recruiting |

| NCT04307030 | 5000 | AI screening for congenital heart disease by heart sounds | 0–18 years of age, children with or without congenital heart disease, informed consent | >18 years of age, unable to undergo echo, not able to provide informed consent | Not yet recruiting |

| NCT04000087 | 358 | ECG AI-guided screening for low ejection fraction | Primary care clinicians who are part of a participating care team | Primary care clinicians working in pediatrics, acute care, nursing homes, and resident care teams | Completed |

| NCT04156880 | 1000 | AI in mammography-based breast cancer screening | Women who had undergone standard mammography, histopathologically proven diagnosis | Concurrent lesions on mammograms, no available pathological diagnosis or long-term follow-up examinations, previous breast surgery, diagnosis of another type of malignancy | Recruiting |

| NCT05645341 | 400 | AI screening of malignant pigmented tumors on the ocular surface | Dark-brown lesions on the ocular surface | Non-pigmented ocular surface tumors, image quality not meeting clinical requirements | Recruiting |

| NCT05048095 | 15,500 | AI in breast cancer screening | Women participating in a regular breast cancer screening program | Breast implants or other foreign implants visible on the mammogram, symptoms or signs of suspected breast cancer | Completed |

| NCT04894708 | 1572 | AI for polyp detection in colonoscopy | >35 years, planned diagnostic colonoscopy or screening colonoscopy (men >50 or women >55 years) | Colon bleeding, colon carcinoma, known polyps scheduled for removal, IBD, colonic stenosis, other suspected colon disease, follow-up care after colon cancer surgery, anticoagulant use, poor general condition, planned incomplete colonoscopy | Recruiting |

| NCT04160988 | 703 | AI for screening diabetic retinopathy | >20 years, DM, color fundus image that includes the macula and optic nerve | Color fundus image used previously; macula, optic nerve, or other region unclear | Completed |

| NCT04213183 | 1789 | DL screening for hepatobiliary diseases | Quality of fundus and slit-lamp images is acceptable, more than 90% of fundus image area includes four main regions, more than 90% of slit-lamp image area includes three main regions | Images with light leakage (>10% of the area) | Completed |

| NCT04832594 | 2500 | AI screening for breast cancer for supplemental MRI | Four-view screening mammography exam | Women in a surveillance program, breast implants, prior breast cancer, breastfeeding, MRI contraindication | Recruiting |

| NCT05704491 | 100 | AI screening for diabetic retinopathy | DM diagnosis, diabetes duration >5 years, >18 years old, informed consent, fluent in writing and speaking German | History of laser treatment, contraindication to fundus imaging systems | Not yet recruiting |

| NCT04699864 | 630 | AI for screening diabetic retinopathy | 18 years and older, informed consent, diagnosed with diabetes, followed and referred by a physician | Younger than 18 years, no informed consent, previous treatment for a retinal condition | Not yet recruiting |

| NCT04859634 | 2000 | AI for detecting multiple ocular fundus lesions | Participants who agree to undergo ultra-widefield fundus imaging | Unable to cooperate with the photographer, no informed consent | Recruiting |

| NCT05734820 | 312 | AI screening colonoscopy | >45 years old, referred for screening colonoscopy, adequate bowel preparation, authorized for endoscopic approach | Pregnancy, clinical condition precluding endoscopy, history of colorectal carcinoma, IBD, no informed consent | Recruiting |

| NCT04859530 | 5886 | AI smartphone for cervical cancer screening | Informed consent | No history of sexual intercourse, pregnancy, condition impairing visualization of the cervix, previous hysterectomy, insufficient general health | Recruiting |

| NCT03773458 | 500 | AI for large-scale screening of scoliosis | Pretreatment back photographs and standing whole-spine X-ray or ultrasound images | Non-idiopathic scoliosis | Completed |

| NCT05704920 | 2722 | AI for lung cancer screening | 50–80 years old, active or ex-smoker, smoking history of at least 20 pack-years, informed consent, affiliated with French social security | Clinical signs of cancer, recent chest scan, health problems affecting life expectancy or limiting ability to undergo lung surgery, vulnerable people | Not yet recruiting |

| NCT05236855 | 200 | AI and spectroscopy for cervical cancer screening | Women undergoing standard HPV screening | NA | Not yet recruiting |

| NCT05527535 | 34,500 | AI for diabetic retinopathy screening | T1DM or T2DM, no full-time ophthalmologist, >18 years old, eligible for fundus photo imaging | T1DM or T2DM with an ophthalmologist, previously diagnosed macular edema, history of retinal laser treatment, other ocular disease, not eligible for fundus imaging | Not yet recruiting |

| NCT05745480 | 2 | NLP for screening opioid misuse | Adults hospitalized at UW Health | NA | Recruiting |

| NCT05490823 | 1000 | AI smartphone for anemia screening | Informed consent | Ophthalmic or fingernail surgery in past 30 days | Recruiting |

| NCT04896827 | 244 | DL and AI for DNIC | 18–70 years old, with or without chronic pain, informed consent | CVD, Raynaud syndrome, severe psychiatric disease, injuries or loss of sensation, pregnancy | Recruiting |

| NCT05752045 | 1389 | AI for screening eye diseases | >18 years, T1DM or T2DM, presenting for diabetic retinopathy screening, beneficiary of a social security scheme, informed consent | Known DR, any condition that could affect the study, social or psychological factors, participation in another clinical research study | Not yet recruiting |

| NCT05243121 | 5000 | AI for MRI in screening breast cancer | Patients with clinical symptoms, undergoing a full-sequence breast MRI (BMRI) exam, at least 6 months of follow-up results | Previous therapy, contraindications to contrast-enhanced breast MRI, prosthesis implanted in the affected breast, lactation or pregnancy | Recruiting |

| NCT04996615 | 924 | AI for identifying diabetic retinopathy and diabetic macular edema | Routine exams, routine laser treatment, diagnosed with T1DM or T2DM, presents visual acuity | Currently using AI system integrated into clinical care, inability to provide informed consent | Recruiting |

| NCT03975504 | 6000 | AI for lung cancer screening | Eligible participants aged 45–75 years with one of several risk factors | Had CT scan of chest in past 12 months, history of any cancer within 5 years | Recruiting |

| NCT05626517 | 2000 | Developing risk stratification tools using AI | 21 years or older, sufficient English or Chinese language skills, informed consent | <21 years old, cardiac event, no informed consent | Not yet recruiting |

| NCT04994899 | 800 | AI screening for mental health | 13–79 years old, English-speaking | Previous participant, unable to verbally respond to standard questions, cannot participate in virtual visit, no informed consent | Recruiting |

| NCT05195385 | 2400 | Lung cancer screening with low-dose CT scans | 50–74 years, smoking history of at least 20 pack-years, quit less than 15 years ago, gives consent, affiliated with the social security system | Clinical symptoms suggesting malignancy, active cancer, history of lung cancer, 2-year follow-up not possible, chest CT scan performed | Recruiting |

| NCT04240652 | 500,000 | AI for diabetic retinopathy screening | T2DM or T1DM, diabetic subjects from other medical institutions, non-diabetic patients, and healthy participants | History of drug abuse, STDs, any condition unsuitable for the study | Recruiting |

| NCT04126239 | 1610 | AI for food addiction screening test | BMI >30, able to give informed consent | Non-French speaker, unable to use internet tools | Recruiting |

| NCT04603404 | 430 | Multimodality imaging in screening, diagnosis, and risk stratification of HFpEF | LVEF > 50%, NT-proBNP > 220 pg/mL or BNP > pg/mL, symptoms and signs of HF, at least one cardiac structural criterion | Special types of cardiomyopathies, infarction, myocardial fibrosis, severe arrhythmia, severe primary cardiac valvular disease, restrictive pericardial disease, refusal to participate in the study | Recruiting |

| NCT05159661 | 1000 | AI for screening brain connectivity and dementia risk estimation | Males and females aged 60–75 years, MCI diagnosis with MMSE > 25, MCI diagnosis with MoCA > 17 | Confirmed dementia, history of cerebrovascular disease, AUD identification test, severe medical disorders associated with cognitive impairment, severe head trauma, severe mental disorders | Recruiting |

| NCT05650086 | 700 | AI for breast screening | Understands the study, informed consent, complies with schedule, >21 years, fits cohort specific criteria | Does not fit cohort specific criteria, unable to complete study procedures | Recruiting |

| NCT05426135 | 3000 | AI for tumor risk assessment | Participants with suspected cancer and healthy participants, informed consent, detailed EHR data | Missing primary clinical or pathological data, lost to follow-up, poor medical image quality | Recruiting |

| NCT05639348 | 650 | AI for risk assessment of postoperative delirium | Surgical patients, >60 years old, planned postoperative hospital stay >2 days, informed consent | Preoperative delirium, insufficient knowledge of German or French, intracranial surgery, cardiac surgery, surgery within the previous two weeks, unable to provide informed consent | Recruiting |

| NCT05466864 | 120 | Screening of OSA using BSP | Hospitalized with acute ischemic stroke, 18–80 years, informed consent | History of AF, LVEF < 45%, aphasia, unstable cardiopulmonary status, surgery (including tracheotomy) within the past 30 days, narcotic use, on supplemental O2, PAP device, or ventilator, unable to understand instructions | Recruiting |

| NCT05655117 | 440 | AI for detecting eye complications in diabetics | Diabetic patients aged 18–90 | Severely ill patient or patient with cancer | Not yet recruiting |

| NCT03688906 | 3275 | AI colorectal cancer screening test | Differs across three cohorts | Differs across three cohorts | Completed |

| NCT05246163 | 1500 | AI smartphone for skin cancer detection | Patients with one or two lesions meeting one of several criteria, informed consent | Lack of informed consent | Recruiting |

| NCT05730192 | 950 | AI for detection of gastrointestinal lesions in endoscopy | Screening or surveillance colonoscopy, age 40 or older, informed consent | Emergency colonoscopies, IBD, CRC, previous colonic resection, returning for elective colonoscopy, polyposis syndromes, contraindications | Not yet recruiting |

| NCT05566002 | 2000 | AI evaluation of pulmonary hypertension | >18 years, previously received diagnostic imaging | No RHC performed, exam quality does not meet requirements, substantial missing results | Recruiting |

| ML Model | Advantages | Limitations | Clinical Applications in Primary Care |
|---|---|---|---|
| Logistic Regression | Easy to implement and interpret, handles binary and multi-class classification | Sensitive to outliers, assumes a linear relationship between predictors and the log-odds of the outcome | Diagnostic tests, treatment selection, prognostic modeling, predicting disease risk |
| Convolutional Neural Network | Excels at image and video recognition, learns hierarchical features | Requires large amounts of data and computational resources, limited interpretability | Image classification, diagnosis from medical imaging |
| Support Vector Machine | Handles non-linear decision boundaries via kernel functions, generalizes well | Requires careful selection of the kernel function and hyperparameters, struggles with noisy data | Diagnosing disease, risk stratification, classifying clinical data |
| K-Nearest Neighbors | Simple and easy to implement, handles non-linear decision boundaries | Memory- and time-intensive at prediction time, sensitive to feature scaling and irrelevant features | Forecasting disease progression |
| Random Forest | Performs well with high-dimensional data, handles non-linear effects | Hard to interpret, can overfit noisy data | Identifying risk factors, predicting outcomes |
| Adaptive Boosting | Handles regression and classification problems, combines weak learners | Can overfit when base learners are too complex, sensitive to noisy data and outliers | Predicting disease risk, detecting high-risk patients |
| Gradient Boosting | Performs well with large datasets, handles regression and classification | Can overfit when base learners are too complex, sensitive to noisy data | Forecasting outcomes, diagnosing disease |
| Neural Network | Handles large datasets, performs well on speech and image recognition | Requires substantial computational resources and data, overfits if overly complex | Diagnosing disease, selecting treatment, predicting disease risk |
| Extreme Gradient Boosting | Fast with large datasets, handles regression and classification | Requires hyperparameter tuning, can overfit with overly complex base learners | Predicting outcomes, detecting high-risk patients, diagnosing disease |
| Decision Tree | Simple and easy to interpret, handles categorical and numerical data | Overfits noisy data, sensitive to small variations in the training data | Identifying risk factors, diagnosing disease, predicting disease risk |
| Deep Neural Network | Performs well with large datasets, automatically learns hierarchical features | Requires large amounts of data, overfits if the network is too complex | Diagnosing disease, detecting high-risk patients, predicting disease risk |
| Gated Recurrent Unit | Performs well with time-series data, handles variable-length sequences | Sensitive to hyperparameter choices and training conditions, may generalize poorly to new data | Predicting disease risk, diagnosing disease, determining outcomes |
| XGBoost | Fast, accurate, handles regression and classification problems | Requires hyperparameter tuning, can overfit noisy data | Predicting outcomes, identifying risk factors |
| CatBoost | Handles categorical data natively, handles regression and classification problems | Requires substantial computational resources and data, requires hyperparameter tuning | Identifying risk factors, forecasting outcomes |
| Naïve Bayes | Simple, efficient, handles high-dimensional data | Assumes features are conditionally independent given the class, performs poorly with correlated features | Diagnosing disease, forecasting disease risk |
| Logistic Model Tree | Combines decision trees (DT) and logistic regression (LR) to capture non-linear effects | Overfits noisy data, requires hyperparameter tuning | Determining risk factors, predicting disease risk |
| Long Short-Term Memory | Performs well with time-series data, handles variable-length sequences | Computationally complex, difficult to interpret, prone to overfitting, can struggle with very long sequences | Forecasting outcomes, diagnosing disease, forecasting disease risk |
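The table credits logistic regression with being easy to interpret while assuming a linear relationship. The minimal Python sketch below shows how both properties appear in a fitted risk model. The `LogisticRiskModel` class, the predictor names, and the coefficient values are hypothetical and are not taken from any study discussed in this review. Predictors enter the log-odds additively, the sigmoid function converts log-odds to a probability, and exponentiating a coefficient gives an odds ratio that can be read directly.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LogisticRiskModel:
    """Hypothetical fitted logistic regression risk score (illustrative only)."""

    intercept: float
    coefficients: dict[str, float]  # predictor name -> coefficient on the log-odds scale

    def log_odds(self, patient: dict[str, float]) -> float:
        # Linear predictor: each predictor shifts the log-odds additively.
        return self.intercept + sum(
            coef * patient[name] for name, coef in self.coefficients.items()
        )

    def risk(self, patient: dict[str, float]) -> float:
        # Logistic (sigmoid) link maps log-odds to a probability in (0, 1).
        return 1.0 / (1.0 + math.exp(-self.log_odds(patient)))

    def odds_ratio(self, name: str, delta: float = 1.0) -> float:
        # exp(coef * delta): multiplicative change in the odds for a
        # `delta`-unit increase in one predictor, all others held fixed.
        return math.exp(self.coefficients[name] * delta)


# Hypothetical coefficients, chosen only so the arithmetic is easy to follow.
model = LogisticRiskModel(
    intercept=-7.0,
    coefficients={"age_years": 0.05, "systolic_bp_mmhg": 0.02, "smoker": 0.7},
)
patient = {"age_years": 60, "systolic_bp_mmhg": 140, "smoker": 1}

print(round(model.risk(patient), 3))                # 0.378
print(round(model.odds_ratio("age_years", 10), 2))  # 1.65
```

Here the log-odds are -7 + 3 + 2.8 + 0.7 = -0.5, and a 10-year increase in age multiplies the odds by exp(0.05 × 10) ≈ 1.65 whatever the patient's other values are. That constant effect is what makes the model easy to explain, and it is also the linearity assumption that limits it when real effects are non-linear.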
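The feature sensitivity listed for K-Nearest Neighbors arises largely because distances are computed on raw feature values, so a feature measured in large units can dominate. The plain-Python sketch below uses a hypothetical toy dataset (invented systolic blood pressure and creatinine values with made-up risk labels) and a 1-nearest-neighbor rule. It shows that the predicted label can flip once features are standardized. Z-score standardization is assumed here as one common remedy, and the helper names `nearest_label`, `fit_zscore`, and `apply_zscore` are illustrative.

```python
import math
from statistics import mean, pstdev

# Hypothetical toy data: (systolic BP in mmHg, serum creatinine in mg/dL).
# Values and labels are invented for illustration only.
TRAIN = [(120.0, 2.0), (130.0, 0.8), (150.0, 0.9), (110.0, 1.8)]
LABELS = ["high", "low", "low", "high"]
QUERY = (128.0, 1.9)


def nearest_label(train, labels, query):
    """1-nearest-neighbor classification by Euclidean distance."""
    distances = [math.dist(point, query) for point in train]
    return labels[distances.index(min(distances))]


def fit_zscore(train):
    """Per-feature (mean, population SD), estimated on the training set only."""
    return [(mean(column), pstdev(column)) for column in zip(*train)]


def apply_zscore(point, params):
    """Standardize one point using the training-set statistics."""
    return tuple((value - m) / s for value, (m, s) in zip(point, params))


params = fit_zscore(TRAIN)
scaled_train = [apply_zscore(p, params) for p in TRAIN]
scaled_query = apply_zscore(QUERY, params)

# Raw units: differences in mmHg dwarf differences in mg/dL.
print(nearest_label(TRAIN, LABELS, QUERY))                # low
# Standardized units: both features contribute on a comparable scale.
print(nearest_label(scaled_train, LABELS, scaled_query))  # high
```

The scaling parameters are fitted on the training data only and then reused for each new patient, so no information from the query leaks into the reference set.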

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


El-Sherbini, A.H.; Hassan Virk, H.U.; Wang, Z.; Glicksberg, B.S.; Krittanawong, C. Machine-Learning-Based Prediction Modelling in Primary Care: State-of-the-Art Review. AI 2023, 4, 437-460. https://doi.org/10.3390/ai4020024
