Error Correction and Adaptation in Conversational AI: A Review of Techniques and Applications in Chatbots

Abstract

1. Introduction

1.1. The Importance of Error Correction in ML

1.2. Article Contributions and Overview

2. Understanding Chatbots

2.1. Types of Chatbots: Rule-Based vs. AI-Based

2.1.1. Rule-Based Chatbots

2.1.2. AI-Based Chatbots

2.2. Common Applications of Chatbots

3. The Nature of Mistakes in Chatbots

3.1. Types of Errors in Chatbot Responses

3.2. Impact of Chatbot Errors on User Experience and Trust

4. Foundations of ML for Chatbots

4.1. Key ML Concepts in Chatbots

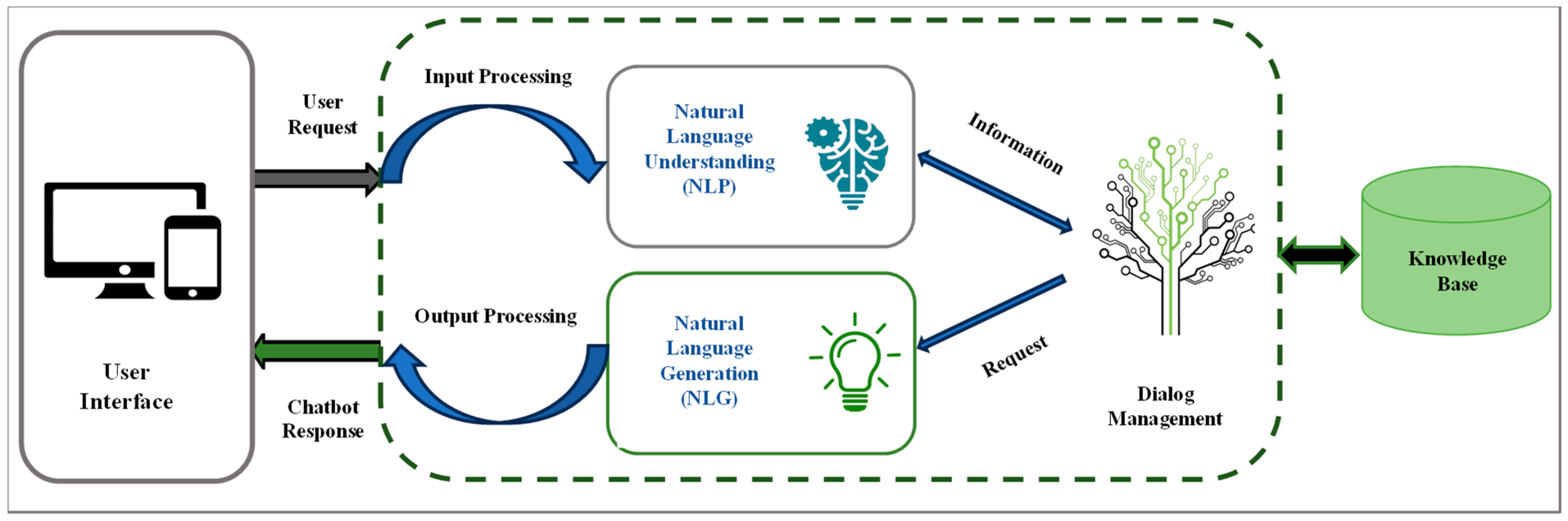

4.1.1. Natural Language Processing (NLP)

4.1.2. Learning Algorithms Specific to Chatbots

4.1.3. Sentiment Analysis for Emotional Context

4.1.4. Large Language Models (LLMs)

- (1) Understand user input: LLMs analyze text input from users, deciphering the meaning, intent, and context behind their queries.

- (2) Generate human-like responses: LLMs can craft responses that mimic human conversation, making interactions feel more natural and engaging.

- (3) Adapt and learn: LLMs can continuously learn from new data and interactions, improving their performance and responsiveness over time.
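The three capabilities listed above can be pictured as a small loop: interpret the input, produce a reply, and fold corrections back into the model. The sketch below is a deliberately simplified stand-in, assuming a keyword-count intent matcher in place of an actual LLM. The class `ToyChatbot`, its methods, and the intent names are hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict


class ToyChatbot:
    """Keyword-count stand-in for an LLM; hypothetical, for illustration only."""

    def __init__(self):
        # intent -> Counter of words seen in examples of that intent
        self.word_counts = defaultdict(Counter)
        # intent -> canned reply
        self.responses = {}

    def learn(self, text, intent, response=None):
        """(3) Adapt: fold a labeled example or a user correction into the model."""
        self.word_counts[intent].update(text.lower().split())
        if response is not None:
            self.responses[intent] = response

    def understand(self, text):
        """(1) Understand: pick the intent whose examples share the most words."""
        words = text.lower().split()
        scores = {
            intent: sum(counts[w] for w in words)
            for intent, counts in self.word_counts.items()
        }
        best = max(scores, key=scores.get, default=None)
        # No word overlap with any known intent means the input is not understood.
        return best if best is not None and scores[best] > 0 else "unknown"

    def respond(self, text):
        """(2) Generate: return the canned reply for the detected intent."""
        fallback = "Sorry, I did not understand that."
        return self.responses.get(self.understand(text), fallback)


bot = ToyChatbot()
bot.learn("where is my order", "order_status", "Let me check your order.")
bot.learn("i want a refund", "refund", "I can help with a refund.")

before = bot.understand("money back please")   # no overlap with known examples
bot.learn("money back please", "refund")       # correction supplied as feedback
after = bot.understand("money back please")    # now matched to the refund intent
```

In this toy setup the misunderstood phrase is resolved after a single correction. A real LLM-based chatbot would typically adapt through fine-tuning or similar update procedures rather than word counts, so the example only illustrates the shape of the loop.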

4.1.5. Performance Metrics and Evaluation

4.2. Learning from Interactions

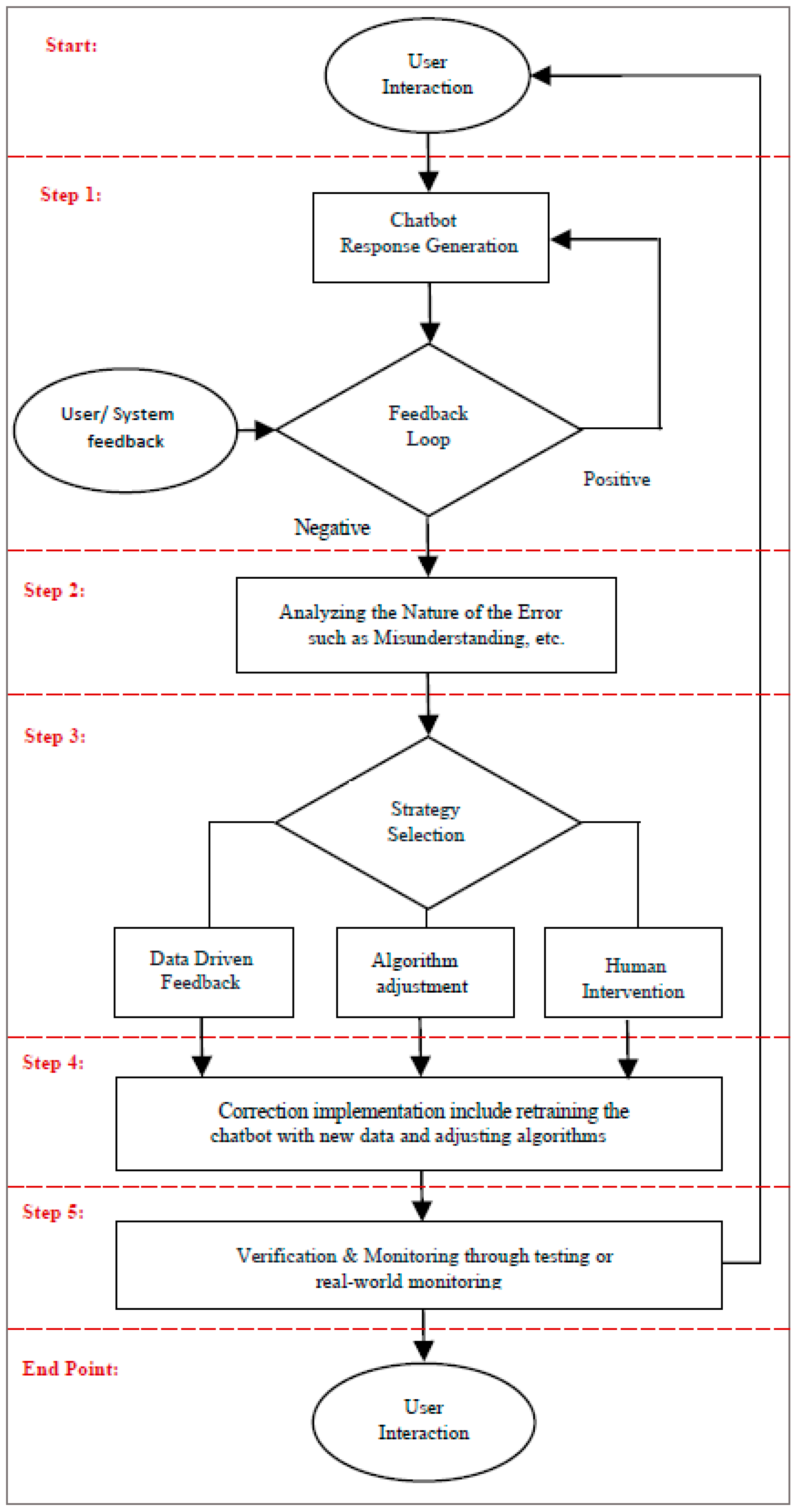

5. Strategies for Error Correction

5.1. Data-Driven Approach

5.2. Algorithmic Adjustments

5.2.1. RL in Chatbots

5.2.2. Supervised Learning in Chatbots

5.3. Overcoming Data and Label Scarcity

5.3.1. Semi-Supervised Learning

5.3.2. Weakly Supervised Learning

5.3.3. Few-Shot, Zero-Shot, and One-Shot Learning

5.4. Integrating Human Oversight

6. Case Studies: Error Correction in Chatbots

6.1. Effective Chatbot Learning Examples

6.1.1. Customer Service Chatbot in E-Commerce

6.1.2. Healthcare Assistant Chatbot

6.1.3. Banking Support Chatbot

6.1.4. Travel Booking Chatbot

6.1.5. Education

6.1.6. Language Learning Assistant

6.2. Strategy Analysis and Outcomes

6.2.1. Feedback Loops and User Engagement

6.2.2. Supervised Learning for Domain-Specific Accuracy

6.2.3. Semi-Supervised Learning for Expansive Understanding

6.2.4. RL for Dynamic Adaptation

6.2.5. Human-in-the-Loop for Nuanced Corrections

6.2.6. Overall Impact and Business Value

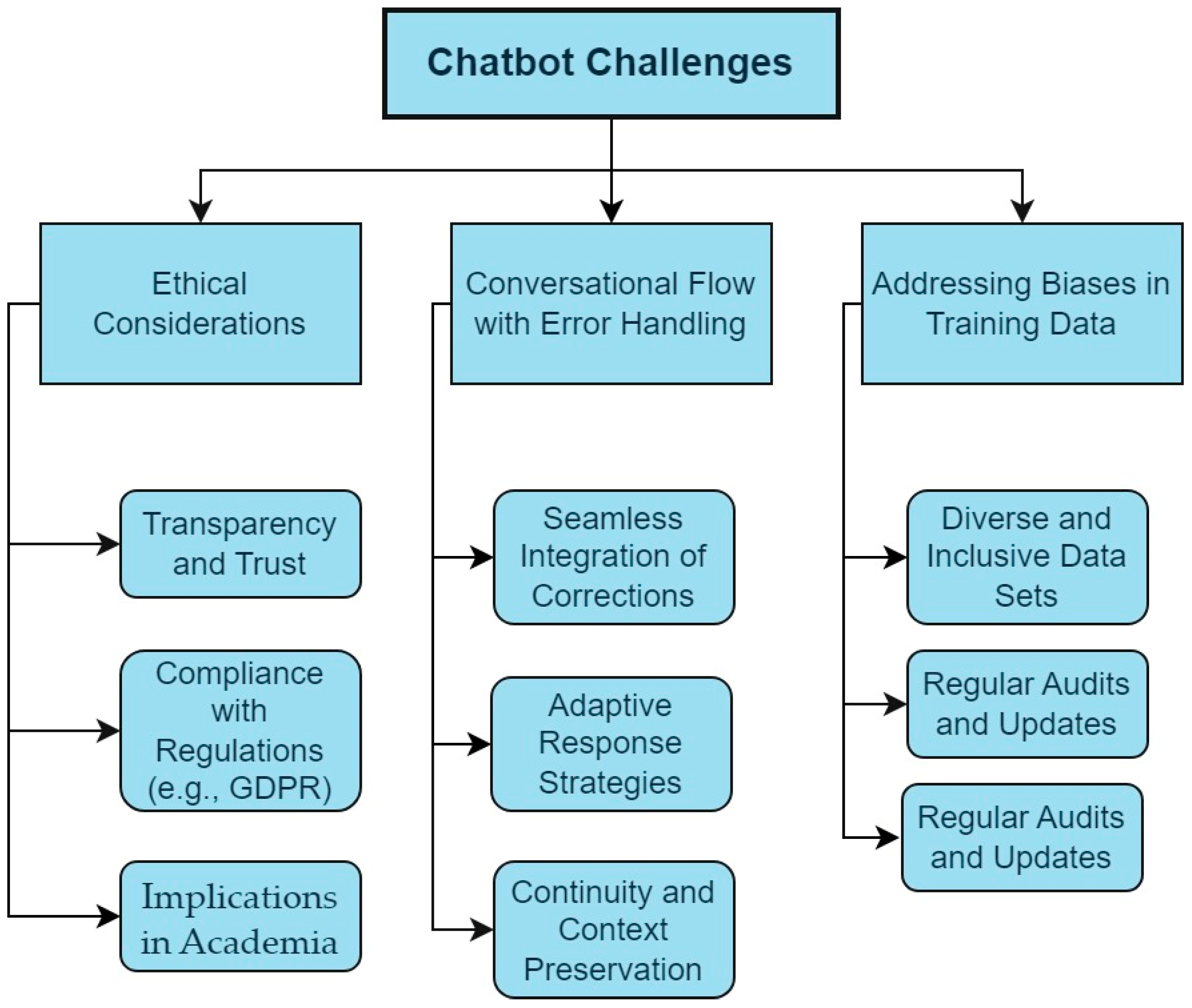

7. Challenges and Considerations

7.1. Ethical Considerations in Chatbot Training

7.2. Balancing Error Correction with Maintaining Conversational Flow

7.3. Addressing Biases in Training Data

8. Future of Chatbot Training

8.1. Emerging Technologies and Methods in Chatbot Training

8.2. Innovative Algorithms in Chatbot Training

8.3. Evolution of Error Correction in Chatbots

9. Conclusions

Funding

Conflicts of Interest

References

- Gupta, A.; Hathwar, D.; Vijayakumar, A. Introduction to AI chatbots. Int. J. Eng. Res. Technol. 2020, 9, 255–258. [Google Scholar]

- Adamopoulou, E.; Moussiades, L. Chatbots: History technology, and applications. Mach. Learn. Appl. 2020, 2, 100006. [Google Scholar] [CrossRef]

- Suhaili, S.M.; Salim, N.; Jambli, M.N. Service chatbots: A systematic review. Expert Syst. Appl. 2021, 184, 115461. [Google Scholar] [CrossRef]

- Adam, M.; Wessel, M.; Benlian, A. AI-based chatbots in customer service and their effects on user compliance. Electron. Mark. 2021, 31, 427–445. [Google Scholar] [CrossRef]

- Moriuchi, E.; Landers, V.M.; Colton, D.; Hair, N. Engagement with chatbots versus augmented reality interactive technology in e-commerce. J. Strateg. Mark. 2021, 29, 375–389. [Google Scholar] [CrossRef]

- Bhirud, N.; Tataale, S.; Randive, S.; Nahar, S. A literature review on chatbots in healthcare domain. Int. J. Sci. Technol. Res. 2019, 8, 225–231. [Google Scholar]

- Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033. [Google Scholar] [CrossRef]

- Kecht, C.; Egger, A.; Kratsch, W.; Röglinger, M. Quantifying chatbots’ ability to learn business processes. Inf. Syst. 2023, 113, 102176. [Google Scholar] [CrossRef]

- Kaczorowska-Spychalska, D. How chatbots influence marketing. Management 2019, 23, 251–270. [Google Scholar] [CrossRef]

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774. [Google Scholar]

- Hwang, G.-J.; Chang, C.-Y. A review of opportunities and challenges of chatbots in education. Interact. Learn. Environ. 2023, 31, 4099–4112. [Google Scholar] [CrossRef]

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Ying, X. An overview of overfitting and its solutions. J. Phys. Conf. Ser. 2019, 1168, 022022. [Google Scholar] [CrossRef]

- Amodei, D.; Olah, C.; Steinhardt, J.; Christiano, P.; Schulman, J.; Mané, D. Concrete problems in AI safety. arXiv 2016, arXiv:1606.06565. [Google Scholar]

- Manjarrés, Á.; Fernández-Aller, C.; López-Sánchez, M.; Rodríguez-Aguilar, J.A.; Castañer, M.S. Artificial intelligence for a fair, just, and equitable world. IEEE Technol. Soc. Mag. 2021, 40, 19–24. [Google Scholar] [CrossRef]

- Kamishima, T.; Akaho, S.; Asoh, H.; Sakuma, J. Fairness-aware classifier with prejudice remover regularizer. In Proceedings of the Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2012, Bristol, UK, 24–28 September 2012; Proceedings, Part II 23. Springer: Berlin/Heidelberg, Germany, 2012; pp. 35–50. [Google Scholar]

- Davis, S.E.; Walsh, C.G.; Matheny, M.E. Open questions and research gaps for monitoring and updating AI-enabled tools in clinical settings. Front. Digit. Health 2022, 4, 958284. [Google Scholar] [CrossRef] [PubMed]

- Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Dennison, D. Hidden technical debt in machine learning systems. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9. [Google Scholar]

- Horvitz, E. Principles and applications of continual computation. Artif. Intell. 2001, 126, 159–196. [Google Scholar] [CrossRef]

- Amershi, S.; Begel, A.; Bird, C.; DeLine, R.; Gall, H.; Kamar, E.; Zimmermann, T. Software engineering for machine learning: A case study. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), Montréal, Canada, 25–31 May 2019; IEEE: Piscataway, NJ, USA; pp. 291–300. [Google Scholar]

- Adamopoulou, E.; Moussiades, L. An overview of chatbot technology. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Neos Marmaras, Greece, 5–7 June 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 373–383. [Google Scholar]

- McTear, M.; Ashurkina, M. A New Era in Conversational AI. In Transforming Conversational AI: Exploring the Power of Large Language Models in Interactive Conversational Agents; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–16. [Google Scholar]

- Galitsky, B.; Galitsky, B. Adjusting chatbot conversation to user personality and mood. Artificial Intelligence for Customer Relationship Management: Solving Customer Problems; Springer: Berlin/Heidelberg, Germany, 2021; pp. 93–127. [Google Scholar]

- Peng, Z.; Ma, X. A survey on construction and enhancement methods in service chatbots design. CCF Trans. Pervasive Comput. Interact. 2019, 1, 204–223. [Google Scholar] [CrossRef]

- Rožman, M.; Oreški, D.; Tominc, P. Artificial-intelligence-supported reduction of employees’ workload to increase the company’s performance in today’s VUCA Environment. Sustainability 2023, 15, 5019. [Google Scholar] [CrossRef]

- Toader, D.-C.; Boca, G.; Toader, R.; Măcelaru, M.; Toader, C.; Ighian, D.; Rădulescu, A.T. The effect of social presence and chatbot errors on trust. Sustainability 2019, 12, 256. [Google Scholar] [CrossRef]

- Thorat, S.A.; Jadhav, V. A review on implementation issues of rule-based chatbot systems. In Proceedings of the International Conference on Innovative Computing & Communications (ICICC), New Delhi, India, 20–22 February 2020. [Google Scholar]

- Singh, J.; Joesph, M.H.; Jabbar, K.B.A. Rule-based chabot for student enquiries. J. Phys. Conf. Ser. 2019, 1228, 012060. [Google Scholar] [CrossRef]

- Miura, C.; Chen, S.; Saiki, S.; Nakamura, M.; Yasuda, K. Assisting personalized healthcare of elderly people: Developing a rule-based virtual caregiver system using mobile chatbot. Sensors 2022, 22, 3829. [Google Scholar] [CrossRef] [PubMed]

- Lalwani, T.; Bhalotia, S.; Pal, A.; Rathod, V.; Bisen, S. Implementation of a Chatbot System using AI and NLP. Int. J. Innov. Res. Comput. Sci. Technol. (IJIRCST) 2018, 6, 1–5. [Google Scholar] [CrossRef]

- Kocaballi, A.B.; Sezgin, E.; Clark, L.; Carroll, J.M.; Huang, Y.; Huh-Yoo, J.; Kim, J.; Kocielnik, R.; Lee, Y.-C.; Mamykina, L.; et al. Design and evaluation challenges of conversational agents in health care and well-being: Selective review study. J. Med. Internet Res. 2022, 24, e38525. [Google Scholar] [CrossRef] [PubMed]

- Al-Sharafi, M.A.; Al-Emran, M.; Iranmanesh, M.; Al-Qaysi, N.; Iahad, N.A.; Arpac, I.I. Understanding the impact of knowledge management factors on the sustainable use of AI-based chatbots for educational purposes using a hybrid SEM-ANN approach. Interact. Learn. Environ. 2023, 31, 7491–7510. [Google Scholar] [CrossRef]

- Park, K.-R. Development of Artificial Intelligence-based Legal Counseling Chatbot System. J. Korea Soc. Comput. Inf. 2021, 26, 29–34. [Google Scholar]

- Agarwal, R.; Wadhwa, M. Review of state-of-the-art design techniques for chatbots. SN Comput. Sci. 2020, 1, 246. [Google Scholar] [CrossRef]

- Stoilova, E. AI chatbots as a customer service and support tool. ROBONOMICS J. Autom. Econ. 2021, 2, 21. [Google Scholar]

- Hildebrand, C.; Bergner, A. AI-driven sales automation: Using chatbots to boost sales. NIM Mark. Intell. Rev. 2019, 11, 36–41. [Google Scholar] [CrossRef]

- Patel, N.; Trivedi, S. Leveraging predictive modeling, machine learning personalization, NLP customer support, and AI chatbots to increase customer loyalty. Empir. Quests Manag. Essences 2020, 3, 1–24. [Google Scholar]

- Maia, E.; Vieira, P.; Praça, I. Empowering Preventive Care with GECA Chatbot. Healthcare 2023, 11, 2532. [Google Scholar] [CrossRef] [PubMed]

- Doherty, D.; Curran, K. Chatbots for online banking services. Web Intell. 2019, 17, 327–342. [Google Scholar] [CrossRef]

- Mendoza, S.; Sánchez-Adame, L.M.; Urquiza-Yllescas, J.F.; González-Beltrán, B.A.; Decouchant, D. A model to develop chatbots for assisting the teaching and learning process. Sensors 2022, 22, 5532. [Google Scholar] [CrossRef]

- Nawaz, N.; Gomes, A.M. Artificial intelligence chatbots are new recruiters. (IJACSA) Int. J. Adv. Comput. Sci. Appl. 2019, 10, 1–5. [Google Scholar] [CrossRef]

- Lasek, M.; Jessa, S. Chatbots for customer service on hotels’ websites. Inf. Syst. Manag. 2013, 2, 146–158. [Google Scholar]

- García-Méndez, S.; De Arriba-Pérez, F.; González-Castaño, F.J.; Regueiro-Janeiro, J.A.; Gil-Castiñeira, F. Entertainment chatbot for the digital inclusion of elderly people without abstraction capabilities. IEEE Access 2021, 9, 75878–75891. [Google Scholar] [CrossRef]

- Cheng, Y.; Jiang, H. How do AI-driven chatbots impact user experience? Examining gratifications, perceived privacy risk, satisfaction, loyalty, and continued use. J. Broadcast. Electron. Media 2020, 64, 592–614. [Google Scholar] [CrossRef]

- De Sá Siqueira, M.A.; Müller, B.C.; Bosse, T. When do we accept mistakes from chatbots? The impact of human-like communication on user experience in chatbots that make mistakes. Int. J. Hum. –Comput. Interact. 2023, 40, 2862–2872. [Google Scholar] [CrossRef]

- Luttikholt, T. The Influence of Error Types on the User Experience of Chatbots. Master’s Thesis, Radboud University Nijmegen, Nijmegen, The Netherlands, 2023. [Google Scholar]

- Zamora, J. I’m sorry, dave, i’m afraid i can’t do that: Chatbot perception and expectations. In Proceedings of the 5th International Conference on Human Agent Interaction, Gothenberg, Sweden, 4–7 December 2023; pp. 253–260. [Google Scholar]

- Chen, H.; Liu, X.; Yin, D.; Tang, J. A survey on dialogue systems: Recent advances and new frontiers. Acm Sigkdd Explor. Newsl. 2017, 19, 25–35. [Google Scholar] [CrossRef]

- Radford, A.; Jozefowicz, R.; Sutskever, I. Learning to generate reviews and discovering sentiment. arXiv 2017, arXiv:1704.01444. [Google Scholar]

- Han, X.; Zhou, M.; Wang, Y.; Chen, W.; Yeh, T. Democratizing Chatbot Debugging: A Computational Framework for Evaluating and Explaining Inappropriate Chatbot Responses. In Proceedings of the 5th International Conference on Conversational User Interfaces, Eindhoven, The Netherlands, 19–21 July 2023; pp. 1–7. [Google Scholar]

- Henderson, P.; Sinha, K.; Angelard-Gontier, N.; Ke, N.R.; Fried, G.; Lowe, R.; Pineau, J. Ethical challenges in data-driven dialogue systems. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 2–3 February 2018; pp. 123–129. [Google Scholar]

- Gehman, S.; Gururangan, S.; Sap, M.; Choi, Y.; Smith, N.A. Realtoxicityprompts: Evaluating neural toxic degeneration in language models. arXiv 2020, arXiv:2009.11462. [Google Scholar]

- Gabrilovich, E.; Markovitch, S. Wikipedia-based semantic interpretation for natural language processing. J. Artif. Intell. Res. 2009, 34, 443–498. [Google Scholar] [CrossRef]

- Dong, X.; Gabrilovich, E.; Heitz, G.; Horn, W.; Lao, N.; Murphy, K.; Zhang, W. Knowledge vault: A web-scale approach to probabilistic knowledge fusion. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 601–610. [Google Scholar]

- Li, J.; Galley, M.; Brockett, C.; Gao, J.; Dolan, B. A diversity-promoting objective function for neural conversation models. arXiv 2015, arXiv:1510.03055. [Google Scholar]

- Shao, L.; Gouws, S.; Britz, D.; Goldie, A.; Strope, B.; Kurzweil, R. Generating Long and Diverse Responses with Neural Conversation Models. 2016. Available online: https://www.researchgate.net/publication/312447509_Generating_Long_and_Diverse_Responses_with_Neural_Conversation_Models (accessed on 1 April 2024).

- Zheng, Y.; Chen, G.; Huang, M.; Liu, S.; Zhu, X. Personalized dialogue generation with diversified traits. arXiv 2019, arXiv:1901.09672. [Google Scholar]

- Zhang, S.; Dinan, E.; Urbanek, J.; Szlam, A.; Kiela, D.; Weston, J. Personalizing dialogue agents: I have a dog, do you have pets too? arXiv 2018, arXiv:1801.07243. [Google Scholar]

- Johnson, M.; Schuster, M.; Le, Q.V.; Krikun, M.; Wu, Y.; Chen, Z.; Thorat, N.; Viégas, F.; Wattenberg, M.; Corrado, G.; et al. Google’s multilingual neural machine translation system: Enabling zero-shot translation. Trans. Assoc. Comput. Linguist. 2017, 5, 339–351. [Google Scholar] [CrossRef]

- Vulić, I.; Moens, M.-F. Monolingual and cross-lingual information retrieval models based on (bilingual) word embeddings. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, Santiago, Chile, 9–13 August 2015; pp. 363–372. [Google Scholar]

- Alkaissi, H.; McFarlane, S.I. Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus 2023, 15, e35179. [Google Scholar] [CrossRef] [PubMed]

- Hannigan, T.R.; McCarthy, I.P.; Spicer, A. Beware of botshit: How to manage the epistemic risks of generative chatbots. Bus. Horiz. 2024. [Google Scholar] [CrossRef]

- Maynez, J.; Narayan, S.; Bohnet, B.; McDonald, R. On faithfulness and factuality in abstractive summarization. arXiv 2020, arXiv:2005.00661. [Google Scholar]

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38. [Google Scholar] [CrossRef]

- Rane, N. Enhancing Customer Loyalty through Artificial Intelligence (AI), Internet of Things (IoT), and Big Data Technologies: Improving Customer Satisfaction, Engagement, Relationship, and Experience (October 13, 2023). Available online: https://ssrn.com/abstract=4616051 (accessed on 1 March 2024).

- Hsu, C.-L.; Lin, J.C.-C. Understanding the user satisfaction and loyalty of customer service chatbots. J. Retail. Consum. Serv. 2023, 71, 103211. [Google Scholar] [CrossRef]

- Luger, E.; Sellen, A. Like Having a Really Bad PA" The Gulf between User Expectation and Experience of Conversational Agents. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 5286–5297. [Google Scholar]

- Cassell, J.; Bickmore, T. External manifestations of trustworthiness in the interface. Commun. ACM 2000, 43, 50–56. [Google Scholar] [CrossRef]

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80. [Google Scholar] [CrossRef] [PubMed]

- Ho, R.C. Chatbot for online customer service: Customer engagement in the era of artificial intelligence. In Impact of Globalization and Advanced Technologies on Online Business Models; IGI Global: Hershey, PA, USA, 2021; pp. 16–31. [Google Scholar]

- Galitsky, B.; Galitsky, B.; Goldberg, S. Explainable machine learning for chatbots. In Developing Enterprise Chatbots: Learning Linguistic Structures; Springer: Berlin/Heidelberg, Germany, 2019; pp. 53–83. [Google Scholar]

- Suta, P.; Lan, X.; Wu, B.; Mongkolnam, P.; Chan, J.H. An overview of machine learning in chatbots. Int. J. Mech. Eng. Robot. Res. 2020, 9, 502–510. [Google Scholar] [CrossRef]

- Yoo, S.; Jeong, O. An intelligent chatbot utilizing BERT model and knowledge graph. J. Soc. e-Bus. Stud. 2020, 24, 87–98. [Google Scholar]

- Kondurkar, I.; Raj, A.; Lakshmi, D. Modern Applications With a Focus on Training ChatGPT and GPT Models: Exploring Generative AI and NLP. In Advanced Applications of Generative AI and Natural Language Processing Models; IGI Global: Hershey, PA, USA, 2024; pp. 186–227. [Google Scholar]

- Yenduri, G.; Srivastava, G.; Maddikunta, P.K.R.; Jhaveri, R.H.; Wang, W.; Vasilakos, A.V.; Gadekallu, T.R. Generative pre-trained transformer: A comprehensive review on enabling technologies, potential applications, emerging challenges, and future directions. arXiv 2023, arXiv:2305.10435. [Google Scholar] [CrossRef]

- Kamphaug, Å.; Granmo, O.-C.; Goodwin, M.; Zadorozhny, V.I. Towards open domain chatbots—A gru architecture for data driven conversations. In Proceedings of the Internet Science: INSCI 2017 International Workshops, IFIN, DATA ECONOMY, DSI, and CONVERSATIONS, Thessaloniki, Greece, 22 November 2017; Revised Selected Papers 4. Springer: Berlin/Heidelberg, Germany, 2018; pp. 213–222. [Google Scholar]

- Galitsky, B.; Galitsky, B. Chatbot components and architectures. Developing Enterprise Chatbots: Learning Linguistic Structures; Springer: Berlin/Heidelberg, Germany, 2019; pp. 13–51. [Google Scholar]

- Hussain, S.; Sianaki, O.A.; Ababneh, N. A survey on conversational agents/chatbots classification and design techniques. In Web, Artificial Intelligence and Network Applications, Proceedings of the Workshops of the 33rd International Conference on Advanced Information Networking and Applications (WAINA-2019), Matsue, Japan, 27–29 March 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 946–956. [Google Scholar]

- Wang, R.; Wang, J.; Liao, Y.; Wang, J. Supervised machine learning chatbots for perinatal mental healthcare. In Proceedings of the 2020 International Conference on Intelligent Computing and Human-Computer Interaction (ICHCI), Sanya, China, 4–6 December 2020; IEEE: Piscataway, NJ, USA; pp. 378–383. [Google Scholar]

- Cuayáhuitl, H.; Lee, D.; Ryu, S.; Cho, Y.; Choi, S.; Indurthi, S.; Yu, S.; Choi, H.; Hwang, I.; Kim, J. Ensemble-based deep reinforcement learning for chatbots. Neurocomputing 2019, 366, 118–130. [Google Scholar] [CrossRef]

- Jadhav, H.M.; Mulani, A.; Jadhav, M.M. Design and development of chatbot based on reinforcement learning. In Machine Learning Algorithms for Signal and Image Processing; Wiley-ISTE: Hoboken, NJ, USA, 2022; pp. 219–229. [Google Scholar]

- El-Ansari, A.; Beni-Hssane, A. Sentiment analysis for personalized chatbots in e-commerce applications. Wirel. Pers. Commun. 2023, 129, 1623–1644. [Google Scholar] [CrossRef]

- Svikhnushina, E.; Pu, P. PEACE: A model of key social and emotional qualities of conversational chatbots. ACM Trans. Interact. Intell. Syst. 2022, 12, 1–29. [Google Scholar] [CrossRef]

- Majid, R.; Santoso, H.A. Conversations sentiment and intent categorization using context RNN for emotion recognition. In Proceedings of the 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021; IEEE: Piscataway, NJ, USA; pp. 46–50. [Google Scholar]

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274. [Google Scholar] [CrossRef]

- Abd-Alrazaq, A.; Safi, Z.; Alajlani, M.; Warren, J.; Househ, M.; Denecke, K. Technical metrics used to evaluate health care chatbots: Scoping review. J. Med. Internet Res. 2020, 22, e18301. [Google Scholar] [CrossRef] [PubMed]

- Chaves, A.P.; Gerosa, M.A. How should my chatbot interact? A survey on social characteristics in human–chatbot interaction design. Int. J. Hum. Comput. Interact. 2021, 37, 729–758. [Google Scholar] [CrossRef]

- Rhim, J.; Kwak, M.; Gong, Y.; Gweon, G. Application of humanization to survey chatbots: Change in chatbot perception, interaction experience, and survey data quality. Comput. Human Behav. 2022, 126, 107034. [Google Scholar] [CrossRef]

- Xu, A.; Liu, Z.; Guo, Y.; Sinha, V.; Akkiraju, R. A new chatbot for customer service on social media. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 3506–3510. [Google Scholar]

- Chang, D.H.; Lin, M.P.-C.; Hajian, S.; Wang, Q.Q. Educational Design Principles of Using AI Chatbot That Supports Self-Regulated Learning in Education: Goal Setting, Feedback, and Personalization. Sustainability 2023, 15, 12921. [Google Scholar] [CrossRef]

- Sutton, R.S.; Barto, A.G. Reinforcement learning: An introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Abdellatif, A.; Badran, K.; Costa, D.E.; Shihab, E. A comparison of natural language understanding platforms for chatbots in software engineering. IEEE Trans. Softw. Eng. 2021, 48, 3087–3102. [Google Scholar] [CrossRef]

- Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 2018, 13, 55–75. [Google Scholar] [CrossRef]

- Park, S.; Jung, Y.; Kang, H. Effects of Personalization and Types of Interface in Task-oriented Chatbot. J. Converg. Cult. Technol. 2021, 7, 595–607. [Google Scholar]

- Shi, W.; Wang, X.; Oh, Y.J.; Zhang, J.; Sahay, S.; Yu, Z. Effects of persuasive dialogues: Testing bot identities and inquiry strategies. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Ait-Mlouk, A.; Jiang, L. KBot: A Knowledge graph based chatBot for natural language understanding over linked data. IEEE Access 2020, 8, 149220–149230. [Google Scholar] [CrossRef]

- Alaaeldin, R.; Asfoura, E.; Kassem, G.; Abdel-Haq, M.S. Developing Chatbot System To Support Decision Making Based on Big Data Analytics. J. Manag. Inf. Decis. Sci. 2021, 24, 1–15. [Google Scholar]

- Bhagwat, V.A. Deep learning for chatbots. Master’s Thesis, San Jose State University, San Jose, CA, USA, 2018. [Google Scholar]

- Denecke, K.; Abd-Alrazaq, A.; Househ, M.; Warren, J. Evaluation metrics for health chatbots: A Delphi study. Methods Inf. Med. 2021, 60, 171–179. [Google Scholar] [CrossRef] [PubMed]

- Jannach, D.; Manzoor, A.; Cai, W.; Chen, L. A survey on conversational recommender systems. ACM Comput. Surv. (CSUR) 2021, 54, 1–36. [Google Scholar] [CrossRef]

- Følstad, A.; Taylor, C. Investigating the user experience of customer service chatbot interaction: A framework for qualitative analysis of chatbot dialogues. Qual. User Exp. 2021, 6, 6. [Google Scholar] [CrossRef]

- Akhtar, M.; Neidhardt, J.; Werthner, H. The potential of chatbots: Analysis of chatbot conversations. In Proceedings of the 2019 IEEE 21st Conference on Business Informatics (CBI), Moscow, Russia, 15–17 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 397–404. [Google Scholar]

- Rebelo, H.D.; de Oliveira, L.A.; Almeida, G.M.; Sotomayor, C.A.; Magalhães, V.S.; Rochocz, G.L. Automatic update strategy for real-time discovery of hidden customer intents in chatbot systems. Knowl. -Based Syst. 2022, 243, 108529. [Google Scholar] [CrossRef]

- Panda, S.; Chakravarty, R. Adapting intelligent information services in libraries: A case of smart AI chatbots. Libr. Hi Tech News 2022, 39, 12–15. [Google Scholar] [CrossRef]

- Yorita, A.; Egerton, S.; Oakman, J.; Chan, C.; Kubota, N. Self-adapting Chatbot personalities for better peer support. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; IEEE: Piscataway, NJ, USA; pp. 4094–4100. [Google Scholar]

- Vijayaraghavan, V.; Cooper, J.B. Algorithm inspection for chatbot performance evaluation. Procedia Comput. Sci. 2020, 171, 2267–2274. [Google Scholar]

- Han, X.; Zhou, M.; Turner, M.J.; Yeh, T. Designing effective interview chatbots: Automatic chatbot profiling and design suggestion generation for chatbot debugging. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Online Virtual, 8–13 May 2021; pp. 1–15. [Google Scholar]

- Shumanov, M.; Johnson, L. Making conversations with chatbots more personalized. Comput. Hum. Behav. 2021, 117, 106627. [Google Scholar] [CrossRef]

- Qian, H.; Dou, Z. Topic-Enhanced Personalized Retrieval-Based Chatbot. In Proceedings of the European Conference on Information Retrieval, Dublin, Ireland, 2–6 April 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 79–93. [Google Scholar]

- Wang, H.-N.; Liu, N.; Zhang, Y. Deep reinforcement learning: A survey. Front. Inf. Technol. Electron. Eng. 2020, 21, 1726–1744. [Google Scholar] [CrossRef]

- Serban, I.V.; Cheng, G.S. A deep reinforcement learning chatbot. arXiv 2017, arXiv:1709.02349. [Google Scholar]

- Liu, J.; Pan, F.; Luo, L. Gochat: Goal-oriented chatbots with hierarchical reinforcement learning. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; pp. 1793–1796. [Google Scholar]

- Li, J.; Monroe, W.; Ritter, A.; Galley, M.; Gao, J.; Jurafsky, D. Deep reinforcement learning for dialogue generation. arXiv 2016, arXiv:1606.01541. [Google Scholar]

- Jaques, N.; Ghandeharioun, A.; Shen, J.H.; Ferguson, C.; Lapedriza, A.; Jones, N.; Picard, R. Way off-policy batch deep reinforcement learning of implicit human preferences in dialog. arXiv 2019, arXiv:1907.00456. [Google Scholar]

- Lapan, M. Deep Reinforcement Learning Hands-On: Apply Modern RL Methods to Practical Problems of Chatbots, Robotics, Discrete Optimization, Web Automation, and More; Packt Publishing Ltd.: Birmingham, UK, 2020. [Google Scholar]

- Liu, C.; Jiang, J.; Xiong, C.; Yang, Y.; Ye, J. Towards building an intelligent chatbot for customer service: Learning to respond at the appropriate time. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, 23–27 August 2020; pp. 3377–3385. [Google Scholar]

- Gunel, B.; Du, J.; Conneau, A.; Stoyanov, V. Supervised contrastive learning for pre-trained language model fine-tuning. arXiv 2020, arXiv:2011.01403. [Google Scholar]

- Uprety, S.P.; Jeong, S.R. The Impact of Semi-Supervised Learning on the Performance of Intelligent Chatbot System. Comput. Mater. Contin. 2022, 71, 3937–3952. [Google Scholar]

- Luo, B.; Lau, R.Y.; Li, C.; Si, Y.W. A critical review of state-of-the-art chatbot designs and applications. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2022, 12, e1434. [Google Scholar] [CrossRef]

- Kulkarni, M.; Kim, K.; Garera, N.; Trivedi, A. Label efficient semi-supervised conversational intent classification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 5: Industry Track), Toronto, Canada, 10–12 July 2023; pp. 96–102. [Google Scholar]

- Prabhu, S.; Brahma, A.K.; Misra, H. Customer Support Chat Intent Classification using Weak Supervision and Data Augmentation. In Proceedings of the 5th Joint International Conference on Data Science & Management of Data (9th ACM IKDD CODS and 27th COMAD), Bangalore, India, 8–10 January 2022; pp. 144–152. [Google Scholar]

- Raisi, E. Weakly Supervised Machine Learning for Cyberbullying Detection. Ph.D. Thesis, Virginia Tech., Blacksburg, VA, USA, 2019. [Google Scholar]

- Ahmed, M.; Khan, H.U.; Munir, E.U. Conversational ai: An explication of few-shot learning problem in transformers-based chatbot systems. IEEE Trans. Comput. Soc. Syst. 2023, 11, 1888–1906. [Google Scholar] [CrossRef]

- Tavares, D. Zero-Shot Generalization of Multimodal Dialogue Agents. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 6935–6939. [Google Scholar]

- Chai, Y.; Liu, G.; Jin, Z.; Sun, D. How to keep an online learning chatbot from being corrupted. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; IEEE: Piscataway, NJ, USA; pp. 1–8.

- Madotto, A.; Lin, Z.; Wu, C.-S.; Fung, P. Personalizing dialogue agents via meta-learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 5454–5459.

- Dingliwal, S.; Gao, B.; Agarwal, S.; Lin, C.-W.; Chung, T.; Hakkani-Tur, D. Few shot dialogue state tracking using meta-learning. arXiv 2021, arXiv:2101.06779.

- Bird, J.J.; Ekárt, A.; Faria, D.R. Chatbot Interaction with Artificial Intelligence: Human data augmentation with T5 and language transformer ensemble for text classification. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 3129–3144.

- Paul, A.; Latif, A.H.; Adnan, F.A.; Rahman, R.M. Focused domain contextual AI chatbot framework for resource poor languages. J. Inf. Telecommun. 2019, 3, 248–269.

- Gallo, S.; Malizia, A.; Paternò, F. Towards a Chatbot for Creating Trigger-Action Rules based on ChatGPT and Rasa. In Proceedings of the International Symposium on End-User Development (IS-EUD), Cagliari, Italy, 6–8 June 2023.

- Gupta, A.; Zhang, P.; Lalwani, G.; Diab, M. CASA-NLU: Context-aware self-attentive natural language understanding for task-oriented chatbots. arXiv 2019, arXiv:1909.08705.

- Ilievski, V.; Musat, C.; Hossmann, A.; Baeriswyl, M. Goal-oriented chatbot dialog management bootstrapping with transfer learning. arXiv 2018, arXiv:1802.00500.

- Shi, N.; Zeng, Q.; Lee, R. The design and implementation of language learning chatbot with XAI using ontology and transfer learning. arXiv 2020, arXiv:2009.13984.

- Syed, Z.H.; Trabelsi, A.; Helbert, E.; Bailleau, V.; Muths, C. Question answering chatbot for troubleshooting queries based on transfer learning. Procedia Comput. Sci. 2021, 192, 941–950.

- Zhang, W.N.; Zhu, Q.; Wang, Y.; Zhao, Y.; Liu, T. Personalized response generation via domain adaptation. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, Tokyo, Japan, 7–11 August 2017; pp. 1021–1024.

- Lee, C.-J.; Croft, W.B. Generating queries from user-selected text. In Proceedings of the 4th Information Interaction in Context Symposium, Nijmegen, The Netherlands, 21–24 August 2012; pp. 100–109.

- Gnewuch, U.; Morana, S.; Hinz, O.; Kellner, R.; Maedche, A. More than a bot? The impact of disclosing human involvement on customer interactions with hybrid service agents. Inf. Syst. Res. 2023, 1–20.

- Wu, X.; Xiao, L.; Sun, Y.; Zhang, J.; Ma, T.; He, L. A survey of human-in-the-loop for machine learning. Future Gener. Comput. Syst. 2022, 135, 364–381.

- Wiethof, C.; Roocks, T.; Bittner, E.A. Gamifying the human-in-the-loop: Toward increased motivation for training AI in customer service. In Proceedings of the International Conference on Human-Computer Interaction, Virtual Event, 1–26 June 2022; Springer: Berlin/Heidelberg, Germany; pp. 100–117.

- Melo dos Santos, G. Adaptive Human-Chatbot Interactions: Contextual Factors, Variability Design and Levels of Automation. 2023. Available online: https://uwspace.uwaterloo.ca/handle/10012/20139 (accessed on 1 March 2024).

- Wu, J.; Huang, Z.; Hu, Z.; Lv, C. Toward human-in-the-loop AI: Enhancing deep reinforcement learning via real-time human guidance for autonomous driving. Engineering 2023, 21, 75–91.

- Wardhana, A.K.; Ferdiana, R.; Hidayah, I. Empathetic chatbot enhancement and development: A literature review. In Proceedings of the 2021 International Conference on Artificial Intelligence and Mechatronics Systems (AIMS), Jakarta, Indonesia, 28–30 April 2021; IEEE: Piscataway, NJ, USA; pp. 1–6.

- Chen, F. Human-AI cooperation in education: Human in loop and teaching as leadership. J. Educ. Technol. Innov. 2022, 2, 1.

- Barletta, V.S.; Caivano, D.; Colizzi, L.; Dimauro, G.; Piattini, M. Clinical-chatbot AHP evaluation based on “quality in use” of ISO/IEC 25010. Int. J. Med. Inform. 2023, 170, 104951.

- Gronsund, T.; Aanestad, M. Augmenting the algorithm: Emerging human-in-the-loop work configurations. J. Strateg. Inf. Syst. 2020, 29, 101614.

- Rayhan, R.; Rayhan, S. AI and human rights: Balancing innovation and privacy in the digital age. Comput. Sci. Eng. 2023, 2, 353964.

- Fan, H.; Han, B.; Gao, W. (Im)Balanced customer-oriented behaviors and AI chatbots’ Efficiency–Flexibility performance: The moderating role of customers’ rational choices. J. Retail. Consum. Serv. 2022, 66, 102937.

- Ngai, E.W.; Lee, M.C.; Luo, M.; Chan, P.S.; Liang, T. An intelligent knowledge-based chatbot for customer service. Electron. Commer. Res. Appl. 2021, 50, 101098.

- Pandey, S.; Sharma, S.; Wazir, S. Mental healthcare chatbot based on natural language processing and deep learning approaches: Ted the therapist. Int. J. Inf. Technol. 2022, 14, 3757–3766.

- Kulkarni, C.S.; Bhavsar, A.U.; Pingale, S.R.; Kumbhar, S.S. BANK CHAT BOT–an intelligent assistant system using NLP and machine learning. Int. Res. J. Eng. Technol. 2017, 4, 2374–2377.

- Argal, A.; Gupta, S.; Modi, A.; Pandey, P.; Shim, S.; Choo, C. Intelligent travel chatbot for predictive recommendation in echo platform. In Proceedings of the 2018 IEEE 8th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–10 January 2018; IEEE: Piscataway, NJ, USA; pp. 176–183.

- Abu-Rasheed, H.; Abdulsalam, M.H.; Weber, C.; Fathi, M. Supporting Student Decisions on Learning Recommendations: An LLM-Based Chatbot with Knowledge Graph Contextualization for Conversational Explainability and Mentoring. arXiv 2024, arXiv:2401.08517.

- Kohnke, L. A pedagogical chatbot: A supplemental language learning tool. RELC J. 2023, 54, 828–838.

- Haristiani, N. Artificial Intelligence (AI) chatbot as language learning medium: An inquiry. J. Phys. Conf. Ser. 2019, 1387, 012020.

- Tamayo, P.A.; Herrero, A.; Martín, J.; Navarro, C.; Tránchez, J.M. Design of a chatbot as a distance learning assistant. Open Prax. 2020, 12, 145–153.

- McTear, M. Conversational AI: Dialogue Systems, Conversational Agents, and Chatbots; Springer Nature: Berlin/Heidelberg, Germany, 2022.

- Braggaar, A.; Verhagen, J.; Martijn, G.; Liebrecht, C. Conversational repair strategies to cope with errors and breakdowns in customer service chatbot conversations. In Proceedings of the Conversations: Workshop on Chatbot Research, Oslo, Norway, 22–23 November 2023.

- Fang, K.Y.; Bjering, H. Development of an interactive Messenger chatbot for medication and health supplement reminders. In Proceedings of the 36th National Conference Health Information Management: Celebrating, Cape Town, South Africa, 20–23 October 2019; Volume 70, p. 51.

- Griffin, A.C.; Khairat, S.; Bailey, S.C.; Chung, A.E. A chatbot for hypertension self-management support: User-centered design, development, and usability testing. JAMIA Open 2023, 6, ooad073.

- Alt, M.-A.; Vizeli, I.; Săplăcan, Z. Banking with a chatbot—A study on technology acceptance. Stud. Univ. Babes-Bolyai Oeconomica 2021, 66, 13–35.

- Ukpabi, D.C.; Aslam, B.; Karjaluoto, H. Chatbot adoption in tourism services: A conceptual exploration. In Robots, Artificial Intelligence, and Service Automation in Travel, Tourism and Hospitality; Emerald Publishing Limited: Bingley, UK, 2019; pp. 105–121.

- Ji, H.; Han, I.; Ko, Y. A systematic review of conversational AI in language education: Focusing on the collaboration with human teachers. J. Res. Technol. Educ. 2023, 55, 48–63.

- Heller, B.; Proctor, M.; Mah, D.; Jewell, L.; Cheung, B. Freudbot: An investigation of chatbot technology in distance education. In EdMedia+ Innovate Learning; Association for the Advancement of Computing in Education (AACE): Asheville, NC, USA, 2005; pp. 3913–3918.

- Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for language learning—Are they really useful? A systematic review of chatbot-supported language learning. J. Comput. Assist. Learn. 2022, 38, 237–257.

- Kim, H.; Yang, H.; Shin, D.; Lee, J.H. Design principles and architecture of a second language learning chatbot. Lang. Learn. Technol. 2022, 26, 1–18.

- Li, L.; Lee, K.Y.; Emokpae, E.; Yang, S.-B. What makes you continuously use chatbot services? Evidence from Chinese online travel agencies. Electron. Mark. 2021, 31, 575–599.

- Shafi, P.M.; Jawalkar, G.S.; Kadam, M.A.; Ambawale, R.R.; Bankar, S.V. AI—Assisted chatbot for e-commerce to address selection of products from multiple products. In Internet of Things, Smart Computing and Technology: A Roadmap Ahead; Springer: Berlin/Heidelberg, Germany, 2020; pp. 57–80.

- Sundar, S.S.; Bellur, S.; Oh, J.; Jia, H.; Kim, H.-S. Theoretical importance of contingency in human-computer interaction: Effects of message interactivity on user engagement. Commun. Res. 2016, 43, 595–625.

- Janssen, A.; Cardona, D.R.; Passlick, J.; Breitner, M.H. How to Make chatbots productive–A user-oriented implementation framework. Int. J. Hum. Comput. Stud. 2022, 168, 102921.

- Rakshit, S.; Clement, N.; Vajjhala, N.R. Exploratory review of applications of machine learning in finance sector. In Advances in Data Science and Management: Proceedings of ICDSM 2021; Springer Verlag: Singapore, 2022; pp. 119–125.

- Le, A.-C. Improving Chatbot Responses with Context and Deep Seq2Seq Reinforcement Learning; Springer Verlag: Singapore, 2023.

- Wang, J.; Oyama, S.; Kurihara, M.; Kashima, H. Learning an accurate entity resolution model from crowdsourced labels. In Proceedings of the 8th International Conference on Ubiquitous Information Management and Communication, 2014, Belfast, UK, 9–11 January 2014; pp. 1–8.

- Maskat, R.; Paton, N.W.; Embury, S.M. Pay-as-you-go configuration of entity resolution. In Transactions on Large-Scale Data-and Knowledge-Centered Systems XXIX; Springer: Berlin/Heidelberg, Germany, 2016; pp. 40–65.

- Budd, S.; Robinson, E.C.; Kainz, B. A survey on active learning and human-in-the-loop deep learning for medical image analysis. Med. Image Anal. 2021, 71, 102062.

- Selamat, M.A.; Windasari, N.A. Chatbot for SMEs: Integrating customer and business owner perspectives. Technol. Soc. 2021, 66, 101685.

- Heo, M.; Lee, K.J. Chatbot as a new business communication tool: The case of Naver TalkTalk. Bus. Commun. Res. Pract. 2018, 1, 41–45.

- Cui, L.; Huang, S.; Wei, F.; Tan, C.; Duan, C.; Zhou, M. Superagent: A customer service chatbot for e-commerce websites. In Proceedings of the ACL 2017, System Demonstrations, Vancouver, Canada, 30 July–4 August 2017; pp. 97–102.

- Abdellatif, A.; Costa, D.; Badran, K.; Abdalkareem, R.; Shihab, E. Challenges in chatbot development: A study of stack overflow posts. In Proceedings of the 17th International Conference on Mining Software Repositories, Seoul, Republic of Korea, 29–30 June 2020; pp. 174–185.

- Hasal, M.; Nowaková, J.; Saghair, K.A.; Abdulla, H.; Snášel, V.; Ogiela, L. Chatbots: Security, privacy, data protection, and social aspects. Concurr. Comput. Pract. Exp. 2021, 33, e6426.

- Atkins, S.; Badrie, I.; van Otterloo, S. Applying Ethical AI Frameworks in practice: Evaluating conversational AI chatbot solutions. Comput. Soc. Res. J. 2021, 1, qxom4114.

- Tamimi, A. Chatting with Confidence: A Review on the Impact of User Interface, Trust, and User Experience in Chatbots, and a Proposal of a Redesigned Prototype. 2023. Available online: https://hdl.handle.net/10365/33240 (accessed on 1 February 2024).

- Valencia, O.A.G.; Suppadungsuk, S.; Thongprayoon, C.; Miao, J.; Tangpanithandee, S.; Craici, I.M.; Cheungpasitporn, W. Ethical implications of chatbot utilization in nephrology. J. Pers. Med. 2023, 13, 1363.

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Alshurafat, H. The usefulness and challenges of chatbots for accounting professionals: Application on ChatGPT. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4345921 (accessed on 1 February 2024).

- Jiang, Y.; Yang, X.; Zheng, T. Make chatbots more adaptive: Dual pathways linking human-like cues and tailored response to trust in interactions with chatbots. Comput. Human Behav. 2023, 138, 107485.

- Jeong, H.; Yoo, J.H.; Han, O. Next-Generation Chatbots for Adaptive Learning: A proposed Framework. J. Internet Comput. Serv. 2023, 24, 37–45.

- Wang, D.; Fang, H. An adaptive response matching network for ranking multi-turn chatbot responses. In Proceedings of the Natural Language Processing and Information Systems: 25th International Conference on Applications of Natural Language to Information Systems, NLDB 2020, Saarbrücken, Germany, 24–26 June 2020; Proceedings 25. Springer: Berlin/Heidelberg, Germany, 2020; pp. 239–251.

- Han, S.; Lee, M.K. FAQ chatbot and inclusive learning in massive open online courses. Comput. Educ. 2022, 179, 104395.

- Gondaliya, K.; Butakov, S.; Zavarsky, P. SLA as a mechanism to manage risks related to chatbot services. In Proceedings of the 2020 IEEE 6th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), New York, NY, USA, 25–27 May 2020; IEEE: Piscataway, NJ, USA; pp. 235–240.

- Park, D.-M.; Jeong, S.-S.; Seo, Y.-S. Systematic review on chatbot techniques and applications. J. Inf. Process. Syst. 2022, 18, 26–47.

- Jeon, J.; Lee, S.; Choe, H. Beyond ChatGPT: A conceptual framework and systematic review of speech-recognition chatbots for language learning. Comput. Educ. 2023, 206, 104898.

- Bilquise, G.; Ibrahim, S.; Shaalan, K. Emotionally intelligent chatbots: A systematic literature review. Hum. Behav. Emerg. Technol. 2022, 2022, 9601630.

- Hilken, T.; Chylinski, M.; de Ruyter, K.; Heller, J.; Keeling, D.I. Exploring the frontiers in reality-enhanced service communication: From augmented and virtual reality to neuro-enhanced reality. J. Serv. Manag. 2022, 33, 657–674.

- Gao, M.; Liu, X.; Xu, A.; Akkiraju, R. Chat-XAI: A new chatbot to explain artificial intelligence. In Intelligent Systems and Applications: Proceedings of the 2021 Intelligent Systems Conference (IntelliSys) Volume 3; Springer: Berlin/Heidelberg, Germany, 2022; pp. 125–134.

- Kapočiūtė-Dzikienė, J. A domain-specific generative chatbot trained from little data. Appl. Sci. 2020, 10, 2221.

- Golizadeh, N.; Golizadeh, M.; Forouzanfar, M. Adversarial grammatical error generation: Application to Persian language. Int. J. Nat. Lang. Comput. 2022, 11, 19–28.

- Jain, U.; Zhang, Z.; Schwing, A.G. Creativity: Generating diverse questions using variational autoencoders. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6485–6494.

- Liu, M.; Bao, X.; Liu, J.; Zhao, P.; Shen, Y. Generating emotional response by conditional variational auto-encoder in open-domain dialogue system. Neurocomputing 2021, 460, 106–116.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

- Bengesi, S.; El-Sayed, H.; Sarker, M.K.; Houkpati, Y.; Irungu, J.; Oladunni, T. Advancements in Generative AI: A Comprehensive Review of GANs, GPT, Autoencoders, Diffusion Model, and Transformers. IEEE Access 2024, 12, 1.

- Varitimiadis, S.; Kotis, K.; Pittou, D.; Konstantakis, G. Graph-based conversational AI: Towards a distributed and collaborative multi-chatbot approach for museums. Appl. Sci. 2021, 11, 9160.

- Preskill, J. Quantum computing 40 years later. In Feynman Lectures on Computation; CRC Press: Boca Raton, FL, USA, 2023; pp. 193–244.

- Aragonés-Soria, Y.; Oriol, M. C4Q: A Chatbot for Quantum. arXiv 2024, arXiv:2402.01738.

- Jalali, N.A.; Chen, H. Comprehensive Framework for Implementing Blockchain-enabled Federated Learning and Full Homomorphic Encryption for Chatbot security System. Clust. Comput. 2024, 1–24.

- Hamsath Mohammed Khan, R. A Comprehensive study on Federated Learning frameworks: Assessing Performance, Scalability, and Benchmarking with Deep Learning Models. Master’s Thesis, University of Skövde, Skövde, Sweden, 2023.

- Drigas, A.; Mitsea, E.; Skianis, C. Meta-learning: A Nine-layer model based on metacognition and smart technologies. Sustainability 2023, 15, 1668.

- Kulkarni, U.; SM, M.; Hallyal, R.; Sulibhavi, P.; Guggari, S.; Shanbhag, A.R. Optimisation of deep neural network model using Reptile meta learning approach. Cogn. Comput. Syst. 2023, 1–8.

- Yamamoto, K.; Inoue, K.; Kawahara, T. Character expression for spoken dialogue systems with semi-supervised learning using Variational Auto-Encoder. Comput. Speech Lang. 2023, 79, 101469.

- Fijačko, N.; Prosen, G.; Abella, B.S.; Metličar, Š.; Štiglic, G. Can novel multimodal chatbots such as Bing Chat Enterprise, ChatGPT-4 Pro, and Google Bard correctly interpret electrocardiogram images? Resuscitation 2023, 193, 110009.

- Das, A.; Kottur, S.; Gupta, K.; Singh, A.; Yadav, D.; Moura, J.M. Visual dialog. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 326–335.

- Tran, Q.-D.L.; Le, A.-C. Exploring bi-directional context for improved chatbot response generation using deep reinforcement learning. Appl. Sci. 2023, 13, 5041.

- Cai, W.; Grossman, J.; Lin, Z.J.; Sheng, H.; Wei, J.T.-Z.; Williams, J.J.; Goel, S. Bandit algorithms to personalize educational chatbots. Mach. Learn. 2021, 110, 2389–2418.

- Liu, W.; He, Q.; Li, Z.; Li, Y. Self-learning modeling in possibilistic model checking. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 8, 264–278.

- Lee, Y.-J.; Roger, P. Cross-platform language learning: A spatial perspective on narratives of language learning across digital platforms. System 2023, 118, 103145.

| Error Type | Description | Examples |
|---|---|---|
| Misunderstanding | Errors where the chatbot fails to grasp the user’s intent due to ambiguity in language, slang, or complex queries. | A user asks for “bank holidays,” and the chatbot responds with information on holiday loans instead of actual dates. |
| Inappropriate Response | Situations where the chatbot’s reply is out of context, offensive, or irrelevant. These can result from flawed training data or poor language understanding. | A chatbot, designed for customer support, uses casual language in a serious complaint scenario, worsening the issue. |
| Factual Inaccuracy | Occurs when a chatbot provides outdated, incorrect, or misleading information. Often a result of not updating the knowledge base regularly. | A health advice chatbot gives outdated dietary recommendations that have been debunked by recent studies. |
| Repetitive Responses | Chatbots may get stuck in a loop, providing the same response to varied inputs due to limited understanding or options. | A customer service chatbot repeats, “Can you rephrase that?” regardless of how the user alters their question. |
| Lack of Personalization | Fails to tailor responses to the individual user’s context or history, resulting in a generic interaction that might not be helpful. | A chatbot treats a returning customer as a new user every time, asking repeatedly for the same basic information. |
| Language Limitations | Difficulty in processing and understanding multilingual inputs, dialects, or idiomatic expressions, leading to errors in response. | A chatbot fails to understand a common regional slang term, responding with unrelated information. |
| Hallucinations | AI model fills in gaps in its knowledge with fabricated information. | A chatbot, when asked about a recent scientific breakthrough, confidently describes a new drug that cures a major disease, even though such a drug does not exist and the breakthrough was not in that field. |

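The "Repetitive Responses" row above describes a loop that can be caught at run time. The following is a minimal sketch of one way to do so, assuming the bot's outgoing replies are available as plain strings; the class name `RepetitionGuard` and its parameters `max_repeats` and `window` are hypothetical and do not come from the reviewed systems.

```python
from collections import deque


class RepetitionGuard:
    """Flags a reply once the same text has appeared too often in recent turns.

    Hypothetical helper for illustration only.
    """

    def __init__(self, max_repeats: int = 2, window: int = 5):
        self.max_repeats = max_repeats
        # Keep only the most recent `window` normalized replies.
        self.recent = deque(maxlen=window)

    @staticmethod
    def _normalize(text: str) -> str:
        # Lowercase and collapse whitespace so trivial variations still match.
        return " ".join(text.lower().split())

    def is_stuck(self, reply: str) -> bool:
        """Record the reply; return True if it now exceeds max_repeats in the window."""
        key = self._normalize(reply)
        self.recent.append(key)
        return self.recent.count(key) > self.max_repeats
```

When `is_stuck` returns `True`, a deployment might switch to a different repair strategy or hand the conversation to a human agent; which fallback is appropriate depends on the application.
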
| Strategy | Description | Benefits | Challenges |
|---|---|---|---|
| Data-Driven Approach | Collects and analyzes user feedback to pinpoint and correct errors. | Adapts to user needs, increases satisfaction, and can enable personalization. | Requires significant data collection and analysis; potential for bias in the feedback data. |
| Algorithmic Adjustments | Supervised learning: Trains on labeled data (input–output pairs) to learn patterns. | Reliable for well-defined tasks; straightforward to implement. | Requires large amounts of labeled data; may struggle with unseen scenarios. |
| | Reinforcement learning (RL): Learns by trial and error, receiving rewards or penalties for actions. | Optimizes responses based on feedback; adapts to evolving situations. | Complex to design reward systems; can be computationally expensive. |
| | Semi-supervised learning: Leverages both labeled and unlabeled data. | Improves performance when labeled data are scarce. | Requires careful data balancing; unlabeled data can introduce noise. |
| | Weakly supervised learning: Uses noisy or incomplete labels for training. | Enables learning with less manual effort. | May not be as accurate as strong supervision methods. |
| | Few-shot/zero-shot learning: Adapts to new tasks with minimal or no new labeled examples. | Efficient for rapidly expanding chatbot capabilities. | Performance heavily relies on pre-training quality; may struggle with complex tasks. |
| Human-in-the-Loop | Leverages human oversight during chatbot training and operation. | Increases accuracy, ensures ethical responses, and provides more nuanced understanding of user interactions. | Potential for slower response times; requires ongoing human resources. |

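To make the human-in-the-loop row concrete, the sketch below routes low-confidence intent predictions to a human reviewer and stores the reviewer's correction as a labeled example for later supervised retraining. It is an illustrative assumption rather than a method taken from the cited studies: `ReviewQueue`, the 0.7 threshold, and the intent names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Hypothetical confidence-based escalation queue (illustration only)."""

    threshold: float = 0.7  # assumed cut-off; would be tuned per deployment
    pending: list = field(default_factory=list)  # items awaiting human review
    labeled: list = field(default_factory=list)  # human-corrected examples

    def route(self, user_text: str, predicted_intent: str, confidence: float) -> str:
        """Return 'bot' to answer automatically or 'human' to escalate."""
        if confidence >= self.threshold:
            return "bot"
        self.pending.append((user_text, predicted_intent))
        return "human"

    def resolve(self, user_text: str, corrected_intent: str) -> None:
        """Store the reviewer's label and remove the item from the pending list."""
        self.pending = [p for p in self.pending if p[0] != user_text]
        self.labeled.append((user_text, corrected_intent))
```

The threshold controls the trade-off noted in the table: a higher value sends more traffic to reviewers, which tends to raise accuracy at the cost of slower responses and more human effort.
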
| Domain | Chatbot | Main Challenge | Strategy Implemented | Outcome |
|---|---|---|---|---|
| E-commerce | Intelligent conversational agent for customer service [149] | Handling complex customer service inquiries | Data-driven feedback loops, continuous learning | Enhanced customer interaction by adapting to user preferences, leading to improved satisfaction. |
| Healthcare | “Ted”, designed to assist individuals with mental health concerns [150] | Providing accurate health advice | Human intervention, continuous learning models | Increased usability and reliability in providing health advice, improved patient engagement. |
| Banking | Customer service chatbot for processing natural language queries [151] | Processing natural language queries efficiently | Semi-supervised learning, feedback mechanism | Improved efficiency in customer service, better accuracy in understanding and classifying queries. |
| Travel | Advanced chatbot system on the Echo platform for travel planning [152] | Personalizing travel recommendations | RL, deep neural network (DNN) approach | Improved travel planning with personalized recommendations, enhanced user experience. |
| Education | LLM-based chatbot designed to enhance student understanding and engagement with personalized learning recommendations [153] | Ensuring student commitment through clear explanations of the rationale behind personalized recommendations | Utilized a knowledge graph (KG) to guide LLM responses, incorporated group chat with human mentors for additional support | User study demonstrated the potential benefits and limitations of using chatbots for conversational explainability in educational settings. |
| Language Learning | Language learning chatbot developed during COVID-19 pandemic [154] | Correcting language mistakes and providing explanations | Human-in-the-loop, continuous user interaction data | More effective language learning through personalized instruction and feedback. |

| Technology/Trend | Description | Key Benefits |
|---|---|---|
| Advanced NLU Capabilities | Chatbots will have an enhanced understanding of natural language, including regional dialects, nuances, and idioms. | Interactions become more natural and human-like. |
| Voice Recognition and Synthesis Improvements | Chatbots will excel at understanding spoken language, recognizing intonation and emotion in addition to words. | Makes chatbots more accessible, especially alongside voice-based assistants. |
| Emotional Intelligence (EI) | Chatbots can recognize and respond to a user’s emotional state. | Conversations feel more empathetic and tailored to the user’s needs. |
| Augmented and Virtual Reality (AR/VR) Integration | Chatbots provide dynamic and interactive experiences in immersive environments. | Offers new ways for users to interact, like virtual shopping assistance. |
| Blockchain, IoT, 5G | Blockchain (secure data), IoT (smart device control), and 5G (reduced latency) will work together with chatbots. | Enhances security, provides new levels of interactivity, and makes chatbots faster. |
| Explainable AI (XAI) | Makes the “black box” of AI decision-making transparent. | Builds trust as users understand how the chatbot functions. |
| Generative Adversarial Networks (GANs) | Generates realistic data through adversarial training of generator and discriminator networks. | Produces highly realistic and sharp outputs. |
| Variational Autoencoders (VAEs) | Learns to generate diverse samples by compressing and reconstructing data. | Generates diverse and contextually relevant responses by learning the underlying distribution of dialogue data. |
| Diffusion Models (DMs) | Generative models that learn to generate data by reversing a gradual noising process. | Produces high-quality, diverse samples with better stability and control compared to previous models. |
| Graph Neural Networks (GNNs) | Allows chatbots to process complex data structures like social networks or CRM data. | Provides personalized experiences, as bots better understand user context. |
| Quantum Computing | (While far off) promises vastly improved processing power for real-time learning. | Could lead to major leaps in chatbot capabilities, but is not an immediate factor. |
| Federated Learning | Training occurs on decentralized data, prioritizing user privacy. | Protects sensitive data, builds trust, and lets chatbots train on a broader range of real-life interactions. |
| Meta-Learning | Chatbots adapt to new topics or conversational styles quickly and easily. | Makes chatbots versatile and adaptable to different scenarios. |
| Semi-Supervised Learning | Leverages unlabeled data, reducing time-consuming labeling tasks. | Makes training easier with abundant real-world conversational data. |
| Multimodal Chatbots | Chatbots understand and respond to text, images, videos, and voice simultaneously. | Offers richer, more dynamic user experiences. |
| Personalization using Reinforcement Learning (RL) | Chatbots use reward feedback systems to tailor responses to individual users. | Conversations become more satisfying and successful. |
| Error Correction Improvements | Chatbots proactively identify and fix errors, using self-learning and pattern recognition. | Interactions become more accurate and reliable. |

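The "Personalization using Reinforcement Learning (RL)" row can be illustrated with the simplest reward-feedback scheme, an epsilon-greedy bandit that learns which response style a user prefers. This is a sketch under stated assumptions, not the algorithm of any cited system: the class `EpsilonGreedyStyles`, the style names, and the reward scale (for example, 1.0 for a thumbs-up and 0.0 otherwise) are hypothetical.

```python
import random


class EpsilonGreedyStyles:
    """Hypothetical epsilon-greedy bandit over response styles (illustration only)."""

    def __init__(self, styles, epsilon=0.1, seed=None):
        self.styles = list(styles)
        self.epsilon = epsilon  # probability of exploring a random style
        self.counts = {s: 0 for s in self.styles}
        self.values = {s: 0.0 for s in self.styles}  # running mean reward per style
        self.rng = random.Random(seed)

    def choose(self):
        """Explore with probability epsilon; otherwise pick the best-known style."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.styles)
        return max(self.styles, key=lambda s: self.values[s])

    def update(self, style, reward):
        """Fold one observed reward into the running mean for that style."""
        self.counts[style] += 1
        n = self.counts[style]
        self.values[style] += (reward - self.values[style]) / n
```

In use, one bandit instance would be kept per user (or per user segment), with `choose` called before each reply and `update` called once feedback arrives.
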
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Izadi, S.; Forouzanfar, M. Error Correction and Adaptation in Conversational AI: A Review of Techniques and Applications in Chatbots. AI 2024, 5, 803-841. https://doi.org/10.3390/ai5020041
