Minimally Distorted Adversarial Images with a Step-Adaptive Iterative Fast Gradient Sign Method

Abstract

1. Introduction

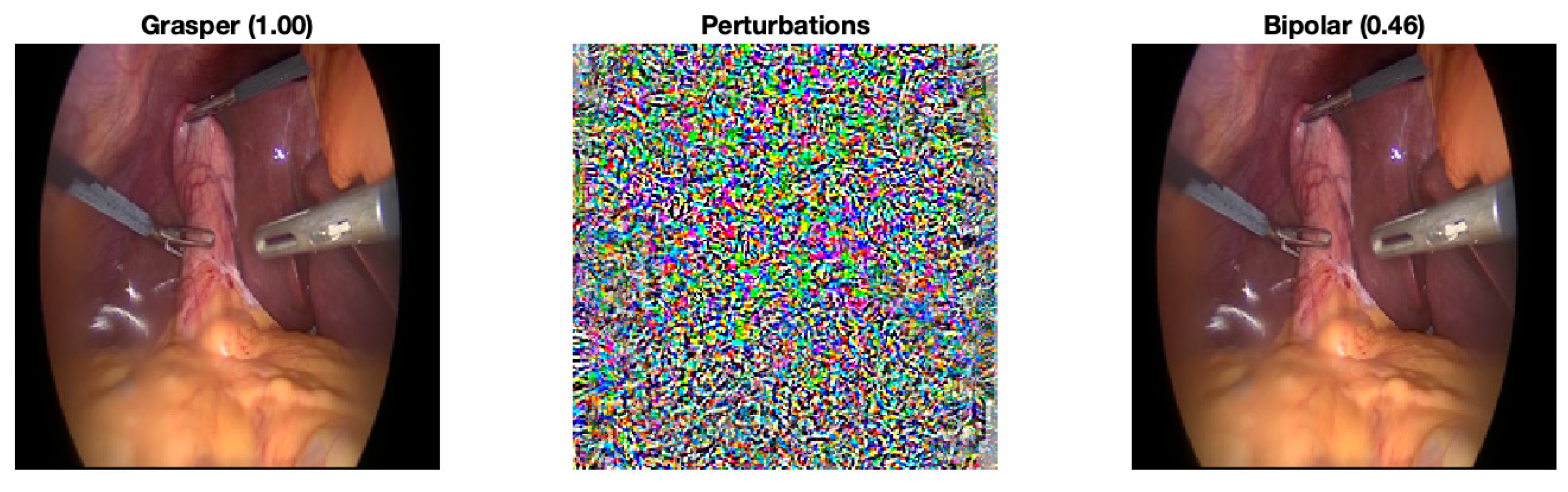

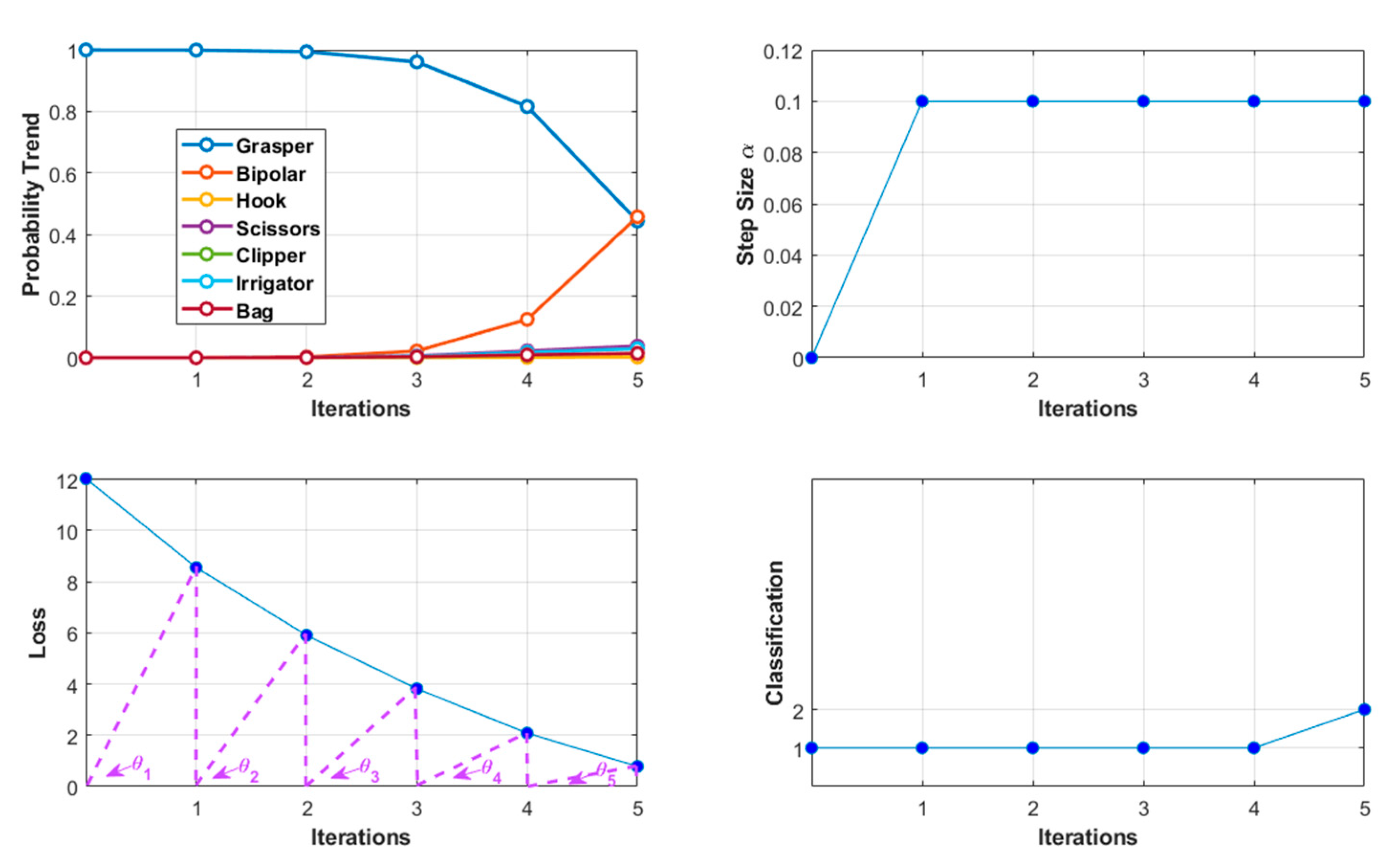

- We propose the step size adaptive i-FGSM to generate adversaries with fewer perturbations. Initially, the classic fast gradient sign method (FGSM) was applied to seek a minimum perturbation individually for each input that is sufficient to change the model decision. When the size of the perturbation is not large enough to change prediction, the algorithm leads to an iterative form (i-FGSM) until the input is misclassified. This method is rather crude and usually lacks a minimal solution. Therefore, we modified this minimum search and formulated an adaptive gradient descent problem [19]. To solve this problem, this paper further extends the method from our previous work in [19].

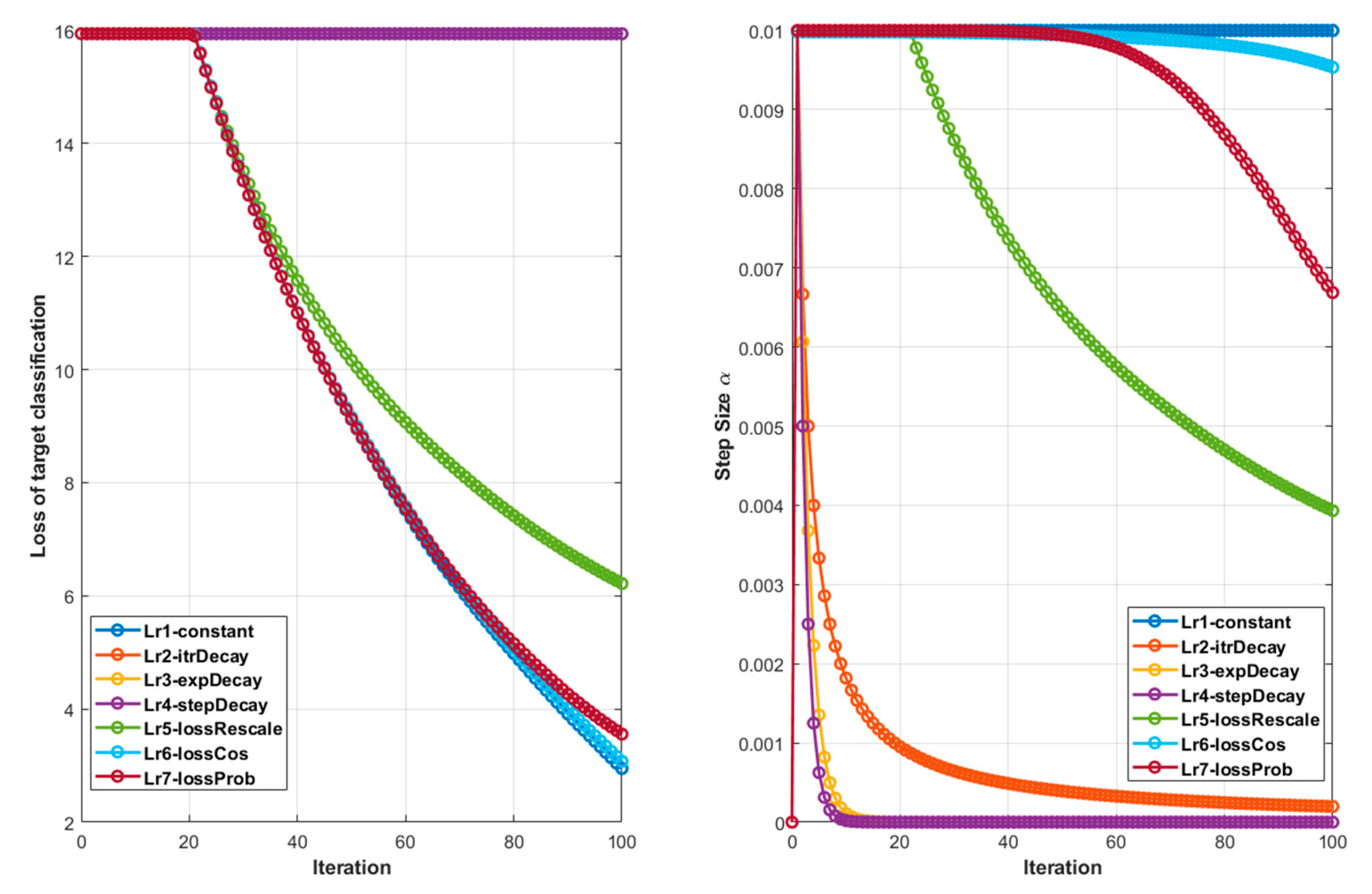

- We introduce a loss adaptive algorithm to adjust the step size. Three decay algorithms from the literature were applied to the step size (or learning rate in the context of machine learning). Additionally, we also introduce a novel decay algorithm that keeps track of the loss of the current iteration and uses it as feedback to adjust the step size for the next iteration. In total, there are three different loss-tracking functions: the loss rescale function, loss trigonometric function, and loss-related original classification probability.

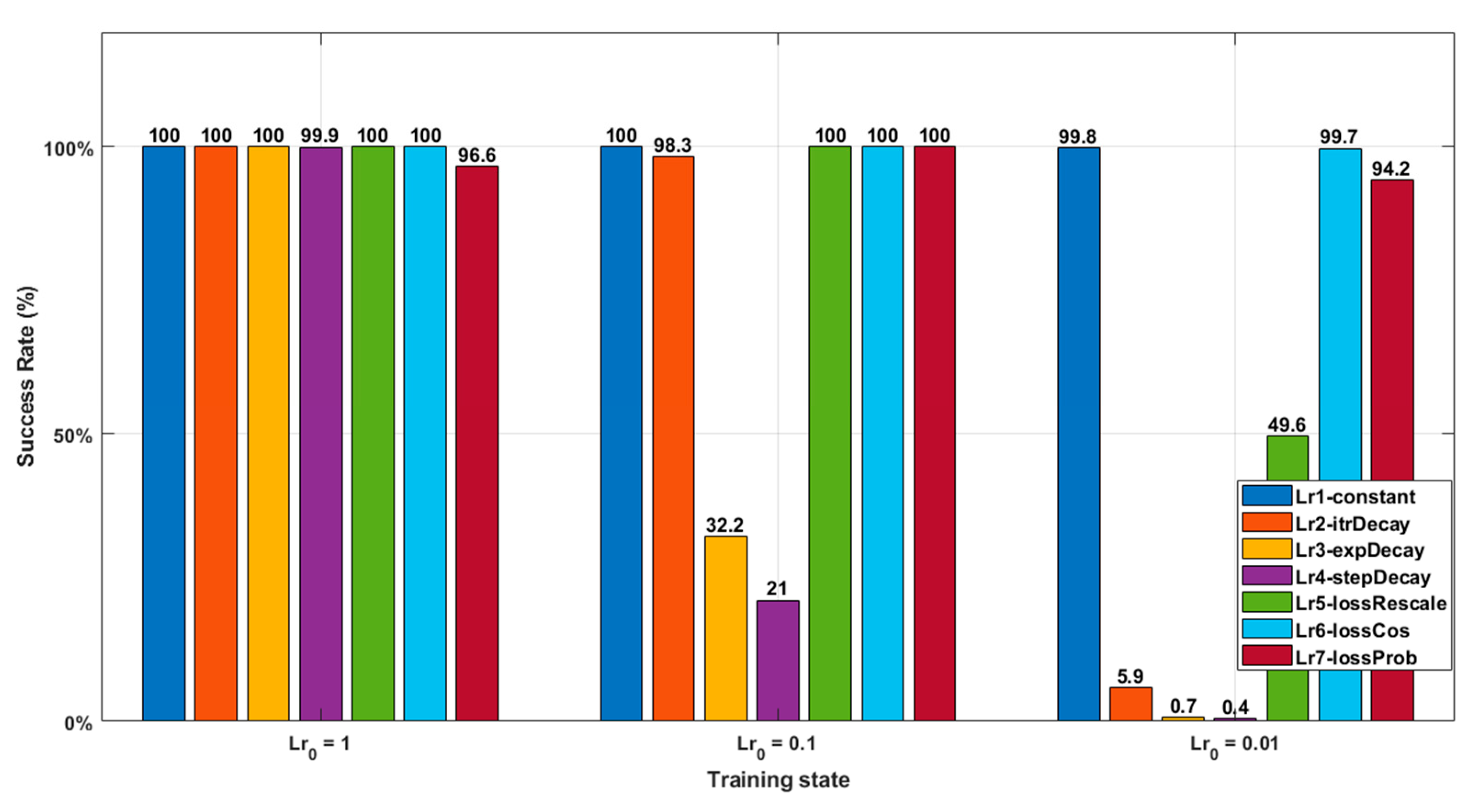

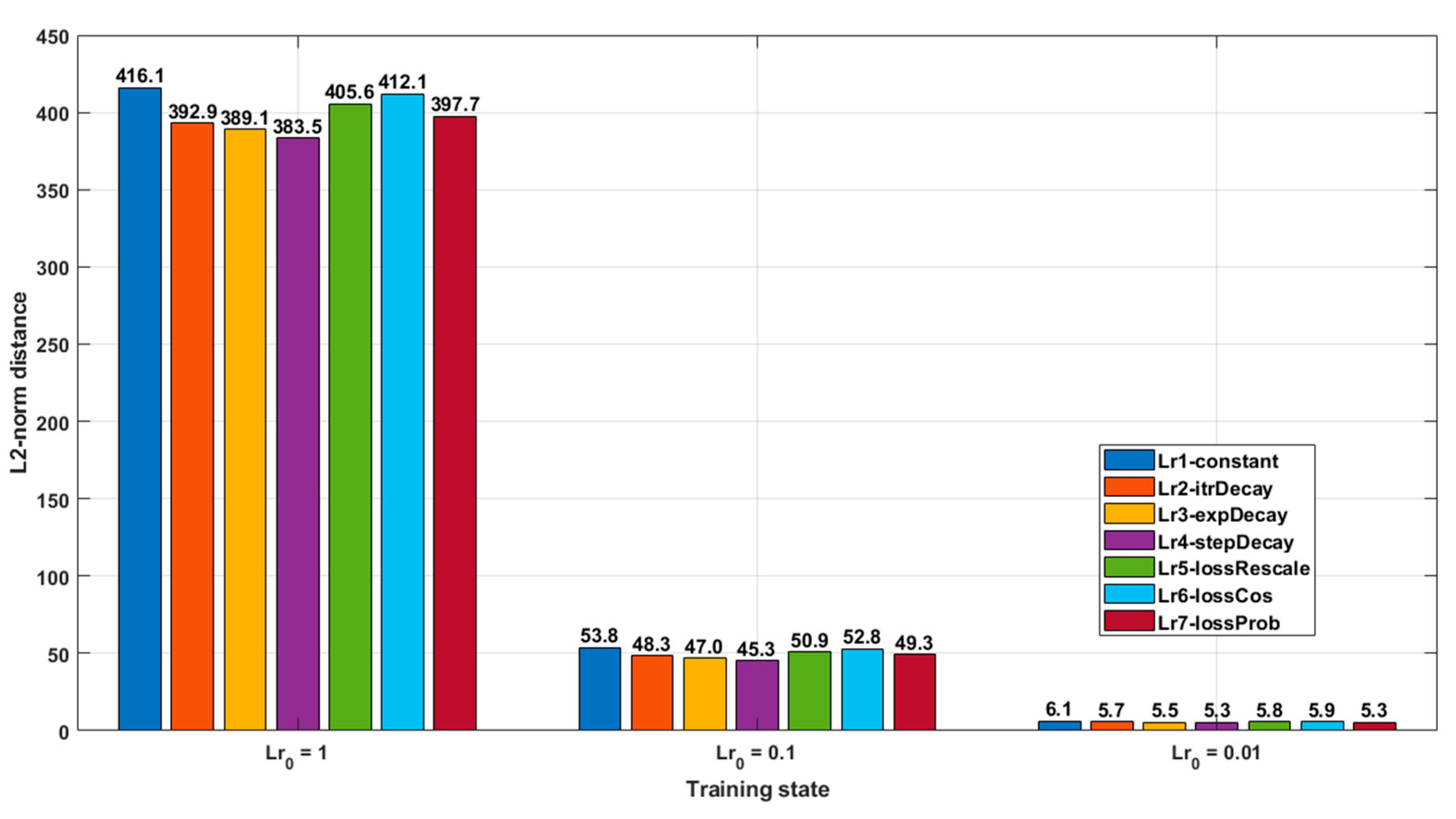

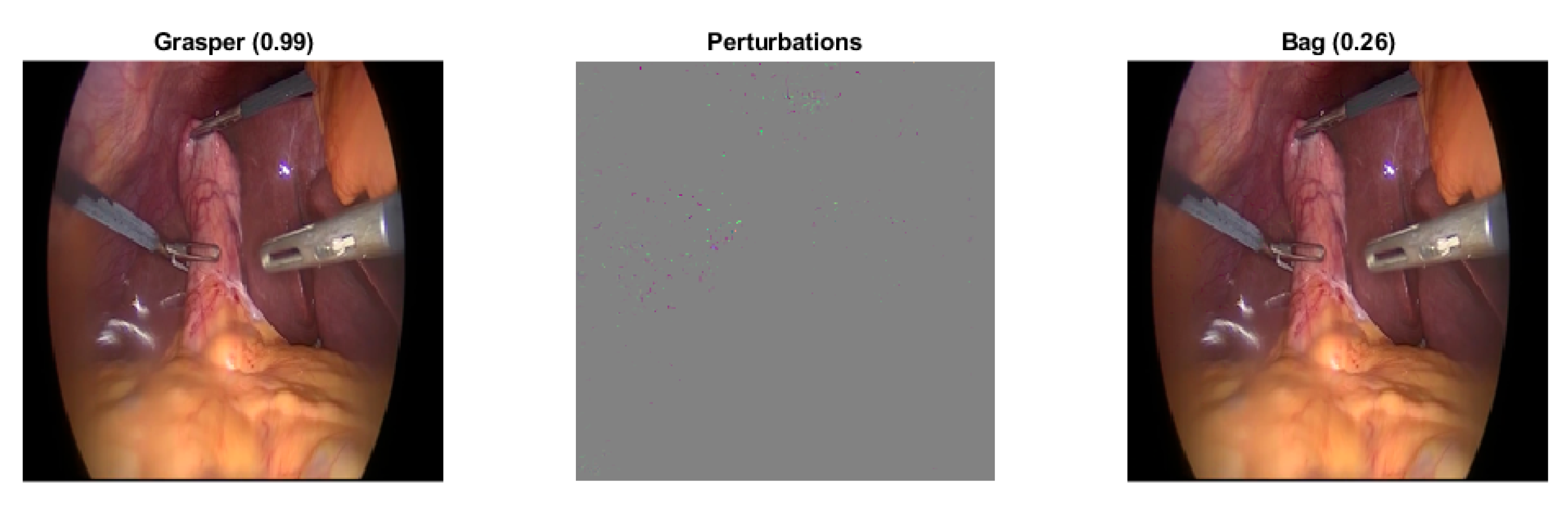

- We provide the experimental results that indicate the influence of different step sizes in the adversary-generated process. The experiment includes two parts. Depending on whether a target classification is given, i.e., the adversary can be generated by moving the input away from its original classification or moving the input close to a target classification. The experimental results illustrate that our loss adaptive step size algorithms could efficiently generate adversaries with fewer perturbations while maintaining a high adversarial success rate.

2. Methods

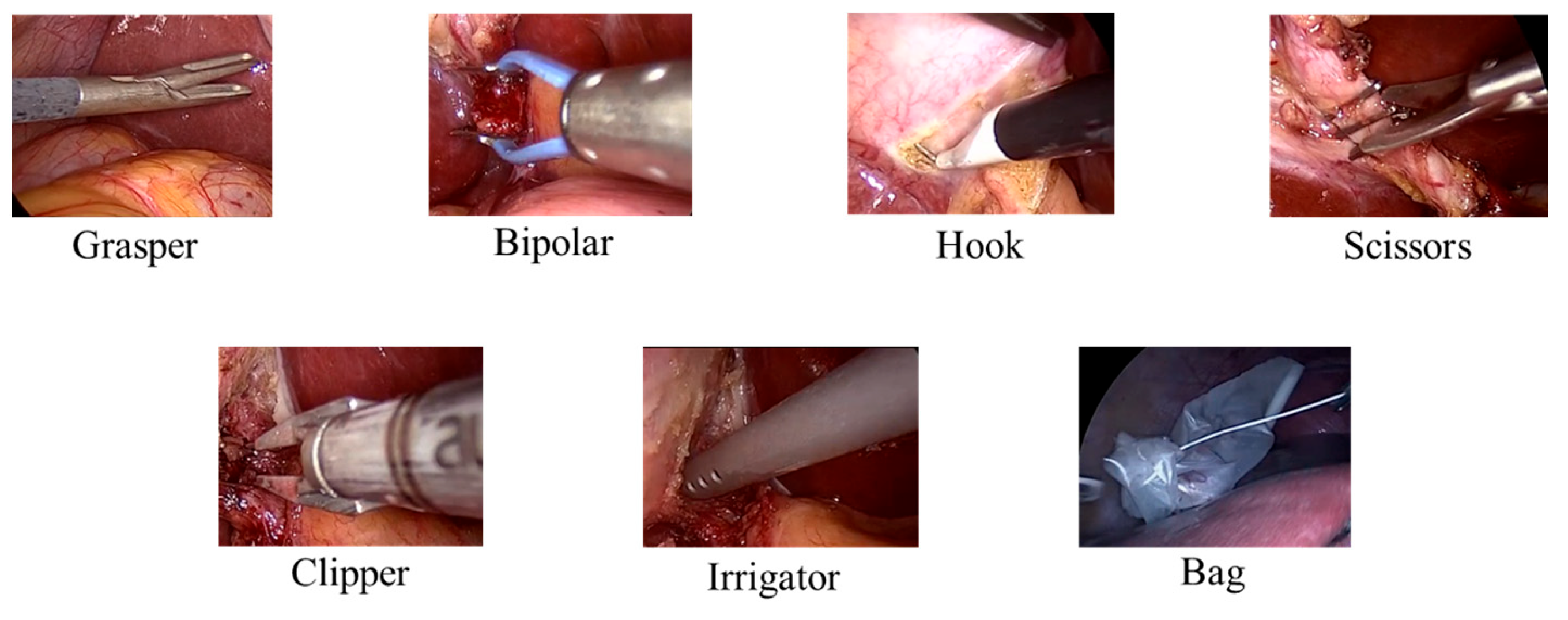

2.1. Material

2.2. Adversarial Attack

2.3. Step Size Decay Function

- 1.

- Rescale function.

- 2.

- Trigonometric functions. The trigonometric function can rescale the loss vector without adding a prediction, as there is an angle at each iteration to measure the loss value (see Figure 3 for the loss axis).

- 3.

- Directly apply the probability score of the original classification. It is an efficient and simple method to track the current loss status.

2.4. Evaluation Metric

- The maximum iteration is 100. The iteration was stopped when the generated image was misclassified in case of the non-target attack or changed to the target class in case of the target attack. When the iteration exceeds 100, it is considered a failed case.

- 3.

2.5. Adversary-Generating Algorithm

| Algorithm 1: Generate adversarial images with adaptive step size |

| Input: Trained model , test sample set , original class , target class , the generated image and its classification at current iteration , the probability score of the current input is , the cross-entropy loss at current iteration , gradient sign map , step size , the initial step size , iterations , stopping criterion with maximum iteration limitation . Output: The adversarial images around the classification boundary. |

| Part 1, Target Adversarial Attack: For < : If ~= , Calculate the current cross-entropy loss between the prediction and . Backpropagation through to get the gradient sign map . Loss adaptive decay algorithms: 1. Concatenate with the previous loss , rescale as the learning rate factor between [0, 1]: ; ; 2. ; ; 3. ; Update : Elseif == , break; Part 2, Non-target Adversarial Attack: For < : If == , Calculate the current cross-entropy loss between the prediction and . Backpropagation through to get the gradient sign map . Loss adaptive decay algorithms: 4. Concatenate with the previous loss rescale as the learning rate factor between [0, 1]: ; 5. ; ; 6. ; Update : Elseif ~= , break; Return: The generated image with minimal perturbations. |

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Twinanda, A.P.; Shehata, S.; Mutter, D.; Marescaux, J.; de Mathelin, M.; Padoy, N. Endonet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 2016, 36, 86–97. [Google Scholar] [CrossRef]

- Puttagunta, M.K.; Ravi, S.; Babu, C.N.K. Adversarial examples: Attacks and defences on medical deep learning systems. Multimed. Tools Appl. 2023, 82, 33773–33809. [Google Scholar] [CrossRef]

- Carlini, N.; Athalye, A.; Papernot, N.; Brendel, W.; Rauber, J.; Tsipras, D.; Goodfellow, I.; Madry, A.; Kurakin, A. On evaluating adversarial robustness. arXiv 2019, arXiv:1902.06705. [Google Scholar]

- Zhang, J.; Chen, L. Adversarial examples: Opportunities and challenges. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 2578–2593. [Google Scholar] [CrossRef]

- Balda, E.R.; Behboodi, A.; Mathar, R. Adversarial examples in deep neural networks: An overview. In Deep Learning: Algorithms and Applications; Springer: Cham, Switzerland, 2020; pp. 31–65. [Google Scholar]

- Wiyatno, R.R.; Xu, A.; Dia, O.; De Berker, A. Adversarial examples in modern machine learning: A review. arXiv 2019, arXiv:1911.05268. [Google Scholar]

- Ding, N.; Möller, K. The Image flip effect on a CNN model classification. Proc. Autom. Med. Eng. 2023, 2, 755. [Google Scholar]

- Ren, K.; Zheng, T.; Qin, Z.; Liu, X. Adversarial attacks and defenses in deep learning. Engineering 2020, 6, 346–360. [Google Scholar] [CrossRef]

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572. [Google Scholar]

- Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial machine learning at scale. arXiv 2016, arXiv:1611.01236. [Google Scholar]

- Dong, Y.; Liao, F.; Pang, T.; Su, H.; Zhu, J.; Hu, X.; Li, J. Boosting adversarial attacks with momentum. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv 2017, arXiv:1706.06083. [Google Scholar]

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrucken, Germany, 21–24 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 372–387. [Google Scholar]

- Xiao, C.; Li, B.; Zhu, J.Y.; He, W.; Liu, M.; Song, D. Generating adversarial examples with adversarial networks. arXiv 2018, arXiv:1801.02610. [Google Scholar]

- Carlini, N.; Katz, G.; Barrett, C.; Dill, D.L. Provably minimally-distorted adversarial examples. arXiv 2017, arXiv:1709.10207. [Google Scholar]

- Croce, F.; Matthias, H. Minimally distorted adversarial examples with a fast adaptive boundary attack. In Proceedings of the 2020 International Conference on Machine Learning, PMLR, Virtual Event, 13–18 July 2020. [Google Scholar]

- Su, J.; Vargas, D.V.; Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Trans. Evol. Comput. 2019, 23, 828–841. [Google Scholar] [CrossRef]

- Du, Z.; Liu, F.; Yan, X. Minimum adversarial examples. Entropy 2022, 24, 396. [Google Scholar] [CrossRef]

- Ding, N.; Möller, K. Using adaptive learning rate to generate adversarial images. Curr. Dir. Biomed. Eng. 2023, 9, 359–362. [Google Scholar] [CrossRef]

- Ding, N.; Möller, K. Robustness evaluation on different training state of a CNN model. Curr. Dir. Biomed. Eng. 2022, 8, 497–500. [Google Scholar] [CrossRef]

- Ding, N.; Möller, K. Generate adversarial images with gradient search. Proc. Autom. Med. Eng. 2023, 2, 754. [Google Scholar]

- Ding, N.; Arabian, H.; Möller, K. Feature space separation by conformity loss driven training of CNN. IFAC J. Syst. Control 2024, 28, 100260. [Google Scholar] [CrossRef]

- Gao, B.; Pavel, L. On the properties of the softmax function with application in game theory and reinforcement learning. arXiv 2017, arXiv:1704.00805. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015. [Google Scholar] [CrossRef]

- Learning Rate Schedules and Adaptive Learning Rate Methods for Deep Learning. 2017. Available online: https://towardsdatascience.com/learning-rate-schedules-and-adaptive-learning-rate-methods-for-deep-learning-2c8f433990d1 (accessed on 22 March 2017).

- Bengio, Y. Practical recommendations for gradient-based training of deep architectures. In Neural Networks: Tricks of the Trade, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 437–478. [Google Scholar]

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305. [Google Scholar]

- Darken, C.; Moody, J. Note on learning rate schedules for stochastic optimization. In Proceedings of the Advances in Neural Information Processing Systems 3, Denver, CO, USA, 26–29 November 1990. [Google Scholar]

- Moreira, M.; Fiesler, E. Neural Networks with Adaptive Learning Rate and Momentum Terms; IDIAP: Martigny, Switzerland, 1995. [Google Scholar]

- Li, Z.; Arora, S. An exponential learning rate schedule for deep learning. arXiv 2019, arXiv:1910.07454. [Google Scholar]

- Ge, R.; Kakade, S.M.; Kidambi, R.; Netrapalli, P. The step decay schedule: A near optimal, geometrically decaying learning rate procedure for least squares. In Proceedings of the Advances in Neural Information Processing Systems 32, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747. [Google Scholar]

| Class | Surgical Tool | Number of Frames |

|---|---|---|

| 1 | Grasper | 23,507 |

| 2 | Bipolar | 3222 |

| 3 | Hook | 44,887 |

| 4 | Scissors | 1483 |

| 5 | Clipper | 2647 |

| 6 | Irrigator | 2899 |

| 7 | Bag | 1545 |

| Index | Decay Algorithms | Formulars |

|---|---|---|

| 1 | Constant | |

| 2 | Iteration decay | |

| 3 | Exponential decay | |

| 4 | Step decay | |

| 5 | Loss rescales | |

| 6 | Loss trigonometric | |

| 7 | Loss probability |

| Final Class with Maximum Percentage | |||

|---|---|---|---|

| Origin Class | |||

| 1 | 7-(30.5%) | 7-(30.5%) | 7-(31.0%) |

| 2 | 1-(60.5%) | 1-(61.0%) | 1-(60.5%) |

| 3 | 1-(69.0%) | 1-(64.5%) | 1-(64.5%) |

| 4 | 1-(42.0%) | 1-(43.5%) | 1-(44.0%) |

| 5 | 1-(51.5%) | 1-(46.0%) | 1-(46.5%) |

| 6 | 1-(45.0%) | 1-(44.5%) | 1-(43.5%) |

| 7 | 1-(77.5%) | 1-(78.5%) | 1-(78.5%) |

| Decay Algorithms | Target Attack | Non-Target Attack | ||||

|---|---|---|---|---|---|---|

| 1st Constant | 2621 | 453 | 10 | 1050 | 317 | 9 |

| 2nd Iteration decay | 2621 | 453 | 10 | 1050 | 317 | 9 |

| 3rd Exponential decay | 2621 | 453 | 10 | 1050 | 317 | 9 |

| 4th Step decay | 2621 | 453 | 10 | 1050 | 317 | 9 |

| 5th Loss rescales | 2621 | 453 | 10 | 1050 | 317 | 9 |

| 6th Loss trigonometric | 2617 | 447 | 10 | 1050 | 317 | 9 |

| 7th Loss probability | 2608 | 434 | 6 | 1050 | 313 | 6 |

| Threshold | Origin Class | Final Class | Iteration | L1-Norm | L2-Norm | Modify Portion |

|---|---|---|---|---|---|---|

| 0 | 1 | 7 | 2 | 15.31 × 104 | 545.89 | 99.55% |

| 1/2 | 1 | 4 | 2 | 6.70 × 104 | 270.15 | 49.30% |

| 1/4 | 1 | 4 | 2 | 2.82 × 104 | 181.21 | 18.65% |

| Top 5000 pixel | 1 | 4 | 3 | 1.25 × 104 | 127.61 | 7.71% |

| Top 500 pixel | 1 | 4 | 12 | 5.00 × 103 | 119.13 | 2.04% |

| Top 100 pixel | 1 | 7 | 55 | 4.08 × 103 | 158.33 | 1.18% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ding, N.; Möller, K. Minimally Distorted Adversarial Images with a Step-Adaptive Iterative Fast Gradient Sign Method. AI 2024, 5, 922-937. https://doi.org/10.3390/ai5020046

Ding N, Möller K. Minimally Distorted Adversarial Images with a Step-Adaptive Iterative Fast Gradient Sign Method. AI. 2024; 5(2):922-937. https://doi.org/10.3390/ai5020046

Chicago/Turabian StyleDing, Ning, and Knut Möller. 2024. "Minimally Distorted Adversarial Images with a Step-Adaptive Iterative Fast Gradient Sign Method" AI 5, no. 2: 922-937. https://doi.org/10.3390/ai5020046

APA StyleDing, N., & Möller, K. (2024). Minimally Distorted Adversarial Images with a Step-Adaptive Iterative Fast Gradient Sign Method. AI, 5(2), 922-937. https://doi.org/10.3390/ai5020046