A Review of Natural-Language-Instructed Robot Execution Systems

Abstract

1. Introduction

1.1. Background

1.2. Two Pushing Forces: Natural Language Processing (NLP) and Robot Executions

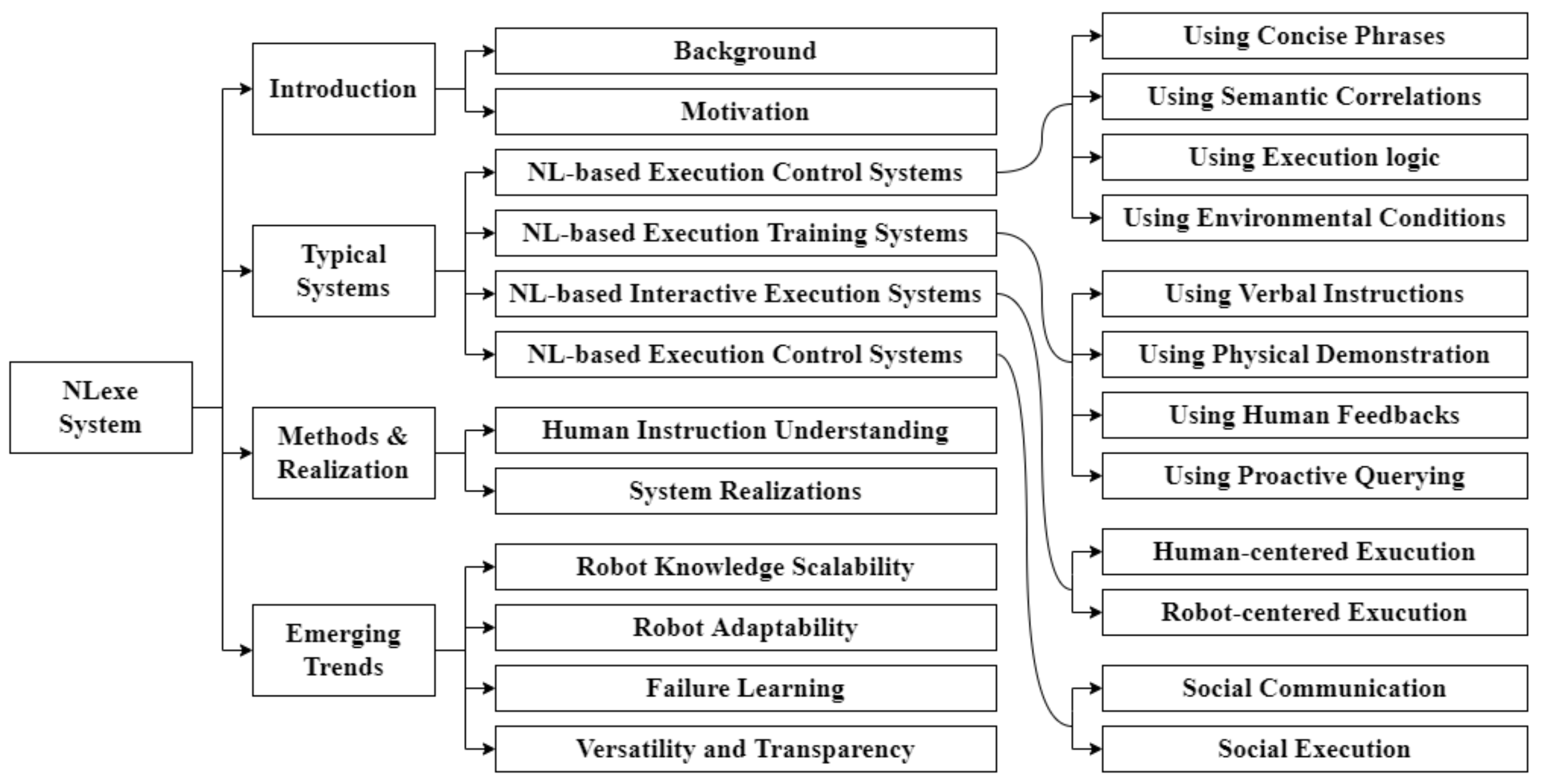

1.3. Systematic Overview of NLexe Research

2. Scope of This Review

3. NL-Based Execution Control Systems

3.1. Typical NLexe Systems

3.1.1. NL-Based Execution Control Systems Using Concise Phrases

3.1.2. NL-Based Execution Control Systems Using Semantic Correlations

3.1.3. NL-Based Execution Control Systems Using Execution Logic

3.1.4. NL-Based Execution Control Systems Using Environmental Conditions

3.2. Open Problems

4. NL-Based Execution Training Systems

4.1. Typical NLexe Systems

4.1.1. NL-Based Execution Training Systems Using Verbal Instructions

4.1.2. NL-Based Execution Training Systems Using Physical Demonstration

4.1.3. NL-Based Execution Training Systems Using Human Feedback

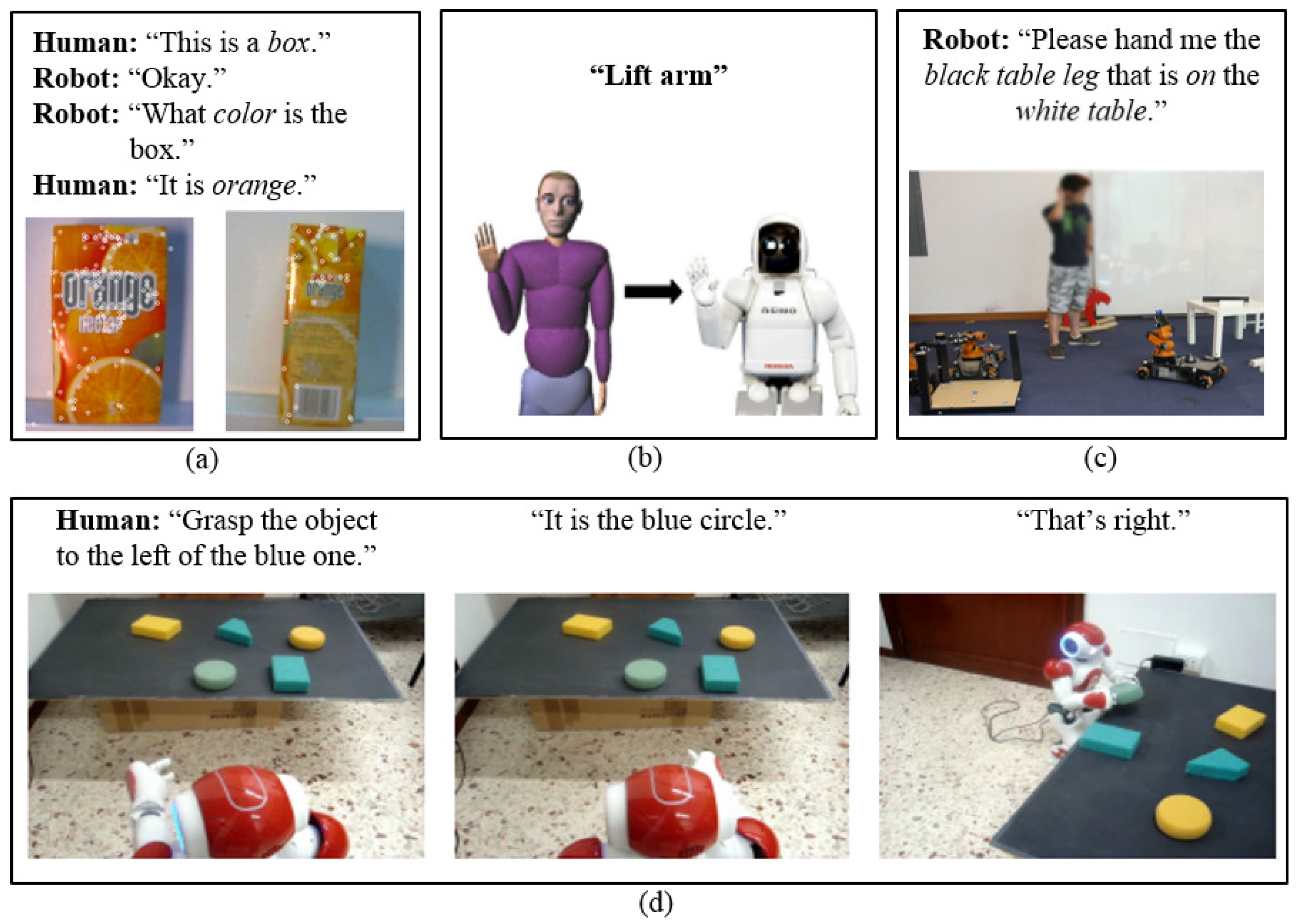

4.1.4. NL-Based Execution Training Systems Using Proactive Querying

4.2. Open Problems

5. NL-Based Interactive Execution Systems

5.1. Typical NLexe Systems

5.1.1. Human-Centered Task Execution Systems

5.1.2. Robot-Centered Task Execution Systems

5.2. Open Problems

6. NL-Based Social Execution Systems

6.1. Typical NLexe Systems

6.1.1. NL-Based Social Communication Systems

6.1.2. NL-Based Social Execution Systems

6.2. Open Problems

7. Methods and Realizations for Human Instruction Understanding

7.1. Models for Human Instruction Understanding

7.1.1. Literal Understanding Model

7.1.2. Interpreted Understanding Model

7.1.3. Model Discussion

7.2. System Realizations

7.2.1. NLP Techniques

7.2.2. Speech Recognition Systems

7.2.3. Evaluations

8. Emerging Trends of NLexe

8.1. Robot Knowledge Scalability

8.2. Robot Adaptability

8.3. Failure Learning

8.4. Versatility and Transparency

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| NL | Natural Language |
| NLexe | NL-instructed Robot Execution |
| NLP | Natural Language Processing |
| VAE | Variational Autoencoders |
| GAN | Generative Adversarial Network |
| HRI | Human-Robot Interaction |
| WWW | World Wide Web |

References

- Baraglia, J.; Cakmak, M.; Nagai, Y.; Rao, R.; Asada, M. Initiative in robot assistance during collaborative task execution. In Proceedings of the 11th IEEE International Conference on Human Robot Interaction, Christchurch, New Zealand, 7–10 March 2016; pp. 67–74. [Google Scholar] [CrossRef]

- Gemignani, G.; Bastianelli, E.; Nardi, D. Teaching robots parametrized executable plans through spoken interaction. In Proceedings of the 2015 International Conference on Autonomous Agents and Multi-Agent Systems, Istanbul, Turkey, 4–8 May 2015; pp. 851–859. [Google Scholar]

- Brooks, D.J.; Lignos, C.; Finucane, C.; Medvedev, M.S.; Perera, I.; Raman, V.; Kress-Gazit, H.; Marcus, M.; Yanco, H.A. Make it so: Continuous, flexible natural language interaction with an autonomous robot. In Proceedings of the AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012. [Google Scholar]

- Fong, T.; Thorpe, C.; Baur, C. Collaboration, Dialogue, Human-Robot Interaction. Robotics Research; Springer: Berlin/Heidelberg, Germany, 2003; pp. 255–266. [Google Scholar] [CrossRef]

- Krüger, J.; Surdilovic, D. Robust control of force-coupled human–robot-interaction in assembly processes. CIRP Ann.-Manuf. Technol. 2008, 57, 41–44. [Google Scholar] [CrossRef]

- Liu, R.; Webb, J.; Zhang, X. Natural-language-instructed industrial task execution. In Proceedings of the 2016 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Charlotte, NC, USA, 21–24 August 2016; p. V01BT02A043. [Google Scholar] [CrossRef]

- Tellex, S.; Kollar, T.; Dickerson, S.; Walter, M.R.; Banerjee, A.G.; Teller, S.J.; Roy, N. Understanding natural language commands for robotic navigation and mobile manipulation. Assoc. Adv. Artif. Intell. 2011, 1, 2. [Google Scholar] [CrossRef]

- Iwata, H.; Sugano, S. Human-robot-contact-state identification based on tactile recognition. IEEE Trans. Ind. Electron. 2005, 52, 1468–1477. [Google Scholar] [CrossRef]

- Kjellström, H.; Romero, J.; Kragić, D. Visual object-action recognition: Inferring object affordances from human demonstration. Comput. Vis. Image Underst. 2011, 115, 81–90. [Google Scholar] [CrossRef]

- Kim, S.; Jung, J.; Kavuri, S.; Lee, M. Intention estimation and recommendation system based on attention sharing. In Proceedings of the 26th International Conference on Neural Information Processing, Red Hook, NY, USA, 5–10 December 2013; pp. 395–402. [Google Scholar] [CrossRef]

- Hu, N.; Englebienne, G.; Lou, Z.; Kröse, B. Latent hierarchical model for activity recognition. IEEE Trans. Robot. 2015, 31, 1472–1482. [Google Scholar] [CrossRef]

- Barattini, P.; Morand, C.; Robertson, N.M. A proposed gesture set for the control of industrial collaborative robots. In Proceedings of the 21st International Symposium on Robot and Human Interactive Communication (RO-MAN), Paris, France, 9–13 September 2012; pp. 132–137. [Google Scholar] [CrossRef]

- Jain, A.; Sharma, S.; Joachims, T.; Saxena, A. Learning preferences for manipulation tasks from online coactive feedback. Int. J. Robot. Res. 2015, 34, 1296–1313. [Google Scholar] [CrossRef]

- Liu, R.; Zhang, X. Understanding human behaviors with an object functional role perspective for robotics. IEEE Trans. Cogn. Dev. Syst. 2016, 8, 115–127. [Google Scholar] [CrossRef]

- Ramirez-Amaro, K.; Beetz, M.; Cheng, G. Transferring skills to humanoid robots by extracting semantic representations from observations of human activities. Artif. Intell. 2017, 247, 95–118. [Google Scholar] [CrossRef]

- Zampogiannis, K.; Yang, Y.; Fermüller, C.; Aloimonos, Y. Learning the spatial semantics of manipulation actions through preposition grounding. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 1389–1396. [Google Scholar] [CrossRef]

- Takano, W.; Nakamura, Y. Action database for categorizing and inferring human poses from video sequences. Robot. Auton. Syst. 2015, 70, 116–125. [Google Scholar] [CrossRef]

- Karpathy, A.; Fei-Fei, L. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3128–3137. [Google Scholar] [CrossRef]

- Raman, V.; Lignos, C.; Finucane, C.; Lee, K.C.; Marcus, M.; Kress-Gazit, H. Sorry Dave, I’m Afraid I Can’t Do That: Explaining Unachievable Robot Tasks Using Natural Language; Technical Report; University of Pennsylvania: Philadelphia, PA, USA, 2013. [Google Scholar] [CrossRef]

- Hemachandra, S.; Walter, M.; Tellex, S.; Teller, S. Learning semantic maps from natural language descriptions. In Proceedings of the 2013 Robotics: Science and Systems IX Conference, Berlin, Germany, 24–28 June 2013. [Google Scholar] [CrossRef]

- Duvallet, F.; Walter, M.R.; Howard, T.; Hemachandra, S.; Oh, J.; Teller, S.; Roy, N.; Stentz, A. Inferring maps and behaviors from natural language instructions. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 373–388. [Google Scholar] [CrossRef]

- Matuszek, C.; Herbst, E.; Zettlemoyer, L.; Fox, D. Learning to parse natural language commands to a robot control system. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 403–415. [Google Scholar] [CrossRef]

- Ott, C.; Lee, D.; Nakamura, Y. Motion capture based human motion recognition and imitation by direct marker control. In Proceedings of the IEEE International Conference on Humanoid Robots, Daejeon, Republic of Korea, 1–3 December 2008; pp. 399–405. [Google Scholar] [CrossRef]

- Waldherr, S.; Romero, R.; Thrun, S. A gesture based interface for human-robot interaction. Auton. Robot. 2000, 9, 151–173. [Google Scholar] [CrossRef]

- Dillmann, R. Teaching and learning of robot tasks via observation of human performance. Robot. Auton. Syst. 2004, 47, 109–116. [Google Scholar] [CrossRef]

- Medina, J.R.; Shelley, M.; Lee, D.; Takano, W.; Hirche, S. Towards interactive physical robotic assistance: Parameterizing motion primitives through natural language. In Proceedings of the 21st International Symposium on Robot and Human Interactive Communication (RO-MAN), Paris, France, 9–13 September 2012; pp. 1097–1102. [Google Scholar] [CrossRef]

- Hemachandra, S.; Walter, M.R. Information-theoretic dialog to improve spatial-semantic representations. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5115–5121. [Google Scholar] [CrossRef]

- Hunston, S.; Francis, G. Pattern Grammar: A Corpus-Driven Approach to the Lexical Grammar of English; No. 4; John Benjamins Publishing: Amsterdam, The Netherlands, 2000. [Google Scholar] [CrossRef]

- Bybee, J.L.; Hopper, P.J. Frequency and the Emergence of Linguistic Structure; John Benjamins Publishing: Amsterdam, The Netherlands, 2001; Volume 45. [Google Scholar] [CrossRef]

- Yang, Y.; Li, Y.; Fermüller, C.; Aloimonos, Y. Robot learning manipulation action plans by “watching” unconstrained videos from the world wide web. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 3686–3693. [Google Scholar] [CrossRef]

- Cheng, Y.; Jia, Y.; Fang, R.; She, L.; Xi, N.; Chai, J. Modelling and analysis of natural language controlled robotic systems. Int. Fed. Autom. Control. 2014, 47, 11767–11772. [Google Scholar] [CrossRef]

- Wu, C.; Lenz, I.; Saxena, A. Hierarchical semantic labeling for task-relevant rgb-d perception. In Proceedings of the 2014 Robotics: Science and Systems X Conference, Berkeley, CA, USA, 12–16 July 2014. [Google Scholar] [CrossRef]

- Hemachandra, S.; Duvallet, F.; Howard, T.M.; Roy, N.; Stentz, A.; Walter, M.R. Learning models for following natural language directions in unknown environments. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5608–5615. [Google Scholar] [CrossRef]

- Tenorth, M.; Perzylo, A.C.; Lafrenz, R.; Beetz, M. The roboearth language: Representing and exchanging knowledge about actions, objects, and environments. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), St. Paul, MN, USA, 14–18 May 2012; pp. 1284–1289. [Google Scholar] [CrossRef]

- Pineau, J.; West, R.; Atrash, A.; Villemure, J.; Routhier, F. On the feasibility of using a standardized test for evaluating a speech-controlled smart wheelchair. Int. J. Intell. Control. Syst. 2011, 16, 124–131. [Google Scholar]

- Granata, C.; Chetouani, M.; Tapus, A.; Bidaud, P.; Dupourqué, V. Voice and graphical-based interfaces for interaction with a robot dedicated to elderly and people with cognitive disorders. In Proceedings of the 19th International Symposium on Robot and Human Interactive Communication (RO-MAN), Viareggio, Italy, 13–15 September 2010; pp. 785–790. [Google Scholar] [CrossRef]

- Stenmark, M.; Malec, J. A helping hand: Industrial robotics, knowledge and user-oriented services. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems Workshop: AI-based Robotics, Tokyo, Japan, 3–7 November 2013. [Google Scholar]

- Schulz, R.; Talbot, B.; Lam, O.; Dayoub, F.; Corke, P.; Upcroft, B.; Wyeth, G. Robot navigation using human cues: A robot navigation system for symbolic goal-directed exploration. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1100–1105. [Google Scholar] [CrossRef]

- Boularias, A.; Duvallet, F.; Oh, J.; Stentz, A. Grounding spatial relations for outdoor robot navigation. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1976–1982. [Google Scholar] [CrossRef]

- Kory, J.; Breazeal, C. Storytelling with robots: Learning companions for preschool children’s language development. In Proceedings of the 23rd International Symposium on Robot and Human Interactive Communication (RO-MAN), Edinburgh, UK, 25–29 August 2014; pp. 643–648. [Google Scholar] [CrossRef]

- Salvador, M.J.; Silver, S.; Mahoor, M.H. An emotion recognition comparative study of autistic and typically-developing children using the zeno robot. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6128–6133. [Google Scholar] [CrossRef]

- Breazeal, C. Social interactions in hri: The robot view. IEEE Trans. Syst. Man Cybern. 2004, 34, 81–186. [Google Scholar] [CrossRef]

- Belpaeme, T.; Baxter, P.; Greeff, J.D.; Kennedy, J.; Read, R.; Looije, R.; Neerincx, M.; Baroni, I.; Zelati, M.C. Child-robot interaction: Perspectives and challenges. In Proceedings of the International Conference on Social Robotics, Bristol, UK, 27–29 October 2013; pp. 452–459. [Google Scholar] [CrossRef]

- Liu, R.; Zhang, X. Generating machine-executable plans from end-user’s natural-language instructions. Knowl.-Based Syst. 2018, 140, 15–26. [Google Scholar] [CrossRef]

- Alterovitz, R.; Sven, K.; Likhachev, M. Robot planning in the real world: Research challenges and opportunities. Ai Mag. 2016, 37, 76–84. [Google Scholar] [CrossRef]

- Misra, D.K.; Sung, J.; Lee, K.; Saxena, A. Tell me dave: Context-sensitive grounding of natural language to manipulation instructions. Int. J. Robot. Res. 2016, 35, 281–300. [Google Scholar] [CrossRef]

- Twiefel, J.; Hinaut, X.; Borghetti, M.; Strahl, E.; Wermter, S. Using natural language feedback in a neuro-inspired integrated multimodal robotic architecture. In Proceedings of the 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016. [Google Scholar] [CrossRef]

- Ranjan, N.; Mundada, K.; Phaltane, K.; Ahmad, S. A survey on techniques in nlp. Int. J. Comput. Appl. 2016, 134, 6–9. [Google Scholar] [CrossRef]

- Kulić, D.; Croft, E.A. Safe planning for human-robot interaction. J. Field Robot. 2005, 22, 383–396. [Google Scholar] [CrossRef]

- Tuffield, P.; Elias, H. The shadow robot mimics human actions. Ind. Robot. Int. J. 2003, 30, 56–60. [Google Scholar] [CrossRef]

- He, J.; Spokoyny, D.; Neubig, G.; Berg-Kirkpatrick, T. Lagging inference networks and posterior collapse in variational autoencoders. In Proceedings of the 7th International Conference on Learning Representations, ICLR, New Orleans, LA, USA, 6–9 May 2019; Available online: https://openreview.net/forum?id=rylDfnCqF7 (accessed on 7 May 2024).

- Guo, J.; Lu, S.; Cai, H.; Zhang, W.; Yu, Y.; Wang, J. Long text generation via adversarial training with leaked information. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32. [Google Scholar] [CrossRef]

- Ferreira, T.C.; Lee, C.v.; Miltenburg, E.v.; Krahmer, E. Neural data-to-text generation: A comparison between pipeline and end-to-end architectures. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 552–562. [Google Scholar] [CrossRef]

- McColl, D.; Louie, W.-Y.G.; Nejat, G. Brian 2.1: A socially assistive robot for the elderly and cognitively impaired. IEEE Robot. Autom. Mag. 2013, 20, 74–83. [Google Scholar] [CrossRef]

- Oord, A.v.d.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. Wavenet: A generative model for raw audio. In Proceedings of the 9th ISCA Speech Synthesis Workshop, Sunnyvale, CA, USA, 13–15 September 2016; p. 125. Available online: https://dblp.org/rec/journals/corr/OordDZSVGKSK16.bib (accessed on 7 May 2024).

- Kalchbrenner, N.; Elsen, E.; Simonyan, K.; Noury, S.; Casagrande, N.; Lockhart, E.; Stimberg, F.; Oord, A.v.d.; Dieleman, S.; Kavukcuoglu, K. Efficient neural audio synthesis. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 2410–2419. Available online: https://dblp.org/rec/journals/corr/abs-1802-08435.bib (accessed on 7 May 2024).

- Cid, F.; Moreno, J.; Bustos, P.; Núnez, P. Muecas: A multi-sensor robotic head for affective human robot interaction and imitation. Sensors 2014, 14, 7711. [Google Scholar] [CrossRef] [PubMed]

- Ke, X.; Cao, B.; Bai, J.; Zhang, W.; Zhu, Y. An interactive system for humanoid robot shfr-iii. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420913787. [Google Scholar] [CrossRef]

- Zhao, X.; Luo, Q.; Han, B. Survey on robot multi-sensor information fusion technology. In Proceedings of the 2008 7th World Congress on Intelligent Control and Automation, Chongqing, China, 25–27 June 2008; pp. 5019–5023. [Google Scholar] [CrossRef]

- Denoyer, L.; Zaragoza, H.; Gallinari, P. Hmm-based passage models for document classification and ranking. In Proceedings of the European Conference on Information Retrieval, Darmstadt, Germany; 2001; pp. 126–135. Available online: https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/hugoz_ecir01.pdf (accessed on 7 May 2024).

- Busch, J.E.; Lin, A.D.; Graydon, P.J.; Caudill, M. Ontology-Based Parser for Natural Language Processing. U.S. Patent 7,027,974, 11 April 2006. Available online: https://aclanthology.org/J15-2006.pdf (accessed on 7 May 2024).

- Alani, H.; Kim, S.; Millard, D.E.; Weal, M.J.; Hall, W.; Lewis, P.H.; Shadbolt, N.R. Automatic ontology-based knowledge extraction from web documents. IEEE Intell. Syst. 2003, 18, 14–21. [Google Scholar] [CrossRef]

- Cambria, E.; Hussain, A. Sentic Computing: Techniques, Tools, and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 2. [Google Scholar] [CrossRef]

- Young, R.M. Story and discourse: A bipartite model of narrative generation in virtual worlds. Interact. Stud. 2007, 8, 177–208. [Google Scholar] [CrossRef]

- Bex, F.J.; Prakken, H.; Verheij, B. Formalising argumentative story-based analysis of evidence. In Proceedings of the International Conference on Artificial Intelligence and Law, Stanford, CA, USA, 4–8 June 2007; pp. 1–10. [Google Scholar] [CrossRef]

- Stenzel, A.; Chinellato, E.; Bou, M.A.T.; del Pobil, P.; Lappe, M.; Liepelt, R. When humanoid robots become human-like interaction partners: Corepresentation of robotic actions. J. Exp. Psychol. Hum. Percept. Perform. 2012, 38, 1073. [Google Scholar] [CrossRef] [PubMed]

- Mitsunaga, N.; Smith, C.; Kanda, T.; Ishiguro, H.; Hagita, N. Adapting robot behavior for human-robot interaction. IEEE Trans. Robot. 2008, 24, 911–916. [Google Scholar] [CrossRef]

- Bruce, A.; Nourbakhsh, I.; Simmons, R. The role of expressiveness and attention in human-robot interaction. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Washington, DC, USA, 11–15 May 2002; Volume 4, pp. 4138–4142. [Google Scholar] [CrossRef]

- Staudte, M.; Crocker, M.W. Investigating joint attention mechanisms through spoken human–Robot interaction. Cognition 2011, 120, 268–291. [Google Scholar] [CrossRef] [PubMed]

- Liu, R.; Zhang, X.; Webb, J.; Li, S. Context-specific intention awareness through web query in robotic caregiving. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1962–1967. [Google Scholar] [CrossRef]

- Liu, R.; Zhang, X.; Li, S. Use context to understand user’s implicit intentions in activities of daily living. In Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA), Tianjin, China, 3–6 August 2014; pp. 1214–1219. [Google Scholar] [CrossRef]

- Selman, B. Nri: Collaborative Research: Jointly Learning Language and Affordances. 2014. Available online: https://www.degruyter.com/document/doi/10.1515/9783110787719/html?lang=en (accessed on 7 May 2024).

- Mooney, R. Nri: Robots that Learn to Communicate Through Natural Human Dialog. 2016. Available online: https://www.nsf.gov/awardsearch/showAward?AWD_ID=1637736&HistoricalAwards=false (accessed on 7 May 2024).

- Roy, N. Nri: Collaborative Research: Modeling and Verification of Language-Based Interaction. 2014. Available online: https://www.nsf.gov/awardsearch/showAward?AWD_ID=1427030&HistoricalAwards=false (accessed on 7 May 2024).

- University of Washington. Robotics and State Estimation Lab. 2017. Available online: http://rse-lab.cs.washington.edu/projects/language-grounding/ (accessed on 5 January 2017).

- Lund University. Robotics and State Estimation Lab. 2017. Available online: http://rss.cs.lth.se/ (accessed on 5 January 2017).

- Argall, B.D.; Chernova, S.; Veloso, M.; Browning, B. A survey of robot learning from demonstration. Robot. Auton. Syst. 2009, 57, 469–483. [Google Scholar] [CrossRef]

- Bethel, C.L.; Salomon, K.; Murphy, R.R.; Burke, J.L. Survey of psychophysiology measurements applied to human-robot interaction. In Proceedings of the 16th International Symposium on Robot and Human Interactive Communication (RO-MAN), Jeju Island, Republic of Korea, 26–29 August 2007; pp. 732–737. [Google Scholar] [CrossRef]

- Argall, B.D.; Billard, A.G. Survey of tactile human–robot interactions. Robot. Auton. Syst. 2010, 58, 1159–1176. [Google Scholar] [CrossRef]

- House, B.; Malkin, J.; Bilmes, J. The voicebot: A voice controlled robot arm. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 183–192. [Google Scholar] [CrossRef]

- Stenmark, M.; Nugues, P. Natural language programming of industrial robots. In Proceedings of the International Symposium on Robotics (ISR), Seoul, Republic of Korea, 24–26 October 2013; pp. 1–5. [Google Scholar] [CrossRef]

- Jain, D.; Mosenlechner, L.; Beetz, M. Equipping robot control programs with first-order probabilistic reasoning capabilities. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009; pp. 3626–3631. [Google Scholar] [CrossRef]

- Zelek, J.S. Human-robot interaction with minimal spanning natural language template for autonomous and tele-operated control. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Grenoble, France, 7–11 September 1997; Volume 1, pp. 299–305. [Google Scholar] [CrossRef]

- Romano, J.M.G.; Camacho, E.F.; Ortega, J.G.; Bonilla, M.T. A generic natural language interface for task planning—Application to a mobile robot. Control Eng. Pract. 2000, 8, 1119–1133. [Google Scholar] [CrossRef]

- Wang, B.; Li, Z.; Ding, N. Speech control of a teleoperated mobile humanoid robot. In Proceedings of the IEEE International Conference on Automation and Logistics (ICAL), Chongqing, China, 15–16 August 2011; pp. 339–344. [Google Scholar] [CrossRef]

- Gosavi, S.D.; Khot, U.P.; Shah, S. Speech recognition for robotic control. Int. J. Eng. Res. Appl. 2013, 3, 408–413. Available online: https://www.ijera.com/papers/Vol3_issue5/BT35408413.pdf (accessed on 7 May 2024).

- Tellex, S.; Roy, D. Spatial routines for a simulated speech-controlled vehicle. In Proceedings of the ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 156–163. [Google Scholar] [CrossRef]

- Stiefelhagen, R.; Fugen, C.; Gieselmann, R.; Holzapfel, H.; Nickel, K.; Waibel, A. Natural human-robot interaction using speech, head pose and gestures. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 3, pp. 2422–2427. [Google Scholar] [CrossRef]

- Chen, S.; Kazi, Z.; Beitler, M.; Salganicoff, M.; Chester, D.; Foulds, R. Gesture-speech based hmi for a rehabilitation robot. In Proceedings of the IEEE Southeastcon’96: Bringing Together Education, Science and Technology, Tampa, FL, USA, 11–14 April 1996; pp. 29–36. [Google Scholar] [CrossRef]

- Bischoff, R.; Graefe, V. Integrating vision, touch and natural language in the control of a situation-oriented behavior-based humanoid robot. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Tokyo, Japan, 12–15 October 1999; Volume 2, pp. 999–1004. [Google Scholar] [CrossRef]

- Landau, B.; Jackendoff, R. Whence and whither in spatial language and spatial cognition? Behav. Brain Sci. 1993, 16, 255–265. [Google Scholar] [CrossRef]

- Ferre, M.; Macias-Guarasa, J.; Aracil, R.; Barrientos, A. Voice command generation for teleoperated robot systems. In Proceedings of the 7th International Symposium on Robot and Human Interactive Communication (RO-MAN), Kagawa, Japan, 30 September–2 October 1998; p. 679685. Available online: https://www.academia.edu/65732196/Voice_command_generation_for_teleoperated_robot_systems (accessed on 7 May 2024).

- Savage, J.; Hernández, E.; Vázquez, G.; Hernandez, A.; Ronzhin, A.L. Control of a Mobile Robot Using Spoken Commands. In Proceedings of the Conference Speech and Computer, St. Petersburg, Russia, 20–22 September 2004; Available online: https://workshops.aapr.at/wp-content/uploads/2019/05/ARW-OAGM19_24.pdf (accessed on 7 May 2024).

- Jayawardena, C.; Watanabe, K.; Izumi, K. Posture control of robot manipulators with fuzzy voice commands using a fuzzy coach–player system. Adv. Robot. 2007, 21, 293–328. [Google Scholar] [CrossRef]

- Antoniol, G.; Cattoni, R.; Cettolo, M.; Federico, M. Robust speech understanding for robot telecontrol. In Proceedings of the International Conference on Advanced Robotics, Tokyo, Japan, 8–9 November 1993; pp. 205–209. Available online: https://www.researchgate.net/publication/2771643_Robust_Speech_Understanding_for_Robot_Telecontrol (accessed on 7 May 2024).

- Levinson, S.; Zhu, W.; Li, D.; Squire, K.; Lin, R.-s.; Kleffner, M.; McClain, M.; Lee, J. Automatic language acquisition by an autonomous robot. In Proceedings of the International Joint Conference on Neural Networks, Portland, OR, USA, 20–24 July 2003; Volume 4, pp. 2716–2721. [Google Scholar] [CrossRef]

- Scioni, E.; Borghesan, G.; Bruyninckx, H.; Bonfè, M. Bridging the gap between discrete symbolic planning and optimization-based robot control. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5075–5081. [Google Scholar] [CrossRef]

- Lallée, S.; Yoshida, E.; Mallet, A.; Nori, F.; Natale, L.; Metta, G.; Warneken, F.; Dominey, P.F. Human-robot cooperation based on interaction learning. In From Motor Learning to Interaction Learning in Robots; Springer: Berlin/Heidelberg, Germany, 2010; pp. 491–536. [Google Scholar] [CrossRef]

- Allen, J.; Duong, Q.; Thompson, C. Natural language service for controlling robots and other agents. In Proceedings of the International Conference on Integration of Knowledge Intensive Multi-Agent Systems, Waltham, MA, USA, 18–21 April 2005; pp. 592–595. [Google Scholar] [CrossRef]

- Fainekos, G.E.; Kress-Gazit, H.; Pappas, G.J. Temporal logic motion planning for mobile robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Barcelona, Spain, 18–22 April 2005; pp. 2020–2025. [Google Scholar] [CrossRef]

- Thomason, J.; Zhang, S.; Mooney, R.J.; Stone, P. Learning to interpret natural language commands through human-robot dialog. In Proceedings of the International Joint Conferences on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; pp. 1923–1929. Available online: https://dblp.org/rec/conf/ijcai/ThomasonZMS15.bib (accessed on 7 May 2024).

- Oates, T.; Eyler-Walker, Z.; Cohen, P. Using Syntax to Learn Semantics: An Experiment in Language Acquisition with a Mobile Robot; Technical Report; University of Massachusetts Computer Science Department: Amherst, MA, USA, 1999; Available online: https://www.researchgate.net/publication/2302747_Using_Syntax_to_Learn_Semantics_An_Experiment_in_Language_Acquisition_with_a_Mobile_Robot (accessed on 7 May 2024).

- Stenmark, M.; Malec, J.; Nilsson, K.; Robertsson, A. On distributed knowledge bases for robotized small-batch assembly. IEEE Trans. Autom. Sci. Eng. 2015, 12, 519–528. [Google Scholar] [CrossRef]

- Vogel, A.; Raghunathan, K.; Krawczyk, S. A Situated, Embodied Spoken Language System for Household Robotics. 2009. Available online: https://cs.stanford.edu/~rkarthik/Spoken%20Language%20System%20for%20Household%20Robotics.pdf (accessed on 7 May 2024).

- Nordmann, A.; Wrede, S.; Steil, J. Modeling of movement control architectures based on motion primitives using domain-specific languages. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5032–5039. [Google Scholar] [CrossRef]

- Bollini, M.; Tellex, S.; Thompson, T.; Roy, N.; Rus, D. Interpreting and executing recipes with a cooking robot. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 481–495. [Google Scholar] [CrossRef]

- Kruijff, G.-J.M.; Kelleher, J.D.; Berginc, G.; Leonardis, A. Structural descriptions in human-assisted robot visual learning. In Proceedings of the ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 343–344. [Google Scholar] [CrossRef]

- Salem, M.; Kopp, S.; Wachsmuth, I.; Joublin, F. Towards an integrated model of speech and gesture production for multi-modal robot behavior. In Proceedings of the 19th International Symposium on Robot and Human Interactive Communication (RO-MAN), Viareggio, Italy, 13–15 September 2010; pp. 614–619. [Google Scholar] [CrossRef]

- Knepper, R.A.; Tellex, S.; Li, A.; Roy, N.; Rus, D. ecovering from failure by asking for help. Auton. Robot. 2015, 39, 347–362. [Google Scholar] [CrossRef]

- Dindo, H.; Zambuto, D. A probabilistic approach to learning a visually grounded language model through human-robot interaction. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 790–796. [Google Scholar] [CrossRef]

- Cuayáhuitl, H. Robot learning from verbal interaction: A brief survey. In Proceedings of the New Frontiers in Human-Robot Interaction, Canterbury, UK, 21–22 April 2015; Available online: https://www.cs.kent.ac.uk/events/2015/AISB2015/proceedings/hri/14-Cuayahuitl-robotlearningfrom.pdf (accessed on 7 May 2024).

- Yu, C.; Ballard, D.H. On the integration of grounding language and learning objects. In Proceedings of the 19th National Conference on Artificial Intelligence, San Jose, CA, USA, 25–29 July 2004; Volume 4, pp. 488–493. Available online: https://dl.acm.org/doi/abs/10.5555/1597148.1597228 (accessed on 7 May 2024).

- Nicolescu, M.; Mataric, M.J. Task learning through imitation and human-robot interaction. In Imitation and Social Learning in Robots, Humans and Animals: Behavioural, Social and Communicative Dimensions; Cambridge University Press: Cambridge, UK, 2007; pp. 407–424. [Google Scholar] [CrossRef]

- Roy, D. Learning visually grounded words and syntax of natural spoken language. Evol. Commun. 2000, 4, 33–56. [Google Scholar] [CrossRef]

- Lauria, S.; Bugmann, G.; Kyriacou, T.; Bos, J.; Klein, A. Training personal robots using natural language instruction. IEEE Intell. Syst. 2001, 16, 38–45. [Google Scholar] [CrossRef]

- Nicolescu, M.N.; Mataric, M.J. Natural methods for robot task learning: Instructive demonstrations, generalization and practice. In Proceedings of the International Joint Conference on Autonomous Agents and Multiagent Systems, Melbourne, Australia, 14–18 July 2003; pp. 241–248. [Google Scholar] [CrossRef]

- Sugiura, K.; Iwahashi, N. Learning object-manipulation verbs for human-robot communication. In Proceedings of the 2007 Workshop on Multimodal Interfaces in Semantic Interaction, Nagoya, Japan, 15 November 2007; pp. 32–38. [Google Scholar] [CrossRef]

- Kordjamshidi, P.; Hois, J.; van Otterlo, M.; Moens, M.-F. Learning to interpret spatial natural language in terms of qualitative spatial relations. In Representing Space in Cognition: Interrelations of Behavior, Language, and Formal Models; Oxford University Press: Oxford, UK, 2013; pp. 115–146. [Google Scholar] [CrossRef]

- Iwahashi, N. Robots that learn language: A developmental approach to situated human-robot conversations. In Human-Robot Interaction; IntechOpen: London, UK, 2007; pp. 95–118. [Google Scholar] [CrossRef]

- Yi, D.; Howard, T.M.; Goodrich, M.A.; Seppi, K.D. Expressing homotopic requirements for mobile robot navigation through natural language instructions. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 1462–1468. [Google Scholar] [CrossRef]

- Paul, R.; Arkin, J.; Roy, N.; Howard, T.M. Efficient grounding of abstract spatial concepts for natural language interaction with robot manipulators. In Proceedings of the 2016 Robotics: Science and Systems XII Conference, Ann Arbor, MI, USA, 18–22 June 2016. [Google Scholar] [CrossRef]

- Uyanik, K.F.; Caliskan, Y.; Bozcuoglu, A.K.; Yuruten, O.; Kalkan, S.; Sahin, E. Learning social affordances and using them for planning. In Proceedings of the Annual Meeting of the Cognitive Science Society, Berlin, Germany, 31 July–3 August 2013; Volume 35, No. 35. Available online: https://escholarship.org/uc/item/9cj412wg (accessed on 7 May 2024).

- Holroyd, A.; Rich, C. Using the behavior markup language for human-robot interaction. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction, Boston, MA, USA, 5–8 March 2012; pp. 147–148. [Google Scholar] [CrossRef]

- Arumugam, D.; Karamcheti, S.; Gopalan, N.; Wong, L.L.; Tellex, S. Accurately and efficiently interpreting human-robot instructions of varying granularities. In Proceedings of the 2017 Robotics: Science and Systems XIII Conference, Cambridge, MA, USA, 12–16 July 2017. [Google Scholar] [CrossRef]

- Montesano, L.; Lopes, M.; Bernardino, A.; Santos-Victor, J. Modeling affordances using Bayesian networks. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Diego, CA, USA, 29 October–2 November 2007; pp. 4102–4107. [Google Scholar] [CrossRef]

- Matuszek, C.; Bo, L.; Zettlemoyer, L.; Fox, D. Learning from unscripted deictic gesture and language for human-robot interactions. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 2556–2563. [Google Scholar] [CrossRef]

- Forbes, M.; Chung, M.J.-Y.; Cakmak, M.; Zettlemoyer, L.; Rao, R.P. Grounding antonym adjective pairs through interaction. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction—Workshop on Humans and Robots in Asymmetric Interactions, Bielefeld, Germany, 3–6 March 2014; pp. 1–4. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7016190/ (accessed on 7 May 2024).

- Krause, E.A.; Zillich, M.; Williams, T.E.; Scheutz, M. Learning to recognize novel objects in one shot through human-robot interactions in natural language dialogues. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 2796–2802. [Google Scholar] [CrossRef]

- Chai, J.Y.; Fang, R.; Liu, C.; She, L. Collaborative language grounding toward situated human-robot dialogue. AI Mag. 2016, 37, 32–45. [Google Scholar] [CrossRef]

- Liu, C.; Yang, S.; Saba-Sadiya, S.; Shukla, N.; He, Y.; Zhu, S.-C.; Chai, J. Jointly learning grounded task structures from language instruction and visual demonstration. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 1482–1492. [Google Scholar] [CrossRef]

- Williams, T.; Briggs, G.; Oosterveld, B.; Scheutz, M. Going beyond literal command-based instructions: Extending robotic natural language interaction capabilities. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 1387–1393. [Google Scholar] [CrossRef]

- Bannat, A.; Blume, J.; Geiger, J.T.; Rehrl, T.; Wallhoff, F.; Mayer, C.; Radig, B.; Sosnowski, S.; Kühnlenz, K. A multimodal human-robot-dialog applying emotional feedbacks. In Proceedings of the International Conference on Social Robotics, Singapore, 23–24 November 2010; pp. 1–10. [Google Scholar] [CrossRef]

- Thomaz, A.L.; Breazeal, C. Teachable robots: Understanding human teaching behavior to build more effective robot learners. Artif. Intell. 2008, 172, 716–737. [Google Scholar] [CrossRef]

- Savage, J.; Rosenblueth, D.A.; Matamoros, M.; Negrete, M.; Contreras, L.; Cruz, J.; Martell, R.; Estrada, H.; Okada, H. Semantic reasoning in service robots using expert systems. Robot. Auton. Syst. 2019, 114, 77–92. [Google Scholar] [CrossRef]

- Brick, T.; Scheutz, M. Incremental natural language processing for HRI. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Arlington, VA, USA, 10–12 March 2007; pp. 263–270. [Google Scholar] [CrossRef]

- Gkatzia, D.; Lemon, O.; Rieser, V. Natural language generation enhances human decision-making with uncertain information. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 264–268. [Google Scholar] [CrossRef]

- Hough, J. Incremental semantics driven natural language generation with self-repairing capability. In Proceedings of the Student Research Workshop Associated with RANLP, Hissar, Bulgaria, 13 September 2011; pp. 79–84. Available online: https://aclanthology.org/R11-2012/ (accessed on 7 May 2024).

- Koller, A.; Petrick, R.P. Experiences with planning for natural language generation. Comput. Intell. 2011, 27, 23–40. [Google Scholar] [CrossRef]

- Tellex, S.; Knepper, R.; Li, A.; Rus, D.; Roy, N. Asking for help using inverse semantics. In Proceedings of the 2014 Robotics: Science and Systems X Conference, Berkeley, CA, USA, 12–16 July 2014. [Google Scholar] [CrossRef]

- Medina, J.R.; Lawitzky, M.; Mörtl, A.; Lee, D.; Hirche, S. An experience-driven robotic assistant acquiring human knowledge to improve haptic cooperation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 2416–2422. [Google Scholar] [CrossRef]

- Sugiura, K.; Iwahashi, N.; Kawai, H.; Nakamura, S. Situated spoken dialogue with robots using active learning. Adv. Robot. 2011, 25, 2207–2232. [Google Scholar] [CrossRef]

- Whitney, D.; Rosen, E.; MacGlashan, J.; Wong, L.L.; Tellex, S. Reducing errors in object-fetching interactions through social feedback. In Proceedings of the International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017. [Google Scholar] [CrossRef]

- Thomason, J.; Padmakumar, A.; Sinapov, J.; Walker, N.; Jiang, Y.; Yedidsion, H.; Hart, J.; Stone, P.; Mooney, R.J. Improving grounded natural language understanding through human-robot dialog. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6934–6941. [Google Scholar] [CrossRef]

- Alok, A.; Gupta, R.; Ananthakrishnan, S. Design considerations for hypothesis rejection modules in spoken language understanding systems. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 8049–8053. [Google Scholar] [CrossRef]

- Bicho, E.; Louro, L.; Erlhagen, W. Integrating verbal and nonverbal communication in a dynamic neural field architecture for human-robot interaction. Front. Neurorobot. 2010, 4, 5. [Google Scholar] [CrossRef] [PubMed]

- Broad, A.; Arkin, J.; Ratliff, N.; Howard, T.; Argall, B. Towards real-time natural language corrections for assistive robots. In Proceedings of the Robotics: Science and Systems Workshop on Model Learning for Human-Robot Communication, Ann Arbor, MI, USA, 18–22 June 2016; Available online: https://journals.sagepub.com/doi/full/10.1177/0278364917706418 (accessed on 7 May 2024).

- Deits, R.; Tellex, S.; Thaker, P.; Simeonov, D.; Kollar, T.; Roy, N. Clarifying commands with information-theoretic human-robot dialog. J. Hum.-Robot. Interact. 2013, 2, 58–79. [Google Scholar] [CrossRef]

- Rybski, P.E.; Stolarz, J.; Yoon, K.; Veloso, M. Using dialog and human observations to dictate tasks to a learning robot assistant. Intell. Serv. Robot. 2008, 1, 159–167. [Google Scholar] [CrossRef]

- Dominey, P.F.; Mallet, A.; Yoshida, E. Progress in programming the HRP-2 humanoid using spoken language. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Roma, Italy, 10–14 April 2007; pp. 2169–2174. [Google Scholar] [CrossRef]

- Profanter, S.; Perzylo, A.; Somani, N.; Rickert, M.; Knoll, A. Analysis and semantic modeling of modality preferences in industrial human-robot interaction. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1812–1818. [Google Scholar] [CrossRef]

- Lu, D.; Chen, X. Interpreting and extracting open knowledge for human-robot interaction. IEEE/CAA J. Autom. Sin. 2017, 4, 686–695. [Google Scholar] [CrossRef]

- Thomas, B.J.; Jenkins, O.C. RoboFrameNet: Verb-centric semantics for actions in robot middleware. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), St. Paul, MN, USA, 14–18 May 2012; pp. 4750–4755. [Google Scholar] [CrossRef]

- Ovchinnikova, E.; Wachter, M.; Wittenbeck, V.; Asfour, T. Multi-purpose natural language understanding linked to sensorimotor experience in humanoid robots. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Humanoids), Seoul, Republic of Korea, 3–5 November 2015; pp. 365–372. [Google Scholar] [CrossRef]

- Burger, B.; Ferrané, I.; Lerasle, F.; Infantes, G. Two-handed gesture recognition and fusion with speech to command a robot. Auton. Robot. 2012, 32, 129–147. [Google Scholar] [CrossRef]

- Fong, T.; Nourbakhsh, I.; Kunz, C.; Fluckiger, L.; Schreiner, J.; Ambrose, R.; Burridge, R.; Simmons, R.; Hiatt, L.; Schultz, A.; et al. The peer-to-peer human-robot interaction project. Space 2005, 6750. [Google Scholar] [CrossRef]

- Bischoff, R.; Graefe, V. Dependable multimodal communication and interaction with robotic assistants. In Proceedings of the IEEE International Workshop on Robot and Human Interactive Communication, Berlin, Germany, 27 September 2002; pp. 300–305. [Google Scholar] [CrossRef]

- Clodic, A.; Alami, R.; Montreuil, V.; Li, S.; Wrede, B.; Swadzba, A. A study of interaction between dialog and decision for human-robot collaborative task achievement. In Proceedings of the 16th International Symposium on Robot and Human Interactive Communication (RO-MAN), Jeju Island, Republic of Korea, 26–29 August 2007; pp. 913–918. [Google Scholar] [CrossRef]

- Ghidary, S.S.; Nakata, Y.; Saito, H.; Hattori, M.; Takamori, T. Multi-modal human robot interaction for map generation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Maui, HI, USA, 29 October–3 November 2001; Volume 4, pp. 2246–2251. [Google Scholar] [CrossRef]

- Kollar, T.; Tellex, S.; Roy, D.; Roy, N. Grounding verbs of motion in natural language commands to robots. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 31–47. [Google Scholar] [CrossRef]

- Bos, J. Applying automated deduction to natural language understanding. J. Appl. Log. 2009, 7, 100–112. [Google Scholar] [CrossRef]

- Huang, A.S.; Tellex, S.; Bachrach, A.; Kollar, T.; Roy, D.; Roy, N. Natural language command of an autonomous micro-air vehicle. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 2663–2669. [Google Scholar] [CrossRef]

- Moore, R.K. Is spoken language all-or-nothing? Implications for future speech-based human-machine interaction. In Dialogues with Social Robots; Springer: Berlin/Heidelberg, Germany, 2017; pp. 281–291. [Google Scholar] [CrossRef]

- Sakita, K.; Ogawara, K.; Murakami, S.; Kawamura, K.; Ikeuchi, K. Flexible cooperation between human and robot by interpreting human intention from gaze information. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 1, pp. 846–851. [Google Scholar] [CrossRef]

- Abioye, A.O.; Prior, S.D.; Thomas, G.T.; Saddington, P.; Ramchurn, S.D. The multimodal speech and visual gesture (MSVG) control model for a practical patrol, search, and rescue aerobot. In Proceedings of the Annual Conference towards Autonomous Robotic Systems, Bristol, UK, 25–27 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 423–437. [Google Scholar] [CrossRef]

- Schiffer, S.; Hoppe, N.; Lakemeyer, G. Natural language interpretation for an interactive service robot in domestic domains. In Proceedings of the International Conference on Agents and Artificial Intelligence, Algarve, Portugal, 6–8 February 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 39–53. [Google Scholar] [CrossRef]

- Strait, M.; Briggs, P.; Scheutz, M. Gender, more so than age, modulates positive perceptions of language-based human-robot interactions. In Proceedings of the International Symposium on New Frontiers in Human Robot Interaction, Canterbury, UK, 21–22 April 2015; Available online: https://hrilab.tufts.edu/publications/straitetal15aisb/ (accessed on 7 May 2024).

- Gorostiza, J.F.; Salichs, M.A. Natural programming of a social robot by dialogs. In Proceedings of the Association for the Advancement of Artificial Intelligence Fall Symposium: Dialog with Robots, Arlington, VA, USA, 11–13 November 2010; Available online: https://dblp.org/rec/conf/aaaifs/GorostizaS10.bib (accessed on 7 May 2024).

- Mutlu, B.; Forlizzi, J.; Hodgins, J. A storytelling robot: Modeling and evaluation of human-like gaze behavior. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Genova, Italy, 4–6 December 2006; pp. 518–523. [Google Scholar] [CrossRef]

- Wang, W.; Athanasopoulos, G.; Yilmazyildiz, S.; Patsis, G.; Enescu, V.; Sahli, H.; Verhelst, W.; Hiolle, A.; Lewis, M.; Cañamero, L.C. Natural emotion elicitation for emotion modeling in child-robot interactions. In Proceedings of the WOCCI, Singapore, 19 September 2014; pp. 51–56. Available online: http://www.isca-speech.org/archive/wocci_2014/wc14_051.html (accessed on 7 May 2024).

- Breazeal, C.; Aryananda, L. Recognition of affective communicative intent in robot-directed speech. Auton. Robot. 2002, 12, 83–104. [Google Scholar] [CrossRef]

- Lockerd, A.; Breazeal, C. Tutelage and socially guided robot learning. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 3475–3480. [Google Scholar] [CrossRef]

- Breazeal, C. Toward sociable robots. Robot. Auton. Syst. 2003, 42, 167–175. [Google Scholar] [CrossRef]

- Severinson-Eklundh, K.; Green, A.; Hüttenrauch, H. Social and collaborative aspects of interaction with a service robot. Robot. Auton. Syst. 2003, 42, 223–234. [Google Scholar] [CrossRef]

- Austermann, A.; Esau, N.; Kleinjohann, L.; Kleinjohann, B. Fuzzy emotion recognition in natural speech dialogue. In Proceedings of the 14th International Symposium on Robot and Human Interactive Communication (RO-MAN), Nashville, TN, USA, 13–15 August 2005; pp. 317–322. [Google Scholar] [CrossRef]

- Coeckelbergh, M. You, robot: On the linguistic construction of artificial others. AI Soc. 2011, 26, 61–69. [Google Scholar] [CrossRef]

- Read, R.; Belpaeme, T. How to use non-linguistic utterances to convey emotion in child-robot interaction. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Boston, MA, USA, 5–8 March 2012; pp. 219–220. [Google Scholar] [CrossRef]

- Kruijff-Korbayová, I.; Baroni, I.; Nalin, M.; Cuayáhuitl, H.; Kiefer, B.; Sanna, A. Children’s turn-taking behavior adaptation in multi-session interactions with a humanoid robot. Int. J. Humanoid Robot. 2013, 11, 1–27. Available online: https://schiaffonati.faculty.polimi.it/TFI/ijhr.pdf (accessed on 7 May 2024).

- Sabanovic, S.; Michalowski, M.P.; Simmons, R. Robots in the wild: Observing human-robot social interaction outside the lab. In Proceedings of the IEEE International Workshop on Advanced Motion Control, Istanbul, Turkey, 27–29 March 2006; pp. 596–601. [Google Scholar] [CrossRef]

- Okuno, H.G.; Nakadai, K.; Kitano, H. Social interaction of humanoid robot based on audio-visual tracking. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Cairns, Australia, 17–20 June 2002; pp. 725–735. [Google Scholar] [CrossRef]

- Chella, A.; Barone, R.E.; Pilato, G.; Sorbello, R. An emotional storyteller robot. In Proceedings of the Association for the Advancement of Artificial Intelligence Spring Symposium: Emotion, Personality, and Social Behavior, Stanford, CA, USA, 26–28 March 2008; pp. 17–22. Available online: https://dblp.org/rec/conf/aaaiss/ChellaBPS08.bib (accessed on 7 May 2024).

- Petrick, R. Extending the knowledge-level approach to planning for social interaction. In Proceedings of the 31st Workshop of the UK Planning and Scheduling Special Interest Group, Edinburgh, Scotland, UK, 29–30 January 2014; p. 2. Available online: http://plansig2013.org/ (accessed on 7 May 2024).

- Schuller, B.; Rigoll, G.; Can, S.; Feussner, H. Emotion sensitive speech control for human-robot interaction in minimal invasive surgery. In Proceedings of the 17th International Symposium on Robot and Human Interactive Communication (RO-MAN), Munich, Germany, 1–3 August 2008; pp. 453–458. [Google Scholar] [CrossRef]

- Schuller, B.; Eyben, F.; Can, S.; Feussner, H. Speech in minimal invasive surgery-towards an affective language resource of real-life medical operations. In Proceedings of the 3rd Intern. Workshop on EMOTION (Satellite of LREC): Corpora for Research on Emotion and Affect, Valletta, Malta, 17–23 May 2010; pp. 5–9. Available online: http://www.lrec-conf.org/proceedings/lrec2010/workshops/W24.pdf (accessed on 7 May 2024).

- Romero-González, C.; Martínez-Gómez, J.; García-Varea, I. Spoken language understanding for social robotics. In Proceedings of the 2020 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Ponta Delgada, Portugal, 15–17 April 2020; pp. 152–157. [Google Scholar] [CrossRef]

- Logan, D.E.; Breazeal, C.; Goodwin, M.S.; Jeong, S.; O’Connell, B.; Smith-Freedman, D.; Heathers, J.; Weinstock, P. Social robots for hospitalized children. Pediatrics 2019, 144. [Google Scholar] [CrossRef] [PubMed]

- Hong, J.H.; Taylor, J.; Matson, E.T. Natural multi-language interaction between firefighters and fire fighting robots. In Proceedings of the 2014 IEEE/WIC/ACM International Joint Conferences on Web Intelligence (WI) and Intelligent Agent Technologies (IAT), Warsaw, Poland, 11–14 August 2014; Volume 3, pp. 183–189. [Google Scholar] [CrossRef]

- Fernández-Llamas, C.; Conde, M.A.; Rodríguez-Lera, F.J.; Rodríguez-Sedano, F.J.; García, F. May I teach you? Students’ behavior when lectured by robotic vs. human teachers. Comput. Hum. Behav. 2018, 80, 460–469. [Google Scholar] [CrossRef]

- Fry, J.; Asoh, H.; Matsui, T. Natural dialogue with the Jijo-2 office robot. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Victoria, BC, Canada, 13–17 October 1998; Volume 2, pp. 1278–1283. [Google Scholar] [CrossRef]

- Lee, K.W.; Kim, H.-R.; Yoon, W.C.; Yoon, Y.-S.; Kwon, D.-S. Designing a human-robot interaction framework for home service robot. In Proceedings of the 14th International Symposium on Robot and Human Interactive Communication (RO-MAN), Nashville, TN, USA, 13–15 August 2005; pp. 286–293. [Google Scholar] [CrossRef]

- Hsiao, K.-y.; Vosoughi, S.; Tellex, S.; Kubat, R.; Roy, D. Object schemas for responsive robotic language use. In Proceedings of the ACM/IEEE International Conference on Human Robot Interaction, Amsterdam, The Netherlands, 12–15 March 2008; pp. 233–240. [Google Scholar] [CrossRef]

- Motallebipour, H.; Bering, A. A Spoken Dialogue System to Control Robots. 2002. Available online: https://lup.lub.lu.se/luur/download?func=downloadFile&recordOId=3129332&fileOId=3129339 (accessed on 7 May 2024).

- McGuire, P.; Fritsch, J.; Steil, J.J.; Rothling, F.; Fink, G.A.; Wachsmuth, S.; Sagerer, G.; Ritter, H. Multi-modal human-machine communication for instructing robot grasping tasks. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Lausanne, Switzerland, 30 September–4 October 2002; Volume 2, pp. 1082–1088. [Google Scholar] [CrossRef]

- Zender, H.; Jensfelt, P.; Mozos, O.M.; Kruijff, G.-J.M.; Burgard, W. An integrated robotic system for spatial understanding and situated interaction in indoor environments. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 22–26 July 2007; Volume 7, pp. 1584–1589. Available online: https://dblp.org/rec/conf/aaai/ZenderJMKB07.bib (accessed on 7 May 2024).

- Foster, M.E.; By, T.; Rickert, M.; Knoll, A. Human-robot dialogue for joint construction tasks. In Proceedings of the International Conference on Multimodal Interfaces, Banff, AB, Canada, 2–4 November 2006; pp. 68–71. [Google Scholar] [CrossRef]

- Dominey, P.F. Spoken language and vision for adaptive human-robot cooperation. In Humanoid Robots: New Developments; IntechOpen: London, UK, 2007. [Google Scholar] [CrossRef]

- Ranaldi, L.; Pucci, G. Knowing knowledge: Epistemological study of knowledge in transformers. Appl. Sci. 2023, 13, 677. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: New York, NY, USA; pp. 5998–6008. Available online: https://dblp.org/rec/conf/nips/VaswaniSPUJGKP17.bib (accessed on 7 May 2024).

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar] [CrossRef]

- Perkins, J. Python Text Processing with NLTK 2.0 Cookbook; Packt Publishing Ltd.: Birmingham, UK, 2010; Available online: https://dl.acm.org/doi/10.5555/1952104 (accessed on 7 May 2024).

- Cunningham, H.; Maynard, D.; Bontcheva, K.; Tablan, V. GATE: An architecture for development of robust HLT applications. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 168–175. [Google Scholar] [CrossRef]

- Jurafsky, D.; Martin, J.H. Speech and language processing: An introduction to natural language processing, computational linguistics, and speech recognition. In Prentice Hall Series in Artificial Intelligence; Prentice Hall: Saddle River, NJ, USA, 2009; pp. 1–1024. Available online: https://dblp.org/rec/books/lib/JurafskyM09.bib (accessed on 7 May 2024).

- Fellbaum, C. WordNet. 2010. Available online: https://link.springer.com/chapter/10.1007/978-90-481-8847-5_10#citeas (accessed on 7 May 2024).

- Manning, C.; Surdeanu, M.; Bauer, J.; Finkel, J.; Bethard, S.; McClosky, D. The Stanford CoreNLP natural language processing toolkit. In Proceedings of the Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 22–27 June 2014; pp. 55–60. [Google Scholar] [CrossRef]

- Apache Software Foundation. OpenNLP Natural Language Processing Library. 2017. Available online: http://opennlp.apache.org (accessed on 5 January 2017).

- McCandless, M.; Hatcher, E.; Gospodnetic, O. Lucene in Action: Covers Apache Lucene 3.0; Manning Publications Co.: Shelter Island, NY, USA, 2010. [Google Scholar]

- Cunningham, H. GATE, a general architecture for text engineering. Comput. Humanit. 2002, 36, 223–254. [Google Scholar] [CrossRef]

- Honnibal, M.; Montani, I. spaCy 2: Natural Language Understanding with Bloom Embeddings, Convolutional Neural Networks and Incremental Parsing. 2017. Available online: https://spacy.io (accessed on 5 January 2017).

- Speer, R.; Chin, J.; Havasi, C. ConceptNet 5.5: An open multilingual graph of general knowledge. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31. [Google Scholar] [CrossRef]

- Weide, R. The Carnegie Mellon Pronouncing Dictionary of American English. 2014. Available online: http://www.speech.cs.cmu.edu/cgi-bin/cmudict (accessed on 5 January 2017).

- Wilson, M. MRC psycholinguistic database: Machine-usable dictionary, version 2.00. Behav. Res. Methods Instrum. Comput. 1988, 20, 6–10. [Google Scholar] [CrossRef]

- Davies, M. Word Frequency Data: Most Frequent 100,000 Word Forms in English (Based on Data from the COCA Corpus). 2011. Available online: http://www.wordfrequency.info/ (accessed on 5 January 2017).

- Beth, L.; John, S.; Bonnie, D.; Martha, P.; Timothy, C.; Charles, F. Verb Semantics Ontology Project. 2011. Available online: http://lingo.stanford.edu/vso/ (accessed on 5 January 2017).

- van Esch, D. Leiden Weibo Corpus. 2012. Available online: http://lwc.daanvanesch.nl/ (accessed on 5 January 2017).

- Subirats-Rüggeberg, C. Spanish FrameNet: A frame-semantic analysis of the Spanish lexicon. In Multilingual FrameNets in Computational Lexicography: Methods and Applications; Boas, H., Ed.; Mouton de Gruyter: Berlin, Germany; New York, NY, USA, 2009; pp. 135–162. Available online: https://www.researchgate.net/publication/230876727_Spanish_Framenet_A_frame-semantic_analysis_of_the_Spanish_lexicon (accessed on 5 January 2017).

- Lee, S.; Kim, C.; Lee, J.; Noh, H.; Lee, K.; Lee, G.G. Affective effects of speech-enabled robots for language learning. In Proceedings of the Spoken Language Technology Workshop (SLT), Berkeley, CA, USA, 12–15 December 2010; pp. 145–150. [Google Scholar] [CrossRef]

- Majdalawieh, O.; Gu, J.; Meng, M. An HTK-developed hidden Markov model (HMM) for a voice-controlled robotic system. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 4050–4055. [Google Scholar] [CrossRef]

- Tikhanoff, V.; Cangelosi, A.; Metta, G. Integration of speech and action in humanoid robots: iCub simulation experiments. IEEE Trans. Auton. Ment. Dev. 2011, 3, 17–29. [Google Scholar] [CrossRef]

- Linssen, J.; Theune, M. R3D3: The rolling receptionist robot with double Dutch dialogue. In Proceedings of the Companion of the ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 189–190. [Google Scholar] [CrossRef]

- Mitsunaga, N.; Miyashita, T.; Ishiguro, H.; Kogure, K.; Hagita, N. Robovie-IV: A communication robot interacting with people daily in an office. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5066–5072. [Google Scholar] [CrossRef]

- Sinyukov, D.A.; Li, R.; Otero, N.W.; Gao, R.; Padir, T. Augmenting a voice and facial expression control of a robotic wheelchair with assistive navigation. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), San Diego, CA, USA, 5–8 October 2014; pp. 1088–1094. [Google Scholar] [CrossRef]

- Nikalaenka, K.; Hetsevich, Y. Training Algorithm for Speaker-Independent Voice Recognition Systems Using HTK. 2016. Available online: https://elib.bsu.by/bitstream/123456789/158753/1/Nikalaenka_Hetsevich.pdf (accessed on 7 May 2024).

- Maas, A.; Xie, Z.; Jurafsky, D.; Ng, A.Y. Lexicon-free conversational speech recognition with neural networks. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015; pp. 345–354. [Google Scholar] [CrossRef]

- Graves, A.; Jaitly, N. Towards end-to-end speech recognition with recurrent neural networks. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1764–1772. Available online: https://dblp.org/rec/conf/icml/GravesJ14.bib (accessed on 7 May 2024).

- Xiong, W.; Wu, L.; Alleva, F.; Droppo, J.; Huang, X.; Stolcke, A. The Microsoft 2017 conversational speech recognition system. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5934–5938. [Google Scholar] [CrossRef]

- Saon, G.; Kurata, G.; Sercu, T.; Audhkhasi, K.; Thomas, S.; Dimitriadis, D.; Cui, X.; Ramabhadran, B.; Picheny, M.; Lim, L.-L.; et al. English conversational telephone speech recognition by humans and machines. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 132–136. [Google Scholar] [CrossRef]

- Synnaeve, G.; Xu, Q.; Kahn, J.; Grave, E.; Likhomanenko, T.; Pratap, V.; Sriram, A.; Liptchinsky, V.; Collobert, R. End-to-end ASR: From supervised to semi-supervised learning with modern architectures. In Proceedings of the Workshop on Self-Supervision in Audio and Speech (SAS) at the 37th International Conference on Machine Learning, Virtual Event, 13–18 July 2020; Available online: https://dblp.org/rec/journals/corr/abs-1911-08460.bib (accessed on 7 May 2024).

- Graciarena, M.; Franco, H.; Sonmez, K.; Bratt, H. Combining standard and throat microphones for robust speech recognition. IEEE Signal Process. Lett. 2003, 10, 72–74. [Google Scholar] [CrossRef]

- Lauria, S.; Bugmann, G.; Kyriacou, T.; Klein, E. Mobile robot programming using natural language. Robot. Auton. Syst. 2002, 38, 171–181. [Google Scholar] [CrossRef]

- Sung, J.; Ponce, C.; Selman, B.; Saxena, A. Unstructured human activity detection from RGBD images. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), St. Paul, MN, USA, 14–18 May 2012; pp. 842–849. [Google Scholar] [CrossRef]

- Tenorth, M.; Bandouch, J.; Beetz, M. The TUM kitchen data set of everyday manipulation activities for motion tracking and action recognition. In Proceedings of the International Conference on Computer Vision Workshops (ICCV), Kyoto, Japan, 27 September–4 October 2009; pp. 1089–1096. [Google Scholar] [CrossRef]

- Nehmzow, U.; Walker, K. Quantitative description of robot–environment interaction using chaos theory. Robot. Auton. Syst. 2005, 53, 177–193. [Google Scholar] [CrossRef]

- Hirsch, H.-G.; Pearce, D. The Aurora experimental framework for the performance evaluation of speech recognition systems under noisy conditions. In Proceedings of the ASR2000-Automatic Speech Recognition: Challenges for the New Millenium ISCA Tutorial and Research Workshop (ITRW), Paris, France, 18–20 September 2000; pp. 181–188. [Google Scholar] [CrossRef]

- Krishna, G.; Tran, C.; Yu, J.; Tewfik, A.H. Speech recognition with no speech or with noisy speech. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1090–1094. [Google Scholar] [CrossRef]

- Rashno, E.; Akbari, A.; Nasersharif, B. A convolutional neural network model based on neutrosophy for noisy speech recognition. In Proceedings of the 2019 4th International Conference on Pattern Recognition and Image Analysis (IPRIA), Tehran, Iran, 6–7 March 2019.

- Errattahi, R.; Hannani, A.E.; Ouahmane, H. Automatic speech recognition errors detection and correction: A review. Procedia Comput. Sci. 2018, 128, 32–37.

- Guo, J.; Sainath, T.N.; Weiss, R.J. A spelling correction model for end-to-end speech recognition. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 5651–5655.

- Abella, A.; Gorin, A.L. Method for Dialog Management. U.S. Patent 8,600,747, 3 December 2013. Available online: https://patentimages.storage.googleapis.com/05/ba/43/94a73309a3c9ef/US8600747.pdf (accessed on 7 May 2024).

- Lu, D.; Zhang, S.; Stone, P.; Chen, X. Leveraging commonsense reasoning and multimodal perception for robot spoken dialog systems. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6582–6588.

- Zare, M.; Ayub, A.; Wagner, A.R.; Passonneau, R.J. Show me how to win: A robot that uses dialog management to learn from demonstrations. In Proceedings of the 14th International Conference on the Foundations of Digital Games, San Luis Obispo, CA, USA, 26–30 August 2019; pp. 1–7.

- Jayawardena, C.; Kuo, I.H.; Unger, U.; Igic, A.; Wong, R.; Watson, C.I.; Stafford, R.; Broadbent, E.; Tiwari, P.; Warren, J.; et al. Deployment of a service robot to help older people. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 5990–5995.

- Levit, M.; Chang, S.; Buntschuh, B.; Kibre, N. End-to-end speech recognition accuracy metric for voice-search tasks. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 5141–5144.

- Godfrey, J.J.; Holliman, E. Switchboard-1 release 2 LDC97S62. In Philadelphia: Linguistic Data Consortium; The Trustees of the University of Pennsylvania: Philadelphia, PA, USA, 1993.

- Cieri, C.; Graff, D.; Kimball, O.; Miller, D.; Walker, K. Fisher English training speech part 1 transcripts LDC2004T19. In Philadelphia: Linguistic Data Consortium; The Trustees of the University of Pennsylvania: Philadelphia, PA, USA, 2004.

- Panayotov, V.; Chen, G.; Povey, D.; Khudanpur, S. Librispeech: An ASR corpus based on public domain audio books. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; pp. 5206–5210.

- Xiong, W.; Droppo, J.; Huang, X.; Seide, F.; Seltzer, M.; Stolcke, A.; Yu, D.; Zweig, G. Toward human parity in conversational speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 2410–2423.

- Coucke, A.; Saade, A.; Ball, A.; Bluche, T.; Caulier, A.; Leroy, D.; Doumouro, C.; Gisselbrecht, T.; Caltagirone, F.; Lavril, T.; et al. Snips voice platform: An embedded spoken language understanding system for private-by-design voice interfaces. arXiv 2018, arXiv:1805.10190. Available online: https://dblp.org/rec/journals/corr/abs-1805-10190.bib (accessed on 7 May 2024).

- Bastianelli, E.; Vanzo, A.; Swietojanski, P.; Rieser, V. SLURP: A Spoken Language Understanding Resource Package. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 7252–7262, Association for Computational Linguistics.

- Steinfeld, A.; Fong, T.; Kaber, D.; Lewis, M.; Scholtz, J.; Schultz, A.; Goodrich, M. Common metrics for human-robot interaction. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 33–40.

- Buhrmester, M.; Kwang, T.; Gosling, S.D. Amazon’s Mechanical Turk: A new source of inexpensive, yet high-quality data? In Methodological Issues and Strategies in Clinical Research; American Psychological Association: Washington, DC, USA, 2016; pp. 133–139.

- Chen, Z.; Fu, R.; Zhao, Z.; Liu, Z.; Xia, L.; Chen, L.; Cheng, P.; Cao, C.C.; Tong, Y.; Zhang, C.J. gMission: A general spatial crowdsourcing platform. In Proceedings of the VLDB Endowment, Hangzhou, China, 1–5 September 2014; Volume 7, pp. 1629–1632.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. Available online: https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 7 May 2024).

- Hatori, J.; Kikuchi, Y.; Kobayashi, S.; Takahashi, K.; Tsuboi, Y.; Unno, Y.; Ko, W.; Tan, J. Interactively picking real-world objects with unconstrained spoken language instructions. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 3774–3781.

- Patki, S.; Daniele, A.F.; Walter, M.R.; Howard, T.M. Inferring compact representations for efficient natural language understanding of robot instructions. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6926–6933.

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A lite BERT for self-supervised learning of language representations. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020; Available online: https://openreview.net/forum?id=H1eA7AEtvS (accessed on 7 May 2024).

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.F., Lin, H., Eds.; Curran Associates, Inc.: Glasgow, UK, 2020; Volume 33, pp. 1877–1901. Available online: https://proceedings.neurips.cc/paper/2020/file/1457c0d6bfcb4967418bfb8ac142f64a-Paper.pdf (accessed on 7 May 2024).

- Dai, Z.; Callan, J. Deeper text understanding for IR with contextual neural language modeling. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 985–988.

- Massouh, N.; Babiloni, F.; Tommasi, T.; Young, J.; Hawes, N.; Caputo, B. Learning deep visual object models from noisy web data: How to make it work. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 5564–5571.

- Ronzano, F.; Saggion, H. Knowledge extraction and modeling from scientific publications. In Semantics, Analytics, Visualization. Enhancing Scholarly Data; González-Beltrán, A., Osborne, F., Peroni, S., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 11–25.

- Liu, C. Automatic discovery of behavioral models from software execution data. IEEE Trans. Autom. Sci. Eng. 2018, 15, 1897–1908.

- Liu, R.; Zhang, X.; Zhang, H. Web-video-mining-supported workflow modeling for laparoscopic surgeries. Artif. Intell. Med. 2016, 74, 9–20.

- Kawakami, T.; Morita, T.; Yamaguchi, T. Building Wikipedia ontology with more semi-structured information resources. In Semantic Technology; Wang, Z., Turhan, A.-Y., Wang, K., Zhang, X., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 3–18.

- Liu, R.; Zhang, X. Context-specific grounding of web natural descriptions to human-centered situations. Knowl.-Based Syst. 2016, 111, 1–16.

- Chaudhuri, S.; Ritchie, D.; Wu, J.; Xu, K.; Zhang, H. Learning generative models of 3D structures. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2020; Volume 39, pp. 643–666.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3982–3992.

- Tanevska, A.; Rea, F.; Sandini, G.; Cañamero, L.; Sciutti, A. A cognitive architecture for socially adaptable robots. In Proceedings of the 2019 Joint IEEE 9th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), Oslo, Norway, 19–22 August 2019; pp. 195–200.

- Koppula, H.S.; Saxena, A. Anticipating human activities using object affordances for reactive robotic response. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 14–29.

- MacGlashan, J.; Ho, M.K.; Loftin, R.; Peng, B.; Wang, G.; Roberts, D.L.; Taylor, M.E.; Littman, M.L. Interactive learning from policy-dependent human feedback. In Proceedings of the 34th International Conference on Machine Learning—Volume 70, ICML’17, Sydney, NSW, Australia, 6–11 August 2017; pp. 2285–2294, JMLR.org. Available online: https://dblp.org/rec/conf/icml/MacGlashanHLPWR17.bib (accessed on 7 May 2024).

- Raccuglia, P.; Elbert, K.C.; Adler, P.D.; Falk, C.; Wenny, M.B.; Mollo, A.; Zeller, M.; Friedler, S.A.; Schrier, J.; Norquist, A.J. Machine-learning-assisted materials discovery using failed experiments. Nature 2016, 533, 73.

- Ling, H.; Fidler, S. Teaching machines to describe images with natural language feedback. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Glasgow, UK, 2017; Volume 30.

- Honig, S.; Oron-Gilad, T. Understanding and resolving failures in human-robot interaction: Literature review and model development. Front. Psychol. 2018, 9, 861.

- Ritschel, H.; André, E. Shaping a social robot’s humor with natural language generation and socially-aware reinforcement learning. In Proceedings of the Workshop on NLG for Human-Robot Interaction, Tilburg, The Netherlands, 31 December 2018; pp. 12–16.

- Shah, P.; Fiser, M.; Faust, A.; Kew, C.; Hakkani-Tur, D. FollowNet: Robot navigation by following natural language directions with deep reinforcement learning. In Proceedings of the Third Machine Learning in Planning and Control of Robot Motion Workshop at ICRA, Brisbane, Australia, 21–25 May 2018.

- Li, X.; Serlin, Z.; Yang, G.; Belta, C. A formal methods approach to interpretable reinforcement learning for robotic planning. Sci. Robot. 2019, 4, eaay6276.

- Chevalier-Boisvert, M.; Bahdanau, D.; Lahlou, S.; Willems, L.; Saharia, C.; Nguyen, T.H.; Bengio, Y. BabyAI: A platform to study the sample efficiency of grounded language learning. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018; Available online: https://dblp.org/rec/conf/iclr/Chevalier-Boisvert19.bib (accessed on 7 May 2024).

- Cao, T.; Wang, J.; Zhang, Y.; Manivasagam, S. BabyAI++: Towards grounded-language learning beyond memorization. In Proceedings of the ICLR 2020 Workshop: Beyond Tabula Rasa in RL, Addis Ababa, Ethiopia, 26–30 April 2020; Available online: https://dblp.org/rec/journals/corr/abs-2004-07200.bib (accessed on 7 May 2024).

| NLexe Systems | Application Scenarios | Robot Cognition Level | Human-Robot Role Relations | Human Involvement | Robot Involvement |
|---|---|---|---|---|---|
| Execution Control | action selection, manipulation pose adjustment, navigation planning | minimal | leader-follower | cognitive burden | physical burden |
| Execution Training | assembly process learning, object identification, instruction disambiguation, speech-motion mapping | moderate | leader-follower | heavy cognitive burden | heavy physical burden |
| Interactive Execution | assembly, navigation in unstructured environments | elevated | cooperator-cooperator | cognitive and physical burden | cognitive and physical burden |
| Social Execution | restaurant reception, interpersonal spacing measures, kinesic cues learning in conjunction with oral communication, human-mimetic strategies for object interaction | maximum | cooperator-cooperator | partial cognitive/physical burden | partial cognitive/physical burden |

| | Concise Phrases | Semantic Correlations | Execution Logic | Environmental Conditions |
|---|---|---|---|---|
| Instruction Manner | predefined | predefined | predefined | sensing |
| Instruction Format | symbolic words | linguistic structure | control logic formulas | real-world context |
| Applications | object grasping, trajectory planning, navigation | object grasping, navigation | sequential task planning, hand-pose selection, assembly | safe grasping, daily assistance, precise navigation |
| Advantages | concise, accurate | flexible | flexible | task adaptive |
| Disadvantages | limited adaptability | limited adaptability | ignore real conditions | lack commonsense |
| References | [83,85,86,90,92] | [81,89,96,97,98] | [99,100,101,102,103] | [39,97,104,105,106] |