Abstract

The recent surge of generative artificial intelligence (AI) in higher education presents a fascinating landscape of opportunities and challenges. AI has the potential to personalize education and create more engaging learning experiences. However, the effectiveness of AI interventions relies on well-considered implementation strategies, and the impact of AI platforms in education is largely determined by the particular learning environment and the distinct needs of each student. Consequently, investigating the attitudes of future educators towards this technology is becoming a critical area of research. This study explores the impact of generative AI platforms on students’ learning performance, experience, and satisfaction within higher education, focusing specifically on the experiences of students with varying levels of technological proficiency. A comparative study was conducted with two groups from different academic contexts who, under the same experimental condition, designed, developed, and implemented instructional design projects, using various AI platforms to produce multimedia content tailored to their respective subjects. Undergraduates from two disciplines, Early Childhood Education (n = 32) and Computer Science (n = 34), participated in this study of integrating generative AI platforms into the implementation of educational content. Results indicate that both groups achieved similar learning performance in designing, developing, and implementing instructional design projects. Regarding user experience, the general outcomes were similar across both groups; however, Early Childhood Education students rated the usefulness of AI multimedia platforms significantly higher, whereas Computer Science students reported a slightly higher comfort level with these tools.
In terms of overall satisfaction, Early Childhood Education students expressed greater satisfaction with AI software than their counterparts, acknowledging its importance for their future careers. This study contributes to the understanding of how AI platforms affect students from diverse backgrounds, addressing a gap in knowledge about user experience and learning outcomes. Furthermore, by exploring best practices for integrating AI into educational contexts, it provides valuable insights for educators and scholars seeking to harness AI to enhance educational outcomes.

1. Introduction

Higher education is undergoing a rapid transformation as artificial intelligence (AI) integration accelerates innovation in digital literacy [1]. There is growing interest in fostering AI-supported digital literacy among undergraduates, driven by the need to equip them with the relevant knowledge and skills [2]. Digital literacy encompasses a diverse range of skills that empower individuals not only to access and use information effectively but also to critically evaluate, create, and communicate within the digital realm [3,4]. Key aspects of digital literacy in the context of AI include the following [5,6]: (a) recognizing and interacting with AI systems in various applications, (b) understanding the basic principles of AI, including its capabilities and limitations, and (c) thinking critically about the impact of AI on society and various fields. Digital literacy in the context of AI thus goes beyond basic computer skills to encompass how AI functions, its potential and limitations, and the societal implications of its use [7]. Consequently, educators and scholars play a crucial role in bridging the gap between traditional teaching methods and AI-supported practices, offering more opportunities to enhance students’ overall experience and satisfaction with AI technology in higher education [8,9,10].

Generative AI, an innovative subset of AI technology, represents a paradigm shift in how machines create, innovate, and interact with human-generated content. It has emerged as a powerful tool for researchers and educators, enabling the creation of entirely new content across multiple formats. In text, AI generates realistic and coherent writing, from scientific papers to creative narratives. In the visual domain, generative models produce novel images, from realistic portraits to fantastical landscapes, based on textual descriptions or patterns learned from vast image datasets [11]. AI-powered content creation tools also produce virtual avatars, videos, voices, and animations. Multimedia composition benefits as well, with models creating new pieces in various styles by analyzing existing features and identifying underlying structures [12]. Technically, generative AI often relies on deep learning models trained on massive datasets. These models learn the statistical relationships within the data, allowing them to create new outputs that are statistically probable and often indistinguishable from real data [13]. This data-driven approach is crucial, as the quality and quantity of training data heavily influence the quality of the generated outputs. Generative AI therefore goes beyond mere copying: it uses statistical learning to create novel outputs that adhere to the learned patterns rather than reproducing existing examples [14].

However, many challenges remain in balancing multiple teaching styles, managing student needs, maintaining motivation, and creating interactive learning environments with generative AI platforms. Additionally, ensuring that young learners develop the necessary digital literacy and 21st-century skills requires a comprehensive approach that encompasses design, development, and use across various learning subjects [15,16,17]. Current research aims to inform the design of developmentally appropriate AI tools and learning experiences. Undergraduates majoring in education, for example, often lack the technological proficiency to integrate these tools into their future classrooms and therefore need to identify AI platforms and learn to use them purposefully. This would enable them to create engaging and effective ways of introducing young learners to fundamental AI concepts [13]. The opposite situation arises for undergraduates with stronger technological backgrounds. For instance, Computer Science students, whose proficiency lies in the technical intricacies of algorithms and sophisticated coding structures, are often unfamiliar with school contexts and with nurturing social–emotional development, both critical components of AI-supported educational practices [17]. This discrepancy highlights a potential disconnect between the technical domain of AI development and the learning approaches used in higher education [4,5]. Consequently, it is crucial to explore how the technical expertise of Computer Science students can be effectively combined with an understanding of the unique needs of young learners.

As generative AI becomes increasingly integrated into daily life, the rapid proliferation of AI platforms in higher education has shown significant potential to enhance students’ academic performance, as well as their overall experience and satisfaction, regardless of their prior technology experience [18,19,20]. Recent studies have demonstrated that AI tools can positively influence students’ learning outcomes by providing personalized feedback, adaptive learning pathways, and data-driven insights that help tailor educational experiences to individual needs. For example, AI-driven platforms can enhance academic performance by offering real-time support and resources that address specific learning gaps, thereby fostering a deeper understanding of course material [12,15]. User experience can also be improved through AI interfaces that streamline administrative tasks, facilitate communication between students and educators, and provide engaging, interactive learning environments. These advancements not only boost academic performance but also increase student satisfaction by making learning more accessible, efficient, and enjoyable [17,18,19,20,21]. Therefore, integrating AI technologies into educational settings is not just a matter of technological enhancement; it is also about creating a more supportive, personalized, and motivating learning experience aligned with the educational goals of the 21st century [22].

While research suggests AI holds promise for enhancing educational outcomes [9,16,22], a research gap remains in understanding its impact on students’ academic performance, user experience, and overall satisfaction across diverse educational contexts and backgrounds. To address the challenges and foster engaging and equitable learning experiences using AI platforms for undergraduates, there is a need to develop appropriate strategies for tutors to integrate technology into their teaching practices [23]. Identifying any alignment or misalignment between the Early Childhood Education curriculum and the Computer Science undergraduate curriculum is crucial for institutions and universities to ensure a smooth educational transition for students.

This study aims to bridge a critical knowledge gap through a comparative design, examining how students with disparate technological proficiencies interact with and are affected by AI platforms in higher education. It further investigates whether AI use improves academic performance and satisfaction in diverse instructional design projects involving the development of educational videos, images, and animations. By comparing the achievements of Early Childhood Education (ECE) undergraduates with those of Computer Science (CS) undergraduates, educators and scholars can gain valuable insights into the critical competencies needed at various stages of academic development and tailor curricula that better meet the evolving needs of students.

2. Review of Recent Literature

As AI continues to permeate various aspects of higher education, it is becoming crucial to assess its impact on student performance and satisfaction, especially given students’ differing levels of experience with this technology. Empirical studies have provided insights into these intertwined domains, identified existing research gaps and future directions, and highlighted the diverse ways in which AI affects students’ academic performance. Maurya, Hussain, and Singh [22] addressed the challenge of student placement in engineering institutions by developing machine learning classifiers that predict placements in the IT industry from academic performance metrics. The classifiers used academic records from high school to graduation, together with backlog data. The authors tested various algorithms, including Support Vector Machine, Gaussian Naive Bayes, and neural networks, and compared their accuracy scores, confusion matrices, heatmaps, and classification reports, emphasizing the classifiers’ effectiveness in placement prediction. Wang, Sun, and Chen [20] explored the impact of AI capabilities in higher education institutions on students’ learning performance, self-efficacy, and creativity. By analyzing data resources, technical skills, teaching applications, and innovation consciousness, they established a dual mediation model showing that AI capabilities positively affect learning outcomes by boosting students’ creativity and confidence. Jiao et al. [19] tackled the challenge of predicting academic performance in online education by developing an AI-enabled model based on learning process data and summative assessments. Using evolutionary computation techniques, the model showed that knowledge acquisition, class participation, and summative performance are key performance indicators, whereas prior knowledge is less influential, positioning it as a valuable tool for online education. Lastly, Dekker et al. [18] proposed integrating AI chatbots for mental health with life-crafting interventions aimed at enhancing academic performance and retention. By combining personalized follow-up and interactive goal setting through chatbots with curriculum-based life-crafting, the approach aims to address the interconnected issues of student mental health and academic success. The study also emphasized the need for user-friendly design and technology acceptance to maximize intervention effectiveness.

Recent literature explores how AI tools are shaping the student experience in higher education. It emphasizes the importance of responsible integration, the role of cognitive and psychological factors in learning, and the need to consider individual student characteristics when implementing innovative technologies. For instance, Yang, Wei, and Pu [24] proposed a methodology to measure and improve user experience in mobile application design using artificial intelligence-aided design. Their approach used projected application pages to train neural networks that aggregate user behavior features for optimization, and its efficiency was verified in a social communication app. Sunitha [25] explored the potential impact of AI on user interface and user experience design, examining whether AI can replace human designers or instead enhance their capabilities by automating tasks such as user behavior analysis, testing, and prototype generation. The study also discussed AI’s limitations in understanding user emotions and adapting to unforeseen needs. Padmashri et al. [23] investigated the role of AI in user experience design through a study guided by the design thinking process. Their results showed that AI technologies empowered user experience professionals to create user-centric solutions that can enhance design thinking and user engagement.

The evolving landscape of higher education is witnessing the emergence of AI chatbots, raising questions about their impact on student learning and overall satisfaction. Drawing on self-determination theory, Xia et al. [21] examined how prior technical (AI) and disciplinary (English) knowledge affect self-regulated learning (SRL), as mediated by the satisfaction of basic psychological needs (autonomy, competence, and relatedness). The study found that prior English knowledge directly affects SRL, whereas AI knowledge does not. Satisfaction of the needs for autonomy and competence mediates the relationships between both types of knowledge and SRL, whereas relatedness does not. These findings underscore the importance of cognitive engagement and need satisfaction in fostering effective SRL. Saqr, Al-Somali, and Sarhan [17] analyzed the acceptance and satisfaction of AI-driven e-learning platforms (Blackboard, Moodle, Edmodo, Coursera, and edX) among Saudi university students, focusing on the perceived usefulness and ease of use of AI-based social learning networks, personal learning portfolios, and personal learning environments. The results showed that perceived usefulness and ease of use had significant positive effects on student satisfaction, which in turn affected attitudes toward e-learning but not intentions to use it. Fakhri et al. [26] investigated the impact of ChatGPT on students’ attitudes, satisfaction, and competence in higher education. Their findings showed that while reliability of and satisfaction with ChatGPT positively influence learning outcomes, its perceived impact on competence is not significant. Frequent use diminishes these positive effects, highlighting the complexity of integrating AI tools like ChatGPT into educational settings, as initial positive perceptions may wane over time.

Despite the promising potential of AI to enhance educational outcomes [20,22], a significant research gap remains in understanding student learning, satisfaction, and user experience, particularly among students with varying levels of technological proficiency. This lack of knowledge hinders efforts to fully harness AI’s potential, especially with respect to educators with limited technological backgrounds, who are crucial for effectively integrating AI into diverse classroom settings. Further exploration is therefore needed of the sustained effects of AI platforms, cultural variations in their implementation, and how these tools can be integrated with existing pedagogical approaches to maximize their benefits for all undergraduates.

3. Materials and Methods

3.1. The Present Study

The literature above reveals several gaps in understanding the interconnected impact of AI technology on academic performance, satisfaction, and user experience. Fakhri et al. [26] and Xia et al. [21] examined the effects of AI tools such as ChatGPT and conversational chatbots on learning outcomes and self-regulated learning, and Fakhri et al. [26] further indicated that initial positive effects may diminish with increased usage frequency, suggesting a need for longitudinal studies to assess sustained impacts over time. Yang et al. [24] focused on AI’s role in improving user experience through deep learning models, but their research is primarily technical. Padmashri et al. [23] suggested that AI can support the design thinking process to improve user experience, but there is limited research on how AI tools can be integrated into regular teaching practices to enhance both students’ academic performance and satisfaction. As suggested by Sunitha [25], comprehensive studies that combine the technical aspects of user experience with its psychological and emotional dimensions are lacking. Saqr et al. [17] emphasized the varying impacts of AI-driven e-learning platforms on student satisfaction and perceived usefulness within higher education contexts. Studies are therefore needed that explore how different educational contexts and individual characteristics influence the effectiveness of AI tools in improving academic performance and user experience. Moreover, existing research often treats AI platforms as standalone interventions rather than as tools integrated into students’ broader educational contexts.

To address this research gap, this study comparatively analyzes two groups of undergraduates with different technological backgrounds who used AI platforms in AI-powered instructional design projects, assessing the platforms’ impact on students’ academic performance and satisfaction. This study investigates the following research questions:

- RQ1: Do ECE undergraduates who use generative AI platforms achieve higher academic performance in designing, developing, and implementing instructional design projects than their CS counterparts?

- RQ2: Do ECE undergraduates who use generative AI platforms report different user experiences (usefulness of AI tools, comfort level, challenges, and utilization) in their projects than their CS counterparts?

- RQ3: Do ECE undergraduates report higher overall satisfaction with AI-powered instructional design projects than CS undergraduates?

Using a comparative design, this study examines how undergraduate students from different educational contexts (ECE vs. CS) and technological backgrounds learn to use AI platforms. More specifically, it aims (a) to identify the impact of AI platforms on the learning performance, user experience, and satisfaction of students with different levels of technological proficiency as they design, develop, and implement instructional design projects, and (b) to explore best practices for integrating AI into diverse learning subjects to optimize overall educational outcomes. Focusing on the development of educational videos and animations, this study assesses potential changes in students’ attitudes and behaviors toward learning about AI capabilities. The study contributes to a deeper understanding of how to use AI tools effectively in different educational settings and may inform further research, best practices for integrating AI technology in education, and related educational policies and training programs.

3.2. Research Context

A comparative study design is well suited to gaining a more comprehensive understanding of AI’s impact on higher education. While existing research explores the potential of AI in education, a critical gap remains in understanding how students with varying technological backgrounds interact with and learn from these platforms. Following the guidelines of Campbell and Stanley [27], this comparative approach addresses that gap directly by comparing the experiences of two distinct student groups: ECE students, who may have less experience with technology, and CS students, who are likely to have stronger skills in technologically advanced environments. By exposing both groups to AI platforms in the same task, namely designing, developing, and implementing instructional design projects, the present study explores whether these platforms improve students’ academic performance and satisfaction. Through data collection methods such as the analysis of project work, the study assesses each group’s learning outcomes, satisfaction with the AI tools, and user experience challenges.

3.3. Participants

A total of 66 Greek participants, aged 19 to 44 years (M = 21.29, SD = 5.72), were enrolled in the study. They were undergraduate students from two of the largest universities in Greece, majoring in instructional design and educational technologies. Specifically, 32 students were from the Department of Early Childhood Education (ECE), and 34 were from the Department of Computer Science (CS), which also provides training in pedagogical and teaching proficiency.

All participants had at least basic digital skills; none were complete beginners. Most were familiar with generative AI tools. Specifically, 38% (25 participants) had used AI for image generation, and 48% (32 participants) had experience with AI-generated videos. Approximately 88% reported understanding generative AI and had used tools such as ChatGPT and Google’s Gemini. Notably, 88% believed that integrating AI into teaching materials was essential for improved learning outcomes.

Standardizing the recording of demographic attributes and generative AI usage aimed to reduce bias, enhance internal validity, and minimize the influence of extraneous variables. Understanding participants’ prior exposure to, or unfamiliarity with, these tools is crucial for informing technology-driven initiatives and assessments.

3.4. Instructional Design Context

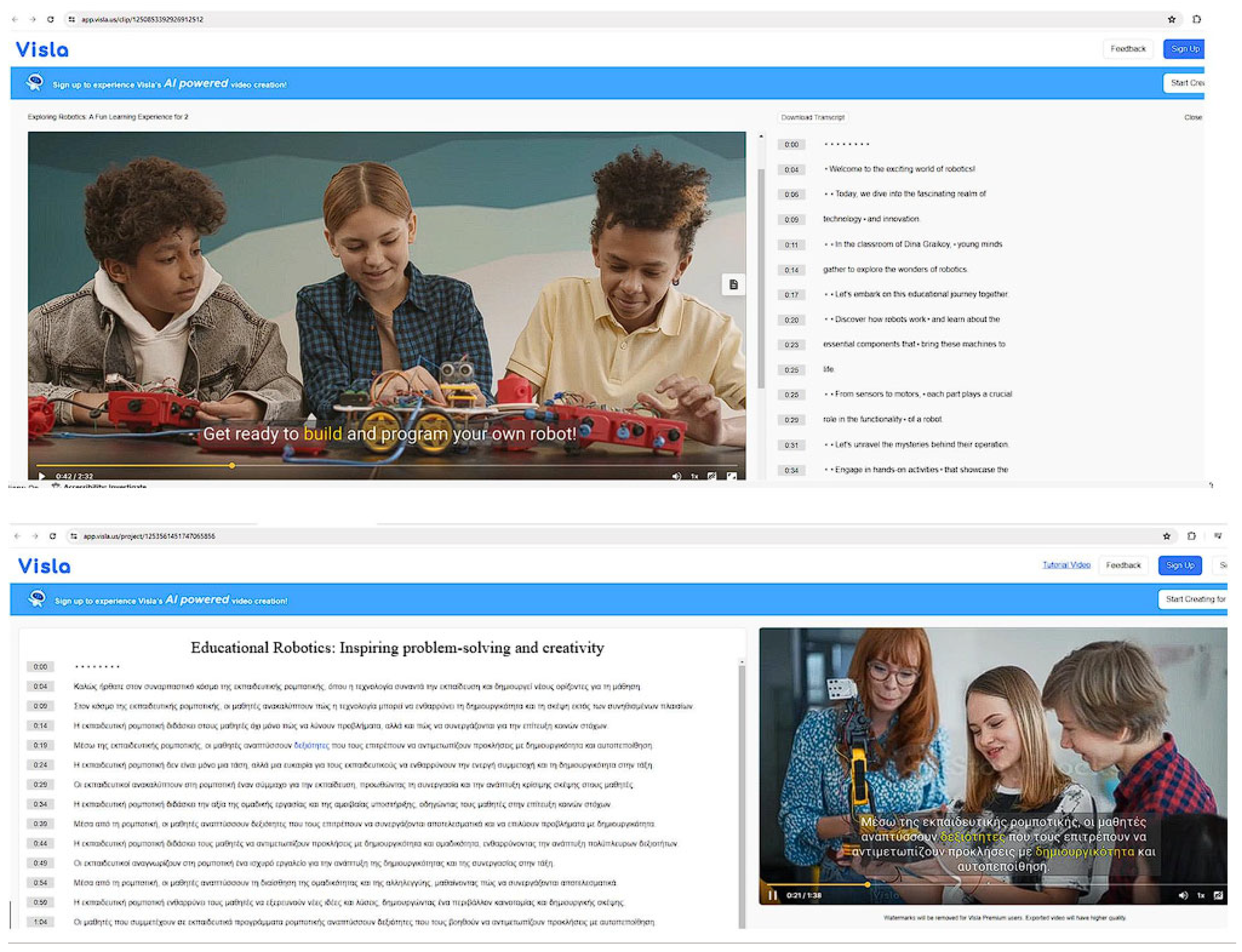

In today’s digital era, undergraduates need a solid foundation in AI concepts, and curricula should include tasks that build it. Such tasks can equip them to critically evaluate and effectively use generative AI chatbots in their respective fields. ECE and CS undergraduates who want to work in public or private school settings can engage in valuable research using generative AI chatbots, such as ChatGPT and Google’s Gemini, to assess the effectiveness of existing AI-supported applications or to design frameworks for integrating AI into digital literacy courses. In this vein, all undergraduates can gain practical skills in building AI-supported educational tools tailored to diverse age groups and learning objectives. This could involve designing and developing educational videos and images that incorporate interactive digital elements to enhance learners’ digital literacy across various domains. Project activities and goals were implemented with AI video generator platforms, such as Sudowrite (https://www.sudowrite.com, accessed on 10 June 2024), Visla (https://www.visla.us, accessed on 10 June 2024), Jasper (https://www.jasper.ai, accessed on 10 June 2024), Animaker (https://www.animaker.com, accessed on 10 June 2024), and Lumen5 (https://lumen5.com, accessed on 10 June 2024), and with AI image generators, such as Lumiere3D (https://www.lumiere3d.ai, accessed on 8 July 2024), Craiyon (https://www.craiyon.com, accessed on 8 July 2024), Fotor (https://www.fotor.com, accessed on 8 July 2024), PIXLR (https://pixlr.com, accessed on 8 July 2024), Deep Dream Generator (https://deepdreamgenerator.com, accessed on 8 July 2024), and Image Creator from Microsoft Designer (https://www.bing.com/images/create, accessed on 8 July 2024), for image creation and development that can be integrated into ECE and CS curricula. Some indicative projects are depicted in Figure 1 below.

Figure 1.

Designing, developing, and implementing an AI-powered video using Visla.

The target audience was undergraduates who would become educators, curriculum developers, and instructors interested in integrating AI tools into education. Some indicative activities and objectives are described below.

- A. Learning activities:

1. Exploring the AI tools:

- Research and compare features: Assign each participant one of the AI tools mentioned above (Sudowrite, Jasper, ShortlyAI, Lumiere3D, Lumen5, Animaker AI). For this study, participants were given time to experiment with one or two of the tools and were encouraged to create examples of how these tools could be used for educational purposes, and then to discuss their creations, focusing on their learning potential and possible challenges.

2. Designing AI-powered learning experiences:

- Identify curriculum topics: Brainstorm specific topics within ECE and CS that could benefit from AI-generated content, considering areas such as storytelling, coding basics, or creative expression.

- Storyboard development: Divide participants into small groups, each assigned a chosen topic, with a twofold purpose: (a) to create a storyboard outlining how they would use AI tools to develop an engaging and educational learning experience on their topic and (b) to consider factors such as interactivity and assessments aligned with learning objectives in their educational discipline.

- Presentation and peer feedback: Each group presents its storyboard, explaining its rationale and design choices, and participants discuss the feasibility and effectiveness of each approach.

- B. Learning projects:

- Content creation: Participants generate video and image presentations and create artifacts on learning subjects drawn from the ECE and CS curricula, using the AI platforms described earlier in the “Instructional design context” section. These projects aim to explore how AI can improve video editing by automating tasks such as scene segmentation, color grading, and audio enhancement. This not only contributes to formal professional development by building new skills and knowledge but also offers informal benefits by allowing participants to explore the potential of AI in this field.

- Student motivation: The project area aligns with departmental interests, fostering collaboration and knowledge sharing beyond individual roles. This facilitates the creation of intra-departmental connections and the exchange of ideas.

- Evaluating AI-generated content creation: This project area proposes investigating the current state of AI-powered content creation tools, including virtual avatars, video generation models, voices, and animations. This evaluation could assess the quality, effectiveness, and potential applications of these tools within educational settings, along with their potential impact on existing workflows.

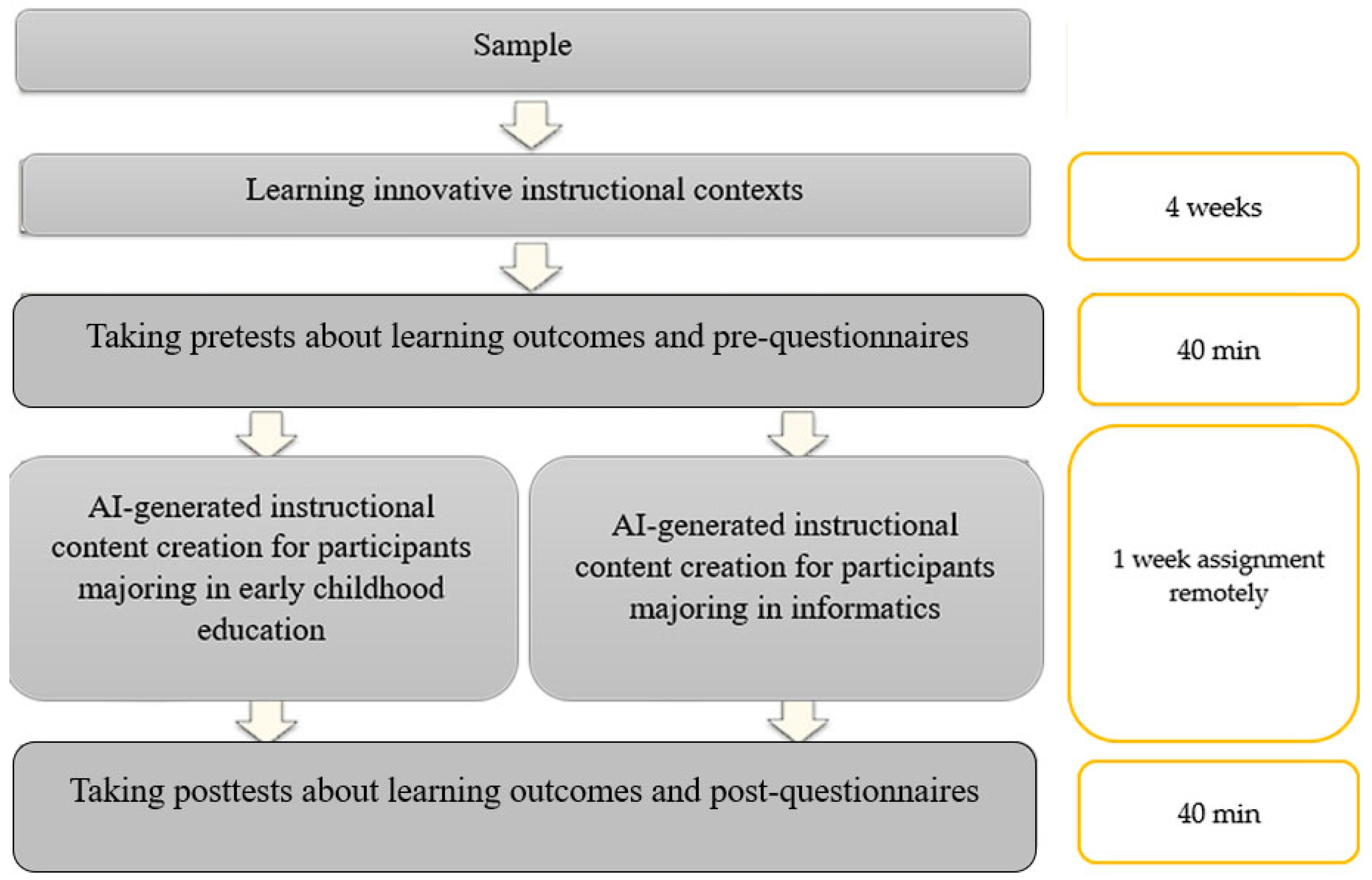

3.5. Experimental Procedure

The current study followed the principles outlined by Campbell and Stanley [27] for a comparative study with a pretest–posttest design. Adding knowledge and attitude measures would strengthen the assessment of change and help isolate the effect of AI integration. Before the experiment, both groups participated in conventional learning activities for 4 weeks. Participants then completed a series of assessments to measure their academic performance. They subsequently completed a 1-week remote assignment in which they had to incorporate AI platforms. Following these activities, participants completed questionnaires to gauge their satisfaction and user experience with the AI tools (Figure 2).

Figure 2.

Experimental setup.

None of the participants had previously taken part in an experimental setting in which AI tools were integrated into digital learning environments tailored to the requirements of their academic disciplines. This design allows a direct comparison of the intervention’s impact across distinct educational contexts and supports an assessment of its efficacy in different academic domains. Although the absence of a control group limits the ability to establish causal relationships definitively, this adapted research design can still provide valuable insights into the effects of integrating AI-generated educational content within specific academic contexts [27]. It allows changes in learning outcomes and behavioral intentions to be compared over time within each group, contributing to our understanding of the potential benefits of AI integration in education.
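Where matched pre- and post-intervention scores exist for the same students, the within-group change described above could be summarized with a mean gain and a paired t statistic. The Python sketch below is illustrative only: the helper name `paired_change` and the scores are invented rather than taken from the study, and the source does not state which within-group test the authors used. A p-value would additionally require the t distribution (for example, from SciPy).

```python
import math
import statistics


def paired_change(pre, post):
    """Mean pre-to-post gain and paired t statistic for one group of students."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("pre and post must be equal-length lists with at least two scores")
    gains = [after - before for before, after in zip(pre, post)]  # per-student change
    mean_gain = statistics.mean(gains)
    sd_gain = statistics.stdev(gains)  # sample SD of the gains (n - 1 denominator)
    t_stat = mean_gain / (sd_gain / math.sqrt(len(gains)))
    return mean_gain, t_stat, len(gains) - 1  # degrees of freedom = n - 1


# Invented scores on a 0-10 scale for five students (not study data).
pre = [5.0, 6.0, 5.5, 7.0, 6.5]
post = [6.0, 7.5, 6.0, 8.0, 7.0]
gain, t, df = paired_change(pre, post)
print(f"mean gain = {gain:.2f}, t({df}) = {t:.2f}")
```

The same function would be applied separately to each group, which matches the within-group framing above rather than a between-group comparison.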

3.6. Ethical Considerations

This study prioritized the ethical treatment of participants by upholding their rights and well-being. Key considerations included informed consent, confidentiality, and anonymity. Participation was entirely voluntary, and comprehensive information about the study’s aims and potential impacts was provided to all participants as part of the informed consent process. This transparency ensured that their decision to participate was informed and freely given. A detailed consent form also addressed the potential side effects of AI-generated instructional content, data usage under General Data Protection Regulation (GDPR) guidelines, and the right to withdraw without consequences. To protect participant privacy and anonymity, all data were kept confidential throughout the analysis process. This commitment to confidentiality aligns with ethical research principles and reflects the study’s adherence to high privacy standards [28].

3.7. Measuring Tools

The current study employed structured and validated questionnaires to gather quantitative data related to academic performance, user experience, and perceived learning outcomes in ECE and CS. Participants responded to all questionnaires using a 5-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree).

A 7-item questionnaire was used to evaluate students’ achievement of the learning objectives outlined in the “Instructional design context” section. Four items assessed the learning activities (A objectives), and three focused on the learning projects (B objectives). The evaluation compared the performance of the two groups in relation to the study’s intervention.
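A minimal sketch of one way to compare the two groups, assuming each student’s seven item ratings are first averaged into a single score. It computes Welch’s t statistic (which does not assume equal group variances), the Welch-Satterthwaite degrees of freedom, and Cohen’s d with a pooled standard deviation. The function names and scores are hypothetical, and this is not necessarily the analysis used in the study; for ordinal Likert data, a rank-based test such as the Mann-Whitney U test is a common alternative.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent samples."""
    se_a = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    se_b = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (len(a) - 1) + se_b ** 2 / (len(b) - 1))
    return t, df


def cohens_d(a, b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)


# Invented per-student mean scores on the 1-5 scale (not study data).
ece = [4.0, 4.5, 3.5, 4.0, 5.0]
cs = [3.5, 4.0, 3.0, 4.0, 3.5]
t, df = welch_t(ece, cs)
d = cohens_d(ece, cs)
print(f"Welch t = {t:.2f}, df = {df:.1f}, Cohen's d = {d:.2f}")
```

Turning t and df into a p-value needs the t distribution’s CDF, which the standard library does not provide; SciPy’s `ttest_ind` with `equal_var=False` reports it directly.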

The course instructor used a rubric with several criteria to assess student learning outcomes, yielding a maximum score of 10. The rubric demonstrated good internal consistency (Cronbach’s alpha, α = 0.813), indicating that its items are interrelated and consistent with a single latent construct related to learning outcomes. However, Cronbach’s alpha measures internal consistency, not validity, that is, whether the rubric accurately measures actual learning outcomes. To establish validity, we cross-referenced rubric scores with performance on external assessments, such as exams or practical projects.
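For reference, Cronbach’s alpha for k items is alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores). The sketch below computes it from a respondents-by-items score matrix using only the Python standard library; the function name and the four-respondent data set are invented for illustration and are not the study’s rubric data.

```python
import statistics


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("alpha needs at least two items and two respondents")
    item_columns = list(zip(*scores))  # transpose: one tuple of scores per item
    item_variance_sum = sum(statistics.variance(col) for col in item_columns)
    total_variance = statistics.variance([sum(row) for row in scores])  # variance of summed scores
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Invented ratings: four respondents x three rubric criteria (not study data).
scores = [
    [2, 3, 3],
    [4, 4, 5],
    [3, 3, 4],
    [5, 4, 5],
]
alpha = cronbach_alpha(scores)
print(f"alpha = {alpha:.3f}")
```

Sample variances are used throughout; because the same denominator appears in the numerator and denominator of the ratio, population variances would give the same alpha.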

High inter-rater reliability was established using intraclass correlation coefficients (two-way mixed model, absolute agreement, single measures). Any discrepancies were resolved following the procedures outlined by Barchard and Pace [29] to minimize measurement error.
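The single-measures, absolute-agreement ICC from a two-way layout can be computed from the mean squares of a subjects-by-raters table: ICC = (MS_R - MS_E) / (MS_R + (k - 1) MS_E + (k / n)(MS_C - MS_E)), where n is the number of rated projects, k the number of raters, and MS_R, MS_C, and MS_E the row (subject), column (rater), and residual mean squares. The sketch below is a hypothetical illustration with invented rubric scores from two raters; it returns only the point estimate, whereas statistical packages typically also report confidence intervals.

```python
import statistics


def icc_a1(ratings):
    """Single-measures, absolute-agreement ICC from a two-way layout.

    ratings: list of n subjects (e.g., student projects), each a list of k rater scores.
    """
    n, k = len(ratings), len(ratings[0])
    all_scores = [x for row in ratings for x in row]
    grand_mean = statistics.mean(all_scores)
    row_means = [statistics.mean(row) for row in ratings]  # per-subject means
    col_means = [statistics.mean(col) for col in zip(*ratings)]  # per-rater means
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for x in all_scores)
    ss_error = ss_total - ss_rows - ss_cols  # residual (subject x rater interaction)
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error))


# Invented rubric scores (0-10) from two raters for five projects (not study data).
ratings = [[8, 7], [6, 6], [9, 8], [5, 5], [7, 7]]
icc = icc_a1(ratings)
print(f"ICC = {icc:.3f}")
```

The (k / n)(MS_C - MS_E) term is what makes this an absolute-agreement index: a rater who scores every project systematically higher lowers the ICC, which a consistency-only ICC would ignore.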

In addition, this study explored user experience measurement through the User Experience Questionnaire (UEQ) validated by Law et al. [30]. The UEQ offers a comprehensive approach to user experience evaluation, employing a questionnaire format that allows users to directly express their feelings, impressions, and attitudes formed during their interaction with a new product. The UEQ consists of six distinct scales encompassing various facets of user experience:

- Attractiveness: Measures the user’s overall impression of the product, whether they find it appealing or not.

- Efficiency: Assesses how easy and quick it is to use the product and how well organized the interface is.

- Perspicuity: Evaluates how easy it is to understand how to use the product and get comfortable with it.

- Dependability: Focuses on users’ feelings of control during interaction, the product’s security, and whether it meets their expectations.

- Stimulation: Assesses how interesting and enjoyable the product is to use and whether it motivates users to keep coming back.

- Novelty: Evaluates how innovative and creative the product’s design is and how much it captures the user’s attention.

The UEQ demonstrated good internal consistency, with a Cronbach’s alpha coefficient of 0.82, exceeding the commonly used acceptability threshold discussed by Cortina [31].

To assess learning satisfaction, the study adapted a questionnaire originally developed by Wei & Chou [32]. This instrument consists of 10 items measuring three key constructs: Learner Control (α = 0.78), Motivation for Learning (α = 0.79), and Self-directed Learning (α = 0.83).

In summary, while Cronbach’s alpha provides evidence of the internal consistency of our measurement instruments, further validation through external assessments is needed to confirm that they accurately measure learning outcomes. Combining both forms of evidence supports a more robust evaluation of the effectiveness of AI-generated educational tools in enhancing students’ learning experiences.

3.8. Data Collection and Analysis

Participant anonymity was ensured through the authors’ data-processing procedures. All questionnaires were self-reported and administered either on paper or via Google Docs. Completion time was capped at 40 min for all participants so that they were not categorized as novices or experts, ensuring equal opportunity and unbiased responses regardless of experience. The two authors also developed weekly lesson plans that guided the entire process. Two key factors influenced student participation: (1) alignment with the standard 13-week university spring semester calendar (February–June 2024), and (2) the introduction of AI-generated video and image tools as alternative platforms for completing various learning projects.

The researchers employed a multi-step data analysis process to ensure a comprehensive examination of the study’s variables. First, the subscales were translated into Greek, the participants’ native language, following Brislin’s [33] back-translation method. Questionnaire responses were then coded on a standardized 5-point Likert scale, the Likert-scale responses were analyzed, and overall scores were calculated based on correct answers. Descriptive statistics (mean and standard deviation) were then applied to analyze the overall scores.
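Coding responses on a 5-point scale and summarizing scale scores with means and standard deviations can be sketched as follows. Averaging the items of each subscale is an assumption here (the text does not state whether item sums or item means were used), and the item-to-subscale mapping, the helper `scale_score`, and the responses are all hypothetical.

```python
from statistics import mean, stdev


def scale_score(responses, item_indices):
    """Mean of one participant's 1-5 Likert responses on the given items."""
    values = [responses[i] for i in item_indices]
    if any(v not in (1, 2, 3, 4, 5) for v in values):
        raise ValueError("responses must be coded 1-5")
    return mean(values)


# Hypothetical: three participants, four items; items 0-2 form one subscale
participants = [[4, 5, 4, 3], [3, 4, 4, 4], [5, 5, 5, 4]]
SUBSCALE_ITEMS = (0, 1, 2)  # illustrative item-to-subscale mapping

scores = [scale_score(p, SUBSCALE_ITEMS) for p in participants]
group_mean, group_sd = mean(scores), stdev(scores)  # stdev uses n - 1
print(f"M = {group_mean:.2f}, SD = {group_sd:.2f}")  # M = 4.33, SD = 0.67
```

`statistics.stdev` returns the sample standard deviation (denominator n − 1), which matches the SD conventionally reported alongside t-tests.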

Finally, IBM® Statistical Package for the Social Sciences (SPSS® version 27) software was utilized to conduct the data analysis, ensuring a comprehensive examination and accurate interpretation of the data.

3.9. Data Integrity and Reliability

This research employed rigorous measures to ensure the validity and reliability of its findings. To confirm that results from different data collection methods aligned, the primary researcher and the first author used diverse data sources [34], conducting t-tests alongside side-by-side comparisons within these sources. To minimize bias and verify the accuracy of the data analysis, a second rater analyzed all data and participated in coding, as proposed by Marsden [35]. Both researchers (the authors) assessed the effectiveness of the rubric’s content and structure at each procedural level. The latter provided insightful feedback on interpreting the results and drawing conclusions from the data analysis. Intraclass correlation coefficients (two-way mixed model, absolute agreement, single measures) indicated high inter-rater reliability. Discrepancies were addressed and resolved following Barchard and Pace [29], keeping measurement error to a minimum.

4. Results

The results of this study are presented in several sections, focusing on different aspects of the collected data. Initially, we provide a comprehensive overview of the demographic characteristics of the participants, followed by detailed analyses of their academic performance, user experience, and overall satisfaction with AI-generated educational tools.

The demographic analysis revealed significant differences between the two groups (Table 1). The ECE group (n = 32) had a higher mean age (M = 22.78, SD = 7.91) than the CS group (n = 34; M = 19.88, SD = 1.23). This age difference was statistically significant (t(64) = 2.11, p < 0.05), indicating that ECE students in this sample were older than their CS counterparts. However, closer analysis showed that this difference was driven largely by four ECE students who were over 30 years old.

Table 1.

Demographics and previous experience.
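The age comparison reported above can be reproduced from the group summary statistics alone using Student's pooled-variance t-test. The helper name `pooled_t` is hypothetical; the inputs are the means, standard deviations, and group sizes given in the text.

```python
from math import sqrt


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t from summary statistics (equal variances)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Age summary statistics as reported for the ECE and CS groups
t, df = pooled_t(22.78, 7.91, 32, 19.88, 1.23, 34)
print(f"t({df}) = {t:.2f}")  # t(64) = 2.11
```

When the two standard deviations differ as much as they do here, Welch's unequal-variance test is often computed as a cross-check.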

Experience with AI-generated images differed between the groups. Among ECE students, 56% had prior experience with AI-generated images (M = 0.56, SD = 0.504), whereas only 21% of CS students reported similar experience (M = 0.21, SD = 0.410). This difference was statistically significant (t(64) = 3.16, p < 0.01), indicating that ECE students were more likely than CS students to have interacted with AI-generated images.
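The percentages and the M and SD values above are consistent with coding prior experience as a 0/1 indicator, whose mean equals the proportion of students with experience. The sketch below assumes group counts of 18 of 32 (ECE) and 7 of 34 (CS); these counts are inferred by rounding the reported percentages, not taken from the study's data. With them, a pooled-variance t-test reproduces the reported descriptive statistics and test statistic.

```python
from math import sqrt
from statistics import mean, stdev

# Counts inferred (an assumption) from the rounded percentages: 18/32 ECE, 7/34 CS
ece = [1] * 18 + [0] * 14   # 1 = has used AI image generators, 0 = has not
cs = [1] * 7 + [0] * 27

m1, s1, n1 = mean(ece), stdev(ece), len(ece)
m2, s2, n2 = mean(cs), stdev(cs), len(cs)

# Student's pooled-variance t-test on the 0/1 indicator
df = n1 + n2 - 2
pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
t = (m1 - m2) / sqrt(pooled_var * (1 / n1 + 1 / n2))

print(f"ECE: M = {m1:.2f}, SD = {s1:.3f}")   # ECE: M = 0.56, SD = 0.504
print(f"CS:  M = {m2:.2f}, SD = {s2:.3f}")   # CS:  M = 0.21, SD = 0.410
print(f"t({df}) = {t:.2f}")                  # t(64) = 3.16
```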

Experience with AI-generated videos showed less variation between the groups. In the ECE group, 53% of the students had experience with AI-generated videos (M = 0.53, SD = 0.507), compared to 44% in the CS group (M = 0.44, SD = 0.561). The difference in experience with AI-generated videos was not statistically significant (t(64) = 0.68, p = 0.50), suggesting a similar level of exposure to this technology across both groups.

The analysis of familiarity with generative AI technologies also revealed a significant difference between the two groups. The average familiarity score was higher in the ECE group (M = 3.88, SD = 0.421) than in the CS group (M = 3.15, SD = 1.048), and an independent samples t-test showed that this difference was statistically significant (t(64) = 3.741, p < 0.01). Because Levene’s test for equality of variances indicated that the assumption of equal variances was not met (F = 15.215, p < 0.001), the results of the t-test assuming unequal variances were used.
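The unequal-variance (Welch) form of the t-test referred to above can be computed from the reported summary statistics as follows. Because the means and SDs are rounded, the result differs slightly from a value computed on raw data. The Welch–Satterthwaite degrees of freedom are generally non-integer and smaller than n₁ + n₂ − 2, which is why software such as SPSS reports a fractional df in its "Equal variances not assumed" row. The helper name `welch_t` is hypothetical.

```python
from math import sqrt


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's unequal-variance t and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = s1**2 / n1, s2**2 / n2          # squared standard errors per group
    t = (m1 - m2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Familiarity summary statistics as reported for the ECE and CS groups
t, df = welch_t(3.88, 0.421, 32, 3.15, 1.048, 34)
print(f"t({df:.1f}) = {t:.2f}")  # t(43.9) = 3.75
```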

When assessing attitudes towards the importance of AI integration in education, 88% of participants from ECE believed that integrating AI into teaching materials is crucial for enhancing learning effectiveness (M = 4.29, SD = 0.588). In the CS group, 68% shared this belief (M = 3.68, SD = 1.007). The difference in attitudes was statistically significant (t(63) = 2.96, p < 0.01), reflecting a stronger belief in the importance of AI in education among ECE students.

4.1. Analysis of Academic Performance

The analysis of academic performance focused on comparing the learning outcomes of students from two groups after their engagement with AI-generated educational tools to answer RQ1. An independent sample t-test was conducted to compare the academic performance scores between ECE and CS students (Table 2). Levene’s test for equality of variances indicated homogeneity of variances (F = 0.540, p = 0.465). The t-test results revealed no significant difference between the two groups (t(64) = −0.22, p = 0.83), suggesting that both groups achieved comparable levels of academic performance despite their differing backgrounds and prior experiences with AI technologies. The mean scores were 8.250 (SD = 0.8890) for ECE students and 8.294 (SD = 0.7499) for CS students.

Table 2.

Independent samples test for academic performance.

Given that the maximum possible score was 10, these mean scores indicate that both groups performed well on average. A score above eight reflects a high level of proficiency and engagement with the instructional design projects using AI platforms. This suggests that both ECE and CS students were able to successfully apply their knowledge and skills in designing, developing, and implementing AI-generated educational content.

Further analysis indicated that both groups demonstrated a high level of engagement and proficiency in designing, developing, and implementing instructional design projects using AI platforms. The comparable outcomes may reflect offsetting differences in the students’ backgrounds: while ECE students had more prior experience with AI tools, CS students achieved slightly higher mean grades, although this difference was not statistically significant. Their familiarity with computer software and programming likely helped mitigate any initial disparities in AI tool proficiency.

This parity in academic performance, despite differences in familiarity with AI, suggests that integrating AI into educational practices can support equitable learning opportunities and outcomes. It also suggests that foundational skills acquired in CS, and in informatics more generally, can be leveraged to adapt quickly to new AI technologies, highlighting the importance of interdisciplinary learning and adaptability in modern education.
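Levene's test, used in this section to choose between the pooled and unequal-variance forms of the t-test, is a one-way ANOVA on each observation's absolute deviation from its group mean. The sketch below computes the classic mean-centered statistic on hypothetical data; the helper name `levene_f` and the scores are illustrative only. The p-value is then obtained from the F distribution with (k − 1, N − k) degrees of freedom, which statistical packages compute automatically; p < 0.05 indicates that the equal-variance assumption should be rejected.

```python
from statistics import mean


def levene_f(*groups):
    """Classic (mean-centered) Levene statistic: one-way ANOVA on |y - group mean|."""
    z = []
    for g in groups:
        m = mean(g)
        z.append([abs(y - m) for y in g])    # absolute deviations per group
    k = len(z)
    n_total = sum(len(zi) for zi in z)
    z_bar = sum(sum(zi) for zi in z) / n_total              # grand mean of deviations
    between = sum(len(zi) * (mean(zi) - z_bar) ** 2 for zi in z)
    within = sum((x - mean(zi)) ** 2 for zi in z for x in zi)
    f = (n_total - k) / (k - 1) * between / within
    return f, (k - 1, n_total - k)   # compare against F(k - 1, N - k)


# Hypothetical scores for two small groups with equal means but unequal spread
f, dfs = levene_f([7, 8, 9, 8], [6, 10, 8, 8])
print(f"F{dfs} = {f:.2f}")  # F(1, 6) = 0.60
```

A median-centered variant (Brown–Forsythe) is a common, more robust alternative when the data are skewed.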

4.2. Analysis of Students’ Experience

To answer RQ2, the analysis of students’ experience with AI tools focused on several key factors: usefulness, comfort level, and overall user experience. The general outcomes indicated similarities across both groups, with some notable differences (Table 3).

Table 3.

Independent samples test for user experience.

Independent samples t-tests were conducted to compare user experience between ECE and CS students. Levene’s test for equality of variances indicated that the assumption of homogeneity of variances was violated for usefulness (F = 22.727, p < 0.001) and user experience (F = 4.675, p = 0.034). The t-test results revealed a statistically significant difference in the perceived usefulness of AI tools: ECE students rated their usefulness higher (M = 4.30, SD = 0.46) than their CS counterparts (M = 3.71, SD = 1.05; t(64) = 2.93, p < 0.01), suggesting that ECE students perceived AI tools as more beneficial for their instructional design projects. This could be attributed to the immediate applicability of AI tools in creating engaging educational content, which aligns closely with the needs of ECE students. Conversely, CS students, being more familiar with traditional image and video software, may have had alternative methods for content development.

On the other hand, CS students reported a slightly higher comfort level when using AI tools (M = 3.92, SD = 0.60) than ECE students (M = 3.88, SD = 0.70), although this difference was not statistically significant (t(64) = 0.25, p = 0.81). This small difference is nonetheless in the direction expected from the CS students’ background in software and programming, which may have facilitated a smoother adaptation to AI technologies. Their comfort with these tools may reflect broader exposure to complex technological environments, reducing the learning curve associated with adopting new AI tools.

When examining the overall user experience, ECE students reported a slightly higher mean score (M = 4.08, SD = 0.46) compared to CS students (M = 3.85, SD = 0.64), though this difference approached but did not reach statistical significance (t(64) = 1.67, p = 0.10). These results suggest that while both groups found the AI tools to be user-friendly and effective, ECE students might have perceived a greater enhancement in their instructional design capabilities. This greater appreciation of AI tools among ECE students could be linked to the direct impact of these tools on their ability to create visually engaging and pedagogically effective content, which is crucial in ECE.

Overall, the data indicate that integrating AI tools into diverse educational disciplines can be beneficial, though the specific experiences and perceived utility may vary based on the students’ academic backgrounds and prior technological proficiency.
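Each p-value in this section follows from the t statistic and its degrees of freedom via Student's t distribution. As a check on the reported result for overall user experience (t(64) = 1.67, p = 0.10), the stdlib sketch below approximates the two-tailed p-value by integrating the t density with Simpson's rule. It is an illustration under that numerical approximation, not a replacement for the exact routines in statistical software; the helper names `t_pdf` and `two_tailed_p` are hypothetical.

```python
from math import exp, lgamma, log, pi


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_c - (df + 1) / 2 * log(1 + x * x / df))


def two_tailed_p(t, df, steps=2000):
    """Approximate 2 * P(T > |t|) by Simpson's rule on [0, |t|] (steps must be even)."""
    a = abs(t)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3          # P(0 < T < |t|)
    return 1 - 2 * area           # symmetry of the t distribution


# Overall user experience comparison: t(64) = 1.67
print(f"p = {two_tailed_p(1.67, 64):.2f}")  # p = 0.10
```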

4.3. Analysis of Students’ Satisfaction

Independent samples t-tests were conducted to compare overall satisfaction, satisfaction with images, and satisfaction with videos generated by AI tools between ECE and CS students to address RQ3 (Table 4). Levene’s test for equality of variances indicated homogeneity of variances for all three measures (satisfaction: F = 0.674, p = 0.415; satisfaction with images: F = 1.565, p = 0.216; satisfaction with videos: F = 0.020, p = 0.889).

Table 4.

Independent samples test for user satisfaction.

The analysis of students’ satisfaction with AI-generated tools revealed small differences between the two groups. ECE students expressed greater overall satisfaction (M = 4.08, SD = 0.53) than their CS counterparts (M = 3.92, SD = 0.54). Although this difference was not statistically significant (t(64) = 1.19, p = 0.24), the higher mean score among ECE students may indicate a somewhat more positive reception of AI tools in their instructional design projects. This could be because AI tools allowed them to create educational content without requiring knowledge of complex image- and video-editing tools.

Specific aspects of satisfaction followed the same pattern. ECE students reported higher satisfaction with AI-generated images (M = 4.06, SD = 0.63) than CS students (M = 3.87, SD = 0.68); this trend was not statistically significant (t(64) = 1.21, p = 0.23) but is consistent with ECE students finding AI-generated images useful for developing visually appealing and pedagogically effective content. Similarly, satisfaction with AI-generated videos was higher among ECE students (M = 4.16, SD = 0.61) than among CS students (M = 3.97, SD = 0.61), although again this difference was not statistically significant (t(64) = 1.23, p = 0.23).

Overall, the satisfaction analysis indicates that both groups benefited from the use of AI tools, with ECE students reporting somewhat higher, though not significantly higher, satisfaction with their impact on instructional design projects. This highlights the potential for AI technologies to meet the specific needs of different educational disciplines. The positive reception among ECE students suggests that these technologies can play a significant role in improving the quality and effectiveness of educational content for young learners, while the similar satisfaction levels reported by CS students emphasize the versatility of AI tools in supporting diverse student backgrounds and disciplines.

5. Discussion

This study aimed to explore the impact of generative AI tools on undergraduate students’ learning performance, user experience, and satisfaction across two distinct academic disciplines. It was initially assumed that ECE students would have less experience with AI tools than CS students (Section 3.1). However, the findings in Table 1 revealed the opposite: ECE students generally had more prior experience with AI tools than CS students. This unexpected result suggests that assumptions about AI experience based on academic discipline may not always hold. It may also indicate that ECE students had prior exposure to similar digital tools that do not require a strong programming background and are widely used to create a wide array of educational materials.

Regarding RQ1, there was no significant difference in academic performance between ECE and CS students, even though ECE students had more prior experience with AI tools; CS students in fact achieved slightly higher mean grades, although this difference was not statistically significant. This suggests that the educational tools were effective and that CS students’ stronger foundation in Computer Science and programming likely helped them adapt quickly to AI technologies, minimizing the initial challenges encountered during the learning process. This aligns with findings from prior works [19,20] and with research indicating that familiarity with technological concepts can mitigate initial disparities in tool proficiency [18]. In this vein, the findings suggest that AI in education can create equal learning opportunities and that Computer Science skills are valuable for adapting to new technologies. Differences did emerge in user experience and satisfaction, but only one user experience construct reached statistical significance, and no significant differences in satisfaction were found between the groups during the instructional period.

With regard to RQ2, ECE students rated the usefulness of AI tools significantly higher than their CS counterparts. This could be due to the immediate applicability of AI-generated content in creating engaging educational materials, which is crucial for ECE [23,24,25]. CS students, by contrast, had more experience with other software and may have found alternative methods for content creation. They also reported a slightly higher, though not statistically significant, comfort level with these tools, likely reflecting their broader exposure to complex technological environments. This contrast underscores the importance of context-specific applications of AI tools, as perceived usefulness and comfort vary with students’ academic backgrounds and the nature of their tasks. Xia et al. [21] likewise emphasized the role of user satisfaction and engagement in the successful implementation of AI tools in educational settings, which supports this study’s findings. Despite these differences, the overall user experience was similar for both groups, and only one of the three user experience constructs (usefulness) differed significantly. This suggests that AI tools can be beneficial in various educational fields, although students may find them more useful depending on their area of study and prior experience with technology [25].

Regarding RQ3, ECE students expressed greater satisfaction with AI-generated images and videos than CS students, although these differences were not statistically significant. This pattern aligns with the findings of Fakhri et al. [26], who noted that visual and interactive content significantly enhances learning experiences, particularly in disciplines that rely heavily on visual aids. The higher satisfaction among ECE students suggests that AI tools may be particularly effective in disciplines that prioritize creative and visual content, highlighting the importance of tailoring AI applications to the specific needs of different educational fields. The ability to easily create and integrate high-quality images and videos into their teaching materials likely contributed to this higher satisfaction, as also indicated by previous studies [17,21]; without such tools, these students would have needed to use complex photo- and video-editing platforms.

Although both ECE and CS students found the AI tools user-friendly, ECE students reported higher overall satisfaction, particularly with AI-generated images and videos. This is likely because these tools directly address a need in ECE for creating engaging educational content, as also indicated in previous studies [8,11]. CS students, in contrast, may have had alternative methods for content creation owing to their background in software and programming. Thus, the impact students perceive from AI tools appears to depend on their area of study and prior experience with technology [1,17].

To summarize, the results of this study suggest that while generative AI tools have the potential to enhance learning outcomes across diverse academic disciplines, their effectiveness is influenced by the students’ backgrounds and the specific requirements of their fields. These results contribute to a deeper understanding of how AI can be integrated into diverse educational contexts and highlight the potential for such technologies to enhance learning achievements.

Despite their benefits, it is important to acknowledge the limitations and potential risks associated with generative AI tools. First, generative AI tools can sometimes produce inaccurate or misleading information, which can be problematic in educational contexts where accuracy is crucial. For instance, a recent study of UK undergraduate students and careers advisers highlighted concerns about the reliability of AI-generated content for career advice provision, pointing out that inaccuracies could lead to misguided decisions [36].

Second, the use of generative AI raises significant ethical and privacy issues. AI systems often require large amounts of data, which can include sensitive information, and ensuring the privacy and security of these data is a critical challenge. There are also concerns about the ethical use of AI, particularly regarding bias and fairness in AI-generated content. While AI chatbots have shown promise in mental health support, they carry notable risks. For example, a US eating disorder helpline recently disabled its AI chatbot after concerns arose that it was providing inappropriate advice, potentially contributing to harmful outcomes for users [37]. This incident underscores the need for rigorous oversight and continuous monitoring of AI systems used in sensitive areas such as mental health.

Third, there is a risk that students and educators may become overly dependent on AI tools, potentially undermining the development of critical thinking and problem-solving skills. It is essential to strike a balance between leveraging AI’s capabilities and fostering independent learning and critical analysis [1,12].

Fourth, generative AI tools face technical limitations, such as difficulty in accurately interpreting human language or context. These limitations can lead to misunderstandings or ineffective communication, which can hinder the learning process. In light of these limitations, it is crucial to approach the integration of generative AI tools in education with caution. While they offer significant benefits, it is essential to address and mitigate the associated risks to ensure their effective and ethical use [5,15]. These considerations highlight the importance of ongoing research and dialog around the use of generative AI in education, ensuring that its implementation is both beneficial and responsible.

6. Conclusions

This study explored the learning processes and outcomes of undergraduates engaged in designing, developing, and integrating AI-generated educational content, with the aim of providing insights into the practical challenges of incorporating AI into digital literacy training. The findings indicate that integrating AI tools can effectively teach students to design, develop, and utilize AI-generated educational content. By comparing the two groups, this research highlighted the potential influences of educational background and prior technological experience on user experience and learning outcomes with AI tools, although further research with larger samples is needed to confirm these influences. Both groups benefited from these experiences, achieving high learning outcomes and showing a greater intention to explore AI’s potential.

Contrary to our initial assumptions, ECE students had more prior experience with AI tools than CS students. This finding challenges the assumption that AI experience correlates directly with technical knowledge or academic discipline and suggests instead that it may be shaped by the specific applications and relevance of AI tools within different fields. Nevertheless, specific learning experiences and outcomes varied with students’ backgrounds and chosen subject areas.

This study’s findings reveal important practical and theoretical implications for the integration of AI in education. Some practical implications are as follows:

- Incorporate AI integration projects: Educational institutions should consider integrating AI projects into digital literacy courses to equip students with valuable technical and pedagogical skills. This research supports the effectiveness of integrating AI tools into digital literacy training: students, even those with a limited background in technology, can successfully learn to design, develop, and utilize AI-generated content.

- Provide guidance and support: Offering clear guidance and support throughout the project, especially during the initial stages, can motivate and engage students with varying levels of technical expertise. This study highlights the importance of considering students’ educational backgrounds and prior technological experience. Activities should be designed to cater to these differences, for example, by offering more scaffolding or support for ECE students than for CS undergraduates.

- Consider user experience and satisfaction: The differences in user experience and satisfaction between ECE and CS students provide insights into the contextual factors that influence the adoption and effectiveness of AI tools in education. These findings support the theoretical perspective that user experience and satisfaction are critical factors in the successful implementation of educational technologies. Future research should further explore these contextual factors to develop more nuanced theories on technology adoption in education.

Some theoretical implications are as follows:

- Differentiated learning approaches: Modified learning approaches may be necessary based on students’ backgrounds and interests. While this study’s findings suggest that ECE undergraduates in our sample benefited from video development projects aligned with their future careers, and CS students from our sample were more engaged with animation development tasks, these observations are based on small-scale cohorts from a single context. Therefore, further research with larger and more diverse samples is needed to validate these findings and to explore their applicability to broader cohorts of ECE and CS undergraduates.

- Tailored educational approaches: The differences in user experience and satisfaction between ECE and CS students point to the need to tailor AI-integrated activities to students’ backgrounds and interests. Tailoring educational approaches to the specific needs of different student groups may enhance the effectiveness of AI integration in education.

- Reevaluated assumptions about AI experience: Our findings highlight the need to reassess assumptions about AI experience based on academic discipline. While we initially assumed that ECE students would have less AI experience, the opposite was true in our sample. This suggests that AI experience may be more closely related to the practical applications of AI in different fields rather than the level of technical knowledge.

This study adds to the body of literature by providing a more comprehensive understanding of how AI impacts students with diverse backgrounds. It also offers practical guidance on how to integrate AI effectively into existing educational practices to maximize its benefits.

7. Limitations and Considerations for Future Research

This study acknowledges several limitations that warrant further discussion and exploration in future research. First, the study exclusively comprised Greek undergraduate students, resulting in a sample that was homogeneous in socio-cognitive background. This limits the generalizability of the findings to broader, international student populations with diverse cultural and educational experiences. Further research should involve more diverse participant pools to ensure wider applicability and inform strategies for adapting tasks to different contexts. Second, the study relied solely on quantitative data comparing two groups. While valuable, this approach overlooks the richness of qualitative data. Incorporating focus groups or interviews in future research would offer deeper insights into students’ interactions with the learning materials, their thought processes, and the challenges they encounter. Such qualitative data would enrich the understanding of how AI-driven interventions influence participants. Third, the curriculum knowledge examined was drawn solely from ECE and Information Technology (or CS) textbooks used in Greek schools, which limits the generalizability of the findings to other educational contexts worldwide.

Building on these limitations, future research should address the following:

- Future studies should use larger and more diverse samples to enhance the generalizability of the findings. Including participants from different institutions and backgrounds can provide a more comprehensive understanding of the impact of AI tools in education.

- Longitudinal studies are needed to examine the long-term effects of AI integration on students’ learning outcomes, user experience, and satisfaction. Such studies can provide deeper insights into the sustained impact of AI tools on education.

- Incorporating qualitative research methods, such as interviews and focus groups, can complement the quantitative findings and provide richer insights into students’ experiences with AI tools. Qualitative data can help uncover the nuances and contextual factors that influence the effectiveness of AI in education.

- External validation of the measurement instruments to confirm that they accurately measure learning outcomes is also crucial. Future research should employ external assessments, such as exams or practical projects, to validate the findings and ensure the robustness of the evaluation methods.

By addressing these limitations and incorporating these considerations into future research, we can build a more comprehensive understanding of the role of AI in education and develop effective strategies for its integration into diverse educational contexts.

Author Contributions

Conceptualization, N.P. and I.K.; methodology, N.P.; software, I.K.; validation, N.P. and I.K.; formal analysis, I.K.; investigation, N.P. and I.K.; resources, N.P. and I.K.; data curation, N.P. and I.K.; writing—original draft preparation, N.P.; writing—review and editing, I.K.; visualization, I.K.; supervision, I.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used are publicly available and their citation is provided in the manuscript.

Acknowledgments

We would like to express our gratitude to all students who voluntarily participated in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rospigliosi, P.A. Artificial intelligence in teaching and learning: What questions should we ask of ChatGPT? Interact. Learn. Environ. 2023, 31, 1–3.

- Luo (Jess), J. A critical review of GenAI policies in Higher Education Assessment: A call to reconsider the “originality” of students’ work. Assess. Eval. High. Educ. 2024, 49, 651–664.

- Lodge, J.M.; Yang, S.; Furze, L.; Dawson, P. It is not like a calculator, so what is the relationship between learners and Generative Artificial Intelligence? Learn. Res. Pract. 2023, 9, 117–124.

- Su, J. Development and validation of an artificial intelligence literacy assessment for kindergarten children. Educ. Inf. Technol. 2024.

- Pellas, N. The influence of sociodemographic factors on students’ attitudes toward AI-generated video content creation. Smart Learn. Environ. 2023, 10, 57.

- Jiang, Y.; Hao, J.; Fauss, M.; Li, C. Detecting ChatGPT-generated essays in a large-scale writing assessment: Is there a bias against non-native English speakers? Comput. Educ. 2024, 217, 105070.

- Su, J.; Yang, W. Artificial intelligence in early childhood education: A scoping review. Comput. Educ. Artif. Intell. 2022, 3, 100049.

- Adeshola, I.; Adepoju, A.P. The opportunities and challenges of ChatGPT in Education. Interact. Learn. Environ. 2023, 1–14.

- Bhullar, P.S.; Joshi, M.; Chugh, R. ChatGPT in higher education: A synthesis of literature and a future research agenda. Educ. Inf. Technol. 2024.

- Chai, C.S.; Lin, P.-Y.; Jong, M.S.-Y.; Dai, Y.; Chiu, T.K.F.; Qin, J. Perceptions of and behavioral intentions towards learning artificial intelligence in primary school students. J. Educ. Technol. Soc. 2021, 24, 89–101.

- Mao, J.; Chen, B.; Liu, J.C. Generative artificial intelligence in education and its implications for assessment. TechTrends 2024, 68, 58–66.

- Pellas, N. The effects of generative AI platforms on undergraduates’ narrative intelligence and writing self-efficacy. Educ. Sci. 2023, 13, 1155.

- Su, J.; Yang, W. Artificial Intelligence (AI) literacy in early childhood education: An intervention study in Hong Kong. Interact. Learn. Environ. 2023, 1–15.

- Al Naqbi, H.; Bahroun, Z.; Ahmed, V. Enhancing work productivity through generative artificial intelligence: A comprehensive literature review. Sustainability 2024, 16, 1166.

- Chiu, T.K. The impact of Generative AI (GenAI) on practices, policies, and research direction in education: A case of ChatGPT and Midjourney. Interact. Learn. Environ. 2023, 1–17.

- Wang, B.; Rau, P.L.P.; Yuan, T. Measuring user competence in using artificial intelligence: Validity and reliability of artificial intelligence literacy scale. Behav. Inf. Technol. 2022, 42, 1324–1337.

- Saqr, R.R.; Al-Somali, S.A.; Sarhan, M.Y. Exploring the acceptance and user satisfaction of AI-driven e-learning platforms (Blackboard, Moodle, Edmodo, Coursera and EDX): An integrated technology model. Sustainability 2023, 16, 204.

- Dekker, I.; De Jong, E.M.; Schippers, M.C.; De Bruijn-Smolders, M.; Alexiou, A.; Giesbers, B. Optimizing students’ mental health and academic performance: AI-enhanced life crafting. Front. Psychol. 2020, 11, 1063.

- Jiao, P.; Ouyang, F.; Zhang, Q.; Alavi, A.H. Artificial intelligence-enabled prediction model of student academic performance in online engineering education. Artif. Intell. Rev. 2022, 55, 6321–6344.

- Wang, S.; Sun, Z.; Chen, Y. Effects of higher education institutes’ artificial intelligence capability on students’ self-efficacy, creativity and learning performance. Educ. Inf. Technol. 2022, 28, 4919–4939.

- Xia, Q.; Chiu, T.K.F.; Chai, C.S.; Xie, K. The mediating effects of needs satisfaction on the relationships between prior knowledge and self-regulated learning through artificial intelligence chatbot. Br. J. Educ. Technol. 2023, 54, 967–986.

- Maurya, L.S.; Hussain, M.S.; Singh, S. Developing classifiers through machine learning algorithms for student placement prediction based on academic performance. Appl. Artif. Intell. 2021, 35, 403–420.

- Padmasiri, P.; Kalutharage, P.; Jayawardhane, N.; Wickramarathne, J. AI-Driven User Experience Design: Exploring Innovations and challenges in delivering tailored user experiences. In Proceedings of the 8th International Conference on Information Technology Research (ICITR), Colombo, Sri Lanka, 7–8 December 2023.

- Yang, B.; Wei, L.; Pu, Z. Measuring and improving user experience through Artificial Intelligence-aided design. Front. Psychol. 2020, 11, 595374.

- Sunitha, B.K. The impact of AI on human roles in the user interface & user experience design industry. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–5.

- Fakhri, M.M.; Ahmar, A.S.; Isma, A.; Rosidah, R.; Fadhilatunisa, D. Exploring generative AI tools frequency: Impacts on attitude, satisfaction, and competency in achieving higher education learning goals. EduLine J. Educ. Learn. Innov. 2024, 4, 196–208.

- Campbell, D.T.; Stanley, J.C. Experimental and Quasi-Experimental Designs for Research on Teaching. In Handbook of Research on Teaching; Gage, N.L., Ed.; Rand McNally: Chicago, IL, USA, 1963.

- Murchan, D.; Siddiq, F. A call to action: A systematic review of ethical and regulatory issues in using process data in educational assessment. Large-Scale Assess. Educ. 2021, 9, 25–38.

- Barchard, K.A.; Pace, L.A. Preventing human error: The impact of data entry methods on data accuracy and statistical results. Comput. Hum. Behav. 2011, 27, 1834–1839.

- Law, E.L.; Van Schaik, P.; Roto, V. Attitudes towards user experience (UX) measurement. Int. J. Hum. Comput. Stud. 2014, 72, 526–541.

- Cortina, J.M. What is the coefficient alpha? An examination of theory and applications. J. Appl. Psychol. 1993, 78, 98–104.

- Wei, H.C.; Chou, C. Online learning performance and satisfaction: Do perceptions and readiness matter? Distance Educ. 2020, 41, 48–69.

- Brislin, R.W. Back-translation for cross-cultural research. J. Cross-Cult. Psychol. 1970, 1, 185–216.

- Bridgeman, B.; Cline, F. Effects of differentially time-consuming tests on computer-adaptive test scores. J. Educ. Meas. 2004, 41, 137–148.

- Marsden, C.J.T. Single group, pre- and posttest research designs: Some methodological concerns. Oxf. Rev. Educ. 2012, 38, 583–616.

- Hughes, D.; Percy, C.; Tolond, C. LLMs for HE Careers Provision; Jisc (Prospects Luminate): Bristol, UK, 2023; Available online: https://luminate.prospects.ac.uk/large-language-models-for-careers-provision-in-higher-education (accessed on 1 May 2024).

- The Guardian. Eating Disorder Hotline Union AI Chatbot Harm. 2023. Available online: https://www.theguardian.com/technology/2023/may/31/eating-disorder-hotline-union-ai-chatbot-harm (accessed on 1 May 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).