Drone Polariscopy—Towards Remote Sensing Applications †

Abstract

1. Introduction

2. Materials and Methods

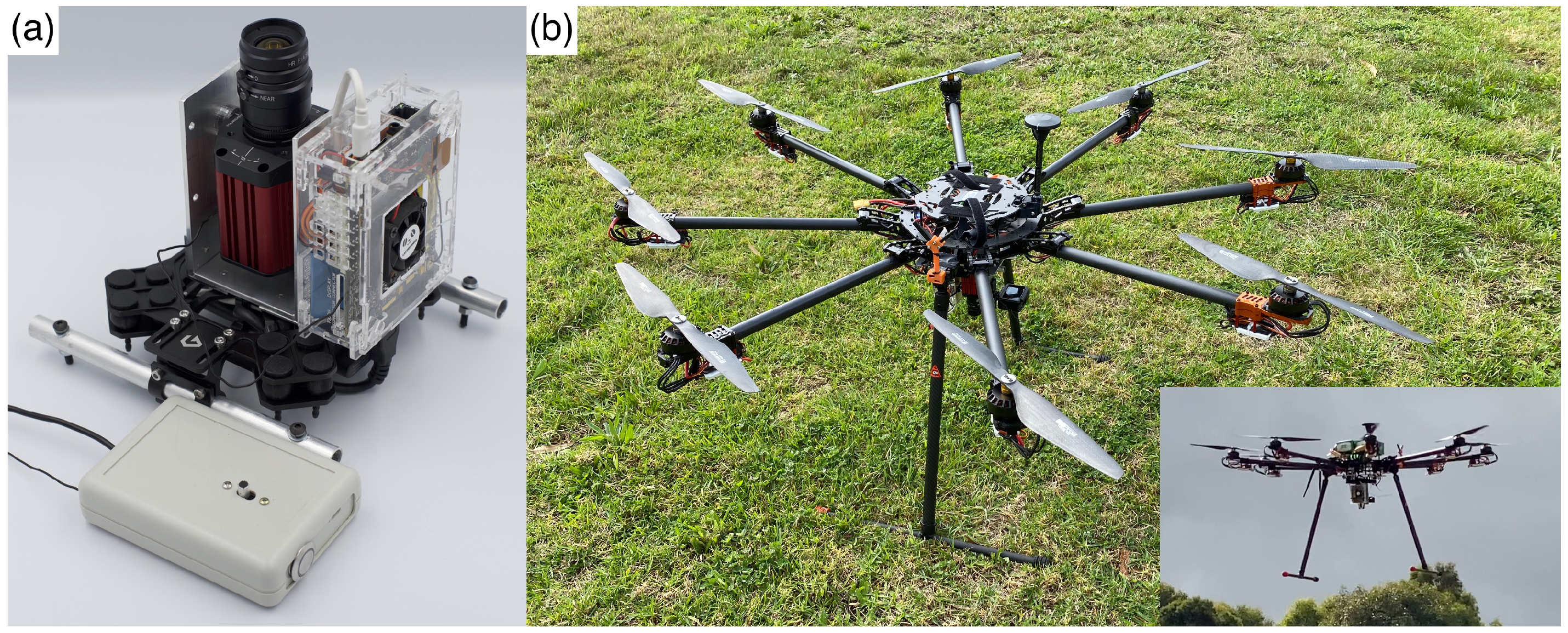

2.1. Drone Flight with Polarisation Camera

2.2. Data Processing

3. Results and Discussion

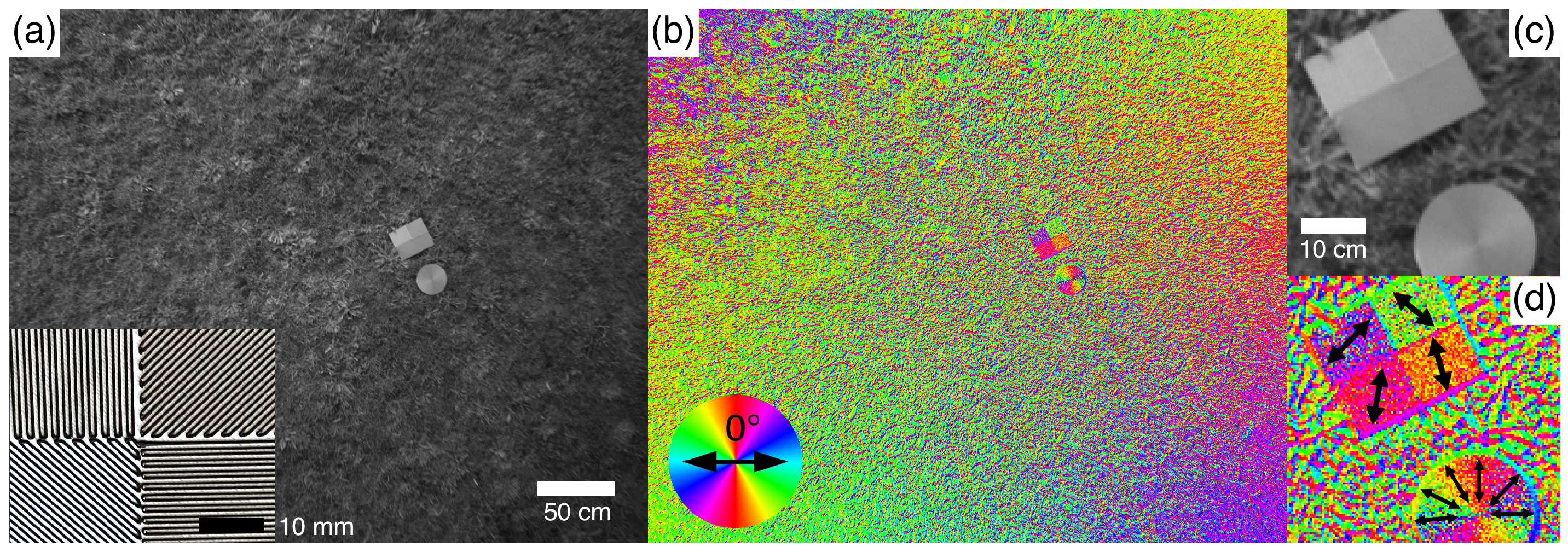

3.1. Orientation Determination

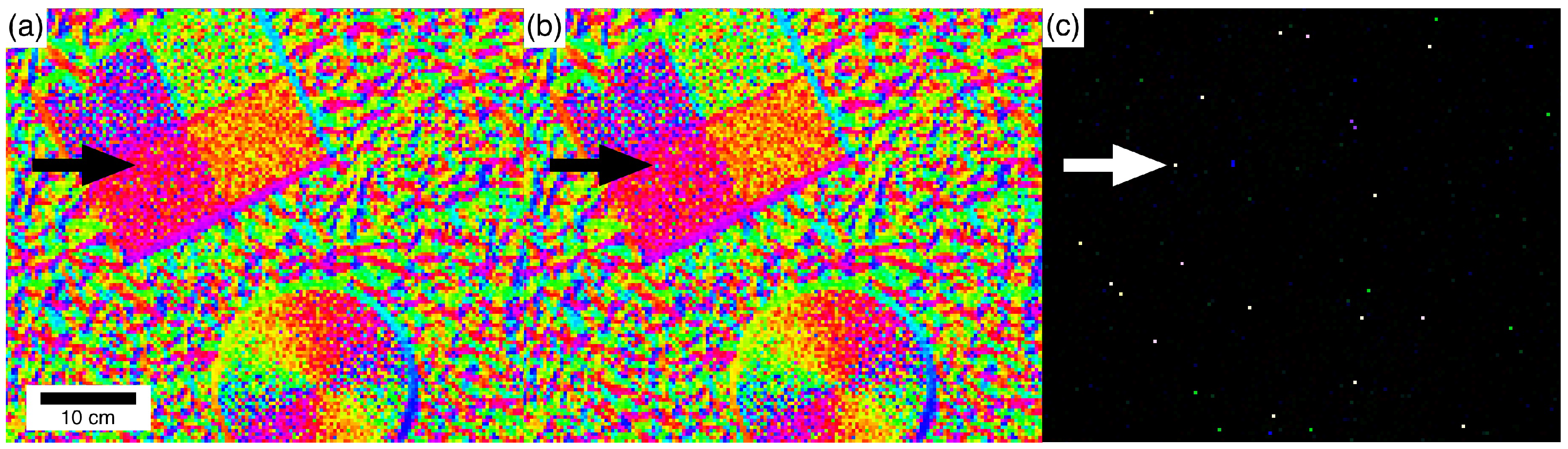

3.2. Data Processing Method

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ng, S.H.; Allan, B.; Ierodiaconou, D.; Anand, V.; Babanin, A.; Juodkazis, S. Drone Polariscopy—Towards Remote Sensing Applications. Eng. Proc. 2021, 11, 46. https://doi.org/10.3390/ASEC2021-11161
