Abstract

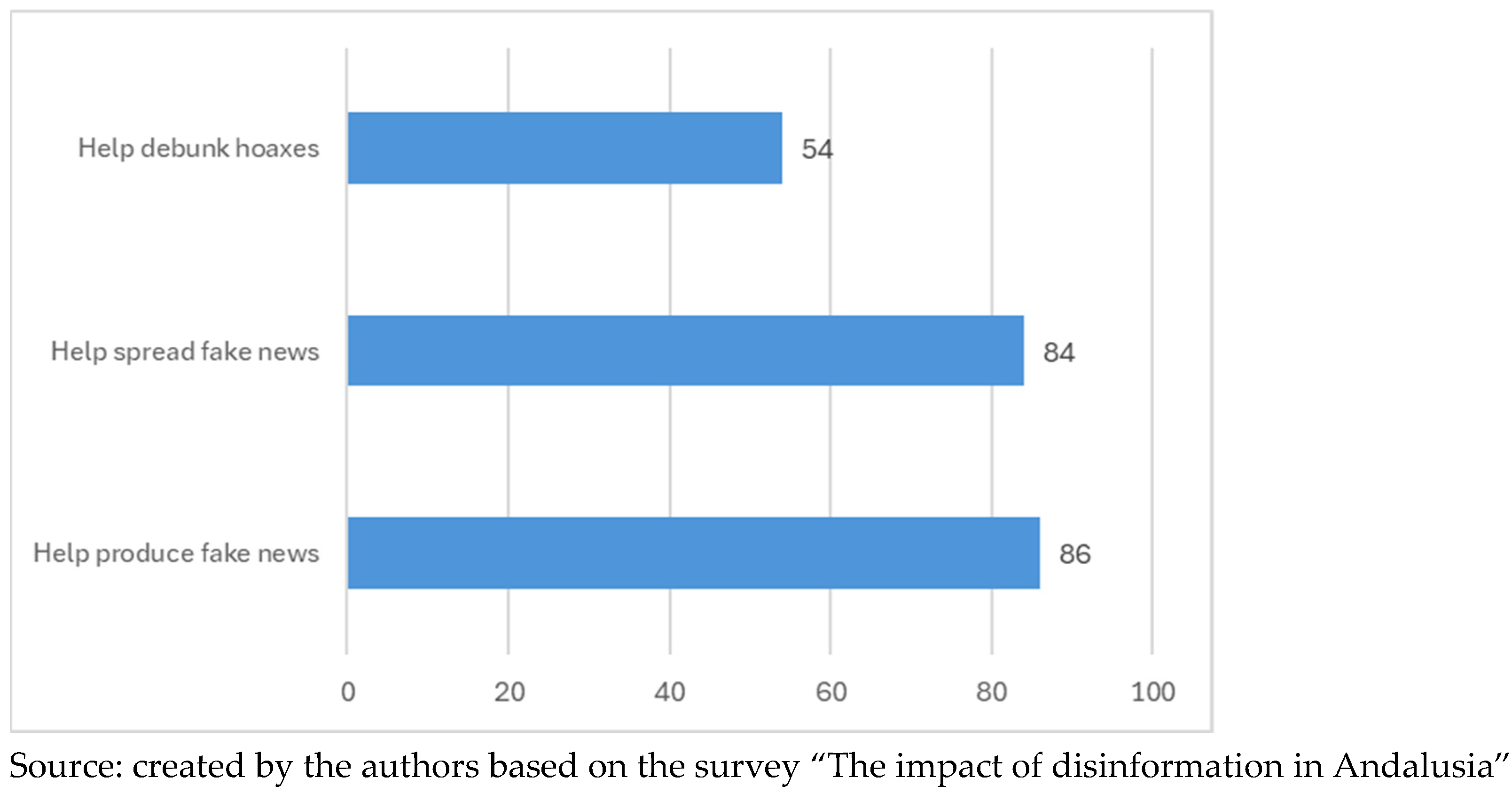

This study addresses public perception of the relationship between artificial intelligence (AI) and disinformation. The level of general awareness of AI is considered and, on that basis, the study analyzes whether the public believes AI may favor the creation and distribution of false content or, conversely, perceives its potential to counteract information disorders. A survey was conducted on a representative sample of the Andalusian population aged 15 and over (1550 people). The results show that over 90% of the population have heard of AI, although it is less well known among the eldest age group (78%). There is a consensus that AI helps to produce (86%) and distribute (84%) fake news. Descriptive analyses show no major differences by sex, age, social class, ideology, type of activity or size of municipality, although those less educated tend to mention these negative effects to a lesser extent. However, 54% of the population consider that it may help in combating hoaxes, with women, the lower class and the left wing holding more positive views. Logistic regressions broadly confirm these results, showing that education, ideology and social class are the most relevant factors when explaining opinions about the role of AI in disinformation.

1. Introduction

The emergence of artificial intelligence (AI) and its impact on the fight against disinformation is one of the most complex and urgent dilemmas that contemporary society must address. It is essential to study this association because it is the beginning of a disruptive process that will not only have technological consequences, but also unpredictable legislative, cultural, social and educational fallout (Hajli et al., 2022; Garriga et al., 2024).

The referendum on the United Kingdom’s membership in the European Union (Greene et al., 2021) and the election of Donald Trump as President of the United States are events that took place on the international scene in 2016 and illustrated the impact of this information disorder on the political sphere (Teruel, 2023). More recently, the global health crisis caused by COVID-19 has dramatically increased the presence of false content on social media (Allcott & Gentzkow, 2017; Salaverría et al., 2020). In addition to generating a global public debate concerning the problem of disinformation, there have been internal political events in several countries that have resulted in increased concern among both the general public and academia about information disorder. Some notable cases are those of Brazil, where Jair Bolsonaro won the presidential election in 2018 (Canavilhas et al., 2019; López-López et al., 2022), or Spain, with the events surrounding the independence referendum in Catalonia (Aparici et al., 2019).

The European Commission has addressed the problem and defines disinformation as “verifiably false or misleading information that is created, presented and disseminated for profit or to deliberately mislead the population, and which may cause public harm” (European Commission, 2018a, p. 3). It has also begun to periodically include indicators in its public opinion surveys to carry out comparative studies on its significance in the Member States, since false information is used to undermine the legitimacy of democracies by eroding trust in institutions and their electoral processes, promoting polarization and affecting the quality of public debate. According to the Eurobarometer Media & News Survey, 61% of European citizens had been exposed to fake news in the previous seven days (European Commission, 2022). Concern about the phenomenon is not limited to the political sphere: numerous academic research projects in recent years have strived to analyze, understand and combat it (Paniagua-Rojano & Rúas-Araujo, 2023).

The conceptual relationship between AI and disinformation is multifaceted, involving both the enhancement and mitigation of false information dissemination. AI technologies, particularly generative AI, have significantly amplified the speed and sophistication of disinformation spread, making it increasingly challenging to identify and counteract false information. This is evident in the use of AI to create realistic yet misleading content, which can manipulate public perception and erode trust in information sources (Islas et al., 2024). AI’s role in disinformation is further complicated by its ability to control content distribution through machine learning algorithms, which can favor sensational content, thereby facilitating the diffusion of disinformation. However, AI also offers potential solutions, such as automated fact-checking, to combat the spread of false information (Duberry, 2022). The challenge lies in the convincing quality of AI-generated content, which necessitates a shift in disinformation management strategies from detection to influence, focusing on the impact rather than the volume of disinformation (Karinshak & Jin, 2023). Moreover, the cognitive mechanisms of users, such as their heuristic processing and ethical values, play a crucial role in their ability to discern disinformation, highlighting the importance of digital literacy and inoculation strategies to build resistance against AI-driven disinformation (Shin, 2024). As AI continues to evolve, its dual role as both a tool for and a defense against disinformation underscores the need for comprehensive frameworks and proactive strategies to manage the risks and crises associated with AI-driven disinformation (Karinshak & Jin, 2023).

Contemporary studies have looked more deeply into the importance of various socio-demographic variables in light of this problem. According to Standard Eurobarometer 94 (2021), groups with a better social position (men, the more highly educated, managers, the upper-middle class) are more confident in their ability to detect fake news (García-Faroldi & Blanco-Castilla, 2023). It has also been shown that younger people distrust the ability of older people to perceive that content is false, and vice versa (Martínez-Costa et al., 2023). The belief that others are more exposed to disinformation than oneself is an indicator of this contemporary danger that segregates societies by degrees of vulnerability (Altay & Acerbi, 2023).

There is a consensus that educational attainment is a barrier against the phenomenon. People with a university degree are more confident of detecting it (Beauvais, 2022; Martínez-Costa et al., 2023). It has been observed in the United States that sex does not generate statistically significant differences about who is assigned responsibility for combating the phenomenon, but it does offer different characteristics. Women are more likely to attribute the main responsibility to social media, while men tend to perceive the government as the chief culprit (Belhadjali et al., 2019). Moreover, it has been found at the national level that women are more cautious when transmitting content when they doubt its veracity (Rodríguez-Virgili et al., 2021) and that they express greater concern about the social consequences of information disorders (Almenar et al., 2021).

Studies have traditionally addressed the different social concerns about disinformation among different age groups. Adaji’s (2023) research, although it only has a small survey size, highlights that young people aged 18 to 34 are more likely to spread disinformation, and points out the factors that make them more vulnerable and which need to be reflected upon: the availability of technology, entertainment consumption, fear of missing out (FOMO), peer pressure and greater consumption of information on social media, despite perceiving it as less trustworthy and more likely to spread fake news (Pérez Escoda et al., 2021). Thus, although young people feel themselves capable of detecting false information, they claim to turn to older people and traditional media to verify content and, even if they doubt its veracity, they may share it within their circle (Zozaya-Durazo et al., 2023).

However, the work of Rodríguez-Virgili et al. (2021) states that age does not influence the perception of implausible information. Prull and Yockelson (2013) had previously confirmed that there were no age-related differences, except the fact that older adults reported disinformation more than younger ones did.

The scientific literature has looked into the importance of ideology in detecting and sharing false information. It concludes that there is a link, as a higher level of acceptance is observed for content that is congruent with one’s ideology (Gawronski et al., 2023). It was observed during the pandemic that, on social media, people with conservative ideologies were more likely to see and share disinformation (Rao et al., 2022). Social media is a space for the dissemination of information disorders, and after the pandemic, it has been concluded that right-wing individuals are more likely than left-wing ones to spread false content within their echo chambers (Nikolov et al., 2021).

Sociological studies on information disorders in Spain reflect these same patterns. They have focused mainly on the relationship with electoral campaigns and dialogue on social media (López López et al., 2023). The emergence of the far right has been considered an incentive for the increase in false information circulating in the country through all media (Díez Garrido et al., 2021). Regarding the sociodemographic factors of the audience, it has been confirmed that ideology is a determining factor for trust in the different media: based on this, one or another medium is considered a creator of disinformation (Masip et al., 2020). The greater vulnerability linked to age and lower education levels is also noted (Herrero-Curiel & La-Rosa, 2022). This characteristic is important because, among those at the ideological extremes, people under 25 years of age are the least afraid of expressing their political opinions in public (Beatriz Fernández et al., 2020).

Since 2018, Spanish citizens have perceived themselves as more vulnerable to disinformation than the rest of Europe and, at the same time, have low confidence in their own ability to identify it (European Commission, 2018b, 2021). It is therefore considered important to measure citizens’ concern about this phenomenon both internationally and by observing territorial manifestations.

Few studies have looked into the perception of disinformation at a regional level. According to data from the Digital News Report 2022 (Vara-Miguel et al., 2022), it has been concluded that there are fairly comparable values among Catalonia, Madrid and Andalusia with respect to concern about this phenomenon (Montiel-Torres, 2024). In this homogeneity, it is noteworthy, in terms of sex, that Catalan and Andalusian women are more distrustful than men in the respective regions, both of the news they consume and of media content in general.

Disinformation and Artificial Intelligence

The second axis of this study, along with disinformation, is the public perception of the possibilities of artificial intelligence. Recently, knowledge and concern about the impact that AI can have on disinformation has become popular, and not only in academia (Bontridder & Poullet, 2021), but also in the professional field (Peña-Fernández et al., 2023) and in the media, where it is also understood that the use of AI for data verification and analysis of fake news will gradually grow over coming years (Beckett & Yaseen, 2023), and transparency in these journalistic routines, which allow the traceability of information, becomes a strength that benefits trust in the medium (Palomo et al., 2024). Furthermore, a significant portion of the public is apprehensive about AI’s role in spreading disinformation, particularly in politically sensitive contexts like the 2024 US presidential election, where four out of five Americans express concern about AI’s potential to disseminate false information (Yan et al., 2024). This anxiety is exacerbated by the media’s portrayal of AI as a tool that can amplify disinformation, creating immersive and emotionally engaging narratives that are difficult to counteract without adequate digital literacy (Islas et al., 2024).

In general terms, artificial intelligence is usually defined as the ability of a machine to imitate or perform human-like capabilities and functions, such as decision-making and problem-solving (Kennedy & Wanless, 2022), but in the field of journalism, Simon’s proposal is more precise: “The act of computationally simulating human activities and skills in narrowly defined areas, which usually consists of the application of machine learning approaches through which machines learn from data and/or their own performance” (Simon, 2024, p. 11).

While AI has the potential to detect false information, it can also be used to create and spread it more effectively (Garriga et al., 2024). AI-powered multimodal language models, such as ChatGPT-4, can generate convincing text that mimics human writing, as well as synthetic images and videos that are difficult to distinguish from reality, which could be used for malicious purposes such as the creation of fake news or deepfakes (Bontridder & Poullet, 2021; World Economic Forum, 2024). However, AI can also help in the fight against such disinformation (López-López et al., 2022) by identifying patterns and inconsistencies that may indicate false information, or establish content polarization, thus helping fact-checkers and platforms (Shu et al., 2017; Alonso et al., 2021). In just a few years, the possibilities of interaction between applications intended for information and verification of political content have been surpassed, and AI can therefore be useful for personalized fact-checking (Sánchez-Gonzales & Sánchez-González, 2020).

Therefore, in order to continue deepening our understanding of disinformation, it is essential to also consider the disruption that began with OpenAI’s launch of ChatGPT-3.5 in November 2022, which in just two months (January 2023) surpassed 100 million active users, becoming the fastest-growing application in history (Lian et al., 2024). The emergence of ChatGPT and competing platforms such as Gemini (formerly Google’s Bard) and Copilot (formerly Microsoft’s Bing), among others, has facilitated public access to a range of advanced generative AI tools.

Previous research has shown the public’s limited understanding of and familiarity with artificial intelligence (Selwyn & Gallo Cordoba, 2022). These studies also reveal a growing interest and attraction towards a deeper understanding of AI, particularly noticeable in the 35–49 age group (Sánchez-Holgado et al., 2022).

The social perception of its role in disinformation is complex and dual, combining optimism in some respects with pessimism in others. Part of society sees this new technology as a tool that can help reduce the spread of false information by automating the detection and removal of false content (Santos, 2023). Another part is concerned about its potential to make the problem worse, especially as the content generated becomes more sophisticated and difficult to distinguish from authentic human-made content (Bontridder & Poullet, 2021). Furthermore, there are concerns about the limitations of artificial intelligence systems to understand the context in which content is shared on social media, which can lead, on one hand, to the deletion of posts that should be protected by freedom of expression (Duberry, 2022) and, on the other, to the manipulation of the truth in two very plausible ways: that false content passes for true, but also that real content is taken to be artificially generated (Bontridder & Poullet, 2021). It is therefore necessary to first consider the integration of AI in journalism as a way to combat disinformation, while also addressing ethical considerations such as transparency and accountability (Santos, 2023), with the necessary collaboration and involvement of large technology companies for the labeling and metadata of artificially generated content. A recent survey by the Andalusian Studies Center (Centro de Estudios Andaluces, 2024) found 53.8% of respondents think it is wrong for news to be written using AI, compared to 39% who viewed it positively.

Due to the disruption that AI has brought about in false information, there is still little research that interrelates both phenomena. There are studies on opinion and perception on social media, both among journalists (Peña-Fernández et al., 2023) and the general population (Lian et al., 2024), as well as some surveys on opinions about artificial intelligence (Selwyn & Gallo Cordoba, 2022). A recent survey by the Sociological Research Center (Centro de Investigaciones Sociológicas, 2023), “Perception and Science of Technology” (2023), considered its benefits and dangers but was not related to information disorders. On the other hand, a 2020 study asked about the degree of familiarity with different applications that use it and Big Data (Sánchez-Holgado et al., 2022). However, only one Ipsos survey (2023) has been found that specifically asks citizens about the perceived relationship between artificial intelligence and the generation of fake news, though not about its distribution or usefulness in combating such disinformation, aspects that are addressed in this study, conducted in Andalusia, which provides originality, novelty and relevance to the work.

For all these reasons, this paper addresses how the public perceives the interaction between artificial intelligence and disinformation. The paper focuses on three specific objectives:

- OB1. Study the degree of awareness of AI.

- OB2. Analyze public perception regarding AI’s ability to foster the creation and distribution of fake content or, on the contrary, its potential to offer solutions to combat them.

- OB3. Observe the differences in perceptions of AI and its relationship with disinformation based on the socio-demographic variables analyzed.

After reviewing the literature, and in accordance with the research objectives listed above, the following hypotheses, which are essentially descriptive in nature, are proposed:

Hypothesis 1 (H1).

Knowledge of AI differs by socio-demographic variables; higher technological skills imply higher knowledge of AI.

Hypothesis 2 (H2).

The social groups most exposed to disinformation will present a more negative view of the impact of AI on the production and distribution of fake news and of its ability to combat it.

2. Materials and Methods

In order to achieve the research objectives, data have been analyzed from a representative survey of the Andalusian population aged 15 and over, carried out within the framework of the “Impact of disinformation in Andalusia: transversal analysis of audiences and journalistic routines and agendas (Desinfoand)” research project. The fieldwork was carried out by a polling company during the month of December 2023, employing a mixed method to survey 1550 people: 1250 surveys were completed online through a citizen panel, and 300 more were conducted by telephone among those aged 60 and over. This mixed design was adopted due to the older population’s lower participation in online surveys. We decided to include people between 15 and 17 years old due to their intensive use of social networks, where false information usually circulates, and because young people are often underrepresented in other quantitative studies, despite their distinct media consumption patterns compared to immediately preceding generations. The sample was stratified by size of the municipality, while sex and age quotas were established according to the distribution of the Andalusian population following the data collected in the Ongoing Residence Statistics (National Statistics Institute, Instituto Nacional de Estadística, INE, 2022). The sampling error for a confidence level of 95.5% is ±2.55% for the entire sample, assuming simple random sampling.
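The reported sampling error can be approximated with the usual formula for a proportion under simple random sampling. The sketch below is only illustrative: it assumes z = 2 for the 95.5% confidence level, maximum variance (p = q = 0.5) and no finite population correction, none of which are stated explicitly in the text. The function name `margin_of_error` is hypothetical.

```python
import math


def margin_of_error(n: int, z: float = 2.0, p: float = 0.5) -> float:
    """Maximum sampling error for a proportion under simple random sampling.

    Assumptions (not stated in the paper): z = 2 for a 95.5% confidence
    level, p = q = 0.5, and no finite population correction.
    """
    return z * math.sqrt(p * (1.0 - p) / n)


# Whole sample of 1550 interviews
moe = margin_of_error(1550)
print(f"Approximate sampling error: {moe:.2%}")
```

Under these assumptions the result is roughly ±2.5%, in line with the figure reported above; small discrepancies in the second decimal may come from rounding or from a slightly different z value.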

The survey collects information on the use of different channels for information, the frequency with which false information is found, reactions to false information, opinions on who are the agents that generate the most disinformation and what measures can be adopted to curb it, among other aspects. Many of these questions are validated at the international level, being used in the Eurobarometers conducted by the European Commission. Before starting the field work, a pilot test was carried out by the research team to refine the questionnaire, and subsequently, the company also tested it with people of different socio-demographic profiles.

The questionnaire ends by asking about the impact of AI on disinformation. The objectives and hypotheses set out for this study require that four questions from the survey be considered: one on awareness of artificial intelligence and three related to its impact on fake news. Except for the first question, which only had two response options (Yes/No), the questions had four response categories in their original formulation (two expressing agreement and two disagreement), but in order to simplify the presentation of results, they have been recoded to have only two options. Specifically, the questions are as follows:

There have been a large number of news reports recently, both in the media and on the Internet, about artificial intelligence (AI) and its different applications. Artificial intelligence is a field of computer science that creates systems that can perform tasks that would normally call for human intelligence, such as learning, reasoning and creativity. Have you heard of it?

I would like you to indicate your degree of agreement or disagreement with these statements:

- Artificial intelligence (AI) makes it easier, faster and cheaper to produce fake news.

- Artificial intelligence (AI) makes it easier, faster and cheaper to distribute fake news.

- Artificial intelligence (AI) will be a useful tool in helping citizens debunk hoaxes or lies that are spread as true news.
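The recoding of the three agreement items into two options can be sketched as follows. This is a minimal illustration, not the authors' procedure: the four response labels are hypothetical (the text only says that two categories expressed agreement and two disagreement), and the treatment of "don't know / no answer" as missing follows the paper's use of valid percentages.

```python
from collections import Counter

# Hypothetical labels for the four original response categories
AGREE = {"strongly agree", "somewhat agree"}
DISAGREE = {"somewhat disagree", "strongly disagree"}


def recode(response: str):
    """Collapse a four-category answer into 'agree' / 'disagree'.

    Anything else (e.g. don't know, no answer) is returned as None so
    that it is excluded from valid percentages.
    """
    r = response.strip().lower()
    if r in AGREE:
        return "agree"
    if r in DISAGREE:
        return "disagree"
    return None


def valid_percentages(responses):
    """Percentages of 'agree' and 'disagree' among valid answers only."""
    valid = [r for r in map(recode, responses) if r is not None]
    if not valid:
        return {"agree": 0.0, "disagree": 0.0}
    counts = Counter(valid)
    return {k: 100.0 * counts[k] / len(valid) for k in ("agree", "disagree")}


# Made-up answers, for illustration only (not survey data)
sample = ["Strongly agree", "Somewhat agree", "Somewhat agree",
          "Strongly disagree", "Don't know"]
print(valid_percentages(sample))
```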

Each of these questions has been cross-tabulated with seven socio-demographic variables:

- Sex: male, female.

- Age: 15–24, 25–44, 45–59 and over 60.

- Level of education: primary or no studies, compulsory secondary studies, non-compulsory secondary studies and university studies.

- Subjective social class: upper and upper-middle class, middle class, lower-middle class and lower class.

- Ideological self-positioning: left, center and right.

- Main activity: working, retired, unemployed, student and homemaker.

- Size of residence: less than 10,000 inhabitants; 10,001 to 50,000 inhabitants; and over 50,000 inhabitants.

The analysis has two steps. First, we carried out a descriptive analysis using contingency tables (crosstabs) to relate each research question to the socio-demographic variables. To find out if the association is significant, the Chi-squared test was utilized, and if an association was found (significance equal to or less than 0.005), the corrected standardized residuals were analyzed to find out which cells differ significantly from chance. Cells marked with an asterisk (*) in the tables indicate that a significant difference exists from what would be expected if random distribution had occurred.1 As it is recommended not to consider those cells with a very low number of cases, only those with at least 20 cases have been taken into account. Second, a multivariate analysis was carried out using a logistic regression for each of the questions analyzed, to investigate what factors influence the probability of agreeing that AI helps produce fake news, distribute it or combat it. This technique allows the effect of each independent variable to be assessed while controlling for the others.
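The first step can be illustrated with a short sketch that computes the Chi-squared statistic and the corrected (adjusted) standardized residuals for a contingency table, and then flags cells following the 20-case rule described above. The counts are invented for illustration and are not survey data; the ±1.96 cut-off is a common convention and an assumption here, as are the function names. The sketch also assumes that no row or column of the table is empty.

```python
import math


def chi_square_with_residuals(table):
    """Return (chi2, degrees of freedom, adjusted standardized residuals).

    `table` is a list of rows of observed counts. Assumes every row and
    column total is greater than zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    residuals = []
    for i, row in enumerate(table):
        res_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
            # Variance term used for the adjusted standardized residual
            variance = (expected
                        * (1 - row_totals[i] / n)
                        * (1 - col_totals[j] / n))
            res_row.append((observed - expected) / math.sqrt(variance))
        residuals.append(res_row)
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df, residuals


def flag_cells(table, residuals, threshold=1.96, min_cases=20):
    """Cells (row, col) whose residual exceeds the threshold in absolute
    value and that contain at least `min_cases` observations."""
    return [(i, j)
            for i, row in enumerate(table)
            for j, observed in enumerate(row)
            if observed >= min_cases and abs(residuals[i][j]) > threshold]


# Invented counts: rows = three education levels, columns = agree / disagree
example = [[70, 30], [85, 15], [90, 10]]
chi2, df, res = chi_square_with_residuals(example)
print(f"chi2 = {chi2:.2f}, df = {df}")
print("cells to mark with an asterisk:", flag_cells(example, res))
```

The p-value is left out on purpose to keep the sketch within the standard library; in practice it would be obtained from the Chi-squared distribution with `df` degrees of freedom using a statistics package.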

3. Results

Regarding the first objective (studying the degree of awareness of AI), 91.9% of the population surveyed said they had heard of it, while 8.1% had not. There are no significant differences in the degree of awareness of AI by sex, subjective social class or ideology (Table 1). However, there are relevant differences by age group: from the age of 60, one in five people admit to not having heard of AI. There are also differences corresponding to the level of education, since AI is less well-known among those who did not finish their studies or have primary or compulsory secondary education. Those who are studying declare themselves more aware of it (over 97%), while the retired are the group showing the lowest level of awareness. Finally, those who live in large cities (more than 50,000 inhabitants) declare themselves to have greater awareness of it, in contrast to those who live in municipalities with fewer than 10,000 inhabitants. These results confirm our first hypothesis.

Table 1.

Degree of awareness of artificial intelligence according to various socio-demographic variables (valid %).

Regarding the second and third objectives (analyzing public perception of the influence of AI on the creation of fake news and the fight against disinformation and observing whether there are differences according to the socio-demographic variables studied), Figure 1 and Table 2 offer interesting results. In total, 86% of the population agree that AI makes it easier to produce fake news, and a very similar number, 84.1%, consider that it helps to distribute it. There are no significant differences by sex, age, social class, type of activity or size of municipality, and this opinion is widespread among all population groups. The only relevant difference is found in the level of education: those with compulsory secondary education or lower show a lesser degree of agreement with the statement about the production of fake news, while those with non-compulsory secondary education are more likely to think that AI makes it easier to produce and distribute fake news.

Figure 1.

Impact of AI on disinformation.

Table 2.

Opinion on the impact of artificial intelligence on disinformation according to various socio-demographic variables (valid %).

Unlike the negative assessments of the impact of artificial intelligence on disinformation, which are fairly evenly distributed across the population, there are significant differences regarding the opinion that AI can be a tool to help citizens detect fake news, a statement supported by 54.2% of the population surveyed. Women have a more positive view of this function of AI than men do (50.7% men versus 57.6% women), as do lower-class and left-wing people, while those who position themselves politically in the center are more critical of it (only 48.7% think it can help debunk hoaxes). In this case, there are no significant differences by level of education, nor by age, type of activity or size of place of residence.

As a final step, a logistic regression analysis (Table 3) was performed to jointly analyze how the independent variables influence the probability that a person considers that AI helps to produce fake news (column 1), to distribute it (column 2) or to combat it (column 3). To see how the independent variables are associated with the dependent variable, we analyze Exp(B) (the exponentiated logistic regression coefficient, that is, the odds ratio), which represents the multiplicative change in the odds of the dependent variable associated with a one-unit change in the independent variable. As all the independent variables have been included in a dichotomous way (with values 0 and 1) and one of the categories has been taken as a reference, Exp(B) is interpreted in the following way: when the value is greater than 1, the odds that the person agrees are higher in that category than in the one taken as a reference (which appears in brackets); when the value is less than 1, the odds are lower than in the reference category. For example, analyzing Table 3, it can be seen in the third column that the odds of a person on the left considering that AI helps to dismantle hoaxes are 1.613 times those of a person in the center, while in the first column, the odds of a person with no education or with primary education considering that AI helps to produce disinformation are 0.215 times those of a person with non-compulsory secondary education.
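Because Exp(B) acts on odds rather than directly on probabilities, a small worked sketch may help in reading Table 3. It converts the reported value of 1.613 back to its coefficient B and shows what that odds ratio would imply for a given reference probability. The reference probability of 0.487 is borrowed from the descriptive share of centrists who agree and is used purely for illustration: the regression controls for other variables, so this is not a prediction from the fitted model. The helper names are hypothetical.

```python
import math


def odds(p: float) -> float:
    """Convert a probability into odds."""
    return p / (1.0 - p)


def prob(o: float) -> float:
    """Convert odds back into a probability."""
    return o / (1.0 + o)


def apply_odds_ratio(p_ref: float, odds_ratio: float) -> float:
    """Probability implied by multiplying the reference odds by Exp(B)."""
    return prob(odds(p_ref) * odds_ratio)


exp_b = 1.613            # Exp(B) reported for left vs. center (column 3)
b = math.log(exp_b)      # underlying logistic regression coefficient
p_center = 0.487         # illustrative reference probability (assumption)
p_left = apply_odds_ratio(p_center, exp_b)

print(f"B = {b:.3f}")
print(f"implied probability for the left: {p_left:.3f}")
print(f"ratio of probabilities: {p_left / p_center:.3f}")
```

The ratio of probabilities obtained this way is smaller than the odds ratio itself, which is why Exp(B) is best read as a statement about odds.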

Table 3.

Logistic regressions: determinants of the opinion on the impact of artificial intelligence on disinformation.

The regression analysis shows results that largely coincide with those observed in Table 2, when each independent variable was studied separately, although there are also some differences. Of the three dependent variables analyzed, two models are significant (the production of fake news and combating it) and one is not (the distribution of fake news), so we will focus on the first two cases. Starting with the similarities between the results found in the regression in Table 3 and those included in Table 2, in both cases, there are no significant differences by gender, type of occupation or size of municipality for either of the two variables analyzed, nor are there any significant differences for age when studying the production of fake news. Finally, educational level again stands out as a relevant variable, showing that those with less than non-compulsory secondary education are less likely to indicate that AI helps to produce fake news compared to those with such education.

However, there is a difference because this group is joined by the group of people with a university education, who also have a lower level of agreement with the statement. The other significant difference is that, in the case of the variable on fighting fake news, age shows significant differences in the case of the 45–59 age group, which agrees more strongly with the statement than those aged 25–44. Ideology is also relevant in this case, since, as mentioned above, people on the left agree more with the role of AI in combating disinformation compared to those in the centrist group. Finally, subjective social class is the only variable that is significant in both models: compared to middle-class people, lower-middle- and lower-class people are more likely to think that AI helps to produce false information, while in the case of helping to combat it, upper-, upper-middle- and lower-class people agree more with this role than the middle class.

One interesting and novel result of this study is that there is a positive association between the two questions about the negative impact of AI on disinformation and the question about AI’s positive impact: those who tend to agree that AI helps produce and distribute fake news also agree that it can help debunk hoaxes (association data are available upon request). The relationship holds both with the original four categories and with the dichotomous ones. It would seem then that the perception of AI regarding false content is complex and nuanced, given that there are individuals who see positive and negative aspects at the same time.

When studying the Andalusian public’s perception of the impact of artificial intelligence on disinformation, it must be borne in mind that around 11% of the population surveyed indicated that they did not know (around 10%) or did not want to answer (1.5%). The figures are higher among the population aged 60 and over, as well as among the retired (around one in three) and are also significantly higher than the average among those with a maximum of primary education (one in four chose one of these two options) and people dedicated to homemaking (one in five). At the other extreme, those who opt for these replies are fewer than 5% among the population aged 15 to 44, among people with university studies and among students. The data shown refer only to those who have stated that they have an opinion on the consequences of AI in the production and distribution of fake news and in the fight against it.

4. Discussion and Conclusions

This paper offers one of the first empirical measurements of how citizens perceive the relationship between AI and false information. Three objectives and two hypotheses were established for the study, which were addressed through a survey conducted with 1550 subjects in Andalusia in December 2023.

The first aim was to determine the general population’s degree of awareness of AI (Table 1). The result is remarkably high considering its recent popularization: 91.9% have heard of it. It is interesting that no marked differences are found based on age, subjective social class or ideology. This gives an idea of the transversality and speed with which this technological advance has spread and been perceived by public opinion.

However, the data show that people over 60, with a lower level of education, who are retired or who live in small towns are more likely to be unaware of AI. These profiles allow us to begin to define the groups that may present, due to lack of awareness, a higher degree of vulnerability to this phenomenon as well as to information disorder (Herrero-Curiel & La-Rosa, 2022). These groups, together with people dedicated to homemaking, had a lower response rate to the questions posed; therefore, they should be the focus of literacy strategies intended to meet this challenge. Education in media and information literacy (MIL) is crucial. The integration of AI into media literacy is essential to teach how to identify false content that can be created with current information tools, as well as to provide basic notions of verification (Deuze & Beckett, 2022).

The second objective was to look into public perception of the influence of AI on the creation and distribution of fake news or, on the contrary, of its ability to offer solutions that help citizens in the struggle against fake content (Figure 1). The balance leans towards the negative, which is hardly surprising in a general context of mistrust towards traditional social institutions (Edelman, 2023). A total of 86% believe that AI will be used to create fake news, a figure 12 points higher than the average result of other international surveys (Ipsos, 2023), and 84.1% think that AI will help to distribute it. No differences are found by sex, subjective social class, age or ideology, although the figure increases among people with a higher level of education (Table 2). Just as level of education is a proven factor in perceiving disinformation (Martínez-Costa et al., 2023), it also appears to be a determining factor in holding a pessimistic view of AI’s capacity to worsen information disorder.

At the other end of the scale, 54.2% of respondents believe that AI will help detect fake news. In net terms, therefore, pessimism carries the day. Differences are observed between the perception of women and men, as the former place greater trust in the help that AI can provide in fighting disinformation. There is also a positive relationship between trust and other socio-demographic variables: social class—lower-class people—and ideology—left wing. These last pieces of data are significant since it has been proven that people who identify with the right wing are more likely to share false content, and therefore, are more vulnerable to this problem (Nikolov et al., 2021).

Finally, the multivariate analysis using logistic regression (Table 3) yields results generally similar to those in Table 2 and indicates that the socio-demographic variables commonly used in social research do not have great predictive capacity for opinions about artificial intelligence. Educational level is a relevant factor only in the case of the production of fake news, as the group most likely to mention this relationship is that with non-compulsory secondary education.

Of the other variables analyzed, only the 45–59 age group (vs. 25–44) and left-wingers (vs. centrists) are more likely to see AI as a tool to combat fake news. Subjective social class emerges as a relevant variable, with lower-class people being more likely to believe both that AI helps to produce false information and that it helps to combat it, an example of how complex it is to study this phenomenon.
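
The contrasts reported here (for example, 45–59 vs. 25–44, or left-wingers vs. centrists) are comparisons of one category against a reference category within a logistic regression. The following minimal sketch shows the idea for a single dummy variable, fitted by Newton-Raphson in plain Python. It is an assumption-laden illustration, not the model behind Table 3, which includes several predictors at once; the counts and the function name `fit_logit_one_dummy` are hypothetical.

```python
import math

def fit_logit_one_dummy(y, x, iterations=25):
    """Fit logit P(y=1) = b0 + b1*x by Newton-Raphson, where x is a 0/1 dummy.

    x = 0 marks the reference category and x = 1 the comparison category,
    so exp(b1) is the odds ratio of the comparison vs. the reference group.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for yi, xi in zip(y, x):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p              # gradient w.r.t. the intercept
            g1 += (yi - p) * xi       # gradient w.r.t. the dummy coefficient
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: beta += H^{-1} g, with H the 2x2 information matrix.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Hypothetical counts, for illustration only (not the survey data):
# reference group: 20 agree, 30 disagree; comparison group: 30 agree, 20 disagree.
x = [0] * 50 + [1] * 50
y = [1] * 20 + [0] * 30 + [1] * 30 + [0] * 20
b0, b1 = fit_logit_one_dummy(y, x)
odds_ratio = math.exp(b1)  # equals the 2x2 odds ratio (30*30)/(20*20) = 2.25
```

With a single dummy the fitted odds ratio coincides with the one computed directly from the 2×2 table; in a multivariate model, each coefficient is instead adjusted for the other predictors.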

A possible explanation for the low predictive capacity of the models is that they include only those who answered the question and, as mentioned above, there are large differences in the non-response rate between social categories. It seems, therefore, that the socio-demographic variables are more related to having sufficient knowledge to hold an informed opinion on AI than to the specific assessment of AI, which is quite homogeneous across the population. The second hypothesis (The social groups most exposed to disinformation will present a more negative view of the impact of AI on the production and distribution of fake news or their ability to combat it) is only partly confirmed.

It is interesting to contrast these data, which focus on the relationship between disinformation and artificial intelligence, with more general questions about the social effects of AI. The CIS study “Perception of Science and Technology” (2023) shows that 76.3% of the population agree that AI benefits society, with greater optimism among those under 34 years of age and those with higher education. On the other hand, 69.9% consider it a danger, without major differences by sex and age, although people with higher education tend to perceive danger to a greater extent. Several years earlier, the CIS showed that an overwhelming majority of citizens, 80.7%, agreed that AI and robotics will eliminate more jobs than they create (Centro de Investigaciones Sociológicas, 2017). These data show, on the one hand, that public opinion does perceive the complexity of the social effects that AI may have, both beneficial and harmful, and, on the other, that when people are questioned about specific aspects (jobs, disinformation), negative perceptions are more widespread than the perception of advantages in general.

Throughout these conclusions, we have analyzed the variables that predict vulnerability to artificial intelligence, confidence in its positive role in the fight against disinformation or, on the contrary, belief in its danger, as established in the third objective: observing the differences in the perception of AI and its relationship with disinformation across the socio-demographic variables analyzed. In summary, level of education is the only determining characteristic against both phenomena (Beauvais, 2022). Therefore, media literacy addressing information disorders and artificial intelligence is an urgent public need, especially for people over 60 years of age.

From a social standpoint, it is encouraging that sex is not a variable that produces markedly different values regarding these phenomena. Women are, however, more optimistic about the potential of AI to prevent information disorders, which is relevant since they are the ones who, to a greater extent, are the victims of hoaxes on social media (Queralt Jiménez, 2023).

In conclusion, the novelty of this study, within its limitations, is that it offers a first approach, based on data from a representative survey, to how citizens perceive the relationship between AI and disinformation. Given that the data come from a representative sample of Andalusia, the most populous Spanish region, it would be interesting to compare these findings with those obtained in other regions of the country or with national data, to observe similarities or differences. To the best of our knowledge, no other regional study focuses on public perception of the impact of AI on disinformation. This strengthens the novelty of our work and highlights the interest in replicating it in other Spanish regions. Moreover, the fact that this study is a first approach to the incipient phenomenon of generative AI, something accessible to all, calls for further studies in this line of research, combining quantitative and qualitative techniques. It would be interesting to consider other variables in the future beyond the classic socio-demographic ones. For instance, we could study the extent to which people use AI in their daily lives (at work, at leisure, at school, etc.), as well as their exposure to different information channels, in order to study how these affect knowledge of and opinions about AI and its relationship with disinformation.

Author Contributions

Conceptualization, L.G.-F. and L.T.; methodology, L.G.-F.; formal analysis, L.G.-F.; funding acquisition, L.G.-F., L.T. and S.B.; writing – original draft preparation, L.G.-F., L.T. and S.B.; writing – review and editing, L.G.-F., L.T. and S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Junta de Andalucía (grant number ProyExcel_00143) and University of Málaga (grant number JA.B3-23). The APC was funded by Junta de Andalucía (grant number ProyExcel_00143) and University of Málaga (grant number JA.B3-23).

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of University of Málaga (protocol code 160-2024-H, 31 October 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study. Requests to access the datasets should be directed to the authors.

Acknowledgments

This work is part of the Excellence Project “Impact of disinformation in Andalusia: transversal analysis of audiences and journalistic routines and agendas (Desinfoand)”, of the Andalusian Plan for Research, Development and Innovation (PAIDI 2020, ProyExcel_00143). In addition, it partially benefits from the project “gen-IA: Library of artificial intelligence tools for media content creation” (ref. JA.B3-23), funded by the II Research, Transfer and Scientific Dissemination Plan of the University of Málaga, and from the project “Journalistic applications of AI to mitigate disinformation: trends, uses and perceptions of professionals and audiences (DESINFOPERIA)” (PID 2023-147486OB-I00), funded by the Ministry of Science and Innovation.

Conflicts of Interest

The authors declare no conflicts of interest.

Note

1. Corrected standardized residuals are calculated by taking the cell residual (the observed value minus the value predicted if there were no relationship between the variables) and dividing it by an estimate of its standard error. The resulting residual is expressed in units of standard deviation, above the mean (positive values) or below it (negative values). If a value is greater than 1.95 or less than −1.95, the residuals are considered to differ significantly from what would be expected in a random distribution, with no relationship between the variables.
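
The calculation described in this note can be sketched as follows. This is a minimal illustration assuming the usual formula for adjusted residuals in a contingency table, where the standard error of each cell residual is estimated as the square root of expected × (1 − row share) × (1 − column share). The counts and the function name `adjusted_residuals` are hypothetical and are not taken from the survey; the 1.95 cut-off is the one stated in the note.

```python
import math

def adjusted_residuals(table):
    """Corrected (adjusted) standardized residuals for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    result = []
    for i, row in enumerate(table):
        out_row = []
        for j, observed in enumerate(row):
            # Count expected if the two variables were unrelated.
            expected = row_totals[i] * col_totals[j] / n
            # Estimated standard error of the raw residual under independence.
            se = math.sqrt(
                expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            )
            out_row.append((observed - expected) / se)
        result.append(out_row)
    return result

# Hypothetical counts, for illustration only (not the survey data):
# rows = two social groups, columns = agree / disagree.
counts = [[30, 20],
          [20, 30]]
residuals = adjusted_residuals(counts)
# Flag cells beyond the threshold given in the note.
flagged = [[abs(r) > 1.95 for r in row] for row in residuals]
```

In this example every cell has an expected count of 25 and a standard error of 2.5, so the residuals are +2 or −2 and all four cells are flagged.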

References

- Adaji, I. (2023, May 18–19). Age differences in the spread of misinformation online. European Conference on Social Media (Vol. 10, pp. 12–19), Krakow, Poland.

- Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236.

- Almenar, E., Aran-Ramspott, S., Suau, J., & Masip, P. (2021). Gender differences in tackling fake news: Different degrees of concern, but same problems. Media and Communication, 9(1), 229–238.

- Alonso, M. A., Vilares, D., Gómez-Rodríguez, C., & Vilares, J. (2021). Sentiment analysis for fake news detection. Electronics, 10(11), 1348.

- Altay, S., & Acerbi, A. (2023). People believe misinformation is a threat because they assume others are gullible. New Media & Society, 26(11), 6440–6461.

- Aparici, R., García-Marín, D., & Rincón-Manzano, L. (2019). Noticias falsas, bulos y trending topics. Anatomía y estrategias de la desinformación en el conflicto catalán. El Profesional de la Información, 28(3), e280313.

- Beatriz Fernández, C., Rodríguez-Virgili, J., & Serrano-Puche, J. (2020). Expresión de opiniones en las redes sociales: Un estudio comparado de Argentina, Chile, España y México desde la perspectiva de la espiral del silencio. Journal of Iberian and Latin American Research, 26(3), 389–406.

- Beauvais, C. (2022). Fake news: Why do we believe it? Joint Bone Spine, 89(4), 105371.

- Beckett, C., & Yaseen, M. (2023). Generando el cambio: Un informe global sobre qué están haciendo los medios con IA. Polis. Journalism at LSE. Available online: https://tinyurl.com/4spnw9xy (accessed on 27 February 2024).

- Belhadjali, M., Whaley, G., & Abbasi, S. (2019). Assigning responsibility for preventing the spread of misinformation online: Some findings on gender differences. International Journal for Innovation Education and Research, 7(5), 195–201.

- Bontridder, N., & Poullet, Y. (2021). The role of artificial intelligence in disinformation. Data & Policy, 3, e32.

- Canavilhas, J., Colussi, J., & Moura, Z.-B. (2019). Desinformación en las elecciones presidenciales 2018 en Brasil: Un análisis de los grupos familiares en WhatsApp. El Profesional de la Información, 28(5), e280503.

- Centro de Estudios Andaluces. (2024). Barómetro Andaluz. Marzo. Available online: https://tinyurl.com/53azkbwe (accessed on 10 March 2024).

- Centro de Investigaciones Sociológicas. (2017). Latinobarómetro (estudio 319). Available online: https://www.latinobarometro.org/LATDocs/F00006433-InfLatinobarometro2017.pdf (accessed on 12 March 2024).

- Centro de Investigaciones Sociológicas. (2023). Percepciones de la ciencia y la tecnología, estudio 3406. Centro de Investigaciones Sociológicas. Available online: https://tinyurl.com/mr3yrwdf (accessed on 18 March 2024).

- Deuze, M., & Beckett, C. (2022). Imagination, algorithms and news: Developing AI literacy for journalism. Digital Journalism, 10(10), 1913–1918.

- Díez Garrido, M., Renedo Farpón, C., & Cano-Orón, L. (2021). La desinformación en las redes de mensajería instantánea. Estudio de las fake news en los canales relacionados con la ultraderecha española en Telegram. Miguel Hernández Communication Journal, 12, 467–489.

- Duberry, J. (2022). AI and the weaponization of information: Hybrid threats against trust between citizens and democratic institutions. In Artificial intelligence and democracy (pp. 158–194). Edward Elgar Publishing. Available online: https://tinyurl.com/2s3jt4u4 (accessed on 22 February 2024).

- Edelman. (2023). Edelman trust barometer. Navigating a polarized world. Available online: https://tinyurl.com/adzr5kk5 (accessed on 22 February 2024).

- European Commission. (2018a). Flash Eurobarometer 464: Fake news and disinformation online. European Commission. Available online: https://tinyurl.com/2fx8sy5v (accessed on 21 March 2024).

- European Commission. (2018b). Tackling online disinformation: A European approach. (Communication from the commission to the European parliament, the council, the European economic and social committee and the committee of the regions. COM. (2018) 236). Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:52018DC0236 (accessed on 15 March 2024).

- European Commission. (2021). Standard Eurobarometer 94 – Winter 2020–2021. European Commission. Available online: https://tinyurl.com/mpezcnhz (accessed on 15 February 2022).

- European Commission. (2022). Flash Eurobarometer media & news survey. Comisión Europea. Available online: https://tinyurl.com/5bh5sst3 (accessed on 10 March 2024).

- García-Faroldi, L., & Blanco-Castilla, E. (2023). Noticias falsas y percepción ciudadana: El papel del periodismo de calidad contra la desinformación. In Nuevos y viejos desafíos del periodismo (pp. 159–178). Tirant lo Blanch.

- Garriga, M., Ruiz-Incertis, R., & Magallón-Rosa, R. (2024). Artificial intelligence, disinformation and media literacy proposals around deepfakes. Observatorio (OBS*).

- Gawronski, B., Ng, N. L., & Luke, D. M. (2023). Truth sensitivity and partisan bias in responses to misinformation. Journal of Experimental Psychology: General, 152(8), 2205–2236.

- Greene, C. M., Nash, R. A., & Murphy, G. (2021). Misremembering Brexit: Partisan bias and individual predictors of false memories for fake news stories among Brexit voters. Memory, 29(5), 587–604.

- Hajli, N., Saeed, U., Tajvidi, M., & Shirazi, F. (2022). Social bots and the spread of disinformation in social media: The challenges of artificial intelligence. British Journal of Management, 33(3), 1238–1253.

- Herrero-Curiel, E., & La-Rosa, L. (2022). Secondary education students and media literacy in the age of disinformation. Comunicar, 30(73), 95–106.

- Ipsos. (2023). Global views on A.I. and disinformation. Perception of disinformation risks in the age of generative A.I. Ipsos. Available online: https://tinyurl.com/mrxh6hvj (accessed on 15 January 2024).

- Islas, O., Gutiérrez, F., & Arribas, A. (2024). Artificial intelligence, a powerful battering ram in the disinformation industry. New Explorations, 4(1), 1111639ar.

- Karinshak, E., & Jin, Y. (2023). AI-driven disinformation: A framework for organizational preparation and response. Journal of Communication Management, 27(4), 539–562.

- Kennedy, H., & Wanless, L. (2022). Artificial intelligence. In The Routledge handbook of digital sport management. Routledge.

- Lian, Y., Tang, H., Xiang, M., & Dong, X. (2024). Public attitudes and sentiments toward ChatGPT in China: A text mining analysis based on social media. Technology in Society, 76, 102442.

- López-López, P. C., Díez, N. L., & Puentes-Rivera, I. (2022). La inteligencia artificial contra la desinformación: Una visión desde la comunicación política. Razón y Palabra, 25(112), 5–11.

- López López, P. C., Mila Maldonado, A., & Ribeiro, V. (2023). La desinformación en las democracias de América Latina y de la península ibérica: De las redes sociales a la inteligencia artificial (2015–2022). Uru: Revista de Comunicación y Cultura, 8, 69–89.

- Martínez-Costa, M.-P., López-Pan, F., Buslón, N., & Salaverría, R. (2023). Nobody-fools-me perception: Influence of age and education on overconfidence about spotting disinformation. Journalism Practice, 17(10), 2084–2102.

- Masip, P., Suau, J., & Ruiz-Caballero, C. (2020). Percepciones sobre medios de comunicación y desinformación: Ideología y polarización en el sistema mediático español. El Profesional de la Información, 29, e290527.

- Montiel-Torres, F. (2024). Desórdenes informativos. Hombres y mujeres ante la información falsa: Un análisis regional. In J. S. Sánchez, & D. Lavilla Muñoz (Eds.), Conexiones digitales: La revolución de la comunicación en la sociedad contemporánea (pp. 669–686). McGraw Hill.

- Nikolov, D., Flammini, A., & Menczer, F. (2021). Right and left, partisanship predicts (asymmetric) vulnerability to misinformation. Harvard Kennedy School Misinformation Review.

- Palomo, B., Blanco, S., & Sedano, J. (2024). Intelligent networks for real-time data: Solutions for tracking disinformation. In J. Sixto-García, A. Quian, A.-I. Rodríguez-Vázquez, A. Silva-Rodríguez, & X. Soengas-Pérez (Eds.), Journalism, digital media and the fourth industrial revolution (pp. 41–54). Springer Nature Switzerland.

- Paniagua-Rojano, F. J., & Rúas-Araujo, J. (2023). Aproximación al mapa sobre la investigación en desinformación y verificación en España: Estado de la cuestión. Revista ICONO 14. Revista Científica de Comunicación y Tecnologías Emergentes, 21(1), 1.

- Peña-Fernández, S., Peña-Alonso, U., & Eizmendi-Iraola, M. (2023). El discurso de los periodistas sobre el impacto de la inteligencia artificial generativa en la desinformación. Estudios Sobre el Mensaje Periodístico, 29(4), 833–841.

- Pérez Escoda, A., Barón-Dulce, G., & Rubio-Romero, J. (2021). Mapeo del consumo de medios en los jóvenes: Redes sociales, «fake news» y confianza en tiempos de pandemia. Index Comunicación, 11(2), 187–208.

- Prull, M. W., & Yockelson, M. B. (2013). Adult age-related differences in the misinformation effect for context-consistent and context-inconsistent objects. Applied Cognitive Psychology, 27(3), 384–395.

- Queralt Jiménez, A. (2023). Desinformación por razón de sexo y redes sociales [Gendered disinformation and social networks]. International Journal of Constitutional Law, 21(5), 1589–1619.

- Rao, A., Morstatter, F., & Lerman, K. (2022). Partisan asymmetries in exposure to misinformation (version 1). arXiv, arXiv:2203.01350.

- Rodríguez-Virgili, J., Serrano-Puche, J., & Fernández, C. B. (2021). Digital disinformation and preventive actions: Perceptions of users from Argentina, Chile, and Spain. Media and Communication, 9(1), 323–337.

- Salaverría, R., Buslón, N., López-Pan, F., León, B., López-Goñi, I., & Erviti, M.-C. (2020). Desinformación en tiempos de pandemia: Tipología de los bulos sobre la COVID-19. El Profesional de la Información, 29(3), e290315.

- Santos, F. C. C. (2023). Artificial intelligence in automated detection of disinformation: A thematic analysis. Journalism and Media, 4(2), 679–687.

- Sánchez-Gonzales, H.-M., & Sánchez-González, M. (2020). Bots conversacional en la información política desde la experiencia de los usuarios: Politibot. Communication & Society, 33(4), 155–168.

- Sánchez-Holgado, P., Calderón, C. A., & Blanco-Herrero, D. (2022). Conocimiento y actitudes de la ciudadanía española sobre el big data y la inteligencia artificial. Revista ICONO 14. Revista Científica de Comunicación y Tecnologías Emergentes, 20(1).

- Selwyn, N., & Gallo Cordoba, B. (2022). Australian public understandings of artificial intelligence. AI & SOCIETY, 37(4), 1645–1662.

- Shin, D. (2024). Misinformation and inoculation: Algorithmic inoculation against misinformation resistance. In D. Shin (Ed.), Artificial misinformation: Exploring human-algorithm interaction online (pp. 197–226). Springer Nature Switzerland.

- Shu, K., Sliva, A., Wang, S., Tang, J., & Liu, H. (2017). Fake news detection on social media: A data mining perspective. ACM SIGKDD Explorations Newsletter, 19(1), 22–36.

- Simon, F. M. (2024). Artificial intelligence in the news: How AI retools, rationalizes, and reshapes. Journalism and the Public Arena, 4(2), 149–152. Available online: https://tinyurl.com/25988sce (accessed on 3 December 2024).

- Teruel, L. (2023). Increasing political polarization with disinformation: A comparative analysis of the European quality press. El Profesional de la Información, 32(6), e320612.

- Vara-Miguel, A., Amoedo-Casais, A., Moreno-Moreno, E., Negredo-Bruna, S., & Kaufmann-Argueta, J. (2022). Digital news report España 2022. Reconectar con las audiencias de noticias. Servicio de Publicaciones de la Universidad de Navarra.

- World Economic Forum. (2024). The global risks report 2024. World Economic Forum. Available online: https://tinyurl.com/3224ujt6 (accessed on 18 March 2024).

- Yan, H. Y., Morrow, G., Yang, K.-C., & Wihbey, J. (2024). The origin of public concerns over AI-supercharging misinformation in the 2024 US presidential election. OSF.

- Zozaya-Durazo, L. D., Sádaba-Chalezquer, C., & Feijoo-Fernández, B. (2023). Fake or not, I’m sharing it: Teen perception about disinformation in social networks. Young Consumers, 25(4), 425–438.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).