Automatic Facial Palsy, Age and Gender Detection Using a Raspberry Pi

Abstract

1. Introduction

2. Materials and Methods

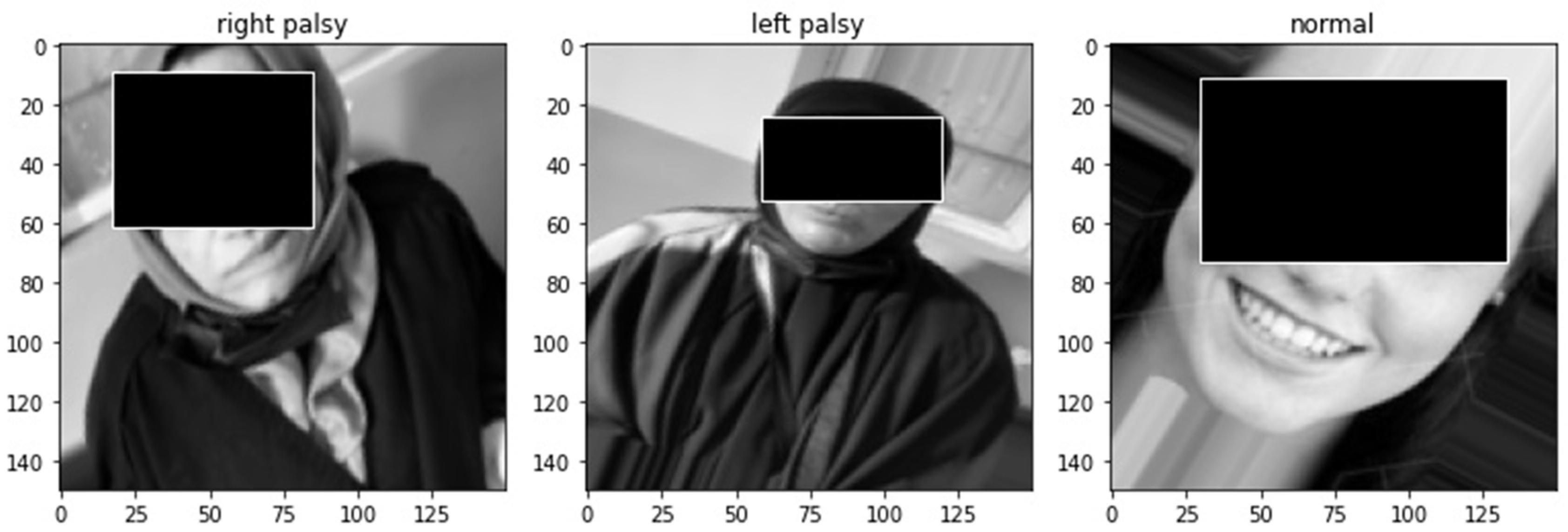

2.1. Research Ethics and Participants

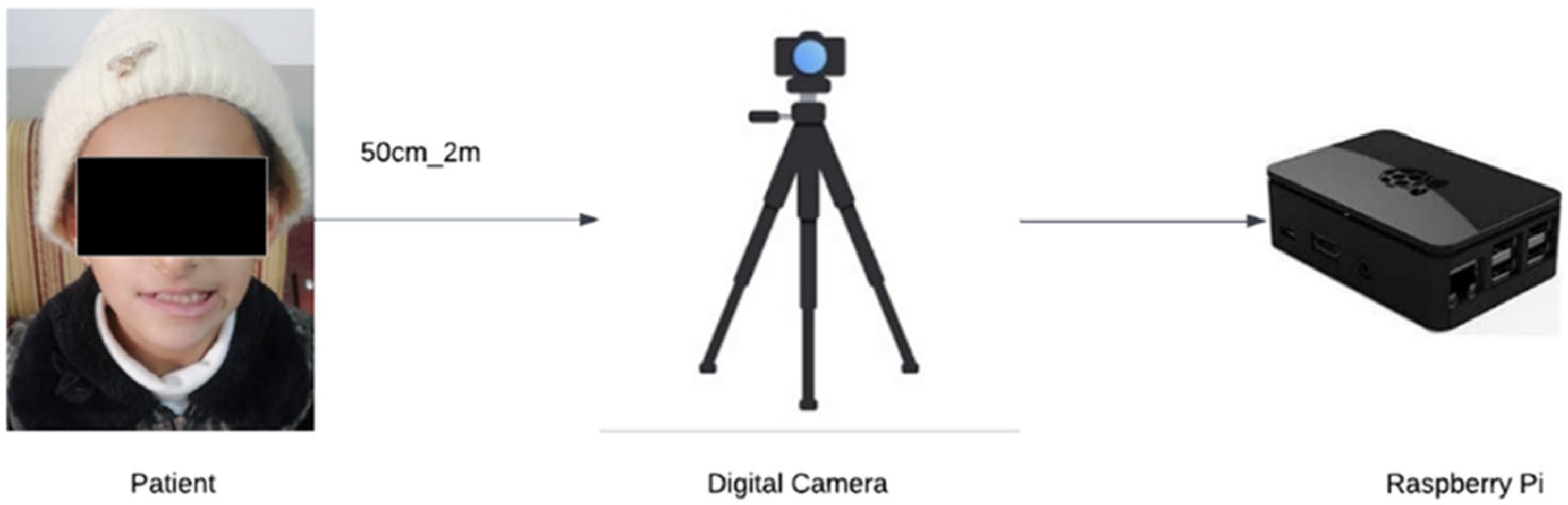

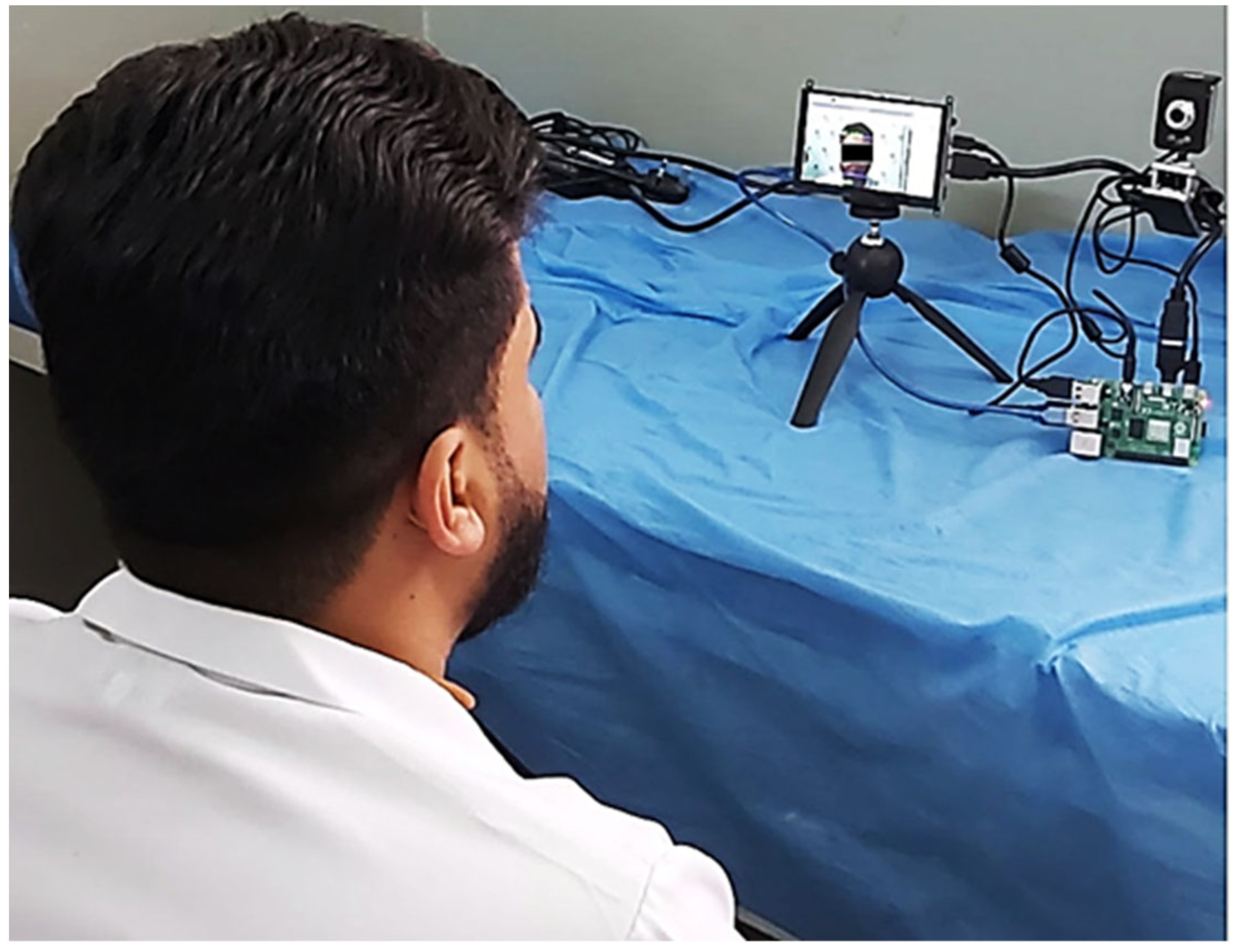

2.2. Experimental Setup

2.3. Hardware

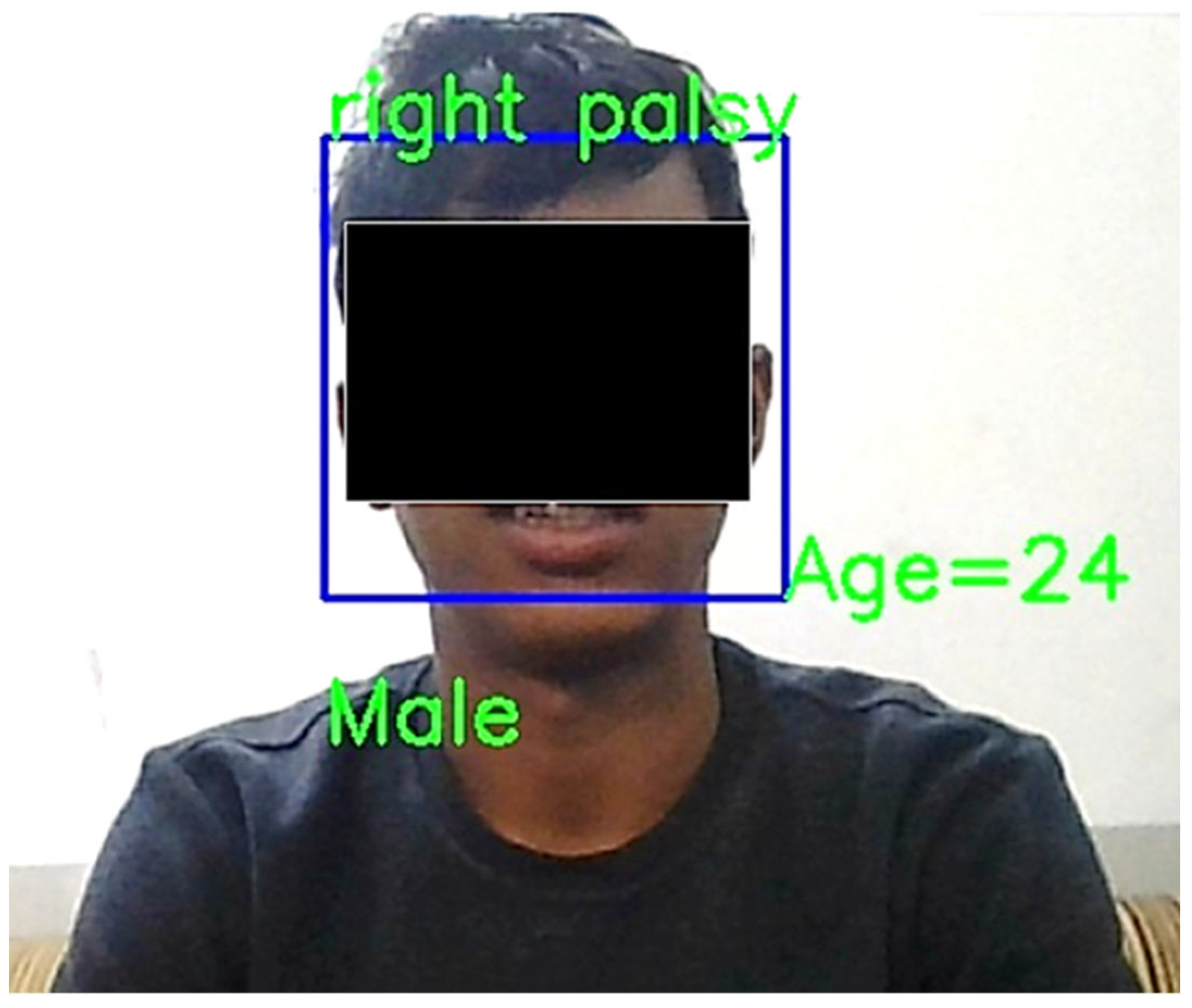

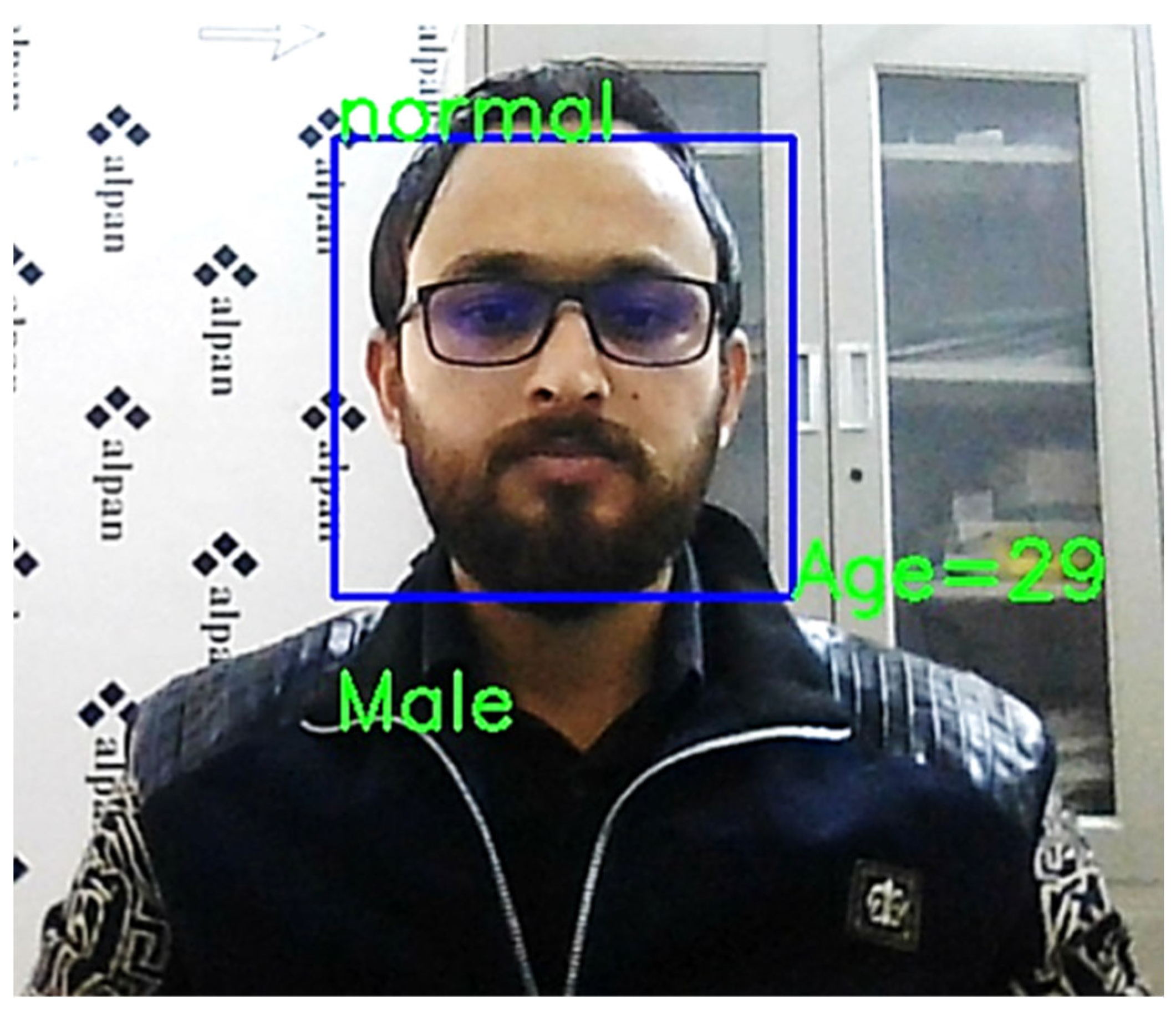

2.4. System Design

2.5. Feature Extraction

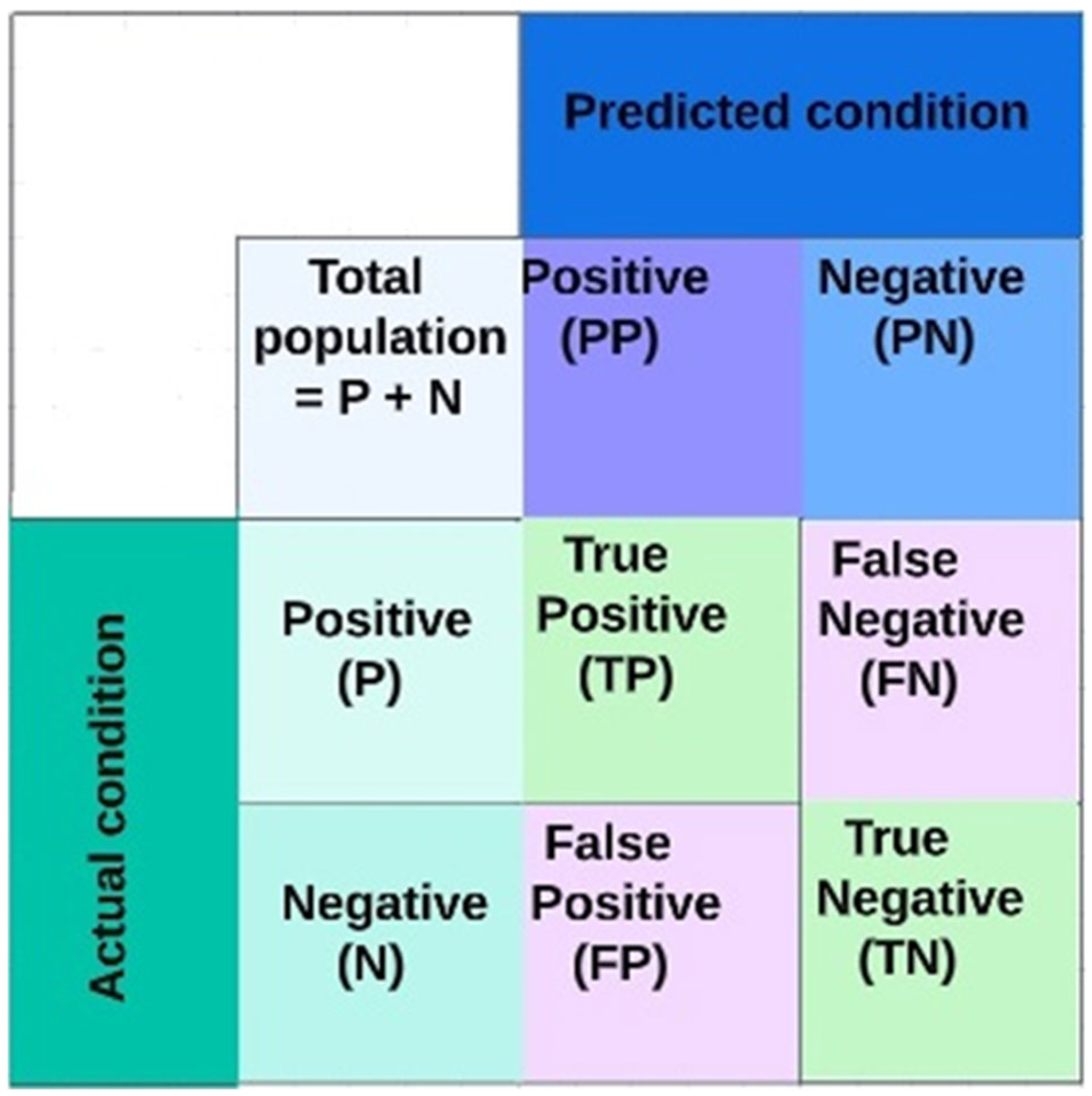

3. Evaluation Metrics

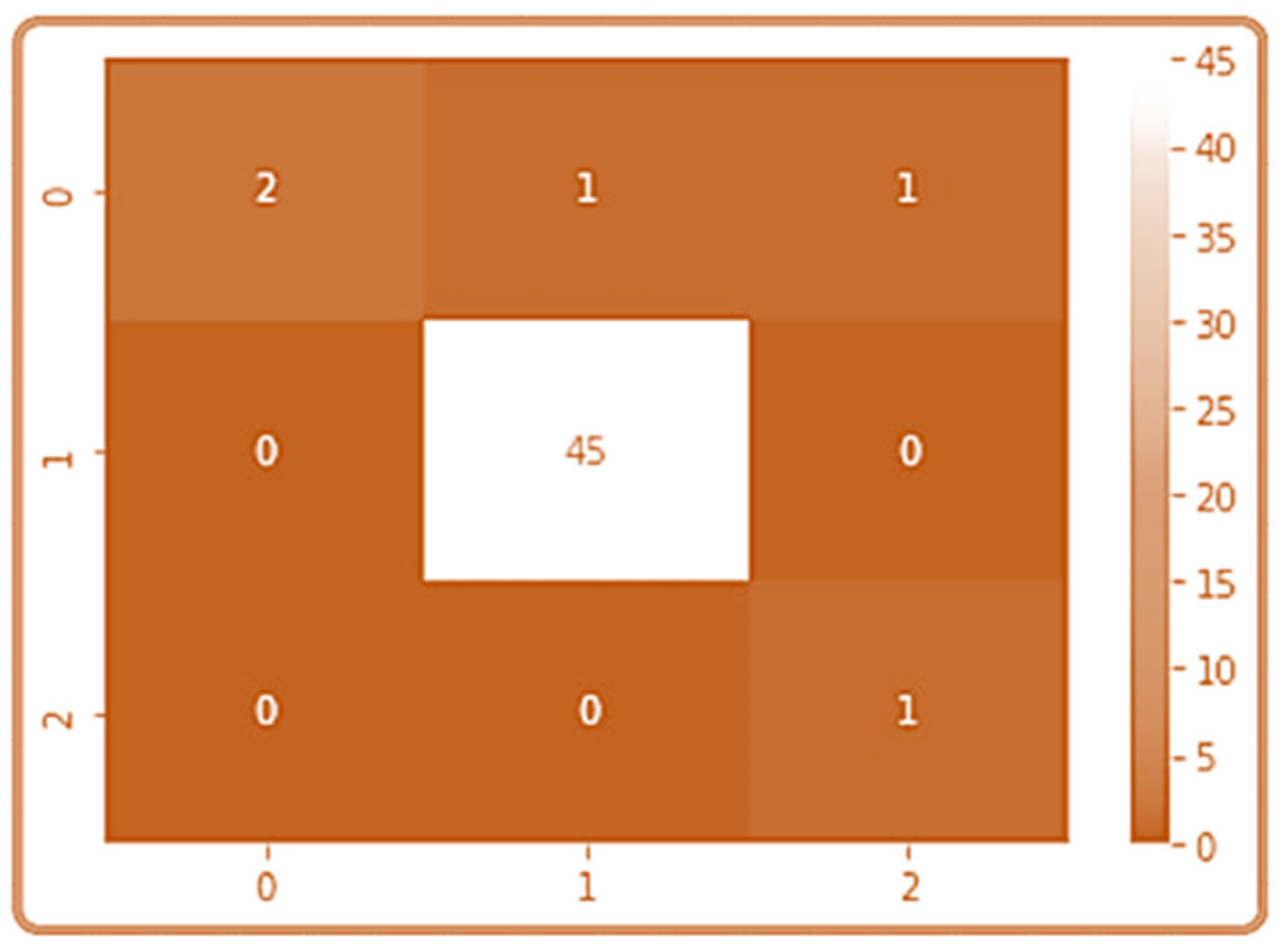

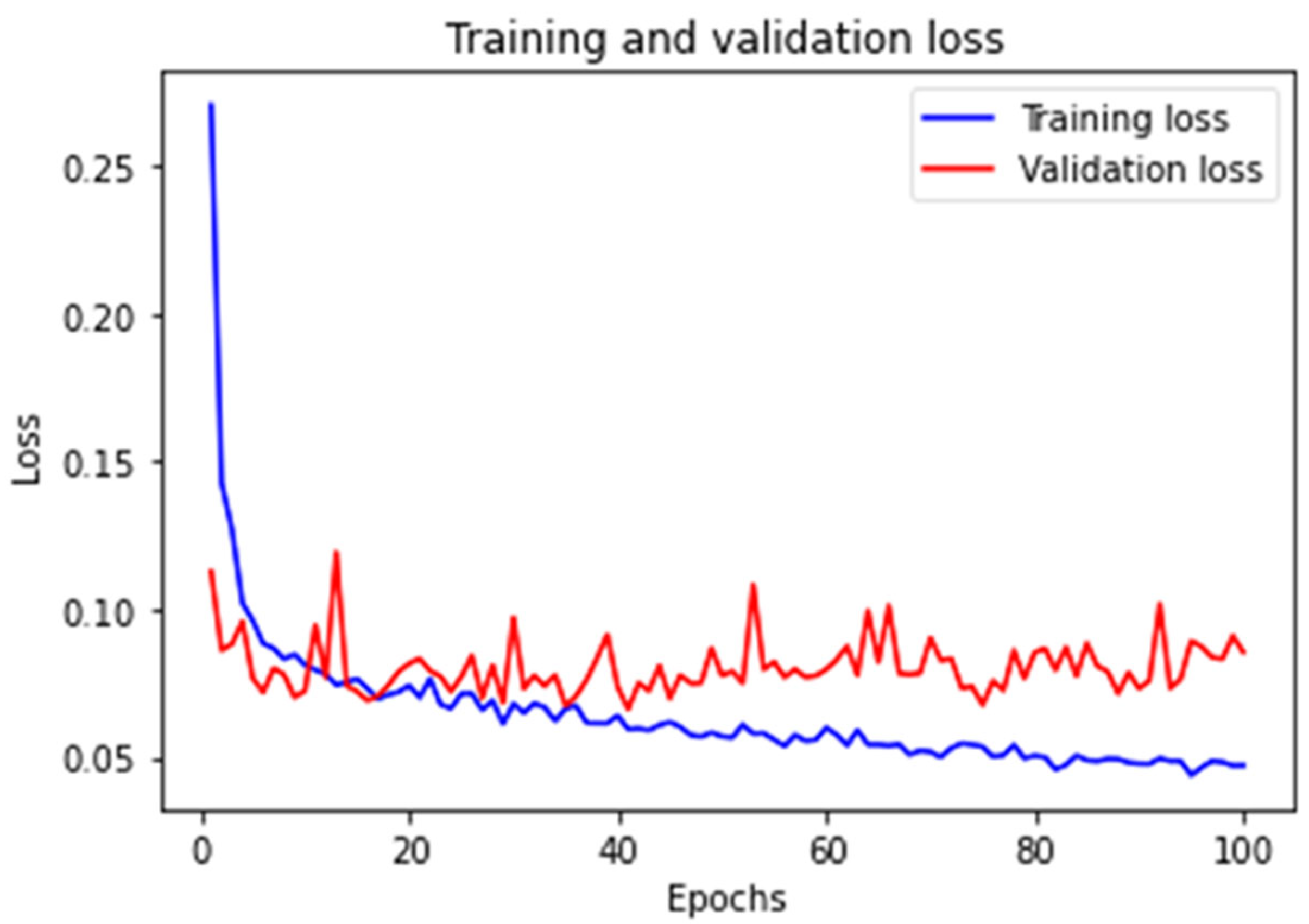
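The body of this section survives only as image placeholders, so the paper's exact metric definitions cannot be recovered from the extracted text. As a hedged sketch, the code below assumes the standard binary confusion-matrix formulation (the confusion matrix is discussed in [42,43] and the Matthews correlation coefficient in [41]). It shows how accuracy, precision, recall, specificity, F1 and MCC follow from true/false positive and negative counts. The function name `confusion_metrics`, the return-value keys, and the choice to return 0.0 for an undefined ratio are illustrative assumptions, not the authors' code.

```python
import math


def confusion_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts.

    Hypothetical helper for illustration only. Any ratio whose denominator
    is zero is reported as 0.0 (an assumed convention, not from the paper).
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0          # sensitivity
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    # Matthews correlation coefficient: uses all four cells of the matrix.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
        "mcc": mcc,
    }


if __name__ == "__main__":
    # Made-up counts for illustration; these are not results from the paper.
    m = confusion_metrics(tp=40, fp=10, fn=10, tn=40)
    print(m["accuracy"], m["mcc"])  # 0.8 0.6
```

MCC is included alongside accuracy because, unlike accuracy, it stays informative when the classes are imbalanced, which is the argument made in [41].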

4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Barbosa, J.; Lee, K.; Lee, S.; Lodhi, B.; Cho, J.-G.; Seo, W.-K.; Kang, J. Efficient quantitative assessment of facial paralysis using iris segmentation and active contour-based key points detection with hybrid classifier. BMC Med. Imaging 2016, 16, 23.

2. Baugh, R.F.; Basura, G.J.; Ishii, L.E.; Schwartz, S.R.; Drumheller, C.M.; Burkholder, R.; Deckard, N.A.; Dawson, C.; Driscoll, C.; Gillespie, M.B. Clinical practice guideline: Bell’s palsy. Otolaryngol. Head Neck Surg. 2013, 149, S1–S27.

3. Amsalam, A.S.; Al-Naji, A.; Daeef, A.Y.; Chahl, J. Computer Vision System for Facial Palsy Detection. J. Tech. 2023, 5, 44–51.

4. Ahmed, A. When is facial paralysis Bell palsy? Current diagnosis and treatment. Cleve. Clin. J. Med. 2005, 72, 398–401.

5. Movahedian, B.; Ghafoornia, M.; Saadatnia, M.; Falahzadeh, A.; Fateh, A. Epidemiology of Bell’s palsy in Isfahan, Iran. Neurosci. J. 2009, 14, 186–187.

6. Szczepura, A.; Holliday, N.; Neville, C.; Johnson, K.; Khan, A.J.K.; Oxford, S.W.; Nduka, C. Raising the digital profile of facial palsy: National surveys of patients’ and clinicians’ experiences of changing UK treatment pathways and views on the future role of digital technology. J. Med. Internet Res. 2020, 22, e20406.

7. Chiesa-Estomba, C.M.; Echaniz, O.; Suarez, J.A.S.; González-García, J.A.; Larruscain, E.; Altuna, X.; Medela, A.; Graña, M. Machine learning models for predicting facial nerve palsy in parotid gland surgery for benign tumors. J. Surg. Res. 2021, 262, 57–64.

8. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

9. Lindeberg, T. Scale invariant feature transform. DiVA 2012, 7, 10491.

10. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

11. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

12. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

13. O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep learning vs. traditional computer vision. In Proceedings of the Science and Information Conference, Las Vegas, NV, USA, 25–26 April 2019; pp. 128–144.

14. Soo, S. Object detection using Haar-cascade Classifier. Inst. Comput. Sci. Univ. Tartu 2014, 2, 1–12.

15. Dong, J.; Ma, L.; Li, Q.; Wang, S.; Liu, L.-a.; Lin, Y.; Jian, M. An approach for quantitative evaluation of the degree of facial paralysis based on salient point detection. In Proceedings of the 2008 International Symposium on Intelligent Information Technology Application Workshops, Shanghai, China, 21–22 December 2008; pp. 483–486.

16. Azoulay, O.; Ater, Y.; Gersi, L.; Glassner, Y.; Bryt, O.; Halperin, D. Mobile application for diagnosis of facial palsy. In Proceedings of the 2nd International Conference on Mobile and Information Technologies in Medicine, Prague, Czech Republic, 20 November 2014.

17. Haase, D.; Minnigerode, L.; Volk, G.F.; Denzler, J.; Guntinas-Lichius, O. Automated and objective action coding of facial expressions in patients with acute facial palsy. Eur. Arch. Oto-Rhino-Laryngol. 2015, 272, 1259–1267.

18. Ngo, T.H.; Seo, M.; Matsushiro, N.; Xiong, W.; Chen, Y.-W. Quantitative analysis of facial paralysis based on limited-orientation modified circular Gabor filters. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 349–354.

19. Wang, T.; Zhang, S.; Dong, J.; Liu, L.a.; Yu, H. Automatic evaluation of the degree of facial nerve paralysis. Multimed. Tools Appl. 2016, 75, 11893–11908.

20. Codari, M.; Pucciarelli, V.; Stangoni, F.; Zago, M.; Tarabbia, F.; Biglioli, F.; Sforza, C. Facial thirds–based evaluation of facial asymmetry using stereophotogrammetric devices: Application to facial palsy subjects. J. Cranio-Maxillofac. Surg. 2017, 45, 76–81.

21. Storey, G.; Jiang, R.; Bouridane, A. Role for 2D image generated 3D face models in the rehabilitation of facial palsy. Healthc. Technol. Lett. 2017, 4, 145–148.

22. Storey, G.; Jiang, R.; Keogh, S.; Bouridane, A.; Li, C.-T. 3DPalsyNet: A facial palsy grading and motion recognition framework using fully 3D convolutional neural networks. IEEE Access 2019, 7, 121655–121664.

23. Wang, T.; Zhang, S.; Liu, L.A.; Wu, G.; Dong, J. Automatic facial paralysis evaluation augmented by a cascaded encoder network structure. IEEE Access 2019, 7, 135621–135631.

24. Barbosa, J.; Seo, W.-K.; Kang, J. paraFaceTest: An ensemble of regression tree-based facial features extraction for efficient facial paralysis classification. BMC Med. Imaging 2019, 19, 30.

25. Jiang, C.; Wu, J.; Zhong, W.; Wei, M.; Tong, J.; Yu, H.; Wang, L. Automatic facial paralysis assessment via computational image analysis. J. Healthc. Eng. 2020, 2020, 2398542.

26. Barrios Dell’Olio, G.; Sra, M. FaraPy: An Augmented Reality Feedback System for Facial Paralysis using Action Unit Intensity Estimation. In Proceedings of the 34th Annual ACM Symposium on User Interface Software and Technology, Online, 10–14 October 2021; pp. 1027–1038.

27. Liu, X.; Wang, Y.; Luan, J. Facial paralysis detection in infrared thermal images using asymmetry analysis of temperature and texture features. Diagnostics 2021, 11, 2309.

28. Nguyen, D.-P.; Ho Ba Tho, M.-C.; Dao, T.-T. Enhanced facial expression recognition using 3D point sets and geometric deep learning. Med. Biol. Eng. Comput. 2021, 59, 1235–1244.

29. Parra-Dominguez, G.S.; Sanchez-Yanez, R.E.; Garcia-Capulin, C.H. Facial paralysis detection on images using key point analysis. Appl. Sci. 2021, 11, 2435.

30. Zhang, Y.; Ding, L.; Xu, Z.; Zha, H.; Tang, X.; Li, C.; Xu, S.; Yan, Z.; Jia, J. The Feasibility of An Automatical Facial Evaluation System Providing Objective and Reliable Results for Facial Palsy. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 1680–1686.

31. Vletter, C.; Burger, H.; Alers, H.; Sourlos, N.; Al-Ars, Z. Towards an Automatic Diagnosis of Peripheral and Central Palsy Using Machine Learning on Facial Features. arXiv 2022, arXiv:2201.11852.

32. Kaggle. FER-2013. Available online: https://www.kaggle.com/msambare/fer2013 (accessed on 5 August 2022).

33. Chandaliya, P.K.; Kumar, V.; Harjani, M.; Nain, N. SCDAE: Ethnicity and gender alteration on CLF and UTKFace dataset. In Proceedings of the International Conference on Computer Vision and Image Processing, Jaipur, India, 27–29 September 2019; pp. 294–306.

34. Zahara, L.; Musa, P.; Wibowo, E.P.; Karim, I.; Musa, S.B. The facial emotion recognition (FER-2013) dataset for prediction system of micro-expressions face using the convolutional neural network (CNN) algorithm based Raspberry Pi. In Proceedings of the 2020 Fifth International Conference on Informatics and Computing (ICIC), Gorontalo, Indonesia, 3–4 November 2020; pp. 1–9.

35. Liu, Y.; Xu, Z.; Ding, L.; Jia, J.; Wu, X. Automatic Assessment of Facial Paralysis Based on Facial Landmarks. In Proceedings of the 2021 IEEE 2nd International Conference on Pattern Recognition and Machine Learning (PRML), Chengdu, China, 16–18 July 2021; pp. 162–167.

36. Kumar, G.; Bhatia, P.K. A detailed review of feature extraction in image processing systems. In Proceedings of the 2014 Fourth International Conference on Advanced Computing & Communication Technologies, Rohtak, India, 8–9 February 2014; pp. 5–12.

37. Yustiawati, R.; Husni, N.L.; Evelina, E.; Rasyad, S.; Lutfi, I.; Silvia, A.; Alfarizal, N.; Rialita, A. Analyzing of different features using Haar cascade classifier. In Proceedings of the 2018 International Conference on Electrical Engineering and Computer Science (ICECOS), Pangkal, Indonesia, 2–4 October 2018; pp. 129–134.

38. Codeluppi, L.; Venturelli, F.; Rossi, J.; Fasano, A.; Toschi, G.; Pacillo, F.; Cavallieri, F.; Giorgi Rossi, P.; Valzania, F. Facial palsy during the COVID-19 pandemic. Brain Behav. 2021, 11, e01939.

39. Ansari, S.A.; Jerripothula, K.R.; Nagpal, P.; Mittal, A. Eye-focused Detection of Bell’s Palsy in Videos. arXiv 2022, arXiv:2201.11479.

40. Saxena, K.; Khan, Z.; Singh, S. Diagnosis of diabetes mellitus using k nearest neighbor algorithm. Int. J. Comput. Sci. Trends Technol. (IJCST) 2014, 2, 36–43.

41. Yao, J.; Shepperd, M. Assessing software defection prediction performance: Why using the Matthews correlation coefficient matters. In Proceedings of the Evaluation and Assessment in Software Engineering, Trondheim, Norway, 15–17 April 2020; pp. 120–129.

42. Visa, S.; Ramsay, B.; Ralescu, A.L.; Van Der Knaap, E. Confusion matrix-based feature selection. MAICS 2011, 710, 120–127.

43. Caelen, O. A Bayesian interpretation of the confusion matrix. Ann. Math. Artif. Intell. 2017, 81, 429–450.

| Work | Task | Technique | Training Data | Processing | Accuracy |
|---|---|---|---|---|---|
| Ngo et al., 2016 [18] | Facial palsy | LO-MCGFs | 85 subjects | Not real-time | 81.2% |
| Jiang et al., 2020 [25] | Facial palsy | k-NN, SVM, and NN | 80 participants | Not real-time | 87.22% and 95.69% |
| Parra-Dominguez et al., 2021 [29] | Facial paralysis | Multilayer perceptron | 480 images | Not real-time | 94.06% to 97.22% |
| Vletter et al., 2022 [31] | Facial paralysis | k-NN | 203 images | Not real-time | 85.1% |
| Amsalam et al., 2023 [3] | Facial palsy | CNN | 570 images | Not real-time | 98% |
| Proposed system | Facial palsy | CNN | 20,600 images | Real-time | 98% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amsalam, A.S.; Al-Naji, A.; Daeef, A.Y.; Chahl, J. Automatic Facial Palsy, Age and Gender Detection Using a Raspberry Pi. BioMedInformatics 2023, 3, 455-466. https://doi.org/10.3390/biomedinformatics3020031
