Impact of Critical Situations on Autonomous Vehicles and Strategies for Improvement

Abstract

1. Introduction

2. Sensor Technologies in AVs

2.1. Radar Technology Application

2.2. LiDAR Technology Application

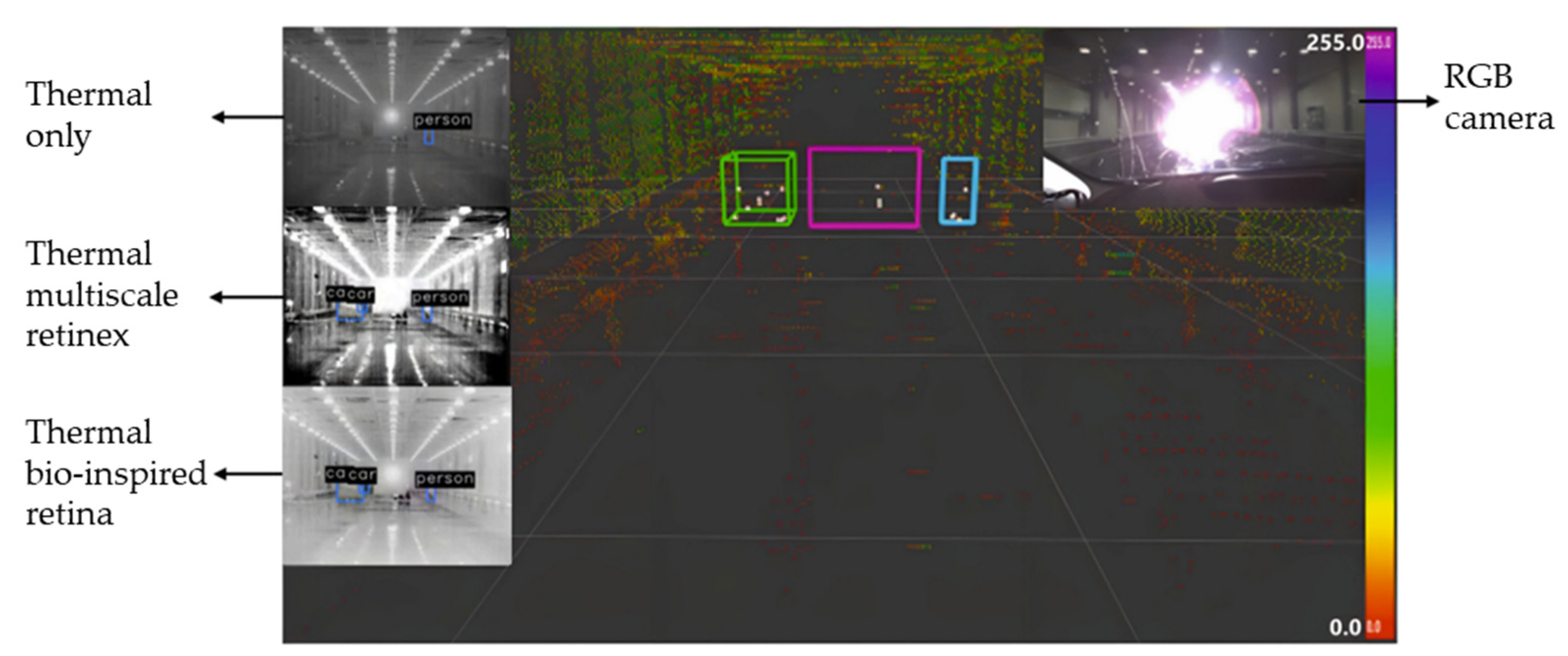

2.3. Camera Technology Application

2.4. Global Navigation Satellite System Technology Application

| Sensors | Sensor Applications | Improvement Method | Performance Evaluation | Key Findings | Reference |
|---|---|---|---|---|---|
| Thermal infrared camera and LiDAR | Object detection and classification in low-light and adverse weather conditions. | Sensor fusion calibrated with a 3D target. | Experiments conducted in day and night environments. | Improved object detection accuracy. | [5] |
| Millimeter-wave radar | Real-time wide-area vehicle trajectory tracking. | Unlimited roadway tracking using millimeter-wave radar. | Validation with Real-Time Kinematic (RTK) and UAV video data. | 92% vehicle capture accuracy; position accuracy within 0.99 m of ground truth. | [29] |
| Traffic surveillance camera system | Detection, localization, and AI networking in autonomous vehicles. | Sensor fusion with AI networking capabilities. | Tested with various sensor combinations and machine learning models. | Improved detection accuracy and networking efficiency for autonomous operations. | [30] |
| High-resolution satellite images | Road detection in very high-resolution images. | Semantic segmentation with attention blocks and hybrid loss functions for better edge detection. | Extensive testing on urban satellite images (Saudi Arabia and Massachusetts) using segmentation masks and edge detection metrics. | Significantly improved road detection and edge delineation, with high accuracy against complex backgrounds. | [31] |
| Millimeter-wave radar | Recognition of vulnerable road users (pedestrians, cyclists) in intelligent transportation systems. | Shallow neural networks (CNN, RNN) for micro-Doppler signature analysis. | Recognition tested with CNN, RNN, and hybrid CNN-RNN models on simulated datasets. | High recognition accuracy, supporting road safety for vulnerable users. | [32] |
| LiDAR and camera | Road detection. | LiDAR-camera fusion using fully convolutional neural networks (FCNs). | Evaluated on the KITTI road benchmark. | State-of-the-art MaxF score of 96.03%, outperforming single-sensor systems and remaining robust under varying lighting conditions. | [4] |
| Visible and thermal cameras | Enhanced pedestrian visibility in low-light and foggy conditions. | Learning-based visible-thermal image fusion producing RGB-like images with added informative details. | Qualitative and quantitative evaluation using no-reference quality metrics and human detection performance metrics, compared with existing fusion methods. | Outperformed existing methods, significantly improving pedestrian visibility and information quality while maintaining a natural image appearance. | [33] |
| 3D LiDAR and monocular camera | Urban road detection. | Inverse-depth-induced fusion framework with IDA-FCNN and a line-scanning strategy on LiDAR's 3D point cloud. | Evaluated on the KITTI-Road benchmark, with a Conditional Random Field (CRF) for result fusion. | State-of-the-art road detection accuracy, significantly outperforming existing methods on the benchmark. | [34] |
| LiDAR and monocular camera | Pedestrian classification. | Multimodal CNN fusing LiDAR (depth and reflectance) and camera data. | Evaluated on the KITTI Vision Benchmark Suite as a binary pedestrian classification task, comparing early and late fusion strategies. | Significant improvements in pedestrian classification accuracy through LiDAR-camera data fusion. | [35] |
| LiDAR and camera | Vehicle detection. | PC-CNN framework fusing LiDAR point clouds and camera images via shared convolutional layers. | Evaluated on the KITTI dataset: 77.6% average recall for proposal generation and 89.4% average precision for car detection. | Significant improvements in proposal accuracy and detection precision, highlighting its potential for real-time applications. | [36] |
| Multispectral (visible and thermal cameras) | Pedestrian detection. | Early and late deep fusion CNN architectures for visible and thermal data. | Evaluated on the KAIST multispectral pedestrian detection benchmark; pre-trained late-fusion models outperformed the ACF + T + THOG baseline. | Superior detection performance, with robustness under varying lighting conditions. | [37] |
| LiDAR and RGB cameras | Pedestrian detection. | Fusion of LiDAR data (up-sampled to a dense depth map and encoded as HHA) with RGB data. | Various fusion strategies within CNN architectures validated on the KITTI pedestrian detection dataset. | Late fusion of RGB and HHA data at different CNN levels yielded the best results, especially when fine-tuned. | [38] |
| Dynamic Vision Sensor (DVS) | Computer vision in challenging scenarios. | Adaptive slicing of spatiotemporal event streams to reduce motion blur and information loss. | Evaluated on public and proprietary datasets, with object information entropy deviation under 1%. | Accurate, blur-free virtual frames, enhancing object recognition and tracking in dynamic scenes. | [39] |
| GNSS, INS, radar, vision, LiDAR, odometer | Vehicle navigation state estimation (position, velocity, attitude). | Multi-sensor integration with motion constraints (NHC, ZUPT, ZIHR) and radar-based feature matching. | Tightly coupled FMCW radar and IMU integration, tested in GNSS outage scenarios. | Improved navigation reliability by mitigating GNSS outages and correcting IMU drift, with robust performance in diverse conditions. | [40] |
| Thermal cameras (LWIR range) | Pedestrian and cyclist detection in low-visibility conditions. | Deep neural network tailored to thermal imaging under variable lighting. | Evaluated on the KAIST Pedestrian Benchmark dataset with paired RGB and thermal data. | F1-score of 81.34%, significantly improving detection under challenging conditions where RGB systems struggle. | [41] |
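
Several rows above ([35], [37], [38]) contrast early fusion, where camera and LiDAR data are combined at or near the network input, with late fusion, where per-modality outputs are combined close to the decision. The following is a minimal, framework-free sketch of that distinction, not the architecture of any cited work. It assumes the LiDAR data has already been projected onto the camera's pixel grid, represents images as nested Python lists, and uses a fixed weighted average of per-modality probabilities as a hypothetical late-fusion rule; the names `early_fusion` and `late_fusion` are illustrative.

```python
from typing import List, Sequence

# An image is rows x columns x channels, stored as nested lists (illustrative only).
Image = List[List[List[float]]]


def early_fusion(camera: Image, lidar: Image) -> Image:
    """Concatenate camera and LiDAR channels per pixel before any model sees them.

    Assumes the LiDAR data (e.g. depth and reflectance) has already been
    projected onto the camera's pixel grid, so both inputs share height and width.
    """
    if len(camera) != len(lidar) or any(len(a) != len(b) for a, b in zip(camera, lidar)):
        raise ValueError("camera and LiDAR images must have the same height and width")
    # List concatenation appends the LiDAR channels after the camera channels.
    return [
        [cam_px + lidar_px for cam_px, lidar_px in zip(cam_row, lidar_row)]
        for cam_row, lidar_row in zip(camera, lidar)
    ]


def late_fusion(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Combine per-modality class probabilities with a weighted average."""
    if not scores or len(scores) != len(weights):
        raise ValueError("need one weight per modality score")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(scores, weights)) / total
```

For a single pixel, `early_fusion([[[0.2, 0.4, 0.6]]], [[[12.5, 0.8]]])` yields one five-channel pixel (RGB followed by depth and reflectance), while `late_fusion([0.75, 0.25], [1, 3])` returns 0.375. The cited works learn how to fuse inside CNNs rather than fixing weights by hand; the sketch only shows where in the pipeline each strategy combines the modalities.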

3. Critical Situations for AVs

3.1. Adverse Weather Conditions

3.1.1. Rain Effects

3.1.2. Snow and Hail Effects

3.1.3. Fog Effects

3.1.4. Lightning Effects

3.1.5. Severe Light Effects

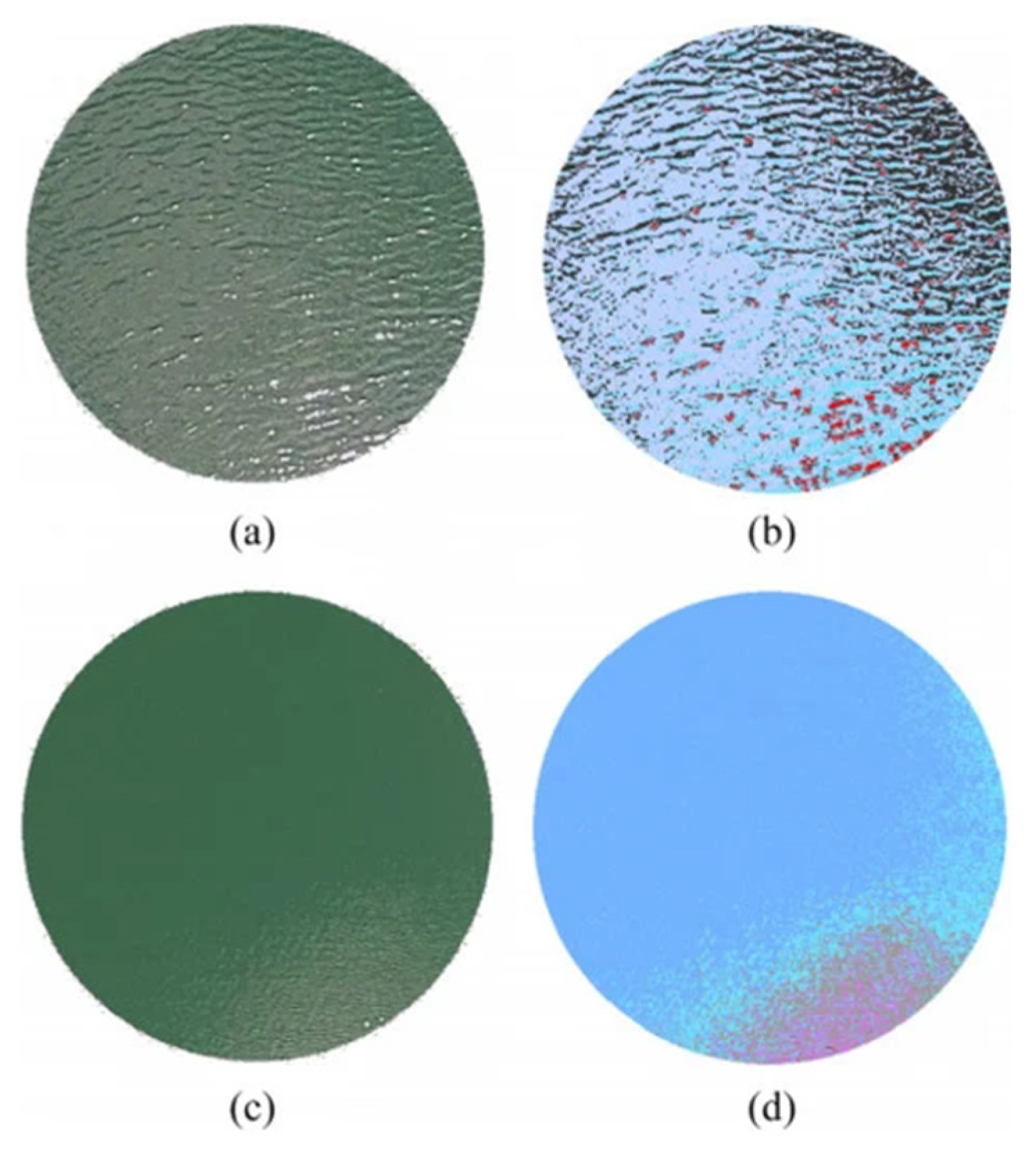

3.1.6. Dust, Sandstorm, and Contamination Effects

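| Category | Sensor | Adverse Weather | References | Contribution | Challenges |
|---|---|---|---|---|---|
| CNNs and Variants | Camera | Rain | [64,65,66,67,68,69,70,71,72,73] | | |
| CNNs and Variants | Camera | Snow | [74] | | |
| CNNs and Variants | Camera | Rain, Fog | [75,76] | | |
| CNNs and Variants | Camera | Haze and Fog | [9,77,78,79,80,81,82] | | |
| CNNs and Variants | LiDAR | Rain | [8] | | |
| CNNs and Variants | LiDAR | All Weather | [83] | | |
| RNNs and Variants | Camera | Rain | [84,85,86] | | |
| GANs and Related Techniques | Camera | Rain | [87,88,89,90,91,92,93,94] | | |
| GANs and Related Techniques | Camera | Snow | [95,96] | | |
| GANs and Related Techniques | Camera | Haze and Fog | [97,98,99,100,101,102,103,104,105] | | |
| GANs and Related Techniques | Camera | Soiling | [62] | | |
| Fusion and Multi-Modal Networks | Camera | Rain | [70,93,106,107,108,109,110,111] | | |
| Fusion and Multi-Modal Networks | Camera | Haze | [112,113,114,115,116,117,118] | | |
| Domain Adaptation and Unsupervised/Semi-Supervised Learning | LiDAR | Rain | [119,120] | | |
| Domain Adaptation and Unsupervised/Semi-Supervised Learning | Camera | Rain and Haze | [121] | | |
| Domain Adaptation and Unsupervised/Semi-Supervised Learning | Camera | Haze | [122,123,124,125] | | |
| Domain Adaptation and Unsupervised/Semi-Supervised Learning | Camera | Rain and Snow | [126] | | |
| Specialized Segmentation and Detection Networks | Camera | Rain | [74,127,128,129,130,131,132,133,134,135,136] | | |
| Specialized Segmentation and Detection Networks | Camera | Snow | [137,138] | | |
| Specialized Segmentation and Detection Networks | Camera | Haze and Fog | [108,139,140,141,142,143,144] | | |
| Specialized Segmentation and Detection Networks | LiDAR | Rain and Snow | [145] | | |
| Simulation and Testing | LiDAR | Rain, Fog | [45,146] | | |
| Simulation and Testing | LiDAR | Snow | [147] | | |
| Sensor Fusion | LiDAR + Camera | Rain, Haze, and Fog | [148] | | |
| Sensor Fusion | LiDAR + Camera | Rain and Snow | [149] | | |
| Sensor Fusion | LiDAR + Camera | Fog | [150] | | |
| Sensor Fusion | Radar + Camera | Snow, Fog, Rain | [151] | | |
| Sensor Fusion | LiDAR + Radar | Haze and Fog | [152] | | |
| Sensor Fusion | LiDAR + Radar | Rain, Smoke | [153] | | |
| Hardware Enhancements | LiDAR | Snow | [154] | | |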
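
A common physical basis for single-image haze and fog removal is the atmospheric scattering (Koschmieder) model I = J·t + A·(1 − t), with transmission t = exp(−β·d), where J is the clear scene radiance, A the airlight, d the scene depth, and β the extinction coefficient. The sketch below applies this model per pixel in both directions, assuming t and A are already known; the learning-based methods listed above instead estimate such quantities, or the clear image, from data, and this sketch does not reproduce any of them. The values of β and A and the lower bound `t_min` are illustrative assumptions, not parameters from the cited works.

```python
import math


def transmission(depth_m: float, beta: float) -> float:
    """Fraction of scene light reaching the camera: t = exp(-beta * d)."""
    return math.exp(-beta * depth_m)


def add_haze(clear: float, depth_m: float, beta: float, airlight: float) -> float:
    """Forward scattering model for one pixel: I = J*t + A*(1 - t)."""
    t = transmission(depth_m, beta)
    return clear * t + airlight * (1.0 - t)


def remove_haze(hazy: float, t: float, airlight: float, t_min: float = 0.1) -> float:
    """Invert the model, J = (I - A) / t + A, with t clamped and J clipped to [0, 1]."""
    t = max(t, t_min)  # avoid dividing by a near-zero transmission in dense haze
    clear = (hazy - airlight) / t + airlight
    return min(max(clear, 0.0), 1.0)


if __name__ == "__main__":
    beta, airlight = 0.02, 0.9  # illustrative values (per metre, normalized intensity)
    t = transmission(50.0, beta)
    hazy = add_haze(0.3, 50.0, beta, airlight)
    print(f"t={t:.3f} hazy={hazy:.3f} recovered={remove_haze(hazy, t, airlight):.3f}")
```

For a pixel with clear intensity 0.3 at 50 m, β = 0.02 per metre and A = 0.9, `add_haze` gives roughly 0.679, and `remove_haze` with the same t recovers 0.3. Clamping t at `t_min` limits noise amplification where the haze is dense, at the cost of leaving some residual haze in distant regions.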

3.2. Complex Environment Conditions

| Category | References | Contribution | Challenges |
|---|---|---|---|
| Deep Learning Models | [6,175] | | |
| Sensory Data Integration | [7,176,177] | | |
| Simulation and Testing | [178,179] | | |
| Autonomous Navigation | [180,181,182] | | |
| Reinforcement Learning | [183,184,185] | | |
| Collaborative Systems | [186,187] | | |
| Sensor Fusion | [188,189] | | |

3.3. Road Infrastructure Conditions

4. Datasets Availability

5. Future Research Directions

6. Discussion, Conclusions, and Future Work

6.1. Discussion

6.2. Conclusions and Future Work

6.2.1. Adverse Weather Summary

6.2.2. Complex Environments Summary

6.2.3. Road Infrastructure Summary

Author Contributions

Funding

Conflicts of Interest

References

- Guanetti, J.; Kim, Y.; Borrelli, F. Control of Connected and Automated Vehicles: State of the Art and Future Challenges. Annu. Rev. Control 2018, 45, 18–40. [Google Scholar] [CrossRef]

- Vargas, J.; Alsweiss, S.; Toker, O.; Razdan, R.; Santos, J. An Overview of Autonomous Vehicles Sensors and Their Vulnerability to Weather Conditions. Sensors 2021, 21, 5397. [Google Scholar] [CrossRef] [PubMed]

- Steinbaeck, J.; Steger, C.; Holweg, G.; Druml, N. Next Generation Radar Sensors in Automotive Sensor Fusion Systems. In Proceedings of the 2017 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 10–12 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR–Camera Fusion for Road Detection Using Fully Convolutional Neural Networks. Rob. Auton. Syst. 2019, 111, 125–131. [Google Scholar] [CrossRef]

- Choi, J.D.; Kim, M.Y. A Sensor Fusion System with Thermal Infrared Camera and LiDAR for Autonomous Vehicles and Deep Learning Based Object Detection. ICT Express 2023, 9, 222–227. [Google Scholar] [CrossRef]

- Cunnington, D.; Manotas, I.; Law, M.; De Mel, G.; Calo, S.; Bertino, E.; Russo, A. A Generative Policy Model for Connected and Autonomous Vehicles. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 1558–1565. [Google Scholar] [CrossRef]

- Eskandarian, A.; Wu, C.; Sun, C. Research Advances and Challenges of Autonomous and Connected Ground Vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 22, 683–711. [Google Scholar] [CrossRef]

- Heinzler, R.; Piewak, F.; Schindler, P.; Stork, W. CNN-Based Lidar Point Cloud De-Noising in Adverse Weather. IEEE Robot. Autom. Lett. 2020, 5, 2514–2521. [Google Scholar] [CrossRef]

- Ullah, H.; Muhammad, K.; Irfan, M.; Anwar, S.; Sajjad, M.; Imran, A.S.; De Albuquerque, V.H.C. Light-DehazeNet: A Novel Lightweight CNN Architecture for Single Image Dehazing. IEEE Trans. Image Process. 2021, 30, 8968–8982. [Google Scholar] [CrossRef]

- Zang, S.; Ding, M.; Smith, D.; Tyler, P.; Rakotoarivelo, T.; Kaafar, M.A. The Impact of Adverse Weather Conditions on Autonomous Vehicles. IEEE Veh. Technol. Mag. 2019, 14, 103–111. [Google Scholar] [CrossRef]

- Rana, M.M.; Hossain, K. Connected and Autonomous Vehicles and Infrastructures: A Literature Review. Int. J. Pavement Res. Technol. 2023, 16, 264–284. [Google Scholar] [CrossRef]

- Yoneda, K.; Suganuma, N.; Yanase, R.; Aldibaja, M. Automated Driving Recognition Technologies for Adverse Weather Conditions. IATSS Res. 2019, 43, 253–262. [Google Scholar] [CrossRef]

- Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and Sensing for Autonomous Vehicles under Adverse Weather Conditions: A Survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177. [Google Scholar] [CrossRef]

- Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61. [Google Scholar]

- Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093. [Google Scholar] [CrossRef]

- Electron_one RADAR, LiDAR and Cameras Technologies for ADAS and Autonomous Vehicles. Available online: https://www.onelectrontech.com/radar-lidar-and-cameras-technologies-for-adas-and-autonomous-vehicles/ (accessed on 15 December 2019).

- Ignatious, H.A.; El Sayed, H.; Khan, M. An Overview of Sensors in Autonomous Vehicles. Procedia Comput. Sci. 2021, 198, 736–741. [Google Scholar] [CrossRef]

- Gade, R.; Moeslund, T.B. Thermal Cameras and Applications: A Survey. Mach. Vis. Appl. 2014, 25, 245–262. [Google Scholar] [CrossRef]

- Olmeda, D.; De La Escalera, A.; Armingol, J.M. Far Infrared Pedestrian Detection and Tracking for Night Driving. Robotica 2011, 29, 495–505. [Google Scholar] [CrossRef]

- González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian Detection at Day/Night Time with Visible and FIR Cameras: A Comparison. Sensors 2016, 16, 820. [Google Scholar] [CrossRef]

- Forslund, D.; Bjarkefur, J. Night Vision Animal Detection. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 737–742. [Google Scholar] [CrossRef]

- Yogamani, S.; Witt, C.; Rashed, H.; Nayak, S.; Mansoor, S.; Varley, P.; Perrotton, X.; Odea, D.; Perez, P.; Hughes, C.; et al. WoodScape: A Multi-Task, Multi-Camera Fisheye Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9307–9317. [Google Scholar] [CrossRef]

- Heng, L.; Choi, B.; Cui, Z.; Geppert, M.; Hu, S.; Kuan, B.; Liu, P.; Nguyen, R.; Yeo, Y.C.; Geiger, A.; et al. Project Autovision: Localization and 3d Scene Perception for an Autonomous Vehicle with a Multi-Camera System. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4695–4702. [Google Scholar] [CrossRef]

- Yahiaoui, M.; Rashed, H.; Mariotti, L.; Sistu, G.; Clancy, I.; Yahiaoui, L.; Kumar, V.R.; Yogamani, S. FisheyeMODNet: Moving Object Detection on Surround-View Cameras for Autonomous Driving. arXiv 2019, arXiv:1908.11789. [Google Scholar] [CrossRef]

- O’Mahony, N.; Campbell, S.; Krpalkova, L.; Riordan, D.; Walsh, J.; Murphy, A.; Ryan, C. Computer Vision for 3D Perception: A Review; Springer International Publishing: Cham, Switzerland, 2018; Volume 869, ISBN 9783030010577. [Google Scholar]

- Altaf, M.A.; Ahn, J.; Khan, D.; Kim, M.Y. Usage of IR Sensors in the HVAC Systems, Vehicle and Manufacturing Industries: A Review. IEEE Sens. J. 2022, 22, 9164–9176. [Google Scholar] [CrossRef]

- Dasgupta, S.; Rahman, M.; Islam, M.; Chowdhury, M. A Sensor Fusion-Based GNSS Spoofing Attack Detection Framework for Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 23559–23572. [Google Scholar] [CrossRef]

- Raza, S.; Al-Kaisy, A.; Teixeira, R.; Meyer, B. The Role of GNSS-RTN in Transportation Applications. Encyclopedia 2022, 2, 1237–1249. [Google Scholar] [CrossRef]

- Wang, J.; Fu, T.; Xue, J.; Li, C.; Song, H.; Xu, W.; Shangguan, Q. Realtime Wide-Area Vehicle Trajectory Tracking Using Millimeter-Wave Radar Sensors and the Open TJRD TS Dataset. Int. J. Transp. Sci. Technol. 2023, 12, 273–290. [Google Scholar] [CrossRef]

- Hasanujjaman, M.; Chowdhury, M.Z.; Jang, Y.M. Sensor Fusion in Autonomous Vehicle with Traffic Surveillance Camera System: Detection, Localization, and AI Networking. Sensors 2023, 23, 3335. [Google Scholar] [CrossRef]

- Ghandorh, H.; Boulila, W.; Masood, S.; Koubaa, A.; Ahmed, F.; Ahmad, J. Semantic Segmentation and Edge Detection—Approach to Road Detection in Very High Resolution Satellite Images. Remote Sens. 2022, 14, 613. [Google Scholar] [CrossRef]

- Islam Minto, M.R.; Tan, B.; Sharifzadeh, S.; Riihonen, T.; Valkama, M. Shallow Neural Networks for MmWave Radar Based Recognition of Vulnerable Road Users. In Proceedings of the 2020 12th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP), Porto, Portugal, 20–22 July 2020. [Google Scholar] [CrossRef]

- Shopovska, I.; Jovanov, L.; Philips, W. Deep Visible and Thermal Image Fusion for Enhanced Pedestrian Visibility. Sensors 2019, 19, 3727. [Google Scholar] [CrossRef]

- Gu, S.; Lu, T.; Zhang, Y.; Alvarez, J.M.; Yang, J.; Kong, H. 3-D LiDAR + Monocular Camera: An Inverse-Depth-Induced Fusion Framework for Urban Road Detection. IEEE Trans. Intell. Veh. 2018, 3, 351–360. [Google Scholar] [CrossRef]

- Melotti, G.; Premebida, C.; Goncalves, N.M.M.D.S.; Nunes, U.J.C.; Faria, D.R. Multimodal CNN Pedestrian Classification: A Study on Combining LIDAR and Camera Data. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3138–3143. [Google Scholar] [CrossRef]

- Du, X.; Ang, M.H.; Rus, D. Car Detection for Autonomous Vehicle: LIDAR and Vision Fusion Approach through Deep Learning Framework. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 749–754. [Google Scholar] [CrossRef]

- Wagner, J.; Fischer, V.; Herman, M.; Behnke, S. Multispectral Pedestrian Detection Using Deep Fusion Convolutional Neural Networks. In Proceedings of the ESANN 2016—24th European Symposium on Artificial Neural Networks, Bruges, Belgium, 27–29 April 2016; pp. 509–514. [Google Scholar]

- Schlosser, J.; Chow, C.K.; Kira, Z. Fusing LIDAR and Images for Pedestrian Detection Using Convolutional Neural Networks. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2198–2205. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhao, Y.; Lv, H.; Feng, Y.; Liu, H.; Han, C. Adaptive Slicing Method of the Spatiotemporal Event Stream Obtained from a Dynamic Vision Sensor. Sensors 2022, 22, 2614. [Google Scholar] [CrossRef]

- Elkholy, M. Radar and INS Integration for Enhancing Land Vehicle Navigation in GNSS-Denied Environment. Doctoral Thesis, University of Calgary, Calgary, AB, Canada, 2024. [Google Scholar]

- Annapareddy, N.; Sahin, E.; Abraham, S.; Islam, M.M.; DePiro, M.; Iqbal, T. A Robust Pedestrian and Cyclist Detection Method Using Thermal Images. In Proceedings of the 2021 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 29–30 April 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Wallace, H.B. Millimeter Wave Propagation Measurements At The Ballistic Research Laboratory. Opt. Eng. 1983, 22, 24–31. [Google Scholar] [CrossRef]

- Bertoldo, S.; Lucianaz, C.; Allegretti, M. 77 GHz Automotive Anti-Collision Radar Used for Meteorological Purposes. In Proceedings of the 2017 IEEE-APS Topical Conference on Antennas and Propagation in Wireless Communications (APWC), Verona, Italy, 11–15 September 2017; pp. 49–52. [Google Scholar] [CrossRef]

- Gourova, R.; Krasnov, O.; Yarovoy, A. Analysis of Rain Clutter Detections in Commercial 77 GHz Automotive Radar. In Proceedings of the 2017 European Radar Conference (EURAD), Nuremberg, Germany, 11–13 October 2017; pp. 25–28. [Google Scholar]

- Hadj-bachir, M.; De Souza, P. LIDAR Sensor Simulation in Adverse Weather Condition for Driving Assistance Development. HAL Id: hal-01998668. 2019. Available online: https://hal.science/hal-01998668/ (accessed on 15 December 2019).

- Fu, X.; Huang, J.; Ding, X.; Liao, Y.; Paisley, J. Clearing the Skies: A Deep Network Architecture for Single-Image Rain Removal. IEEE Trans. Image Process. 2017, 26, 2944–2956. [Google Scholar] [CrossRef]

- Kulemin, G.P. Influence of Propagation Effects on Millimeter Wave Radar Operation. Radar Sens. Technol. IV 1999, 3704, 170–178. [Google Scholar]

- Battan, L.J. Radar Attenuation by Wet Ice Spheres. J. Appl. Meteorol. Climatol. 1971, 10, 247–252. [Google Scholar]

- Lhermitte, R. Attenuation and Scattering of Millimeter Wavelength Radiation by Clouds and Precipitation. J. Atmos. Ocean. Technol. 1990, 7, 464–479. [Google Scholar] [CrossRef]

- Meydani, A. State-of-the-Art Analysis of the Performance of the Sensors Utilized in Autonomous Vehicles in Extreme Conditions. In Artificial Intelligence and Smart Vehicles; Ghatee, M., Hashemi, S.M., Eds.; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2023; Volume 1883. [Google Scholar]

- Caccia, L.; Hoof, H.V.; Courville, A.; Pineau, J. Deep Generative Modeling of LiDAR Data. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 5034–5040. [Google Scholar] [CrossRef]

- Wojtanowski, J.; Zygmunt, M.; Kaszczuk, M.; Mierczyk, Z.; Muzal, M. Comparison of 905 nm and 1550 nm Semiconductor Laser Rangefinders’ Performance Deterioration Due to Adverse Environmental Conditions. Opto-Electron. Rev. 2014, 22, 183–190. [Google Scholar] [CrossRef]

- Kutila, M.; Pyykonen, P.; Holzhuter, H.; Colomb, M.; Duthon, P. Automotive LiDAR Performance Verification in Fog and Rain. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 1695–1701. [Google Scholar] [CrossRef]

- Kutila, M.; Pyykönen, P.; Ritter, W.; Sawade, O.; Schäufele, B. Automotive LIDAR Sensor Development Scenarios for Harsh Weather Conditions. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 265–270. [Google Scholar] [CrossRef]

- Yadav, G.; Maheshwari, S.; Agarwal, A. Fog Removal Techniques from Images: A Comparative Review and Future Directions. In Proceedings of the 2014 International Conference on Signal Propagation and Computer Technology (ICSPCT 2014), Ajmer, India, 12–13 July 2014; pp. 44–52. [Google Scholar] [CrossRef]

- Identification of Lightning Overvoltage in Unmanned Aerial Vehicles. Energies 2022, 15, 6609. [Google Scholar] [CrossRef]

- Yahiaoui, L.; Uřičář, M.; Das, A.; Yogamani, S. Let The Sunshine in: Sun Glare Detection on Automotive Surround-View Cameras. Electron. Imaging 2020, 2020, 80–81. [Google Scholar] [CrossRef]

- Pham, L.H.; Tran, D.N.-N.; Jeon, J.W. Low-Light Image Enhancement for Autonomous Driving Systems Using DriveRetinex-Net. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Seoul, Republic of Korea, 1–3 November 2020. [Google Scholar]

- Trierweiler, M.; Peterseim, T.; Neumann, C. Automotive LiDAR Pollution Detection System Based on Total Internal Reflection Techniques. Light-Emit. Devices Mater. Appl. XXIV 2020, 11302, 135–144. [Google Scholar]

- Starr, J.W.; Lattimer, B.Y. Evaluation of Navigation Sensors in Fire Smoke Environments. Fire Technol. 2014, 50, 1459–1481. [Google Scholar] [CrossRef]

- Kovalev, V.A.; Hao, W.M.; Wold, C. Determination of the Particulate Extinction-Coefficient Profile and the Column-Integrated Lidar Ratios Using the Backscatter-Coefficient and Optical-Depth Profiles. Appl. Opt. 2007, 46, 8627–8634. [Google Scholar]

- Uricar, M.; Sistu, G.; Rashed, H.; Vobecky, A.; Kumar, V.R.; Krizek, P.; Burger, F.; Yogamani, S. Let’s Get Dirty: GAN Based Data Augmentation for Camera Lens Soiling Detection in Autonomous Driving. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 766–775. [Google Scholar] [CrossRef]

- Zhang, L.; Chen, F.; Ma, X.; Pan, X. Fuel Economy in Truck Platooning: A Literature Overview and Directions for Future Research. J. Adv. Transp. 2020, 2020, 2604012. [Google Scholar] [CrossRef]

- Wang, C.; Pan, J.; Wu, X.M. Online-Updated High-Order Collaborative Networks for Single Image Deraining. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 28 February–1 March 2022; Volume 36, pp. 2406–2413. [Google Scholar] [CrossRef]

- Zhang, H.; Xie, Q.; Lu, B.; Gai, S. Dual Attention Residual Group Networks for Single Image Deraining. Digit. Signal Process. 2021, 116, 103106. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, C.; Su, Z.; Chen, J. Dense Feature Pyramid Grids Network for Single Image Deraining. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2025–2029. [Google Scholar] [CrossRef]

- Jiang, Y.; Wang, H.; Wang, Q.; Gao, Q.; Tang, Y. Context-Wise Attention-Guided Network for Single Image Deraining. Electron. Lett. 2022, 58, 148–150. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, Y.; Li, Q.; Wang, J.; Qi, M.; Sun, H.; Xu, H.; Kong, J. A Lightweight Fusion Distillation Network for Image Deblurring and Deraining. Sensors 2021, 21, 5312. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, Z.; Yi, P.; Chen, C.; Wang, Z.; Lin, C. Progressive Coupled Network for Real-Time Image Deraining. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 1759–1763. [Google Scholar] [CrossRef]

- Wang, Q.; Jiang, K.; Wang, Z.; Ren, W.; Zhang, J.; Lin, C. Multi-Scale Fusion and Decomposition Network for Single Image Deraining. IEEE Trans. Image Process. 2024, 33, 191–204. [Google Scholar] [CrossRef]

- Su, Z.; Zhang, Y.; Zhang, X.P.; Qi, F. Non-Local Channel Aggregation Network for Single Image Rain Removal. Neurocomputing 2022, 469, 261–272. [Google Scholar] [CrossRef]

- Huang, Z.; Zhang, J. Dynamic Multi-Domain Translation Network for Single Image Deraining. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 1754–1758. [Google Scholar]

- Khatab, E.; Onsy, A.; Varley, M.; Abouelfarag, A. A Lightweight Network for Real-Time Rain Streaks and Rain Accumulation Removal from Single Images Captured by AVs. Appl. Sci. 2023, 13, 219. [Google Scholar] [CrossRef]

- Li, M.; Cao, X.; Zhao, Q.; Zhang, L.; Meng, D. Online Rain/Snow Removal From. IEEE Trans. Image Process. 2021, 30, 2029–2044. [Google Scholar] [CrossRef]

- Hu, X.; Zhu, L.; Wang, T.; Fu, C.W.; Heng, P.A. Single-Image Real-Time Rain Removal Based on Depth-Guided Non-Local Features. IEEE Trans. Image Process. 2021, 30, 1759–1770. [Google Scholar] [CrossRef] [PubMed]

- Ali, A.; Sarkar, R.; Chaudhuri, S.S. Wavelet-Based Auto-Encoder for Simultaneous Haze and Rain Removal from Images. Pattern Recognit. 2024, 150, 110370. [Google Scholar] [CrossRef]

- Susladkar, O.; Deshmukh, G.; Nag, S.; Mantravadi, A.; Makwana, D.; Ravichandran, S.; Teja, R.S.C.; Chavhan, G.H.; Mohan, C.K.; Mittal, S. ClarifyNet: A High-Pass and Low-Pass Filtering Based CNN for Single Image Dehazing. J. Syst. Archit. 2022, 132, 102736. [Google Scholar] [CrossRef]

- Xu, Y.J.; Zhang, Y.J.; Li, Z.; Cui, Z.W.; Yang, Y.T. Multi-Scale Dehazing Network via High-Frequency Feature Fusion. Comput. Graph. 2022, 107, 50–59. [Google Scholar] [CrossRef]

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020. [Google Scholar]

- Wu, H.; Qu, Y.; Lin, S.; Zhou, J.; Qiao, R.; Zhang, Z.; Xie, Y.; Ma, L. Contrastive Learning for Compact Single Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; Volume 1, pp. 10551–10560. [Google Scholar]

- Ren, W.; Pan, J.; Zhang, H.; Cao, X.; Yang, M. Single Image Dehazing via Multi-Scale Convolutional Neural Networks with Holistic Edges. Int. J. Comput. Vis. 2020, 128, 240–259. [Google Scholar] [CrossRef]

- Das, S.D. Fast Deep Multi-Patch Hierarchical Network for Nonhomogeneous Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 1–8. [Google Scholar]

- Liang, X.; Huang, Z.; Lu, L.; Tao, Z.; Yang, B.; Li, Y. Deep Learning Method on Target Echo Signal Recognition for Obscurant Penetrating Lidar Detection in Degraded Visual Environments. Sensors 2020, 20, 3424. [Google Scholar] [CrossRef]

- Wang, C.; Zhu, H.; Fan, W.; Wu, X.M.; Chen, J. Single Image Rain Removal Using Recurrent Scale-Guide Networks. Neurocomputing 2022, 467, 242–255. [Google Scholar] [CrossRef]

- Zheng, Y.; Yu, X.; Liu, M.; Zhang, S. Single-Image Deraining via Recurrent Residual Multiscale Networks. IEEE Trans. Neural Networks Learn. Syst. 2022, 33, 1310–1323. [Google Scholar] [CrossRef]

- Xue, X.; Meng, X.; Ma, L.; Liu, R.; Fan, X. GTA-Net: Gradual Temporal Aggregation Network for Fast Video Deraining. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2020–2024. [Google Scholar] [CrossRef]

- Matsui, T.; Ikehara, M. GAN-Based Rain Noise Removal from Single-Image Considering Rain Composite Models. IEEE Access 2020, 8, 40892–40900. [Google Scholar] [CrossRef]

- Yan, X.; Loke, Y.R. RainGAN: Unsupervised Raindrop Removal via Decomposition and Composition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 14–23. [Google Scholar]

- Zhang, H.; Sindagi, V.; Patel, V.M. Image De-Raining Using a Conditional Generative Adversarial Network. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 3943–3956. [Google Scholar] [CrossRef]

- Wei, Y.; Zhang, Z.; Wang, Y.; Xu, M.; Yang, Y.; Yan, S. DerainCycleGAN: Rain Attentive CycleGAN for Single Image Deraining and Rainmaking. IEEE Trans. Image Process. 2021, 30, 4788–4801. [Google Scholar] [CrossRef]

- Guo, Z.; Hou, M.; Sima, M.; Feng, Z. DerainAttentionGAN: Unsupervised Single-Image Deraining Using Attention-Guided Generative Adversarial Networks. Signal Image Video Process. 2022, 16, 185–192. [Google Scholar] [CrossRef]

- Ding, Y.; Li, M.; Yan, T.; Zhang, F.; Liu, Y.; Lau, R.W.H. Rain Streak Removal From Light Field Images. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 467–482. [Google Scholar] [CrossRef]

- Yang, F.; Ren, J.; Lu, Z.; Zhang, J.; Zhang, Q. Rain-Component-Aware Capsule-GAN for Single Image de-Raining. Pattern Recognit. 2022, 123, 108377. [Google Scholar] [CrossRef]

- Guo, Y.; Chen, J.; Ren, X.; Wang, A.; Wang, W. Joint Raindrop and Haze Removal from a Single Image. IEEE Trans. Image Process. 2020, 29, 9508–9519. [Google Scholar] [CrossRef]

- Jaw, D.W.; Huang, S.C.; Kuo, S.Y. DesnowGAN: An Efficient Single Image Snow Removal Framework Using Cross-Resolution Lateral Connection and GANs. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1342–1350. [Google Scholar] [CrossRef]

- Sung, T.; Lee, H.J. Removing Snow from a Single Image Using a Residual Frequency Module and Perceptual RaLSGAN. IEEE Access 2021, 9, 152047–152056. [Google Scholar] [CrossRef]

- Deng, Q.; Huang, Z.; Tsai, C.-C.; Lin, C.-W. HardGAN: A Haze-Aware Representation Distillation GAN for Single Image Dehazing; Springer International Publishing: Cham, Switzerland, 2020; Volume 12350, ISBN 9783030660956. [Google Scholar]

- Kan, S.; Zhang, Y.; Zhang, F.; Cen, Y. A GAN-Based Input-Size Flexibility Model for Single Image Dehazing. Signal Process. Image Commun. 2022, 102, 116599. [Google Scholar] [CrossRef]

- Liu, W.; Hou, X.; Duan, J.; Qiu, G. End-to-End Single Image Fog Removal Using Enhanced Cycle Consistent Adversarial Networks. IEEE Trans. Image Process. 2020, 29, 7819–7833. [Google Scholar] [CrossRef]

- Mo, Y.; Li, C.; Zheng, Y.; Wu, X. DCA-CycleGAN: Unsupervised Single Image Dehazing Using Dark Channel Attention Optimized CycleGAN. J. Vis. Commun. Image Represent. 2022, 82, 103431. [Google Scholar] [CrossRef]

- Park, J.; Han, D.K. Fusion of Heterogeneous Adversarial Networks for Single Image Dehazing. IEEE Trans. Image Process. 2020, 29, 4721–4732. [Google Scholar] [CrossRef]

- Jin, Y.; Gao, G.; Liu, Q.; Wang, Y. Unsupervised Conditional Disentangle Network for Image Dehazing. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020. [Google Scholar]

- Dong, Y.; Liu, Y.; Zhang, H.; Chen, S.; Qiao, Y. FD-GAN: Generative Adversarial Networks with Fusion-Discriminator for Single Image Dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020. [Google Scholar]

- Fu, M.; Liu, H.; Yu, Y.; Chen, J.; Wang, K. DW-GAN: A Discrete Wavelet Transform GAN for NonHomogeneous Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Wang, P.; Zhu, H.; Huang, H.; Zhang, H.; Wang, N. TMS-GAN: A Twofold Multi-Scale Generative Adversarial Network for Single Image Dehazing. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2760–2772. [Google Scholar] [CrossRef]

- Fu, X.; Qi, Q.; Zha, Z.J.; Zhu, Y.; Ding, X. Rain Streak Removal via Dual Graph Convolutional Network. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 19–21 May 2021; pp. 1352–1360. [Google Scholar] [CrossRef]

- Zhang, K.; Luo, W.; Yu, Y.; Ren, W.; Zhao, F.; Li, C.; Ma, L.; Liu, W.; Li, H. Beyond Monocular Deraining: Parallel Stereo Deraining Network Via Semantic Prior. Int. J. Comput. Vis. 2022, 130, 1754–1769. [Google Scholar] [CrossRef]

- Zhou, J.; Leong, C.; Lin, M.; Liao, W.; Li, C. Task Adaptive Network for Image Restoration with Combined Degradation Factors. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1–8. [Google Scholar]

- Jiang, K.; Wang, Z.; Yi, P.; Chen, C.; Huang, B.; Luo, Y.; Ma, J.; Jiang, J. Multi-Scale Progressive Fusion Network for Single Image Deraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8346–8355. [Google Scholar]

- Wang, Q.; Sun, G.; Fan, H.; Li, W.; Tang, Y. APAN: Across-Scale Progressive Attention Network for Single Image Deraining. IEEE Signal Process. Lett. 2022, 29, 159–163. [Google Scholar] [CrossRef]

- Yu, Y.; Liu, H.; Fu, M.; Chen, J.; Wang, X.; Wang, K. A Two-Branch Neural Network for Non-Homogeneous Dehazing via Ensemble Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Hu, B. Multi-Scale Feature Fusion Network with Attention for Single Image Dehazing. Pattern Recognit. Image Anal. 2021, 31, 608–615. [Google Scholar] [CrossRef]

- Wang, J.; Li, C.; Xu, S. An Ensemble Multi-Scale Residual Attention Network (EMRA-Net) for Image Dehazing. Multimed. Tools Appl. 2021, 80, 29299–29319. [Google Scholar]

- Zhao, D.; Xu, L.; Ma, L. Pyramid Global Context Network for Image Dehazing. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3037–3050. [Google Scholar] [CrossRef]

- Sheng, J.; Lv, G.; Du, G.; Wang, Z.; Feng, Q. Multi-Scale Residual Attention Network for Single Image Dehazing. Digit. Signal Process. 2022, 121, 103327. [Google Scholar] [CrossRef]

- Fan, G.; Hua, Z.; Li, J. Multi-Scale Depth Information Fusion Network for Image Dehazing. Appl. Intell. 2021, 51, 7262–7280. [Google Scholar]

- Liu, J.; Wu, H.; Xie, Y.; Qu, Y.; Ma, L. Trident Dehazing Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020. [Google Scholar]

- Jo, E.; Sim, J. Multi-Scale Selective Residual Learning for Non-Homogeneous Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Saleh, K.; Abobakr, A.; Attia, M.; Iskander, J.; Nahavandi, D.; Hossny, M.; Nahavandi, S. Domain Adaptation for Vehicle Detection from Bird’s Eye View LiDAR Point Cloud Data. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seattle, WA, USA, 14–19 June 2020. [Google Scholar]

- Wang, Y.; Yin, J.; Li, W.; Frossard, P.; Yang, R.; Shen, J. SSDA3D: Semi-Supervised Domain Adaptation for 3D Object Detection from Point Cloud. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 19–21 May 2021. [Google Scholar]

- Sindagi, V.A.; Oza, P.; Yasarla, R.; Patel, M.V. Prior-Based Domain Adaptive Object Detection for Hazy and Rainy Conditions; Springer International Publishing: Cham, Switzerland, 2020; Volume 12350, ISBN 978-3-030-58557-7. [Google Scholar]

- Zhao, S.; Zhang, L.; Shen, Y. RefineDNet: A Weakly Supervised Refinement Framework for Single Image Dehazing. IEEE Trans. Image Process. 2021, 30, 3391–3404. [Google Scholar] [CrossRef]

- Li, B.; Gou, Y.; Gu, S.; Liu, J.Z.; Zhou, J.T.; Peng, X. You Only Look Yourself: Unsupervised and Untrained Single Image Dehazing Neural Network. Int. J. Comput. Vis. 2021, 129, 1754–1767. [Google Scholar] [CrossRef]

- Shao, Y.; Li, L.; Ren, W.; Gao, C.; Sang, N. Domain Adaptation for Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2808–2817. [Google Scholar]

- Chen, Z.; Wang, Y.; Yang, Y.; Liu, D. PSD: Principled Synthetic-to-Real Dehazing Guided by Physical Priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7180–7189. [Google Scholar]

- Wang, Y.; Ma, C.; Liu, J. SmartAssign: Learning A Smart Knowledge Assignment Strategy for Deraining and Desnowing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3677–3686. [Google Scholar]

- Li, Y.; Monno, Y.; Okutomi, M. Single Image Deraining Network with Rain Embedding Consistency and Layered LSTM. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 4060–4069. [Google Scholar]

- Rai, S.N.; Saluja, R.; Arora, C.; Balasubramanian, V.N.; Subramanian, A.; Jawahar, C.V. FLUID: Few-Shot Self-Supervised Image Deraining. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022. [Google Scholar]

- Jasuja, C.; Gupta, H.; Gupta, D.; Parihar, A.S. SphinxNet—A Lightweight Network for Single Image Deraining. In Proceedings of the 2021 International Conference on Intelligent Technologies (CONIT), Hubli, India, 25–27 June 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Huang, S.C.; Jaw, D.W.; Hoang, Q.V.; Le, T.H. 3FL-Net: An Efficient Approach for Improving Performance of Lightweight Detectors in Rainy Weather Conditions. IEEE Trans. Intell. Transp. Syst. 2023, 24, 4293–4305. [Google Scholar] [CrossRef]

- Cho, J.; Kim, S. Memory-Guided Image De-Raining Using Time-Lapse Data. IEEE Trans. Image Process. 2022, 31, 4090–4103. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, Z.; Yi, P.; Chen, C.; Han, Z.; Lu, T.; Huang, B.; Jiang, J. Decomposition Makes Better Rain Removal: An Improved Attention-Guided Deraining Network. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3981–3995. [Google Scholar] [CrossRef]

- Wei, Y.; Zhang, Z.; Xu, M.; Hong, R.; Fan, J.; Yan, S. Robust Attention Deraining Network for Synchronous Rain Streaks and Raindrops Removal. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 6464–6472. [Google Scholar] [CrossRef]

- Yang, W.; Tan, R.T.; Feng, J.; Guo, Z.; Yan, S.; Liu, J. Joint Rain Detection and Removal from a Single Image with Contextualized Deep Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1377–1393. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Xie, Q.; Zhao, Q.; Meng, D. A Model-Driven Deep Neural Network for Single Image Rain Removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 3103–3112. [Google Scholar]

- Zheng, S.; Lu, C.; Wu, Y.; Gupta, G. SAPNet: Segmentation-Aware Progressive Network for Perceptual Contrastive Deraining. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 52–62. [Google Scholar]

- Chen, W.T.; Fang, H.Y.; Hsieh, C.L.; Tsai, C.C.; Chen, I.H.; Ding, J.J.; Kuo, S.Y. ALL Snow Removed: Single Image Desnowing Algorithm Using Hierarchical Dual-Tree Complex Wavelet Representation and Contradict Channel Loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4176–4185. [Google Scholar] [CrossRef]

- Chen, W.-T.; Fang, H.-Y.; Ding, J.-J.; Tsai, C.-C.; Kuo, S.-Y. JSTASR: Joint Size and Transparency-Aware Snow Removal Algorithm Based on Modified Partial Convolution and Veiling Effect Removal. In Computer Vision–ECCV 2020: Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020. [Google Scholar]

- Ju, M.; Ding, C.; Ren, W.; Yang, Y.; Zhang, D.; Guo, Y.J. IDE: Image Dehazing and Exposure Using an Enhanced Atmospheric Scattering Model. IEEE Trans. Image Process. 2021, 30, 2180–2192. [Google Scholar] [CrossRef]

- Zhu, Q.; Mai, J.; Song, Z.; Wu, D.; Wang, J.; Wang, L. Mean Shift-Based Single Image Dehazing with Re-Refined Transmission Map. In Proceedings of the 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC), San Diego, CA, USA, 5–8 October 2014; pp. 4058–4064. [Google Scholar]

- Yuan, H.; Liu, C.; Guo, Z.; Sun, Z. A Region-Wised Medium Transmission Based Image Dehazing Method. IEEE Access 2017, 5, 1735–1742. [Google Scholar] [CrossRef]

- He, K. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 1956–1963. [Google Scholar] [CrossRef]

- Hautière, N.; Tarel, J.P.; Aubert, D. Towards Fog-Free in-Vehicle Vision Systems through Contrast Restoration. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007. [Google Scholar] [CrossRef]

- Jiang, N.; Hu, K.; Zhang, T.; Chen, W.; Xu, Y.; Zhao, T. Deep Hybrid Model for Single Image Dehazing and Detail Refinement. Pattern Recognit. 2023, 136, 109227. [Google Scholar] [CrossRef]

- Wu, J.; Xu, H.; Zheng, J.; Zhao, J. Automatic Vehicle Detection With Roadside LiDAR Data Under Rainy and Snowy Conditions. IEEE Intell. Transp. Syst. Mag. 2021, 13, 197–209. [Google Scholar] [CrossRef]

- Shih, Y.; Liao, W.; Lin, W.; Wong, S.; Wang, C. Reconstruction and Synthesis of Lidar Point Clouds of Spray. IEEE Robot. Autom. Lett. 2022, 7, 3765–3772. [Google Scholar] [CrossRef]

- Hahner, M.; Sakaridis, C.; Bijelic, M.; Heide, F.; Yu, F.; Dai, D.; Van Gool, L. LiDAR Snowfall Simulation for Robust 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16364–16374. [Google Scholar]

- Godfrey, J.; Kumar, V.; Subramanian, S.C. Evaluation of Flash LiDAR in Adverse Weather Conditions Toward Active Road Vehicle Safety. IEEE Sens. J. 2023, 23, 20129–20136. [Google Scholar] [CrossRef]

- Wen, L.; Peng, Y.; Lin, M.; Gan, N.; Tan, R. Multi-Modal Contrastive Learning for LiDAR Point Cloud Rail-Obstacle Detection in Complex Weather. Electronics 2024, 13, 220. [Google Scholar] [CrossRef]

- Mai, N.A.M.; Duthon, P.; Khoudour, L.; Crouzil, A.; Velastin, S.A. 3D Object Detection with SLS-Fusion Network in Foggy Weather Conditions. Sensors 2021, 21, 6711. [Google Scholar] [CrossRef]

- Liu, Z.; Cai, Y.; Wang, H.; Chen, L.; Gao, H.; Jia, Y.; Li, Y. Robust Target Recognition and Tracking of Self-Driving Cars With Radar and Camera Information Fusion Under Severe Weather Conditions. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6640–6653. [Google Scholar] [CrossRef]

- Qian, K.; Zhu, S.; Zhang, X.; Li, L.E. Robust Multimodal Vehicle Detection in Foggy Weather Using Complementary Lidar and Radar Signals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 444–453. [Google Scholar]

- Lin, S.L.; Wu, B.H. Application of Kalman Filter to Improve 3d Lidar Signals of Autonomous Vehicles in Adverse Weather. Appl. Sci. 2021, 11, 3018. [Google Scholar] [CrossRef]

- Park, J.; Park, J.; Kim, K. Fast and Accurate Desnowing Algorithm for LiDAR Point Clouds. IEEE Access 2020, 8, 160202–160212. [Google Scholar]

- Kaygisiz, B.H.; Erkmen, I.; Erkmen, A.M. GPS/INS Enhancement for Land Navigation Using Neural Network. J. Navig. 2004, 57, 297–310. [Google Scholar] [CrossRef]

- He, Y.; Li, J.; Liu, J. Research on GNSS INS & GNSS/INS Integrated Navigation Method for Autonomous Vehicles: A Survey. IEEE Access 2023, 11, 79033–79055. [Google Scholar] [CrossRef]

- Guilloton, A.; Arethens, J.P.; Escher, A.C.; MacAbiau, C.; Koenig, D. Multipath Study on the Airport Surface. In Proceedings of the 2012 IEEE/ION Position, Location and Navigation Symposium, Myrtle Beach, SC, USA, 23–26 April 2012; pp. 355–365. [Google Scholar] [CrossRef]

- Kaplan, E.D.; Hegarty, C.J. Understanding GPS: Principles and Applications; Artech House: Norwood, MA, USA, 2006. [Google Scholar]

- Godha, S.; Cannon, M.E. GPS/MEMS INS Integrated System for Navigation in Urban Areas. GPS Solut. 2007, 11, 193–203. [Google Scholar] [CrossRef]

- Breßler, J.; Obst, M. GNSS Positioning in Non-Line-of-Sight Context—A Survey for Technological Innovation. Adv. Sci. Technol. Eng. Syst. 2017, 2, 722–731. [Google Scholar] [CrossRef]

- Wen, W.W.; Hsu, L.T. 3D LiDAR Aided GNSS NLOS Mitigation in Urban Canyons. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18224–18236. [Google Scholar] [CrossRef]

- Angrisano, A.; Vultaggio, M.; Gaglione, S.; Crocetto, N. Pedestrian Localization with PDR Supplemented by GNSS. In Proceedings of the 2019 European Navigation Conference (ENC), Warsaw, Poland, 9–12 April 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Groves, P.D.; Jiang, Z. Height Aiding, C/N0 Weighting and Consistency Checking for GNSS NLOS and Multipath Mitigation in Urban Areas. J. Navig. 2013, 66, 653–669. [Google Scholar] [CrossRef]

- Groves, P.D.; Wang, L.; Ziebart, M. Shadow Matching: Improved GNSS Accuracy in Urban Canyons. GPS World 2012, 23, 14–18. [Google Scholar]

- Zhai, H.Q.; Wang, L.H. The Robust Residual-Based Adaptive Estimation Kalman Filter Method for Strap-down Inertial and Geomagnetic Tightly Integrated Navigation System. Rev. Sci. Instrum. 2020, 91, 104501. [Google Scholar] [CrossRef]

- Chen, Q.; Zhang, Q.; Niu, X. Estimate the Pitch and Heading Mounting Angles of the IMU for Land Vehicular GNSS/INS Integrated System. IEEE Trans. Intell. Transp. Syst. 2021, 22, 6503–6515. [Google Scholar] [CrossRef]

- Li, W.; Li, W.; Cui, X.; Zhao, S.; Lu, M. A Tightly Coupled RTK/INS Algorithm with Ambiguity Resolution in the Position Domain for Ground Vehicles in Harsh Urban Environments. Sensors 2018, 18, 2160. [Google Scholar] [CrossRef] [PubMed]

- Wang, D.; Dong, Y.; Li, Q.; Li, Z.; Wu, J. Using Allan Variance to Improve Stochastic Modeling for Accurate GNSS/INS Integrated Navigation. GPS Solut. 2018, 22, 53. [Google Scholar] [CrossRef]

- Ning, Y.; Wang, J.; Han, H.; Tan, X.; Liu, T. An Optimal Radial Basis Function Neural Network Enhanced Adaptive Robust Kalman Filter for GNSS/INS Integrated Systems in Complex Urban Areas. Sensors 2018, 18, 3091. [Google Scholar] [CrossRef] [PubMed]

- Liu, H.; Nassar, S.; El-Sheimy, N. Two-Filter Smoothing for Accurate INS/GPS Land-Vehicle Navigation in Urban Centers. IEEE Trans. Veh. Technol. 2010, 59, 4256–4267. [Google Scholar] [CrossRef]

- Yao, Y.; Xu, X.; Zhu, C.; Chan, C.Y. A Hybrid Fusion Algorithm for GPS/INS Integration during GPS Outages. Meas. J. Int. Meas. Confed. 2017, 103, 42–51. [Google Scholar] [CrossRef]

- Li, Z.; Wang, J.; Li, B.; Gao, J.; Tan, X. GPS/INS/Odometer Integrated System Using Fuzzy Neural Network for Land Vehicle Navigation Applications. J. Navig. 2014, 67, 967–983. [Google Scholar] [CrossRef]

- Abdolkarimi, E.S.; Mosavi, M.R.; Abedi, A.A.; Mirzakuchaki, S. Optimization of the Low-Cost INS/GPS Navigation System Using ANFIS for High Speed Vehicle Application. In Proceedings of the 2015 Signal Processing and Intelligent Systems Conference (SPIS), Tehran, Iran, 16–17 December 2015; pp. 93–98. [Google Scholar] [CrossRef]

- Sharaf, R.; Noureldin, A.; Osman, A.; El-Sheimy, N. Online INS/GPS Integration with a Radial Basis Function Neural Network. IEEE Aerosp. Electron. Syst. Mag. 2005, 20, 8–14. [Google Scholar] [CrossRef]

- Sun, J.; Kousik, S.; Fridovich-Keil, D.; Schwager, M. Connected Autonomous Vehicle Motion Planning with Video Predictions from Smart, Self-Supervised Infrastructure. In Proceedings of the 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; pp. 1721–1726. [Google Scholar] [CrossRef]

- Nguyen, A.; Nguyen, N.; Tran, K.; Tjiputra, E.; Tran, Q.D. Autonomous Navigation in Complex Environments with Deep Multimodal Fusion Network. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5824–5830. [Google Scholar] [CrossRef]

- Martínez, C.; Jiménez, F. Implementation of a Potential Field-Based Decision-Making Algorithm on Autonomous Vehicles for Driving in Complex Environments. Sensors 2019, 19, 3318. [Google Scholar] [CrossRef]

- Dehman, A.; Farooq, B. Are Work Zones and Connected Automated Vehicles Ready for a Harmonious Coexistence? A Scoping Review and Research Agenda. Transp. Res. Part C Emerg. Technol. 2021, 133, 103422. [Google Scholar] [CrossRef]

- Malik, S.; Khan, M.A.; Aadam; El-Sayed, H.; Iqbal, F.; Khan, J.; Ullah, O. CARLA+: An Evolution of the CARLA Simulator for Complex Environment Using a Probabilistic Graphical Model. Drones 2023, 7, 111. [Google Scholar] [CrossRef]

- Beul, M.; Krombach, N.; Zhong, Y.; Droeschel, D.; Nieuwenhuisen, M.; Behnke, S. A High-Performance MAV for Autonomous Navigation in Complex 3D Environments. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 1241–1250. [Google Scholar] [CrossRef]

- Dharmadhikari, M.; Nguyen, H.; Mascarich, F.; Khedekar, N.; Alexis, K. Autonomous Cave Exploration Using Aerial Robots. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 942–949. [Google Scholar] [CrossRef]

- Wang, C.; Wang, J.; Shen, Y.; Zhang, X. Autonomous Navigation of UAVs in Large-Scale Complex Environments: A Deep Reinforcement Learning Approach. IEEE Trans. Veh. Technol. 2019, 68, 2124–2136. [Google Scholar] [CrossRef]

- Mirowski, P.; Pascanu, R.; Viola, F.; Soyer, H.; Ballard, A.J.; Banino, A.; Denil, M.; Goroshin, R.; Sifre, L.; Kavukcuoglu, K.; et al. Learning to Navigate in Complex Environments. arXiv 2016, arXiv:1611.03673. [Google Scholar]

- Lin, J.; Yang, X.; Zheng, P.; Cheng, H. End-to-End Decentralized Multi-Robot Navigation in Unknown Complex Environments via Deep Reinforcement Learning. In Proceedings of the 2019 IEEE International Conference on Mechatronics and Automation (ICMA), Tianjin, China, 4–7 August 2019; pp. 2493–2500. [Google Scholar] [CrossRef]

- Bouton, M.; Nakhaei, A.; Fujimura, K.; Kochenderfer, M.J. Safe Reinforcement Learning with Scene Decomposition for Navigating Complex Urban Environments. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1469–1476. [Google Scholar] [CrossRef]

- Zhang, J.; Yu, Z.; Mao, S.; Periaswamy, S.C.G.; Patton, J.; Xia, X. IADRL: Imitation Augmented Deep Reinforcement Learning Enabled UGV-UAV Coalition for Tasking in Complex Environments. IEEE Access 2020, 8, 102335–102347. [Google Scholar] [CrossRef]

- Lauzon, M.; Rabbath, C.-A.; Gagnon, E. UAV Autonomy for Complex Environments. Unmanned Syst. Technol. VIII 2006, 6230, 184–195. [Google Scholar] [CrossRef]

- Shen, S.; Mulgaonkar, Y.; Michael, N.; Kumar, V. Vision-Based State Estimation for Autonomous Rotorcraft MAVs in Complex Environments. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 1758–1764. [Google Scholar] [CrossRef]

- Kim, B.; Azhari, M.B.; Park, J.; Shim, D.H. An Autonomous UAV System Based on Adaptive LiDAR Inertial Odometry for Practical Exploration in Complex Environments. J. Field Robot. 2024, 41, 669–698. [Google Scholar] [CrossRef]

- Kuutti, S.; Fallah, S.; Katsaros, K.; Dianati, M.; Mccullough, F.; Mouzakitis, A. A Survey of the State-of-the-Art Localization Techniques and Their Potentials for Autonomous Vehicle Applications. IEEE Internet Things J. 2018, 5, 829–846. [Google Scholar] [CrossRef]

- Johnson, C. Readiness of the Road Network for Connected and Autonomous Vehicles; RAC Foundation: London, UK, 2017; pp. 1–42. [Google Scholar]

- Bruno, D.R.; Sales, D.O.; Amaro, J.; Osorio, F.S. Analysis and Fusion of 2D and 3D Images Applied for Detection and Recognition of Traffic Signs Using a New Method of Features Extraction in Conjunction with Deep Learning. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018. [Google Scholar] [CrossRef]

- Liu, Y.; Tight, M.; Sun, Q.; Kang, R. A Systematic Review: Road Infrastructure Requirement for Connected and Autonomous Vehicles (CAVs). J. Phys. Conf. Ser. 2019, 1187, 042073. [Google Scholar] [CrossRef]

- Tengilimoglu, O.; Carsten, O.; Wadud, Z. Implications of Automated Vehicles for Physical Road Environment: A Comprehensive Review. Transp. Res. Part E Logist. Transp. Rev. 2023, 169, 102989. [Google Scholar] [CrossRef]

- Lawson, S. Roads That Cars Can Read REPORT III: Tackling the Transition to Automated Vehicles. 2018. Available online: http://resources.irap.org/Report/2018_05_30_Roads (accessed on 15 December 2019).

- Ambrosius, E. Autonomous Driving and Road Markings. IRF & UNECE ITS Event “Governance and Infrastructure for Smart and Autonomous Mobility”. 2018, pp. 1–17. Available online: https://unece.org/fileadmin/DAM/trans/doc/2018/wp29grva/s1p5._Eva_Ambrosius.pdf (accessed on 15 December 2019).

- Xing, Y.; Lv, C.; Chen, L.; Wang, H.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Advances in Vision-Based Lane Detection: Algorithms, Integration, Assessment, and Perspectives on ACP-Based Parallel Vision. IEEE/CAA J. Autom. Sin. 2018, 5, 645–661. [Google Scholar] [CrossRef]

- Dong, Y.; Patil, S.; van Arem, B.; Farah, H. A Hybrid Spatial–Temporal Deep Learning Architecture for Lane Detection. Comput. Civ. Infrastruct. Eng. 2023, 38, 67–86. [Google Scholar] [CrossRef]

- Zhang, Y.; Lu, Z.; Zhang, X.; Xue, J.H.; Liao, Q. Deep Learning in Lane Marking Detection: A Survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 5976–5992. [Google Scholar] [CrossRef]

- Wang, B.; Liao, Z.; Guo, S. Adaptive Curve Passing Control in Autonomous Vehicles with Integrated Dynamics and Camera-Based Radius Estimation. Vehicles 2024, 6, 1648–1660. [Google Scholar] [CrossRef]

- Sanusi, F.; Choi, J.; Kim, Y.H.; Moses, R. Development of a Knowledge Base for Multiyear Infrastructure Planning for Connected and Automated Vehicles. J. Transp. Eng. Part A Syst. 2022, 148, 03122001. [Google Scholar] [CrossRef]

- Khan, S.M.; Chowdhury, M.; Morris, E.A.; Deka, L. Synergizing Roadway Infrastructure Investment with Digital Infrastructure for Infrastructure-Based Connected Vehicle Applications: Review of Current Status and Future Directions. J. Infrastruct. Syst. 2019, 25, 03119001. [Google Scholar] [CrossRef]

- Luu, Q.; Nguyen, T.M.; Zheng, N.; Vu, H.L. Digital Infrastructure for Connected and Automated Vehicles. arXiv 2023, arXiv:2401.08613. [Google Scholar]

- Gomes Correia, M.; Ferreira, A. Road Asset Management and the Vehicles of the Future: An Overview, Opportunities, and Challenges. Int. J. Intell. Transp. Syst. Res. 2023, 21, 376–393. [Google Scholar] [CrossRef]

- Tang, Z.; He, J.; Flanagan, S.K.; Procter, P.; Cheng, L. Cooperative Connected Smart Road Infrastructure and Autonomous Vehicles for Safe Driving. In Proceedings of the 2021 IEEE 29th International Conference on Network Protocols (ICNP), Dallas, TX, USA, 1–5 November 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Labi, S.; Saeed, T.U.; Sinha, K.C. Design and Management of Highway Infrastructure to Accommodate CAVs; Purdue University: West Lafayette, IN, USA, 2023; ISBN 3551747105. [Google Scholar]

- Sobanjo, J.O. Civil Infrastructure Management Models for the Connected and Automated Vehicles Technology. Infrastructures 2019, 4, 49. [Google Scholar] [CrossRef]

- Saeed, T.U.; Alabi, B.N.T.; Labi, S. Preparing Road Infrastructure to Accommodate Connected and Automated Vehicles: System-Level Perspective. J. Infrastruct. Syst. 2021, 27, 2–4. [Google Scholar] [CrossRef]

- Ran, B.; Cheng, Y.; Li, S.; Li, H.; Parker, S. Classification of Roadway Infrastructure and Collaborative Automated Driving System. SAE Int. J. Connect. Autom. Veh. 2023, 6, 387–395. [Google Scholar] [CrossRef]

- Feng, Y.; Chen, Y.; Zhang, J.; Tian, C.; Ren, R.; Han, T.; Proctor, R.W. Human-Centred Design of next Generation Transportation Infrastructure with Connected and Automated Vehicles: A System-of-Systems Perspective. Theor. Issues Ergon. Sci. 2024, 25, 287–315. [Google Scholar] [CrossRef]

- Rios-Torres, J.; Malikopoulos, A.A. Automated and Cooperative Vehicle Merging at Highway On-Ramps. IEEE Trans. Intell. Transp. Syst. 2017, 18, 780–789. [Google Scholar] [CrossRef]

- Ghanipoor Machiani, S.; Ahmadi, A.; Musial, W.; Katthe, A.; Melendez, B.; Jahangiri, A. Implications of a Narrow Automated Vehicle-Exclusive Lane on Interstate 15 Express Lanes. J. Adv. Transp. 2021, 2021, 6617205. [Google Scholar] [CrossRef]

- Li, Y.; Chen, Z.; Yin, Y.; Peeta, S. Deployment of Roadside Units to Overcome Connectivity Gap in Transportation Networks with Mixed Traffic. Transp. Res. Part C Emerg. Technol. 2020, 111, 496–512. [Google Scholar] [CrossRef]

- Rios-Torres, J.; Malikopoulos, A.A. A Survey on the Coordination of Connected and Automated Vehicles at Intersections and Merging at Highway On-Ramps. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1066–1077. [Google Scholar] [CrossRef]

- van Geelen, H.; Redant, K. Connected & Autonomous Vehicles and Road Infrastructure—State of Play and Outlook. Transp. Res. Procedia 2023, 72, 1311–1317. [Google Scholar] [CrossRef]

- Fu, X.; Huang, J.; Zeng, D.; Huang, Y.; Ding, X.; Paisley, J. Removing Rain from Single Images via a Deep Detail Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1715–1723. [Google Scholar] [CrossRef]

- Zhang, H.; Patel, V.M. Density-Aware Single Image De-Raining Using a Multi-Stream Dense Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 695–704. [Google Scholar] [CrossRef]

- Xie, L.; Xiang, C.; Yu, Z.; Xu, G.; Yang, Z.; Cai, D.; He, X. PI-RCNN: An Efficient Multi-Sensor 3D Object Detector with Point-Based Attentive Cont-Conv Fusion Module. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12460–12467. [Google Scholar] [CrossRef]

- Liang, M.; Yang, B.; Wang, S.; Urtasun, R. Deep Continuous Fusion for Multi-Sensor 3D Object Detection. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 663–678. [Google Scholar] [CrossRef]

- Liu, L.; He, J.; Ren, K.; Xiao, Z.; Hou, Y. A LiDAR–Camera Fusion 3D Object Detection Algorithm. Information 2022, 13, 169. [Google Scholar] [CrossRef]

- Kenk, M.A.; Hassaballah, M. DAWN: Vehicle Detection in Adverse Weather Nature Dataset. arXiv 2020, arXiv:2008.05402. [Google Scholar] [CrossRef]

- Ancuti, C.; Ancuti, C.O.; Timofte, R.; De Vleeschouwer, C. I-HAZE: A Dehazing Benchmark with Real Hazy and Haze-Free Indoor Images. In Advanced Concepts for Intelligent Vision Systems: Proceedings of the 19th International Conference, ACIVS 2018, Poitiers, France, 24–27 September 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 620–631. [Google Scholar] [CrossRef]

- Zheng, Z.; Ren, W.; Cao, X.; Hu, X.; Wang, T.; Song, F.; Jia, X. Ultra-High-Definition Image Dehazing via Multi-Guided Bilateral Learning. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 16180–16189. [Google Scholar] [CrossRef]

- Zhao, S.; Zhang, L.; Huang, S.; Shen, Y.; Zhao, S. Dehazing Evaluation: Real-World Benchmark Datasets, Criteria, and Baselines. IEEE Trans. Image Process. 2020, 29, 6947–6962. [Google Scholar] [CrossRef]

- Zhang, X.; Dong, H.; Pan, J.; Zhu, C.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Wang, F. Learning to Restore Hazy Video: A New Real-World Dataset and A New Method. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9235–9244. [Google Scholar] [CrossRef]

- Li, P.; Yun, M.; Tian, J.; Tang, Y.; Wang, G.; Wu, C. Stacked Dense Networks for Single-Image Snow Removal. Neurocomputing 2019, 367, 152–163. [Google Scholar] [CrossRef]

- Pitropov, M.; Garcia, D.E.; Rebello, J.; Smart, M.; Wang, C.; Czarnecki, K.; Waslander, S. Canadian Adverse Driving Conditions Dataset. Int. J. Rob. Res. 2021, 40, 681–690. [Google Scholar] [CrossRef]

- Kurup, A.M.; Bos, J.P. Winter Adverse Driving Dataset for Autonomy in Inclement Winter Weather. Opt. Eng. 2023, 62, 031207. [Google Scholar] [CrossRef]

| Factors | Camera | LiDAR | RADAR | GNSS |
|---|---|---|---|---|
| Velocity | -- | ✖ | ✔ | -- |
| Object Detection | ✖ | ✔ | ✔ | -- |
| Resolution | ✔ | -- | ✖ | -- |
| Range | -- | ✔ | ✔ | ✔ |
| Distance Accuracy | -- | ✔ | ✔ | ✔ |
| Lane Detection | ✔ | ✔ | ✖ | ✖ |
| Obstacle Edge Detection | ✔ | ✔ | ✖ | ✖ |
| Weather Conditions | ✖ | -- | ✔ | ✔ |
| Situation Awareness | ✔ | ✔ | ✔ | ✔ |
| Cost | Low | High | Moderate | Moderate |
| Processing Time | Fast | Moderate | Fast | Moderate |
| Maintenance Requirements | Low | Moderate | Low | Low |
| Compatibility | High | Moderate | Moderate | High |
| Durability | Moderate | High | High | High |
| Spatial Coverage | Wide | Moderate | Wide | Wide |

| Category | References | Contribution | Challenges |
|---|---|---|---|
| Road Infrastructure Upgrades | [193,201,202] | | |
| Digital Infrastructure and V2X Communication | [202,203,204,205] | | |
| Management and Planning Models | [201,206,207,208] | | |
| Human-Centered and Cooperative Design | [205,209,210] | | |
| Simulation and Testing Environments | [147,205,211,212] | | |
| Sensor Integration and Optimization | [205,208,213,214] | | |
| Infrastructure Impact Studies | [11,206,212,215] | | |

| Dataset | Type of Data | Reference |
|---|---|---|
| Rain12600 | Synthetic | [216] |
| Rain100L | Synthetic | [65,66,67,68,71,72,73,84,90,91,127,128,129,132,134,135] |
| Rain200H | Synthetic | [64,71,127,133] |
| Rain800 | Real | [76,89,90,91,109,127,129,134] |
| Rain12 | Synthetic | [64,66,68,87,109,134] |
| Test100 | Synthetic | [68,70,73,89,132] |
| Test1200 | Synthetic | [70,73,110] |
| Test2800 | Synthetic | [70] |
| RainTrainH | Synthetic | [217] |
| RainTrainL | Synthetic | [217] |
| Rain100H | Synthetic | [65,67,70,71,72,73,84,91,93,107,109,111,127,129,132,134,135,136] |
| KITTI | Real | [76,107,150,218,219,220] |
| Raindrop | Real | [108,133] |
| Cityscapes | Real | [62,70,107,121,128] |
| RID | Real | [70,109] |
| RIS | Real | [70,109] |
| Rain12000 | Synthetic | [85] |
| Rain1400 | Synthetic | [64,71,72,107,109,131,135] |
| DAWN/Rainy | Real | [221] |
| NTURain | Synthetic | [86,109] |
| SPA-Data | Real | [90,127] |
| RESIDE | Synthetic and Real | [76,77,78,97,139,144] |
| I-HAZE | Synthetic | [77,222] |
| O-HAZE | Synthetic | [77] |
| DENSE-HAZE | Synthetic | [77] |
| NH-HAZE | Synthetic | [77,144] |
| HazeRD | Synthetic | [78,144] |
| SOTS | Synthetic | [78,108] |
| 4KID | Synthetic | [223] |
| BeDDE | Real | [224] |
| REVIDE | Synthetic | [225] |
| Snow-100K | Synthetic and Real | [95] |
| SITD | Synthetic | [226] |
| CADC | Real | [227] |
| WADS | Real | [228] |
| CSD | Synthetic | [137] |
| SRRS | Synthetic and Real | [138] |
| AVPolicy | Synthetic | [6] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Beigi, S.A.; Park, B.B. Impact of Critical Situations on Autonomous Vehicles and Strategies for Improvement. Future Transp. 2025, 5, 39. https://doi.org/10.3390/futuretransp5020039

Beigi SA, Park BB. Impact of Critical Situations on Autonomous Vehicles and Strategies for Improvement. Future Transportation. 2025; 5(2):39. https://doi.org/10.3390/futuretransp5020039

Chicago/Turabian Style: Beigi, Shahriar Austin, and Byungkyu Brian Park. 2025. "Impact of Critical Situations on Autonomous Vehicles and Strategies for Improvement" Future Transportation 5, no. 2: 39. https://doi.org/10.3390/futuretransp5020039

APA Style: Beigi, S. A., & Park, B. B. (2025). Impact of Critical Situations on Autonomous Vehicles and Strategies for Improvement. Future Transportation, 5(2), 39. https://doi.org/10.3390/futuretransp5020039