Abstract

This paper presents the design and implementation of a deep-learning-based aspect-level sentiment analysis model for the aspect term extraction and sentiment polarity classification of Chinese text comments. The model utilizes two layers of BERT models and fully connected neural networks for feature extraction and classification. It also incorporates a context feature dynamic weighting strategy to focus on aspect words and enhance the model’s performance. Experimental results demonstrate that the proposed model performs well in aspect-level sentiment analysis tasks and effectively extracts sentiment information from the text. Additionally, to facilitate user interaction, a lightweight system is built, which enables model invocation and the visualization of analysis results, offering practical value.

1. Introduction

An aspect-based sentiment analysis (ABSA) is a fine-grained sentiment analysis task that focuses on sentence-level texts. It analyzes aspect terms, opinion terms, aspect categories, and sentiment polarity in different contexts [1,2]. The task can be divided into two subtasks: aspect term extraction and aspect sentiment polarity classification. The descriptions of these subtasks are as follows:

Aspect term extraction refers to the identification and extraction of information related to specific aspects from the text, such as product names, product features, and user evaluations in the context of product reviews. In the field of text analysis and natural language processing, aspect term extraction is an important task that provides foundational information for subsequent applications such as a sentiment analysis and question-answering systems.

Sentiment polarity classification involves categorizing the text into one of the sentiment categories, such as positive, neutral, or negative. It is a fundamental task in the field of natural language processing that helps with understanding the public’s attitudes and sentiments towards events or products.

2. Related Works

A sentiment analysis can be categorized into three levels based on the granularity of the text: a document-level sentiment analysis, sentence-level sentiment analysis, and aspect-level sentiment analysis [3].

Existing datasets for sentiment classification are usually divided into either two or three categories. The first type involves three classes: negative sentiment, positive sentiment, and neutral sentiment, as seen in datasets like SemEval-2014. The second type involves two classes: negative sentiment and positive sentiment, which is the case for the four Chinese datasets used in this paper.

3. Methodology

3.1. Model Architecture

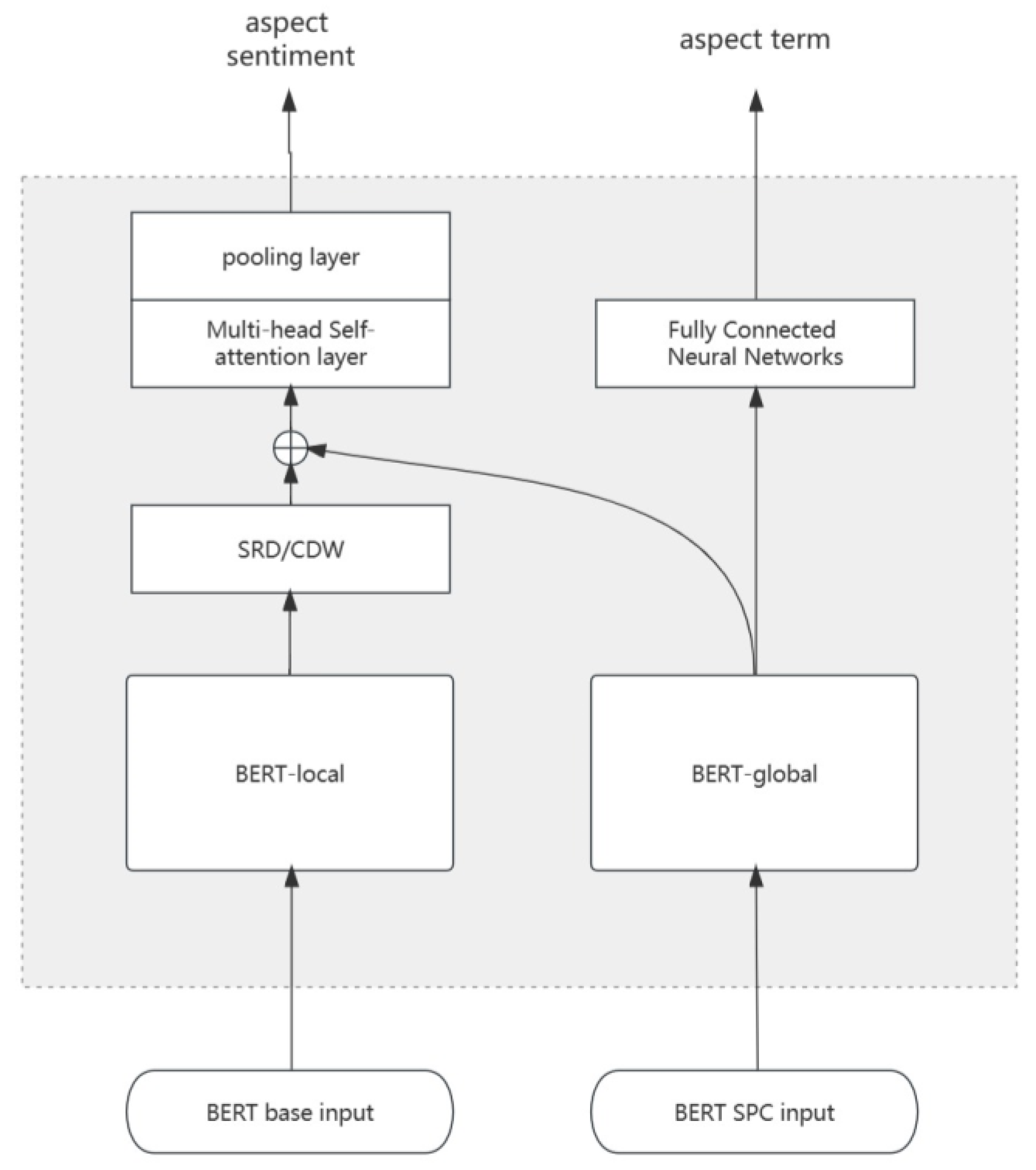

The overall structure of the model is shown in Figure 1. The left half of the model is responsible for generating local context features, while the right half is responsible for generating global context features. Both context feature generator units contain an independent pre-trained BERT layer [4,5], namely BERT-base and BERT-local. In this section, we will provide a detailed description of the structure of the aspect-level sentiment analysis model, focusing on aspect term extraction and sentiment polarity classification.

Figure 1.

Aspect-level sentiment analysis model architecture diagram.

3.1.1. Aspect Term Extraction

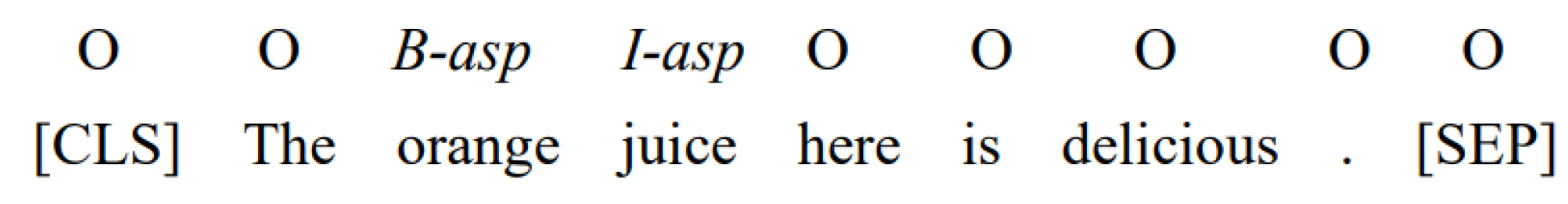

In this task, the comment text is defined as , where w represents each token and n is the total number of tokens. This step generates an annotated sequence Y, where each character in the input sentences corresponds to a label annotation. The label list includes three tags: “O”, “B-asp”, and “I-asp”, which, respectively, represent non-aspect terms, the beginning of an aspect term, and the inside of an aspect term [6]. Figure 2 provides an example of an annotated result, where Y = {O, O, B-asp, I-asp, O, O, O, O, O}.

Figure 2.

Example of annotation results.

In this subtask, we first obtain the initial output of the input sequence through the BERT model, denoted as , where represents the input to the model, and refers to the BERT layer in the global context feature generator. Then, we use to represent the feature of the i-th token in the sequence, and perform token-level classification on the sequence. The classification result corresponds to the annotated result of aspect term extraction, as shown in Equation (1). Here, N represents the number of label categories, and represents the inferred results of the label categories.

Finally, by comparing the probabilities of these labels, the label for each character can be determined, resulting in the aspect term extraction results.

3.1.2. Aspect Polarity Classification

In this step, still represents the token sequence of the comment text. denotes the aspect sequence within the sequence S, where i and j are the starting and ending positions of the aspect word sequence, respectively.

Similar to the aspect term extraction task, we first obtain the preliminary output of the input sequence through the BERT model, denoted as , where represents the model input and corresponds to the BERT layer in the local context feature generator. Next, to determine whether a segment of text belongs to the context of an aspect term, this study employs a semantic relative-distance-based judgment strategy. This strategy represents the distance between each token and the aspect term, calculated as shown in Equation (2). Here, i represents the position of the token, is the center of the aspect term, and m is the length of the aspect term.

To mask the non-local context features learned by the BERT model, we set α as the threshold for semantic relative distance. Contexts with distances greater than the threshold are considered non-local, while those with distances less than or equal to the threshold are considered local contexts.

In addition, this study employs a method called “Context-features Dynamic Weighting (CDW)” to focus on the context surrounding the aspect terms. Specifically, this strategy maintains the local context features unchanged while applying weighted decay to the non-local context features based on their semantic relative distance to the aspect term.

Next, the features learned through these two strategies are combined, and their linear transformations result in the local context features. These local context features are then fused with the previously obtained global features, resulting in integrated context features. These features are further processed through a multi-head self-attention layer and a pooling layer. Finally, applying the SoftMax operation to predict the sentiment polarity yields the results of sentiment polarity classification.

Through this approach, the model can capture the sentiment information surrounding aspect terms in the text more accurately, thereby improving the accuracy of sentiment polarity classification.

3.2. Training Details

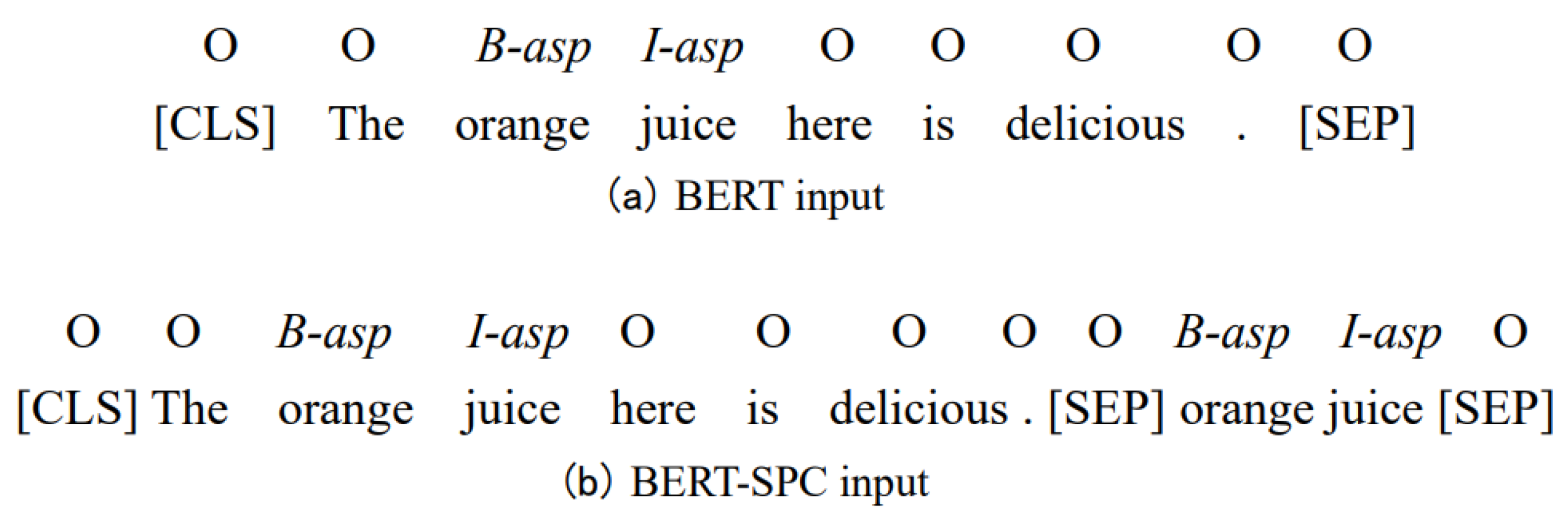

As previous studies have shown, compared to regular BERT inputs, BERT-SPC inputs allow the model to leverage the extracted aspect term information, thereby enhancing the performance of the sentiment polarity classification component. Specifically, BERT-SPC inputs restructure the format of the BERT input sequence by appending the aspect term after the original sentence-ending separator [SEP], followed by another [SEP] token. Figure 3 provides an example comparing the two types of inputs, where the input data in the format of example a are used for training the aspect term extraction model, while the input data in the format of example b are used for training the sentiment polarity analysis model.

Figure 3.

Examples of model input.

4. Experiments

4.1. Datasets

To evaluate the model’s Chinese language ability, it is necessary to train and test the model on Chinese review datasets. Therefore, in addition to evaluating the model on three commonly used ABSA datasets in the field (i.e., laptop and restaurant datasets from SemEval-2014 Task4, and the ACL Twitter dataset), this study also conducted experiments on four publicly available Chinese review datasets (automobile, mobile phone, laptop, and camera) [7] with a total of 20,574 samples.

Next, we divided the datasets into training and testing sets, with 80% of the data used for training and 20% for testing. The specific data distribution can be found in Table 1.

Table 1.

The ABSA datasets, including three English datasets and four Chinese datasets.

The sample distribution of these datasets is uneven, with, for example, a majority of positive reviews in the restaurant dataset, and a majority of neutral comments in the Twitter dataset.

4.2. Hyperparameters’ Setting

Apart from some hyperparameters’ setting referred to previous research, we also conducted controlled trials and analyzed the experimental results to optimize the hyperparameters’ setting. The superior hyperparameters are listed in Table 2. The default SRD setting for all experiments is 5, with additional instructions for experiments with different SRD.

Table 2.

Global hyperparameters’ settings for the model in the experiments.

4.3. Performance Analysis

Table 3 displays the training performance of the model on different datasets. From the results in the table, it can be observed that the model used in this study demonstrates a good ability in identifying aspect terms and evaluating sentiment polarity.

Table 3.

The experimental results (%) of the model on different datasets.

5. Aspect-Based Sentiment Analysis System

The main objective of this phase is to incorporate the model into the system, which involves completing the interaction between the system and the user, as well as invoking the model to achieve the desired functionality of the system. The main processing flow of the system includes data input, data preprocessing, model processing, output generation, and result handling.

By completing these steps, the system seamlessly integrates the aspect-level sentiment analysis model, allowing users to interact with the system and obtain meaningful insights and analysis results.

6. Conclusions

In this research work, we explored existing methods and techniques in the field of aspect-level sentiment analyses and designed and implemented a highly accurate aspect-level sentiment analysis system based on pre-trained language models. This paper provides a comprehensive overview of aspect-level sentiment analysis tasks, as well as detailed discussions on the background, significance, related technologies, and implementation methods of the research. Through steps such as data preprocessing, model parameter tuning, training and evaluation, system construction, and debugging, this study successfully completed the design and implementation of the aspect-level sentiment analysis task, resulting in promising experimental results.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: https://alt.qcri.org/semeval2014/task4/index.php?id=data-and-tools# (accessed on 1 Juny 2023).

Conflicts of Interest

The author declares no conflict of interest.

References

- Nazir, A.; Rao, Y.; Wu, L.; Sun, L. Issues and challenges of aspect-based sentiment analysis: A comprehensive survey. IEEE Trans. Affect. Comput. 2020, 13, 845–863. [Google Scholar] [CrossRef]

- Mao, Y.; Shen, Y.; Yu, C.; Cai, L. A joint training dual-mrc framework for aspect based sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021; Volume 35, pp. 13543–13551. [Google Scholar]

- Xu, L.; Chia, Y.K.; Bing, L. Learning span-level interactions for aspect sentiment triplet extraction. arXiv 2021, arXiv:2107.12214. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010. [Google Scholar]

- Li, X.; Bing, L.; Zhang, W.; Lam, W. Exploiting BERT for end-to-end aspect-based sentiment analysis. arXiv 2019, arXiv:1910.00883. [Google Scholar]

- Yang, H.; Zeng, B.; Yang, J.; Song, Y.; Xu, R. A multi-task learning model for chinese-oriented aspect polarity classification and aspect term extraction. Neurocomputing 2021, 419, 344–356. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).