Graphs and Networks from an Algorithmic Information Perspective

Editors

Dr. Narsis A. Kiani

Collection Editor

Algorithmic Dynamics Lab, Department of Oncology-Pathology & Center of Molecular Medicine, Karolinska Institutet, 171 77 Solna, Sweden

Interests: dynamical systems; network theory; algorithmic information theory; machine learning; fuzzy logic; precision medicine; computational pharmacology; algorithmic information dynamics

Dr. Hector Zenil

Collection Editor

1. Oxford Immune Algorithmics, Reading RG1 3EU, UK

2. Algorithmic Dynamics Lab, Unit of Computational Medicine, SE-17177 Stockholm, Sweden

Interests: algorithmic information dynamics; causality; algorithmic information theory; cellular automata; machine learning; randomness

Prof. Dr. Jesper Tegnér

Collection Editor

1. Unit of Computational Medicine, Center for Molecular Medicine, Department of Medicine Solna, Karolinska Institute, 17177 Stockholm, Sweden

2. Biological and Environmental Sciences and Engineering Division, King Abdullah University of Science and Technology (KAUST), Thuwal 23955, Saudi Arabia

3. Computer, Electrical and Mathematical Sciences and Engineering Division, King Abdullah University of Science and Technology (KAUST), Thuwal 23955, Saudi Arabia

Interests: living systems; machine learning; systems medicine; causality

Topical Collection Information

Dear Colleagues,

Graph theory and network science are classic subjects in mathematics that were widely investigated in the 20th century. They have transformed research in many fields of science, from economics to medicine. However, it has become increasingly clear that the challenge of analyzing real-world networks cannot be met by tools that rely solely on statistical methods. Therefore, model-driven approaches are needed. The analysis is even more challenging for multiscale, multilayer networks that are neither static nor in equilibrium. Recent advances in network science suggest that algorithmic information theory could play an increasingly important role in overcoming the limits imposed by traditional statistical analysis when modeling evolving or interacting complex networks. Further progress on this front calls for new techniques that improve our mechanistic understanding of complex systems. It therefore also calls for closer interaction between network theory and algorithmic information theory.

This Topical Collection is a forum for presenting and exploring the foundations of new and improved techniques for analyzing and interpreting real-world natural and engineered complex systems. It covers not only the perspective of algorithmic information theory but also connections with dynamical systems and causality. Contributions addressing any of these issues and topics are very welcome. The following all fall within the scope of this Topical Collection: model-driven techniques that augment explainability, more interpretable approaches to machine learning, better causation-grounded graphical models, and algorithmic information dynamics applied to networks. However, approaches based on popular statistical compression algorithms such as Lempel-Ziv and its cognates are excluded.

Dr. Narsis A. Kiani

Dr. Hector Zenil

Prof. Dr. Jesper Tegnér

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass the pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as they are accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for the submission of manuscripts are available on the Instructions for Authors page. Entropy is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs).

Submitted papers should be well formatted and written in good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- graph theory

- algorithmic information theory

- complexity

- machine learning

- dynamic networks

- complex networks

- multilayer network

- deep learning

- causality

- explainability

Published Papers (5 papers)

Open Access Article

Auxiliary Graph for Attribute Graph Clustering

by Wang Li, Siwei Wang, Xifeng Guo, Zhenyu Zhou and En Zhu

Cited by 2 | Viewed by 2502

Abstract

Attribute graph clustering algorithms that include topological structural information into node characteristics for building robust representations have proven to have promising efficacy in a variety of applications. However, the presented topological structure emphasizes local links between linked nodes but fails to convey relationships

[...] Read more.

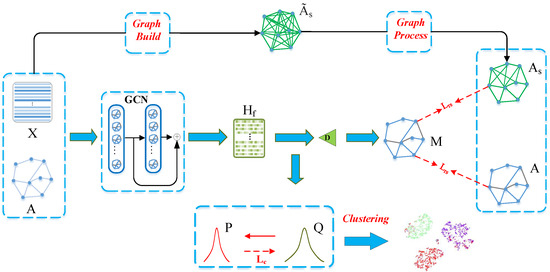

Attribute graph clustering algorithms have shown promising efficacy in a variety of applications. These algorithms incorporate topological structural information into node features to build robust representations. However, the given topological structure emphasizes local links between directly connected nodes. It fails to convey relationships between nodes that are not directly linked, which limits further improvement in clustering performance. To address this issue, we propose the Auxiliary Graph for Attribute Graph Clustering (AGAGC) technique. Specifically, we construct an additional graph from the node attributes. This graph serves as an auxiliary supervisor that complements the existing one. To generate a trustworthy auxiliary graph, we propose a noise-filtering approach. A more effective clustering model is then trained under the supervision of both the predefined graph and the auxiliary graph. Additionally, the embeddings of multiple layers are merged to improve the discriminative power of the representations. We also introduce a self-supervised clustering module to make the learned representations more clustering-aware. Finally, our model is trained using a triplet loss. Experiments on four publicly available benchmark datasets show that the proposed model outperforms or is comparable to state-of-the-art graph clustering models.
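The abstract does not specify how the auxiliary graph is built or how its noise is filtered, so the following is only an illustrative sketch under stated assumptions. It links each node to its `k` most cosine-similar nodes by attribute. It then drops links below a similarity threshold `min_sim`, as a crude stand-in for noise filtering. It also shows a standard triplet margin loss. All names and parameters (`knn_auxiliary_graph`, `k`, `min_sim`, `triplet_loss`, `margin`) are hypothetical and are not taken from the AGAGC paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two attribute vectors (0.0 if either is all-zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def knn_auxiliary_graph(X, k=2, min_sim=0.5):
    """Hypothetical auxiliary graph: link each node to its k most attribute-similar
    nodes, dropping candidates below min_sim as a simple stand-in for noise filtering."""
    n = len(X)
    edges = set()
    for i in range(n):
        # Rank all other nodes by attribute similarity, most similar first.
        sims = sorted(((cosine(X[i], X[j]), j) for j in range(n) if j != i), reverse=True)
        for s, j in sims[:k]:
            if s >= min_sim:
                edges.add((min(i, j), max(i, j)))  # store each undirected edge once
    return edges


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss on squared Euclidean distances: pull the positive
    closer to the anchor than the negative by at least `margin`."""
    d_pos = sum((a - p) ** 2 for a, p in zip(anchor, positive))
    d_neg = sum((a - q) ** 2 for a, q in zip(anchor, negative))
    return max(0.0, d_pos - d_neg + margin)
```

For example, take the attributes `[[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]` with `k=2`. The sketch returns the auxiliary edges `{(0, 1), (2, 3)}`. It groups nodes whose attributes point in similar directions, whether or not the original graph links them.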


Open Access Article

A Block-Based Adaptive Decoupling Framework for Graph Neural Networks

by Xu Shen, Yuyang Zhang, Yu Xie, Ka-Chun Wong and Chengbin Peng

Cited by 3 | Viewed by 2716

Abstract

Graph neural networks (GNNs) with feature propagation have demonstrated their power in handling unstructured data. However, feature propagation is also a smooth process that tends to make all node representations similar as the number of propagation increases. To address this problem, we propose

[...] Read more.

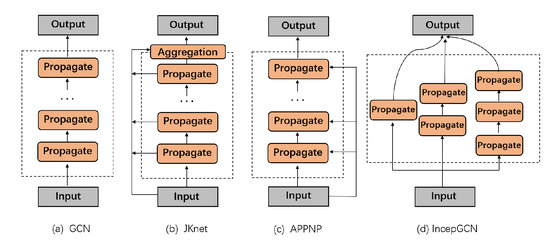

Graph neural networks (GNNs) with feature propagation have demonstrated their power in handling unstructured data. However, feature propagation is also a smoothing process. It tends to make all node representations similar as the number of propagation steps increases. To address this problem, we propose a novel Block-Based Adaptive Decoupling (BBAD) framework that produces effective deep GNNs by using backbone networks. In this framework, each block contains a shallow GNN in which feature propagation and transformation are decoupled. We also introduce layer regularizations, which automatically adjust the propagation depth, and flexible receptive fields, which provide different aggregation hops for each node. We prove that traditional coupled GNNs are more likely to suffer from over-smoothing as they become deeper. We also demonstrate the diversity of the outputs from different blocks of our framework. In the experiments, we perform semi-supervised and fully supervised node classification on benchmark datasets. The results verify that our method not only improves the performance of various backbone networks but also outperforms existing deep graph neural networks with fewer parameters.
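The over-smoothing effect described above can be illustrated with a small, generic sketch; this is not the BBAD framework. Assume a weight-free mean-aggregation step in which each node averages its own and its neighbours' features, i.e. x <- D^-1 (A + I) x. Every new value is then a convex combination of the old ones. As a result, the spread between the largest and smallest feature can never grow, and on a connected graph it shrinks towards zero as propagation is repeated. The helper names are hypothetical.

```python
def propagate(adj, x):
    """One weight-free propagation step: each node averages itself and its
    neighbours, i.e. x <- D^-1 (A + I) x for a 0/1 adjacency matrix `adj`."""
    n = len(adj)
    out = []
    for i in range(n):
        nbrs = [j for j in range(n) if adj[i][j]] + [i]  # neighbours plus self-loop
        out.append(sum(x[j] for j in nbrs) / len(nbrs))
    return out


def spread(x):
    """Gap between the largest and smallest node feature."""
    return max(x) - min(x)


def smoothing_curve(adj, x, steps):
    """Feature spread after 0, 1, ..., steps propagation steps."""
    curve = [spread(x)]
    for _ in range(steps):
        x = propagate(adj, x)
        curve.append(spread(x))
    return curve


path = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
print(smoothing_curve(path, [1.0, 0.0, 0.0, 0.0], 5))
```

Consider a four-node path with the one-hot features `[1, 0, 0, 0]`. The spread falls from 1.0 to 0.5 after one step and keeps decreasing with every further step, so the nodes become indistinguishable. Capping the propagation depth and letting each node use a different number of hops, as the abstract describes, is one way to counter this effect.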


Open Access Article

Relative Entropy of Distance Distribution Based Similarity Measure of Nodes in Weighted Graph Data

by Shihu Liu, Yingjie Liu, Chunsheng Yang and Li Deng

Cited by 6 | Viewed by 2900

Abstract

Many similarity measure algorithms of nodes in weighted graph data have been proposed by employing the degree of nodes in recent years. Despite these algorithms obtaining great results, there may be still some limitations. For instance, the strength of nodes is ignored. Aiming

[...] Read more.

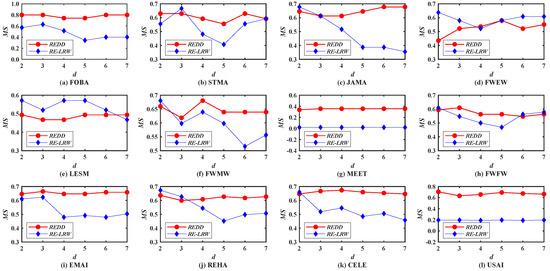

In recent years, many algorithms for measuring the similarity of nodes in weighted graph data have been proposed based on node degree. Although these algorithms achieve good results, they still have limitations; for instance, they ignore node strength. To address this issue, this paper proposes a node similarity measure based on the relative entropy of distance distributions. First, the structural weights of nodes are obtained by integrating their degree and strength. Next, the distance between any two nodes is calculated from their structural weights using the Euclidean distance formula, which yields the distance distribution of each node. The probability distribution of each node is then constructed by normalizing its distance distribution. Relative entropy can thus be applied to measure the difference between the probability distributions of the top-d important nodes and those of all nodes in the graph data. Finally, the similarity of two nodes is measured in terms of this difference. Experimental results show that the proposed algorithm, which accounts for node strength through relative entropy, has clear advantages in mining the most similar nodes and in link prediction.
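The pipeline above can be sketched under simplifying assumptions. The abstract does not give the exact formula for combining degree and strength, nor how the top-d nodes enter the comparison. This hypothetical version therefore makes three choices. It treats each node's (degree, strength) pair as its structural feature vector. It turns the Euclidean distances from a node to all nodes into a probability distribution. It scores two nodes by 1 / (1 + symmetrised relative entropy) between their distributions. The top-d selection step is omitted, and the smoothing constant `eps` and all function names are assumptions. This is not the authors' algorithm.

```python
import math


def structural_features(W):
    """Per-node (degree, strength) pairs from a symmetric weight matrix W (0 = no edge)."""
    return [(sum(1 for w in row if w), sum(row)) for row in W]


def distance_distribution(feats, i, eps=1e-6):
    """Euclidean distances from node i's feature vector to every node's, normalised
    into a probability distribution; eps keeps every entry positive so the relative
    entropy stays finite (larger eps makes the score less sensitive to zero distances)."""
    d = [math.dist(feats[i], f) + eps for f in feats]
    total = sum(d)
    return [v / total for v in d]


def kl(p, q):
    """Relative entropy D(p || q) in nats; assumes all entries are positive."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def similarity(W, i, j):
    """Hypothetical node similarity: 1 / (1 + symmetrised relative entropy)."""
    feats = structural_features(W)
    p = distance_distribution(feats, i)
    q = distance_distribution(feats, j)
    return 1.0 / (1.0 + 0.5 * (kl(p, q) + kl(q, p)))
```

In a star graph with unit weights, the leaves have identical (degree, strength) features. Their distributions coincide, so two leaves get similarity 1.0, while a leaf and the centre score far lower.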


Open Access Article

Structural Entropy of the Stochastic Block Models

by Jie Han, Tao Guo, Qiaoqiao Zhou, Wei Han, Bo Bai and Gong Zhang

Cited by 1 | Viewed by 2941

Abstract

With the rapid expansion of graphs and networks and the growing magnitude of data from all areas of science, effective treatment and compression schemes of context-dependent data is extremely desirable. A particularly interesting direction is to compress the data while keeping the “structural

[...] Read more.

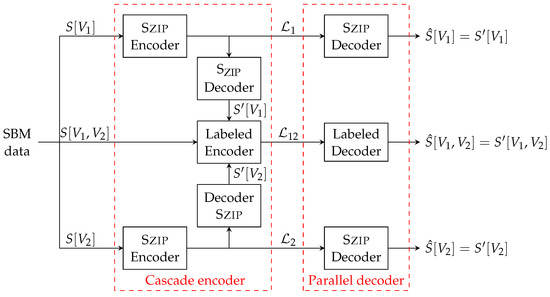

Graphs and networks are expanding rapidly, and the volume of data from all areas of science keeps growing. Effective processing and compression schemes for context-dependent data are therefore highly desirable. A particularly interesting direction is to compress the data while keeping only the “structural information” and ignoring the concrete labelings. In this direction, Choi and Szpankowski introduced structures (unlabeled graphs), which allowed them to compute the structural entropy of the Erdős–Rényi random graph model. They also provided a compression algorithm that asymptotically achieves this entropy limit and runs in linear expected time. In this paper, we consider stochastic block models with an arbitrary number of parts. We define a partitioned structural entropy for stochastic block models, which generalizes the structural entropy of unlabeled graphs and also encodes the partition information. We then compute the partitioned structural entropy of stochastic block models and provide a compression scheme that asymptotically achieves this entropy limit.
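For reference, Choi and Szpankowski's result for the Erdős–Rényi model G(n, p) can be written as H_S = C(n, 2) h(p) - log2(n!) + o(1). Here h(p) is the binary entropy, and the result holds for large n when p is not too close to 0 or 1. A labelled graph costs C(n, 2) h(p) bits. Discarding the labels saves roughly log2(n!) bits, because in this regime almost all graphs are asymmetric, so each structure corresponds to about n! labelled graphs. The sketch below evaluates only these leading terms and drops the o(1) term. The function names are illustrative.

```python
import math


def binary_entropy(p):
    """h(p) in bits, for 0 < p < 1."""
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def labelled_entropy_bits(n, p):
    """Entropy of a labelled G(n, p) graph: C(n, 2) independent Bernoulli(p) edge indicators."""
    return math.comb(n, 2) * binary_entropy(p)


def structural_entropy_bits(n, p):
    """Leading terms C(n, 2) h(p) - log2(n!) of the structural entropy (o(1) term dropped)."""
    log2_n_factorial = math.lgamma(n + 1) / math.log(2)  # log2(n!) without computing n!
    return labelled_entropy_bits(n, p) - log2_n_factorial
```

For instance, with n = 4 and p = 0.5 the labelled entropy is exactly 6 bits, and the leading-term structural estimate is 6 - log2(24), or about 1.415 bits. At such small n the o(1) term is not negligible, so this number only illustrates the arithmetic. The savings matter for large n, where log2(n!) grows like n log2 n.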


Open Access Article

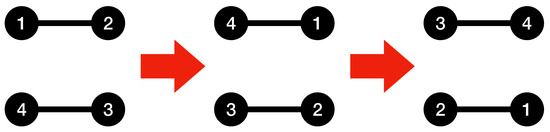

Kolmogorov Basic Graphs and Their Application in Network Complexity Analysis

by Amirmohammad Farzaneh, Justin P. Coon and Mihai-Alin Badiu

Cited by 2 | Viewed by 3791

Abstract


Over the years, measuring the complexity of networks and graphs has been of great interest to scientists. Kolmogorov complexity is one of the most important tools for measuring the complexity of an object. We formalized a method to calculate an upper bound on the Kolmogorov complexity of graphs and networks. First, the simplest possible graphs in terms of Kolmogorov complexity were identified. These graphs were then used to develop a method to estimate the complexity of a given graph. The proposed method uses the simple structures within a graph to capture its non-randomness, that is, the features that place a network closer to the non-random end of the spectrum. The resulting algorithm takes a graph as input and outputs an upper bound on its Kolmogorov complexity. This could be useful, for example, in evaluating the performance of graph compression methods.
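The sketch below illustrates what an upper bound on Kolmogorov complexity looks like in practice. It uses a standard counting argument rather than the Kolmogorov basic graphs method of the paper. Let N = n(n - 1)/2. A labelled simple graph on n vertices with m edges can be described in two ways. One is by n, m, and the index of its edge set among the C(N, m) possible ones. The other is simply by its N adjacency bits. Whichever is shorter gives a valid upper bound on K(G), up to an additive constant. The helper names and the choice of the Elias gamma code for integers are assumptions made for clarity.

```python
import math


def gamma_bits(x):
    """Length of the Elias gamma code for x + 1, a self-delimiting code for integers x >= 0."""
    return 2 * (x + 1).bit_length() - 1


def kolmogorov_upper_bound_bits(n, edges):
    """Upper bound, up to an additive constant, on K(G) for a labelled simple graph G
    with n vertices and a list of distinct edges. After encoding n, one flag bit
    selects the shorter of two descriptions:
      * raw: one bit per vertex pair (N = n(n-1)/2 bits);
      * counting: the edge count m, then the index of the edge set among C(N, m) sets."""
    N = n * (n - 1) // 2
    m = len(edges)
    raw = N
    counting = gamma_bits(m) + math.ceil(math.log2(math.comb(N, m)))
    return 1 + gamma_bits(n) + min(raw, counting)
```

With n = 10, this code needs 9 bits for the empty graph and 19 bits for the complete graph. A graph with 22 of the 45 possible edges falls back to the 45-bit adjacency listing, for 53 bits in total. The counting bound recognizes very sparse or very dense graphs as non-random. It misses structured graphs with a typical edge count, which is the gap that exploiting simple substructures, as the abstract describes, is aimed at.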
