Predicting Cartographic Symbol Location with Eye-Tracking Data and Machine Learning Approach

Influence of Time Pressure on Successive Visual Searches

eyeNotate: Interactive Annotation of Mobile Eye Tracking Data Based on Few-Shot Image Classification

Improving Reading and Eye Movement Control in Readers with Oculomotor and Visuo-Attentional Deficits

Journal Description

Journal of Eye Movement Research (JEMR) is an international, peer-reviewed, open access journal on all aspects of oculomotor functioning, including methodology of eye recording, neurophysiological and cognitive models, attention, reading, as well as applications in neurology, ergonomy, media research and other areas, published bimonthly online by MDPI (from Volume 18, Issue 1, 2025).

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, SCIE (Web of Science), PubMed, PMC, and other databases.

- Journal Rank: JCR - Q1 (Ophthalmology) / CiteScore - Q2 (Ophthalmology)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 39.9 days after submission; acceptance to publication is undertaken in 5.8 days (median values for papers published in this journal in the first half of 2025).

- Recognition of Reviewers: APC discount vouchers, optional signed peer review, and reviewer names published annually in the journal.

Impact Factor: 2.8 (2024); 5-Year Impact Factor: 2.8 (2024)

Latest Articles

An Exploratory Eye-Tracking Study of Breast-Cancer Screening Ads: A Visual Analytics Framework and Descriptive Atlas

J. Eye Mov. Res. 2025, 18(6), 64; https://doi.org/10.3390/jemr18060064 - 4 Nov 2025

Abstract

Successful health promotion involves messages that are quickly captured and held long enough to permit eligibility, credibility, and calls to action to be coded. This research develops an exploratory eye-tracking atlas of breast cancer screening ads viewed by midlife women and a replicable pipeline that distinguishes early capture from long-term processing. Areas of Interest are divided into design-influential categories and graphed with two complementary measures: first hit and time to first fixation for entry and a tie-aware pairwise dominance model for dwell that produces rankings and an “early-vs.-sticky” quadrant visualization. Across creatives, pictorial and symbolic features were more likely to capture the first glance when they were perceptually dominant, while layouts containing centralized headlines or institutional cues deflected entry to the message and source. Prolonged attention was consistently focused on blocks of text, locations, and badges of authoring over ornamental pictures, demarcating the functional difference between capture and processing. Subgroup differences indicated audience-sensitive shifts: Older and household families shifted earlier toward source cues, more educated audiences shifted toward copy and locations, and younger or single viewers shifted toward symbols and images. Internal diagnostics verified that pairwise matrices were consistent with standard dwell summaries, verifying the comparative approach. The atlas converts the patterns into design-ready heuristics: defend sticky and early pieces, encourage sticky but late pieces by pushing them toward probable entry channels, de-clutter early but not sticky pieces to convert to processing, and re-think pieces that are neither. In practice, the diagnostics can be incorporated into procurement, pretesting, and briefs by agencies, educators, and campaign managers in order to enhance actionability without sacrificing segmentation of audiences. 
As an exploratory investigation, this study invites replication with larger and more diverse samples, generalizations to dynamic media, and associations with downstream measures such as recall and uptake of services.

Full article

Open AccessArticle

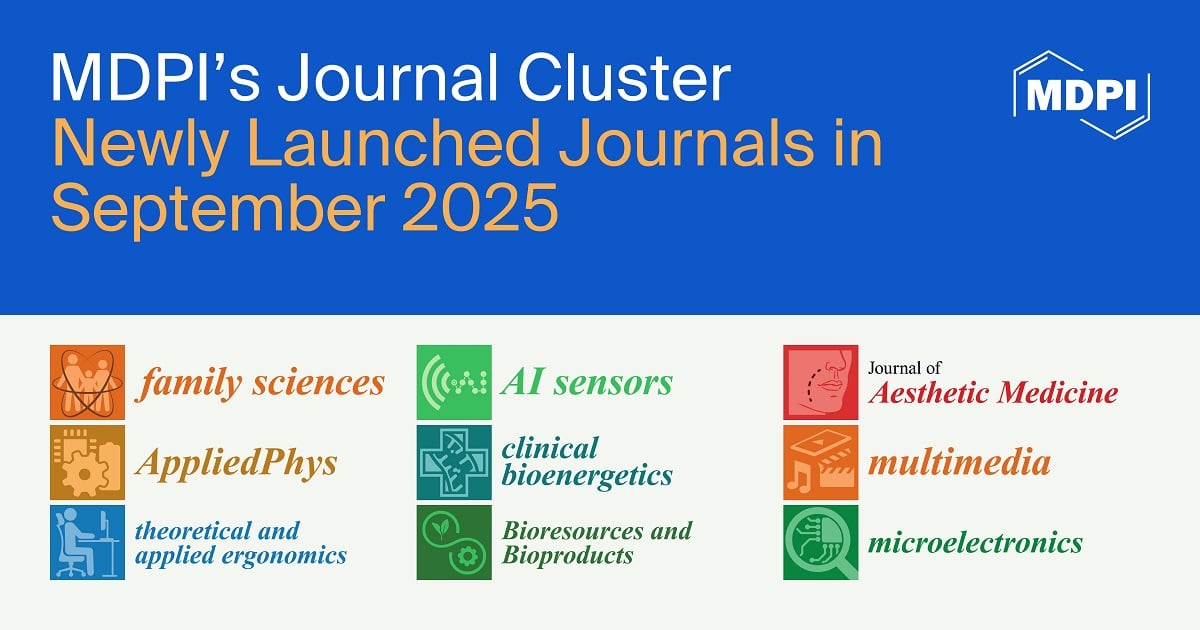

Effects of Multimodal AR-HUD Navigation Prompt Mode and Timing on Driving Behavior

by

Qi Zhu, Ziqi Liu, Youlan Li and Jung Euitay

J. Eye Mov. Res. 2025, 18(6), 63; https://doi.org/10.3390/jemr18060063 - 4 Nov 2025

Abstract

Current research on multimodal AR-HUD navigation systems primarily focuses on the presentation forms of auditory and visual information, yet the effects of synchrony between auditory and visual prompts as well as prompt timing on driving behavior and attention mechanisms remain insufficiently explored. This study employed a 2 (prompt mode: synchronous vs. asynchronous) × 3 (prompt timing: −2000 m, −1000 m, −500 m) within-subject experimental design to assess the impact of multimodal prompt synchrony and prompt distance on drivers’ reaction time, sustained attention, and eye movement behaviors, including average fixation duration and fixation count. Behavioral data demonstrated that both prompt mode and prompt timing significantly influenced drivers’ response performance (indexed by reaction time) and attention stability, with synchronous prompts at −1000 m yielding optimal performance. Eye-tracking results further revealed that synchronous prompts significantly enhanced fixation stability and reduced visual load, indicating more efficient information integration. Therefore, prompt mode and prompt timing significantly affect drivers’ perceptual processing and operational performance. Delivering synchronous auditory and visual prompts at −1000 m achieves an optimal balance between information timeliness and multimodal integration. This study recommends the following: (1) maintaining temporal consistency in multimodal prompts to facilitate perceptual integration and (2) controlling prompt distance within an intermediate range (−1000 m) to optimize the perception–action window, thereby improving the safety and efficiency of AR-HUD navigation systems.

Full article

Graphical abstract

Open AccessArticle

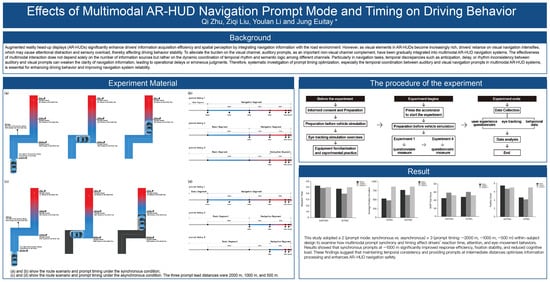

The Influence of Social Media-like Cues on Visual Attention—An Eye-Tracking Study with Food Products

by

Maria Mamalikou, Konstantinos Gkatzionis and Malamatenia Panagiotou

J. Eye Mov. Res. 2025, 18(6), 62; https://doi.org/10.3390/jemr18060062 - 4 Nov 2025

Abstract

Social media has developed into a leading advertising platform, with Instagram likes serving as visual cues that may influence consumer perception and behavior. The present study investigated the effect of Instagram likes on visual attention, memory, and food evaluations focusing on traditional Greek food posts, using eye-tracking technology. The study assessed whether a higher number of likes increased attention to the food area, enhanced memory recall of food names, and influenced subjective ratings (liking, perceived tastiness, and intention to taste). The results demonstrated no significant differences in overall viewing time, memory performance, or evaluation ratings between high-like and low-like conditions. Although not statistically significant, descriptive trends suggested that posts with a higher number of likes tended to be evaluated more positively and the likes AOI showed a trend towards attracting more visual attention. The observed trends point to a possible subtle role of likes in users’ engagement with food posts, influencing how they process and evaluate such content. These findings add to the discussion about the effect of social media likes on information processing when individuals observe food pictures on social media.

Full article

Graphical abstract

Open AccessArticle

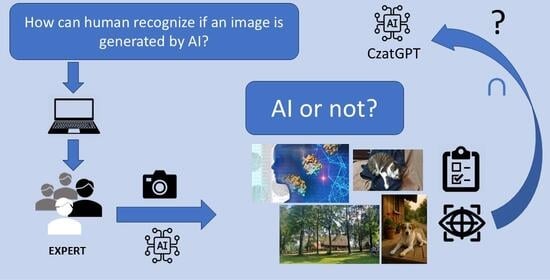

AI Images vs. Real Photographs: Investigating Visual Recognition and Perception

by

Veslava Osińska, Weronika Kortas, Adam Szalach and Marc Welter

J. Eye Mov. Res. 2025, 18(6), 61; https://doi.org/10.3390/jemr18060061 - 3 Nov 2025

Abstract

Recently, the photorealism of generated images has improved noticeably due to the development of AI algorithms. These are high-resolution images of human faces and bodies, cats and dogs, vehicles, and other categories of objects that the untrained eye cannot distinguish from authentic photographs. The study assessed how people perceive 12 pictures generated by AI vs. 12 real photographs. Six main categories of stimuli were selected: architecture, art, faces, cars, landscapes, and pets. The visual perception of selected images was studied by means of eye tracking and gaze patterns as well as time characteristics, compared with consideration to the respondent groups’ gender and knowledge of AI graphics. After the experiment, the study participants analysed the pictures again in order to describe the reasons for their choice. The results show that AI images of pets and real photographs of architecture were the easiest to identify. The largest differences in visual perception are between men and women as well as between those experienced in digital graphics (including AI images) and the rest. Based on the analysis, several recommendations are suggested for AI developers and end-users.

Full article

Graphical abstract

Open AccessArticle

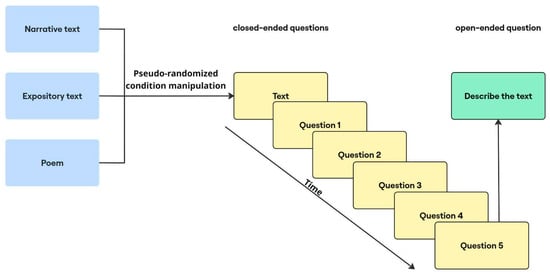

The Influence of Text Genre on Eye Movement Patterns During Reading

by

Maksim Markevich and Anastasiia Streltsova

J. Eye Mov. Res. 2025, 18(6), 60; https://doi.org/10.3390/jemr18060060 - 3 Nov 2025

Abstract

Successful reading comprehension depends on many factors, including text genre. Eye-tracking studies indicate that genre shapes eye movement patterns at a local level. Although the reading of expository and narrative texts by adolescents has been described in the literature, the reading of poetry by adolescents remains understudied. In this study, we used scanpath analysis to examine how genre and comprehension level influence global eye movement strategies in adolescents (N = 44). Thus, the novelty of this study lies in the use of scanpath analysis to measure global eye movement strategies employed by adolescents while reading narrative, expository, and poetic texts. Two distinct reading patterns emerged: a forward reading pattern (linear progression) and a regressive reading pattern (frequent lookbacks). Readers tended to use regressive patterns more often with expository and poetic texts, while forward patterns were more common with a narrative text. Comprehension level also played a significant role, with readers with a higher level of comprehension relying more on regressive patterns for expository and poetic texts. The results of this experiment suggest that scanpaths effectively capture genre-driven differences in reading strategies, underscoring how genre expectations may shape visual processing during reading.

Open Access Article

Sequential Fixation Behavior in Road Marking Recognition: Implications for Design

by

Takaya Maeyama, Hiroki Okada and Daisuke Sawamura

J. Eye Mov. Res. 2025, 18(5), 59; https://doi.org/10.3390/jemr18050059 - 21 Oct 2025

Abstract

This study examined how drivers’ eye fixations change before, during, and after recognizing road markings, and how these changes relate to driving speed, visual complexity, cognitive functions, and demographics. Twenty licensed drivers viewed on-board movies showing digit or character road markings while their eye movements were tracked. Fixation positions and dispersions were analyzed. Results showed that, regardless of marking type, fixations were horizontally dispersed before and after recognition but became vertically concentrated during recognition, with fixation points shifting higher (p < 0.001) and horizontal dispersion decreasing (p = 0.01). During the recognition period, fixations moved upward and narrowed horizontally toward the final third (p = 0.034), suggesting increased focus. Longer fixations were linked to slower speeds for digits (p = 0.029) and more characters for character markings (p < 0.001). No significant correlations were found with cognitive functions or demographics. These findings suggest that drivers first scan broadly, then concentrate on markings as they approach. For optimal recognition, simple or essential information should be placed centrally or lower, while detailed content should appear higher to align with natural gaze patterns. In high-speed environments, markings should prioritize clarity and brevity in central positions to ensure safe and rapid recognition.

Open Access Article

Oculomotor Behavior of L2 Readers with Typologically Distant L1 Background: The “Big Three” Effects of Word Length, Frequency, and Predictability

by

Marina Norkina, Daria Chernova, Svetlana Alexeeva and Maria Harchevnik

J. Eye Mov. Res. 2025, 18(5), 58; https://doi.org/10.3390/jemr18050058 - 18 Oct 2025

Abstract

Oculomotor reading behavior is influenced by both universal factors, like the “big three” of word length, frequency, and contextual predictability, and language-specific factors, such as script and grammar. The aim of this study was to examine the influence of the “big three” factors on L2 reading focusing on a typologically distant L1/L2 pair with dramatic differences in script and grammar. A total of 41 native Chinese-speaking learners of Russian (levels A2-B2) and 40 native Russian speakers read a corpus of 90 Russian sentences for comprehension. Their eye movements were recorded with EyeLink 1000+. We analyzed both early (gaze duration and skipping rate) and late (regression rate and rereading time) eye movement measures. As expected, the “big three” effects influenced oculomotor behavior in both L1 and L2 readers, being more pronounced for L2, but substantial differences were also revealed. Word frequency in L1 reading primarily influenced early processing stages, whereas in L2 reading it remained significant in later stages as well. Predictability had an immediate effect on skipping rates in L1 reading, while L2 readers only exhibited it in late measures. Word length was the only factor that interacted with L2 language exposure which demonstrated adjustment to alphabetic script and polymorphemic word structure. Our findings provide new insights into the processing challenges of L2 readers with typologically distant L1 backgrounds.

Open Access Article

Visual Strategies for Guiding Gaze Sequences and Attention in Yi Symbols: Eye-Tracking Insights

by

Bo Yuan and Sakol Teeravarunyou

J. Eye Mov. Res. 2025, 18(5), 57; https://doi.org/10.3390/jemr18050057 - 16 Oct 2025

Abstract

This study investigated the effectiveness of visual strategies in guiding gaze behavior and attention on Yi graphic symbols using eye-tracking. Four strategies, color brightness, layering, line guidance, and size variation, were tested with 34 Thai participants unfamiliar with Yi symbol meanings. Gaze sequence analysis, using Levenshtein distance and similarity ratio, showed that bright colors, layered arrangements, and connected lines enhanced alignment with intended gaze sequences, while size variation had minimal effect. Bright red symbols and lines captured faster initial fixations (Time to First Fixation, TTFF) on key Areas of Interest (AOIs), unlike layering and size. Lines reduced dwell time at sequence starts, promoting efficient progression, while larger symbols sustained longer attention, though inconsistently. Color and layering showed no consistent dwell time effects. These findings inform Yi graphic symbol design for effective cross-cultural visual communication.
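For readers unfamiliar with the gaze sequence comparison mentioned in this abstract, the following is a minimal sketch of Levenshtein distance applied to AOI sequences. The abstract does not define its similarity ratio, so the normalisation used here (one minus distance divided by the longer sequence length) is an assumption, and the AOI labels `A` to `D` and both function names are hypothetical.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute each cost 1)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def similarity_ratio(a, b):
    """Assumed normalisation: 1 - distance / length of the longer sequence."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(a, b) / longest


# Hypothetical intended and observed AOI visiting orders.
intended = ["A", "B", "C", "D"]
observed = ["A", "C", "B", "D"]
distance = levenshtein(intended, observed)
ratio = similarity_ratio(intended, observed)
```

Under this assumed normalisation, a ratio of 1.0 means the observed order matches the intended order exactly, and lower values mean more edits are needed to align them.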

Open Access Article

DyslexiaNet: Examining the Viability and Efficacy of Eye Movement-Based Deep Learning for Dyslexia Detection

by

Ramis İleri, Çiğdem Gülüzar Altıntop, Fatma Latifoğlu and Esra Demirci

J. Eye Mov. Res. 2025, 18(5), 56; https://doi.org/10.3390/jemr18050056 - 15 Oct 2025

Abstract

Dyslexia is a neurodevelopmental disorder that impairs reading, affecting 5–17.5% of children and representing the most common learning disability. Individuals with dyslexia experience decoding, reading fluency, and comprehension difficulties, hindering vocabulary development and learning. Early and accurate identification is essential for targeted interventions. Traditional diagnostic methods rely on behavioral assessments and neuropsychological tests, which can be time-consuming and subjective. Recent studies suggest that physiological signals, such as electrooculography (EOG), can provide objective insights into reading-related cognitive and visual processes. Despite this potential, there is limited research on how typeface and font characteristics influence reading performance in dyslexic children using EOG measurements. To address this gap, we investigated the most suitable typefaces for Turkish-speaking children with dyslexia by analyzing EOG signals recorded during reading tasks. We developed a novel deep learning framework, DyslexiaNet, using scalogram images from horizontal and vertical EOG channels, and compared it with AlexNet, MobileNet, and ResNet. Reading performance indicators, including reading time, blink rate, regression rate, and EOG signal energy, were evaluated across multiple typefaces and font sizes. Results showed that typeface significantly affects reading efficiency in dyslexic children. The BonvenoCF font was associated with shorter reading times, fewer regressions, and lower cognitive load. DyslexiaNet achieved the highest classification accuracy (99.96% for horizontal channels) while requiring lower computational load than other networks. These findings demonstrate that EOG-based physiological measurements combined with deep learning offer a non-invasive, objective approach for dyslexia detection and personalized typeface selection. 
This method can provide practical guidance for designing educational materials and support clinicians in early diagnosis and individualized intervention strategies for children with dyslexia.

Open Access Article

Head and Eye Movements During Pedestrian Crossing in Patients with Visual Impairment: A Virtual Reality Eye Tracking Study

by

Mark Mervic, Ema Grašič, Polona Jaki Mekjavić, Nataša Vidovič Valentinčič and Ana Fakin

J. Eye Mov. Res. 2025, 18(5), 55; https://doi.org/10.3390/jemr18050055 - 15 Oct 2025

Abstract

Real-world navigation depends on coordinated head–eye behaviour that standard tests of visual function miss. We investigated how visual impairment affects traffic navigation, whether behaviour differs by visual impairment type, and whether this functional grouping better explains performance than WHO categorisation. Using a virtual reality (VR) headset with integrated head and eye tracking, we evaluated detection of moving cars and safe road-crossing opportunities in 40 patients with central, peripheral, or combined visual impairment and 19 controls. Only two patients with a combination of very low visual acuity and severely constricted visual fields failed both visual tasks. Overall, patients identified safe-crossing intervals 1.3–1.5 s later than controls (p ≤ 0.01). Head-eye movement profiles diverged by visual impairment: patients with central impairment showed shorter, more frequent saccades (p < 0.05); patients with peripheral impairment showed exploratory behaviour similar to controls; while patients with combined impairment executed fewer microsaccades (p < 0.05), reduced total macrosaccade amplitude (p < 0.05), and fewer head turns (p < 0.05). Classification by impairment type explained behaviour better than WHO categorisation. These findings challenge acuity/field-based classifications and support integrating functional metrics into risk stratification and targeted rehabilitation, with VR providing a safe, scalable assessment tool.

Open Access Article (Feature Paper)

Test–Retest Reliability of a Computerized Hand–Eye Coordination Task

by

Antonio Ríder-Vázquez, Estanislao Gutiérrez-Sánchez, Clara Martinez-Perez and María Carmen Sánchez-González

J. Eye Mov. Res. 2025, 18(5), 54; https://doi.org/10.3390/jemr18050054 - 14 Oct 2025

Abstract

Background: Hand–eye coordination is essential for daily functioning and sports performance, but standardized digital protocols for its reliable assessment are limited. This study aimed to evaluate the intra-examiner repeatability and inter-examiner reproducibility of a computerized protocol (COI-SV®) for assessing hand–eye coordination in healthy adults, as well as the influence of age and sex. Methods: Seventy-eight adults completed four sessions of a computerized visual–motor task requiring rapid and accurate responses to randomly presented targets. Accuracy and response times were analyzed using repeated-measures and reliability analyses. Results: Accuracy showed a small session effect and minor examiner differences on the first day, whereas response times were consistent across sessions. Men generally responded faster than women, and response times increased slightly with age. Overall, reliability indices indicated moderate-to-good repeatability and reproducibility for both accuracy and response time measures. Conclusions: The COI-SV® protocol provides a robust, objective, and reproducible measurement of hand–eye coordination, supporting its use in clinical, sports, and research settings.

Open Access Article

Recognition and Misclassification Patterns of Basic Emotional Facial Expressions: An Eye-Tracking Study in Young Healthy Adults

by

Neşe Alkan

J. Eye Mov. Res. 2025, 18(5), 53; https://doi.org/10.3390/jemr18050053 - 11 Oct 2025

Abstract

Accurate recognition of basic facial emotions is well documented, yet the mechanisms of misclassification and their relation to gaze allocation remain under-reported. The present study utilized a within-subjects eye-tracking design to examine both accurate and inaccurate recognition of five basic emotions (anger, disgust, fear, happiness, and sadness) in healthy young adults. Fifty participants (twenty-four women) completed a forced-choice categorization task with 10 stimuli (female/male poser × emotion). A remote eye tracker (60 Hz) recorded fixations mapped to eyes, nose, and mouth areas of interest (AOIs). The analyses combined accuracy and decision-time statistics with heatmap comparisons of misclassified versus accurate trials within the same image. Overall accuracy was 87.8% (439/500). Misclassification patterns depended on the target emotion, but not on participant gender. Fear male was most often misclassified (typically as disgust), and sadness female was frequently labeled as fear or disgust; disgust was the most incorrectly attributed response. For accurate trials, decision time showed main effects of emotion (p < 0.001) and participant gender (p = 0.033): happiness was categorized fastest and anger slowest, and women responded faster overall, with particularly fast response times for sadness. The AOI results revealed strong main effects and an AOI × emotion interaction (p < 0.001): eyes received the most fixations, but fear drew relatively more mouth sampling and sadness more nose sampling. Crucially, heatmaps showed an upper-face bias (eye AOI) in inaccurate trials, whereas accurate trials retained eye sampling and added nose and mouth AOI coverage, which aligned with diagnostic cues. These findings indicate that the scanpath strategy, in addition to information availability, underpins success and failure in basic-emotion recognition, with implications for theory, targeted training, and affective technologies.

Open Access Article

The Effect of Visual Attention Dispersion on Cognitive Response Time

by

Yejin Lee and Kwangtae Jung

J. Eye Mov. Res. 2025, 18(5), 52; https://doi.org/10.3390/jemr18050052 - 10 Oct 2025

Abstract

In safety-critical systems like nuclear power plants, the rapid and accurate perception of visual interface information is vital. This study investigates the relationship between visual attention dispersion measured via heatmap entropy (as a specific measure of gaze entropy) and response time during information search tasks. Sixteen participants viewed a prototype of an accident response support system and answered questions at three difficulty levels while their eye movements were tracked using Tobii Pro Glasses 2. Results showed a significant positive correlation (r = 0.595, p < 0.01) between heatmap entropy and response time, indicating that more dispersed attention leads to longer task completion times. This pattern held consistently across all difficulty levels. These findings suggest that heatmap entropy is a useful metric for evaluating user attention strategies and can inform interface usability assessments in high-stakes environments.
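As a rough illustration of the dispersion measure named in this abstract, the sketch below computes the Shannon entropy of a fixation heatmap. It assumes a plain grid-binned heatmap with no smoothing; the function name, grid size, and screen dimensions are hypothetical, and the study's actual procedure may differ.

```python
import math
from collections import Counter


def heatmap_entropy(fixations, width, height, bins_x=8, bins_y=8):
    """Shannon entropy (bits) of fixation counts binned on a bins_x by bins_y grid.

    Assumes every (x, y) fixation lies within the width by height screen area.
    """
    counts = Counter()
    for x, y in fixations:
        col = min(int(x / width * bins_x), bins_x - 1)
        row = min(int(y / height * bins_y), bins_y - 1)
        counts[(row, col)] += 1
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical fixations on an 800 x 800 px display.
focused = [(100, 100)] * 4                               # all in one cell
dispersed = [(50, 50), (150, 50), (50, 150), (150, 150)]  # four different cells
focused_entropy = heatmap_entropy(focused, 800, 800)
dispersed_entropy = heatmap_entropy(dispersed, 800, 800)
```

Higher values indicate that fixations are spread over more grid cells, which is the sense of "more dispersed attention" in the abstract.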

Open Access Article

Diagnosing Colour Vision Deficiencies Using Eye Movements (Without Dedicated Eye-Tracking Hardware)

by

Aryaman Taore, Gabriel Lobo, Philip R. K. Turnbull and Steven C. Dakin

J. Eye Mov. Res. 2025, 18(5), 51; https://doi.org/10.3390/jemr18050051 - 2 Oct 2025

Abstract

Purpose: To investigate the efficacy of a novel test for diagnosing colour vision deficiencies using reflexive eye movements measured using an unmodified tablet. Methods: This study followed a cross-sectional design, where thirty-three participants aged between 17 and 65 years were recruited. The participant group comprised 23 controls, 8 deuteranopes, and 2 protanopes. An anomaloscope was employed to determine the colour vision status of these participants. The study methodology involved using an Apple iPad Pro’s built-in eye-tracking capabilities to record eye movements in response to coloured patterns drifting on the screen. Through an automated analysis of these movements, the researchers estimated individuals’ red–green equiluminant point and their equivalent luminance contrast. Results: Estimates of the red–green equiluminant point and the equivalent luminance contrast were used to classify participants’ colour vision status with a sensitivity rate of 90.0% and a specificity rate of 91.30%. Conclusions: The novel colour vision test administered using an unmodified tablet was found to be effective in diagnosing colour vision deficiencies and has the potential to be a practical and cost-effective alternative to traditional methods. Translation Relevance: The test’s objectivity, its straightforward implementation on a standard tablet, and its minimal requirement for patient cooperation, all contribute to the wider accessibility of colour vision diagnosis. This is particularly advantageous for demographics like children who might be challenging to engage, but for whom early detection is of paramount importance.
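The reported rates can be related to the group sizes with a short worked example. The abstract gives 23 controls and 10 colour-deficient participants (8 deuteranopes and 2 protanopes) but does not report the confusion matrix, so the counts below are an assumption: they are counts consistent with the stated 90.0% sensitivity and 91.30% specificity if every participant was classified.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # share of deficient participants detected
    specificity = tn / (tn + fp)   # share of controls correctly cleared
    return sensitivity, specificity


# Assumed counts, not reported in the abstract:
# 9 of 10 colour-deficient participants detected, 21 of 23 controls cleared.
sens, spec = sensitivity_specificity(tp=9, fn=1, tn=21, fp=2)
summary = f"sensitivity {sens:.1%}, specificity {spec:.2%}"
```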

Full article

Figure 1

Open Access Article

Visual Attention to Economic Information in Simulated Ophthalmic Deficits: A Remote Eye-Tracking Study

by Cansu Yuksel Elgin and Ceyhun Elgin

J. Eye Mov. Res. 2025, 18(5), 50; https://doi.org/10.3390/jemr18050050 - 2 Oct 2025

Abstract


This study investigated how simulated ophthalmic visual field deficits affect visual attention and economic information processing. Using webcam-based eye tracking, 227 participants with normal vision recruited through Amazon Mechanical Turk were assigned to control, central vision loss, peripheral vision loss, or scattered vision loss simulation conditions. Participants viewed economic stimuli of varying complexity while eye movements, cognitive load, and comprehension were measured. All deficit conditions showed altered oculomotor behaviors. Central vision loss produced the most severe impairments: fixation durations increased by 43.6%, scanpaths were 68% longer, and comprehension accuracy was 61.2% versus 87.3% for controls. Visual deficits interacted with information complexity, showing accelerated impairment for complex stimuli. Mediation analysis revealed that 47% of comprehension deficits were mediated through altered attention patterns. Cognitive load was significantly elevated, with central vision loss participants reporting 84% higher mental demand than controls. These findings demonstrate that visual field deficits fundamentally alter economic information processing through both direct perceptual limitations and compensatory attention strategies. The results also show that webcam-based eye tracking is feasible for studying simulated visual deficits and suggest that different types of simulated visual deficits may require distinct information presentation strategies.


Open Access Article

Guiding the Gaze: How Bionic Reading Influences Eye Movements

by T. R. Beelders

J. Eye Mov. Res. 2025, 18(5), 49; https://doi.org/10.3390/jemr18050049 - 1 Oct 2025

Abstract


In recent years, Bionic reading has been introduced as a means to combat superficial reading and low comprehension rates. This paper compares participants' eye movements when reading a passage in standard font and when reading an additional passage in Bionic font. It was found that Bionic font does not significantly change eye movements when reading. Fixation durations, number of fixations and reading speeds were not significantly different between the two formats. Furthermore, fixations were spread throughout the word and not only on leading characters, even when using Bionic font; hence, participants were not able to “auto-complete” the words. Additionally, Bionic font did not facilitate easier processing of low-frequency or unfamiliar words. Overall, it would appear that Bionic font, in the short term, does not affect reading. Further investigation is needed to determine whether a long-term intervention with Bionic font is more meaningful than standard interventions.


(This article belongs to the Special Issue Eye Movements in Reading and Related Difficulties)


Open Access Article

Tracking the Impact of Age and Dimensional Shifts on Situation Model Updating During Narrative Text Comprehension

by César Campos-Rojas and Romualdo Ibáñez-Orellana

J. Eye Mov. Res. 2025, 18(5), 48; https://doi.org/10.3390/jemr18050048 - 26 Sep 2025

Abstract


Studies on the relationship between age and situation model updating during narrative text reading have mainly used response or reading times. This study enhances previous measures (working memory, recognition probes, and comprehension) by incorporating eye-tracking techniques to compare situation model updating between young and older Chilean adults. The study included 82 participants (40 older adults and 42 young adults) who read two narrative texts under three conditions (no shift, spatial shift, and character shift) using a between-subject (age) and within-subject (dimensional change) design. The results show that, while differences in working memory capacity were observed between the groups, these differences did not impact situation model comprehension. Younger adults performed better in recognition tests regardless of updating conditions. Eye-tracking data showed increased fixation times for dimensional shifts and longer reading times in older adults, with no interaction between age and dimensional shifts.


Open Access Review

A Comprehensive Framework for Eye Tracking: Methods, Tools, Applications, and Cross-Platform Evaluation

by Govind Ram Chhimpa, Ajay Kumar, Sunita Garhwal, Dhiraj Kumar, Niyaz Ahmad Wani, Mudasir Ahmad Wani and Kashish Ara Shakil

J. Eye Mov. Res. 2025, 18(5), 47; https://doi.org/10.3390/jemr18050047 - 23 Sep 2025

Abstract


Eye tracking, a fundamental process in gaze analysis, involves measuring the point of gaze or eye motion. It is crucial in numerous applications, including human–computer interaction (HCI), education, health care, and virtual reality. This study delves into eye-tracking concepts, terminology, performance parameters, applications, and techniques, focusing on modern and efficient approaches such as video-oculography (VOG)-based systems, deep learning models for gaze estimation, wearable and cost-effective devices, and integration with virtual/augmented reality and assistive technologies. These contemporary methods, prevalent for over two decades, significantly contribute to developing cutting-edge eye-tracking applications. The findings underscore the significance of diverse eye-tracking techniques in advancing eye-tracking applications. They leverage machine learning to glean insights from existing data, enhance decision-making, and minimize the need for manual calibration during tracking. Furthermore, the study explores and recommends strategies to address limitations/challenges inherent in specific eye-tracking methods and applications. Finally, the study outlines future directions for leveraging eye tracking across various developed applications, highlighting its potential to continue evolving and enriching user experiences.


Open Access Article

Microsaccade Activity During Visuospatial Working Memory in Early-Stage Parkinson’s Disease

by Katherine Farber, Linjing Jiang, Mario Michiels, Ignacio Obeso and Hoi-Chung Leung

J. Eye Mov. Res. 2025, 18(5), 46; https://doi.org/10.3390/jemr18050046 - 22 Sep 2025

Abstract


Fixational saccadic eye movements (microsaccades) have been associated with cognitive processes, especially in tasks requiring spatial attention and memory. Alterations in oculomotor and cognitive control are commonly observed in Parkinson’s disease (PD), though it is unclear to what extent microsaccade activity is affected. We acquired eye movement data from sixteen participants with early-stage PD and thirteen older healthy controls to examine the effects of dopamine modulation on microsaccade activity during the delay period of a spatial working memory task. Some microsaccade characteristics, like amplitude and duration, were moderately larger in PD participants “on” their dopaminergic medication than in healthy controls or in PD participants “off” medication, while PD participants exhibited microsaccades with a linear amplitude–velocity relationship comparable to controls. Both groups showed similar microsaccade rate patterns across task events, with most participants showing a horizontal bias in microsaccade direction during the delay period regardless of the remembered target location. Overall, our data suggest minimal involvement of microsaccades during visuospatial working memory maintenance under conditions without explicit attentional cues in both subject groups. However, moderate effects of PD-related dopamine deficiency were observed for microsaccade size during working memory maintenance.


Open Access Article

Active Gaze Guidance and Pupil Dilation Effects Through Subject Engagement in Ophthalmic Imaging

by David Harings, Niklas Bauer, Damian Mendroch, Uwe Oberheide and Holger Lubatschowski

J. Eye Mov. Res. 2025, 18(5), 45; https://doi.org/10.3390/jemr18050045 - 19 Sep 2025

Abstract


Modern ophthalmic imaging methods such as optical coherence tomography (OCT) typically require expensive scanner components to direct the light beam across the retina while the patient’s gaze remains fixed. This proof-of-concept experiment investigates whether the patient’s natural eye movements can replace mechanical scanning by guiding the gaze along predefined patterns. An infrared fundus camera setup was used with nine healthy adults (aged 20–57) who completed tasks comparing passive viewing of moving patterns to actively tracing them by drawing using a touchpad interface. The active task involved participant-controlled target movement with real-time color feedback for accurate pattern tracing. Results showed that active tracing significantly increased pupil diameter by an average of 17.8% (range 8.9–43.6%; p < 0.001) and reduced blink frequency compared to passive viewing. More complex patterns led to greater pupil dilation, confirming the link between cognitive load and physiological response. These findings demonstrate that patient-driven gaze guidance can stabilize gaze, reduce blinking, and naturally dilate the pupil. These conditions might enhance the quality of scannerless OCT or other imaging techniques benefiting from guided gaze and larger pupils. There could be benefits for children and people with compliance issues, although further research is needed to consider cognitive load.


(This article belongs to the Special Issue Eye Tracking and Visualization)



Special Issues

Special Issue in JEMR

Eye Tracking and Visualization

Guest Editor: Michael Burch

Deadline: 20 November 2025

Special Issue in JEMR

New Horizons and Recent Advances in Eye-Tracking Technology

Guest Editor: Lee Friedman

Deadline: 20 December 2025

Special Issue in JEMR

Eye Movements in Reading and Related Difficulties

Guest Editors: Argyro Fella, Timothy C. Papadopoulos, Kevin B. Paterson, Daniela Zambarbieri

Deadline: 30 June 2026