The experiments were carried out on a P4 IBM platform with a 3 GHz processor and 2 GB of memory, running the Windows XP operating system. The algorithm was implemented using the signal processing toolbox, image processing toolbox, and global optimization toolbox of Matlab 2010b.

5.1. Effectiveness of Tsallis Entropy

We compared maximum Tsallis entropy thresholding (MTT) with three other criteria: maximum between-class variance thresholding (MBCVT), maximum entropy thresholding (MET), and minimum cross entropy thresholding (MCET). Taking m = 2 as an example, let PA and PB denote the probabilities of classes CA and CB, respectively, ωA and ωB the corresponding cumulative probabilities, and μA and μB the corresponding mean values. The objective functions of the different criteria are listed in Table 2. For MCET, we add a minus sign to the objective function to transform the minimization into a maximization.

Table 2. Objective function of different criteria (m = 2).

| Criteria | Objective | Type |
|---|---|---|
| MET | S(A) + S(B) | Maximize |
| MBCVT | ωA × ωB × (μA − μB)² | Maximize |
| MCET | −μA × ωA × log(μA) − μB × ωB × log(μB) | Minimize |
| MCET (negated) | μA × ωA × log(μA) + μB × ωB × log(μB) | Maximize |
| MTT | Sq(A) + Sq(B) + (1 − q) × Sq(A) × Sq(B) | Maximize |
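
As a minimal sketch of how the objectives in Table 2 could be evaluated, the following Python code computes all four criteria in their maximization form for a given threshold and then searches every threshold exhaustively. It is not the authors' Matlab implementation. It assumes that class A covers gray levels 0 to t and class B the remaining levels, that S is the Shannon entropy, and that Sq takes the standard Tsallis form (1 − Σ p^q)/(q − 1) with q ≠ 1. The function names `criteria` and `best_thresholds` and the two-peak test histogram are hypothetical.

```python
import numpy as np

def criteria(p, t, q=4.0):
    """Evaluate the Table 2 objectives (maximization form) at threshold t.

    p : normalized gray-level histogram (sums to 1)
    t : class A holds levels 0..t, class B holds levels t+1..len(p)-1
    Returns None when either class is empty. Assumes q != 1.
    """
    levels = np.arange(len(p), dtype=float)
    w_a, w_b = p[: t + 1].sum(), p[t + 1 :].sum()  # cumulative probabilities
    if w_a <= 0 or w_b <= 0:
        return None
    pa, pb = p[: t + 1] / w_a, p[t + 1 :] / w_b    # class distributions
    mu_a = (levels[: t + 1] * pa).sum()            # class means
    mu_b = (levels[t + 1 :] * pb).sum()

    def shannon(d):
        d = d[d > 0]
        return -(d * np.log(d)).sum()

    def tsallis(d):
        return (1.0 - (d ** q).sum()) / (q - 1.0)

    def mu_w_log_mu(mu, w):
        # mu * w * log(mu) tends to 0 as mu tends to 0
        return mu * w * np.log(mu) if mu > 0 else 0.0

    s_a, s_b = tsallis(pa), tsallis(pb)
    return {
        "MET": shannon(pa) + shannon(pb),
        "MBCVT": w_a * w_b * (mu_a - mu_b) ** 2,
        "MCET": mu_w_log_mu(mu_a, w_a) + mu_w_log_mu(mu_b, w_b),  # negated form
        "MTT": s_a + s_b + (1.0 - q) * s_a * s_b,
    }

def best_thresholds(p, q=4.0):
    """Exhaustive search: the maximizing threshold for each criterion."""
    best = {}
    for t in range(len(p) - 1):
        scores = criteria(p, t, q)
        if scores is None:
            continue
        for name, value in scores.items():
            if name not in best or value > best[name][0]:
                best[name] = (value, t)
    return {name: t for name, (value, t) in best.items()}

# Hypothetical test histogram: two equal Gaussian peaks at gray levels 64 and 192.
levels = np.arange(256)
hist = np.exp(-0.5 * ((levels - 64) / 20.0) ** 2) + np.exp(-0.5 * ((levels - 192) / 20.0) ** 2)
p = hist / hist.sum()
thr = best_thresholds(p, q=4.0)
print(thr)
```

For m = 2 the exhaustive loop visits at most 255 thresholds, so no optimizer is needed; the search only becomes expensive when several thresholds are chosen jointly.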

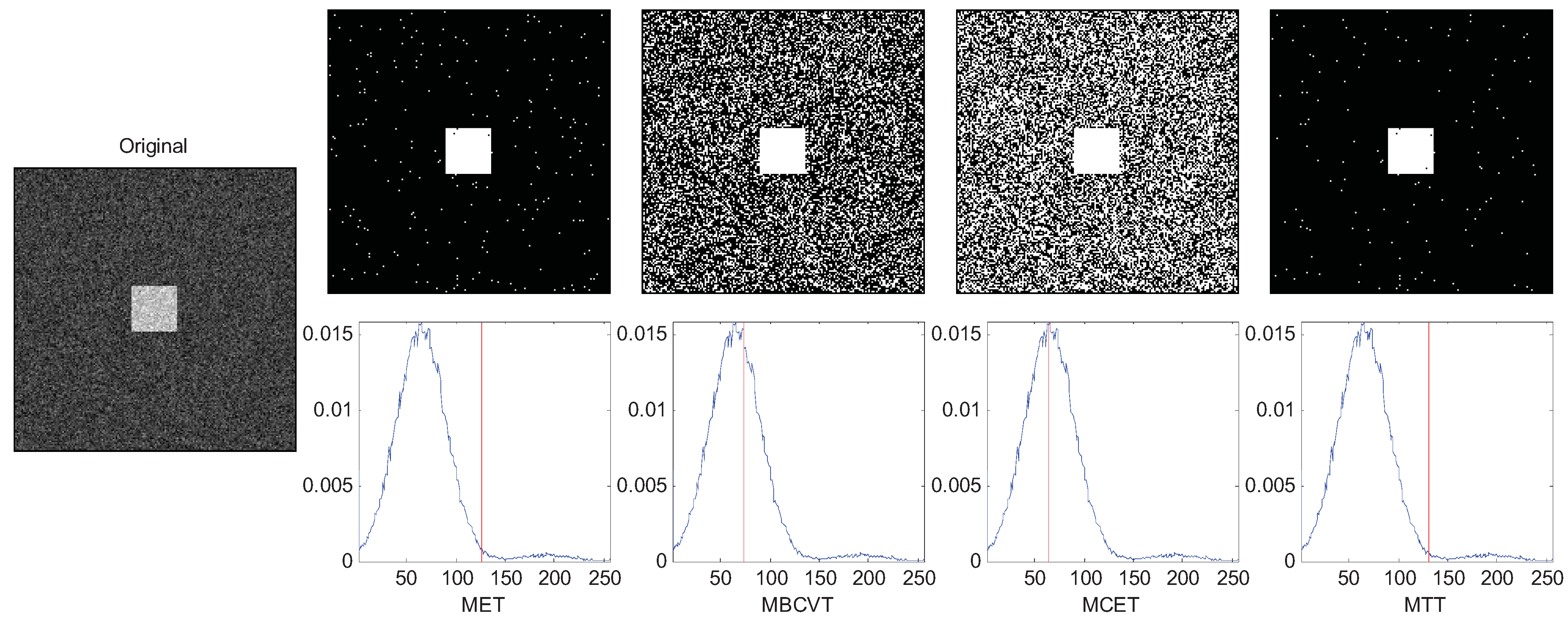

We use synthetic images to demonstrate the robustness of the MTT criterion. In each synthetic image, a square (gray value 192) is placed on a background (gray value 64), and Gaussian noise (variance 0.01) is added to the whole image, so that the histogram of the image consists of two Gaussian peaks. The image is 256-by-256 pixels and the foreground square is 180-by-180 pixels, so the heights of the two Gaussian peaks of foreground and background are nearly the same.

In addition, we reduce the side length of the foreground square to change the height ratio of the two Gaussian peaks, and lower the gray level of the background to enlarge the distance between the two peaks. The parameter q is set to 4 for all synthetic images.
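
The construction of the synthetic image can be sketched in Python as follows. This is an illustration under stated assumptions rather than the authors' Matlab code: the square is assumed to be centered, and the noise variance of 0.01 is assumed to refer to intensities normalized to [0, 1], as is the convention of Matlab's `imnoise`. The function name `make_synthetic` and its parameters are hypothetical.

```python
import numpy as np

def make_synthetic(size=256, square=180, fg=192, bg=64, noise_var=0.01, seed=0):
    """Square of gray value fg on a background of gray value bg, plus Gaussian noise."""
    rng = np.random.default_rng(seed)
    img = np.full((size, size), bg / 255.0)
    start = (size - square) // 2              # assumption: the square is centered
    img[start:start + square, start:start + square] = fg / 255.0
    # assumption: the variance applies to intensities scaled to [0, 1]
    noisy = img + rng.normal(0.0, np.sqrt(noise_var), img.shape)
    return np.clip(np.rint(noisy * 255.0), 0, 255).astype(np.uint8)

image = make_synthetic()
hist = np.bincount(image.ravel(), minlength=256)

# The square covers 180 * 180 of the 256 * 256 pixels, i.e. about 49.4 % of the
# image, which is why the two histogram peaks have nearly equal height.
fg_fraction = 180 * 180 / (256 * 256)
print(round(fg_fraction, 3))
```

Passing a smaller `square` or a lower `bg` to the same function reproduces the two variations described above, namely unequal peak heights and a larger distance between the peaks.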

Selected results are shown in Figure 1, Figure 2 and Figure 3. In Figure 1, the two Gaussian peaks are nearly the same. MET, MBCVT, and MTT find the optimal threshold, while MCET places the threshold slightly to the left. In Figure 2, we shrink the foreground object, so the peak of the background is much higher than the peak of the foreground object. MET and MTT perform well, with a threshold of 128, while MBCVT and MCET place the threshold nearly at the position of the higher Gaussian peak.

Figure 1. Segmentation of the synthetic image.

Figure 2. Segmentation of the synthetic image (foreground is smaller).

Figure 3. Segmentation of the synthetic image (background is darker).

In Figure 3, we lower the gray level of the background to only 26 so that the two peaks are farther apart. In this case, MBCVT and MTT find the correct threshold between the two peaks, while MET finds a higher threshold and MCET a lower one. The results are summarized in Table 3, which indicates that MTT succeeds in all cases.

Table 3. Result of segmentation of synthetic image (√ denotes success).

| Image | MET | MBCVT | MCET | MTT |
|---|---|---|---|---|
| Two equal Gaussian peaks | √ | √ | | √ |
| One peak is higher | √ | | | √ |
| Larger distance between two peaks | | √ | | √ |

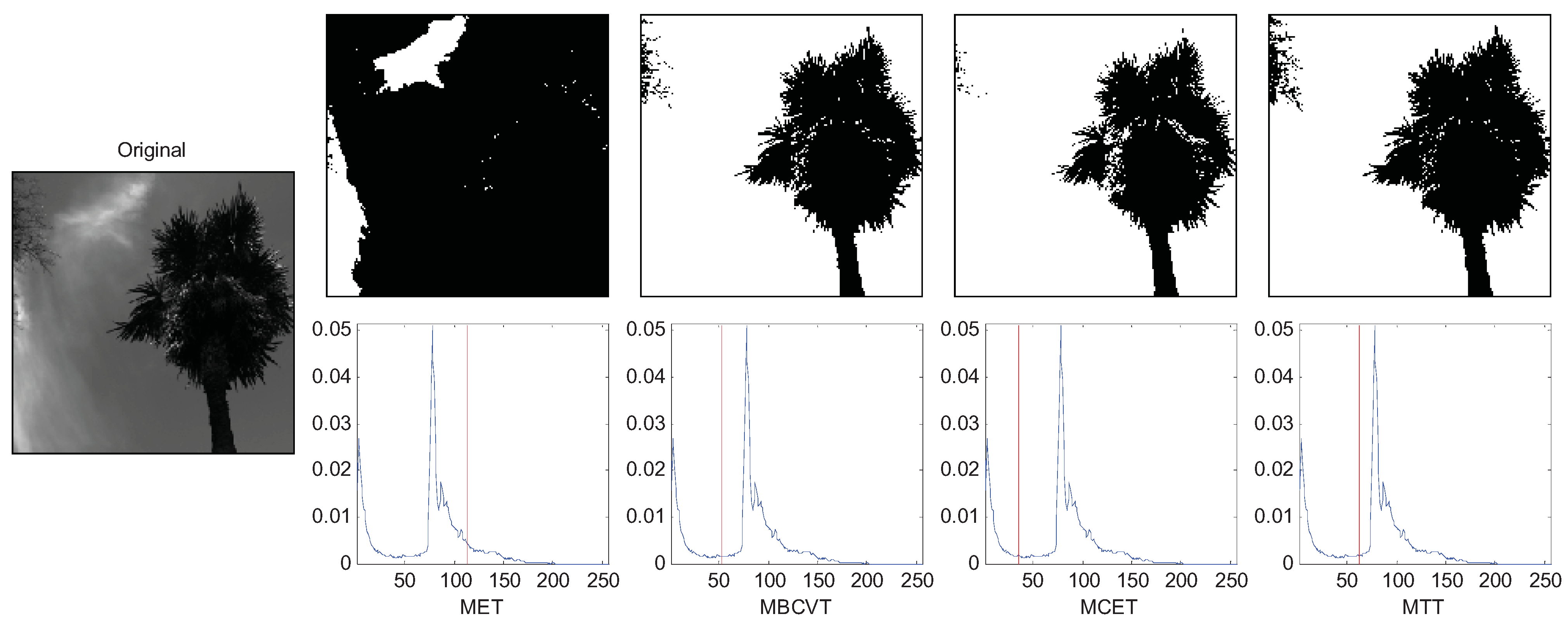

We then compared the criteria on several real images, three of which are selected here to demonstrate the effectiveness of the MTT criterion.

Figure 4 is an image of a flower with an inhomogeneous distribution of light around it. The Tsallis entropy criterion is very useful in such applications, since the parameter q can be chosen to adjust the thresholding level to the correct point. In Figure 4, the image was segmented with q equal to 4. The thresholds of MET, MBCVT, MCET, and MTT are 126, 78, 42, and 14, respectively. The results indicate that MTT obtains a cleaner segmentation without losing any part of the flower.

Figure 4. Segmentation of the rose image.

Figure 5 is an image with a tree on the right and leaves in the top-left corner. The parameter q was set to 5. The thresholds of MET, MBCVT, MCET, and MTT are 114, 52, 35, and 63, respectively. Figure 5 shows that MTT extracts the leaves in the top-left corner more completely than MET, MBCVT, and MCET, so MTT again outperforms the other criteria.

Figure 5. Segmentation of the tree image.

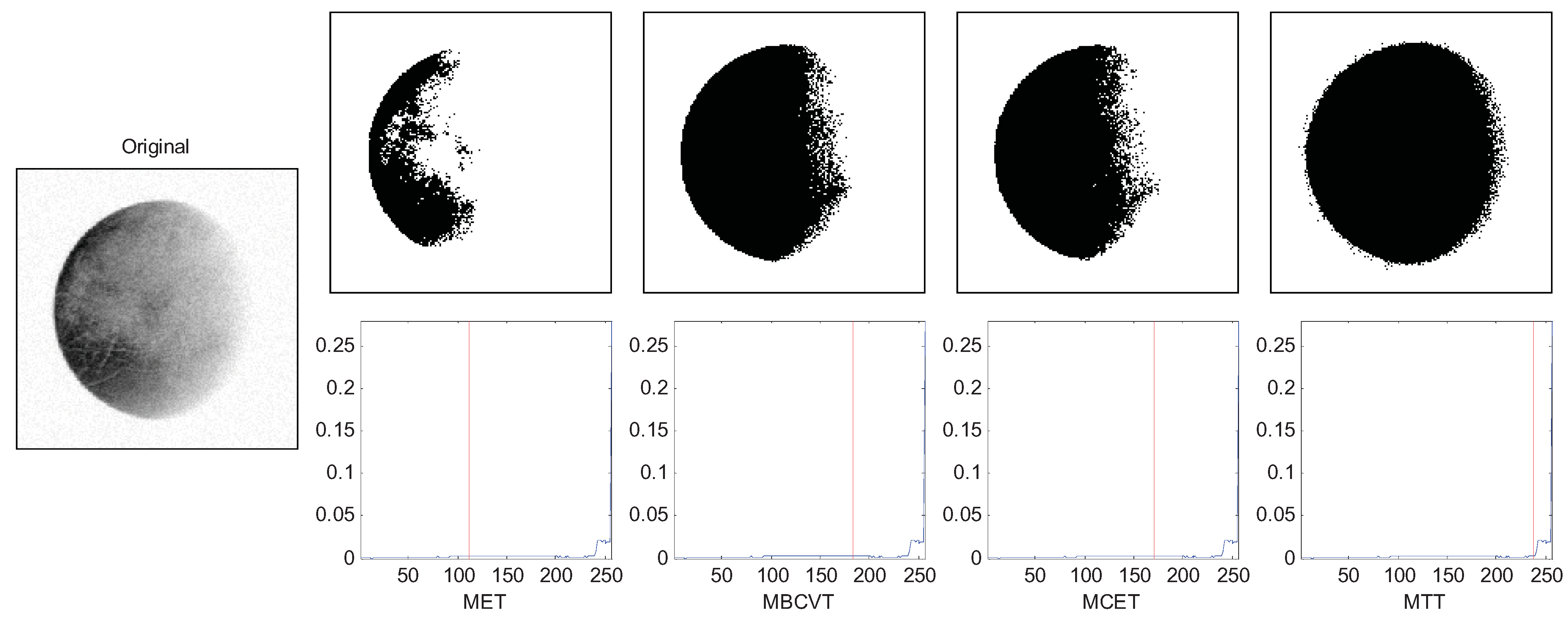

Figure 6 shows an image of Pluto. The parameter q was set to 4. The thresholds of MET, MBCVT, MCET, and MTT are 111, 183, 170, and 238, respectively. The results indicate that MTT obtains a fuller segmentation of Pluto.

Figure 6. Segmentation of the Pluto image.

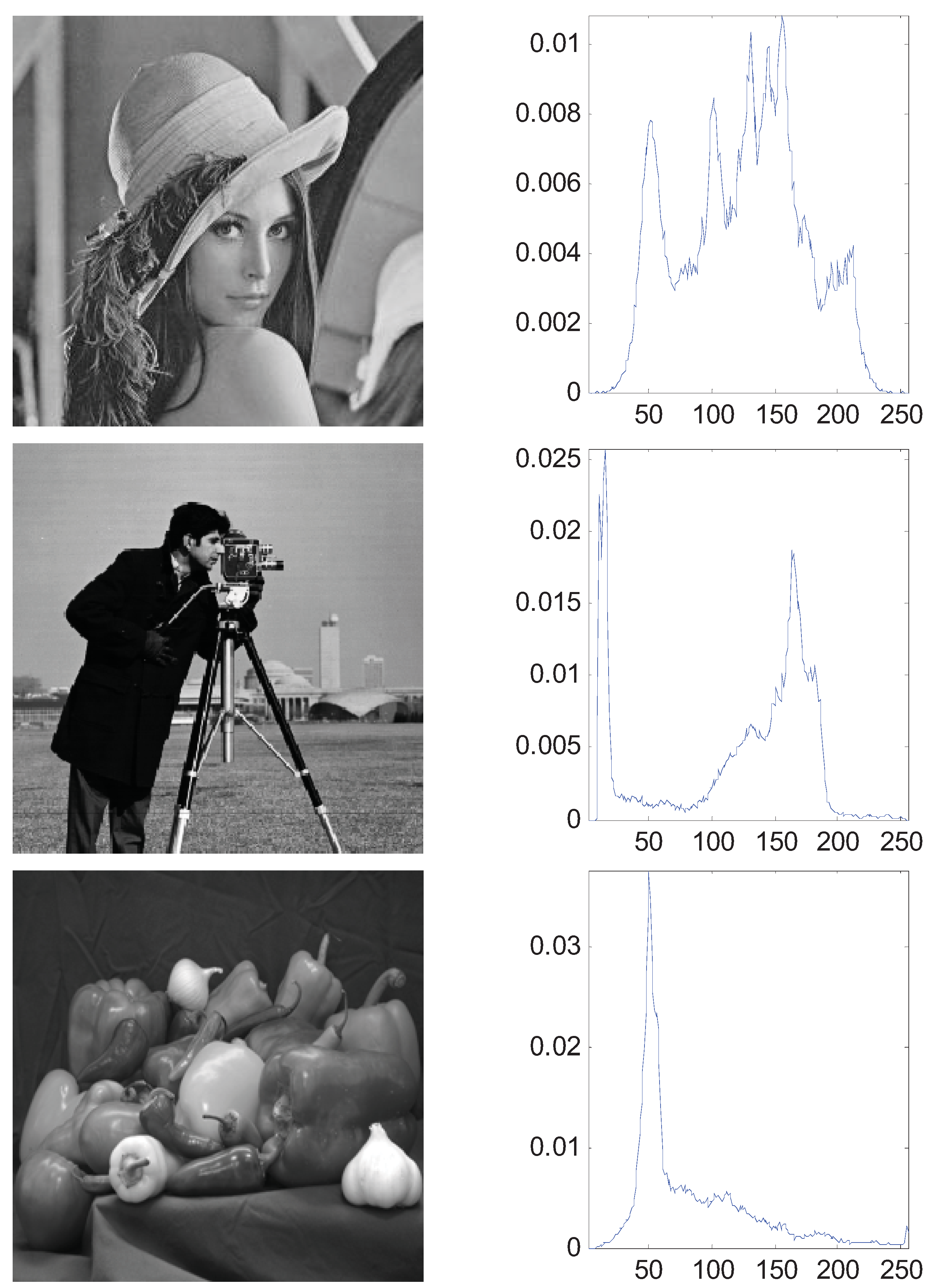

5.2. Rapidness of the ABC

The simulation experiments above demonstrate the superiority of the MTT criterion. In this section, we generalize bi-level thresholding to multi-level thresholding, which requires the proposed ABC algorithm for fast computation. A synthetic image (a central square and five surrounding borders) and three real images, each of 256-by-256 pixels, are shown with their histograms in Figure 7. To evaluate and analyze the performance of ABC, we use GA and PSO as comparison algorithms. Each algorithm was run 30 times to reduce the effect of randomness.

Figure 7. Four test images and the histograms: (a) synthetic; (b) Lena; (c) cameraman; (d) peppers.

Typical segmentation results for the four test images based on MTT are shown in Table 4, Table 5, Table 6 and Table 7, respectively, for class numbers m = 3 to 6, with the selected thresholds listed below. The thresholds selected by ABC are nearly equivalent to those obtained by GA and PSO, which reveals that the segmentation results depend heavily on the criterion function selected.

Table 4. Multi-level segmentation for synthetic image.

Table 5. Multi-level segmentation for Lena image.

Table 6. Multi-level segmentation for cameraman image.

Table 7. Multi-level segmentation for peppers image.

Table 8 shows the average computation time of the three methods for the four images with m = 3 to 6. As the table indicates, the runtime tends to increase slowly as the class number m increases, because the criterion function becomes more complicated. For the Lena image, the computation time of ABC increases from 1.6611 s to 1.7740 s when m increases from 3 to 6. Clearly, intelligent optimization is far more efficient than the traditional exhaustive method. Moreover, ABC takes the least time of the three algorithms.

Table 8. Average computation time in seconds (30 runs).

| Image | m | GA | PSO | ABC |
|---|---|---|---|---|
| Synthetic | 3 | 1.8849 | 1.8479 | 1.8210 |
| | 4 | 1.9487 | 1.8930 | 1.8316 |
| | 5 | 1.9780 | 1.9507 | 1.8709 |
| | 6 | 2.0453 | 2.0367 | 1.9807 |
| Lena | 3 | 1.9143 | 1.8931 | 1.6611 |
| | 4 | 1.9036 | 1.9115 | 1.6594 |
| | 5 | 2.0176 | 1.9500 | 1.7121 |
| | 6 | 2.1598 | 2.0577 | 1.7740 |
| Cameraman | 3 | 1.8755 | 1.7708 | 1.7083 |
| | 4 | 1.9168 | 1.8558 | 1.7003 |
| | 5 | 1.9686 | 1.8722 | 1.7975 |
| | 6 | 2.0175 | 1.9933 | 1.9191 |
| Peppers | 3 | 1.8890 | 1.8540 | 1.5321 |
| | 4 | 1.9636 | 1.8495 | 1.5527 |
| | 5 | 1.9985 | 1.9350 | 1.6924 |
| | 6 | 2.1395 | 2.0122 | 1.6484 |
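
The comparisons drawn from Table 8 can be checked with a few lines of arithmetic. The sketch below copies the table values and computes, as illustrative summaries that are not part of the original analysis, the mean time of each algorithm, the fastest algorithm in each row, and the relative growth of the ABC time on Lena from m = 3 to m = 6. The variable names are hypothetical.

```python
# Average times in seconds copied from Table 8; tuple order is (GA, PSO, ABC).
times = {
    ("Synthetic", 3): (1.8849, 1.8479, 1.8210),
    ("Synthetic", 4): (1.9487, 1.8930, 1.8316),
    ("Synthetic", 5): (1.9780, 1.9507, 1.8709),
    ("Synthetic", 6): (2.0453, 2.0367, 1.9807),
    ("Lena", 3): (1.9143, 1.8931, 1.6611),
    ("Lena", 4): (1.9036, 1.9115, 1.6594),
    ("Lena", 5): (2.0176, 1.9500, 1.7121),
    ("Lena", 6): (2.1598, 2.0577, 1.7740),
    ("Cameraman", 3): (1.8755, 1.7708, 1.7083),
    ("Cameraman", 4): (1.9168, 1.8558, 1.7003),
    ("Cameraman", 5): (1.9686, 1.8722, 1.7975),
    ("Cameraman", 6): (2.0175, 1.9933, 1.9191),
    ("Peppers", 3): (1.8890, 1.8540, 1.5321),
    ("Peppers", 4): (1.9636, 1.8495, 1.5527),
    ("Peppers", 5): (1.9985, 1.9350, 1.6924),
    ("Peppers", 6): (2.1395, 2.0122, 1.6484),
}
names = ("GA", "PSO", "ABC")

# Mean time of each algorithm over all 16 (image, m) combinations.
mean_time = {
    name: sum(row[i] for row in times.values()) / len(times)
    for i, name in enumerate(names)
}

# Fastest algorithm in each row of the table.
fastest = {key: names[row.index(min(row))] for key, row in times.items()}

# Relative increase of the ABC time on Lena when m goes from 3 to 6.
lena_growth = times[("Lena", 6)][2] / times[("Lena", 3)][2] - 1.0

print(mean_time)
print(set(fastest.values()))
print(f"{lena_growth:.1%}")
```

The Lena figure corresponds to an increase of roughly 6.8 %, which is consistent with the statement that the runtime grows slowly with m.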

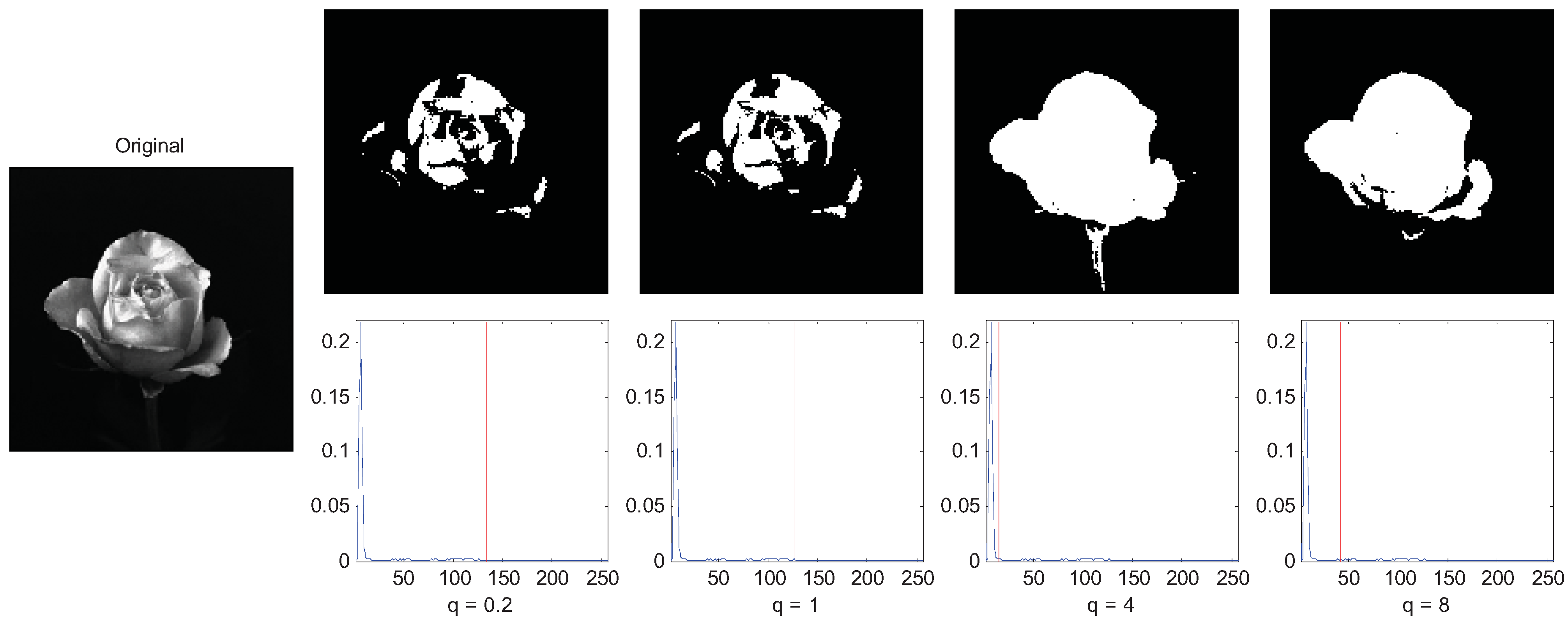

5.3. Influence of the Parameter q

The final experiment demonstrates the influence of the parameter q on the segmentation result. We use the rose image as an example and let q = 0.2, 0.5, 1, and 4. The segmentation results are shown in Figure 8. The rose image is a super-extensive system, since the correlation between the pixels of the flower is long-range. Consequently, MTT with q = 0.2 (sub-extensive) and q = 1 (extensive) gives unsatisfactory segmentation results, while MTT with q = 4 segments the rose image best. However, when q = 8, we do not get a good result, since that value corresponds to an image with overly long-range correlation.
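
To experiment with the effect of q, a bi-level MTT search can be repeated for several values of q on the same histogram. The Python sketch below is illustrative only: it assumes the standard Tsallis form Sq = (1 − Σ p^q)/(q − 1), falls back to the Shannon entropy at q = 1, and uses a hypothetical two-peak histogram instead of the rose image, so its thresholds are not the ones reported here. The names `tsallis_entropy` and `mtt_threshold` are hypothetical.

```python
import numpy as np

def tsallis_entropy(d, q):
    """Tsallis entropy of a distribution d; Shannon entropy in the limit q = 1."""
    d = d[d > 0]
    if abs(q - 1.0) < 1e-12:
        return -(d * np.log(d)).sum()
    return (1.0 - (d ** q).sum()) / (q - 1.0)

def mtt_threshold(p, q):
    """Exhaustive bi-level search for the threshold maximizing the MTT objective."""
    best_t, best_val = None, -np.inf
    for t in range(len(p) - 1):
        w_a, w_b = p[: t + 1].sum(), p[t + 1 :].sum()
        if w_a <= 0 or w_b <= 0:
            continue
        s_a = tsallis_entropy(p[: t + 1] / w_a, q)
        s_b = tsallis_entropy(p[t + 1 :] / w_b, q)
        val = s_a + s_b + (1.0 - q) * s_a * s_b   # pseudo-additive combination
        if val > best_val:
            best_t, best_val = t, val
    return best_t

# Hypothetical histogram: a large dark peak and a smaller bright peak.
levels = np.arange(256)
hist = (0.7 * np.exp(-0.5 * ((levels - 64) / 20.0) ** 2)
        + 0.3 * np.exp(-0.5 * ((levels - 192) / 20.0) ** 2))
p = hist / hist.sum()

thresholds = {q: mtt_threshold(p, q) for q in (0.2, 0.5, 1.0, 4.0)}
print(thresholds)
```

The term (1 − q) × Sq(A) × Sq(B) is what makes the criterion depend on q: it vanishes at q = 1, is positive for q < 1, and is negative for q > 1.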

Figure 8. Segmentation of long-range correlation image with variations of parameter q.

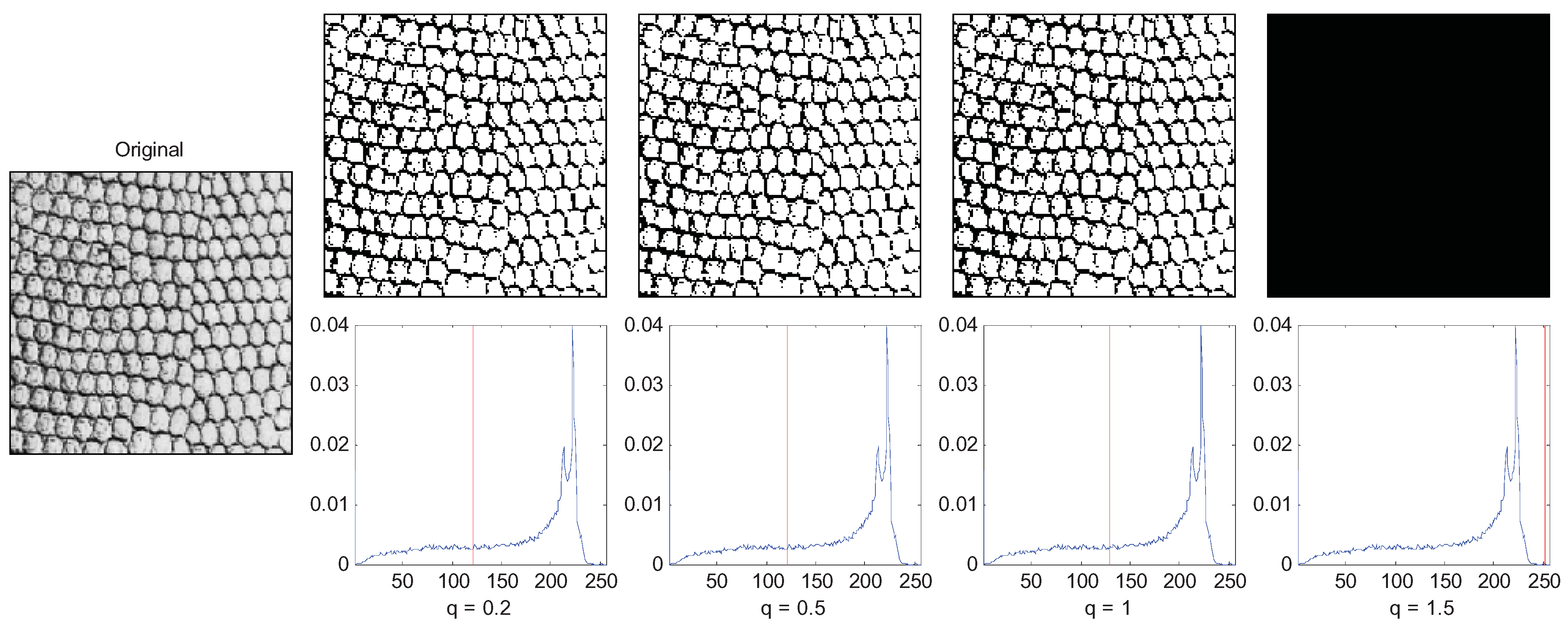

Another example is a texture image in which a large number of foreground objects cluster together, as shown in Figure 9, so the correlation between pixels of the same object is short-range. In this case, the small q (q = 1) performs best.

Figure 9. Segmentation of short-range correlation image with variations of parameter q.

From the above two examples, we can see that the parameter q of an image is usually interpreted as a quantity characterizing its degree of nonextensivity. For image segmentation, the nonextensivity of the image system can be justified by the presence of correlations between pixels of the same object in the image. These correlations can be regarded as long-range when the image presents pixels that are strongly correlated in both gray level and space filling.