Function Based Fault Detection for Uncertain Multivariate Nonlinear Non-Gaussian Stochastic Systems Using Entropy Optimization Principle

Abstract

1. Introduction

2. Preliminary

2.1. Plant Models

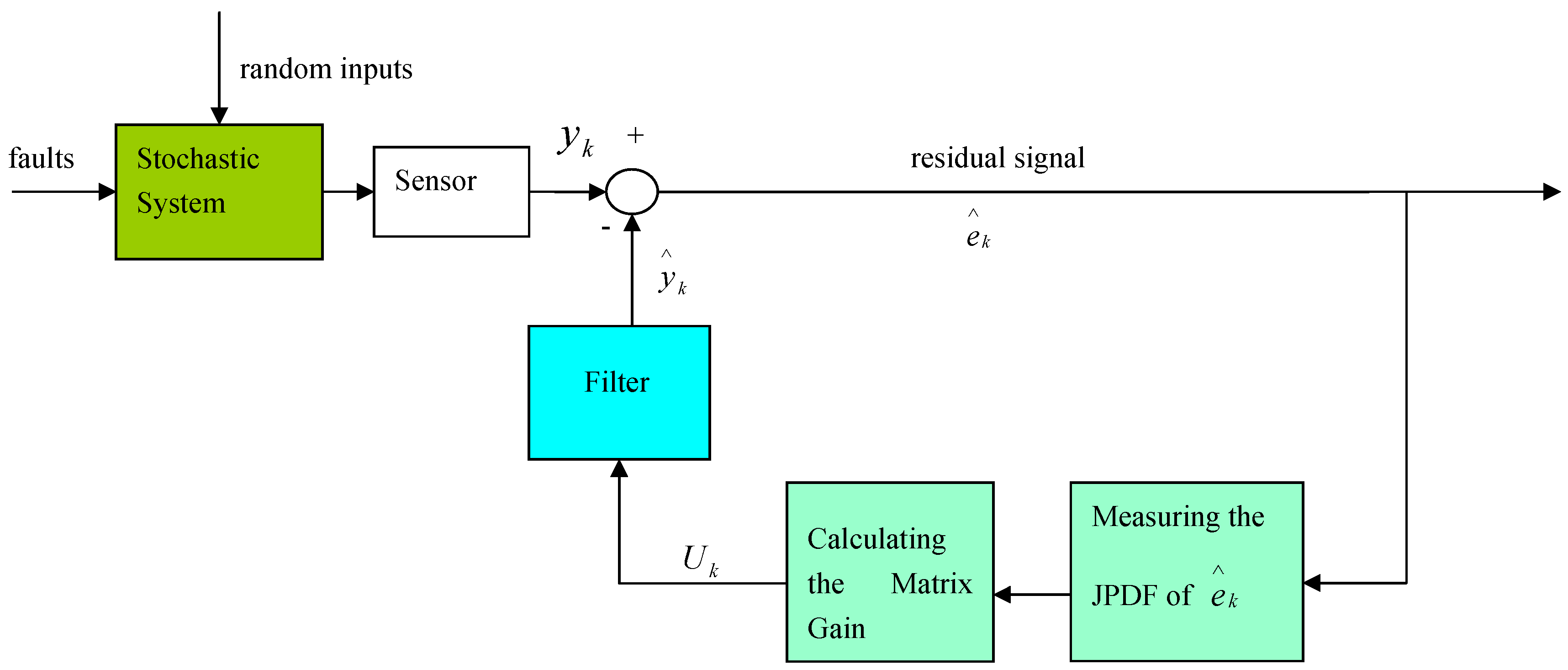

2.2. Filter and Error Dynamics

2.3. Entropy and Its Formulation

3. Main Results

3.1. Performance Indexes

3.2. Formulations for the Error JPDFs

3.3. Simplified Calculation of the Error JPDFs

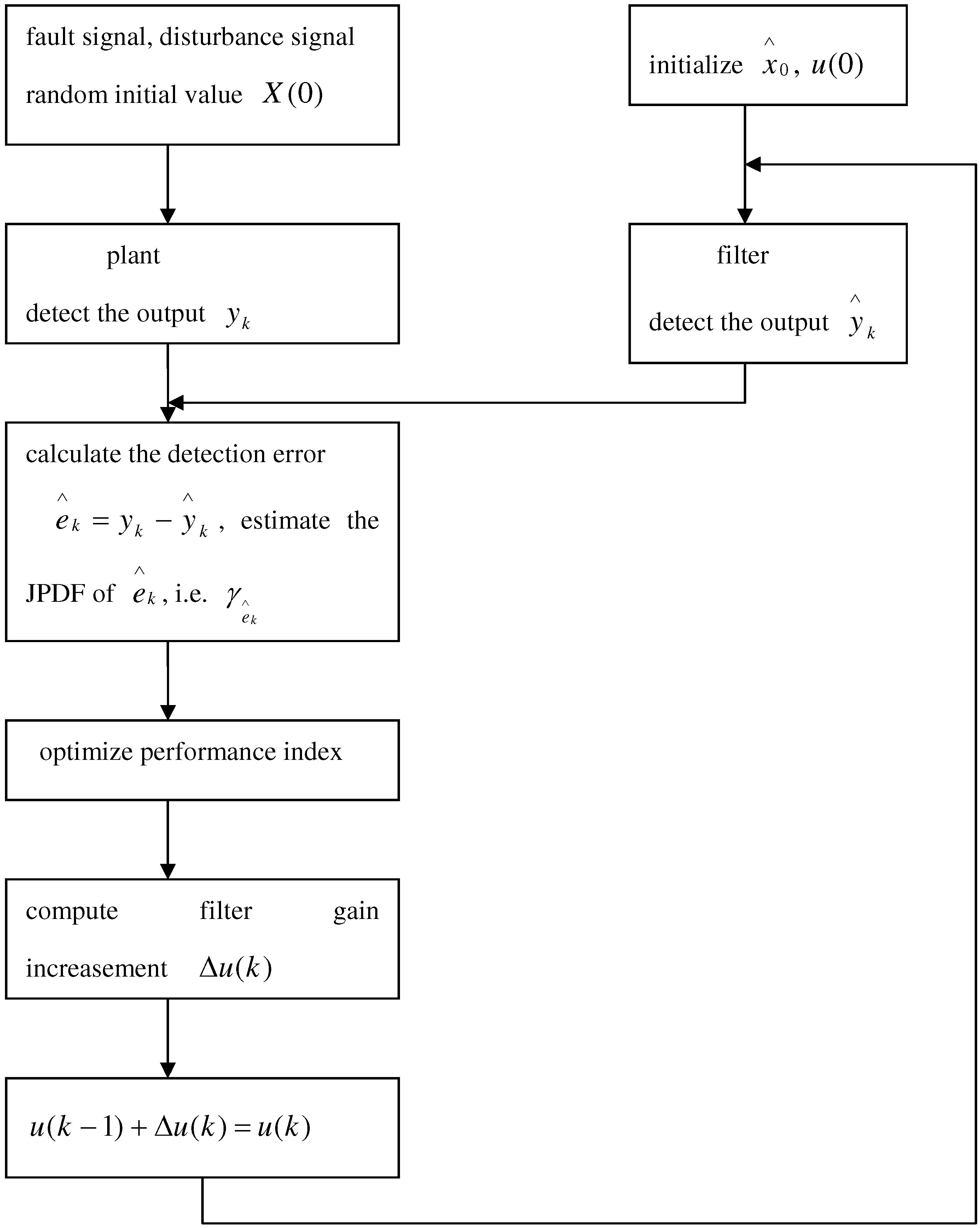

3.4. Optimal FD Filter Design Strategy

- Initialize and

- At the sample time compute , based on Theorem 3;

- At the sample time compute , and then obtain via (18);

- Increase k by 1 to the next step.
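The recursive structure of this strategy (initialize, update the design quantities at each sample time, advance k) can be illustrated on a deliberately simple toy problem. The sketch below is not the paper's Theorem 3 or equation (18), whose quantities are not reproduced here. It assumes a hypothetical scalar plant x_{k+1} = a x_k + w_k, y_k = x_k + v_k with uniform process noise and Laplace measurement noise, a Luenberger-type filter with gain L_k, so that the estimation error obeys e_{k+1} = (a - L_k) e_k + w_k - L_k v_k. The error PDF is represented by Monte Carlo particles, entropy is estimated with a histogram, and at each sample time L_k is chosen from a grid to minimize the estimated entropy of e_{k+1}. All function names and parameter values are illustrative assumptions.

```python
import math
import random


def histogram_entropy(samples, bins=40):
    """Plug-in estimate of differential entropy (nats) from a histogram."""
    lo, hi = min(samples), max(samples)
    if hi <= lo:
        return float("-inf")  # degenerate sample: all points are equal
    width = (hi - lo) / bins
    counts = [0] * bins
    for s in samples:
        idx = min(int((s - lo) / width), bins - 1)  # put the max sample in the last bin
        counts[idx] += 1
    n = len(samples)
    h = 0.0
    for c in counts:
        if c > 0:
            p = c / n
            h -= p * math.log(p / width)  # -sum p_i * log(density_i)
    return h


def laplace(rng, scale):
    """One Laplace(0, scale) draw as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def design_gains(a=0.9, steps=10, n=2000, gain_grid=None, seed=0):
    """Hypothetical recursive design: pick L_k minimizing entropy of e_{k+1}."""
    rng = random.Random(seed)
    if gain_grid is None:
        gain_grid = [i / 20 for i in range(21)]  # candidate gains 0.0 ... 1.0
    # Initialize: particles representing the initial estimation-error PDF.
    errors = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    gains, entropies = [], []
    for _k in range(steps):
        # Noise draws shared by every candidate gain at this sample time.
        w = [rng.uniform(-0.5, 0.5) for _ in range(n)]  # process noise (uniform)
        v = [laplace(rng, 0.2) for _ in range(n)]        # measurement noise (Laplace)
        best = None
        for gain in gain_grid:
            nxt = [(a - gain) * e + wi - gain * vi for e, wi, vi in zip(errors, w, v)]
            h = histogram_entropy(nxt)
            if best is None or h < best[0]:
                best = (h, gain, nxt)
        h, gain, errors = best  # keep the minimum-entropy error cloud
        gains.append(gain)
        entropies.append(h)
        # Loop continues: increase k by 1 to the next step.
    return gains, entropies
```

For example, `gains, entropies = design_gains()` returns one gain and one estimated error entropy per sample time. The histogram estimator only keeps the sketch dependency-free; a kernel density estimate in the spirit of Silverman's text in the reference list would give a smoother entropy surface over the gain grid.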

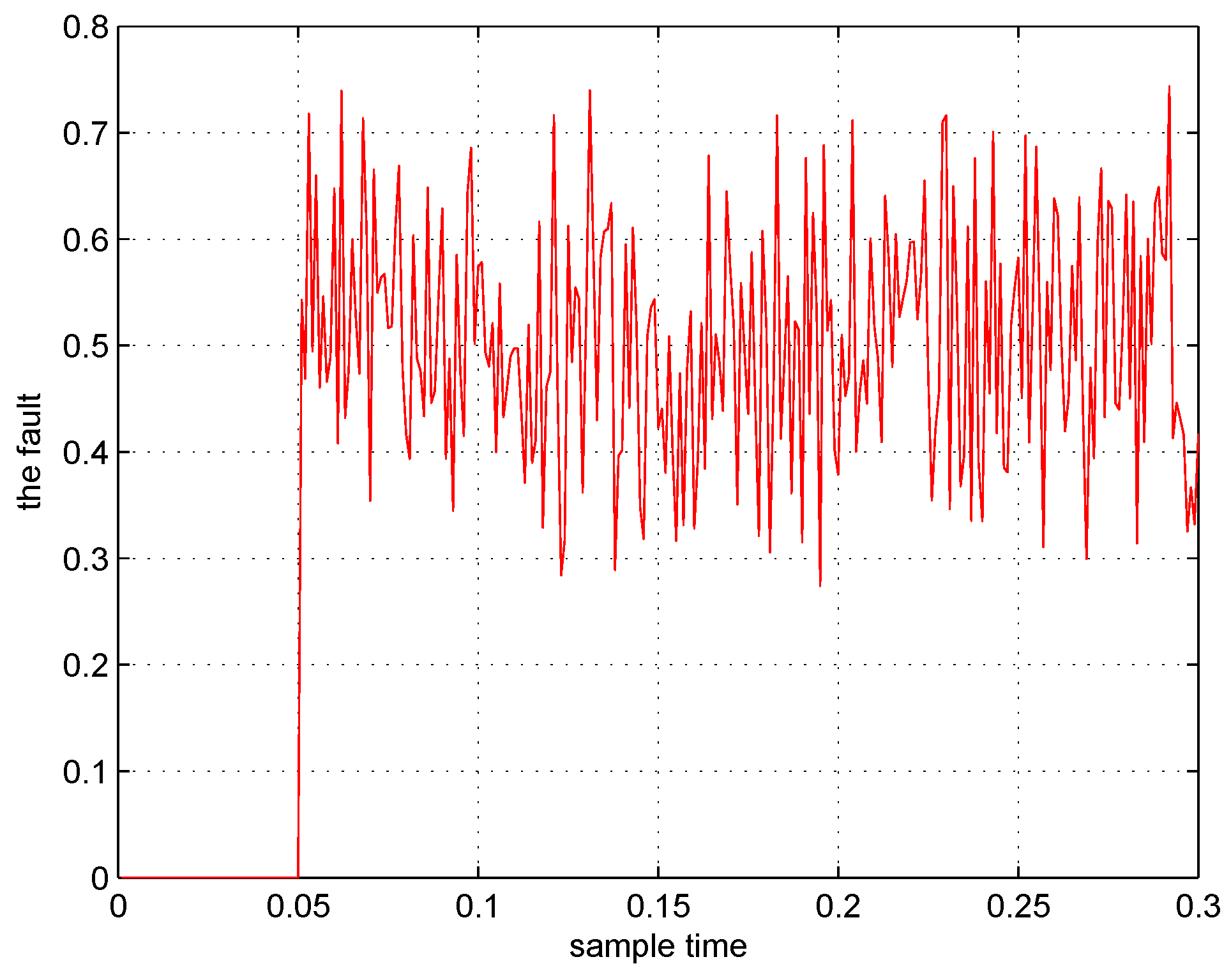

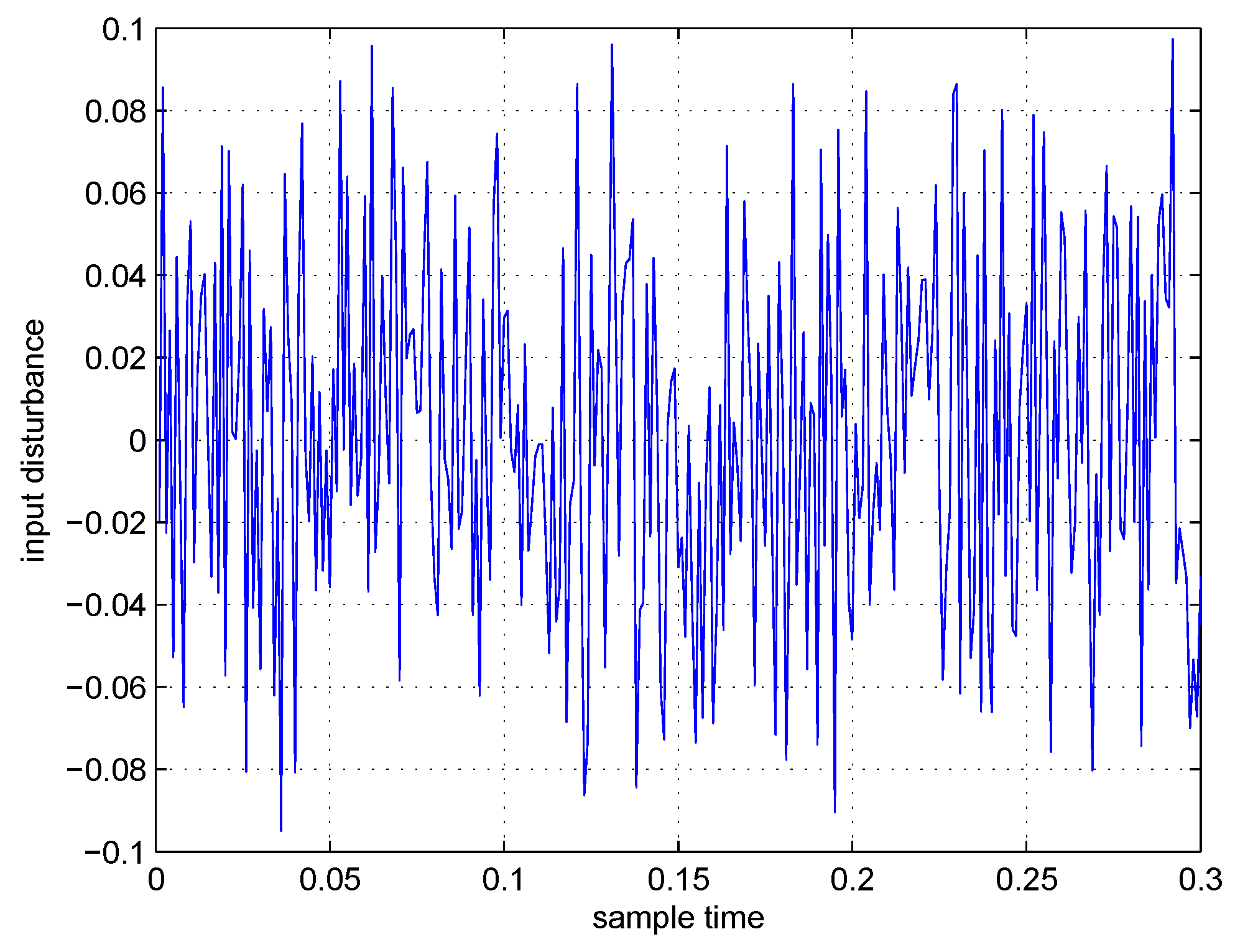

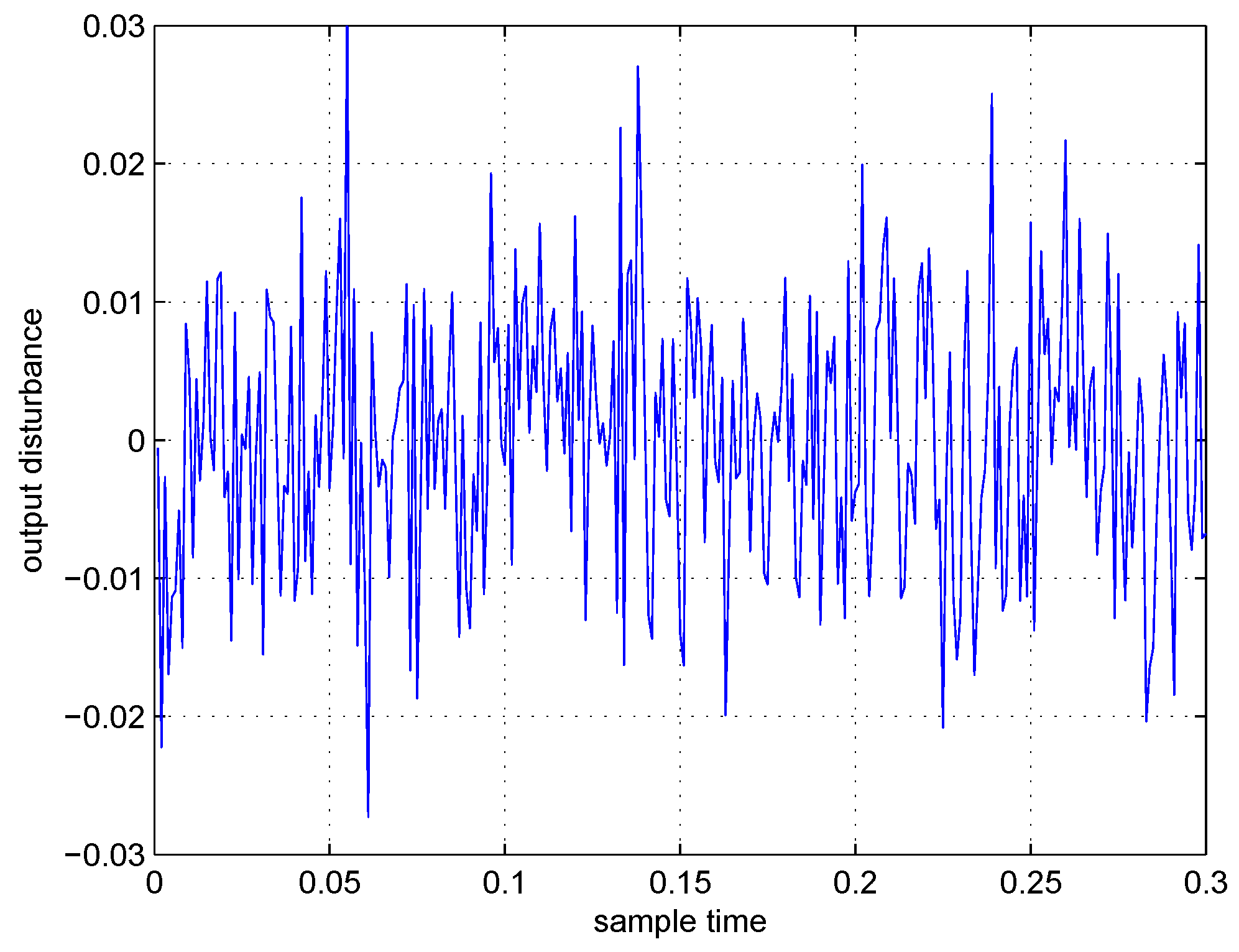

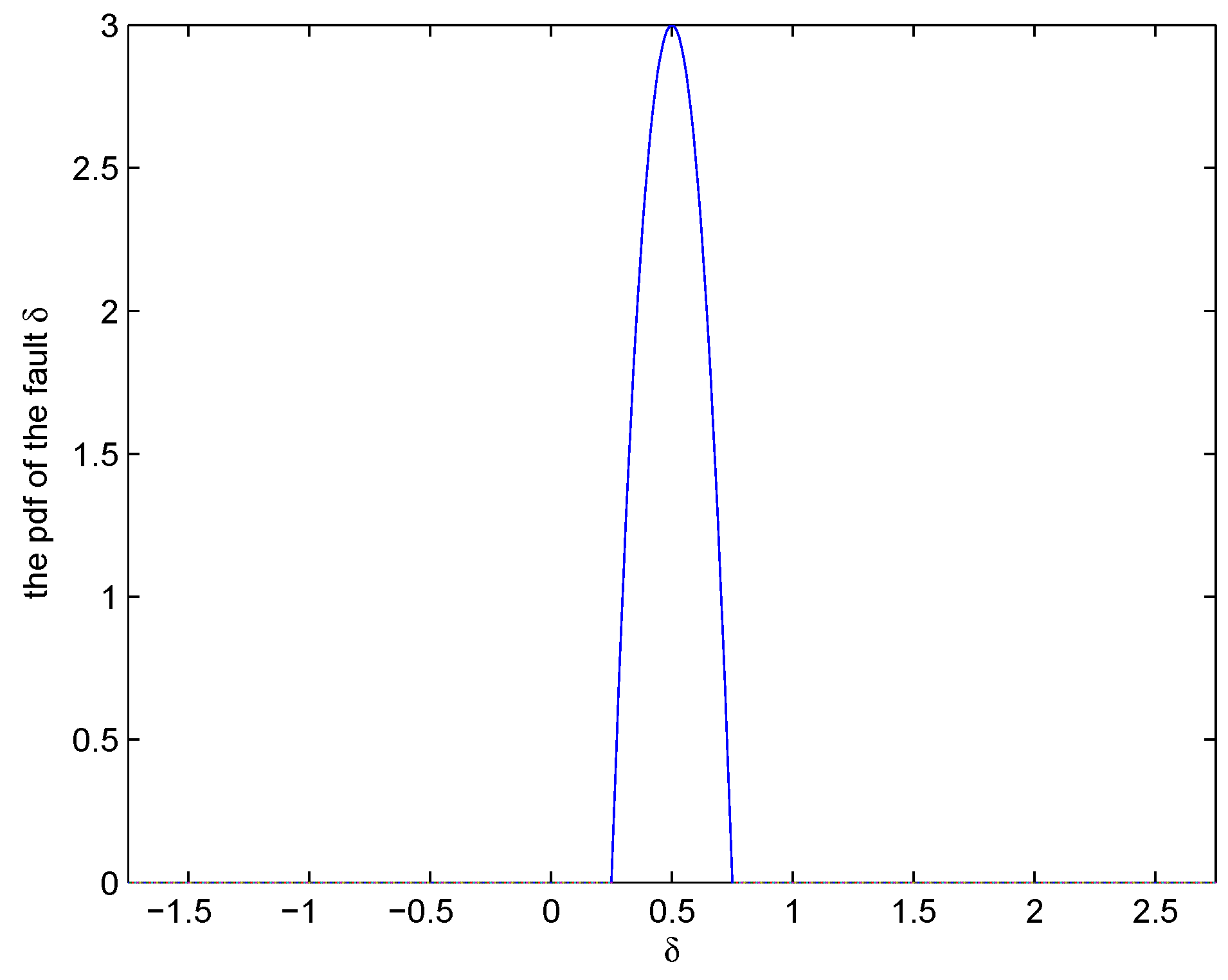

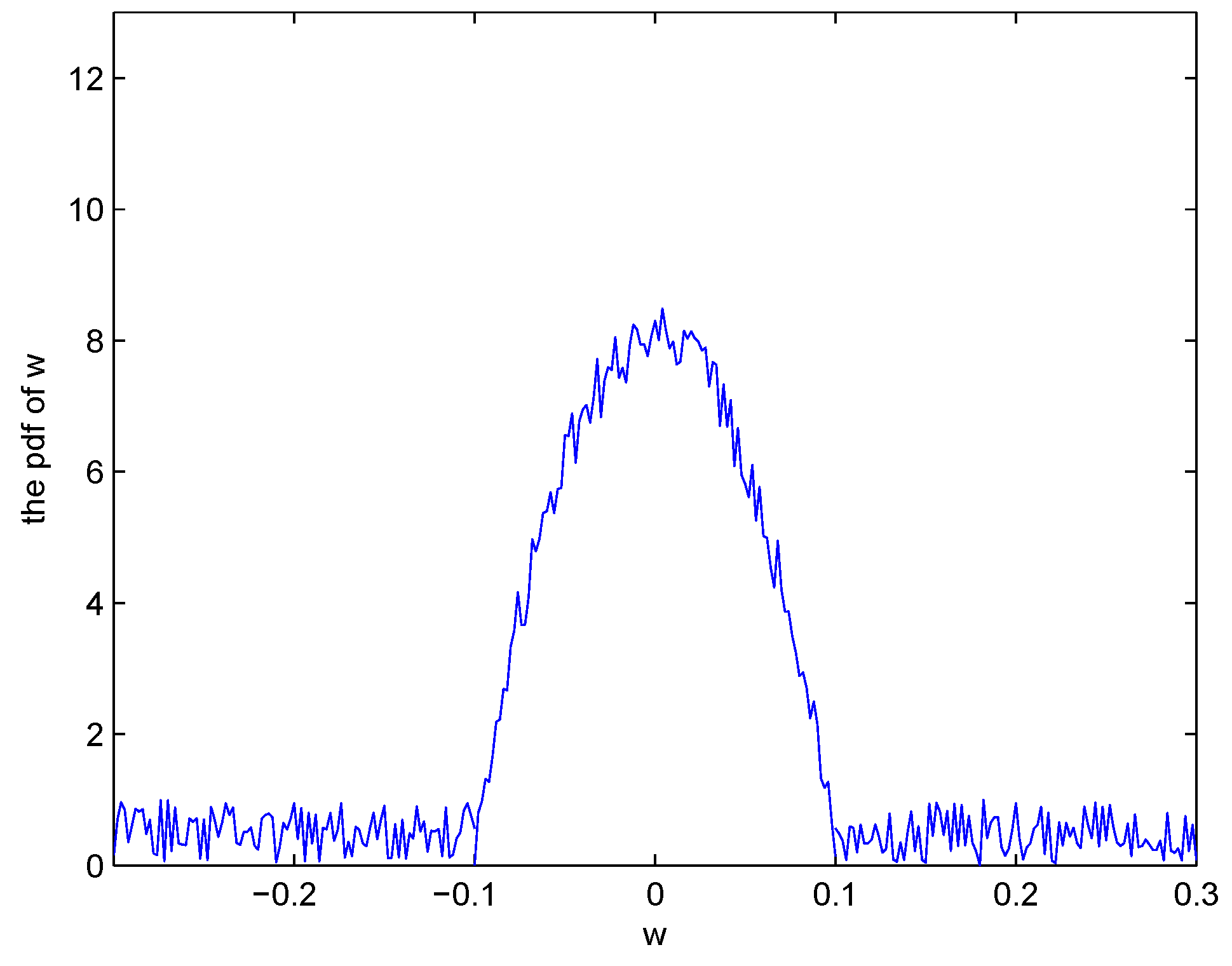

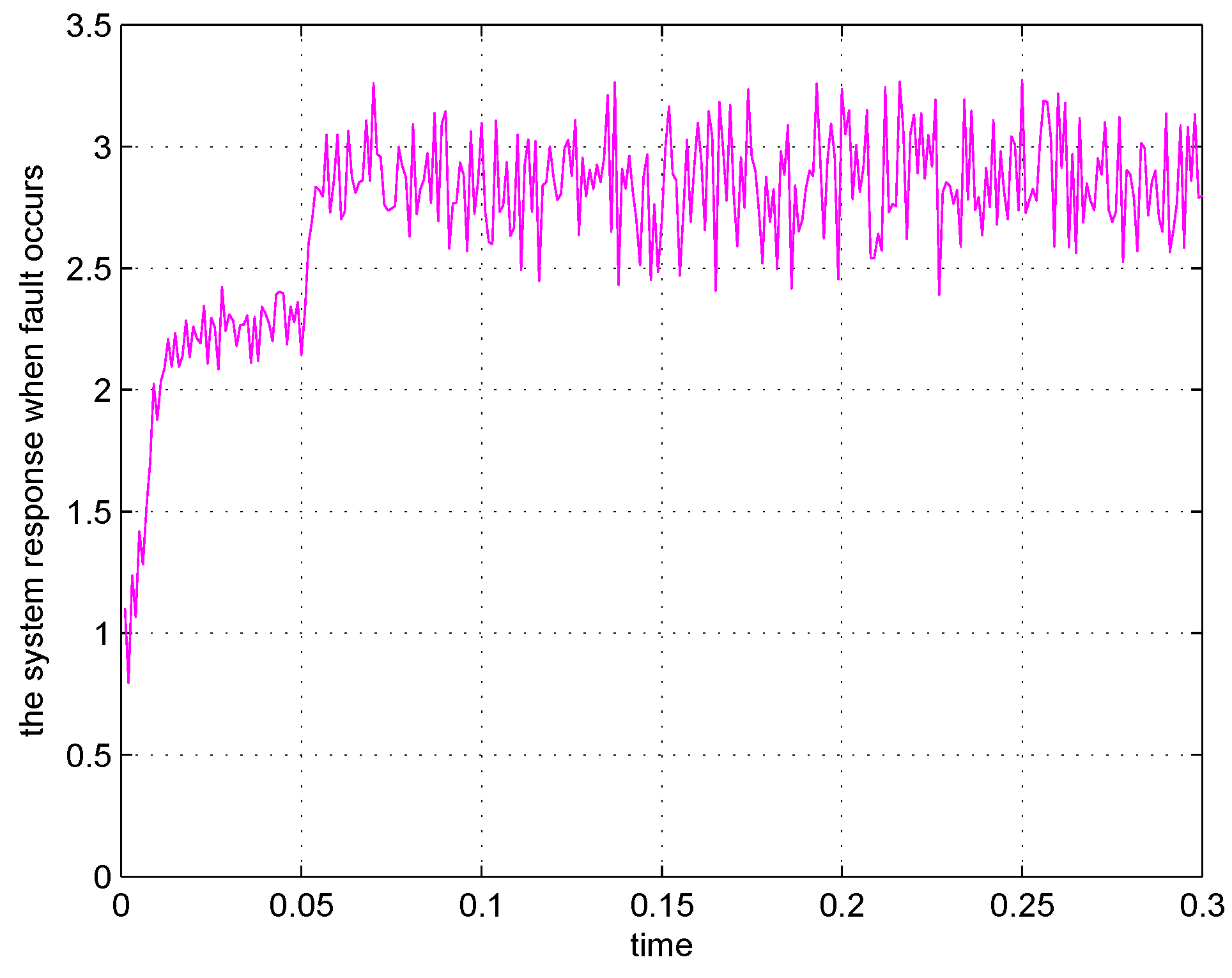

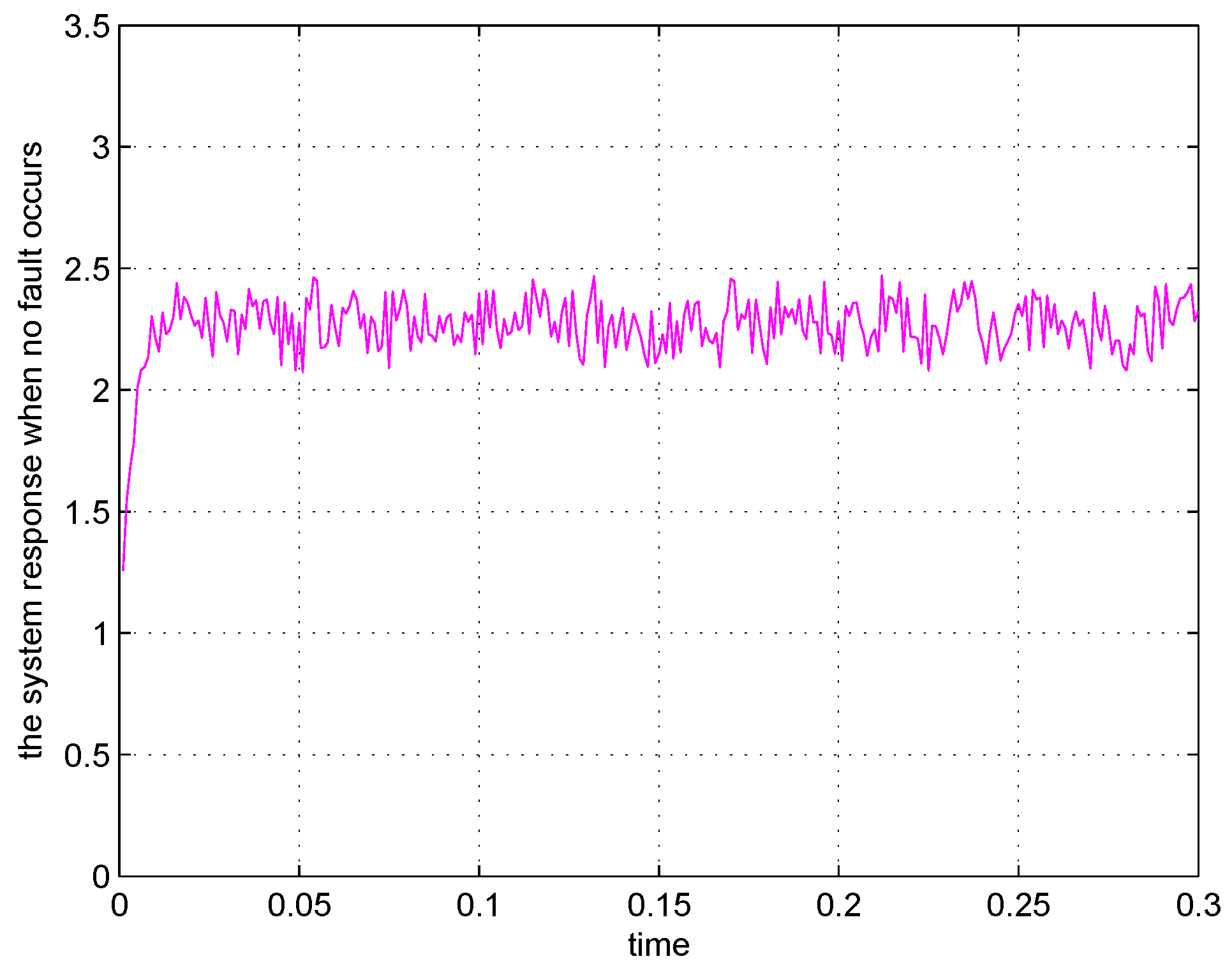

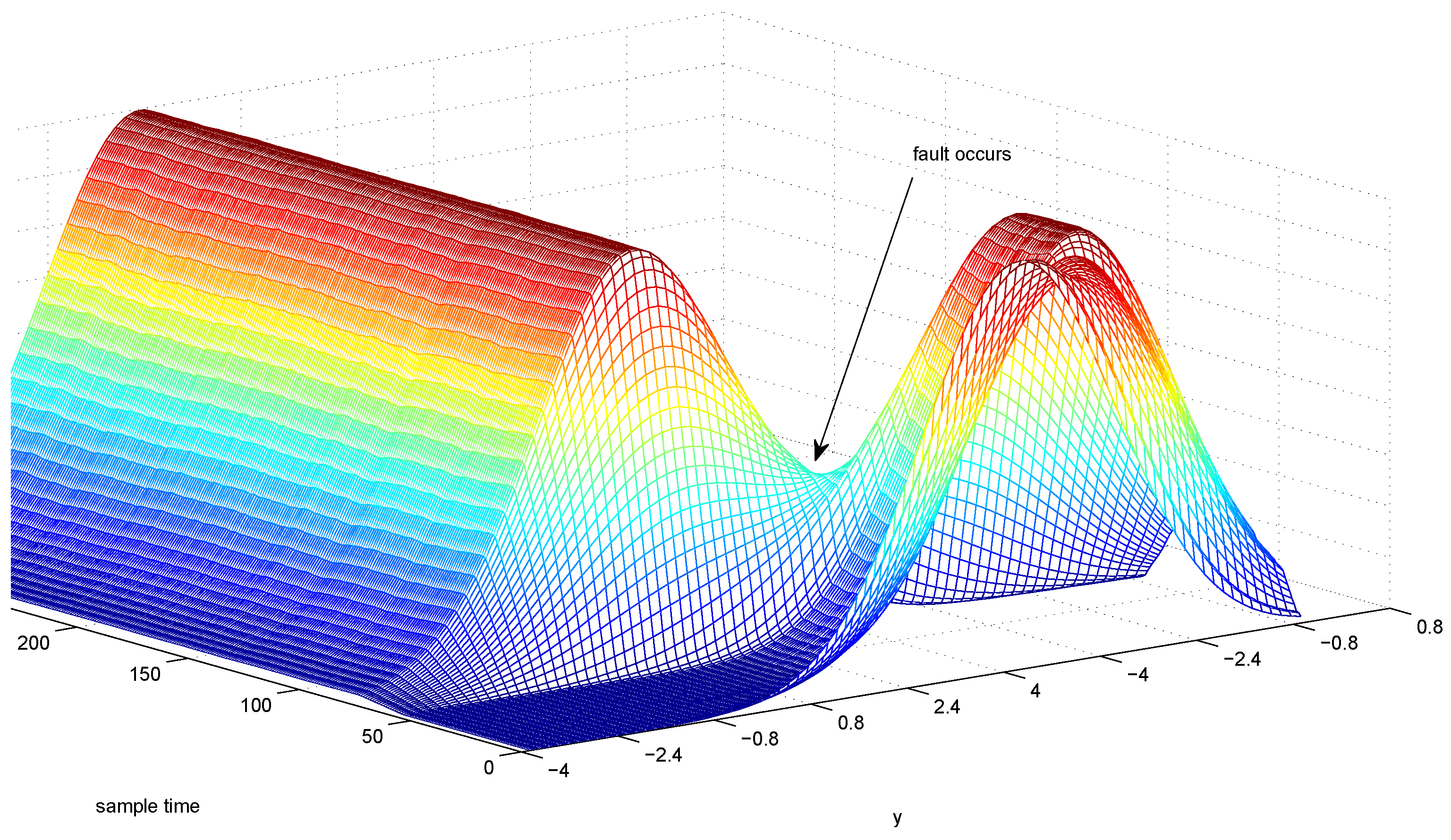

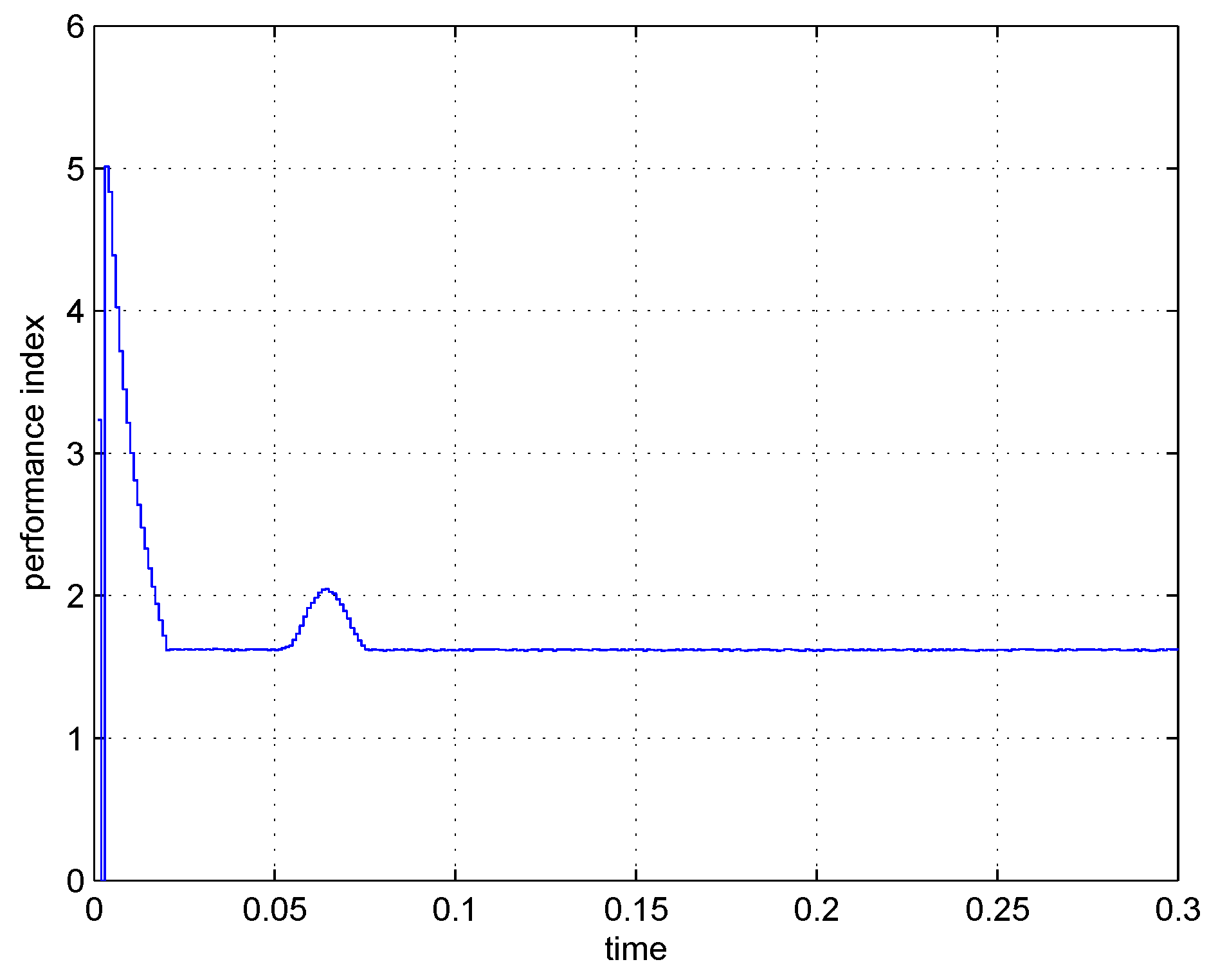

4. Simulation Results

5. Conclusions

- The detected fault and system noises do not have to be Gaussian.

- The entropy optimization principle for FD problems parallels the main results of [2], where linear Gaussian systems were studied and a minimax technique was applied to the error variance. It therefore generalizes the variance optimization used for Gaussian signals.

- This fault detection approach is applicable to multivariate and uncertain systems. It generalizes the method in [8], where only single-input single-output systems were considered.
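The remark that entropy optimization generalizes variance optimization can be checked with closed-form scalar entropies. The sketch below (plain Python; the helper names are illustrative and not taken from the paper) uses the standard formulas H = 0.5 ln(2πeσ²) for a Gaussian PDF, ln(2a) for a uniform PDF on [-a, a] (variance a²/3), and 1 + ln(2b) for a Laplace PDF with scale b (variance 2b²). For Gaussian errors, entropy is strictly increasing in variance, so the two criteria agree; for non-Gaussian errors they can rank candidate designs differently.

```python
import math


def gaussian_entropy(var: float) -> float:
    """Differential entropy (nats) of a scalar Gaussian with variance var."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)


def uniform_entropy(var: float) -> float:
    """Entropy of a zero-mean uniform PDF on [-a, a] with a**2 / 3 == var."""
    a = math.sqrt(3.0 * var)
    return math.log(2.0 * a)


def laplace_entropy(var: float) -> float:
    """Entropy of a zero-mean Laplace PDF with scale b, where 2 * b**2 == var."""
    b = math.sqrt(var / 2.0)
    return 1.0 + math.log(2.0 * b)


# Gaussian case: entropy increases strictly with variance, so minimizing
# either criterion selects the same design.
variances = [0.25, 0.5, 1.0, 2.0]
gauss_h = [gaussian_entropy(v) for v in variances]
assert gauss_h == sorted(gauss_h)

# Equal variances, different entropies: among all PDFs with a given
# variance, the Gaussian has the largest entropy.
print(gaussian_entropy(1.0), laplace_entropy(1.0), uniform_entropy(1.0))
# approx. 1.419, 1.347, 1.242

# The rankings can disagree: the uniform error has the larger variance
# (1.0 versus 0.8) but the smaller entropy.
assert uniform_entropy(1.0) < gaussian_entropy(0.8)
```

Because variance ignores the shape of a non-Gaussian error PDF, an entropy criterion can prefer a design that a variance criterion would reject.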

Acknowledgment

References

- Basseville, M. On-board component fault detection and isolation using the statistical local approach. Automatica 1998, 34, 1391–1415.

- Chen, R.H.; Mingori, D.L.; Speyer, J.L. Optimal stochastic fault detection filter. Automatica 2003, 39, 377–390.

- Chen, R.H.; Speyer, J.L. A generalized least-squares fault detection filter. Int. J. Adapt. Contr. Signal Process. 2000, 14, 747–757.

- De Persis, C.; Isidori, A. A geometric approach to nonlinear fault detection and isolation. IEEE Trans. Automat. Contr. 2001, 46, 853–856.

- Frank, P.M. Fault diagnosis in dynamic systems using analytical and knowledge-based redundancy: A survey and some new results. Automatica 1990, 26, 459–474.

- Guo, L.; Wang, H. Minimum entropy filtering for multivariate stochastic systems with non-Gaussian noises. IEEE Trans. Automat. Contr. 2006, 51, 695–700.

- Shields, D.N.; Ashton, S.A.; Daley, S. Robust fault detection observers for nonlinear polynomial systems. Int. J. Syst. Sci. 2001, 32, 723–737.

- Guo, L.; Wang, H.; Chai, T. Fault detection for non-linear non-Gaussian stochastic systems using entropy optimization principle. Trans. Inst. Meas. Contr. 2006, 28, 145–161.

- Mao, Z.H.; Jiang, B.; Shi, P. H-infinity fault detection filter design for networked control systems modelled by discrete Markovian jump systems. IET Proc. Contr. Theor. Appl. 2007, 1, 1336–1343.

- Jiang, B.; Staroswiecki, M.; Cocquempot, V. Fault accommodation for a class of nonlinear systems. IEEE Trans. Automat. Contr. 2006, 51, 1578–1583.

- Basseville, M.; Nikiforov, I. Fault isolation and diagnosis: Nuisance rejection and multiple hypothesis testing. Annu. Rev. Contr. 2002, 26, 189–202.

- Bar-Shalom, Y.; Li, X.R. Estimation and Tracking: Principles, Techniques, and Software; Artech House: Norwood, MA, USA, 1996.

- Zhang, J.; Cai, L.; Wang, H. Minimum entropy filtering for networked control systems via information theoretic learning approach. In Proceedings of the 2010 International Conference on Modelling, Identification and Control, Okayama, Japan, 17–19 July 2010; pp. 774–778.

- Orchard, M.; Vachtsevanos, G. A particle filtering approach for on-line fault diagnosis and failure prognosis. Trans. Inst. Meas. Contr. 2009, 31, 221–246.

- Chen, C.; Zhang, B.; Vachtsevanos, G.; Orchard, M. Machine condition prediction based on adaptive neuro-fuzzy and high-order particle filtering. IEEE Trans. Ind. Electron. 2011, 58, 4353–4364.

- Wang, H.; Afshar, P. ILC-based fixed-structure controller design for output PDF shaping in stochastic systems using LMI techniques. IEEE Trans. Automat. Contr. 2009, 54, 760–773.

- Zhou, J.; Zhou, D.; Wang, H.; Guo, L.; Chai, T. Distribution function tracking filter design using hybrid characteristic functions. Automatica 2010, 46, 101–109.

- Chen, C.; Brown, D.; Sconyers, C.; Zhang, B.; Vachtsevanos, G.; Orchard, M. An integrated architecture for fault diagnosis and failure prognosis of complex engineering systems. Expert Syst. Appl. 2012, 39, 9031–9040.

- Yin, L.; Guo, L. Fault isolation for dynamic multivariate nonlinear non-Gaussian stochastic systems using generalized entropy optimization principle. Automatica 2009, 45, 2612–2619.

- Yin, L.; Guo, L. Fault detection for NARMAX stochastic systems using entropy optimization principle. In Proceedings of the 2009 Chinese Control and Decision Conference, Guilin, China, June 2009; pp. 870–875.

- Guo, L.; Chen, W. Disturbance attenuation and rejection for systems with nonlinearity via DOBC approach. Int. J. Robust Nonlinear Contr. 2005, 15, 109–125.

- Yang, F.; Wang, Z.; Hung, Y.S. Robust Kalman filtering for discrete time-varying uncertain systems with multiplicative noise. IEEE Trans. Automat. Contr. 2002, 47, 1179–1183.

- Guo, L.; Wang, H.; Wang, A.P. Optimal probability density function control for NARMAX stochastic systems. Automatica 2008, 44, 1904–1911.

- Yin, L.; Guo, L. Joint stochastic distribution tracking control for multivariate descriptor systems with non-Gaussian variables. Int. J. Syst. Sci. 2012, 43, 192–200.

- Guo, L.; Yin, L.; Wang, H. Robust PDF control with guaranteed stability for nonlinear stochastic systems under modeling errors. IET Contr. Theor. Appl. 2009, 3, 575–582.

- Yin, L.; Guo, L.; Wang, H. Robust minimum entropy tracking control with guaranteed stability for nonlinear stochastic systems under modeling errors. In Proceedings of the 10th International Conference on Control, Automation, Robotics and Vision, Hanoi, Vietnam, 17–20 December 2008; pp. 1469–1474.

- Papoulis, A. Probability, Random Variables and Stochastic Processes, 3rd ed.; McGraw-Hill: New York, NY, USA, 1991.

- Patton, R.J.; Frank, P.; Clark, R. Fault Diagnosis in Dynamic Systems: Theory and Application; Prentice Hall: Englewood Cliffs, NJ, USA, 1989.

- Feng, X.B.; Loparo, K.A. Active probing for information in control system with quantized state measurements: A minimum entropy approach. IEEE Trans. Automat. Contr. 1997, 42, 216–238.

- Wang, H. Minimum entropy control of non-Gaussian dynamic stochastic systems. IEEE Trans. Automat. Contr. 2002, 47, 398–403.

- Saridis, G.N. Entropy formulation of optimal and adaptive control. IEEE Trans. Automat. Contr. 1988, 33, 713–720.

- Wang, H. Bounded Dynamic Stochastic Systems: Modelling and Control; Springer-Verlag: London, UK, 2000.

- Zhang, J.; Du, L.; Ren, M.; Hou, G. Minimum error entropy filter for fault detection of networked control systems. Entropy 2012, 14, 505–516.

- Silverman, B.W. Density Estimation for Statistics and Data Analysis; Chapman and Hall: London, UK, 1986.

- Wang, H.; Lin, W. Applying observer based FDI techniques to detect faults in dynamic and bounded stochastic distributions. Int. J. Contr. 2000, 73, 1424–1436.

- Yue, H.; Wang, H. Minimum entropy control of closed-loop tracking errors for dynamic stochastic systems. IEEE Trans. Automat. Contr. 2003, 48, 118–121.

- Guo, L.; Zhang, Y.M.; Wang, H.; Fang, J.C. Observer-based optimal fault detection and diagnosis using conditional probability distributions. IEEE Trans. Signal Process. 2006, 54, 3712–3719.

- Zhang, Y.M.; Guo, L.; Wang, H. Filter-based fault detection and diagnosis using output PDFs for stochastic systems with time delays. Int. J. Adapt. Contr. Signal Process. 2006, 20, 175–194.

- Li, T.; Guo, L.; Wu, L.Y. Observer-based optimal fault detection using PDFs for time-delay stochastic systems. Nonlinear Anal. R. World Appl. 2008, 9, 2337–2349.

- Guo, L.; Yin, L.; Wang, H.; Chai, T.Y. Entropy optimization filtering for fault isolation of nonlinear non-Gaussian stochastic systems. IEEE Trans. Automat. Contr. 2009, 54, 804–810.

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Yin, L.; Zhou, L. Function Based Fault Detection for Uncertain Multivariate Nonlinear Non-Gaussian Stochastic Systems Using Entropy Optimization Principle. Entropy 2013, 15, 32-52. https://doi.org/10.3390/e15010032
