A Novel Belief Entropy for Measuring Uncertainty in Dempster-Shafer Evidence Theory Framework Based on Plausibility Transformation and Weighted Hartley Entropy

Abstract

1. Introduction

2. Preliminaries

2.1. Dempster-Shafer Evidence Theory

2.2. Probability Transformation

2.3. Shannon Entropy

3. Desired Properties of Uncertainty Measures in the DS Theory

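- Probability consistency. Let $m$ be a BPA on FOD $X$. If $m$ is a Bayesian BPA, then the uncertainty measure must reduce to Shannon entropy: $H(m) = H_s(m) = \sum_{x \in X} m(\{x\}) \log_2 \frac{1}{m(\{x\})}$.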

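- Additivity. Let $m_X$ and $m_Y$ be distinct BPAs for FOD $X$ and FOD $Y$, respectively. The BPA $m_X \oplus m_Y$ obtained with Dempster's combination rule must satisfy the equality $H(m_X \oplus m_Y) = H(m_X) + H(m_Y)$, where $m_X \oplus m_Y$ is a BPA for $X \times Y$. For all $A \times B \in 2^{X \times Y}$, where $A \in 2^X$ and $B \in 2^Y$, we have $(m_X \oplus m_Y)(A \times B) = m_X(A)\, m_Y(B)$.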

- Sub-additivity. Let $m$ be a BPA on the space $X \times Y$, with marginal BPAs $m^{\downarrow X}$ and $m^{\downarrow Y}$ on FOD $X$ and FOD $Y$, respectively. The uncertainty measure must satisfy the inequality $H(m) \le H(m^{\downarrow X}) + H(m^{\downarrow Y})$.

- Set consistency. Let $m$ be a BPA on FOD $X$. If there exists a focal element $A \subseteq X$ with $m(A) = 1$, then the uncertainty measure must reduce to the Hartley measure: $H(m) = \log_2 |A|$.

- Range. Let $m$ be a BPA on FOD $X$. The range of the uncertainty measure must be $[0, \log_2 |X|]$.

- Consistency with DST semantics. Let $m_1$ and $m_2$ be two BPAs on the same FOD. If an uncertainty measure is based on a probability transformation that maps a BPA $m$ to a PMF $P_m$, then the PMFs of $m_1$ and $m_2$ must satisfy $P_{m_1 \oplus m_2} = P_{m_1} \otimes P_{m_2}$, where ⊗ denotes the Bayesian combination rule [41], i.e., pointwise multiplication followed by normalization. Note that this property does not presuppose the use of a probability transformation in the uncertainty measure.

- Non-negativity. Let $m$ be a BPA on FOD $X$. The uncertainty measure must satisfy the inequality $H(m) \ge 0$, where equality holds if and only if $m$ is Bayesian and $m(\{x\}) = 1$ for some $x \in X$.

- Maximum entropy. Let $m$ be a BPA on FOD $X$. Since the vacuous BPA $m_v^X$ (with $m_v^X(X) = 1$) should carry the most uncertainty, the uncertainty measure must satisfy the inequality $H(m) \le H(m_v^X)$, where equality holds if and only if $m = m_v^X$.

- Monotonicity. Let $m_v^X$ and $m_v^Y$ be the vacuous BPAs of FOD $X$ and FOD $Y$, respectively. If $|X| < |Y|$, then $H(m_v^X) < H(m_v^Y)$.
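The following minimal Python sketch makes two of the properties above concrete: probability consistency and consistency with DST semantics. It is not the authors' implementation. The representation of a BPA as a dictionary from frozensets to masses is a hypothetical choice for illustration, and so are the function names `dempster`, `plausibility_transform`, `bayes_combine` and `shannon`. The sketch uses the plausibility transformation of Cobb and Shenoy, which normalizes the singleton plausibilities $Pl(\{x\})$. It then checks, on one small pair of BPAs, that $P_{m_1 \oplus m_2} = P_{m_1} \otimes P_{m_2}$.

```python
from itertools import product
from math import isclose, log2


def dempster(m1, m2):
    """Dempster's rule on a common FOD: intersect focal elements, then renormalize."""
    combined = {}
    conflict = 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb  # mass that would fall on the empty set
    if isclose(conflict, 1.0):
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {a: v / (1.0 - conflict) for a, v in combined.items()}


def plausibility_transform(m, fod):
    """PMF proportional to the singleton plausibilities Pl({x})."""
    pl = {x: sum(v for a, v in m.items() if x in a) for x in fod}
    total = sum(pl.values())
    return {x: p / total for x, p in pl.items()}


def bayes_combine(p1, p2):
    """Bayesian combination: pointwise product followed by normalization."""
    prod = {x: p1[x] * p2[x] for x in p1}
    total = sum(prod.values())
    return {x: v / total for x, v in prod.items()}


def shannon(p):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return sum(v * log2(1.0 / v) for v in p.values() if v > 0)


fod = frozenset("abc")
m1 = {frozenset("a"): 0.5, frozenset("ab"): 0.3, fod: 0.2}
m2 = {frozenset("b"): 0.4, frozenset("bc"): 0.4, fod: 0.2}

# Consistency with DST semantics for the plausibility transformation.
lhs = plausibility_transform(dempster(m1, m2), fod)
rhs = bayes_combine(plausibility_transform(m1, fod), plausibility_transform(m2, fod))
assert all(isclose(lhs[x], rhs[x]) for x in fod)

# Probability consistency: on a Bayesian BPA the transform returns m itself,
# so the Shannon entropy of the transformed PMF equals the Shannon entropy of m.
bayesian = {frozenset("a"): 0.5, frozenset("b"): 0.25, frozenset("c"): 0.25}
assert isclose(shannon(plausibility_transform(bayesian, fod)), 1.5)
```

The first check passes because, for singletons, plausibility coincides with commonality, and Dempster's rule multiplies commonality functions up to a normalization constant. A single numeric example illustrates the property but does not prove it.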

4. The Belief Entropy for Uncertainty Measure in the DST Framework

4.1. The Existing Definitions of Belief Entropy of BPAs

4.2. The Proposed Belief Entropy

5. Numerical Experiment

5.1. Example 1

5.2. Example 2

5.3. Example 3

5.4. Example 4

5.5. Example 5

5.6. Example 6

5.7. Example 7

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Dempster, A.P. Upper and lower probabilities induced by a multivalued mapping. In Classic Works of the Dempster-Shafer Theory of Belief Functions; Springer: Berlin, Germany, 2008; pp. 57–72.

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976; Volume 42.

- Wang, J.; Qiao, K.; Zhang, Z. An improvement for combination rule in evidence theory. Futur. Gener. Comput. Syst. 2019, 91, 1–9.

- Jiao, Z.; Gong, H.; Wang, Y. A DS evidence theory-based relay protection system hidden failures detection method in smart grid. IEEE Trans. Smart Grid 2018, 9, 2118–2126.

- Jiang, W.; Zhan, J. A modified combination rule in generalized evidence theory. Appl. Intell. 2017, 46, 630–640.

- de Oliveira Silva, L.G.; de Almeida-Filho, A.T. A multicriteria approach for analysis of conflicts in evidence theory. Inf. Sci. 2016, 346, 275–285.

- Liu, Z.G.; Pan, Q.; Dezert, J.; Martin, A. Combination of classifiers with optimal weight based on evidential reasoning. IEEE Trans. Fuzzy Syst. 2018, 26, 1217–1230.

- Mi, J.; Li, Y.F.; Peng, W.; Huang, H.Z. Reliability analysis of complex multi-state system with common cause failure based on evidential networks. Reliab. Eng. Syst. Saf. 2018, 174, 71–81.

- Zhao, F.J.; Zhou, Z.J.; Hu, C.H.; Chang, L.L.; Zhou, Z.G.; Li, G.L. A new evidential reasoning-based method for online safety assessment of complex systems. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 954–966.

- Xiao, F. Multi-sensor data fusion based on the belief divergence measure of evidences and the belief entropy. Inf. Fusion 2019, 46, 23–32.

- Tang, Y.; Zhou, D.; Chan, F.T.S. An Extension to Deng’s Entropy in the Open World Assumption with an Application in Sensor Data Fusion. Sensors 2018, 18, 1902.

- Zhu, J.; Wang, X.; Song, Y. Evaluating the Reliability Coefficient of a Sensor Based on the Training Data within the Framework of Evidence Theory. IEEE Access 2018, 6, 30592–30601.

- Xiao, F.; Qin, B. A Weighted Combination Method for Conflicting Evidence in Multi-Sensor Data Fusion. Sensors 2018, 18, 1487.

- Kang, B.; Chhipi-Shrestha, G.; Deng, Y.; Hewage, K.; Sadiq, R. Stable strategies analysis based on the utility of Z-number in the evolutionary games. Appl. Math. Comput. 2018, 324, 202–217.

- Zheng, X.; Deng, Y. Dependence assessment in human reliability analysis based on evidence credibility decay model and IOWA operator. Ann. Nucl. Energy 2018, 112, 673–684.

- Lin, Y.; Li, Y.; Yin, X.; Dou, Z. Multisensor Fault Diagnosis Modeling Based on the Evidence Theory. IEEE Trans. Reliab. 2018, 67, 513–521.

- Song, L.; Wang, H.; Chen, P. Step-by-step Fuzzy Diagnosis Method for Equipment Based on Symptom Extraction and Trivalent Logic Fuzzy Diagnosis Theory. IEEE Trans. Fuzzy Syst. 2018, 26, 3467–3478.

- Gong, Y.; Su, X.; Qian, H.; Yang, N. Research on fault diagnosis methods for the reactor coolant system of nuclear power plant based on DS evidence theory. Ann. Nucl. Energy 2018, 112, 395–399.

- Zheng, H.; Deng, Y. Evaluation method based on fuzzy relations between Dempster–Shafer belief structure. Int. J. Intell. Syst. 2018, 33, 1343–1363.

- Song, Y.; Wang, X.; Zhu, J.; Lei, L. Sensor dynamic reliability evaluation based on evidence theory and intuitionistic fuzzy sets. Appl. Intell. 2018, 48, 3950–3962.

- Ruan, Z.; Li, C.; Wu, A.; Wang, Y. A New Risk Assessment Model for Underground Mine Water Inrush Based on AHP and D–S Evidence Theory. Mine Water Environ. 2019, 1–9.

- Ma, X.; Liu, S.; Hu, S.; Geng, P.; Liu, M.; Zhao, J. SAR image edge detection via sparse representation. Soft Comput. 2018, 22, 2507–2515.

- Moghaddam, H.A.; Ghodratnama, S. Toward semantic content-based image retrieval using Dempster–Shafer theory in multi-label classification framework. Int. J. Multimed. Inf. Retr. 2017, 6, 317–326.

- Torous, J.; Nicholas, J.; Larsen, M.E.; Firth, J.; Christensen, H. Clinical review of user engagement with mental health smartphone apps: evidence, theory and improvements. Evid.-Based Ment. Health 2018, 21, 116–119.

- Liu, T.; Deng, Y.; Chan, F. Evidential supplier selection based on DEMATEL and game theory. Int. J. Fuzzy Syst. 2018, 20, 1321–1333.

- Orient, G.; Babuska, V.; Lo, D.; Mersch, J.; Wapman, W. A Case Study for Integrating Comp/Sim Credibility and Convolved UQ and Evidence Theory Results to Support Risk Informed Decision Making. In Model Validation and Uncertainty Quantification; Springer: Berlin, Germany, 2019; Volume 3, pp. 203–208.

- Li, Y.; Xiao, F. Bayesian Update with Information Quality under the Framework of Evidence Theory. Entropy 2019, 21, 5.

- Dietrich, C.F. Uncertainty, Calibration and Probability: the Statistics of Scientific and Industrial Measurement; Routledge: London, NY, USA, 2017.

- Rényi, A. On Measures of Entropy and Information; Technical Report; Hungarian Academy of Sciences: Budapest, Hungary, 1961.

- Shannon, C. A mathematical theory of communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2001, 5, 3–55.

- Zhou, M.; Liu, X.B.; Yang, J.B.; Chen, Y.W.; Wu, J. Evidential reasoning approach with multiple kinds of attributes and entropy-based weight assignment. Knowl.-Based Syst. 2019, 163, 358–375.

- Klir, G.J.; Wierman, M.J. Uncertainty-Based Information: Elements of Generalized Information Theory; Springer: Berlin, Germany, 2013; Volume 15.

- Dubois, D.; Prade, H. Properties of measures of information in evidence and possibility theories. Fuzzy Sets Syst. 1987, 24, 161–182.

- Höhle, U. Entropy with respect to plausibility measures. In Proceedings of the 12th International Symposium on Multiple-Valued Logic, Paris, France, 25–27 May 1982.

- Yager, R.R. Entropy and specificity in a mathematical theory of evidence. Int. J. Gen. Syst. 1983, 9, 249–260.

- Klir, G.J.; Ramer, A. Uncertainty in the Dempster-Shafer theory: a critical re-examination. Int. J. Gen. Syst. 1990, 18, 155–166.

- Klir, G.J.; Parviz, B. A note on the measure of discord. In Uncertainty in Artificial Intelligence; Elsevier: Amsterdam, The Netherlands, 1992; pp. 138–141.

- Jousselme, A.L.; Liu, C.; Grenier, D.; Bossé, É. Measuring ambiguity in the evidence theory. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2006, 36, 890–903.

- Deng, Y. Deng entropy. Chaos Solitons Fractals 2016, 91, 549–553.

- Pan, L.; Deng, Y. A New Belief Entropy to Measure Uncertainty of Basic Probability Assignments Based on Belief Function and Plausibility Function. Entropy 2018, 20, 842.

- Jiroušek, R.; Shenoy, P.P. A new definition of entropy of belief functions in the Dempster–Shafer theory. Int. J. Approx. Reason. 2018, 92, 49–65.

- Yang, Y.; Han, D. A new distance-based total uncertainty measure in the theory of belief functions. Knowl.-Based Syst. 2016, 94, 114–123.

- Smets, P. Decision making in the TBM: the necessity of the pignistic transformation. Int. J. Approx. Reason. 2005, 38, 133–148.

- Cobb, B.R.; Shenoy, P.P. On the plausibility transformation method for translating belief function models to probability models. Int. J. Approx. Reason. 2006, 41, 314–330.

- Klir, G.J.; Lewis, H.W. Remarks on “Measuring ambiguity in the evidence theory”. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2008, 38, 995–999.

- Klir, G.J. Uncertainty and Information: Foundations of Generalized Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2005.

- Abellán, J. Analyzing properties of Deng entropy in the theory of evidence. Chaos Solitons Fractals 2017, 95, 195–199.

- Smets, P. Decision making in a context where uncertainty is represented by belief functions. In Belief Functions in Business Decisions; Springer: Berlin, Germany, 2002; pp. 17–61.

- Daniel, M. On transformations of belief functions to probabilities. Int. J. Intell. Syst. 2006, 21, 261–282.

- Cuzzolin, F. On the relative belief transform. Int. J. Approx. Reason. 2012, 53, 786–804.

- Shahpari, A.; Seyedin, S. A study on properties of Dempster-Shafer theory to probability theory transformations. Iran. J. Electr. Electron. Eng. 2015, 11, 87.

- Jaynes, E.T. Where do we stand on maximum entropy? Maximum Entropy Formalism 1979, 15, 15–118.

- Klir, G.J. Principles of uncertainty: What are they? Why do we need them? Fuzzy Sets Syst. 1995, 74, 15–31.

- Ellsberg, D. Risk, ambiguity, and the Savage axioms. Q. J. Econ. 1961, 75, 643–669.

- Dubois, D.; Prade, H. Properties of measures of information in evidence and possibility theories. Fuzzy Sets Syst. 1999, 100, 35–49.

- Abellán, J.; Moral, S. Completing a total uncertainty measure in the Dempster-Shafer theory. Int. J. Gen. Syst. 1999, 28, 299–314.

- Li, Y.; Deng, Y. Generalized Ordered Propositions Fusion Based on Belief Entropy. Int. J. Comput. Commun. Control 2018, 13.

- Nguyen, H.T. On entropy of random sets and possibility distributions. Anal. Fuzzy Inf. 1987, 1, 145–156.

- Pal, N.R.; Bezdek, J.C.; Hemasinha, R. Uncertainty measures for evidential reasoning II: A new measure of total uncertainty. Int. J. Approx. Reason. 1993, 8, 1–16.

- Zhou, D.; Tang, Y.; Jiang, W. An improved belief entropy and its application in decision-making. Complexity 2017, 2017.

- George, T.; Pal, N.R. Quantification of conflict in Dempster-Shafer framework: A new approach. Int. J. Gen. Syst. 1996, 24, 407–423.

| Definition | Cons. with DST semantics | Non-negativity | Maximum entropy | Monotonicity | Probability consistency | Additivity | Sub-additivity | Range | Set consistency |
|---|---|---|---|---|---|---|---|---|---|
| Höhle | yes | no | no | no | yes | yes | no | yes | no |
| Smets | yes | no | no | no | no | yes | no | yes | no |
| Yager | yes | no | no | no | yes | yes | no | yes | no |
| Nguyen | yes | no | no | no | yes | yes | no | yes | no |
| Dubois-Prade | yes | no | yes | yes | no | yes | yes | yes | yes |
| Klir-Ramer | yes | yes | no | yes | yes | yes | no | no | yes |
| Klir-Parviz | yes | yes | no | yes | yes | yes | no | no | yes |
| Pal et al. | yes | yes | no | yes | yes | yes | no | no | yes |
| George-Pal | yes | no | no | no | no | no | no | no | yes |
| Maeda-Ichihashi | no | yes | yes | yes | yes | yes | yes | no | yes |
| Harmanec-Klir | no | yes | no | yes | yes | yes | yes | no | no |
| Abellán-Moral | no | yes | yes | yes | yes | yes | yes | no | yes |
| Jousselme et al. | no | yes | no | yes | yes | yes | no | yes | yes |
| Pouly et al. | no | yes | no | yes | yes | yes | no | no | yes |
| Jiroušek-Shenoy | yes | yes | yes | yes | yes | yes | no | no | no |
| Deng | yes | yes | no | yes | yes | no | no | no | no |
| Pan-Deng | yes | yes | no | yes | yes | no | no | no | no |
| Proposed method | yes | yes | no | yes | yes | yes | no | no | yes |
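Several entries in the Dubois-Prade row can be spot-checked numerically. The sketch below assumes the standard Dubois-Prade nonspecificity (weighted Hartley) measure, $H_{DP}(m) = \sum_{A \subseteq X} m(A)\log_2|A|$. Both the four-element FOD and the test BPAs are hypothetical. The checks show why set consistency, range and maximum entropy hold while probability consistency and non-negativity (as defined in Section 3) fail: every Bayesian BPA scores zero.

```python
from math import isclose, log2


def dubois_prade(m):
    """Weighted Hartley (nonspecificity) measure: sum of m(A) * log2|A|."""
    return sum(v * log2(len(a)) for a, v in m.items() if v > 0)


fod = frozenset({1, 2, 3, 4})                 # hypothetical FOD with |X| = 4
vacuous = {fod: 1.0}                          # m(X) = 1
categorical = {frozenset({1, 2}): 1.0}        # m(A) = 1 with |A| = 2
uniform_bayesian = {frozenset({x}): 0.25 for x in fod}
point_mass = {frozenset({1}): 1.0}

# Set consistency: a categorical BPA reduces to the Hartley measure log2|A| = 1.
assert isclose(dubois_prade(categorical), 1.0)

# Range / maximum entropy: the vacuous BPA attains the upper bound log2|X| = 2.
assert isclose(dubois_prade(vacuous), log2(len(fod)))

# Probability consistency fails: the uniform PMF has 2 bits of Shannon entropy,
# but the measure returns 0 for every Bayesian BPA.
assert dubois_prade(uniform_bayesian) == 0.0

# Non-negativity fails in the strict sense used above: H(m) = 0 also holds for
# Bayesian BPAs that are not point masses.
assert dubois_prade(point_mass) == dubois_prade(uniform_bayesian) == 0.0
```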

| Cases | |||||||||

|---|---|---|---|---|---|---|---|---|---|

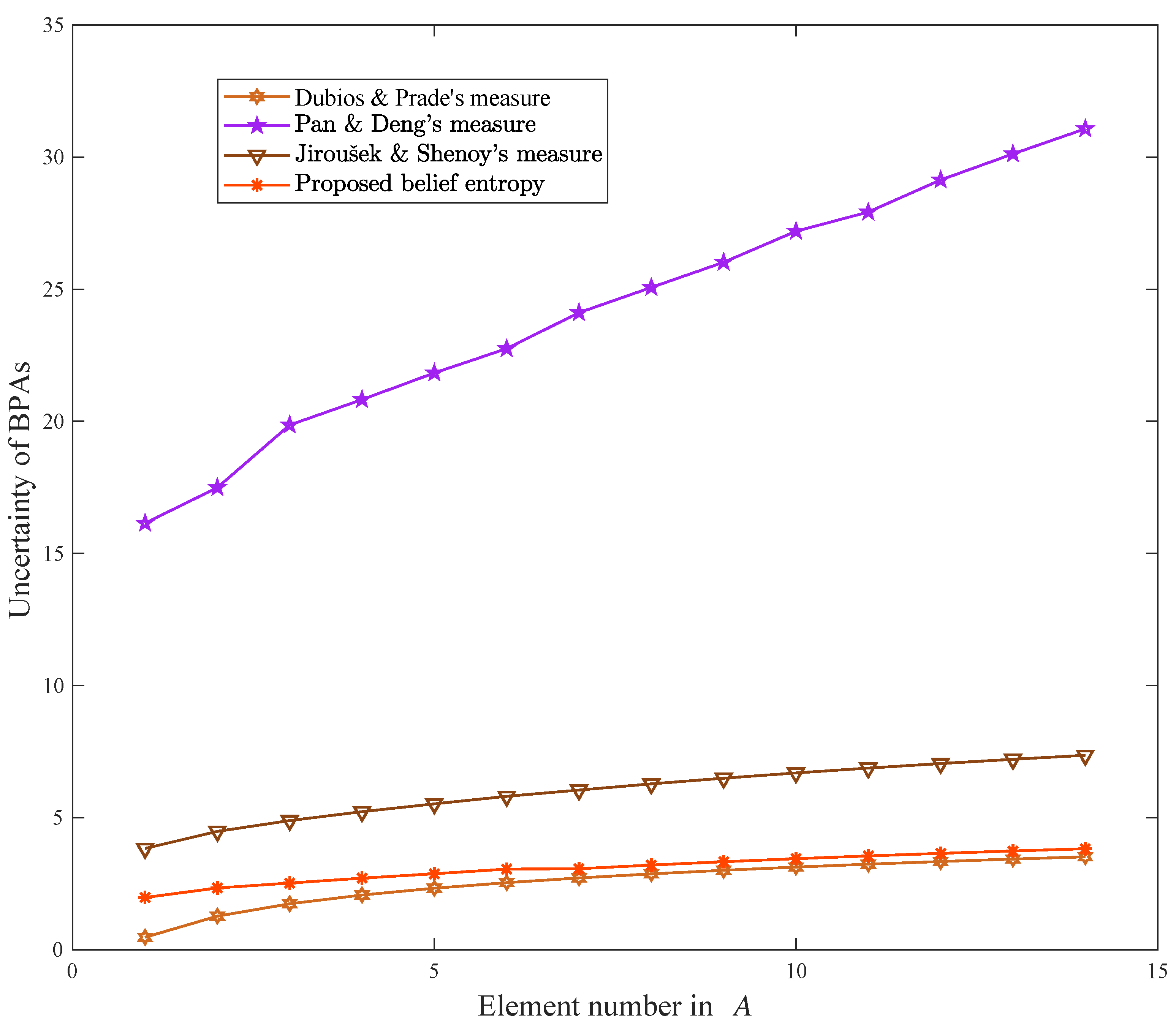

| A = | 0.4699 | 0.6897 | 0.3953 | 6.4419 | 3.3804 | 0.3317 | 16.1443 | 3.8322 | 1.9757 |

| A = | 1.2699 | 0.6897 | 0.3953 | 5.6419 | 3.2956 | 0.3210 | 17.4916 | 4.4789 | 2.3362 |

| A = | 1.7379 | 0.6897 | 0.1997 | 4.2823 | 2.9709 | 0.2943 | 19.8608 | 4.8870 | 2.5232 |

| A = | 2.0699 | 0.6897 | 0.1997 | 3.6863 | 2.8132 | 0.2677 | 20.8229 | 5.2250 | 2.7085 |

| A = | 2.3275 | 0.6198 | 0.1997 | 3.2946 | 2.7121 | 0.2410 | 21.8314 | 5.5200 | 2.8749 |

| A = | 2.5379 | 0.6198 | 0.1997 | 3.2184 | 2.7322 | 0.2383 | 22.7521 | 5.8059 | 3.0516 |

| A = | 2.7158 | 0.5538 | 0.0074 | 2.4562 | 2.5198 | 0.2220 | 24.1131 | 6.0425 | 3.0647 |

| A = | 2.8699 | 0.5538 | 0.0074 | 2.4230 | 2.5336 | 0.2170 | 25.0685 | 6.2772 | 3.2042 |

| A = | 3.0059 | 0.5538 | 0.0074 | 2.3898 | 2.5431 | 0.2108 | 26.0212 | 6.4921 | 3.3300 |

| A = | 3.1275 | 0.5538 | 0.0074 | 2.3568 | 2.5494 | 0.2037 | 27.1947 | 6.6903 | 3.4445 |

| A = | 3.2375 | 0.5538 | 0.0074 | 2.3241 | 2.5536 | 0.1959 | 27.9232 | 6.8743 | 3.5497 |

| A = | 3.3379 | 0.5538 | 0.0074 | 2.2920 | 2.5562 | 0.1877 | 29.1370 | 7.0461 | 3.6469 |

| A = | 3.4303 | 0.5538 | 0.0074 | 2.2605 | 2.5577 | 0.1791 | 30.1231 | 7.2071 | 3.7374 |

| A = | 3.5158 | 0.5538 | 0.0074 | 2.2296 | 2.5582 | 0.1701 | 31.0732 | 7.3587 | 3.8219 |
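As a sanity check on the table above, the first numeric column agrees with the Dubois-Prade weighted Hartley measure evaluated on a benchmark BPA. That BPA is assumed here rather than stated in this text; it is the setting common in the Deng-entropy literature:

- a 15-element FOD $X$;
- $m(\{3,4,5\}) = 0.05$, $m(\{6\}) = 0.05$, $m(A) = 0.8$ and $m(X) = 0.1$;
- $|A|$ growing from 1 to 14 down the rows.

Under this assumption each row equals $0.05\log_2 3 + 0.1\log_2 15 + 0.8\log_2|A| \approx 0.4699 + 0.8\log_2|A|$. This measure depends only on the cardinalities of the focal elements, so the sketch takes $A = \{1, \ldots, k\}$ purely for convenience. The function names are hypothetical.

```python
from math import log2


def dubois_prade(m):
    """Weighted Hartley measure: sum of m(A) * log2|A| over focal elements."""
    return sum(v * log2(len(a)) for a, v in m.items() if v > 0)


FOD = frozenset(range(1, 16))  # assumed 15-element frame {1, ..., 15}


def example_bpa(k):
    """Assumed benchmark BPA with the varying focal element A = {1, ..., k}."""
    return {
        frozenset({3, 4, 5}): 0.05,
        frozenset({6}): 0.05,
        frozenset(range(1, k + 1)): 0.8,
        FOD: 0.1,
    }


# One value per row of the table, for |A| = 1, ..., 14.
first_column = [round(dubois_prade(example_bpa(k)), 4) for k in range(1, 15)]
print(first_column)
```

This reproduces only the first column. The remaining columns involve measures whose definitions are not given in this section, so they are not recomputed here.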

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pan, Q.; Zhou, D.; Tang, Y.; Li, X.; Huang, J. A Novel Belief Entropy for Measuring Uncertainty in Dempster-Shafer Evidence Theory Framework Based on Plausibility Transformation and Weighted Hartley Entropy. Entropy 2019, 21, 163. https://doi.org/10.3390/e21020163
