Abstract

The study of order patterns of three equally-spaced values in a time series is a powerful tool. The lag d is changed in a wide range so that the differences of the frequencies of order patterns become autocorrelation functions. Similar to a spectrogram in speech analysis, four ordinal autocorrelation functions are used to visualize big data series, as for instance heart and brain activity over many hours. The method applies to real data without preprocessing, and outliers and missing data do not matter. On the theoretical side, we study the properties of order correlation functions and show that the four autocorrelation functions are orthogonal in a certain sense. An analysis of variance of a modified permutation entropy can be performed with four variance components associated with the functions.

1. Order Patterns Fit Big Data

1.1. The Need for New Methods

A few decades ago, when time series analysis was created, it did suffer from a lack of data. Now, the challenge is an oversupply of data. Modern sensors are inexpensive. They continuously monitor heart and brain signals of patients or athletes, seismic waves, air pollution, radiation, etc. Within one second, hundreds of values can be obtained in one channel. Such time series must be evaluated automatically. To this end, old methods have to be modified, and new ones have to be developed that fulfil the following requirements.

- Basic methods should be simple and transparent.

- Few assumptions should be made on the underlying process.

- Algorithms should be resilient with respect to outliers and artifacts.

- Computations should be very fast.

Classical probabilistic methods have problems with the second property. They often require conditions like a multivariate normal distribution, which are not fulfilled in practice, or formulate their conditions in terms of limits so that they cannot be checked. On the other hand, machine learning procedures are in fashion and very successful for specific purposes, but they do not have the first property. They do not provide clear insight into the observed phenomena.

The analysis of order patterns in a signal fulfills all four requirements. It had been neglected until recently, when the tedious work of counting “larger” and “smaller” in a large series could be delegated to a computer. Today, the use of permutation entropy is well established [1,2,3]. Pattern frequencies have been studied in particular for chaotic dynamical systems (see [2]), correlated noises [4], reversible processes [5,6], and heartbeat RR-intervals [7,8]. In [9], pattern frequencies were studied for Gaussian processes, and the differences of pattern frequencies were introduced as a kind of autocorrelation function. Some applications and the connection with ordinary autocorrelation were discussed in [10]. A new version of permutation entropy was presented in [11].

1.2. Contents of the Paper

Here, we continue this work, concentrating on applications to big data series with a multiscale structure. We show that for such applications, order patterns are comparable or even more appropriate than classical methods. In Section 2, we explain the basic calculation of pattern frequencies, including treatment of ties and missing values. Section 3 presents the key concepts of the paper. The properties of order correlation functions are discussed in Section 5, leading to an analysis of variance of permutation entropy. The main purpose of this paper, however, is a proof of concept by application to biomedical signals, to speech and music, and to environmental and weather signals.

1.3. A Typical Example

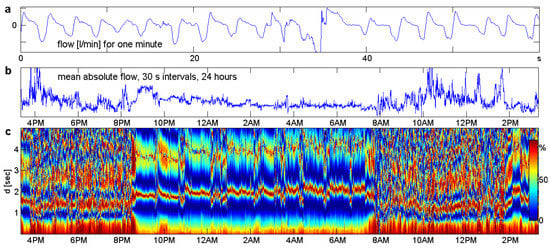

Figure 1 gives an idea of our intentions. Data came from a cooperation with Achim Beule (University of Münster and University of Greifswald, Department ENT, Head and Neck Surgery). To study respiration in everyday life, two sensors measuring air flow intensity with a sampling frequency of 50 Hz were fitted to the nostrils of a healthy volunteer. This experimental setting was by no means perfect. For example, mouth breathing could not be controlled. As a result, the data contained many artifacts, and even the 3000 values of a “clean minute” shown in Figure 1a looked pretty irregular. Traditional analysis takes averages over 30 s to obtain a better signal, as shown in Figure 1b. Much more information is contained in a function defined below. Figure 1c shows the collection of these functions for all minutes of the signal, visualized like a spectrogram. We see phases of activity and sleep, various interruptions of sleep, inaccurate measurements around 8:00 and a little nap after 14:00. The frequency of respiration can be read from the lower dark red stripe, which marks half of the wavelength. The upper light stripe marks the full wavelength: 4 s in sleep and 3 s or less in daily life.

Figure 1.

Our goal is to find structure in big data series. These are respiration data of a healthy volunteer, measured 50 times per second during 24 h of normal life. (a) One minute of clean data. (b) Mean flow for intervals of 30 s. (c) Order correlation function for each minute. While Panel b does not provide much information, our method in Panel c shows the differences and tendencies of respiration during the activity phases and sleep stages. Data from a cooperation with Achim Beule.

2. Pattern Frequencies

2.1. Basic Idea

We assumed we have a time series Let or another small integer, which we call the lag. For any two values that are d steps apart, we can decide whether or We can determine the relative frequency of an upward tendency and the complementary frequency of a downward tendency for the lag The statistics of these “length two order patterns”, or “ups and downs”, was discussed in [10] and will be embedded here into the statistics of patterns of length three.

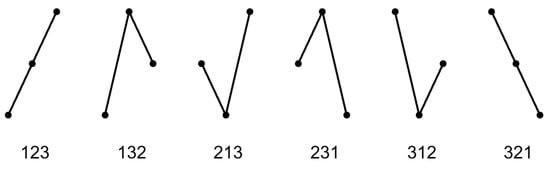

To any three equally-spaced values , we can assign one of the six order patterns in Figure 2. To the pattern , we determine its relative frequency in the time series, and this is done for each pattern as explained below. Patterns of length four or longer are more difficult to understand and will not be studied in this paper.

Figure 2.

The six order patterns of length three.

The important point is that we varied the lag or delay d — between one and 1000, say. Thus, is a function like the autocorrelation function. Both were obtained by a kind of averaging over a certain time period. Our hope was that such functions will express essential features of the process that generates the data and suppress unimportant individual properties of the observed series.

2.2. Stationarity

The are considered as estimates of the probabilities that belong to the underlying process. To justify this viewpoint, we must make a stationarity assumption for the process: the probability of a pattern does not change during time. This is a weak condition, for instance stationary increments in the usual sense will be sufficient [9]. A stronger condition is already needed when we define an average value of the

In practice, stationarity is not fulfilled for the complete time series, which in the case of Figure 1 involves more than four million values. For that reason, we always divided the series into short stationary parts. In the biomedical series of Figure 1, each part corresponds to one minute, with 3000 values This is sufficient to estimate the in a reliable manner and short enough to expect stationarity. There may be a few minutes where respiration drastically changes, but on the whole, the stationarity assumption is natural and appropriate.

2.3. Calculation of Pattern Frequencies

We have the time series with T values. While T for the whole series will be between and we now consider only a small stationary part, with typical length T between 500 and 20,000. Any three consecutive values form one of the six order patterns, or permutations, shown in Figure 2. We also considered three values with a time lag The points represent the pattern 231, for instance, if If there are ties or missing values, the pattern is not defined. The initial time point t runs from 1− The delay parameter d can vary between one and say, and has the same meaning as in classical autocorrelation. For fixed let n be the number of time points t for which a pattern is defined and the number of time points where we have pattern Then, the pattern frequency is

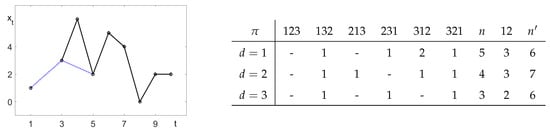

In Figure 3, we have and the value is missing. Thus, cannot be considered for but for all Moreover, there are three equal values , which exclude for and for The resulting are listed in the table. We obtained for instance. In Figure 3, we appended the values of of the length two order pattern 12. The associated number of values t with was larger than the number n for length three.

Figure 3.

Example time series and pattern frequencies The dotted line indicates

Since we had only six patterns, the statistics of pattern frequencies was excellent, even for short time series like For for instance, we would have patterns, and that would need a very long time series to estimate all those pattern frequencies accurately. Permutation entropy would still work, however, since it is an average over all patterns. The accuracy of estimates is an essential reason to restrict ourselves to patterns of length three.

3. Key Concepts and Viewpoints

3.1. Permutation Entropy and

Now, we assume that the pattern frequencies are calculated. For each pattern they could be drawn as a function of like an autocorrelation function. However, it turns out that the pattern frequencies themselves are not so informative. They should be combined in order to form better descriptions of the underlying process. The permutation entropy is:

where the sum is taken over the six patterns in Figure 2 or all permutations of some lengths In other words, we have the probability space of order patterns and take its Shannon entropy. The permutation entropy is a measure of the complexity of the underlying process [12] and has found many applications: distinguishing chaotic and noisy dynamics, classifying sleep stages, detecting epileptic activity from brain signals, etc.

A similar complexity measure introduced in [10,13] is the distance to white noise:

White noise is a series of independent random numbers from a fixed distribution, and it is well known that for this process, all pattern probabilities are equal to for length in particular for length three. We just took the quadratic Euclidean distance between the vectors of observed pattern frequencies and the frequencies of white noise. Such distances were studied in [14,15], using relative entropy. There is an even simpler interpretation: the average pattern frequency is always so the variance of pattern frequencies equals . In [11], it was pointed out that can be considered as a rescaled Taylor approximation of and it has a more convenient scale than

3.2. Order Correlation Functions

For the six patterns of length it turns out that four differences of pattern frequencies provide meaningful autocorrelation functions [9,10].

The names sound a bit clumsy, and the interpretation of the functions given below is not straightforward. However, it will be shown that these four functions include all information of the six pattern frequencies, that they are orthogonal in a certain sense, and form a variance decomposition of given by the Pythagoras-type formula:

3.3. Relative Order Correlation Functions

For the equal pattern probabilities of a white noise process, all terms of this equation are zero. Thus, the definitions were arranged so that white noise was a good null hypothesis for statistical tests. This aspect will not be worked out here. However, the variance components of were considered as some other ordinal autocorrelation functions and used in some applications, as Figure 1c.

A stationary Gaussian process is completely determined by its mean, variance, and autocorrelation function and can be directly calculated from [9]. For stationary Gaussian processes, and more generally, for all reversible processes, and are zero for every Thus, our correlation functions can be considered as a tool for non-Gaussian and irreversible processes. The recent papers of Martinez et al. [5] and Zanin et al. [6] defined the degree of irreversibility by comparing all order patterns with their reversed patterns, for different lengths Our approach provides three measures of irreversibility, with parameter d instead of The calculation of and in our examples will show that processes in practice are mostly irreversible and non-Gaussian.

3.4. Two Types of Data

In the literature on permutation entropy and order patterns [1,2,3,4,5,6,7,8,9,12,14], most models and examples act in discrete time: ARMA and GARCH models, discrete dynamical systems, like logistic or Henon maps, and RR-intervals of heartbeat. There is a smallest time unit, and the state of the system changes step by step. As a rule, these models have a strong deterministic component for small but a rather short memory. The study of order patterns with length between four and seven makes sense, and is justified by dynamical systems with “forbidden” patterns [2]. On the other hand, a study of large d will not reveal a new structure. This also holds for most chaotic systems with continuous time.

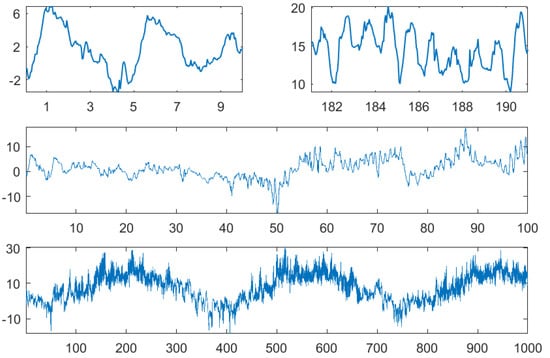

The data considered in this paper are different. Continuous measurements in reality generate “big time series” with a multiscale structure, as indicated in Figure 4, Figure 5, 11, and 16 below. Many rhythms with different periods overlap and interact. Below, we mention tides, with a daily, monthly, and yearly scale, and biomedical signals, with scales for heart, respiration, slow biorhythms, day, and night. For these systems, it is crucial to have a scaling parameter d with a wide range. The study of patterns with large length m and makes little sense.

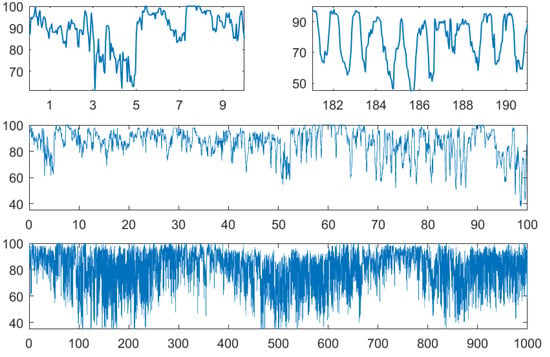

Figure 4.

Temperature in C for the first 10 days in January and July 1978, for 100 and 1000 days.

Figure 5.

Relative humidity in % for the first 10 days in January and July 1978, for 100 and 1000 days.

Current sensor technology makes it possible to measure with high accuracy almost as fast and as long as we want. Sometimes, a higher sampling rate will reveal new phenomena. For brain signals, 500 Hz can be appropriate, as mentioned in Section 9. In optics, sampling rates today are measured in picoseconds. On the conceptual level, the unit time step is replaced by considering t and d as continuous parameters assuming arbitrary positive values. We study limits for instance, even though order correlations are not defined for

Today’s challenge and bottleneck in time series analysis are the great continuous systems with interacting periodicities and dynamical effects over several scales. Here, we present some simple exploratory tools for their study. Appropriate model classes for such systems are still missing, although there is a well-developed theory of random processes in continuous time. Now let us start with data.

4. First Examples: Weather Data

4.1. The Data

To demonstrate the use of the above functions, we took hourly measurements of air temperature and relative humidity from the author’s town: Greifswald, North Germany. The German weather service DWD [16] offers such data for hundreds of stations, where results are expected to be similar. Measurements of our data started 1 January 1978. Figure 4 and Figure 5 show temperature and humidity for the first 10, 100, and 1000 days and also for the first 10 days of July 1978. While in summer, there was an obvious day-night rhythm, this need not be so in winter when bright sunshine often comes together with cold temperature. This effect is also visible in the bottom panel with data of almost three years.

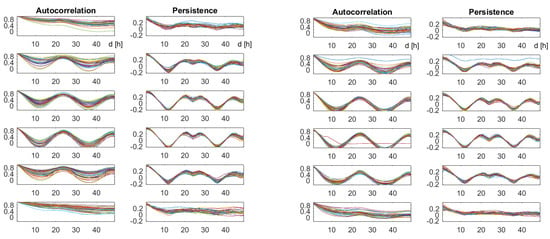

We now look for correlation functions that describe the underlying weather process. We calculated them bimonthly, and drew the curves for 35 years for the same season into one plot. When they agreed, they formed a nice description of the properties of the underlying process. Figure 6 compares classical autocorrelation with our persistence function.

Figure 6.

Autocorrelation and persistence for temperature (left part) and relative humidity (right part). The curves correspond to 35 consecutive years. The lag d runs from 1–49 h. Each row describes a two-month period, from January/February up to November/December.

4.2. Autocorrelation and Persistence

In winter (November to February), a day-night rhythm was not found, and all correlation functions differed greatly over the years. From March until October, the day-night rhythm was well recognized, both by autocorrelation and persistence, and both for temperature and humidity. Classical autocorrelation curves coincided best at the full period maximum at d = 24 h, while persistence accurately showed the minimum at the half period of 12 h. At the full period, there was a local minimum between two maximum points, which will be explained in the next section. Both functions succeeded in defining the basic period within each window of two months (1461 h of values). The coincidence over years seemed even better for persistence.

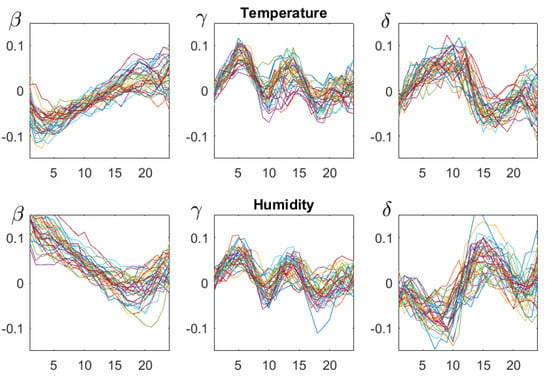

4.3. and

Is there more structure than the day-night rhythm? Figure 7 shows the functions and for the summer season (July–August). In summer, the air warms up fast and cools down slowly, which explains why was negative for small Humidity increases slowly and decreases fast, so was positive for small In view of Equation (11) below, the functions also showed the opposite behavior. This was found for all seasons. The structure of which was similar for temperature and humidity, was found only in spring and summer (April–August), and we have no interpretation for it. It seems that these functions can be used to characterize sites.

Figure 7.

The functions and for h, for July–August of 35 consecutive years. The upper row corresponds to temperature and the bottom row to relative humidity. Although there is considerable variation over the years, some common structures can be seen.

5. Properties of Correlation Functions

5.1. Two Pattern Identities

For fixed the basic equation for is:

The following equation is accurate for probabilities in a stationary process [9].

To show equality on the left, divide the probability into three terms, depending on whether is above, between, or below and For the equality on the right, we considered and summed up three cases for

5.2. Marginal Errors

If we calculate the frequencies for a time series with then is an average over To determine from the three patterns on the left, we averaged over When we took the three patterns on the right, we averaged over There was a marginal difference. Moreover, the exclusion of missing and equal values can be different for patterns of different lengths, as demonstrated above. Such marginal effects were the reason for the difference in the two expressions for in (3). Actually, (3) is a corollary of (10) and see [9].

As an example, we determined for Figure 3 the frequencies of the patterns 12 and 21, that is and For we had and for , we obtained When we determined we obtained If we used the definition in (3), we obtained

For and or 2, such marginal effects are really harmless. For large however, they can become larger since the margins of (10) will involve d time points on the right and left end of the time series. This can be a problem when we have a downward trend at the beginning of the series and an upward trend at the end. For EEG brain data, we helped ourselves by defining the parts of the large series not by equal length, but by zero crossings of the time series from the positive to the negative side [11]. Other data may require other solutions for improved estimates of pattern frequencies.

5.3. Classical Autocorrelation

The classical autocorrelation function (see [17,18]) measures the degree of coincidence of the time series with a copy shifted by d time steps. Thus, autocorrelation is large for small It will decrease, and the rate of decrease reflects the memory of the underlying process. If we have a periodic dynamics, autocorrelation will be large at the period, and small, mostly negative, at the half period. Moreover, the sign of can indicate an increasing or decreasing trend. The last point is not quite correct, because a trend will exclude stationarity, and then, strictly speaking, we cannot estimate autocorrelation.

5.4. Interpretation of

The direction of a trend is always indicated by and this can be consistent with the weak stationarity assumptions to estimate order pattern frequencies, for instance in the case of stationary increments with non-zero mean. The main purpose of as well as of and is the description of certain asymmetries in the process. The up-down balance is positive for small d if the process spends more time with increase than with decrease. When the process is stationary, this means that values will increase more slowly and decrease faster. The functions and are more difficult to interpret, but we can at least say that is tightly connected to since:

This follows from and from the definition of As in Section 2, the equation holds for pattern probabilities of a process and may have a marginal error for frequencies of a concrete time series. This equation justifies the name up-down scaling for

5.5. Persistence and Turning Rate

Persistence is the most common and most important of our functions. It says how often a relation or will persist in the next comparison of with . Up to the sign, it can replace autocorrelation. As shown in [10] (Section 4), it can be interpreted similarly, and is less susceptible to noise and nonlinear distortion. For small persistence measures the degree of smoothness of the time series. When we have a smooth function, then tends to the maximal value for We can also consider the complementary quantity:

which counts the frequencies of turning points (local maxima or minima) in the series. Obviously, is a measure of roughness, or variation [19].

In this paper, however, the functions were chosen so that they were all zero for white noise; and for the slightly more general case of an exchangeable process where all patterns of Figure 2 had the same probability In other words, white noise was the origin point of our coordinate system. This viewpoint was especially useful when we dealt with noisy signals, for instance EEG brain data. All our functions were the differences of pattern frequencies. For , we have:

Both (12) and (13) can be easily checked with (9). In the following, we systematically summarize the properties of autocorrelation functions. For the sake of completeness, the next tables include Spearman’s rank correlation, but not Kendall’s [20]. We think that for big time series, persistence better reflects Kendall’s idea to measure correlation by order comparison. This is why we used the letter

Table 1 shows the range of values for all correlation functions, adding for which series minimal and maximal values were attained. An alternating series changes up and down at each step, like , where the noise is added to avoid equal values. This works for not for continuous For and , we just gave an example series, which may give a feeling for what these functions measure.

Table 1.

Range and extreme cases for various correlation functions.

5.6. Symmetries of Order Functions

A function is even if and odd if = In the case of order correlation functions, even functions are those that assume the same values for a time series and its time-reversed series For odd functions, time reversal changes the sign of the correlation function. We can also ask for invariance under the change of sign of the series, which gives or for the combined change of sign and time reversal, The latter can be interpreted as a rotation of the graph of the series.

All autocorrelation behaved well under these symmetries. They either remained invariant, in which case we wrote a + in Table 2, or they changed sign, in which case we wrote Persistence is an even function like but our functions and were odd. This caused a discontinuity at unless the value was zero there. Actually, none of our correlation functions was defined for We simplified matters by restricting ourselves to strictly positive However, the problem will return when we consider periodic series.

Table 2.

Invariance of correlation functions under symmetries.

5.7. Periodicities

A time series is periodic with period L if for Theoretically, none of our correlation functions was then defined for because there were only equal values to compare. In reality, however, we had only approximate periodicity and could always determine values for Nevertheless, some phenomena occurred at and at half periods For a periodic time series, all autocorrelation functions were periodic with the same period; in practice, only approximately, since only part of the generating mechanism worked periodically. Therefore, it sufficed to consider and in Table 3.

Table 3.

Behavior of correlation functions of periodic series.

For an even function with period L, we had , and hence, That is, an even correlation function is mirror-symmetric with respect to the vertical line , as well as This is the case for and For an odd function with period L, we had , and hence, In particular, The graph of g then had a symmetry center at and at Both symmetry centers will be seen as zero crossings in the graphs of and even though theoretically, we may have a discontinuity at For noisy series, the study of symmetries of correlation functions can be quite helpful to recognize periodicities.

Let us now explain the behavior of persistence, which we have already seen in Figure 6. For we always had in a series with approximate period Thus, if was only a bit larger or smaller, we could not have the pattern 123 or 321. This means that had a clear minimum at If the noise level went to zero, the value would approach the absolute minimum This is the best way to determine a period with

At , the behavior was more complicated and different from the maximum of Assume that much of the time series consists of monotone pieces and is on an increasing branch. If we had the exact period L and took for a small , then , since the shifted values were further downwards on the repeating increasing branch. However, for with small positive , we had , since now the shifted values came further upwards on the repeating branch. Only for , we theoretically had and can say nothing. In practice, there will be some noise that disturbs the monotone branches and changes our conclusion for small Anyway, left and right of , the patterns 123 and 321 dominated, and we had two maxima of persistence there. However, for itself, the patterns 123 and 321 did not dominate anymore, and we obtained a local minimum of This is what we call a bumped maximum. The height and width of the bump decreased when the noise level went to zero. See Figure 6.

One can ask why among our four correlation functions, only was even and the other three were odd. Actually, we had six order patterns, so we could have three even and three odd functions. However, the patterns fulfilled the sum condition (9) and another condition, which directly follows from (10):

Both of these conditions are expressed by even functions of since the frequencies of a permutation and its reversal, like 213 and 312, have the same sign in the equation. Thus, we were left with four degrees of freedom for our pattern frequencies, and it is natural to obtain three odd correlation functions.

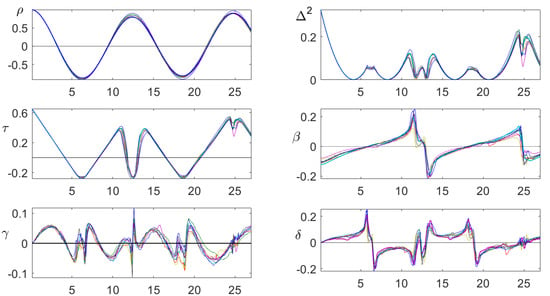

5.8. The Decomposition Theorem

Now, we show that and are an optimal choice of correlation functions, which explains in some way all the difference between our observed time series and white noise, a series of independent random numbers. The information given by these functions is largely independent, with the exception of dependence between and , which cannot be avoided. This will be made precise in a subsequent mathematical paper. Here, we argue that certain vectors corresponding to the functions are orthogonal, which can be the basis for ANOVA (analysis of variance) techniques.

Theorem 1

(Pythagoras theorem for order patterns of length three). For a process with stationary increments and an arbitrary lag the quadratic distance of pattern probabilities to white noise uniform pattern frequencies defined in (2) has the following representation:

Proof.

For arbitrary numbers , we have the following identity.

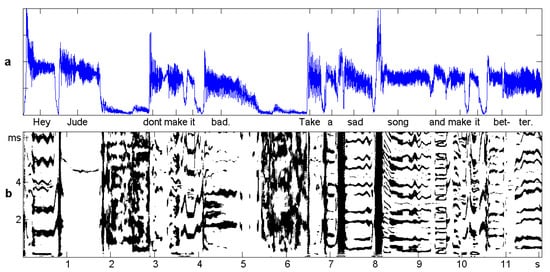

6. Case Study: Speech and Music

One potential field of application of our correlation functions is speech and music: speech recognition, speaker identification, emotional content of speech sounds, etc. This field is dominated by spectral techniques and by machine learning, and additional information on speech processes is certainly welcome. We are not going into detail, since we know this is hard work.Here, we just analyze the first 12 s of the song “Hey Jude” by The Beatles, to indicate what is possible.

Sliding Windows

The intensity of the signal is shown in Figure 8. Since music is sampled at 44 kHz, there are half a million amplitude values. For a rough analysis, we divided the large time series into 240 non-overlapping pieces, called windows, of length 50 ms. Thus, there were 240 time series of length for which we could determine the mean absolute amplitude and correlation functions. The delay d ran from ms to , which is 7 ms. The functions will not be drawn as curves, but as color-coded vertical sections of an image, which describes the whole dataset. The windows, or columns of the matrix, are numbered and the rows written as 0.7 ms.

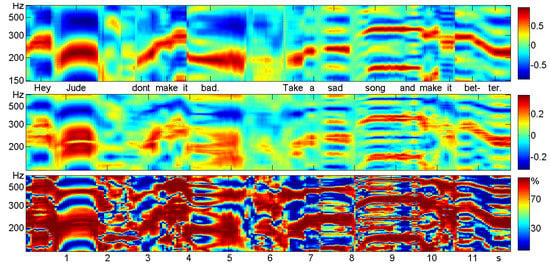

Figure 8.

Twelve seconds of the song “Hey Jude” by The Beatles. (a) The signal: mean of the absolute amplitude over non-overlapping windows of 50 ms. (b) The noisy places for which drawn in black. The vertical axis represents the lag considered as the wavelength, which ranges from 0–7 ms. Each column of the matrix corresponds to one window

As a first experiment, we chose the points for which They are colored black in Figure 8b. At these places, we could perhaps still confuse the signal with white noise; according to the simulations in [11], the p-value was larger than These places occupied 26% of the matrix, notably the “k” of “take” and “s” of “sad” and “song” with almost any For all the other , Theorem 1 says that some of our correlation functions are significant.

Sliding window analysis of speech and music and visualization in a matrix is well known from the spectrogram, where columns correspond to the Fourier spectrum of the windows. Since here, we were satisfied with the melody (the so-called pitch frequency of the singer), we took the correlogram instead and compared with persistence in Figure 9. For convenience, the d-scale was transformed into frequencies. So 500 Hertz corresponds to ms, and 200 Hz means ms. The maxima of and the bumped maxima of were very clear for all voiced sounds. They described the melody, and they did coincide.

Figure 9.

Correlogram (upper panel) and persistence (middle panel) of 12 s of “Hey Jude”. The scale of d was reverted and written as frequencies so that the melody could be read like musical notes. The bottom panel shows the percentage of , which is due to persistence.

The bottom panel of Figure 9 shows the percentage of that is due to persistence. Clearly, persistence was the dominating function, with 80% of at most places. However, there were also many places with 30% and less where the functions formed the larger part of Figure 10 shows some phonemes that may be characterized this way.

Figure 10.

Detail from Figure 9. The vowels of “Jude”, “don’t”, and the second syllable in “better” provide stationary parts of the time series lasting for 0.3, 0.1, and 0.3 s. Only 20 ms of each signal are shown in the top panel. Order correlation functions were calculated for six, resp. two, disjoint windows of a length of 50 ms and drawn for one pitch period, which equaled 4.5 ms for all three sounds.

7. Case Study: Tides

7.1. The Data

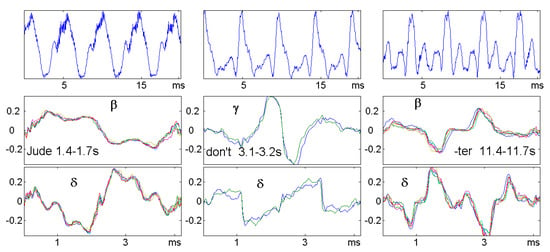

Tides of the oceans are a well-studied phenomenon, and tidal physics is a science that has developed over centuries. They form a good testbed for our correlation functions for two reasons. On the one hand, there are excellent data series of water levels at many stations, provided for the U.S. by the National Water Level Observation Network [21] over many years with 6-min intervals and few missing values. On the other hand, tides can be considered as an almost deterministic process driven by the interaction of the Moon, Sun, and Earth, with geographical site and coastal topography of the station as parameters. As Figure 11 shows, there are daily, monthly, and yearly scales of periodicities: tides at a place come two times a day, spring tides two times a month, etc.

Figure 11.

Tides form an almost deterministic process with periodicities on the scale of days, months, and years. Data from [21].

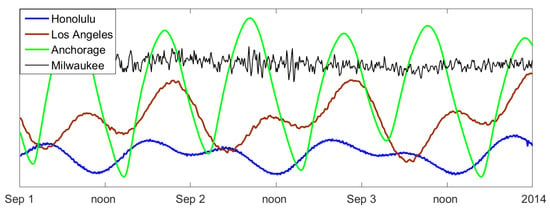

Since Anchorage, Alaska, is situated at the end of a narrow bay, the tidal range (difference between high tide and low tide) is 8 m. In Los Angeles, California, it is less than 2 m, and in Honolulu, Hawaii, it is only half a meter. Order patterns cannot distinguish the size of waves; we are only interested in their structure!Moreover, it makes no sense to compare zero levels of stations, so they were shifted for better visibility. For the sake of completeness, Figure 12 includes Milwaukee on Lake Michigan, where there are no tides and the differences are only 10 cm, caused by changes of wind and barometric pressure. This series shows what fluctuations arise when data represent random weather change.

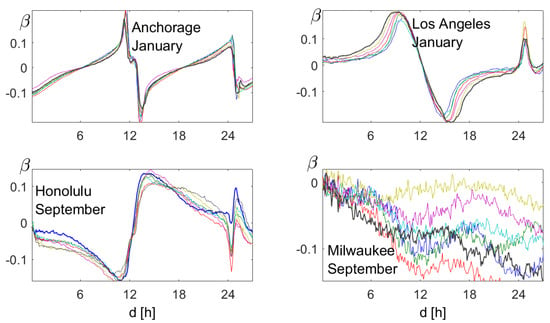

Figure 12.

Water levels for three days in 2014, measured every six minutes at different stations in the U.S. Data taken from [21]; shifted and scaled for better visibility.

7.2. Order Correlation

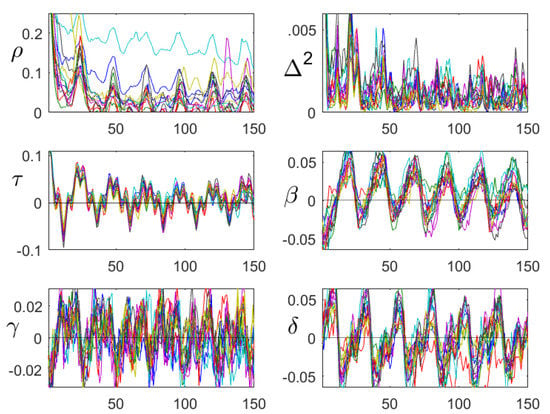

The functions given in Figure 13 showed that each of the ocean stations had its own profile, which did not change much in seven consecutive years. It did depend a bit on the season, however, so it was taken for one month in each year. Since we had 240 values per day, we could afford even shorter periods.

Figure 13.

Correlation functions for one month in seven consecutive years, at the four places of Figure 12. For a given month, each ocean station has its specific -profile.

Experience shows that water comes fast and goes slowly, so should be negative for small This is clearly true for Milwaukee, where no periodicity was found, and for Honolulu. For Anchorage and Los Angeles, it was the other way round. For the three ocean stations, had a zero-crossing for and a “discontinuity” at As explained in Section 5, this corresponds to the basic daily periodicity of 24.8 h (the difference from 24 is caused by the Moon).

Figure 14 shows all order correlations for Anchorage for the month January. Both the autocorrelation and persistence expressed the fact that there were two periodicities with periods of 12.5 and 25 h. For the maximum at 25 was larger than the first maximum at 12.5, while the minimum at 19 was not smaller than the first minimum at six. For persistence, the maximum at 25 had a small bump, while the maximum at 12.5 had a very large bump, since it was also a minimum corresponding to 25. The functions all showed a sharp structure that was preserved through consecutive years. This is no proof that they contain more information than , but it is an argument for further study of these functions.

Figure 14.

Classical autocorrelation and order correlation functions for the station at Anchorage in January. Persistence reflects the diurnal rhythm. The asymmetry functions all show a very specific structure, which remains stable through seven consecutive years. In contrast, does not seem to contain much structural information.

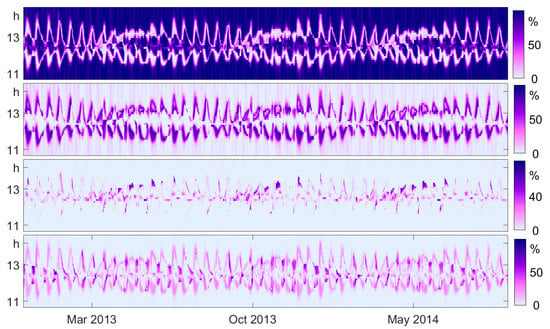

7.3. Relative Order Correlation

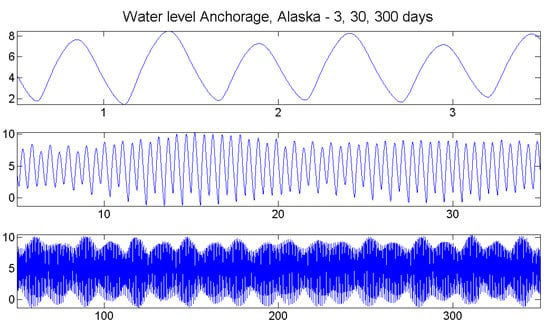

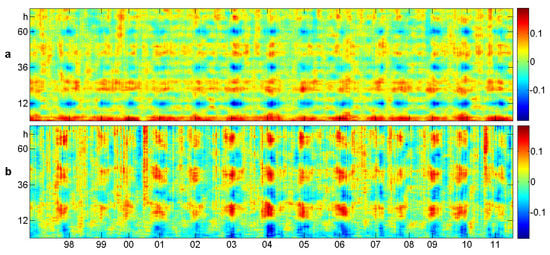

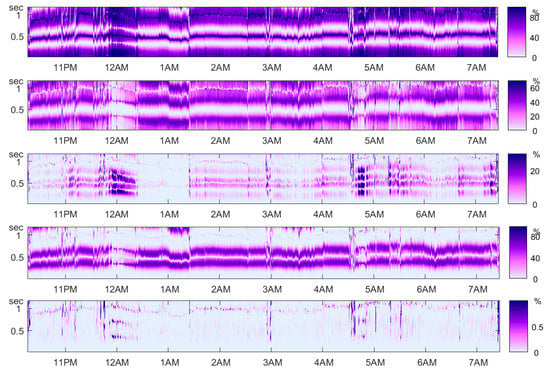

Let us check Theorem 1 with the tides data of Anchorage in Figure 15. Windows of length 1242 (five days and 4.2 h, just five main periods) were used for the years 2013 and 2014. For good resolution, the step size was half a day, so successive windows overlapped by 9/10 of their length. The lag d was restricted to the time between 10.5 and 14.5 h, around the first important period of 12.5 h where was large. Roughly speaking, the picture shows how the position and width of the main bump of in Figure 14 changed with time. There were small waves that described the bimonthly period and long waves describing biyearly periodicity.

Figure 15.

The division of into the components described in Theorem 1, illustrated for the tides at Anchorage in the years 2013 and 2014 in sliding window analysis. From top to bottom, the four panels correspond to the function , which takes the largest part of to , and

It can be seen how the different components fit together, with as the dominant component. The average value of was 76%, followed by 15% for 6% for and 2% for Since Theorem 1 holds for the probabilities of the process and here we have frequencies from data, there was a marginal error, as explained in Section 3. Since d was between 105 and 145, this error could be rather large. Here, the average error was 0.5%. There were very few places where the error was more than 1%, the maximum being 2%.

This example shows that the order correlation functions , and can also detect structure in data, even though they are squares and lack the information of the sign of the original functions. In the present case, as well as in Figure 1c, these relative quantities were more informative than the original ones.

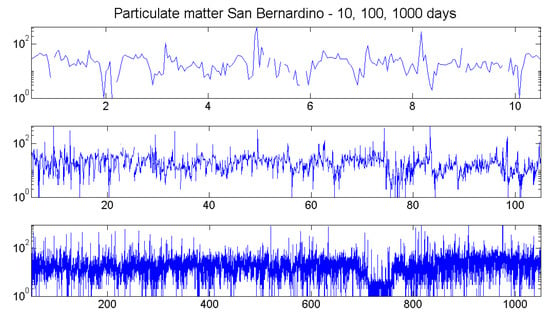

8. Case Study: Particulates

8.1. The Data

In the previous section, we had an almost deterministic process with excellent data. Now, we consider the opposite situation: measurements of particulates PM10 (aerodynamic diameter smaller than m). Such data, measured by a kind of vacuum cleaner with a light sensor for the dust, are notoriously noisy. Dust will not come uniformly; it will rather form clusters of various sizes. Although measurements can be taken several times in a second, they are averaged automatically at least over several minutes. In Figure 16, we consider hourly values from the public database [22] for Station 3215 at Trona-Athol in San Bernardino, California. They were transformed to logarithmic scale to reduce the influence of large values. Such a transform does not change order patterns.

Figure 16.

Particulate measurements are notoriously noisy. They show a weak daily and yearly rhythm, which can hardly be detected from the data. The PM10 measurements for Station 3215 Trona-Athol in San Bernardino, California, are from the public database [22].

There were 13% missing values, some of which can be detected in the upper panel of Figure 16. They affected the estimation of autocorrelation much more than the estimation of order correlation functions. This can be seen in Figure 17, where all functions were determined for 12 successive years. The variation was of course a bit larger than in the corresponding Figure 14 for the tides. The values of the order correlations were about three-times smaller than those for the tides. The important point, however, is the consistent structure of the order correlation functions. It was much better than classical autocorrelation or in the upper row. Persistence showed a loss of intensity from one. up to six days, though not as strong as autocorrelation. However, the structure of and even remained almost unchanged from Days 1–6.

Figure 17.

Correlation functions for hourly particulate measurements in San Bernardino [22] in 2000–2011. The 12 curves correspond to years and show the consistency of the correlation structure over the years. The order correlation functions keep the structure from Days 1–6 better than autocorrelation.

8.2. Sliding Windows Analysis

We took windows of length 1200, that is 50 days. Smaller windows do not provide sufficiently accurate estimates. Probably, the situation would be better if the data were measured every 6 min, like the tides above, even though there were larger variations. Figure 18 shows that the daily rhythm appears mainly in summer, not in winter, similar to temperature in Section 4. Thus, the curves of Figure 17 were obtained mainly from the summer measurements. The strength of daily rhythm and the “length of summer season” varied over the years.

Figure 18.

Sliding window analysis of (a) persistence and (b) up-down balance for hourly particulate measurements in San Bernardino 1997–2011 [22]. The lag d runs from 1–72, that is three days, on the vertical axis. Overlapping windows of 50 days were used. Daily rhythm is present mainly in summer, in both and

9. Brain and Heart Signals

9.1. The Data

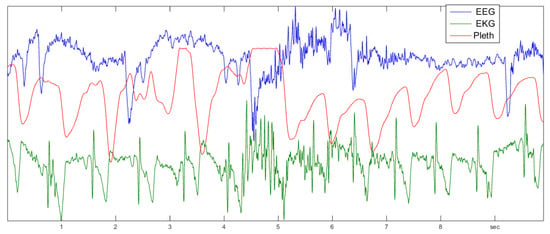

The examples in Section 4, Section 7, and Section 8 were presented as a proof of concept, with the idea to encourage readers to work out such applications more carefully. For biomedical data, however, the author has done a detailed study [11,13,19] of data published by Terzano et al. [23] at physionet [24], which seems very promising. Three types of data were considered, as indicated in Figure 19: the noisy EEG brain data (electroencephalogram), the well-known heart ECG (electrocardiogram), and the rather smooth plethysmogram, which measures the blood flow at the fingertip.

Figure 19.

Biomedical signals: 8 s of an electroencephalogram, an electrocardiogram, and a plethysmogram. Order correlation functions seem to apply to all of them.

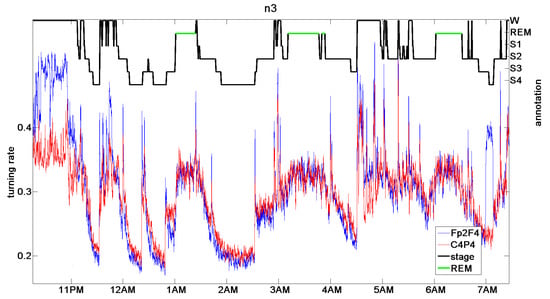

9.2. Sleep Stages

In [11], it was found that the function can distinguish sleep stages from the EEG data without any further processing. In [19], it was noted that it is actually the persistence, or turning rate, the main component of that can be taken as the measure of sleep depth. For all healthy control persons and a number of patients with different sleep problems, the results were as impressive as Figure 20, where manual annotation of sleep stages (wake, S1 to S4, REM sleep) by a medical expert and turning rate were almost parallel. The definition of sleep depth is a complicated and controversial issue among physicians, and a pure mathematical definition, even if it is not perfect, could be helpful.

Figure 20.

Coincidence of sleep stage annotation by a medical expert and turning rate for ms of two EEG channels [19]. Original data from Terzano et al. [23].

So far, the author has not been able reproduce this result with more recent data. Actually, there are huge databases of sleep data in different countries. However, these data are not of the same quality as those of [23] for two reasons. On the one hand, since there are so many data, they are sampled not at 500 Hz, but only at 200 Hz or less. On the other hand, EEG data are contaminated by the field of the electrical power net with a 50–60-Hz frequency. It is quite an effort to avoid this influence, especially in a hospital environment. Since conventional evaluation of EEG data considers s wavelength of at least 50 ms, experts are content with this type of data. The standard preprocessing is a low-pass filtering with a cutoff of 40 Hz, say. For determining the persistence or turning rate at ms, or frequency Hz, however, the 50-Hz noise of the power net is a real obstacle. This also holds for the ECG.

The plethysmogram is determined by a light sensor and can be measured without power noise contamination. Nevertheless, it is mostly measured at low frequencies like 30 Hz, because of its smooth appearance. Perhaps there is also a fine structure for higher resolution that can be studied by order methods. Figure 21 shows the validity of Theorem 1 for a plethysmogram of [23].

Figure 21.

Theorem 1 for the plethysmogram over a whole night. From top to bottom: The bottom panel contains the error of the equation of Theorem 1 on a scale from 0 up to 1%. Data of Person n3 from Terzano et al. [23].

10. Conclusions

Today’s time series of weather, geophysical and environmental observations, and from medical monitoring, are huge, covering several time scales of interest. Sliding windows analysis is an appropriate method, known from the spectrogram of speech data. Here we considered four order correlation functions instead of the spectrum. Their calculation is fast and simple, based on weak stationarity assumptions, and robust with respect to outliers and missing values. We studied the properties of the order correlation functions and demonstrated how they describe new aspects of the structure of the data.

Funding

Open access publishing was supported by Deutsche Forschungsgemeinschaft, Project Ba1332/11-1.

Acknowledgments

The author thanks Bernd Pompe for discussions over many years and for his opinion on the preprint [13], on which part of this work is based.

Conflicts of Interest

The author declares no conflict of interest.

References

- Amigo, J.; Keller, K.; Kurths, J. Recent progress in symbolic dynamics and permutation complexity. Ten years of permutation entropy. Eur. Phys. J. Spec. Top. 2013, 222, 247–257. [Google Scholar]

- Amigo, J.M. Permutation Complexity in Dynamical Systems; Springer Series in Synergetics; Springer: Berlin, Germany, 2010. [Google Scholar]

- Zanin, M.; Zunino, L.; Rosso, O.; Papo, D. Permutation entropy and its main biomedical and econophysics applications: A review. Entropy 2012, 14, 1553–1577. [Google Scholar] [CrossRef]

- Carpi, L.C.; Saco, P.M.; Rosso, O.A. Missing ordinal patterns in correlated noises. Phys. A 2010, 389, 2020–2029. [Google Scholar] [CrossRef]

- Martinez, J.H.; Herrera-Diestra, J.L.; Chavez, M. Detection of time reversibility in time series by ordinal patterns analysis. Chaos 2018, 28, 123111. [Google Scholar] [CrossRef] [PubMed]

- Zanin, M.; Rodríguez-González, A.; Menasalvas Ruiz, E.; Papo, D. Assessing time series reversibility through permutation patterns. Entropy 2018, 20, 665. [Google Scholar]

- Parlitz, U.; Berg, S.; Luther, S.; Schirdewan, A.; Kurths, J.; Wessel, N. Classifying cardiac biosignals using ordinal pattern statistics and symbolic dynamics. Comput. Biol. Med. 2012, 42, 319–327. [Google Scholar] [CrossRef] [PubMed]

- McCullough, M.; Small, M.; Iu, H.; Stemler, T. Multiscale ordinal network analysis of human cardiac dynamics. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2017, 375, 20160292. [Google Scholar] [CrossRef]

- Bandt, C.; Shiha, F. Order patterns in time series. J. Time Ser. Anal. 2007, 28, 646–665. [Google Scholar] [CrossRef]

- Bandt, C. Permutation entropy and order patterns in long time series. In Time Series Analysis and Forecasting; Rojas, I., Pomares, H., Eds.; Contributions to Statistics; Springer: Berlin, Germany, 2015. [Google Scholar]

- Bandt, C. A new kind of permutation entropy used to classify sleep stages from invisible EEG microstructure. Entropy 2017, 19, 197. [Google Scholar] [CrossRef]

- Bandt, C.; Pompe, B. Permutation entropy: A natural complexity measure for time series. Phys. Rev. Lett. 2001, 88, 174102. [Google Scholar] [CrossRef] [PubMed]

- Bandt, C. Autocorrelation type functions for big and dirty data series. arXiv 2014, arXiv:1411.3904. [Google Scholar]

- Rosso, O.; Larrondo, H.; Martin, M.T.; Plastino, A.; Fuentes, M. Distinguishing Noise from Chaos. Phys. Rev. Lett. 2007, 99, 154102. [Google Scholar] [CrossRef] [PubMed]

- López-Ruiz, R.; Nagy, Á.; Romera, E.; Sañudo, J. A generalized statistical complexity measure: Applications to quantum systems. J. Math. Phys. 2009, 50, 123528. [Google Scholar] [CrossRef]

- Deutscher Wetterdienst. Climate Data Center. Available online: ftp://ftp-cdc.dwd.de/pub/CDC/observations_germany (accessed on 20 May 2019).

- Brockwell, P.; Davies, R. Time Series, Theory and Methods, 2nd ed.; Springer: New York, NY, USA, 1991. [Google Scholar]

- Shumway, R.; Stoffer, D. Time Series Analysis and Its Applications, 2nd ed.; Springer: New York, NY, USA, 2006. [Google Scholar]

- Bandt, C. Crude EEG parameter provides sleep medicine with well-defined continuous hypnograms. arXiv 2017, arXiv:1710.00559. [Google Scholar]

- Ferguson, S.; Genest, C.; Hallin, M. Kendall’s tau for serial dependence. Can. J. Stat. 2000, 28, 587–604. [Google Scholar] [CrossRef]

- National Oceanic and Atmospheric Administration. National Water Level Observation Network. Available online: https://www.tidesandcurrents.noaa.gov/nwlon.html (accessed on 20 May 2019).

- California Air Resources Board. Available online: www.arb.ca.gov/adam (accessed on 20 May 2019).

- Terzano, M.; Parrino, L.; Sherieri, A.; Chervin, R.; Chokroverty, S.; Guilleminault, C.; Hirshkowitz, M.; Mahowald, M.; Moldofsky, H.; Rosa, A.; et al. Atlas, rules, and recording techniques for the scoring of cyclic alternating pattern (CAP) in human sleep. Sleep Med. 2001, 2, 537–553. [Google Scholar] [CrossRef]

- Goldberger, A.; Amaral, L.; Glass, L.; Hausdorff, J.; Ivanov, P.; Mark, R.; Mietus, J.; Moody, G.; Peng, C.K.; Stanley, H. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220. [Google Scholar] [CrossRef] [PubMed]

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).