Information Theoretic Security for Broadcasting of Two Encrypted Sources under Side-Channel Attacks †

Abstract

1. Introduction

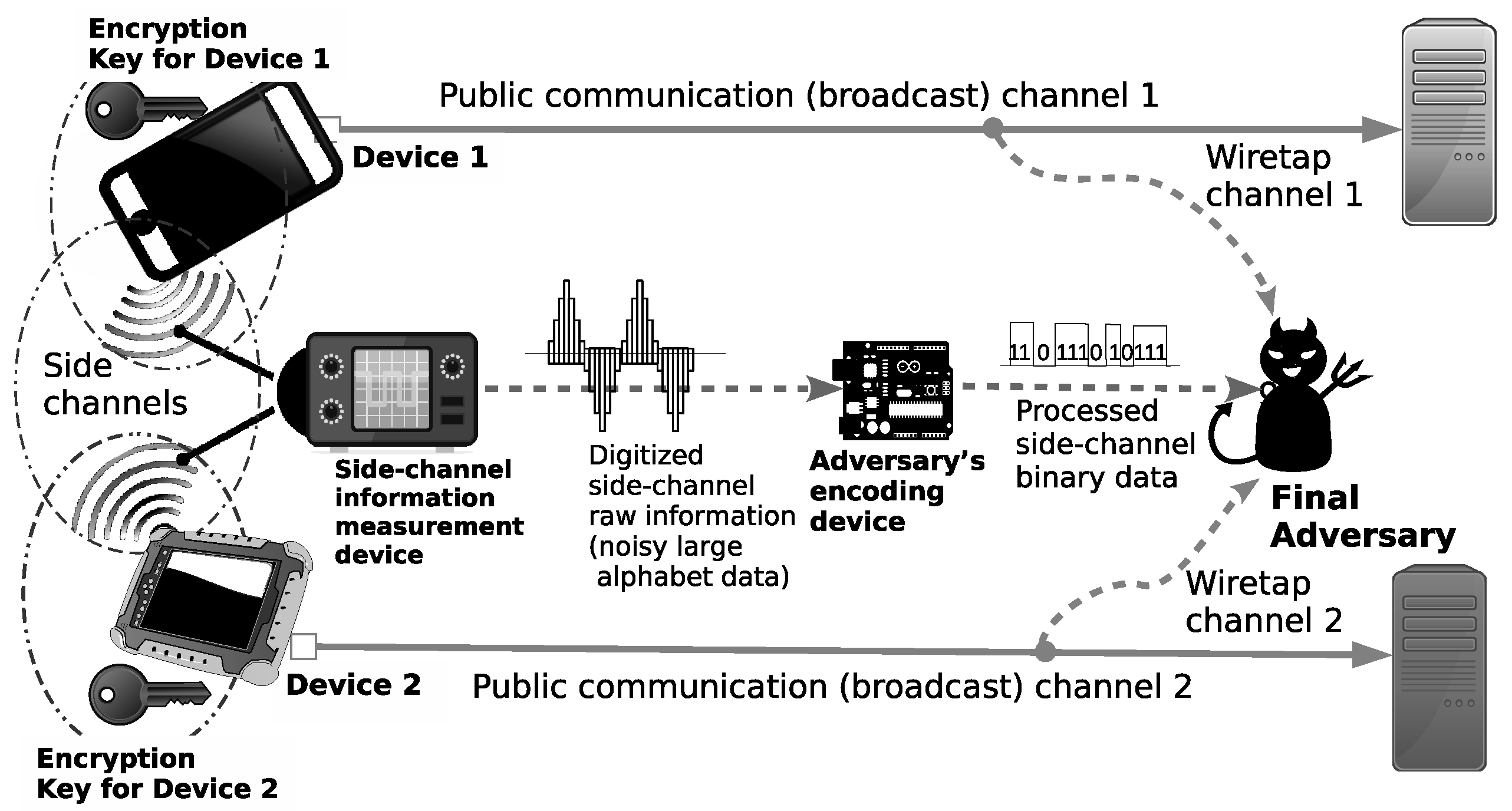

1.1. Modelling Side-Channel Attacks

1.2. Our Results and Methodology in Brief

- anyone holding the two secret keys can construct appropriate decoders that decrypt and decode the two reencoded ciphertexts into the original sources with exponentially decaying error probability, and

- the amount of information on the two sources gained by any adversary who collects the reencoded ciphertexts and the encoded side-channel information decays exponentially to zero, as long as the side-channel encoding device encodes Z at a rate inside the achievable rate region.

1.3. Related Works

1.4. Organization of This Paper

2. Problem Formulation

2.1. Preliminaries

2.2. Basic System Description

- The two random keys are generated from a uniform distribution and may be correlated with each other.

- The sources and , respectively, are generated by and . Those are independent from the keys.

- Separate Sources Processing: For each , at the node , is encrypted with the key using the encryption function . The ciphertext of is given by

- Transmission: The ciphertexts and , respectively, are sent to the information processing center and through two public communication channels. Meanwhile, the keys and , respectively are sent to and through two private communication channels.

- Sink Nodes Processing: For each , in , we decrypt the ciphertext using the key through the corresponding decryption procedure defined by . It is obvious that we can correctly reproduce the source output from and by the decryption function .

- The two random pairs , and the random variable Z, satisfy , which implies that .

- By side-channel attacks, the adversary can access .

- The adversary , having accessed , obtains the encoded additional information . For each , the adversary can design .

- The sequence must be upper bounded by a prescribed value. In other words, the adversary must use such that, for some and for any sufficiently large n, .

- Encoding at Source node : For each , we first use to encode the ciphertext . A formal definition of is . Let . Instead of sending , we send to the public communication channel.

- Decoding at Sink Nodes : For each , receives from a public communication channel. Using common key and the decoder function , outputs an estimation of .

3. Proposed Idea: Affine Encoder as Privacy Amplifier

- Encoding at Source node : First, we use to encode the ciphertext . Let . Then, instead of sending , we send to the public communication channel. By the affine structure (3) of the encoder, we have that where we set

- Decoding at Sink Node : First, using the linear encoder , encodes the key received through private channel into . Receiving from public communication channel, computes in the following way. From (4), we have that the decoder can obtain by subtracting from . Finally, outputs by applying the decoder to as follows:
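The encoding and decoding steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the construction analysed in the paper: the alphabet is taken to be GF(2), encryption is assumed to be a bitwise one-time pad, the matrix `A` and offset `B` are hypothetical toy choices (the transpose of a Hamming(7,4) parity-check matrix and an arbitrary vector), and `decode` is a brute-force minimum-weight decoder that is only guaranteed to recover source blocks of Hamming weight at most one. The names `send` and `receive` are illustrative as well. The sink here removes both the linearly encoded key and the offset; whether the offset is folded into the encoded key is a bookkeeping choice assumed for this sketch.

```python
from itertools import product

N, M = 7, 3  # block length and encoded length (hypothetical toy sizes)

# Row i of A is the binary expansion of i+1, i.e. A is the transpose of a
# Hamming(7,4) parity-check matrix (an assumed toy choice, N x M over GF(2)).
A = [[(i >> j) & 1 for j in range(M)] for i in range(1, N + 1)]
B = [1, 0, 1]  # offset of the affine encoder (arbitrary illustrative value)


def xor(u, v):
    """Componentwise addition over GF(2); subtraction is the same operation."""
    return [a ^ b for a, b in zip(u, v)]


def linear_encode(x):
    """Linear encoder: x -> x A over GF(2)."""
    return [sum(x[i] & A[i][j] for i in range(N)) % 2 for j in range(M)]


def affine_encode(c):
    """Affine encoder: c -> c A + b over GF(2)."""
    return xor(linear_encode(c), B)


def decode(s):
    """Brute-force decoder: the lowest-weight x with x A = s."""
    best = None
    for x in product([0, 1], repeat=N):
        if linear_encode(x) == s and (best is None or sum(x) < sum(best)):
            best = x
    return list(best)


def send(x, k):
    """Source node: one-time-pad encryption followed by affine encoding."""
    ciphertext = xor(x, k)
    return affine_encode(ciphertext)


def receive(c_tilde, k):
    """Sink node: strip the encoded key and the offset, then decode."""
    k_tilde = linear_encode(k)
    s = xor(xor(c_tilde, k_tilde), B)  # equals linear_encode(x)
    return decode(s)


x = [0, 0, 0, 0, 1, 0, 0]  # sparse source block (weight one)
k = [1, 0, 1, 1, 0, 0, 1]  # key block shared over the private channel
recovered = receive(send(x, k), k)
print(recovered == x)
```

The sketch only exercises the algebra of the scheme: the affine encoder applied to the ciphertext splits into the linear encoding of the source plus terms that depend on the key and the offset alone. It says nothing about the leakage analysis.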

4. Main Results

- (a)

- The region is a closed convex subset of . The region is a closed convex subset of .

- (b)

- The bound is sufficient to describe .

- On the reliability, for , goes to zero exponentially as n tends to infinity, and its exponent is lower bounded by the function .

- On the security, for any satisfying , the information leakage on goes to zero exponentially as n tends to infinity, and its exponent is lower bounded by the function .

- For each , any code that attains the exponent function is a universal code that depends only on not on the value of the distribution .

Examples of Extremal Cases

5. Proofs of the Main Results

5.1. Types of Sequences and Their Properties

- (a)

- (b)

- For ,

- (c)

- For ,
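To make the notion of a type concrete, the sketch below computes the type (empirical distribution) of a short sequence and checks, by exhaustive enumeration, two standard counting facts from the method of types: the number of types of length-n sequences is at most (n+1)^|X|, and the size of a type class with type P lies between (n+1)^(-|X|) 2^(nH(P)) and 2^(nH(P)). That these are the properties listed above is an assumption; the alphabet, block length, and helper name `counts_of` are hypothetical choices for illustration.

```python
from collections import Counter
from itertools import product
from math import log2

ALPHABET = (0, 1, 2)  # hypothetical ternary alphabet
n = 6                 # block length, small enough to enumerate all sequences


def counts_of(seq, alphabet):
    """Symbol counts of seq; dividing by len(seq) gives its type."""
    c = Counter(seq)
    return tuple(c[a] for a in alphabet)


seq = (0, 0, 0, 1, 1, 2)
target = counts_of(seq, ALPHABET)  # counts (3, 2, 1), i.e. type (1/2, 1/3, 1/6)

# Group all |X|^n sequences by their type.
all_counts = Counter(
    counts_of(s, ALPHABET) for s in product(ALPHABET, repeat=n)
)
num_types = len(all_counts)      # number of distinct types
class_size = all_counts[target]  # size of the type class containing seq

# Entropy (in bits) of the type of seq.
entropy = -sum((c / n) * log2(c / n) for c in target if c > 0)

poly = (n + 1) ** len(ALPHABET)
upper = 2 ** (n * entropy)

print(num_types, class_size)
print(num_types <= poly)
print(upper / poly <= class_size <= upper)
```

The polynomial factor (n+1)^|X| is what lets exponents computed per type class carry over to whole sequences in arguments of this kind.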

5.2. Upper Bounds on Reliability and Security

5.3. Random Coding Arguments

- (a)

- For any with , we have

- (b)

- For any , and for any , we have

- (c)

- For any with , and for any , we have

5.4. Explicit Upper Bound of

6. Alternative Formulation

6.1. Explanation on and and Their Comparison

6.2. Reliability and Security of Alternative Formulation

- is the one-time-pad encryption function defined as for ,

- is an affine encoder constructed based on a linear encoder as shown in Section 5.3,

- is the one-time-pad decryption function defined as for ,

- is a decoder function for the linear encoder which is associated with the affine encoder . See Section 5.3 for the detailed construction.
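The one-time-pad encryption and decryption functions in the list above can be illustrated as follows. This is a sketch under assumptions: symbols are taken from the integers modulo a hypothetical alphabet size `Q`, encryption is componentwise addition of the key, and decryption is componentwise subtraction. The function names are illustrative and not taken from the paper.

```python
Q = 5  # hypothetical alphabet size; symbols are 0, ..., Q-1


def otp_encrypt(x, k):
    """One-time-pad encryption: add the key componentwise modulo Q."""
    return [(a + b) % Q for a, b in zip(x, k)]


def otp_decrypt(c, k):
    """One-time-pad decryption: subtract the key componentwise modulo Q."""
    return [(a - b) % Q for a, b in zip(c, k)]


x = [1, 4, 0, 3]  # source block
k = [2, 3, 4, 4]  # key block of the same length
c = otp_encrypt(x, k)
print(c)
print(otp_decrypt(c, k) == x)

# For a fixed source symbol, running over all key symbols produces every
# ciphertext symbol exactly once, so a uniform key gives a uniform ciphertext.
covers_all = all(
    sorted((a + key) % Q for key in range(Q)) == list(range(Q))
    for a in range(Q)
)
print(covers_all)
```

The last check is the usual reason a uniformly distributed key hides the source symbol in the ciphertext alone; it does not model what an adversary learns about the key through a side channel.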

7. Comparison to Previous Results

8. Discussion on the Outer-Bounds of Rate Regions and Open Problems

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Computation of

Appendix B. Computation of

Appendix C. Proof of Lemma 4

Appendix D. Proof of Lemma 7

Appendix E. Proof of Lemma 8

References

- Kocher, P.C. Timing Attacks on Implementations of Diffie-Hellman, RSA, DSS, and Other Systems. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 1996; Volume 1109, pp. 104–113.

- Kocher, P.C.; Jaffe, J.; Jun, B. Differential Power Analysis. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 15–19 August 1999; Volume 1666, pp. 388–397.

- Agrawal, D.; Archambeault, B.; Rao, J.R.; Rohatgi, P. The EM Side-Channel(s). In Cryptographic Hardware and Embedded Systems-CHES 2002; Kaliski, B.S., Koç, Ç.K., Paar, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 29–45.

- Santoso, B.; Oohama, Y. Information Theoretic Security for Shannon Cipher System under Side-Channel Attacks. Entropy 2019, 21, 469.

- Santoso, B.; Oohama, Y. Secrecy Amplification of Distributed Encrypted Sources with Correlated Keys using Post-Encryption-Compression. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3042–3056.

- Santoso, B.; Oohama, Y. Privacy amplification of distributed encrypted sources with correlated keys. In Proceedings of the 2017 IEEE International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; pp. 958–962.

- Oohama, Y.; Santoso, B. Information theoretical analysis of side-channel attacks to the Shannon cipher system. In Proceedings of the 2018 IEEE International Symposium on Information Theory (ISIT), Vail, CO, USA, 17–22 June 2018; pp. 581–585.

- Csiszár, I. Linear Codes for Sources and Source Networks: Error Exponents, Universal Coding. IEEE Trans. Inf. Theory 1982, 28, 585–592.

- Oohama, Y. Intrinsic Randomness Problem in the Framework of Slepian-Wolf Separate Coding System. IEICE Trans. Fundam. 2007, 90, 1406–1417.

- Santoso, B.; Oohama, Y. Post Encryption Compression with Affine Encoders for Secrecy Amplification in Distributed Source Encryption with Correlated Keys. In Proceedings of the 2018 International Symposium on Information Theory and Its Applications (ISITA), Singapore, 28–31 October 2018; pp. 769–773.

- Oohama, Y. Exponent function for one helper source coding problem at rates outside the rate region. In Proceedings of the 2015 IEEE International Symposium on Information Theory (ISIT), Hong Kong, China, 14–19 June 2015; pp. 1575–1579. Available online: https://arxiv.org/pdf/1504.05891.pdf (accessed on 17 January 2019).

- Johnson, M.; Ishwar, P.; Prabhakaran, V.; Schonberg, D.; Ramchandran, K. On compressing encrypted data. IEEE Trans. Signal Process. 2004, 52, 2992–3006.

- Maurer, U.; Wolf, S. Unconditionally Secure Key Agreement and The Intrinsic Conditional Information. IEEE Trans. Inf. Theory 1999, 45, 499–514.

- Maurer, U.; Wolf, S. Information-Theoretic Key Agreement: From Weak to Strong Secrecy for Free. In Proceedings of the International Conference on the Theory and Applications of Cryptographic Techniques, Bruges, Belgium, 14–18 May 2000; Volume 1807, pp. 351–368.

- Brier, E.; Clavier, C.; Olivier, F. Correlation Power Analysis with a Leakage Model. In Cryptographic Hardware and Embedded Systems-CHES 2004; Joye, M., Quisquater, J.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 16–29.

- Coron, J.; Naccache, D.; Kocher, P.C. Statistics and secret leakage. ACM Trans. Embed. Comput. Syst. 2004, 3, 492–508.

- Köpf, B.; Basin, D.A. An information-theoretic model for adaptive side-channel attacks. In Proceedings of the 14th ACM Conference on Computer and Communications Security, Alexandria, VA, USA, 29 October–2 November 2007; pp. 286–296.

- Backes, M.; Köpf, B. Formally Bounding the Side-Channel Leakage in Unknown-Message Attacks. In Proceedings of the European Symposium on Research in Computer Security, Málaga, Spain, 6–8 October 2008; Volume 5283, pp. 517–532.

- Micali, S.; Reyzin, L. Physically Observable Cryptography (Extended Abstract). In Proceedings of the Theory of Cryptography Conference, Cambridge, MA, USA, 19–21 February 2004; Volume 2951, pp. 278–296.

- Standaert, F.; Malkin, T.; Yung, M. A Unified Framework for the Analysis of Side-Channel Key Recovery Attacks. In Proceedings of the Annual International Conference on the Theory and Applications of Cryptographic Techniques, Cologne, Germany, 26–30 April 2009; Volume 5479, pp. 443–461.

- de Chérisey, E.; Guilley, S.; Rioul, O.; Piantanida, P. An Information-Theoretic Model for Side-Channel Attacks in Embedded Hardware. In Proceedings of the 2019 IEEE International Symposium on Information Theory, Paris, France, 7–12 July 2019.

- de Chérisey, E.; Guilley, S.; Rioul, O.; Piantanida, P. Best Information is Most Successful: Mutual Information and Success Rate in Side-Channel Analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2019, 2019, 49–79.

- Ahlswede, R.; Körner, J. Source Coding with Side Information and A Converse for The Degraded Broadcast Channel. IEEE Trans. Inf. Theory 1975, 21, 629–637.

- El Gamal, A.; Kim, Y.H. Network Information Theory; Cambridge University Press: Cambridge, UK, 2011.

- Csiszár, I.; Körner, J. Information Theory, Coding Theorems for Discrete Memoryless Systems, 2nd ed.; Cambridge University Press: Cambridge, UK, 2011.

- Oohama, Y.; Santoso, B. Information Theoretic Security for Side-Channel Attacks to The Shannon Cipher System. Preprint. 2018. Available online: https://arxiv.org/pdf/1801.02563.pdf (accessed on 18 January 2019).

- Oohama, Y.; Han, T.S. Universal coding for the Slepian-Wolf data compression system and the strong converse theorem. IEEE Trans. Inf. Theory 1994, 40, 1908–1919.

- Yamamoto, H. Coding theorems for Shannon’s cipher system with correlated source outputs, and common information. IEEE Trans. Inf. Theory 1994, 40, 85–95.

| | Network System | Side-Channel Adversary | Correlated Keys |
|---|---|---|---|
| Previous work 1 [5,6] | Distributed Encryption (2 senders, 2 receivers) | No | Yes |
| Previous work 2 [4,7] | Two Terminals (1 sender, 1 receiver) | Yes | No |
| This paper | Broadcast Encryption (1 sender, 2 receivers) | Yes | Yes |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Santoso, B.; Oohama, Y. Information Theoretic Security for Broadcasting of Two Encrypted Sources under Side-Channel Attacks †. Entropy 2019, 21, 781. https://doi.org/10.3390/e21080781
