Real-Time Motion Tracking for Indoor Moving Sphere Objects with a LiDAR Sensor

Abstract

1. Introduction

1.1. Application of LiDAR

1.2. Tracking Algorithms Based on LiDAR

1.3. Application of Kalman Filter

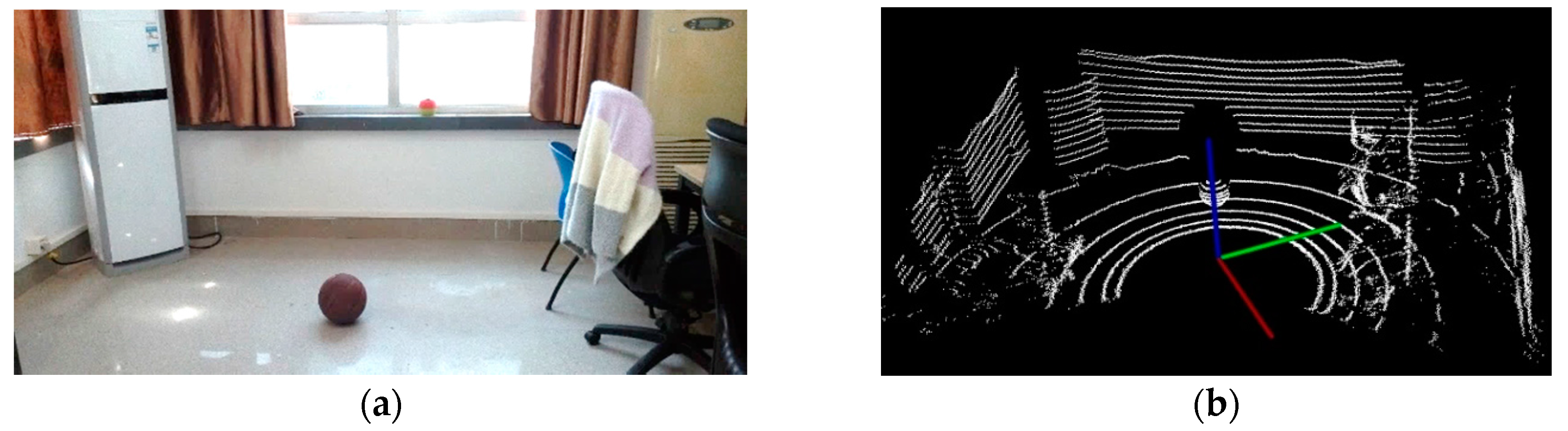

2. Detection of Moving Spherical Object

2.1. The Velodyne System

2.2. Outliers and Noise Filtering

2.3. Fast Ground Segmentation

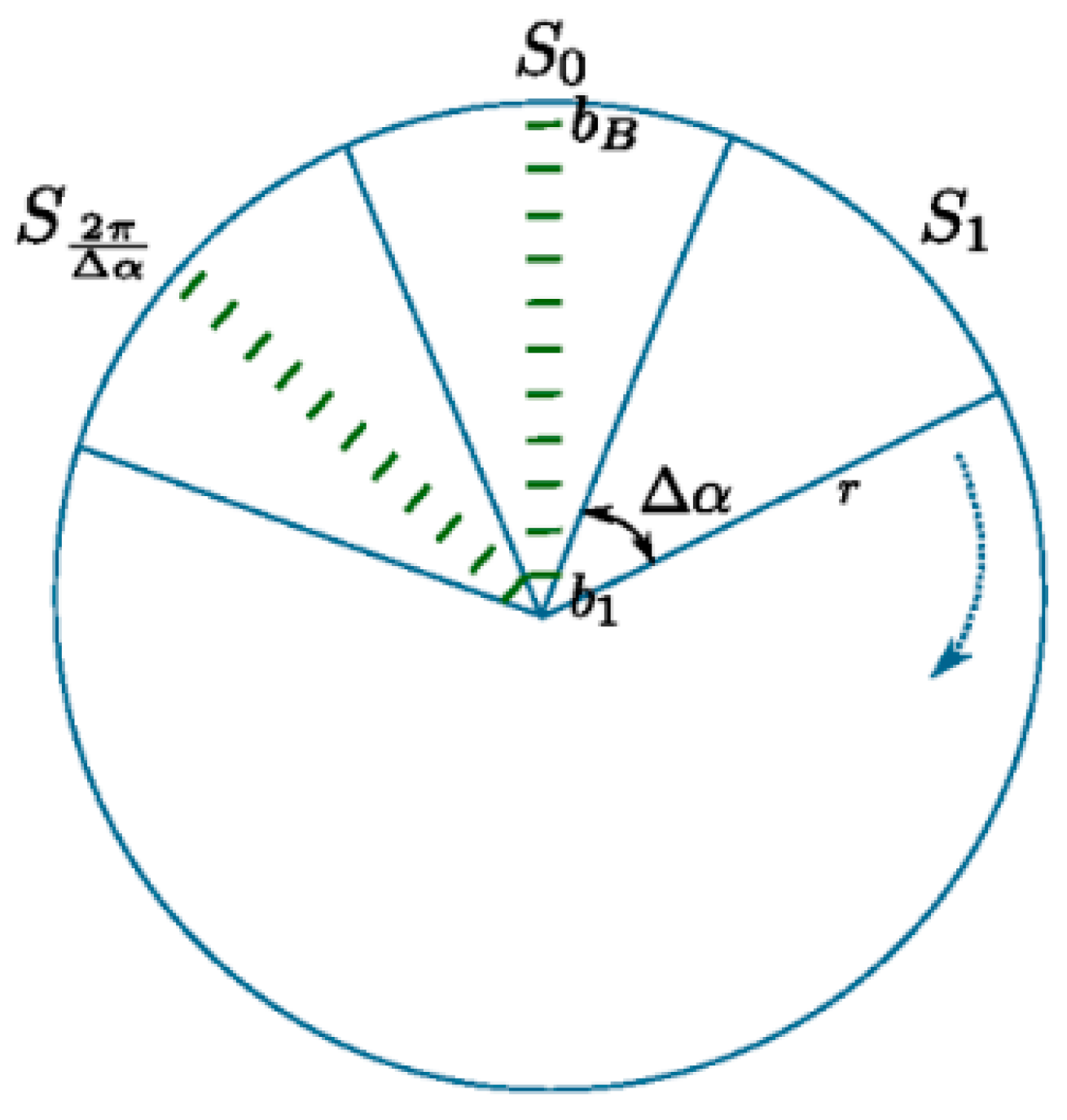

2.3.1. 3D Point-Cloud Data Set Mapping

- Define the unordered 3D point cloud obtained in one scan of the LiDAR sensor as $P = \{p_1, p_2, \ldots, p_N\}$, where $N$ denotes the number of 3D points. Each $p_i = (x_i, y_i, z_i)$ denotes a 3D point, given by its Euclidean coordinates in the ego-coordinate system whose origin is at the center of the LiDAR sensor.

- The x-o-y plane is treated as a circle of radius $R$, which is cut equally into multiple discrete sectors, as shown in Figure 4. $\Delta\alpha$ denotes the angle of each sector, so the number of sectors is $M = \lceil 2\pi / \Delta\alpha \rceil$.

- $S_k$ represents each sector, where $k = 0, 1, \ldots, M-1$, so that each point can be classified into a sector according to its projection onto the x-o-y plane, expressed as a segment index:

$$\mathrm{segment}(p_i) = \left\lfloor \frac{\operatorname{atan2}(y_i, x_i)}{\Delta\alpha} \right\rfloor,$$

where $\operatorname{atan2}(y_i, x_i)$ represents the angle within $[0, 2\pi)$ between the positive direction of the x-axis and the projection of $p_i$ onto the x-o-y plane, $y_i$ and $x_i$ are the y- and x-values of $p_i$, and $\Delta\alpha$ is the angle of each sector.
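The sector assignment can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' code: the helper names `num_sectors`, `segment_index` and `assign_sectors` are hypothetical, and the 5° sector angle in the usage lines is an assumed value.

```python
import math

def num_sectors(delta_alpha: float) -> int:
    """Number of sectors M needed to cover [0, 2*pi) with sector angle delta_alpha."""
    # The tiny tolerance absorbs round-off when delta_alpha divides 2*pi exactly.
    return math.ceil(2.0 * math.pi / delta_alpha - 1e-9)

def segment_index(x: float, y: float, delta_alpha: float) -> int:
    """Sector index of a point, computed from its projection (x, y) on the x-o-y plane."""
    angle = math.atan2(y, x)        # atan2 returns a value in (-pi, pi]
    if angle < 0.0:
        angle += 2.0 * math.pi      # shift into [0, 2*pi)
    # Clamp in case round-off pushes the shifted angle up to exactly 2*pi.
    return min(int(angle // delta_alpha), num_sectors(delta_alpha) - 1)

def assign_sectors(points, delta_alpha):
    """Group (x, y, z) points into a dict mapping sector index -> list of points."""
    sectors = {}
    for x, y, z in points:
        sectors.setdefault(segment_index(x, y, delta_alpha), []).append((x, y, z))
    return sectors

if __name__ == "__main__":
    delta = math.radians(5.0)               # assumed sector angle of 5 degrees
    print(num_sectors(delta))               # 72
    print(segment_index(0.2, 1.0, delta))   # about 78.7 degrees  -> 15
    print(segment_index(1.0, -0.5, delta))  # about 333.4 degrees -> 66
```

Shifting negative `atan2` results by $2\pi$ is what places every point in the $[0, 2\pi)$ range stated in the text, so sector indices run from 0 to $M-1$.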

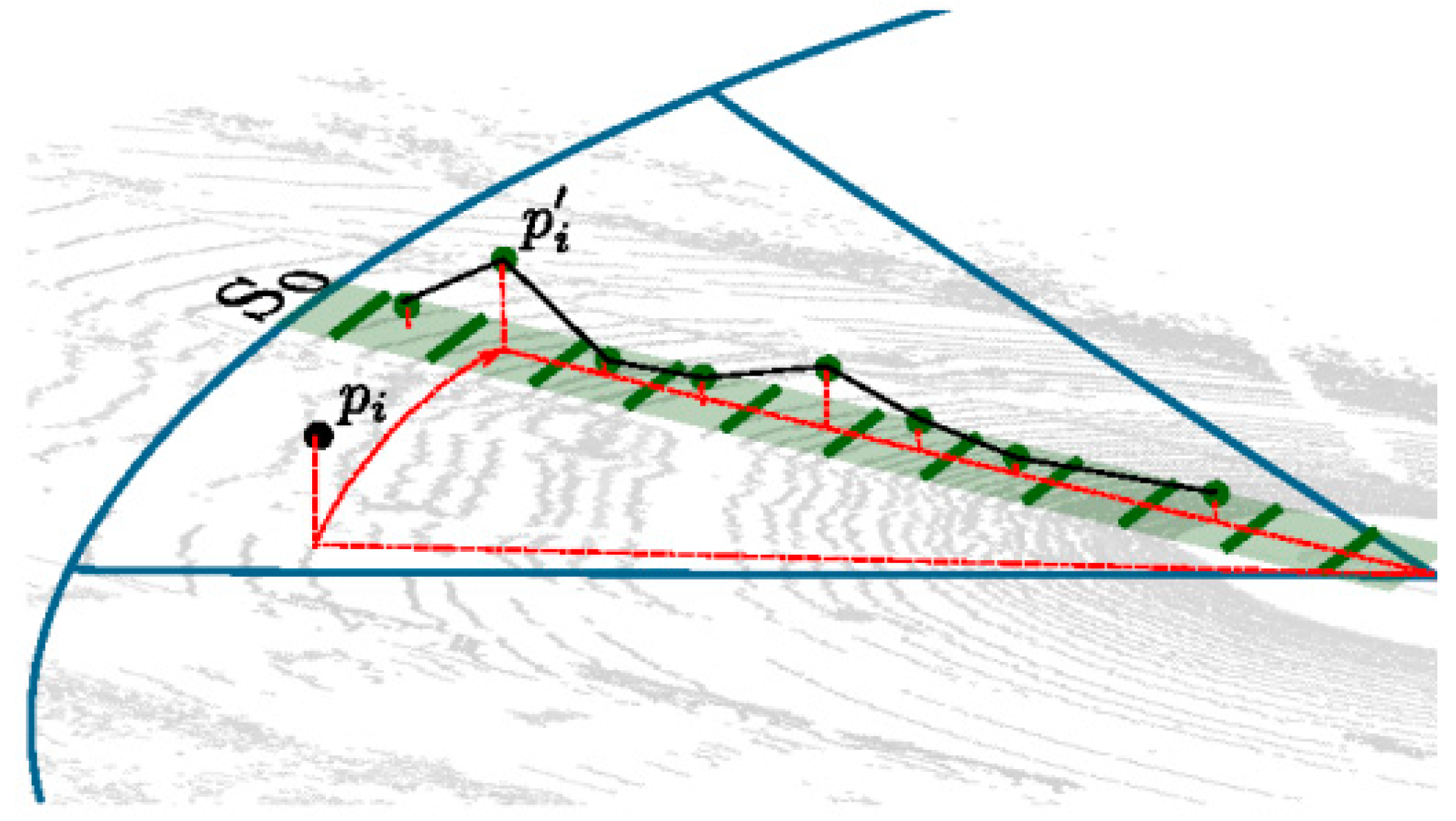

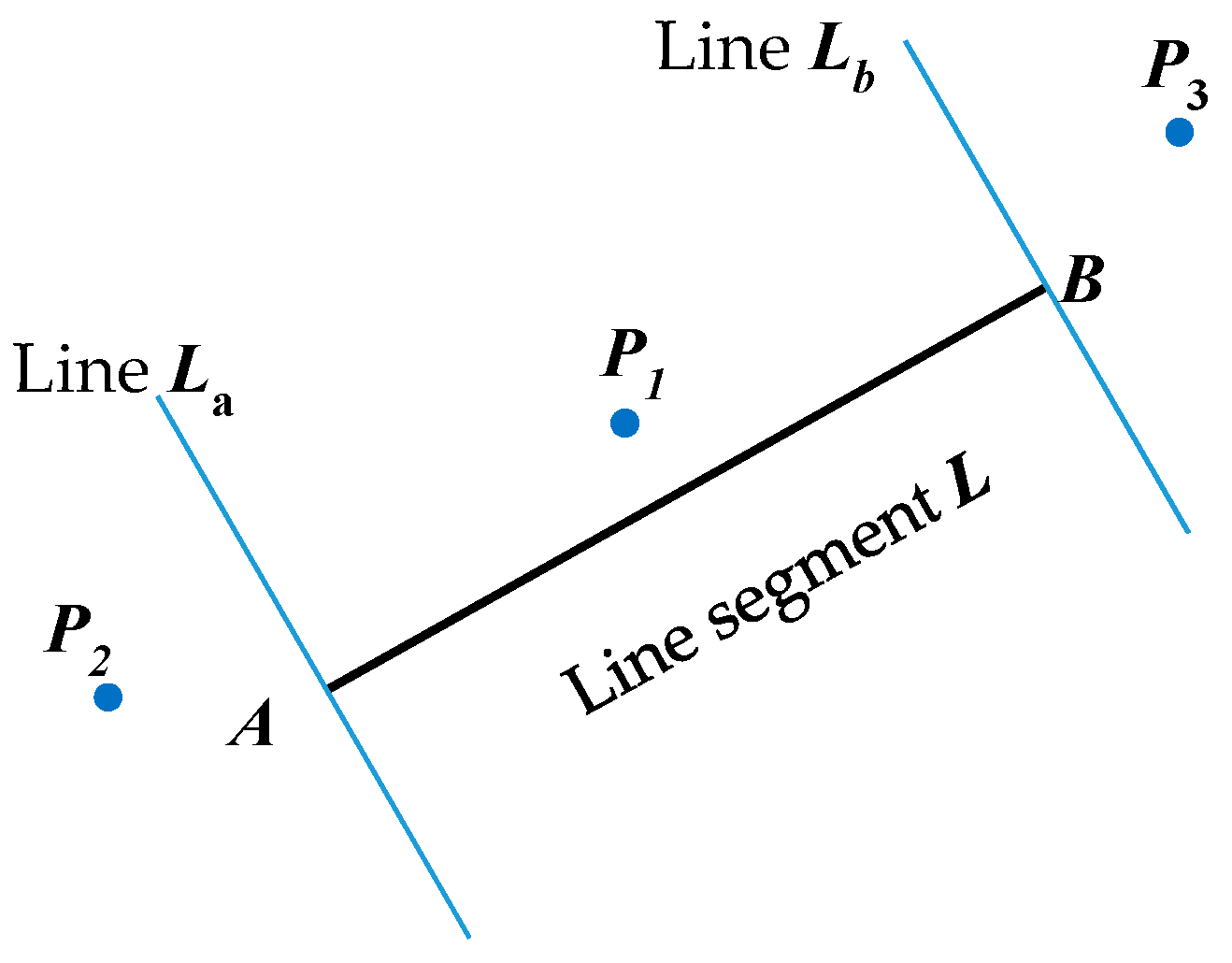

2.3.2. Fast Ground Segmentation

- The line’s slope $m$ must not exceed a certain threshold $T_m$.

- The line’s absolute y-intercept $|b|$ must not exceed a certain threshold $T_b$.

- The root mean square error of the fit must not exceed a certain threshold $T_{RMSE}$.

- The distance from the first point of a line to the previously fitted line must not exceed a threshold $T_{d_{prev}}$, enforcing smooth transitions between pairs of successive lines.
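A minimal Python sketch of these four acceptance criteria follows. It assumes that, within one segment, lines are fitted to (horizontal range, height) pairs $(d, z)$ as $z = m d + b$; this parameterization, the function names `fit_line`, `fit_rmse`, `point_line_distance` and `accept_line`, and the threshold arguments `t_m`, `t_b`, `t_rmse`, `t_dprev` are hypothetical, and any threshold values used with it are illustrative rather than the paper's settings.

```python
import math
from typing import List, Optional, Tuple

Point2D = Tuple[float, float]   # (horizontal range d, height z), assumed parameterization

def fit_line(points: List[Point2D]) -> Tuple[float, float]:
    """Least-squares fit of z = m * d + b; needs at least two distinct ranges."""
    n = len(points)
    sd = sum(d for d, _ in points)
    sz = sum(z for _, z in points)
    sdd = sum(d * d for d, _ in points)
    sdz = sum(d * z for d, z in points)
    denom = n * sdd - sd * sd
    if denom == 0.0:
        raise ValueError("points must span at least two distinct ranges")
    m = (n * sdz - sd * sz) / denom
    return m, (sz - m * sd) / n

def fit_rmse(points: List[Point2D], m: float, b: float) -> float:
    """Root mean square vertical residual of the points about the fitted line."""
    return math.sqrt(sum((z - (m * d + b)) ** 2 for d, z in points) / len(points))

def point_line_distance(p: Point2D, m: float, b: float) -> float:
    """Perpendicular distance from p to z = m * d + b in the (d, z) plane."""
    d, z = p
    return abs(m * d - z + b) / math.sqrt(m * m + 1.0)

def accept_line(points: List[Point2D], prev_line: Optional[Tuple[float, float]],
                t_m: float, t_b: float, t_rmse: float, t_dprev: float) -> bool:
    """Return True only if the fitted line passes all four criteria."""
    m, b = fit_line(points)
    if abs(m) > t_m:                        # criterion 1: slope
        return False
    if abs(b) > t_b:                        # criterion 2: absolute y-intercept
        return False
    if fit_rmse(points, m, b) > t_rmse:     # criterion 3: RMSE of the fit
        return False
    if prev_line is not None and point_line_distance(points[0], *prev_line) > t_dprev:
        return False                        # criterion 4: continuity with previous line
    return True
```

For example, near-flat points such as `[(1.0, 0.0), (2.0, 0.01), (3.0, -0.01), (4.0, 0.0)]` pass with `t_m=0.1, t_b=0.3, t_rmse=0.05, t_dprev=0.1`, whereas a wall-like set whose height rises ten times faster than its range fails criterion 1.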

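| Algorithm 1. Extraction of lines for one segment S |

| 1: $L_S \leftarrow \emptyset$, c = 0, $P' \leftarrow \emptyset$ |

| % $L_S$ denotes the set of lines in segment S, c counts the line segments, and $P'$ denotes the points of the current line |

| 2: for j = 0 to MAX_B do % for each bin |

| 3: if $\exists\, p'_j \in b_j$ then % if a point maps into bin $b_j$ |

| 4: if $|P'| \ge 2$ then % if the current line already contains at least two points |

| 5: $(m, b) \leftarrow$ fitline$(P' \cup \{p'_j\})$ % fit a line to obtain slope m and intercept b |

| 6: if the fit $(m, b)$ of $P' \cup \{p'_j\}$ satisfies the slope, intercept and RMSE thresholds ($T_m$, $T_b$, $T_{RMSE}$) then |

| % if the threshold conditions are met |

| 7: $P' \leftarrow P' \cup \{p'_j\}$ % add $p'_j$ to $P'$ |

| 8: else |

| 9: $(m, b) \leftarrow$ fitline$(P')$ % refit the line using only the points in $P'$ |

| 10: $L_S \leftarrow L_S \cup \{(m, b)\}$ % add the line to $L_S$ |

| 11: $P' \leftarrow \emptyset$ % clear $P'$ |

| 12: c ← c + 1 % next line segment |

| 13: j ← j − 1 % reprocess bin j as the start of the next line |

| 14: else % if the current line contains fewer than two points |

| 15: if this is the first point (c = 0), or the distance from $p'_j$ to the previously fitted line does not exceed $T_{d_{prev}}$, then |

| 16: $P' \leftarrow P' \cup \{p'_j\}$ % add $p'_j$ to $P'$ |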
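Algorithm 1 can be sketched in Python roughly as follows, under stated assumptions: each bin holds at most one representative point `(d, z)` or `None`; the compound test in row 6 is approximated by the slope, intercept and RMSE criteria listed above; only the first point of a new line is checked against the previously fitted line; and points left in $P'$ after the last bin are fitted as a final line. All function names are hypothetical, and this is not the authors' implementation.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]   # (horizontal range d, height z) of one bin's representative point
Line = Tuple[float, float]    # (slope m, intercept b) of z = m * d + b

def fit_line(pts: Sequence[Point]) -> Line:
    """Ordinary least-squares fit of z = m * d + b (needs two distinct ranges)."""
    n = len(pts)
    sd = sum(d for d, _ in pts)
    sz = sum(z for _, z in pts)
    sdd = sum(d * d for d, _ in pts)
    sdz = sum(d * z for d, z in pts)
    m = (n * sdz - sd * sz) / (n * sdd - sd * sd)
    return m, (sz - m * sd) / n

def fit_error(pts: Sequence[Point], line: Line) -> float:
    """Root mean square vertical residual of pts about the line."""
    m, b = line
    return math.sqrt(sum((z - (m * d + b)) ** 2 for d, z in pts) / len(pts))

def distance(p: Point, line: Line) -> float:
    """Perpendicular distance from p to the line in the (d, z) plane."""
    m, b = line
    return abs(m * p[0] - p[1] + b) / math.hypot(m, 1.0)

def extract_lines(bins: List[Optional[Point]], t_m: float, t_b: float,
                  t_rmse: float, t_dprev: float) -> List[Line]:
    lines: List[Line] = []      # L_S; len(lines) plays the role of the counter c
    current: List[Point] = []   # P'
    j = 0
    while j < len(bins):
        p = bins[j]
        if p is not None:                            # a point maps into bin j
            if len(current) >= 2:
                candidate = current + [p]
                m, b = fit_line(candidate)
                if (abs(m) <= t_m and abs(b) <= t_b
                        and fit_error(candidate, (m, b)) <= t_rmse):
                    current.append(p)                # extend the current line
                else:
                    lines.append(fit_line(current))  # close the current line
                    current = []
                    j -= 1                           # reprocess bin j as a new line's start
            elif not lines or current or distance(p, lines[-1]) <= t_dprev:
                current.append(p)                    # first line, or smooth continuation
        j += 1
    if len(current) >= 2:                            # assumption: flush the last line
        lines.append(fit_line(current))
    return lines

if __name__ == "__main__":
    ground = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0),
              (5.0, 0.05), (6.0, 0.1), (7.0, 0.15), (8.0, 0.2)]
    # Expect two lines: a flat one, then a gentle slope continuing from it.
    print(extract_lines(ground, t_m=0.1, t_b=0.3, t_rmse=0.01, t_dprev=0.1))
```

Decrementing `j` after closing a line means the rejected point is reconsidered as the possible first point of the next line, where it must pass the continuity check; points on a steep step therefore never start a ground line.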

2.4. Object Segmentation

2.4.1. Euclidean Clustering of Point Clouds

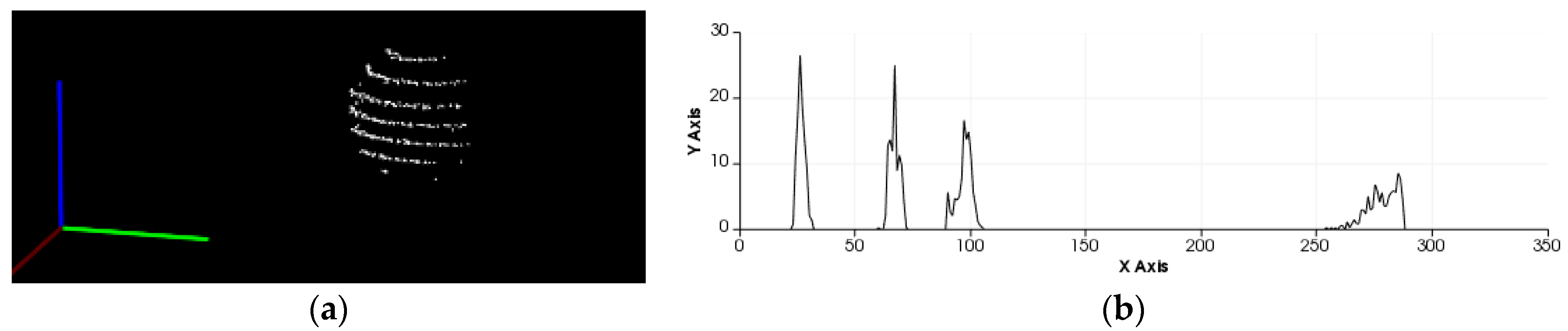

2.4.2. VFH Descriptor Extraction

2.4.3. Feature Match of FLANN

- (1) Acquire point-cloud data sets with the LiDAR sensor at different distances between the sphere object and the sensor, and extract VFH features for each point-cloud model.

- (2) Load these VFH features into memory and convert the data into matrix format.

- (3) Build a k-d (k-dimensional) tree from the converted matrix data, and save the k-d tree index for direct search matching.

- (4) Given an input VFH feature and the k-d tree index, search the k-d tree for the nearest neighbor of the input.

- (5) Accept the input as the target point cloud if the distance between the search result and the input VFH feature is less than the given threshold.
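Steps (3) to (5) amount to nearest-neighbor search in descriptor space followed by a threshold test. The sketch below builds a plain, exact k-d tree in pure Python as a stand-in for FLANN, whose approximate search structures (for example its randomized k-d tree forests) are not reproduced here. It assumes Euclidean distance between descriptors; the names `build_kdtree`, `nearest` and `match_target` are hypothetical, and real VFH signatures have 308 bins rather than the toy 4-bin vectors used in the usage lines.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vector = Sequence[float]

@dataclass
class KDNode:
    point: Vector
    index: int                     # position of the point in the model list
    axis: int                      # splitting dimension at this node
    left: Optional["KDNode"]
    right: Optional["KDNode"]

def build_kdtree(points: List[Vector], indices: Optional[List[int]] = None,
                 depth: int = 0) -> Optional[KDNode]:
    """Step (3): recursively split on the median along cycling axes."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % len(points[indices[0]])
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    return KDNode(points[indices[mid]], indices[mid], axis,
                  build_kdtree(points, indices[:mid], depth + 1),
                  build_kdtree(points, indices[mid + 1:], depth + 1))

def nearest(node: Optional[KDNode], query: Vector,
            best: Tuple[float, int] = (math.inf, -1)) -> Tuple[float, int]:
    """Step (4): exact nearest neighbor as (Euclidean distance, model index)."""
    if node is None:
        return best
    d = math.dist(query, node.point)
    if d < best[0]:
        best = (d, node.index)
    diff = query[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, best)
    if abs(diff) < best[0]:        # the splitting plane is closer than the best match
        best = nearest(far, query, best)
    return best

def match_target(tree: Optional[KDNode], query: Vector, threshold: float) -> Optional[int]:
    """Step (5): accept the match only if its distance is below the threshold."""
    dist, idx = nearest(tree, query)
    return idx if dist < threshold else None

if __name__ == "__main__":
    models = [(0.1, 0.2, 0.3, 0.4), (0.4, 0.3, 0.2, 0.1), (0.25, 0.25, 0.25, 0.25)]
    tree = build_kdtree(models)
    print(match_target(tree, (0.24, 0.26, 0.25, 0.25), threshold=0.05))  # 2
    print(match_target(tree, (1.0, 0.0, 0.0, 0.0), threshold=0.05))      # None
```

The pruning test `abs(diff) < best[0]` is what lets a k-d tree skip whole subtrees; FLANN trades this exactness for speed in high-dimensional descriptor spaces.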

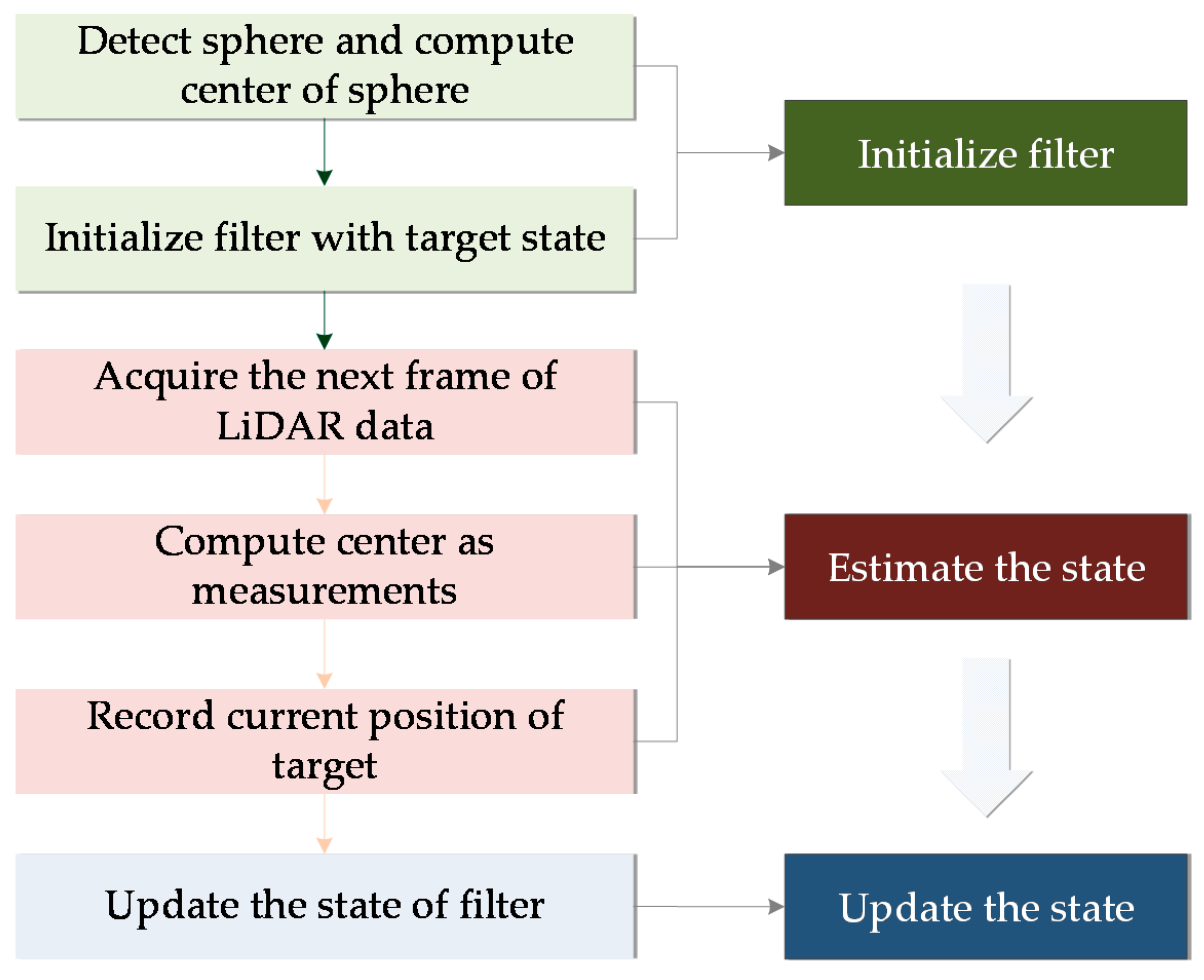

3. Tracking

3.1. Kalman Filtering

| Algorithm 2. Kalman Filter |

| Input: $z_t$ % object position at time step t from the sensor |

| Output: $\hat{x}_t$ % position estimate of the object |

| 1: initialize t, $\hat{x}_{t-1}$, A, C, P, Q, R % t represents the prediction time step; $\hat{x}_{t-1}$ is the known a posteriori state estimate at time step t − 1; A represents the state transition matrix; C is the observation matrix; P represents the error covariance matrix; Q denotes the covariance of the random process noise; and R is the observation noise covariance matrix. |

| 2: if filterStop = false then % runs until convergence or until the ‘stop’ button is clicked |

| 3: $\hat{x}_t^- \leftarrow A\hat{x}_{t-1}$ % compute the predicted (a priori) state estimate, according to Equation (16) |

| 4: $P \leftarrow APA^T + Q$ % compute the a priori covariance matrix, according to Equation (17) |

| 5: $K \leftarrow PC^T(CPC^T + R)^{-1}$ % compute the Kalman gain matrix, according to Equation (26) |

| 6: $\hat{x}_t \leftarrow \hat{x}_t^- + K(z_t - C\hat{x}_t^-)$ % compute the optimal (a posteriori) estimate, according to Equation (23) |

| 7: $P \leftarrow (I - KC)P$ % update the covariance, according to Equation (27); I denotes the identity matrix |

| 8: t ← t + 1 |

| 9: end if |
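Rows 3 to 7 of Algorithm 2 can be illustrated with a one-axis constant-velocity model in pure Python. The state $[\text{position}, \text{velocity}]^T$, the matrices $A = \begin{bmatrix}1 & \Delta t\\ 0 & 1\end{bmatrix}$ and $C = [1\ \ 0]$, the diagonal process noise $Q = qI$, and the class name `KalmanCV1D` are assumptions for illustration, not the paper's model; a 3D tracker could run one such filter per axis or use a six-dimensional state.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]

def madd(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

class KalmanCV1D:
    """Constant-velocity Kalman filter for one coordinate axis (illustrative)."""

    def __init__(self, dt: float, q: float, r: float, x0: float = 0.0):
        self.A = [[1.0, dt], [0.0, 1.0]]       # state transition matrix
        self.C = [[1.0, 0.0]]                  # only the position is observed
        self.Q = [[q, 0.0], [0.0, q]]          # process noise covariance (assumed q*I)
        self.R = r                             # scalar observation noise variance
        self.x = [[x0], [0.0]]                 # posterior state [position, velocity]^T
        self.P = [[1.0, 0.0], [0.0, 1.0]]      # posterior error covariance

    def step(self, z: float) -> float:
        """One pass of rows 3-7 of Algorithm 2; returns the position estimate."""
        x_prior = matmul(self.A, self.x)                                    # row 3
        p_prior = madd(matmul(matmul(self.A, self.P), transpose(self.A)), self.Q)  # row 4
        pct = matmul(p_prior, transpose(self.C))                            # P C^T (2x1)
        s = matmul(self.C, pct)[0][0] + self.R                              # C P C^T + R
        k = [[pct[0][0] / s], [pct[1][0] / s]]                              # row 5
        innovation = z - matmul(self.C, x_prior)[0][0]
        self.x = [[x_prior[0][0] + k[0][0] * innovation],
                  [x_prior[1][0] + k[1][0] * innovation]]                   # row 6
        kc = matmul(k, self.C)
        i_minus_kc = [[1.0 - kc[0][0], -kc[0][1]], [-kc[1][0], 1.0 - kc[1][1]]]
        self.P = matmul(i_minus_kc, p_prior)                                # row 7
        return self.x[0][0]

if __name__ == "__main__":
    kf = KalmanCV1D(dt=0.1, q=1e-3, r=0.01)
    for t in range(1, 101):            # noiseless positions of an object moving at 1 m/s
        estimate = kf.step(0.1 * t)
    print(round(estimate, 3), round(kf.x[1][0], 3))
```

Because only position is measured, $CPC^T + R$ is a scalar here, so the matrix inverse in row 5 reduces to a division.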

3.2. Particle Filtering

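| Algorithm 3. KLD sampling algorithm |

| Input: sample set $S_{t-1} = \{\langle x_{t-1}^{(i)}, w_{t-1}^{(i)} \rangle \mid i = 1, \ldots, n\}$, observation $z_t$, limits $\varepsilon$ and $\delta$ |

| Output: sample set $S_t$ |

| 1: $S_t \leftarrow \emptyset$, n = 0, k = 0, $\alpha = 0$ % initialize |

| 2: do % generate samples |

| 3: sample an index $j(n)$ from the discrete distribution given by the weights in $S_{t-1}$ |

| 4: sample $x_t^{(n)}$ from $p(x_t \mid x_{t-1})$ using $x_{t-1}^{(j(n))}$ |

| 5: $w_t^{(n)} \leftarrow p(z_t \mid x_t^{(n)})$ % compute the importance weight |

| 6: $\alpha \leftarrow \alpha + w_t^{(n)}$ % update the normalization factor |

| 7: $S_t \leftarrow S_t \cup \{\langle x_t^{(n)}, w_t^{(n)} \rangle\}$ % insert the sample into the sample set |

| 8: if ($x_t^{(n)}$ falls into an empty bin b) then % update the number of non-empty bins |

| 9: k ← k + 1 |

| 10: b ← non-empty |

| 11: n ← n + 1 % update the number of generated samples |

| 12: while ($n < \frac{1}{2\varepsilon}\chi^2_{k-1,1-\delta}$) % stop when the K-L bound of Equation (35) is reached |

| 13: for i = 1, …, n do % normalize the importance weights |

| 14: $w_t^{(i)} \leftarrow w_t^{(i)} / \alpha$ |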
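A compact Python sketch of Algorithm 3 for a one-dimensional state follows. The stopping rule uses the Wilson-Hilferty approximation of the chi-square quantile that Fox gives for the KLD bound; the motion and likelihood functions, the bin size, and the extra limits `n_min` and `n_max` (which keep the loop from stopping while only one bin is occupied and cap its cost) are hypothetical parameters, not values from the paper.

```python
import math
import random
from itertools import accumulate
from statistics import NormalDist
from typing import Callable, List, Tuple

def kld_bound(k: int, epsilon: float, delta: float) -> int:
    """Samples needed for k non-empty bins: chi^2_{k-1,1-delta} / (2 epsilon),
    approximated with the Wilson-Hilferty transformation."""
    if k <= 1:
        return 1
    z = NormalDist().inv_cdf(1.0 - delta)
    a = 2.0 / (9.0 * (k - 1))
    return math.ceil((k - 1) / (2.0 * epsilon) * (1.0 - a + math.sqrt(a) * z) ** 3)

def kld_sampling(particles: List[float], weights: List[float], z_obs: float,
                 motion: Callable[[float, random.Random], float],
                 likelihood: Callable[[float, float], float],
                 epsilon: float, delta: float, bin_size: float,
                 rng: random.Random, n_min: int = 10, n_max: int = 5000
                 ) -> Tuple[List[float], List[float]]:
    cum = list(accumulate(weights))         # cumulative weights for index sampling
    indices = range(len(particles))
    new_x: List[float] = []
    new_w: List[float] = []
    occupied = set()                        # non-empty bins
    alpha, n, k = 0.0, 0, 0                 # row 1
    while True:                             # row 2: do ... while
        j = rng.choices(indices, cum_weights=cum, k=1)[0]   # row 3
        x = motion(particles[j], rng)                       # row 4
        w = likelihood(z_obs, x)                            # row 5
        alpha += w                                          # row 6
        new_x.append(x)                                     # row 7
        new_w.append(w)
        b = math.floor(x / bin_size)
        if b not in occupied:                               # rows 8-10
            occupied.add(b)
            k += 1
        n += 1                                              # row 11
        if n >= n_max or (n >= n_min and n >= kld_bound(k, epsilon, delta)):
            break                                           # row 12
    if alpha == 0.0:                        # all likelihoods vanished: fall back to uniform
        return new_x, [1.0 / n] * n
    return new_x, [w / alpha for w in new_w]                # rows 13-14

if __name__ == "__main__":
    rng = random.Random(1)
    prior = [rng.gauss(0.0, 1.0) for _ in range(500)]
    ps, ws = kld_sampling(prior, [1.0] * 500, z_obs=0.3,
                          motion=lambda x, r: x + 0.1 + r.gauss(0.0, 0.05),
                          likelihood=lambda z, x: math.exp(-0.5 * ((z - x) / 0.2) ** 2),
                          epsilon=0.05, delta=0.01, bin_size=0.1, rng=rng)
    print(len(ps), sum(p * w for p, w in zip(ps, ws)))
```

The sample count adapts to the spread of the particles: a widely spread belief occupies more bins, which raises the bound and draws more samples, while a concentrated belief stops early.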

4. Experimentation and Discussions

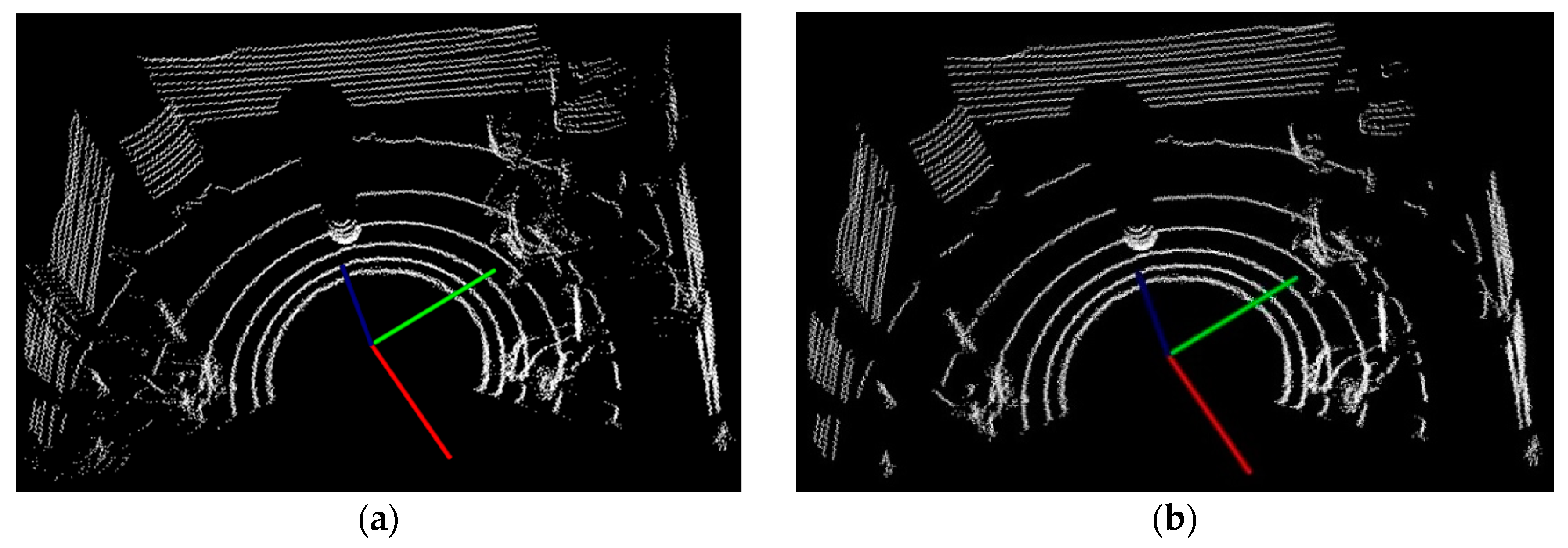

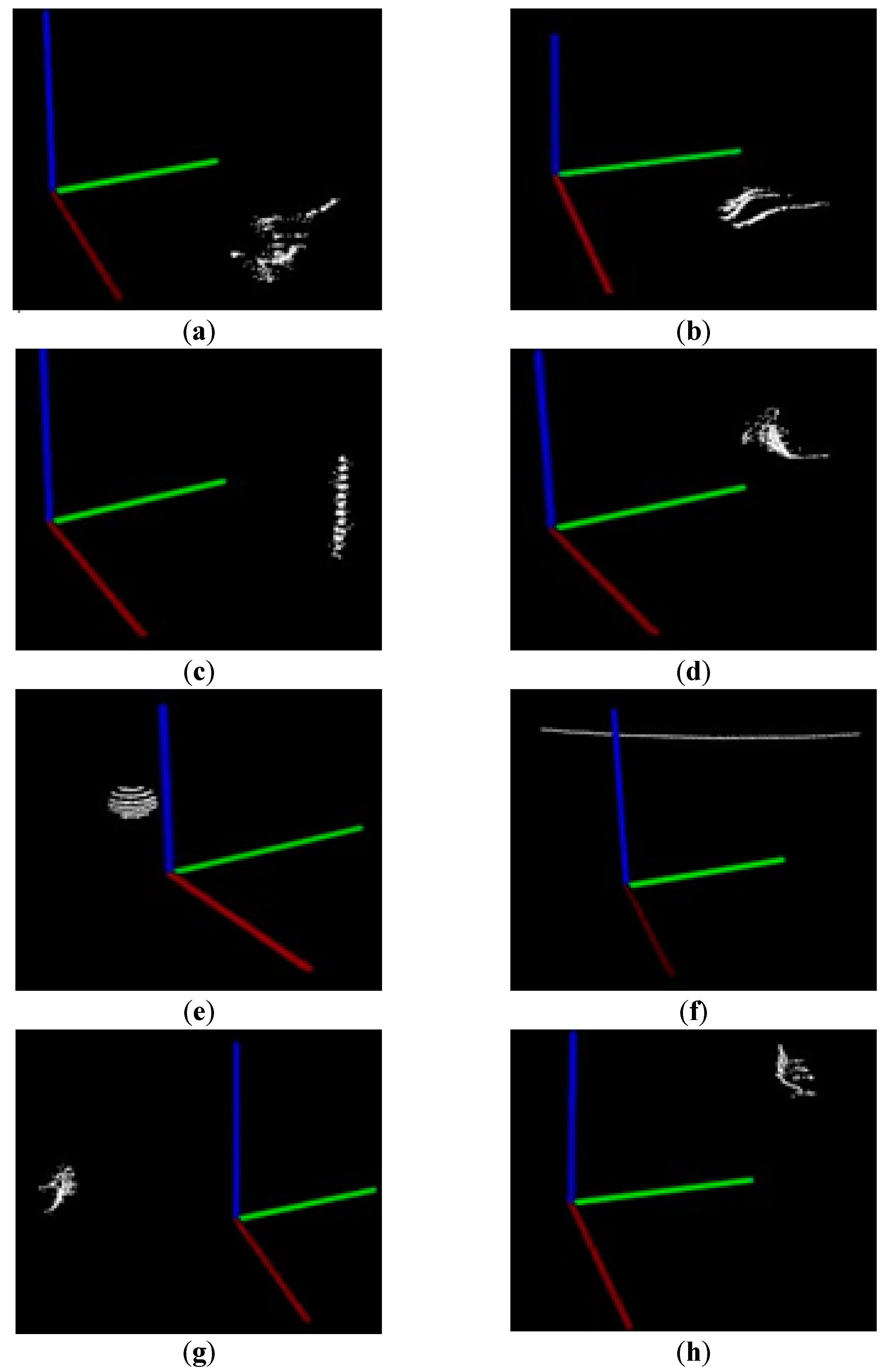

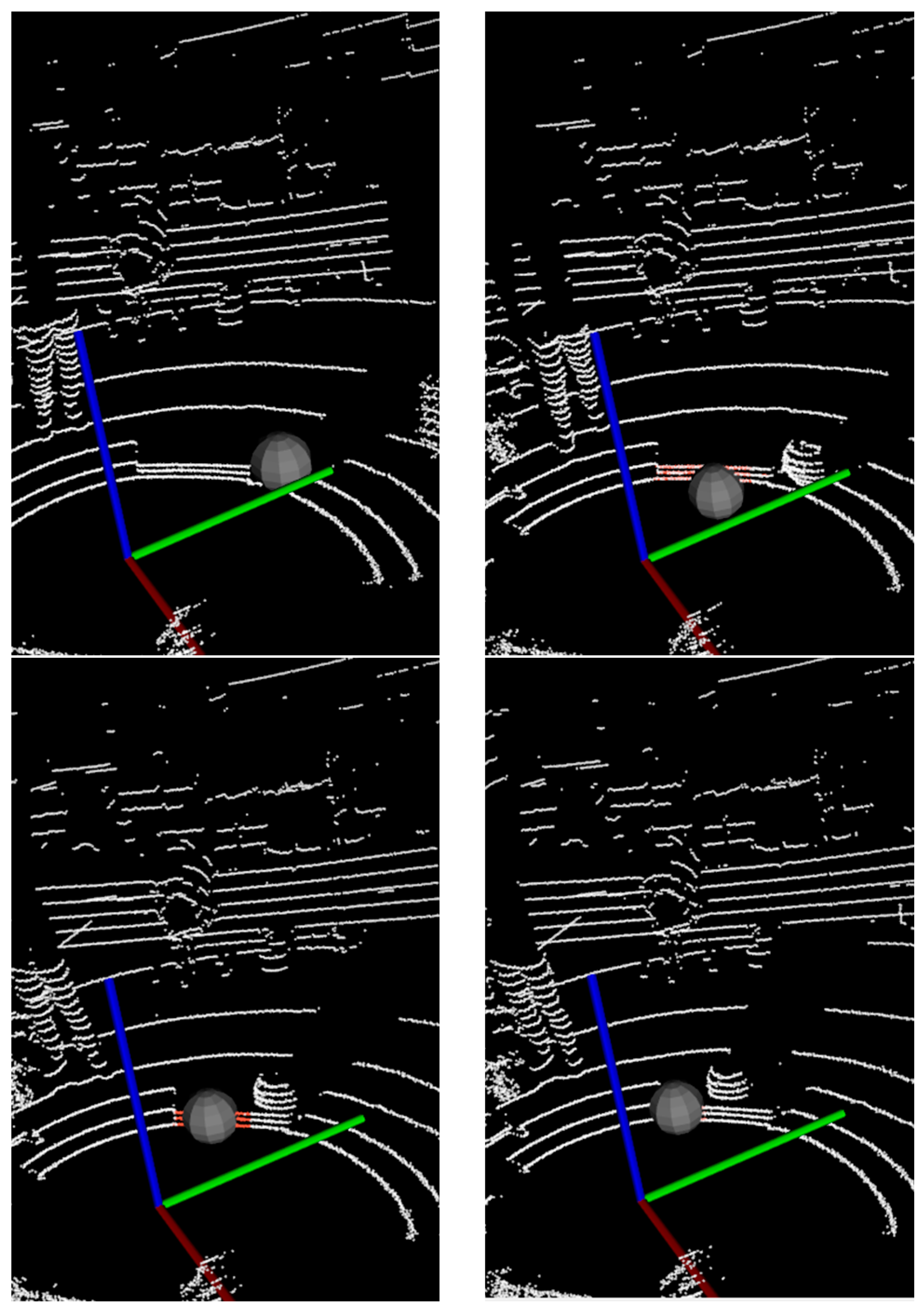

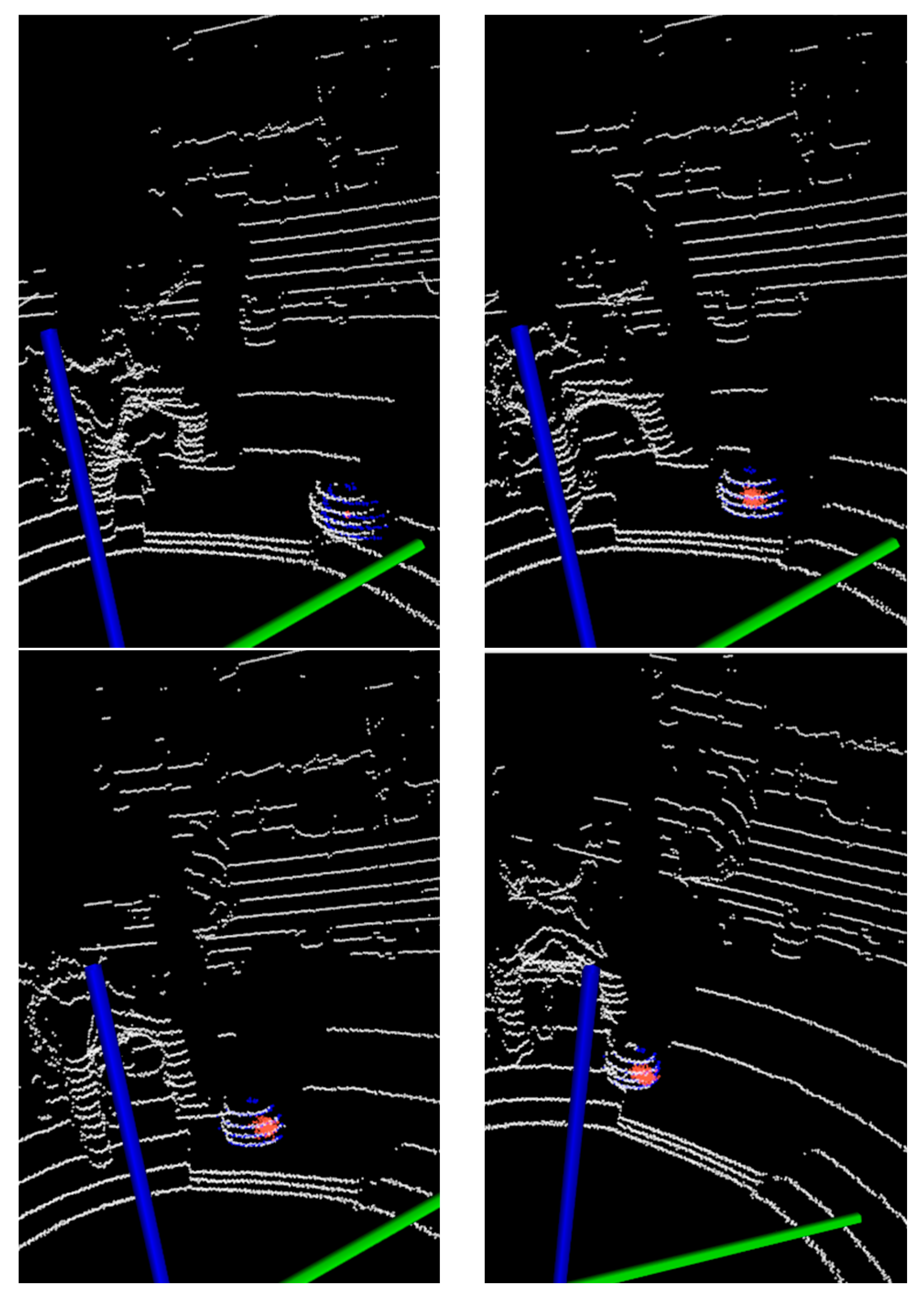

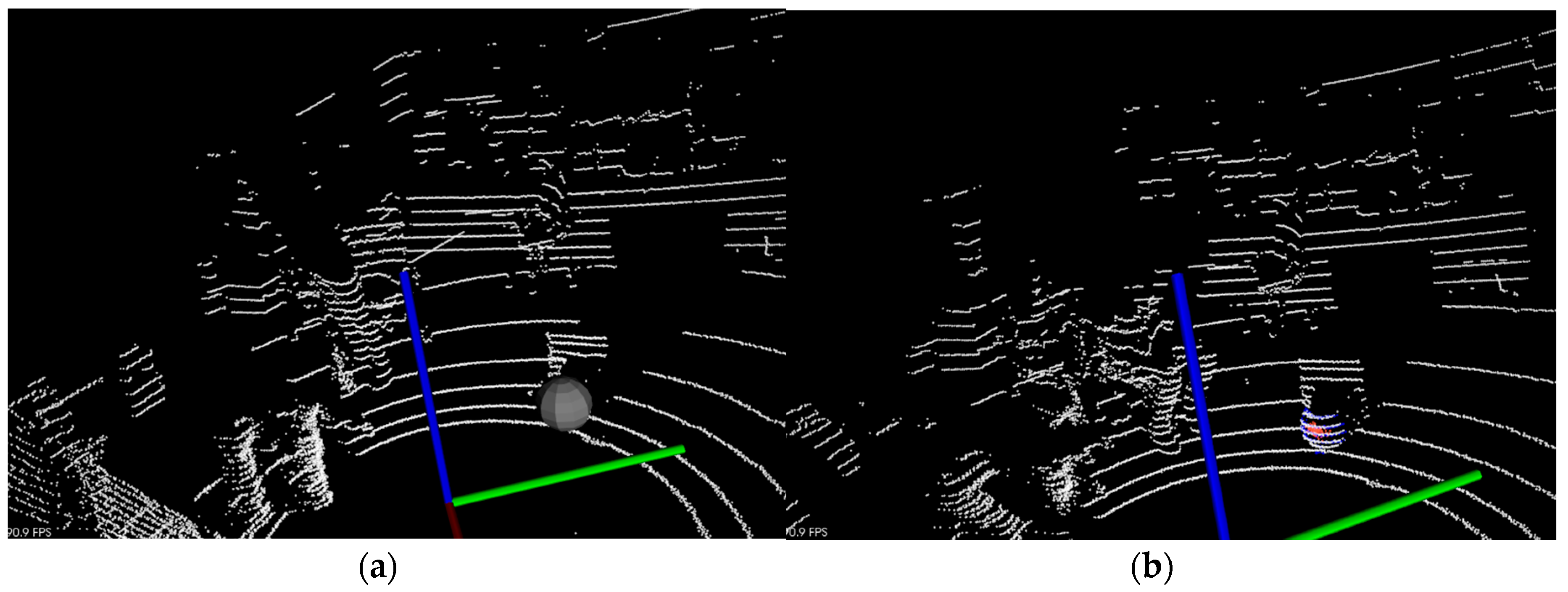

4.1. Target Tracking with Occlusion and Obstacle in the Environment

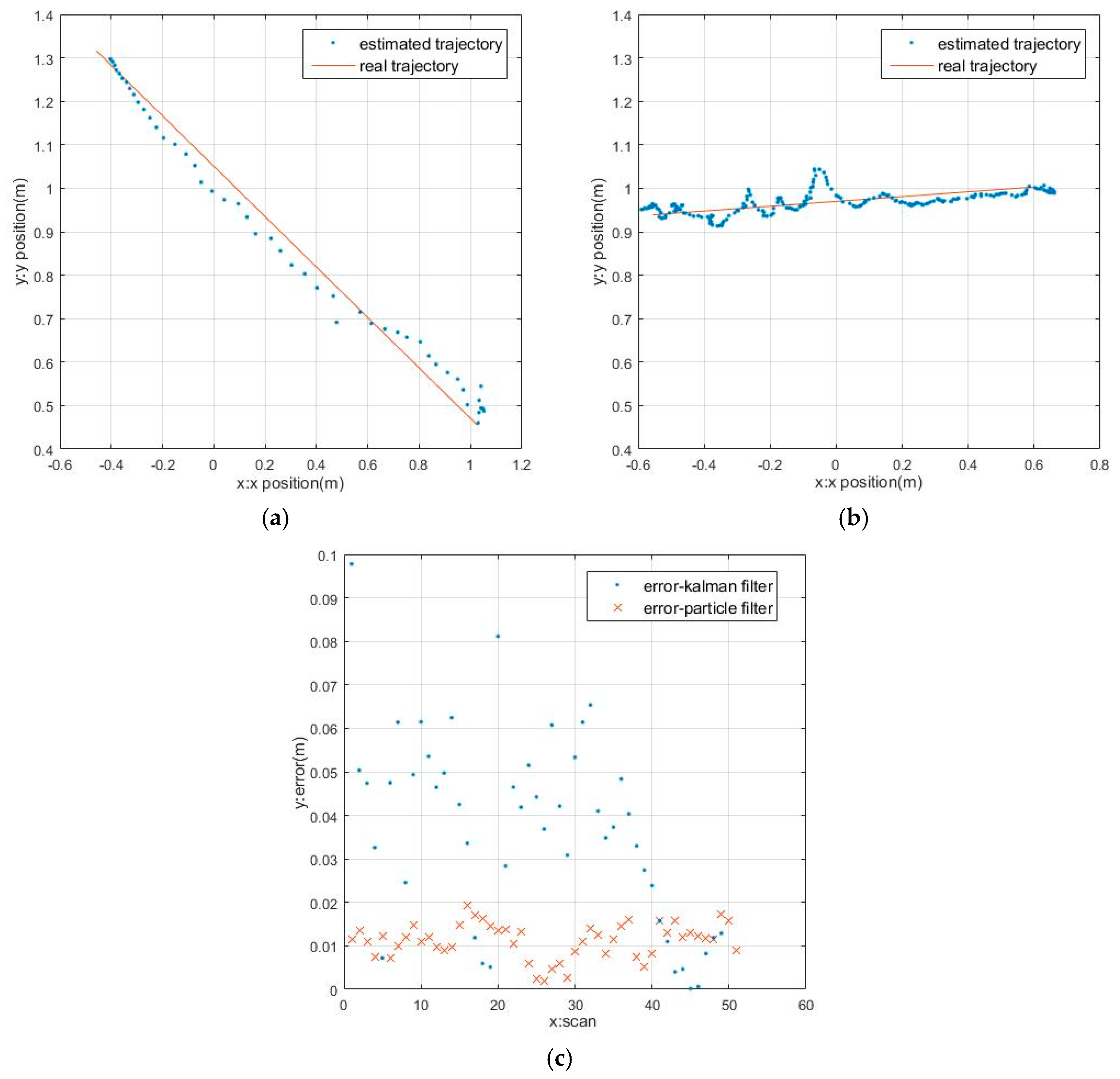

4.2. Target Tracking at Different Moving Speeds

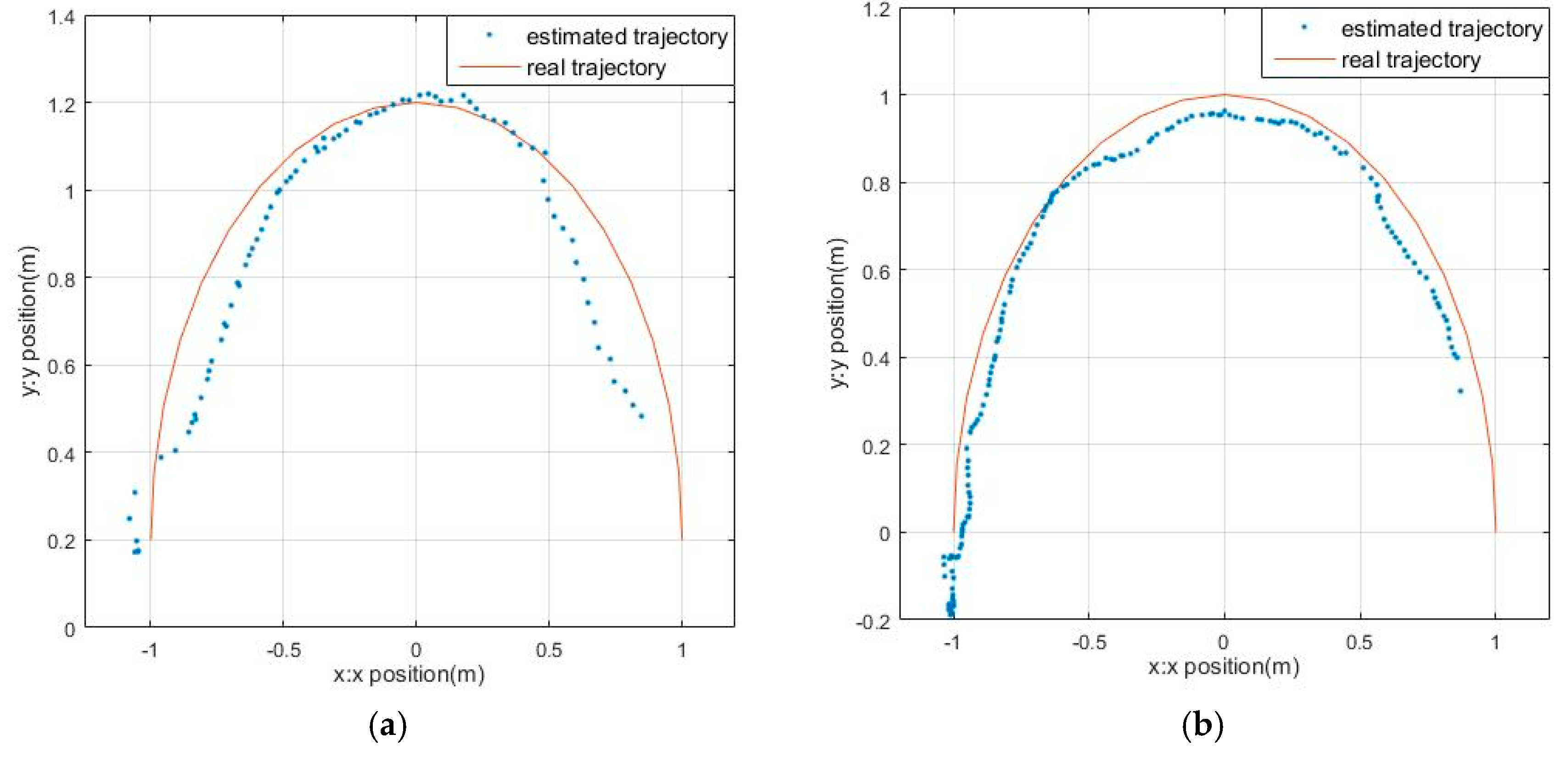

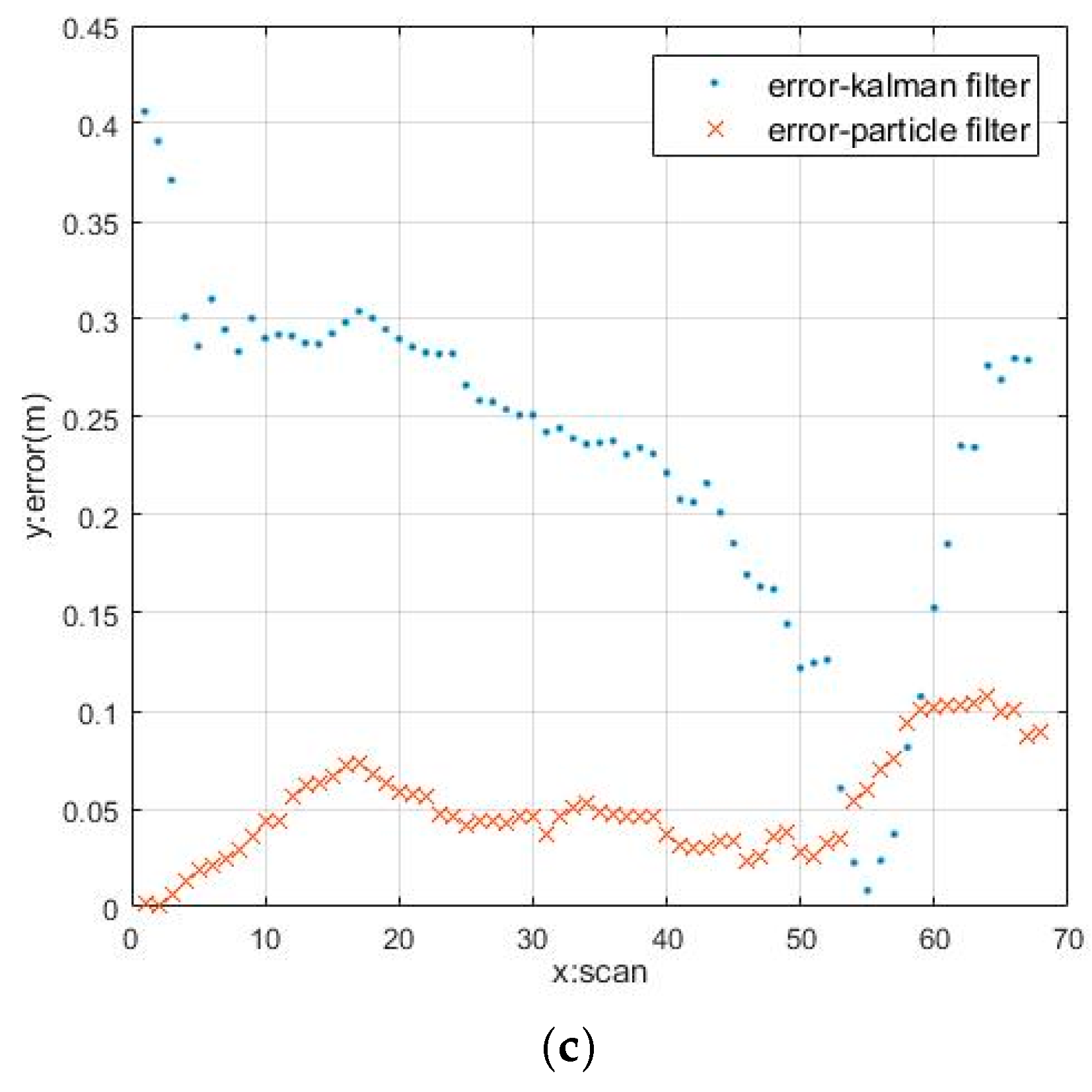

4.3. Target Tracking in Different Motion Trajectories

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| 0.08 | 0.2 | 1 | 0.04 | 0.08 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, L.; Chen, S.; Zhang, J.; Cheng, B.; Liu, M. Real-Time Motion Tracking for Indoor Moving Sphere Objects with a LiDAR Sensor. Sensors 2017, 17, 1932. https://doi.org/10.3390/s17091932
