Image-Based Phenotyping of Flowering Intensity in Cool-Season Crops

Abstract

1. Introduction

- (1) Compare the performance of RGB and multispectral sensors in flower detection and monitoring. The hypothesis was that the multispectral sensor captures reflectance in the near-infrared region, which would make image processing more efficient than with RGB images, especially during flower segmentation and noise removal.

- (2) Identify the impact of spatial resolution (proximal versus remote sensing) on image-based flower detection accuracy. The hypothesis was that image resolution affects flower detection depending on flower size, so the impact of resolution on detection accuracy needs to be understood.

- (3) Evaluate a thresholding-based method and an unsupervised machine learning technique (k-means clustering) for flower detection in pea and canola. The hypothesis was that the unsupervised machine learning technique would outperform standard image processing methods.

- (4) Evaluate the relationship between flower intensity and crop yield. The hypothesis was that crop yield is positively correlated with flower intensity.
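
The two detection approaches named in objective (3) can be illustrated with a minimal sketch. It is not the pipeline used in the study: the synthetic pixel colors, the RGB threshold values, the farthest-point seeding, and the "most yellow cluster" rule are all assumptions chosen for illustration, and every function name is hypothetical. Both methods return a per-pixel flower mask from which a flower fraction is derived.

```python
import random

def make_synthetic_pixels(seed=42):
    """Toy 'plot image' as a flat list of RGB pixels (all colors are assumed)."""
    rng = random.Random(seed)
    classes = [((230, 210, 40), 30),   # yellow flower pixels
               ((60, 140, 50), 120),   # green canopy pixels
               ((120, 90, 70), 50)]    # soil pixels
    pixels = []
    for mean, count in classes:
        for _ in range(count):
            pixels.append(tuple(m + rng.randint(-10, 10) for m in mean))
    return pixels

def threshold_mask(pixels, min_rg=150, max_b=120):
    """Thresholding: a pixel is 'flower' if red and green are high and blue is low."""
    return [r >= min_rg and g >= min_rg and b <= max_b for r, g, b in pixels]

def sq_dist(p, q):
    """Squared Euclidean distance between two RGB triples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(pixels, k=3, iters=10):
    """Plain Lloyd's k-means with deterministic farthest-point seeding (assumed)."""
    centroids = [pixels[0]]
    while len(centroids) < k:
        centroids.append(max(pixels, key=lambda p: min(sq_dist(p, c) for c in centroids)))
    labels = []
    for _ in range(iters):
        # Assignment step: nearest centroid for every pixel.
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in pixels]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return centroids, labels

def kmeans_mask(pixels, k=3):
    """Unsupervised: cluster colors, then keep the cluster that looks most yellow."""
    centroids, labels = kmeans(pixels, k)
    flower = max(range(k),
                 key=lambda c: (centroids[c][0] + centroids[c][1]) / 2 - centroids[c][2])
    return [lab == flower for lab in labels]

def flower_fraction(mask):
    """Share of pixels labeled as flower (0 to 1)."""
    return sum(mask) / len(mask)

pixels = make_synthetic_pixels()
print("thresholding:", flower_fraction(threshold_mask(pixels)))
print("k-means:     ", flower_fraction(kmeans_mask(pixels)))
```

On this well-separated toy data both methods report a flower fraction of 0.15. Real canopy images add shadows, specular highlights, and mixed pixels, which is where the two approaches would be expected to diverge.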

2. Materials and Methods

2.1. Field Experiments and Visual Ratings

2.2. Data Acquisition Using Sensing Techniques

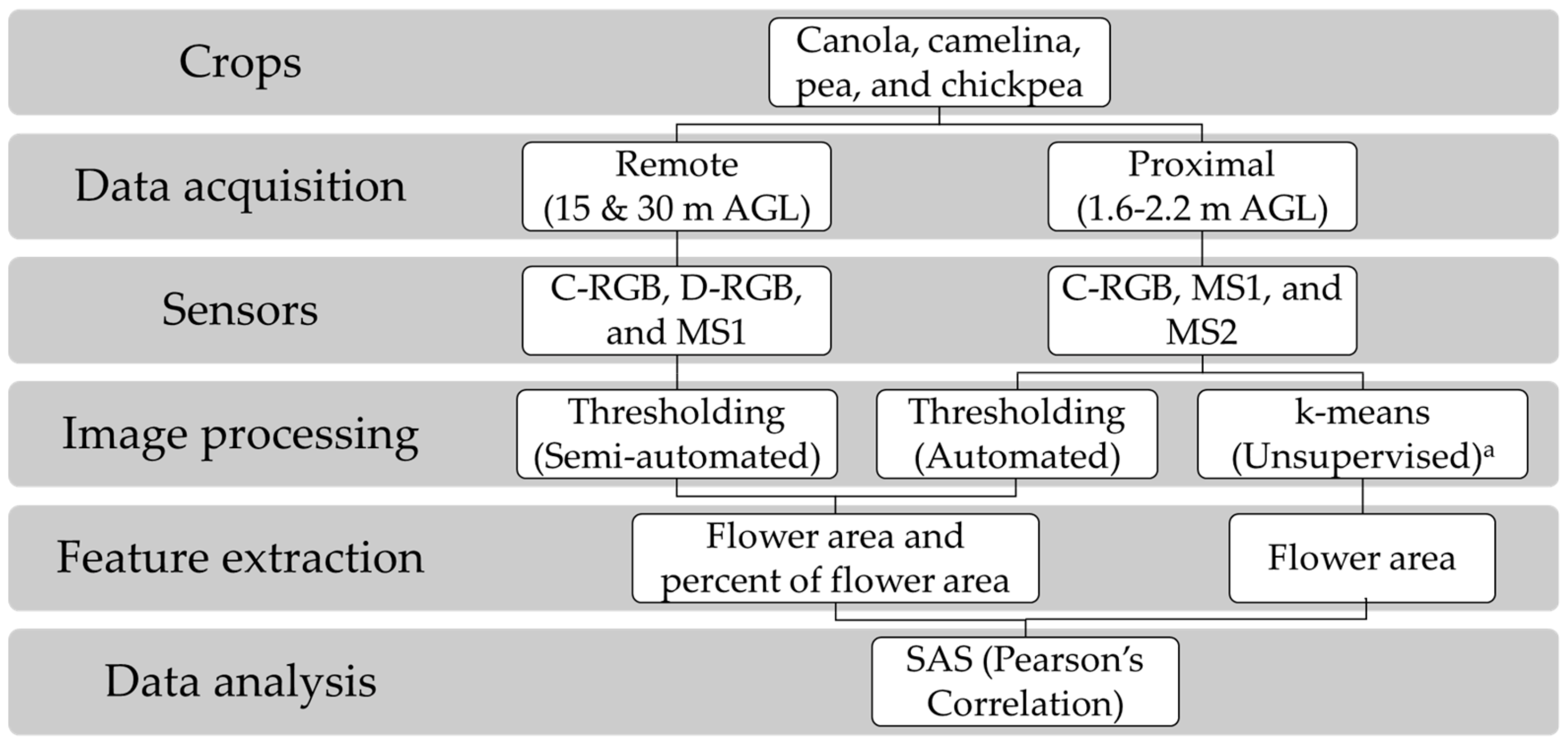

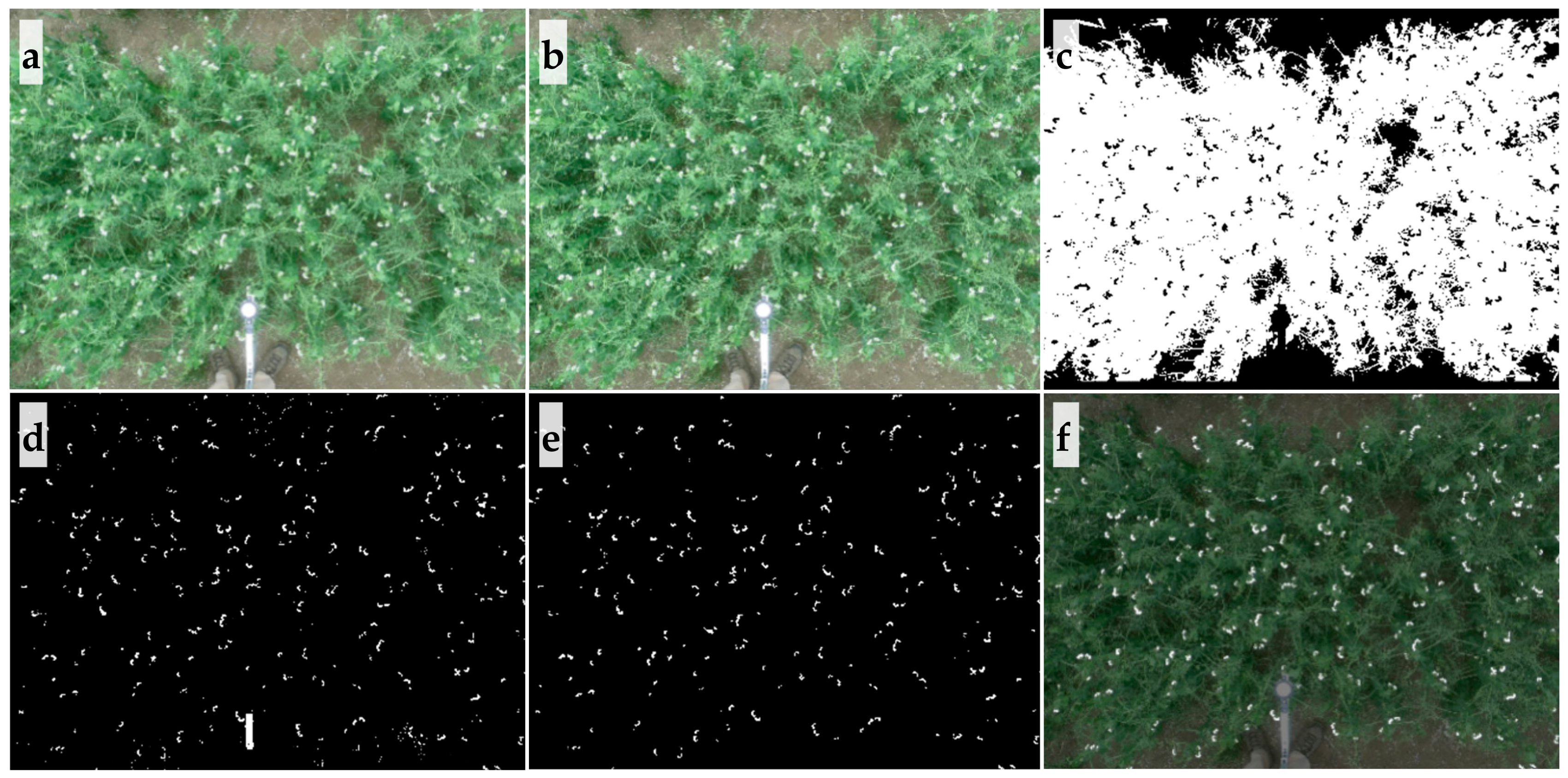

2.3. Image Processing and Feature Extraction

2.4. Statistical Analysis

3. Results

3.1. Flower Detection Using RGB and Multispectral Sensors

3.2. Impact of Spatial Resolution on Flower Detection

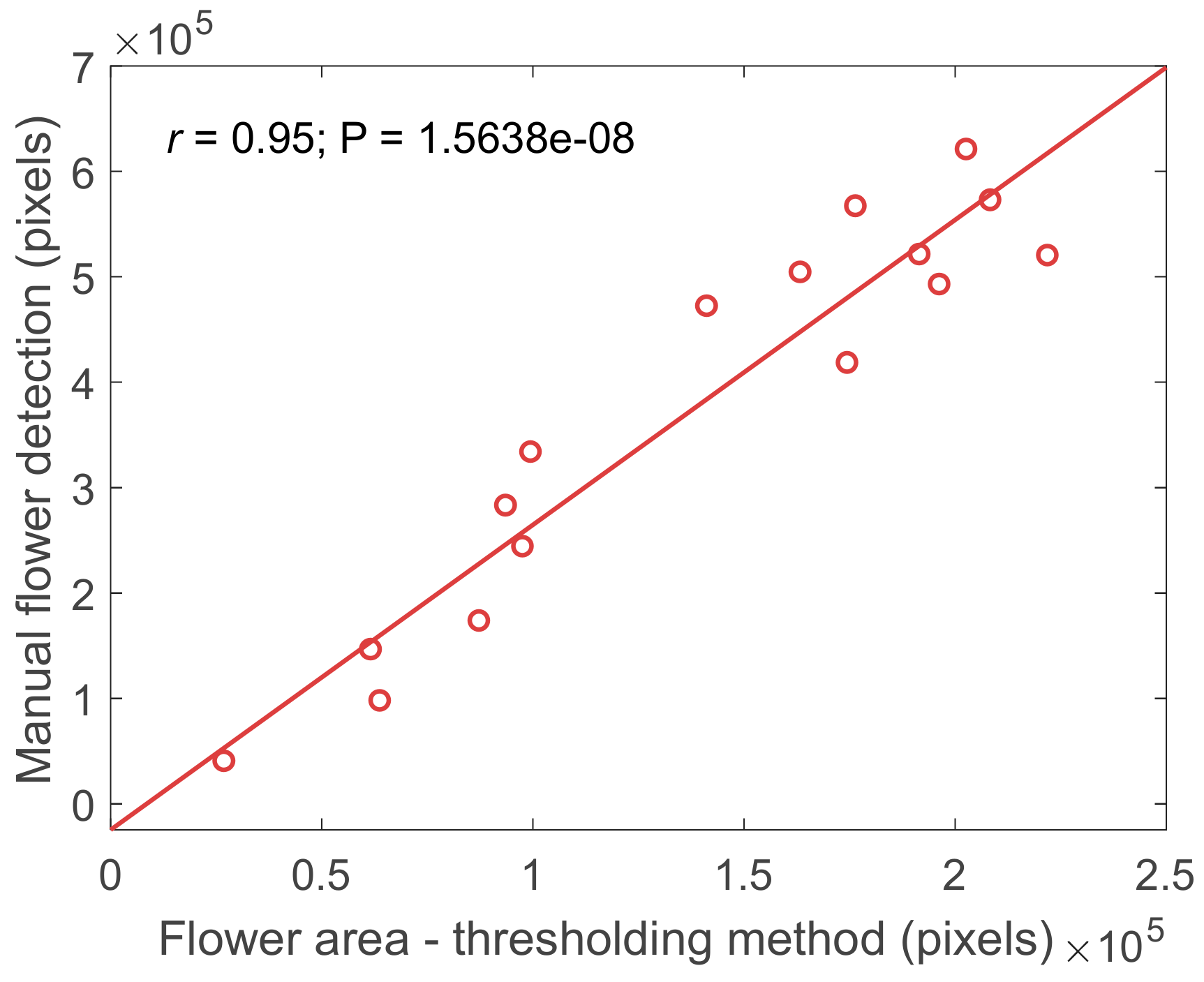

3.3. Machine Learning for Flowering Detection

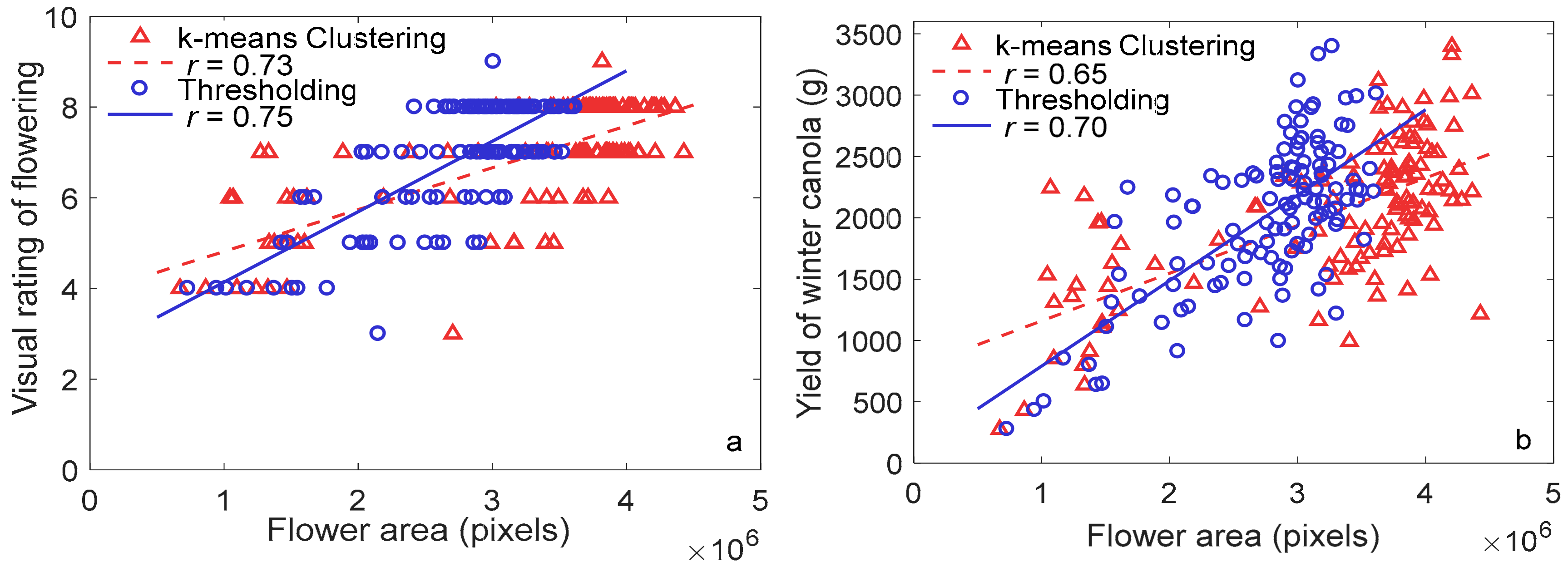

3.4. Relationship between Flower Features and Seed Yield

4. Discussion

4.1. Sensors for Flowering Detection

4.2. Role of Spatial Resolution and Auxiliaries during Data Acquisition

4.3. Methods of Flower Detection

4.4. Flower-Based Yield Estimation

4.5. Improving Accuracy of Flower Detection and Implication of Flower Monitoring Using HTP Techniques

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Availability of Data and Materials

Conflicts of Interest

References

1. Fageria, N.K.; Baligar, V.C.; Clark, R. Physiology of Crop Production; CRC Press: Boca Raton, FL, USA, 2006.

2. Jung, C.; Müller, A.E. Flowering time control and applications in plant breeding. Trends Plant Sci. 2009, 14, 563–573.

3. Passioura, J. Increasing crop productivity when water is scarce—from breeding to field management. Agric. Water Manag. 2006, 80, 176–196.

4. Richards, R.A. Defining selection criteria to improve yield under drought. Plant Growth Regul. 1996, 20, 157–166.

5. Mares, D.J. Pre-harvest sprouting in wheat. I. Influence of cultivar, rainfall and temperature during grain ripening. Aust. J. Agric. Res. 1993, 44, 1259–1272.

6. Saini, H.S.; Sedgley, M.; Aspinall, D. Development anatomy in wheat of male sterility induced by heat stress, water deficit or abscisic acid. Funct. Plant Biol. 1984, 11, 243–253.

7. Qiu, R.; Wei, S.; Zhang, M.; Li, H.; Sun, H.; Liu, G.; Li, M. Sensors for measuring plant phenotyping: a review. Int. J. Agric. Biol. Eng. 2018, 11, 1–17.

8. Campbell, Z.C.; Acosta-Gamboa, L.M.; Nepal, N.; Lorence, A. Engineering plants for tomorrow: how high-throughput phenotyping is contributing to the development of better crops. Phytochem. Rev. 2018, 17, 1329–1343.

9. Klukas, C.; Chen, D.; Pape, J.-M. Integrated analysis platform: An open-source information system for high-throughput plant phenotyping. Plant Physiol. 2014, 165, 506–518.

10. Kipp, S.; Mistele, B.; Baresel, P.; Schmidhalter, U. High-throughput phenotyping early plant vigour of winter wheat. Eur. J. Agron. 2014, 52, 271–278.

11. Winterhalter, L.; Mistele, B.; Jampatong, S.; Schmidhalter, U. High throughput phenotyping of canopy water mass and canopy temperature in well-watered and drought stressed tropical maize hybrids in the vegetative stage. Eur. J. Agron. 2011, 35, 22–32.

12. Holman, F.; Riche, A.; Michalski, A.; Castle, M.; Wooster, M.; Hawkesford, M. High throughput field phenotyping of wheat plant height and growth rate in field plot trials using UAV based remote sensing. Remote Sens. 2016, 8, 1031.

13. Bucksch, A.; Burridge, J.; York, L.M.; Das, A.; Nord, E.; Weitz, J.S.; Lynch, J.P. Image-based high-throughput field phenotyping of crop roots. Plant Physiol. 2014, 166, 470–486.

14. Whan, A.P.; Smith, A.B.; Cavanagh, C.R.; Ral, J.-P.F.; Shaw, L.M.; Howitt, C.A.; Bischof, L. GrainScan: a low cost, fast method for grain size and colour measurements. Plant Methods 2014, 10, 23.

15. Guo, W.; Fukatsu, T.; Ninomiya, S. Automated characterization of flowering dynamics in rice using field-acquired time-series RGB images. Plant Methods 2015, 11, 7.

16. Sadeghi-Tehran, P.; Sabermanesh, K.; Virlet, N.; Hawkesford, M.J. Automated method to determine two critical growth stages of wheat: heading and flowering. Front. Plant Sci. 2017, 8.

17. Hočevar, M.; Širok, B.; Godeša, T.; Stopar, M. Flowering estimation in apple orchards by image analysis. Precis. Agric. 2014, 15, 466–478.

18. Yahata, S.; Onishi, T.; Yamaguchi, K.; Ozawa, S.; Kitazono, J.; Ohkawa, T.; Yoshida, T.; Murakami, N.; Tsuji, H. A hybrid machine learning approach to automatic plant phenotyping for smart agriculture. In Proceedings of the Neural Networks (IJCNN), 2017 International Joint Conference on IEEE, Anchorage, AK, USA, 14–19 May 2017; pp. 1787–1793.

19. Dorj, U.-O.; Lee, M.; Lee, K.; Jeong, G. A novel technique for tangerine yield prediction using flower detection algorithm. Int. J. Pattern Recognit. Artif. Intell. 2013, 27.

20. Thorp, K.R.; Dierig, D.A. Color image segmentation approach to monitor flowering in lesquerella. Ind. Crops Prod. 2011, 34, 1150–1159.

21. Sulik, J.J.; Long, D.S. Spectral considerations for modeling yield of canola. Remote Sens. Environ. 2016, 184, 161–174.

22. Kaur, R.; Porwal, S. An optimized computer vision approach to precise well-bloomed flower yielding prediction using image segmentation. Int. J. Comput. Appl. 2015, 119, 15–20.

23. Kurtulmuş, F.; Kavdir, İ. Detecting corn tassels using computer vision and support vector machines. Expert Syst. Appl. 2014, 41, 7390–7397.

24. Xu, R.; Li, C.; Paterson, A.H.; Jiang, Y.; Sun, S.; Robertson, J.S. Aerial images and convolutional neural network for cotton bloom detection. Front. Plant Sci. 2018, 8.

25. Vandemark, G.J.; Grusak, M.A.; McGee, R.J. Mineral concentrations of chickpea and lentil cultivars and breeding lines grown in the U.S. Pacific Northwest. Crop J. 2018, 6, 253–262.

26. Belay, F.; Gebreslasie, A.; Meresa, H. Agronomic performance evaluation of cowpea [Vigna unguiculata (L.) Walp] varieties in Abergelle District, Northern Ethiopia. Plant Breed. Crop Sci. 2017, 9, 139–143.

27. Annicchiarico, P.; Russi, L.; Romani, M.; Pecetti, L.; Nazzicari, N. Farmer-participatory vs. conventional market-oriented breeding of inbred crops using phenotypic and genome-enabled approaches: A pea case study. Field Crops Res. 2019, 232, 30–39.

28. NatureGate False Flax. Available online: http://www.luontoportti.com/suomi/en/kukkakasvit/false-flax (accessed on 2 August 2019).

29. Zhang, C.; Craine, W.; Davis, J.B.; Khot, L.; Marzougui, A.; Brown, J.; Hulbert, S.; Sankaran, S. Detection of canola flowering using proximal and aerial remote sensing techniques. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping III, Orlando, FL, USA, 15–19 April 2018; Thomasson, J.A., McKee, M., Moorhead, R.J., Eds.; SPIE: Bellingham, WA, USA, 2018; p. 8.

30. McLaren, K. XIII—The development of the CIE 1976 (L* a* b*) uniform colour space and colour-difference formula. J. Soc. Dye. Colour. 1976, 92, 338–341.

31. Arthur, D.; Vassilvitskii, S. K-means++: the advantages of careful seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

32. Horovitz, A.; Cohen, Y. Ultraviolet reflectance characteristics in flowers of crucifers. Am. J. Bot. 1972, 59, 706–713.

33. Chittka, L.; Shmida, A.; Troje, N.; Menzel, R. Ultraviolet as a component of flower reflections, and the colour perception of hymenoptera. Vision Res. 1994, 34, 1489–1508.

34. Briscoe, A.D.; Chittka, L. The evolution of color vision in insects. Annu. Rev. Entomol. 2001, 46, 471–510.

35. Thompson, W.R.; Meinwald, J.; Aneshansley, D.; Eisner, T. Flavonols: Pigments responsible for ultraviolet absorption in nectar guide of flower. Science 1972, 177, 528–530.

36. Gronquist, M.; Bezzerides, A.; Attygalle, A.; Meinwald, J.; Eisner, M.; Eisner, T. Attractive and defensive functions of the ultraviolet pigments of a flower (Hypericum calycinum). Proc. Natl. Acad. Sci. USA 2001, 98, 13745–13750.

37. Sasaki, K.; Takahashi, T. A flavonoid from Brassica rapa flower as the UV-absorbing nectar guide. Phytochemistry 2002, 61, 339–343.

38. Nunez, M.; Forgan, B.; Roy, C. Estimating ultraviolet radiation at the earth’s surface. Int. J. Biometeorol. 1994, 38, 5–17.

39. Horton, R.; Cano, E.; Bulanon, D.; Fallahi, E. Peach flower monitoring using aerial multispectral imaging. J. Imaging 2017, 3, 2.

40. Zhu, Y.; Cao, Z.; Lu, H.; Li, Y.; Xiao, Y. In-field automatic observation of wheat heading stage using computer vision. Biosyst. Eng. 2016, 143, 28–41.

41. Sharma, R.C. Early generation selection for grain-filling period in wheat. Crop Sci. 1994, 34, 945–948.

42. Daynard, T.B.; Kannenberg, L.W. Relationships between length of the actual and effective grain filling periods and the grain yield of corn. Can. J. Plant Sci. 1976, 56, 237–242.

43. Acquaah, G. Principles of Plant Genetics and Breeding; John Wiley & Sons: Hoboken, NJ, USA, 2009; ISBN 978-1-4443-0901-0.

44. Tsai, V.J.D. A comparative study on shadow compensation of color aerial images in invariant color models. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1661–1671.

45. Chen, W.; Er, M.J.; Wu, S. Illumination compensation and normalization for robust face recognition using discrete cosine transform in logarithm domain. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2006, 36, 458–466.

46. KantSingh, K.; Pal, K.J.; Nigam, M. Shadow detection and removal from remote sensing images using NDI and morphological operators. Int. J. Comput. Appl. 2012, 42, 37–40.

47. Devasirvatham, V.; Tan, D.K.Y.; Gaur, P.M.; Raju, T.N.; Trethowan, R.M. High temperature tolerance in chickpea and its implications for plant improvement. Crop Pasture Sci. 2012, 63, 419.

48. Zirgoli, M.H.; Kahrizi, D. Effects of end-season drought stress on yield and yield components of rapeseed (Brassica napus L.) in warm regions of Kermanshah Province. Biharean Biol. 2015, 9, 133–140.

| Crops | Winter Canola | Spring Canola | Camelina | Pea | Chickpea |
|---|---|---|---|---|---|
| Flower size (mm) | 15–20 (dia.) a | 15–20 (dia.) | 3.5–4.5 (dia.) b | 18–27 × 13–19 (L × W) | 7–11 × 8–11 (L × W) |
| Location | Kambitsch Farm, ID | Kambitsch Farm, ID | Cook Farm, WA | Spillman Farm, WA | Spillman Farm, WA |
| Entries | 30 | 44 | 12 | 55 | 21 |
| Replicates | 4 | 4 | 3 or 1 c | 3 | 3 |
| Planting Date | 27 September 2017 | 3 May 2018 | 7 and 25 May, 11 June, 2018 d | 5 May 2018 | 5 May 2018 |
| Data acquisition (DAP) | 229, 236, and 245 | 57 and 67 | 60, 74, and 80 e | 48, 53, and 59 | 48, 53, and 59 |

| Factor | C-RGB | MS1 | MS2 | D-RGB |
|---|---|---|---|---|
| Model | Canon PowerShot SX260 HS, Canon U.S.A. Inc., Melville, NY, USA | Canon ELPH 110/160 HS, LDP LLC, Carlstadt, NJ, USA a | Canon ELPH 130 HS, LDP LLC, Carlstadt, NJ, USA | Camera of DJI Phantom 4 Pro, DJI Inc., LA, CA, USA |
| Spectrum | Visible/R, G, B b | NIR c (680–800 nm), G, B | R, B, NIR (800–900 nm) | Visible/R, G, B |
| Resolution (megapixels) | 12.1 | 16.1/20.0 | 16.0 | 20.0 |
| Focal length used (mm) | 4.5 | 4.3/5.0 | 5.0 | 8.8 |
| GSD d (mm, proximal) | 0.6/0.7 | 0.6/0.5 | 0.6/0.8 | - |
| GSD e (mm, remote) | 5 and 10 | 5 and 11/4 and 7 | - | 4 and 8 |
| Geotagged image | No | No | No | Yes |
| Application | Proximal and remote sensing | Proximal and remote sensing | Proximal sensing | Remote sensing |
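
The ground sample distance (GSD) values in the table follow from camera geometry. A minimal sketch, assuming the standard pinhole relation GSD = distance × pixel pitch / focal length: the 8.8 mm focal length is the D-RGB value from the table, whereas the 13.2 mm sensor width, the 5472-pixel image width, and the 15 m and 30 m distances are assumptions made for illustration and are not stated in the table. The function name `gsd_mm` is hypothetical.

```python
def gsd_mm(distance_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Ground sample distance (mm per pixel) from the pinhole camera relation."""
    pixel_pitch_mm = sensor_width_mm / image_width_px  # size of one pixel on the sensor
    distance_mm = distance_m * 1000.0                  # camera-to-canopy distance in mm
    return distance_mm * pixel_pitch_mm / focal_length_mm

# D-RGB-like setup: focal length from the table; sensor width, image width,
# and distances are assumed values, not taken from the source.
for height_m in (15, 30):
    print(height_m, "m ->", round(gsd_mm(height_m, 8.8, 13.2, 5472), 1), "mm/pixel")
```

Under these assumptions the sketch gives about 4.1 and 8.2 mm per pixel, in line with the 4 and 8 mm listed for D-RGB. It also makes explicit that GSD scales linearly with distance, so doubling the distance halves the number of pixels across each flower.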

| Crop | Feature | C-RGB, Early | C-RGB, Mid | C-RGB, Late | MS1, Early | MS1, Mid | MS1, Late | MS2, Early |
|---|---|---|---|---|---|---|---|---|
| Winter canola | Flower area | 0.82 *** | 0.75 *** | 0.76 *** | 0.79 *** | 0.76 *** | 0.77 *** | 0.50 *** |
| Winter canola | Flowers% | 0.82 *** | 0.75 *** | 0.75 *** | 0.77 *** | 0.73 *** | 0.74 *** | 0.15 ns |
| Spring canola | Flower area | na | 0.62 *** | 0.81 *** | na | 0.62 *** | 0.77 *** | na |
| Spring canola | Flowers% | na | 0.64 *** | 0.80 *** | na | 0.58 *** | 0.77 *** | na |
| Camelina | Flower area | 0.60 *** | 0.27 ns | 0.27 ns | 0.64 *** | 0.36 * | 0.40 * | 0.68 *** |
| Camelina | Flowers% | 0.63 *** | 0.02 ns | 0.25 ns | 0.67 *** | 0.28 ns | 0.41 * | 0.53 *** |
| Pea | Flower area | 0.88 *** | 0.88 *** | 0.58 *** | 0.64 *** | 0.79 *** | 0.56 *** | 0.66 *** |
| Pea | Flowers% | 0.86 *** | 0.89 *** | 0.58 *** | 0.63 *** | 0.80 *** | 0.56 *** | 0.65 *** |
| Chickpea | Flower area | 0.74 *** | 0.74 *** | 0.16 ns | 0.45 *** | 0.28 * | 0.12 ns | 0.25 * |
| Chickpea | Flowers% | 0.61 *** | 0.54 *** | 0.19 ns | 0.28 * | 0.17 ns | 0.26 * | 0.05 ns |
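
The values in the table above read as correlation coefficients paired with significance codes. A minimal sketch of how such an entry could be produced, assuming a Pearson correlation between an image-derived feature and a reference score: the significance thresholds (0.05, 0.01, 0.001), the Fisher z normal approximation for the p-value, the sample data, and all function names are assumptions for illustration, not the statistical procedure of the study.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def approx_p_value(r, n):
    """Two-sided p-value via the Fisher z-transform (normal approximation, n > 3)."""
    if abs(r) >= 1.0:
        return 0.0
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

def significance_code(p):
    """Map a p-value to a code; the thresholds are an assumed common convention."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return "ns"

# Hypothetical per-plot data: image-based flower fraction (%) and a visual rating.
flower_pct = [2.1, 3.4, 5.0, 6.2, 7.9, 9.1, 10.5, 12.0]
visual_score = [1, 2, 2, 3, 4, 4, 5, 5]

r = pearson_r(flower_pct, visual_score)
p = approx_p_value(r, len(flower_pct))
print(f"r = {r:.2f} {significance_code(p)}")
```

An exact test based on the t-distribution would replace `approx_p_value` in practice; the approximation is used here only to keep the sketch dependency-free.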

| Crop | Feature | Altitude | D-RGB, Early | D-RGB, Mid | D-RGB, Late | C-RGB, Early | C-RGB, Mid | C-RGB, Late | MS1, Early | MS1, Mid | MS1, Late |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Winter canola | Flower area | 15 m | 0.84 *** | 0.81 *** | 0.77 *** | 0.81 *** | 0.72 *** | 0.78 *** | 0.82 *** | 0.76 *** | 0.72 *** |
| Winter canola | Flowers% | 15 m | 0.82 *** | 0.80 *** | 0.82 *** | 0.80 *** | 0.71 *** | 0.77 *** | 0.82 *** | 0.72 *** | 0.73 *** |
| Winter canola | Flower area | 30 m | 0.84 *** | 0.79 *** | 0.75 *** | 0.76 *** | 0.73 *** | 0.75 *** | 0.76 *** | 0.70 *** | 0.66 *** |
| Winter canola | Flowers% | 30 m | 0.79 *** | 0.78 *** | 0.79 *** | 0.72 *** | 0.72 *** | 0.72 *** | 0.74 *** | 0.70 *** | 0.68 *** |
| Spring canola | Flower area | 15 m | na | 0.42 *** | 0.72 *** | na | 0.54 *** | 0.77 *** | na | 0.50 *** | 0.66 *** |
| Spring canola | Flowers% | 15 m | na | 0.43 *** | 0.72 *** | na | 0.54 *** | 0.77 *** | na | 0.43 *** | 0.63 *** |
| Spring canola | Flower area | 30 m | na | 0.43 *** | 0.60 *** | na | 0.41 *** | 0.71 *** | na | 0.39 *** | 0.51 *** |
| Spring canola | Flowers% | 30 m | na | 0.46 *** | 0.61 *** | na | 0.40 *** | 0.71 *** | na | 0.40 *** | 0.49 *** |
| Camelina | Flower area | 15 m | 0.36 * | −0.03 ns | −0.40 * | a | a | a | a | a | a |
| Camelina | Flowers% | 15 m | 0.13 ns | −0.24 ns | −0.49 ** | a | a | a | a | a | a |
| Camelina | Flower area | 30 m | 0.40 ** | −0.002 ns | −0.33 * | a | a | a | a | a | a |
| Camelina | Flowers% | 30 m | 0.27 ns | −0.16 ns | −0.31 ns | a | a | a | a | a | a |
| Pea | Flower area | 15 m | na | 0.72 *** | 0.39 *** | na | b | 0.32 *** | na | 0.55 *** | 0.42 *** |
| Pea | Flowers% | 15 m | na | 0.72 *** | 0.39 *** | na | b | 0.32 *** | na | 0.58 *** | 0.42 *** |
| Pea | Flower area | 30 m | na | 0.57 *** | 0.31 *** | na | b | b | na | 0.55 *** | 0.28 *** |
| Pea | Flowers% | 30 m | na | 0.57 *** | 0.32 *** | na | b | b | na | 0.58 *** | 0.28 *** |
| Chickpea | Flower area | 15 m | na | −0.01 ns | 0.08 ns | a | a | a | a | a | a |
| Chickpea | Flowers% | 15 m | na | −0.05 ns | 0.11 ns | a | a | a | a | a | a |
| Chickpea | Flower area | 30 m | na | −0.21 ns | 0.14 ns | a | a | a | a | a | a |
| Chickpea | Flowers% | 30 m | na | −0.21 ns | 0.14 ns | a | a | a | a | a | a |

| Method | Thresholding | k-Means (Unsupervised) | SVM and CNN (Supervised) |
|---|---|---|---|
| Algorithm development | Fast | Very fast | Slow, due to annotation of images and model development |
| Input | Images | Images | SVM: color, morphological, or texture features; CNN: images |
| Training data | No | No | Yes |
| Flower detection per image | Fast | Slow | Fast |
| Example | Current study and [17,20,39] | Current study and [40] | SVM in [15,16,23]; CNN in [18,24] |
| Crops | Apple, peach, pea, lesquerella, canola, camelina, chickpea | Canola, wheat | Rice, wheat, corn, soybean, and cotton |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, C.; Craine, W.A.; McGee, R.J.; Vandemark, G.J.; Davis, J.B.; Brown, J.; Hulbert, S.H.; Sankaran, S. Image-Based Phenotyping of Flowering Intensity in Cool-Season Crops. Sensors 2020, 20, 1450. https://doi.org/10.3390/s20051450
