A Lightweight Intelligent Network Intrusion Detection System Using One-Class Autoencoder and Ensemble Learning for IoT

Abstract

1. Introduction

- The proposed OC-Bi-GRUs-AE model addresses the closed-set limitation of conventional classifiers, which can only recognize the classes seen during training. This makes it better suited to anomaly and novelty detection, so it can deal effectively with unknown cyberattacks.

- The complete model, which combines OC-Bi-GRUs-AE with ensemble learning (EL), mitigates class imbalance in the datasets. It can quickly decide whether a network record is an attack and efficiently identify the attack type.

- The proposed method is portable and performs well on several intrusion detection datasets. In addition, the model copes with unknown attacks by assigning them to the most similar known attack type.

2. Related Work

3. Proposed Methods

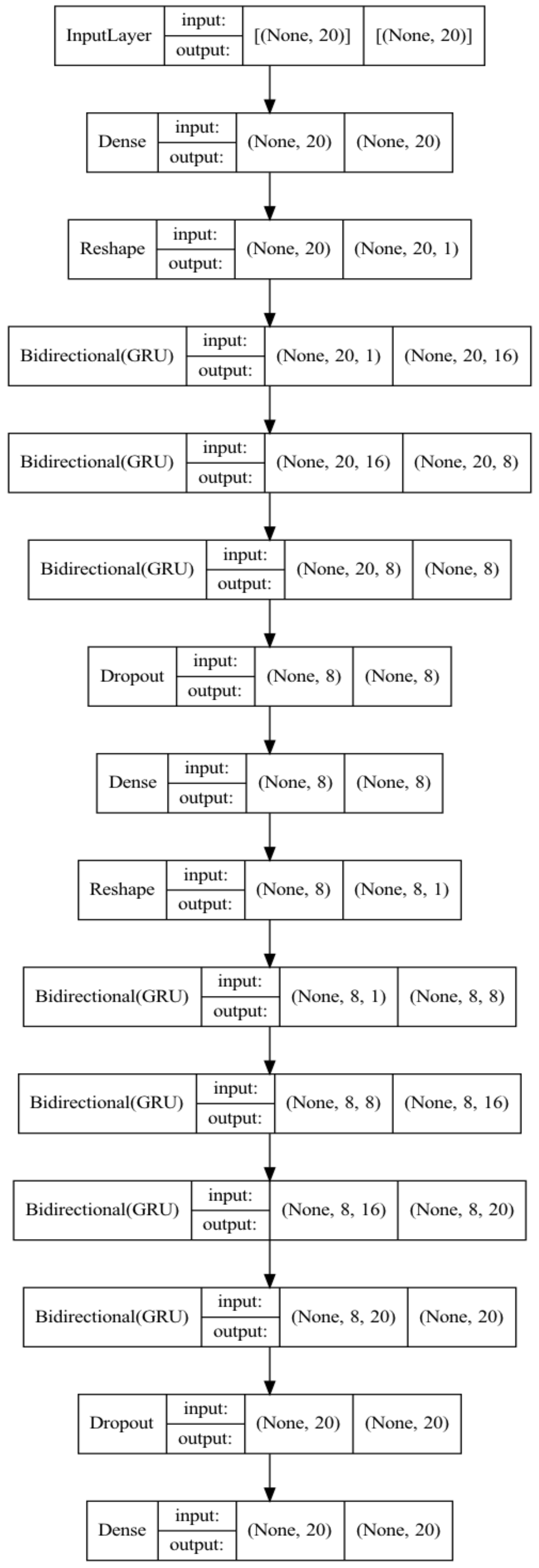

3.1. One-Class Bidirectional GRU Autoencoder

Algorithm 1: One-Class Loss

    Input:  Training set (normal data only)
            Evaluating set (normal and abnormal data, 1:1)
    Output: Normal label or Abnormal label
    Process:
      # Step 1: train the Bi-GRU AE and derive the loss threshold
      model = Bi-GRU AE()                       # init model
      model.fit(TrainData, split = 0.2, batchsize, epoch)
      PredictData = model.predict(TrainData)
      Loss = abs(TrainData - PredictData)       # absolute value
      Loss = sort(Loss)
      Loss_train = max(Loss)
      Return Loss_train
      # Step 2: one-class classification
      PredictData = model.predict(TestData)
      Loss = abs(TestData - PredictData)
      Loss = sort(Loss)
      Loss = max(Loss)
      If Loss > Loss_train:
          Output: Abnormal label
      Else:
          Output: Normal label
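The thresholding logic of Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation. It assumes the loss is the per-sample mean absolute reconstruction error, and it replaces the trained Bi-GRU autoencoder with a hypothetical stand-in, `toy_reconstruct`. All function names are illustrative.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Reconstructor = Callable[[Vector], Vector]


def reconstruction_loss(x: Vector, x_hat: Vector) -> float:
    """Mean absolute error between a sample and its reconstruction (assumed loss)."""
    return sum(abs(a - b) for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(train: List[Vector], reconstruct: Reconstructor) -> float:
    """Step 1: the largest loss observed on normal training data is the threshold."""
    return max(reconstruction_loss(x, reconstruct(x)) for x in train)


def classify(sample: Vector, reconstruct: Reconstructor, threshold: float) -> str:
    """Step 2: a sample whose loss exceeds the threshold is labelled abnormal."""
    loss = reconstruction_loss(sample, reconstruct(sample))
    return "abnormal" if loss > threshold else "normal"


def toy_reconstruct(x: Vector) -> Vector:
    """Hypothetical stand-in for the trained autoencoder: always outputs 0.5."""
    return [0.5 for _ in x]


# Normal traffic in this toy setting has feature values close to 0.5.
normal_train = [[0.5, 0.52, 0.48], [0.49, 0.5, 0.51]]
threshold = fit_threshold(normal_train, toy_reconstruct)

print(classify([0.5, 0.5, 0.5], toy_reconstruct, threshold))
print(classify([0.9, 0.1, 0.9], toy_reconstruct, threshold))
```

Taking the maximum training loss as the threshold means no training sample is flagged, at the cost of sensitivity to outliers in the training set; a percentile would be a common alternative, but that is not what Algorithm 1 describes.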

3.2. Ensemble Learning

4. Experiment and Result

4.1. Dataset

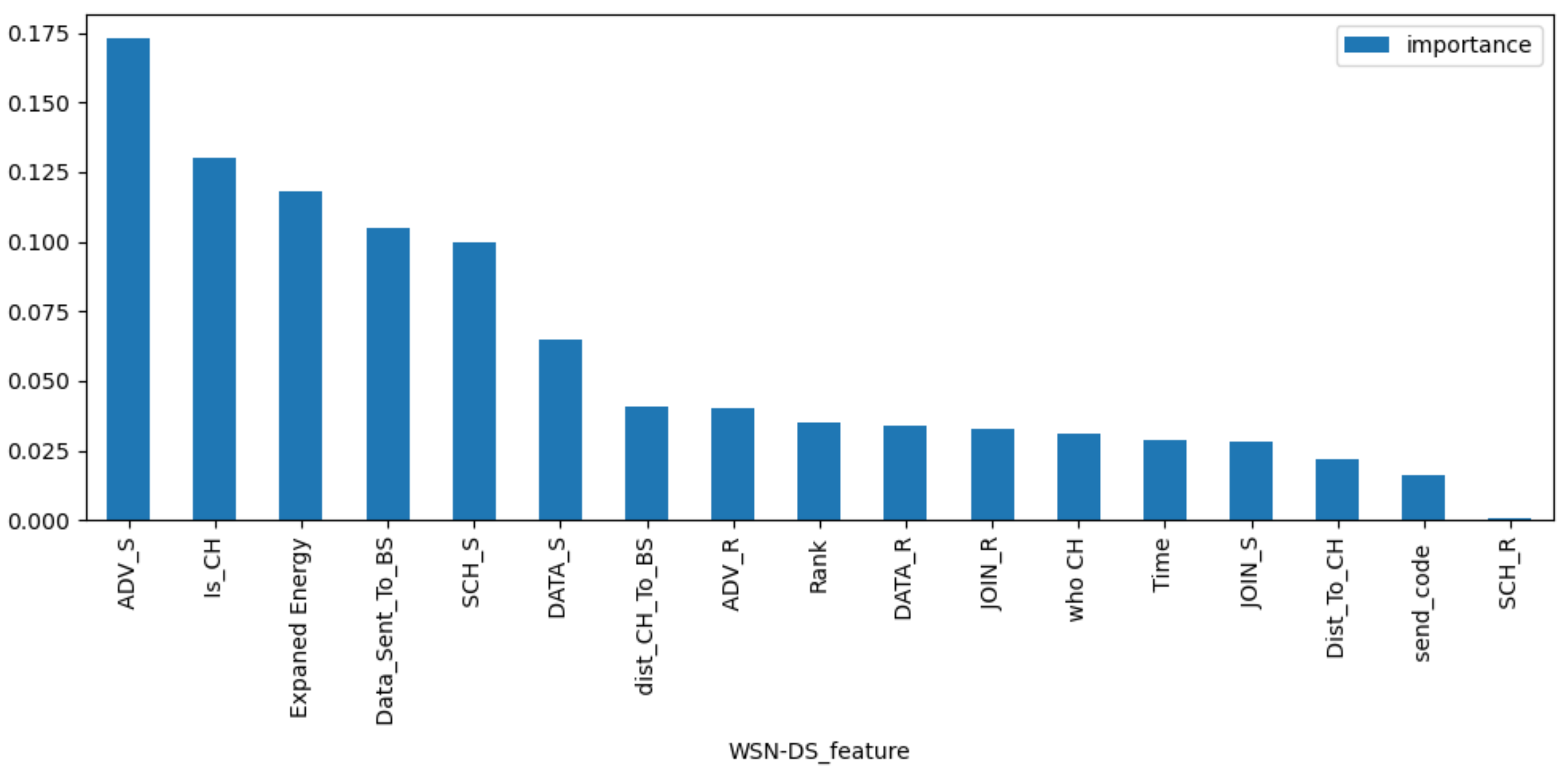

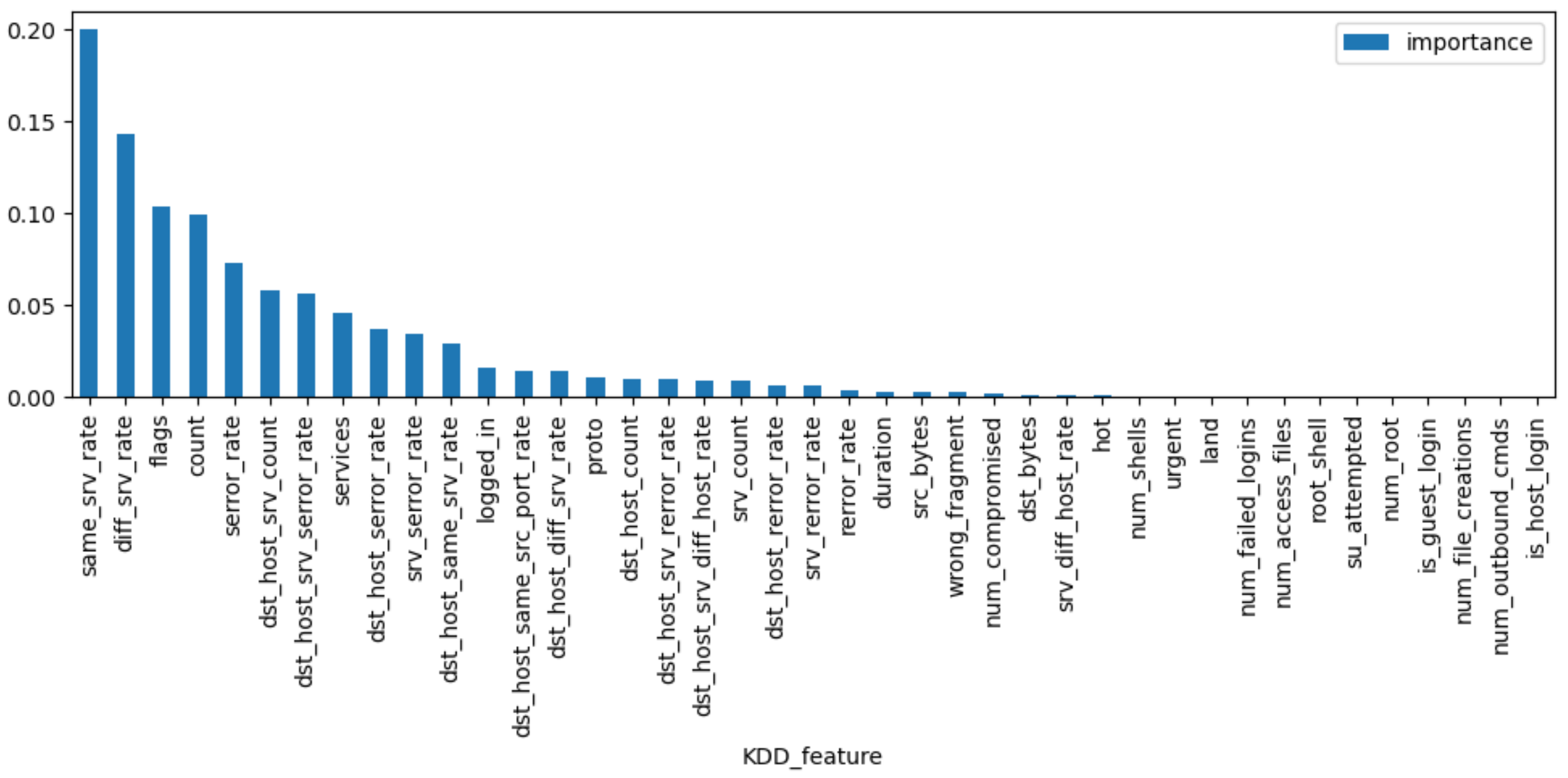

4.2. Feature Extraction, Dataset Split, and Metrics

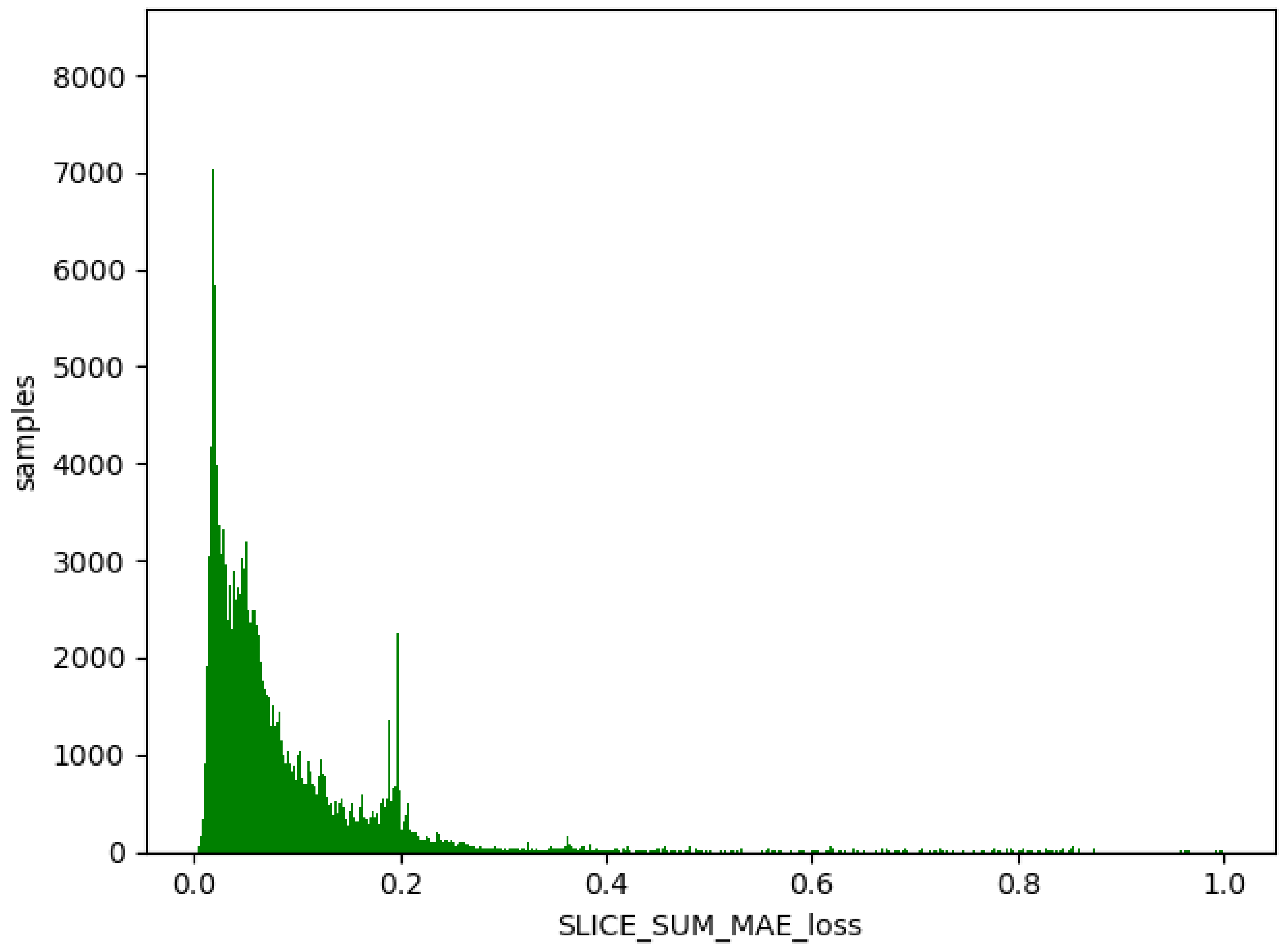

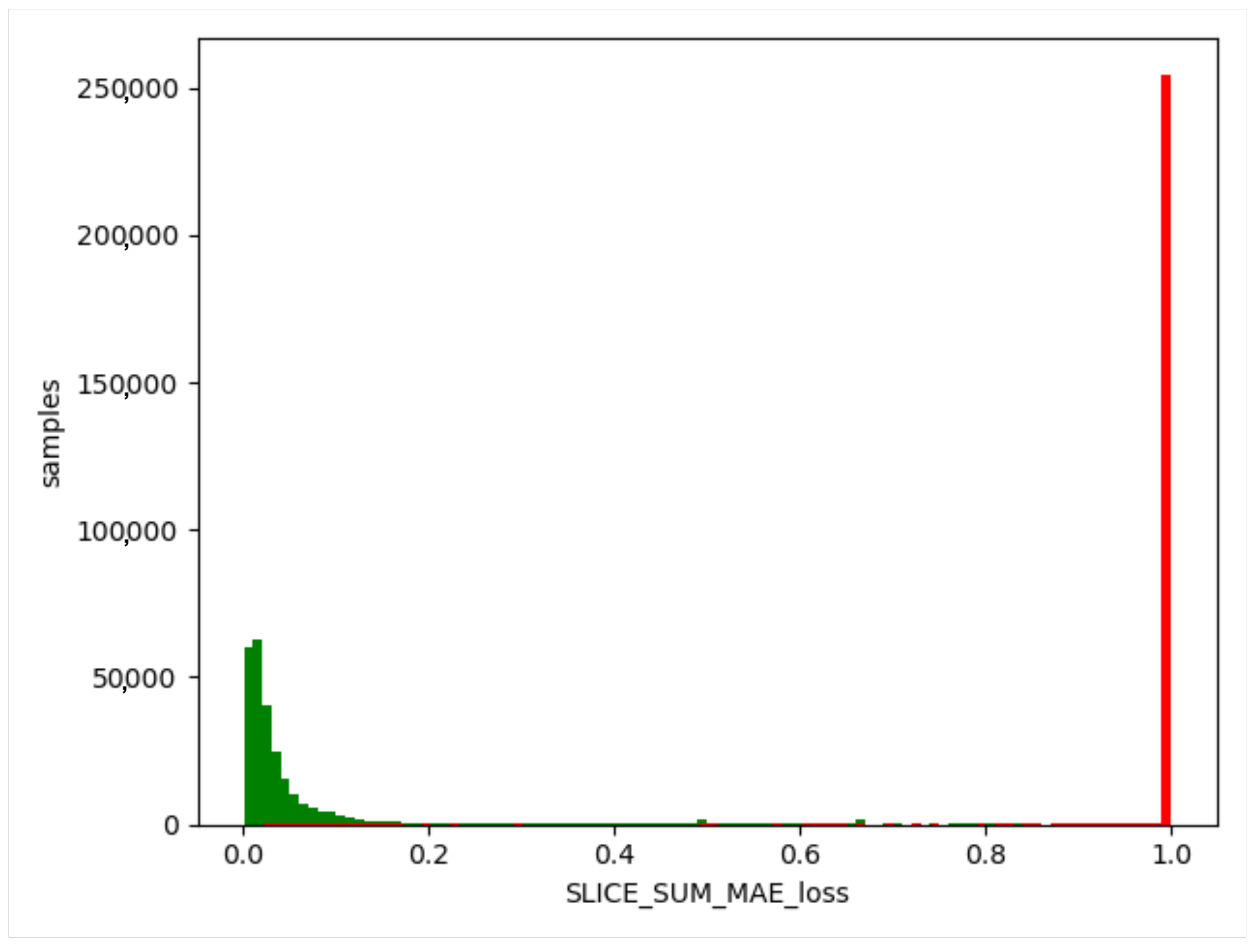

4.3. One-Class Classification

4.4. Zero-Day Attacks Detection

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kanimozhi, V.; Jacob, P. UNSW-NB15 Dataset Feature Selection and Network Intrusion Detection using Deep Learning. Int. J. Recent Technol. Eng. 2019, 7, 2277–3878.

2. Azizjon, M.; Jumabek, A.; Kim, W. 1D CNN based network intrusion detection with normalization on imbalanced data. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; pp. 218–224.

3. Mahalakshmi, G.; Uma, E.; Aroosiya, M.; Vinitha, M. Intrusion Detection System Using Convolutional Neural Network on UNSW NB15 Dataset. In Advances in Parallel Computing Technologies and Applications; IOS Press: Amsterdam, The Netherlands, 2021; pp. 1–8.

4. Yu, Y.; Bian, N. An Intrusion Detection Method Using Few-Shot Learning. IEEE Access 2020, 8, 49730–49740.

5. Sohi, S.M.; Seifert, J.P.; Ganji, F. RNNIDS: Enhancing network intrusion detection systems through deep learning. Comput. Secur. 2021, 102, 102151.

6. Yuan, D. Intrusion Detection for Smart Home Security Based on Data Augmentation with Edge Computing. In Proceedings of the 2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020.

7. Mohammadi, M.; Rashid, T.A.; Karim, S.H.T. A comprehensive survey and taxonomy of the SVM-based intrusion detection systems. J. Netw. Comput. Appl. 2021, 178, 102983.

8. Gu, J.; Lu, S. An effective intrusion detection approach using SVM with naïve Bayes feature embedding. Comput. Secur. 2021, 103, 102158.

9. Shah, S.; Muhuri, P.S.; Yuan, X. Implementing a network intrusion detection system using semi-supervised support vector machine and random forest. In Proceedings of the 2021 ACM Southeast Conference, Virtual Event, 15–17 April 2021; pp. 180–184.

10. Zhang, H. An Effective Deep Learning Based Scheme for Network Intrusion Detection. In Proceedings of the 24th IEEE International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018.

11. Safaldin, M.; Otair, M.; Abualigah, L. Improved binary gray wolf optimizer and SVM for intrusion detection system in wireless sensor networks. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 1559–1576.

12. Frikha, A.; Krompaß, D.; Köpken, H.G. Few-shot one-class classification via meta-learning. arXiv 2020, arXiv:2007.04146.

13. Chen, Y.; Tian, Y.; Pang, G. Deep one-class classification via interpolated gaussian descriptor. arXiv 2021, arXiv:2101.10043.

14. Binbusayyis, A.; Vaiyapuri, T. Unsupervised deep learning approach for network intrusion detection combining convolutional autoencoder and one-class SVM. Appl. Intell. 2021, 51, 7094–7108.

15. Alazzam, H.; Sharieh, A.; Sabri, K.E. A lightweight intelligent network intrusion detection system using OCSVM and Pigeon inspired optimizer. Appl. Intell. 2022, 52, 3527–3544.

16. Mahfouz, A.M.; Abuhussein, A.; Venugopal, D. Network intrusion detection model using one-class support vector machine. In Advances in Machine Learning and Computational Intelligence: Proceedings of ICMLCI 2019–2021; Springer: Singapore, 2021; pp. 79–86.

17. Yang, K.; Kpotufe, S.; Feamster, N. An Efficient One-Class SVM for Anomaly Detection in the Internet of Things. arXiv 2021, arXiv:2104.11146.

18. Verkerken, M.; D’hooge, L.; Wauters, T. Towards model generalization for intrusion detection: Unsupervised machine learning techniques. J. Netw. Syst. Manag. 2022, 30, 12.

19. Abdelmoumin, G.; Rawat, D.B.; Rahman, A. On the Performance of Machine Learning Models for Anomaly-Based Intelligent Intrusion Detection Systems for the Internet of Things. IEEE Internet Things J. 2022, 9, 4280–4290.

20. Chalapathy, R.; Menon, A.K.; Chawla, S. Anomaly detection using one-class neural networks. arXiv 2018, arXiv:1802.06360.

21. Gupta, P.; Ghatole, Y.; Reddy, N. Stacked Autoencoder based Intrusion Detection System using One-Class Classification. In Proceedings of the 11th International Conference on Cloud Computing, Noida, India, 28–29 January 2021; pp. 643–648.

22. Dong, X.; Taylor, C.J. Defect Classification and Detection Using a Multitask Deep One-Class CNN. IEEE Trans. Autom. Sci. Eng. 2021, 19, 1719–1730.

23. Wang, T.; Cao, J.; Lai, X. Hierarchical One-Class Classifier With Within-Class Scatter-Based Autoencoders. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3770–3776.

24. Song, Y.; Hyun, S.; Cheong, Y.G. Analysis of Autoencoders for Network Intrusion Detection. Sensors 2021, 21, 4294.

25. Ghorbani, A.; Fakhrahmad, S.M. A Deep Learning Approach to Network Intrusion Detection Using a Proposed Supervised Sparse Auto-encoder and SVM. Iran. J. Sci. Technol. Trans. Electr. Eng. 2022, 46, 829–846.

26. Long, C.; Xiao, J.P.; Wei, J. Autoencoder ensembles for network intrusion detection. In Proceedings of the 24th International Conference on Advanced Communication Technology (ICACT), Phoenix, Pyeongchang, 13–16 February 2022; pp. 323–333.

27. Husain, A.; Salem, A.; Jim, C.; Dimitoglou, G. Development of an efficient network intrusion detection model using extreme gradient boosting (XGBoost) on the UNSW-NB15 dataset. In Proceedings of the 2019 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Ajman, United Arab Emirates, 10–12 December 2019; pp. 1–7.

28. Hussein, S.A.; Mahmood, A.A.; Oraby, E.O. Network Intrusion Detection System Using Ensemble Learning Approaches. Technology 2021, 18, 962–974.

29. Wang, Y.; Wang, J. Intrusion Detection Model of Internet of Things Based on lightGBM. IEICE Trans. Commun. 2023. Available online: https://ssrn.com/abstract=3993056 (accessed on 20 February 2023).

30. Khan, M.A.; Khan Khattk, M.A.; Latif, S.; Shah, A.A.; Ur Rehman, M.; Boulila, W.; Driss, M.; Ahmad, J. Voting classifier-based intrusion detection for iot networks. In Advances on Smart and Soft Computing: Proceedings of ICACIn 2021–2022; Springer: Singapore, 2022; pp. 313–328.

31. Jiaqi, L.; Zhifeng, Z. AI-Based Two-Stage Intrusion Detection for Software Defined IoT Networks. IEEE Internet Things J. 2019, 6, 2093–2102.

32. Saba, T.; Sadad, T.; Rehman, A. Intrusion detection system through advance machine learning for the internet of things networks. IT Prof. 2021, 23, 58–64.

33. Yao, W.; Hu, L. A Two-Layer Soft-Voting Ensemble Learning Model For Network Intrusion Detection. In Proceedings of the 52nd Annual IEEE/IFIP International Conference on Dependable Systems and Networks Workshops (DSN-W), Baltimore, MD, USA, 27–30 June 2022; pp. 155–161.

34. Tian, Q.; Han, D.; Hsieh, M.Y. A two-stage intrusion detection approach for software-defined IoT networks. Soft Comput. 2021, 25, 10935–10951.

35. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

36. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference ACM, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

37. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, Long Beach, CA, USA, 4–9 December 2017.

38. Almomani, I.; Al-Kasasbeh, B.; Al-Akhras, M. WSN-DS: A dataset for intrusion detection systems in wireless sensor networks. J. Sensors 2016, 2016, 4731953.

39. Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, ACT, Australia, 10–12 November 2015; pp. 1–6.

40. Moustafa, N.; Slay, J. The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 data set and the comparison with the KDD99 data set. Inf. Secur. J. Glob. Perspect. 2016, 25, 18–31.

41. Moustafa, N.; Slay, J. The significant features of the UNSW-NB15 and the KDD99 data set for network intrusion detection systems. In Proceedings of the 4th International Workshop on Building Analysis Datasets and Gathering Experience Returns for Security (BADGERS), Kyoto, Japan, 5 November 2015; pp. 25–31.

42. Janarthanan, T.; Zargari, S. Feature selection in UNSW-NB15 and KDDCUP’99 dataset. In Proceedings of the 26th IEEE International Symposium on Industrial Electronics (ISIE), Edinburgh, UK, 19–21 June 2017; pp. 1881–1886.

43. Dong, R.H.; Yan, H.H.; Zhang, Q.Y. An Intrusion Detection Model for Wireless Sensor Network Based on Information Gain Ratio and Bagging Algorithm. Int. J. Netw. Secur. 2020, 22, 218–230.

44. Manal, A.; Bdoor, M. Daniel of Service Attack Detection using Classification Techniques in WSNs. Int. J. Adv. Trends Comput. Sci. Eng. 2019, 8, 266–272.

45. Chandre, P.; Mahalle, P.; Shinde, G. Intrusion prevention system using convolutional neural network for wireless sensor network. Int. J. Artif. Intell. 2022, 11, 504–515.

46. Arkan, A.; Ahmadi, M. An unsupervised and hierarchical intrusion detection system for software-defined wireless sensor networks. J. Supercomput. 2023.

| Study | Method | Feature Selection | Balanced Data | Novelty (Zero-Day) Detection |
|---|---|---|---|---|
| Azizjon, M. [2]; Mahalakshmi, G. [3] | CNN | No | No | No |
| Yu, Y. [4] | FSL | No | Yes | No |
| Sohi, S.M. [5] | RNN (LSTM) | No | Yes | No |
| Yuan, D. [6] | GAN | No | Yes | No |
| Safaldin, M. [11] | SVM | Yes | No | No |
| Abdelmoumin, G. [19] | OCSVM | Yes | No | No |
| Song, Y. [24] | Stacked autoencoder | No | No | No |
| Khan, M.A. [30] | Voting Ensemble | No | No | No |
| Yao, W. [33] | Soft-Voting Ensemble | No | Yes | No |
| Our approach | OCAE + Ensemble | Yes | Yes | Yes |

| ID | WSN-DS | UNSW-NB15 | KDD99 |
|---|---|---|---|
| 1 | Time | dur | proto |
| 2 | Is_CH | sbytes | services |
| 3 | who CH | dbytes | flags |
| 4 | Dist_To_CH | sttl | src_bytes |
| 5 | ADV_S | dttl | logged_in |
| 6 | ADV_R | Sload | count |
| 7 | JOIN_R | Dload | srv_count |
| 8 | SCH_S | smeansz | serror_rate |
| 9 | Rank | dmeansz | srv_serror_rate |
| 10 | DATA_S | Sjit | same_srv_rate |
| 11 | DATA_R | Sintpkt | diff_srv_rate |
| 12 | Data_Sent_To_BS | Dintpkt | dst_host_count |
| 13 | dist_CH_To_BS | tcprtt | dst_host_srv_count |
| 14 | Expaned Energy | synack | dst_host_same_srv_rate |
| 15 | | ackdat | dst_host_diff_srv_rate |
| 16 | | ct_state_ttl | dst_host_same_src_port_rate |
| 17 | | ct_srv_src | dst_host_srv_diff_host_rate |
| 18 | | ct_srv_dst | dst_host_srv_serror_rate |
| 19 | | ct_dst_src_ltm | dst_host_rerror_rate |
| 20 | | service | dst_host_srv_rerror_rate |

| Dataset | Data Split | Normal Data | Attack Data |
|---|---|---|---|
| WSN-DS | Training set | 302,921 | 0 |
| WSN-DS | Evaluating set | 29,116 | 29,116 |
| UNSW-NB15 | Training set | 1,862,200 | 0 |
| UNSW-NB15 | Evaluating set | 75,691 | 75,691 |
| KDD99 | Training set | 550,652 | 0 |
| KDD99 | Evaluating set | 262,152 | 262,152 |

| | Predicted: Attack | Predicted: Normal |
|---|---|---|
| True: Attack | TP | FN |
| True: Normal | FP | TN |

| Dataset | Loss | Model_Size |
|---|---|---|
| WSN-DS | 0.017 | 338 KB |
| UNSW-NB15 | 0.012 | 1.1 MB |
| KDD99 | 0.008 | 423 KB |

| WSN-DS | Predicted: Attack | Predicted: Normal |
|---|---|---|
| True: Attack | 28,693 | 423 |
| True: Normal | 793 | 28,323 |

| UNSW-NB15 | Predicted: Attack | Predicted: Normal |
|---|---|---|
| True: Attack | 74,189 | 1502 |
| True: Normal | 135 | 75,556 |

| KDD99 | Predicted: Attack | Predicted: Normal |
|---|---|---|
| True: Attack | 257,505 | 4647 |
| True: Normal | 4656 | 257,496 |

| Evaluating Set | Accuracy | Precision | Recall | F1_Score |
|---|---|---|---|---|
| WSN-DS | 0.9791 | 0.9792 | 0.9854 | 0.9792 |
| UNSW-NB15 | 0.9892 | 0.9893 | 0.9802 | 0.9891 |
| KDD99 | 0.9823 | 0.9823 | 0.9823 | 0.9823 |
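The accuracy and recall values reported above follow directly from the confusion-matrix counts (TP, FN, FP, TN). The sketch below recomputes them for the WSN-DS evaluating set. The function name and dictionary keys are illustrative. The class-averaged (macro) precision is included because it reproduces the reported precision of 0.9792; that averaging is an inference from the numbers, not something the text states.

```python
def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Compute standard metrics from a 2x2 confusion matrix (attack = positive)."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    recall = tp / (tp + fn)              # share of attacks that were detected
    precision_attack = tp / (tp + fp)    # share of attack alarms that were correct
    precision_normal = tn / (tn + fn)    # same quantity for the normal class
    macro_precision = (precision_attack + precision_normal) / 2
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision_attack": precision_attack,
        "macro_precision": macro_precision,
    }


# WSN-DS evaluating set: 28,693 TP, 423 FN, 793 FP, 28,323 TN.
wsn = binary_metrics(tp=28693, fn=423, fp=793, tn=28323)
for name, value in wsn.items():
    print(f"{name}: {value:.4f}")
```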

| Dataset | Approach | Accuracy | Precision | Recall | F1_Score |
|---|---|---|---|---|---|
| WSN-DS | SVM [44] | 0.96 | - | - | - |
| WSN-DS | CNN [45] | 0.97 | - | - | - |
| WSN-DS | Software-defined [46] | 0.97 | - | - | - |
| WSN-DS | Our approach | 0.9791 | 0.9792 | 0.9854 | 0.9792 |
| UNSW-NB15 | AC-GAN [6] | 0.96 | 0.96 | 0.98 | 0.97 |
| UNSW-NB15 | CAE and OC [14] | 0.94 | - | - | 0.95 |
| UNSW-NB15 | Ensemble [33] | 0.9523 | 0.9658 | 0.9594 | 0.9623 |
| UNSW-NB15 | Our approach | 0.9892 | 0.9893 | 0.9802 | 0.9891 |
| KDD99 | CAE and OC [14] | 0.9158 | - | - | 0.9287 |
| KDD99 | AE and SVM [25] | 0.9472 | - | - | - |
| KDD99 | Stacked AE [21] | 0.9817 | 0.9918 | 0.9522 | 0.9715 |
| KDD99 | Our approach | 0.9823 | 0.9823 | 0.9823 | 0.9823 |

| Dataset | Anomaly Data | Grayhole | Blackhole | TDMA | Flooding (0-Day Attack) |
|---|---|---|---|---|---|
| WSN-DS | Training set | 10,063 | 5393 | 5312 | 0 |
| WSN-DS | Evaluating set | 2539 | 1374 | 1278 | 0 |
| WSN-DS | Novelty set (0-Day Attack Set) | 0 | 0 | 0 | 3157 |
| WSN-DS | Total | 12,602 | 6767 | 6590 | 3157 |

| Dataset | Anomaly Data | Dos | Probe | Access | Privilege (0-Day Attack) |
|---|---|---|---|---|---|
| KDD99 | Training set | 197,767 | 11,106 | 808 | 0 |
| KDD99 | Evaluating set | 49,493 | 2736 | 191 | 0 |
| KDD99 | Novelty set (0-Day Attack Set) | 0 | 0 | 0 | 51 |
| KDD99 | Total | 247,260 | 13,842 | 999 | 51 |

| Dataset | Anomaly Label | Training set | Evaluating set | Novelty set (0-Day Attack Set) | Total |
|---|---|---|---|---|---|
| UNSW-NB15 | Exploits | 20,415 | 4979 | 0 | 25,394 |
| UNSW-NB15 | Fuzzers | 14,881 | 3809 | 0 | 18,690 |
| UNSW-NB15 | Generic | 13,695 | 3492 | 0 | 17,187 |
| UNSW-NB15 | Reconnaissance | 6752 | 1658 | 0 | 8410 |
| UNSW-NB15 | DoS | 2894 | 723 | 0 | 3617 |
| UNSW-NB15 | Shellcode | 1164 | 282 | 0 | 1446 |
| UNSW-NB15 | Analysis | 352 | 89 | 0 | 441 |
| UNSW-NB15 | Backdoor | 273 | 74 | 0 | 347 |
| UNSW-NB15 | Worms (0-day attack) | 0 | 0 | 159 | 159 |

| Dataset | Method | Dataset_Type | Accuracy | Precision | Recall | F1_Score |
|---|---|---|---|---|---|---|
| WSN-DS | lightGBM | Training set | 0.9971 | 0.9971 | 0.9971 | 0.9971 |
| WSN-DS | lightGBM | Evaluating set | 0.9882 | 0.9884 | 0.9882 | 0.9883 |
| WSN-DS | XGBoost | Training set | 0.9986 | 0.9986 | 0.9986 | 0.9986 |
| WSN-DS | XGBoost | Evaluating set | 0.9909 | 0.991 | 0.9909 | 0.9907 |
| WSN-DS | RandForest | Training set | 0.9998 | 0.9998 | 0.9998 | 0.9998 |
| WSN-DS | RandForest | Evaluating set | 0.9946 | 0.9946 | 0.9946 | 0.9946 |
| WSN-DS | Soft-Voting | Training set | 0.9995 | 0.9995 | 0.9995 | 0.9995 |
| WSN-DS | Soft-Voting | Evaluating set | 0.9934 | 0.9934 | 0.9934 | 0.9934 |
| UNSW-NB15 | lightGBM | Training set | 0.9841 | 0.9843 | 0.9841 | 0.9836 |
| UNSW-NB15 | lightGBM | Evaluating set | 0.9063 | 0.904 | 0.9063 | 0.9004 |
| UNSW-NB15 | XGBoost | Training set | 0.9665 | 0.9672 | 0.9665 | 0.9657 |
| UNSW-NB15 | XGBoost | Evaluating set | 0.9081 | 0.908 | 0.9081 | 0.9021 |
| UNSW-NB15 | RandForest | Training set | 0.992 | 0.992 | 0.992 | 0.992 |
| UNSW-NB15 | RandForest | Evaluating set | 0.8888 | 0.8859 | 0.8888 | 0.8825 |
| UNSW-NB15 | Soft-Voting | Training set | 0.9883 | 0.9884 | 0.9883 | 0.9881 |
| UNSW-NB15 | Soft-Voting | Evaluating set | 0.9074 | 0.9065 | 0.9073 | 0.9011 |
| KDD99 | lightGBM | Training set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | lightGBM | Evaluating set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | XGBoost | Training set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | XGBoost | Evaluating set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | RandForest | Training set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | RandForest | Evaluating set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | Soft-Voting | Training set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
| KDD99 | Soft-Voting | Evaluating set | 0.9999 | 0.9999 | 0.9999 | 0.9999 |
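The Soft-Voting rows combine the three tree-based classifiers. Soft voting is usually implemented by averaging the class-probability vectors of the base models and taking the most probable class. The sketch below illustrates that idea only: equal weights are an assumption, the probability values are made up for the example, and `soft_vote` is an illustrative name, not the authors' code.

```python
from typing import List, Sequence, Tuple


def soft_vote(prob_rows: Sequence[Sequence[float]]) -> Tuple[int, List[float]]:
    """Average per-model class probabilities and return (best class index, averages)."""
    n_models = len(prob_rows)
    n_classes = len(prob_rows[0])
    avg = [sum(p[c] for p in prob_rows) / n_models for c in range(n_classes)]
    best = max(range(n_classes), key=avg.__getitem__)
    return best, avg


# Hypothetical outputs of three base models for one flow over three attack types.
lightgbm_p = [0.6, 0.3, 0.1]
xgboost_p = [0.2, 0.5, 0.3]
forest_p = [0.5, 0.4, 0.1]

label, avg = soft_vote([lightgbm_p, xgboost_p, forest_p])
print(label, [round(v, 3) for v in avg])
```

Because the argmax ranges only over the attack types seen in training, an attack of an unseen type is necessarily assigned to whichever known type receives the highest averaged probability.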

| Dataset | 0-Day Type | Total | Method | Dos | Probe | Access |
|---|---|---|---|---|---|---|
| WSN-DS | Flooding | 3157 | lightGBM | 1513 | 31 | 1613 |
| WSN-DS | Flooding | 3157 | XGBoost | 2739 | 13 | 405 |
| WSN-DS | Flooding | 3157 | RandomForest | 2592 | 29 | 176 |
| WSN-DS | Flooding | 3157 | Soft-Voting | 2591 | 18 | 548 |
| KDD99 | Privilege | 51 | lightGBM | 0 | 0 | 51 |
| KDD99 | Privilege | 51 | XGBoost | 0 | 0 | 51 |
| KDD99 | Privilege | 51 | RandomForest | 0 | 0 | 51 |
| KDD99 | Privilege | 51 | Soft-Voting | 0 | 0 | 51 |

| Novelty Type | Recognition Label | LightGBM | XGBoost | RandomForest | Soft-Voting |
|---|---|---|---|---|---|
| WORMS | Exploits | 136 | 134 | 117 | 136 |
| WORMS | Fuzzers | 12 | 12 | 12 | 12 |
| WORMS | Generic | 11 | 12 | 28 | 11 |
| WORMS | Reconnaissance | 0 | 0 | 0 | 0 |
| WORMS | DoS | 0 | 1 | 0 | 0 |
| WORMS | Shellcode | 0 | 0 | 2 | 0 |
| WORMS | Analysis | 0 | 0 | 0 | 0 |
| WORMS | Backdoor | 0 | 0 | 0 | 0 |
| WORMS | Total | 159 | 159 | 159 | 159 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yao, W.; Hu, L.; Hou, Y.; Li, X. A Lightweight Intelligent Network Intrusion Detection System Using One-Class Autoencoder and Ensemble Learning for IoT. Sensors 2023, 23, 4141. https://doi.org/10.3390/s23084141
