Stingray Sensor System for Persistent Survey of the GEO Belt

Abstract

1. Introduction

2. Design Methodology and System Description

2.1. Goals and Objectives

2.2. System Design

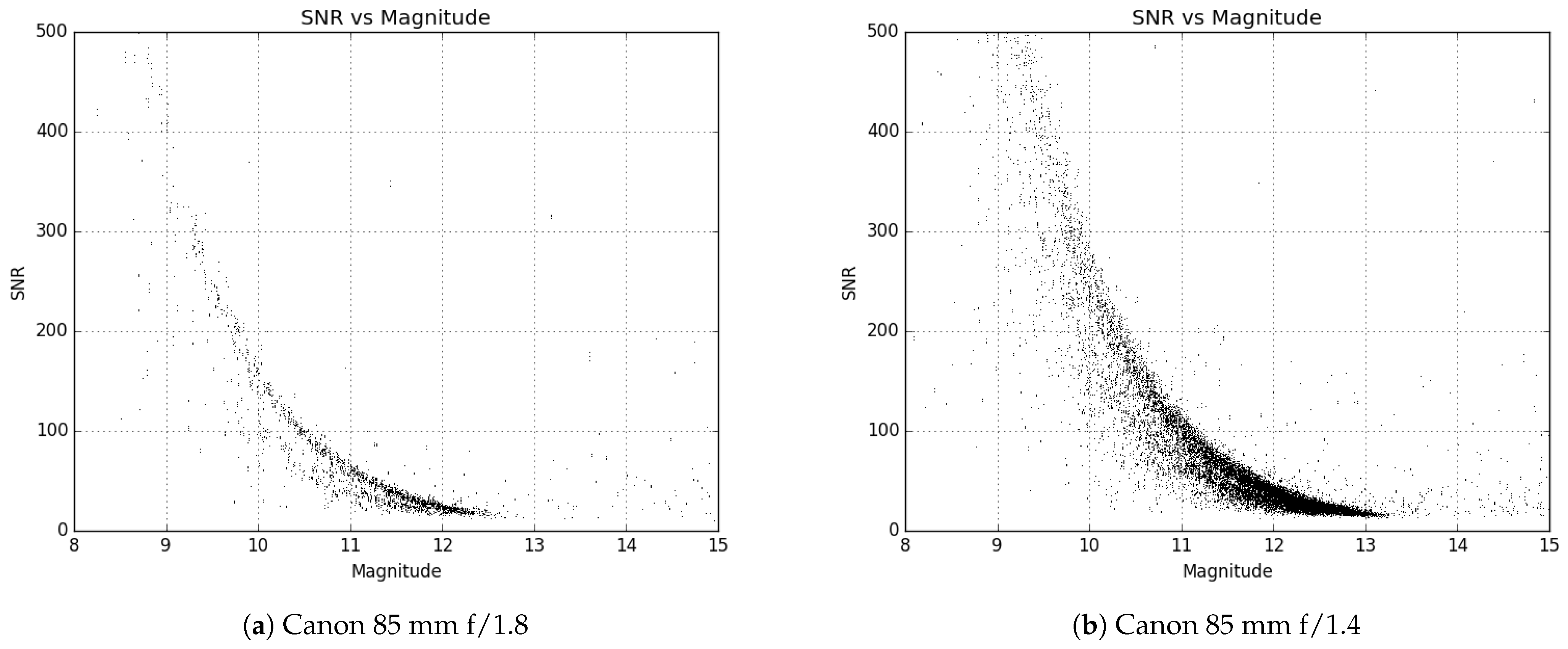

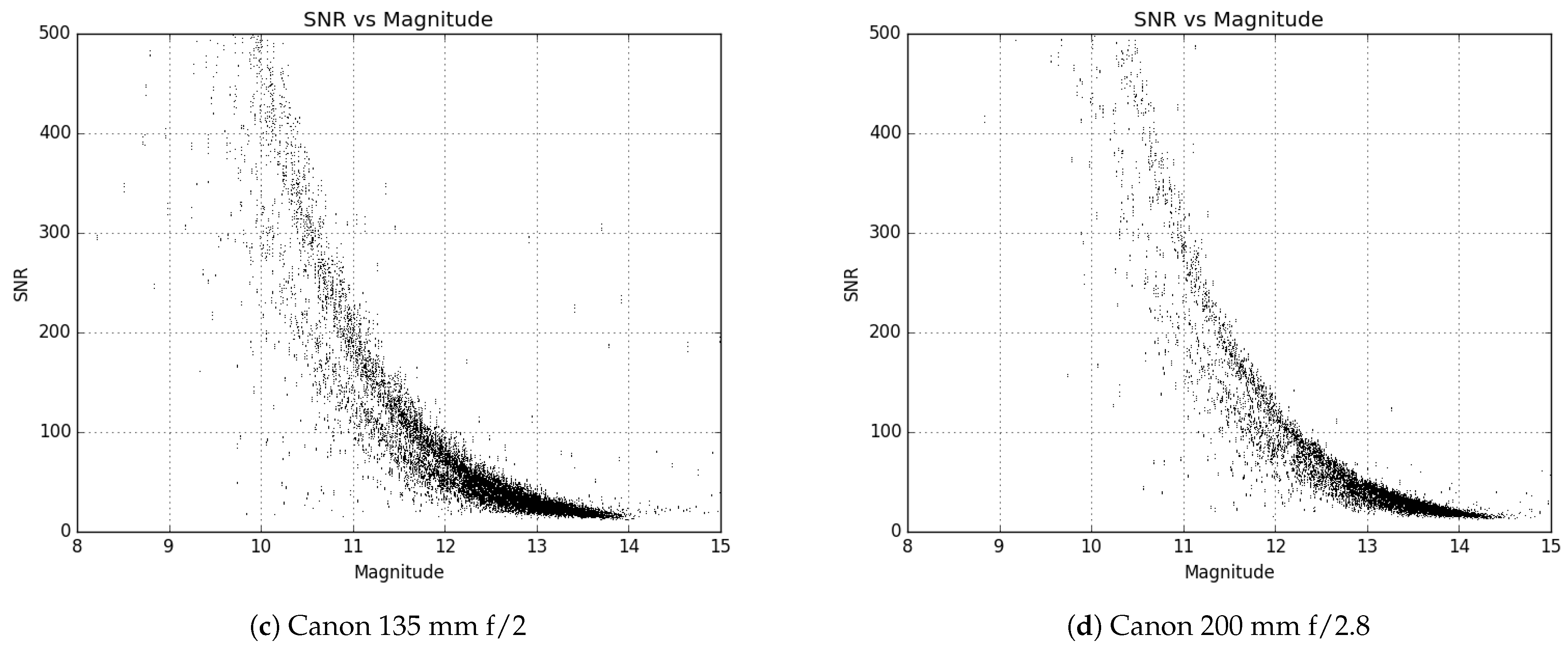

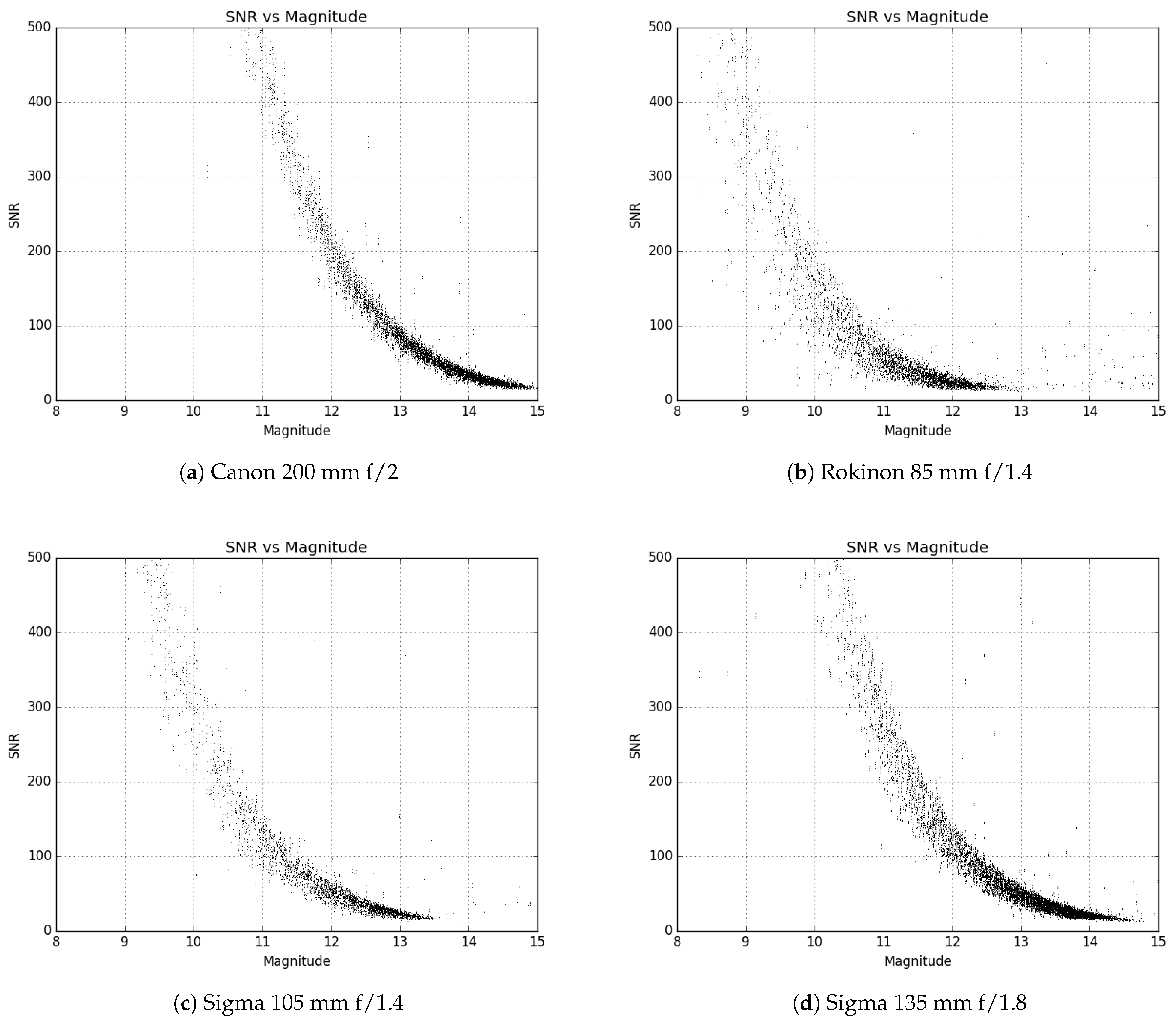

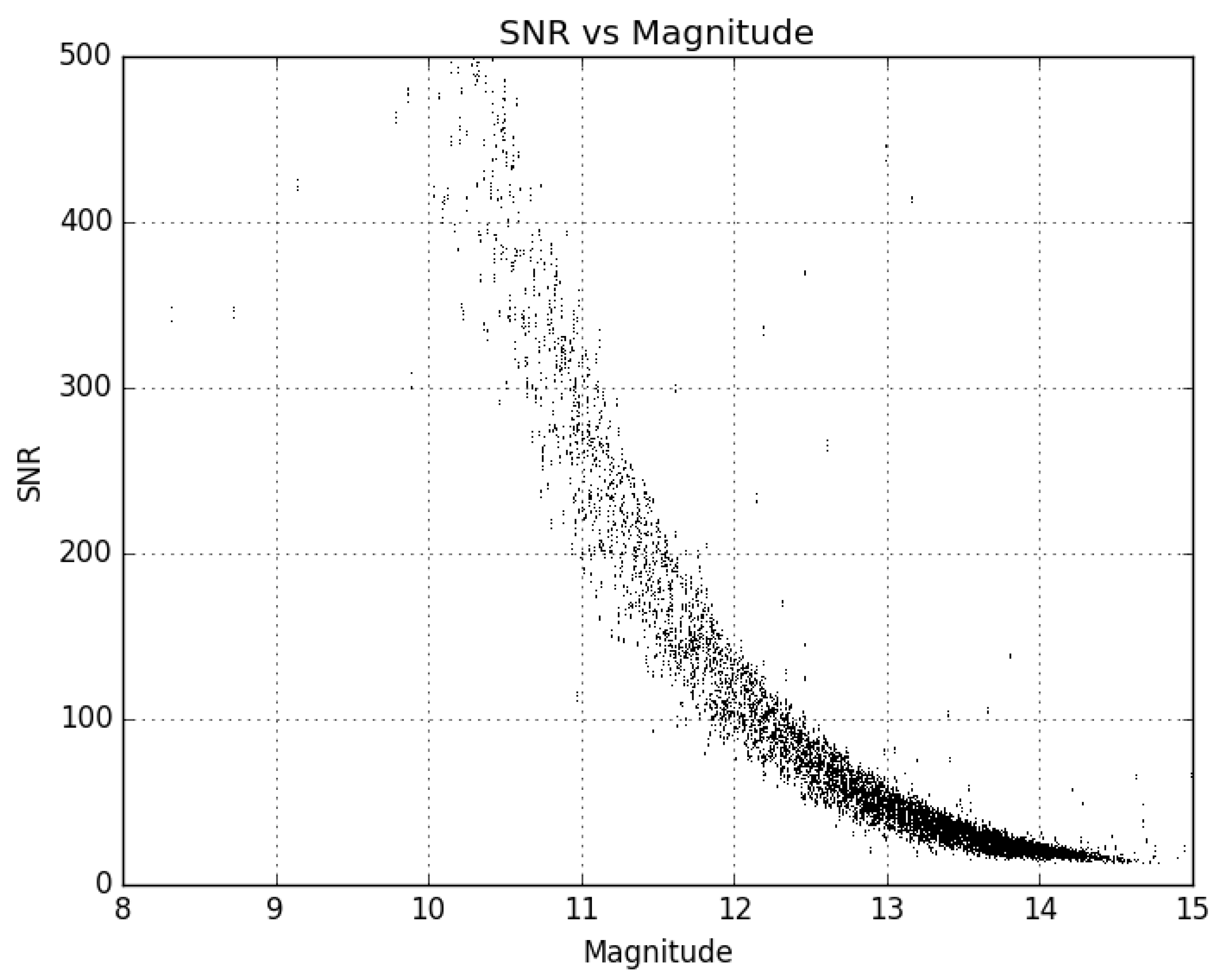

2.2.1. Hardware Trade Study

2.2.2. Stingray Prototype

2.3. System Architecture

2.3.1. Hardware Selection

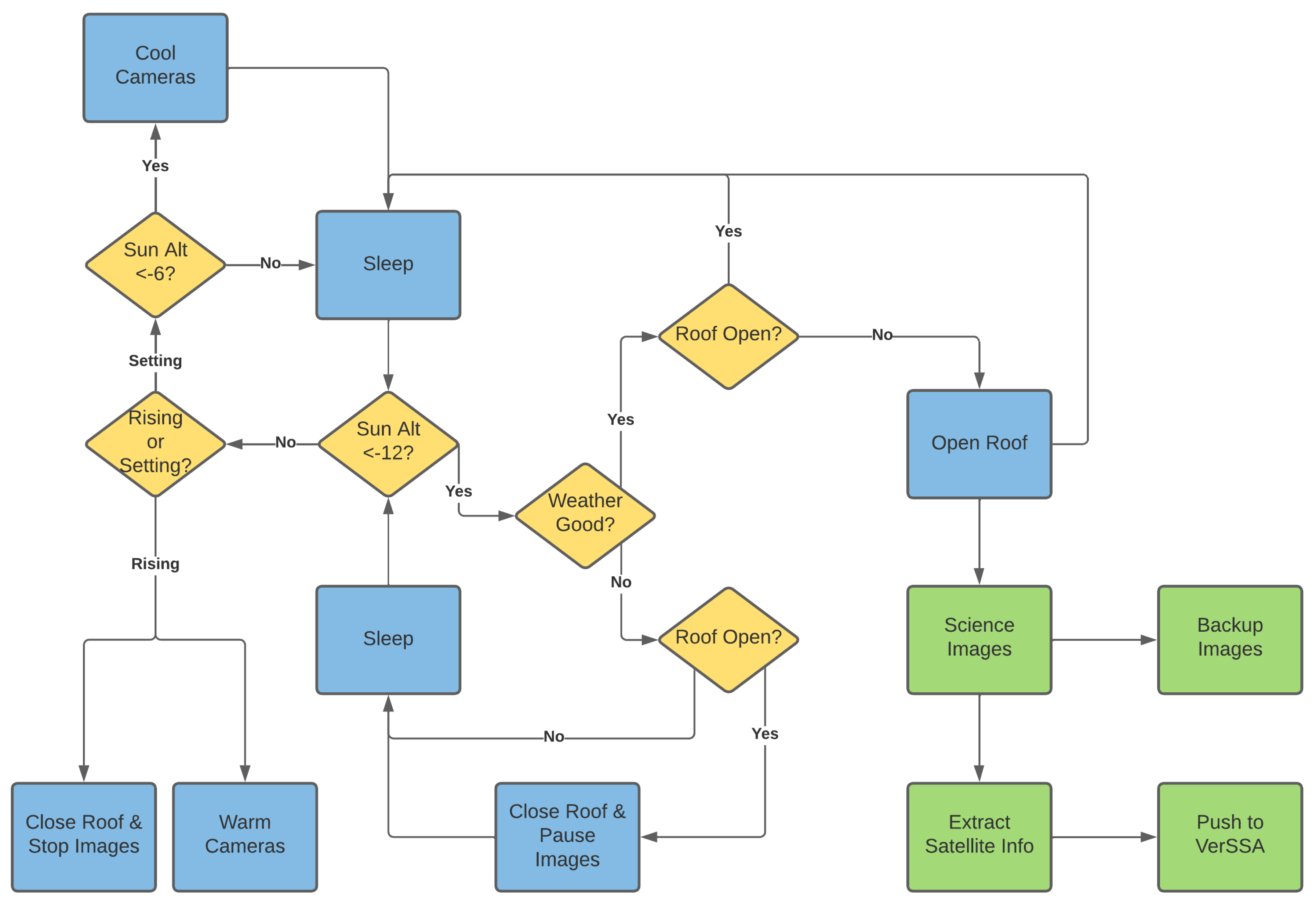

2.3.2. Data Collection

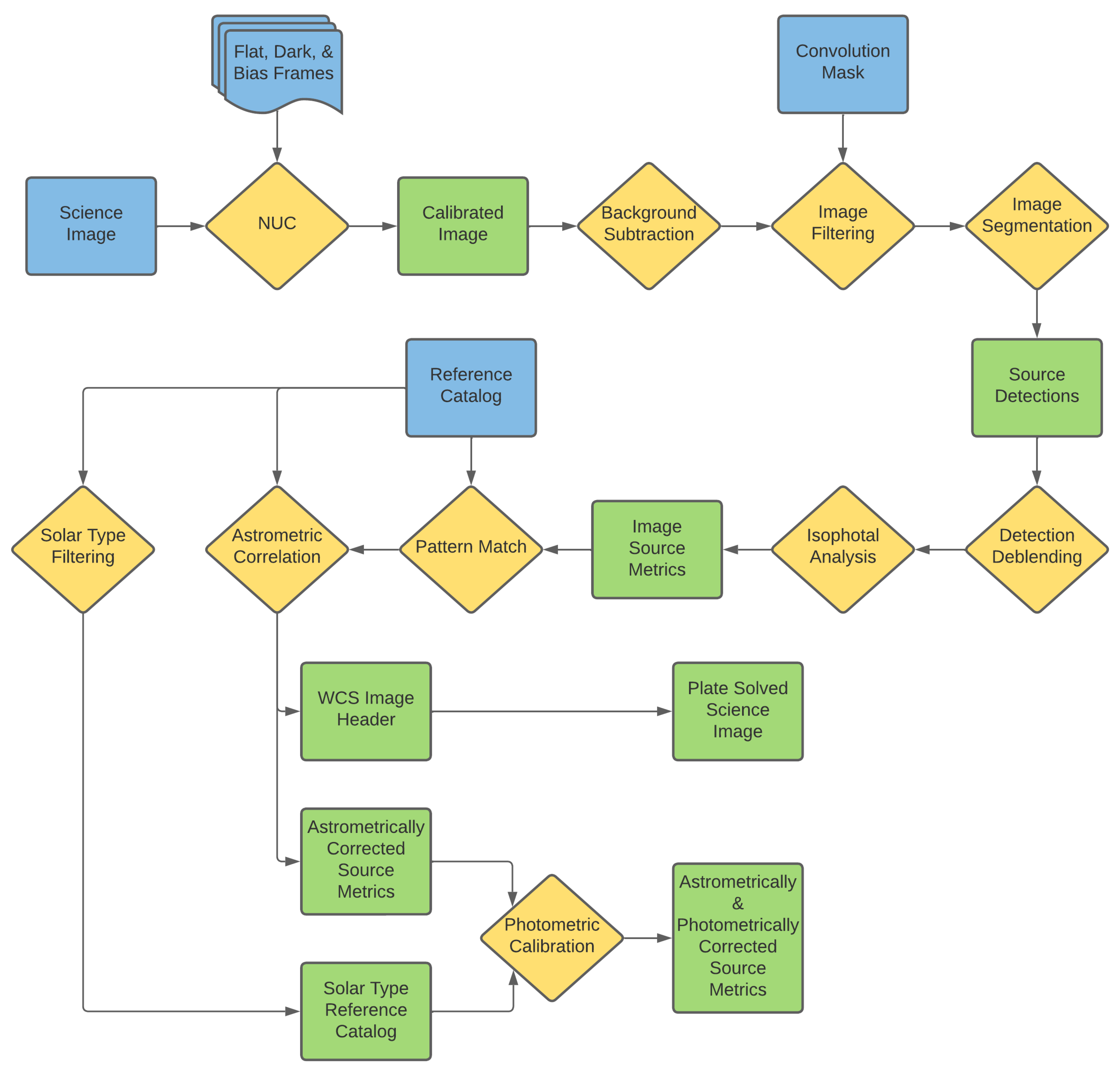

2.3.3. Data Processing and Handling

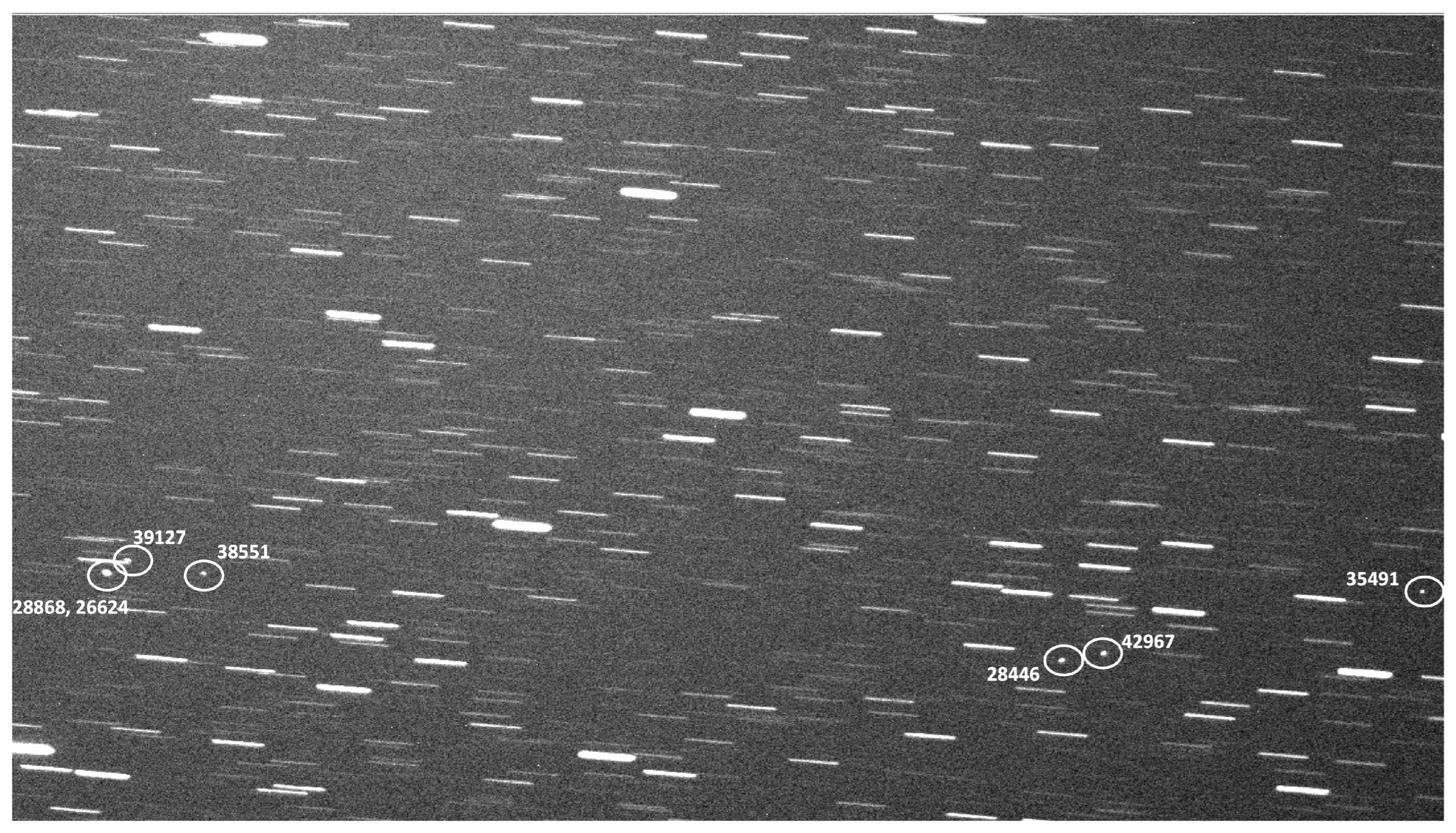

3. Deployment and Performance Evaluation

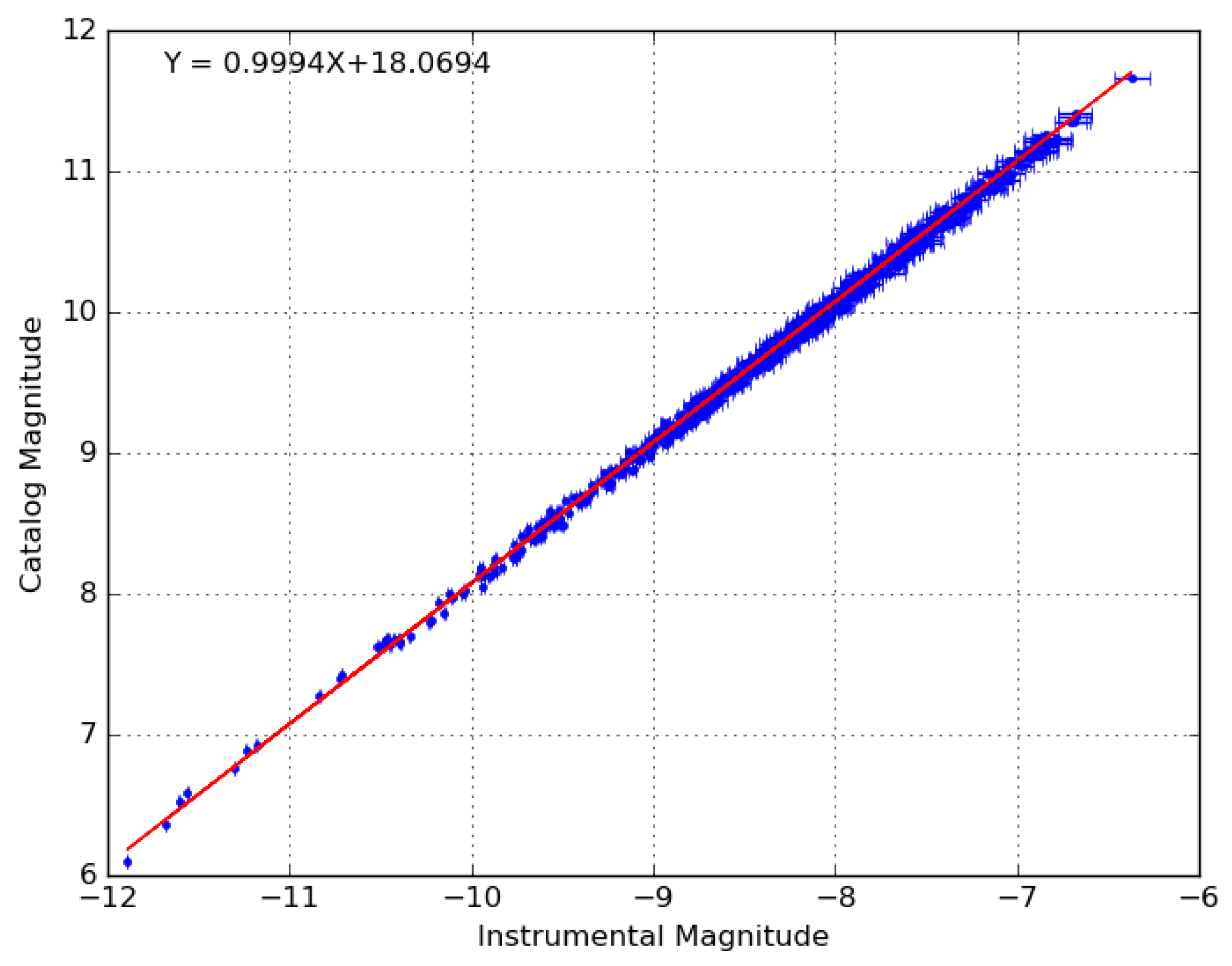

3.1. Commissioning and Calibration

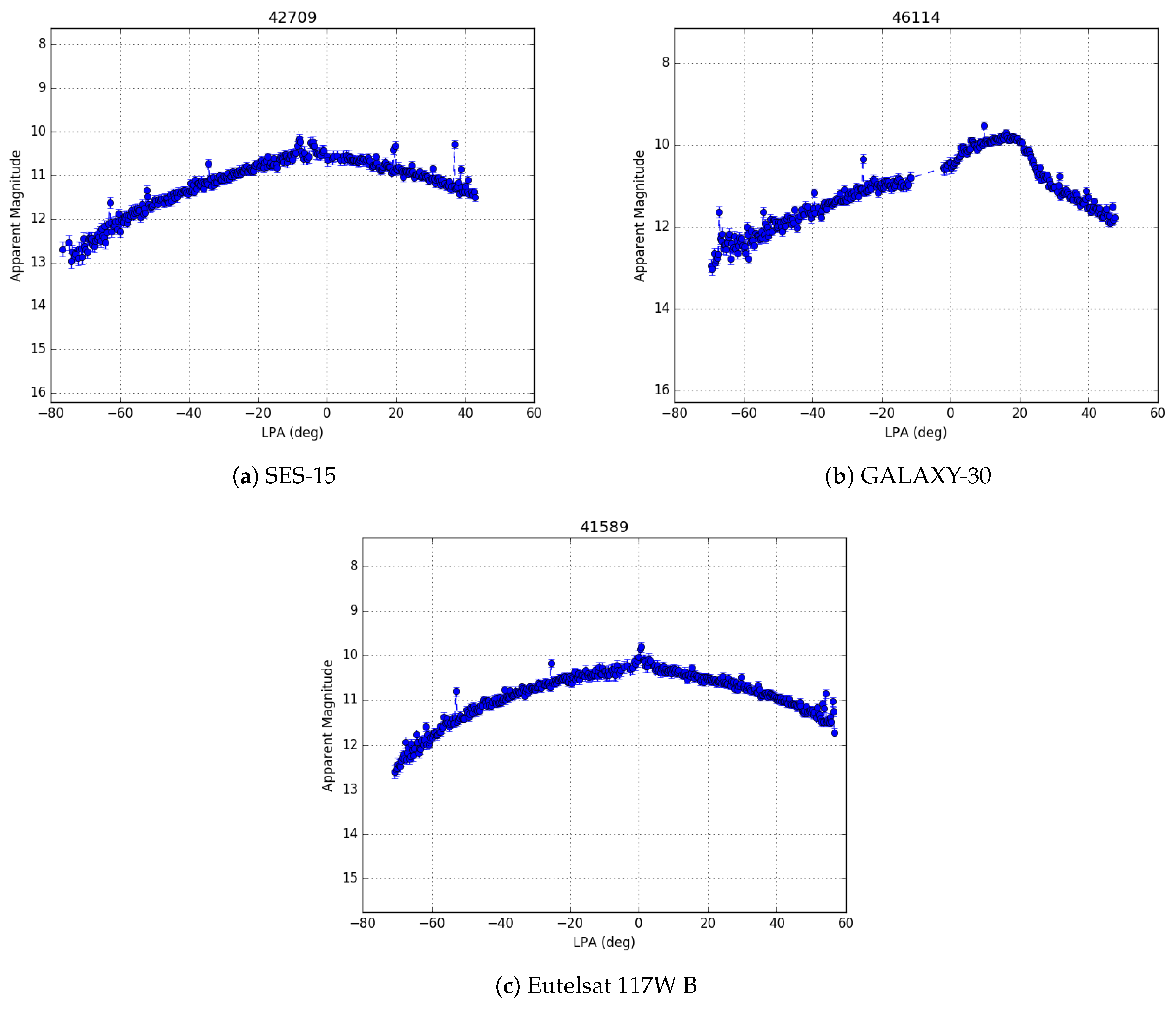

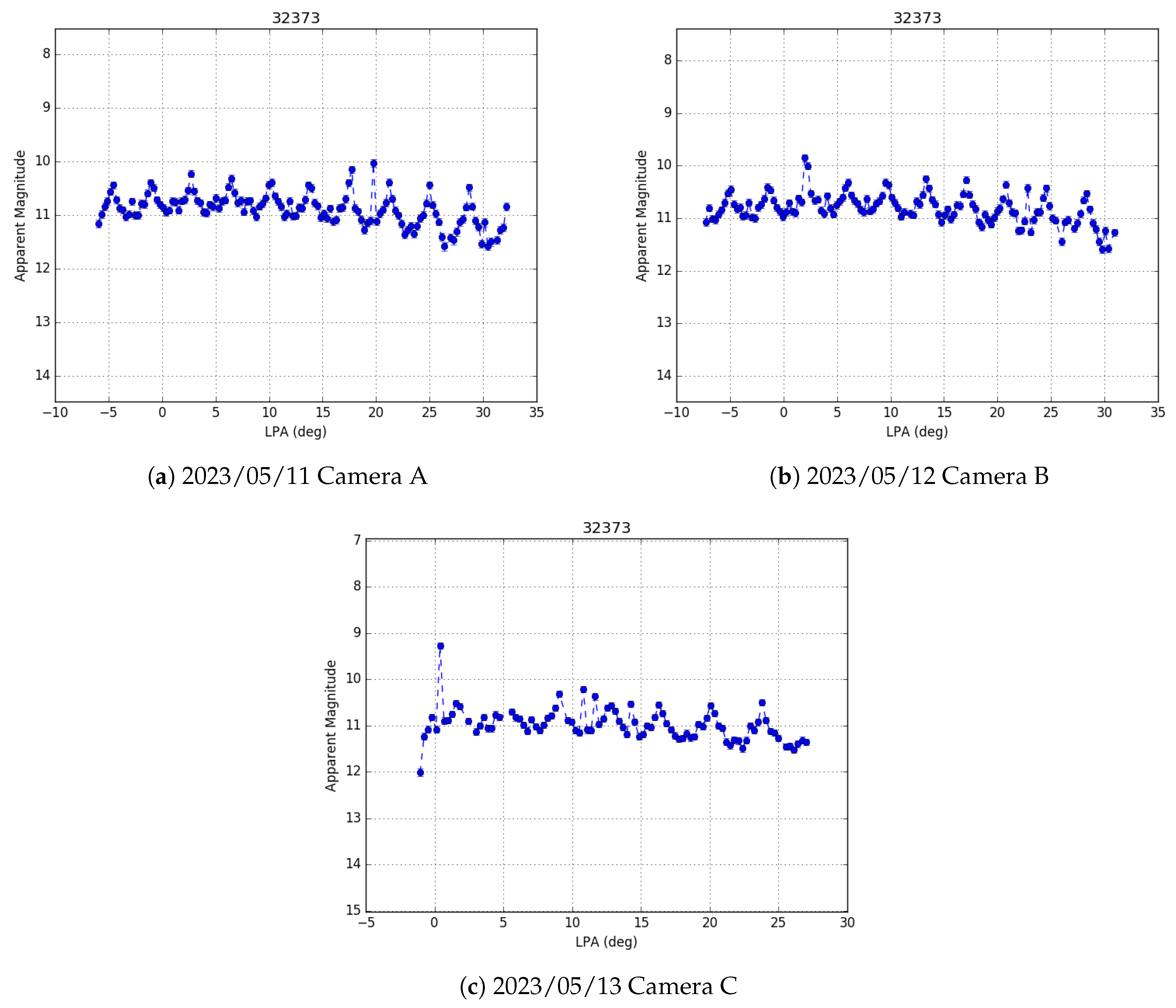

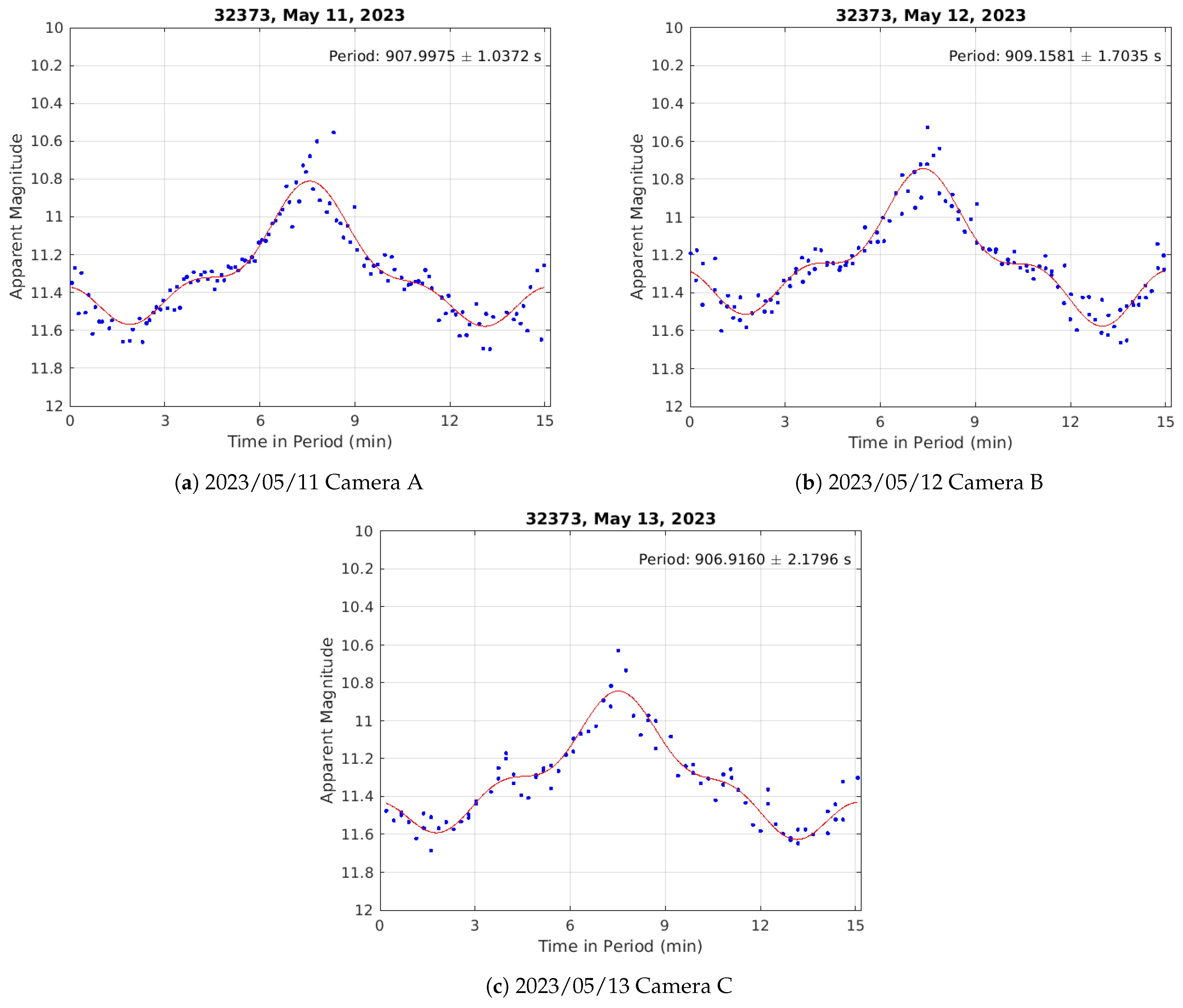

3.2. Sample Light Curves

4. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pelton, J. The Proliferation of Communications Satellites: Gold Rush in the Clarke Orbit. In People in Space: Policy Perspectives for a “Star Wars” Century; Katz, J., Ed.; Springer: Berlin/Heidelberg, Germany, 1985; pp. 98–109.

- Hinks, J.; Linares, R.; Crassidis, J. Attitude Observability from Light Curve Measurements. In Proceedings of the AIAA Guidance, Navigation, and Control (GNC) Conference, Boston, MA, USA, 19–22 August 2013; p. 5005.

- Furfaro, R.; Linares, R.; Reddy, V. Shape Identification of Space Objects via Light Curve Inversion Using Deep Learning Models. In Proceedings of the AMOS Technologies Conference, Maui Economic Development Board, Kihei, Maui, HI, USA, 17–20 September 2019.

- Linares, R.; Furfaro, R.; Reddy, V. Space Objects Classification via Light-Curve Measurements Using Deep Convolutional Neural Networks. J. Astronaut. Sci. 2020, 67, 1063–1091.

- Calef, B.; Africano, J.; Birge, B.; Hall, D.; Kervin, P. Photometric Signature Inversion. In Unconventional Imaging II; Proceedings of the International Society for Optics and Photonics (SPIE), San Diego, CA, USA, 13–17 August 2006; Volume 6307, p. 63070E.

- Cowardin, H.; Seitzer, P.; Abercromby, K.; Barker, E.; Buckalew, B.; Cardona, T.; Krisko, P.; Lederer, S. Observations of Titan IIIC Transtage Fragmentation Debris. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies (AMOS) Conference, Maui, HI, USA, 10–13 September 2013; p. 4112612.

- Wetterer, C.; Linares, R.; Crassidis, J.; Kelecy, T.; Ziebart, M.; Jah, M.; Cefola, P. Refining Space Object Radiation Pressure Modeling with Bidirectional Reflectance Distribution Functions. J. Guid. Control Dyn. 2014, 37, 185–196.

- Cowardin, H.; Anz-Meador, P.; Reyes, J. Characterizing GEO Titan IIIC Transtage Fragmentations Using Ground-Based and Telescopic Measurements. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies (AMOS) Conference 2017, Maui, HI, USA, 19–22 September 2017; Report No. JSC-CN-40379.

- DeMars, K.; Jah, M. Passive Multi-Target Tracking with Application to Orbit Determination for Geosynchronous Objects. In Proceedings of the 19th AAS/AIAA Space Flight Mechanics Meeting, Savannah, GA, USA, 8–12 February 2009; AAS Paper 09-108.

- Aster, R.; Borchers, B.; Thurber, C. Parameter Estimation and Inverse Problems; Elsevier: Amsterdam, The Netherlands, 2018.

- Sanchez, D.; Gregory, S.; Werling, D.; Payne, T.; Kann, L.; Finkner, L.; Payne, D.; Davis, C. Photometric Measurements of Deep Space Satellites. In Proceedings of the Imaging Technology and Telescopes, San Diego, CA, USA, 30 July–4 August 2000; Volume 4091, pp. 164–182.

- Payne, T.; Gregory, S.; Luu, K. Electro-Optical Signatures Comparisons of Geosynchronous Satellites. In Proceedings of the 2006 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2006.

- Payne, T.; Gregory, S.; Luu, K. SSA Analysis of GEOS Photometric Signature Classifications and Solar Panel Offsets. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference, Maui, HI, USA, 10–14 September 2006; p. E73.

- Vrba, F.; DiVittorio, M.; Hindsley, R.; Schmitt, H.; Armstrong, J.; Shankland, P.; Hutter, D.; Benson, J. A Survey of Geosynchronous Satellite Glints. In Proceedings of the 2009 AMOS Technical Conference, Maui, HI, USA, 1–4 September 2009; The Maui Economic Development Board, Inc.: Maui, HI, USA, 2009; pp. 268–275.

- Aaron, B. Geosynchronous Satellite Maneuver Detection and Orbit Recovery Using Ground Based Optical Tracking. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2006.

- Pastor, A.; Escribano, G.; Escobar, D. Satellite Maneuver Detection with Optical Survey Observations. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS), Maui, HI, USA, 15–18 September 2020.

- Linares, R.; Crassidis, J.; Wetterer, C.; Hill, K.; Jah, M. Astrometric and Photometric Data Fusion for Mass and Surface Material Estimation Using Refined Bidirectional Reflectance Distribution Functions-Solar Radiation Pressure Model; Technical Report; Pacific Defense Solutions LLC: Kihei, HI, USA, 2013.

- Linares, R.; Jah, M.; Crassidis, J.; Nebelecky, C. Space Object Shape Characterization and Tracking Using Light Curve and Angles Data. J. Guid. Control Dyn. 2014, 37, 13–25.

- Linares, R.; Crassidis, J. Space-Object Shape Inversion via Adaptive Hamiltonian Markov Chain Monte Carlo. J. Guid. Control Dyn. 2018, 41, 47–58.

- Mital, R.; Cates, K.; Coughlin, J.; Ganji, G. A Machine Learning Approach to Modeling Satellite Behavior. In Proceedings of the 2019 IEEE International Conference on Space Mission Challenges for Information Technology (SMC-IT), Pasadena, CA, USA, 30 July–1 August 2019; pp. 62–69.

- Campbell, T.; Furfaro, R.; Reddy, V.; Battle, A.; Birtwhistle, P.; Linder, T.; Tucker, S.; Pearson, N. Bayesian Approach to Light Curve Inversion of 2020 SO. J. Astronaut. Sci. 2022, 69, 95–119.

- Campbell, T.; Battle, A.; Gray, B.; Chesley, S.; Farnocchia, D.; Pearson, N.; Halferty, G.; Reddy, V.; Furfaro, R. Physical Characterization of Moon Impactor WE0913A. Planet. Sci. J. 2023, 4, 217.

- Dao, P.; Monet, D. GEO Optical Data Association with Concurrent Metric and Photometric Information. In Proceedings of the AMOS Technologies Conference, Maui, HI, USA, 19–22 September 2017; Maui Economic Development Board: Kihei, HI, USA, 2017.

- Scorsoglio, A.; Ghilardi, L.; Furfaro, R. A Physics-Informed Neural Network Approach to Orbit Determination. J. Astronaut. Sci. 2023, 70, 25.

- Small, T.; Butler, S.; Marciniak, M. Solar Cell BRDF Measurement and Modeling with Out-of-Plane Data. Opt. Express 2021, 29, 35501–35515.

- Landolt, A. UBVRI Photometric Standard Stars in the Magnitude Range 11.5–16.0 Around the Celestial Equator. Astron. J. 1992, 104, 340–371.

- Halferty, G.; Reddy, V.; Campbell, T.; Battle, A.; Furfaro, R. Photometric Characterization and Trajectory Accuracy of Starlink Satellites: Implications for Ground-Based Astronomical Surveys. Mon. Not. R. Astron. Soc. 2022, 516, 1502–1508.

- Gaia Collaboration. Gaia Data Release 2; 2018. Available online: http://esdcdoi.esac.esa.int/doi/html/data/astronomy/gaia/DR2.html (accessed on 7 November 2023).

- Andrae, R.; Fouesneau, M.; Creevey, O.; Ordenovic, C.; Mary, N.; Burlacu, A.; Chaoul, L.; Jean-Antoine-Piccolo, A.; Kordopatis, G.; Korn, A.; et al. Gaia Data Release 2—First Stellar Parameters from Apsis. Astron. Astrophys. 2018, 616, A8.

- Campbell, T.; Reddy, V.; Larsen, J.; Linares, R.; Furfaro, R. Optical Tracking of Artificial Earth Satellites with COTS Sensors. In Proceedings of the Advanced Maui Optical Surveillance Conference, Kihei, HI, USA, 11–14 September 2018.

- Campbell, T.; Reddy, V.; Furfaro, R.; Tucker, S. Characterizing LEO Objects Using Simultaneous Multi-Color Optical Array. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference, Maui, HI, USA, 17–20 September 2019.

- Bertin, E.; Arnouts, S. SExtractor: Software for Source Extraction. Astron. Astrophys. Suppl. Ser. 1996, 117, 393–404.

- Bertin, E. Automatic Astrometric and Photometric Calibration with SCAMP. In Proceedings of the Astronomical Data Analysis Software and Systems XV, San Lorenzo de El Escorial, Spain, 2–5 October 2005; Volume 351, p. 112.

- Pogson, N. Magnitudes of Thirty-six of the Minor Planets for the First Day of Each Month of the Year 1857. Mon. Not. R. Astron. Soc. 1856, 17, 12–15.

| Manufacturer | Focal Length (mm) | Aperture (mm) | f/# | FOV (°) | Pixel Scale (″/px) | Limiting Magnitude | Units Required |
|---|---|---|---|---|---|---|---|
| Canon | 85 | 47 | 1.8 | 12 × 9 | 9.3 | 12.5 | 10 |
| Canon | 85 | 60 | 1.4 | 12 × 9 | 9.3 | 13.2 | 10 |
| Canon | 135 | 67 | 2 | 7.6 × 5.8 | 5.9 | 13.9 | 16 |
| Canon | 200 | 71 | 2.8 | 5.1 × 3.9 | 8.5 | 14.5 | 24 |
| Canon | 200 | 100 | 2 | 5.1 × 3.9 | 8.5 | 14.9 | 24 |
| Rokinon | 85 | 60 | 1.4 | 12 × 9 | 9.3 | 12.75 | 10 |
| Sigma | 105 | 75 | 1.4 | 9.7 × 7.4 | 7.6 | 13.5 | 12 |
| Sigma | 135 | 75 | 1.8 | 7.6 × 5.8 | 5.9 | 14.5 | 16 |
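The optical columns in the trade-study table follow from standard first-order relations: f/# = focal length / aperture, plate scale ≈ 206,265 × (pixel pitch / focal length) in arcsec per pixel, and FOV = 2 atan(sensor half-width / focal length). The sketch below reproduces that arithmetic under an assumption not stated in the table: that every lens is paired with the ZWO ASI1600MM Pro sensor used in Stingray, taken here as 4656 × 3520 pixels at 3.8 µm pitch. The helper names are hypothetical, and the outputs are approximate; tabulated values may reflect rounding or different sensor parameters.

```python
import math

# ASSUMPTION: sensor parameters for the ZWO ASI1600MM Pro (not given in the table).
PIXEL_UM = 3.8            # pixel pitch in micrometres
NX, NY = 4656, 3520       # sensor width and height in pixels
ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0  # ~206265 arcsec per radian


def f_number(focal_mm: float, aperture_mm: float) -> float:
    """Focal ratio N = f / D."""
    return focal_mm / aperture_mm


def pixel_scale_arcsec(focal_mm: float, pixel_um: float = PIXEL_UM) -> float:
    """Plate scale in arcsec per pixel (small-angle approximation)."""
    return ARCSEC_PER_RAD * (pixel_um * 1e-3) / focal_mm


def fov_deg(focal_mm: float, n_px: int, pixel_um: float = PIXEL_UM) -> float:
    """Full field of view along one sensor axis, in degrees."""
    half_width_mm = n_px * pixel_um * 1e-3 / 2.0
    return math.degrees(2.0 * math.atan(half_width_mm / focal_mm))


# (manufacturer, focal length mm, aperture mm) taken from the trade-study table
lenses = [
    ("Canon", 85, 47), ("Canon", 85, 60), ("Canon", 135, 67), ("Canon", 200, 71),
    ("Canon", 200, 100), ("Rokinon", 85, 60), ("Sigma", 105, 75), ("Sigma", 135, 75),
]

for make, f, d in lenses:
    print(
        f"{make:8s} {f:4d} mm  f/{f_number(f, d):.1f}  "
        f"FOV {fov_deg(f, NX):.1f} x {fov_deg(f, NY):.1f} deg  "
        f"{pixel_scale_arcsec(f):.2f} arcsec/px"
    )
```

For the 85 mm and 135 mm rows this gives roughly 11.9° × 9.0° at 9.2″/px and 7.5° × 5.7° at 5.8″/px, close to the listed figures.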

| Name | Camera | Focal Length (mm) | Aperture (mm) | FOV (°) | FOR (°) | Pixel Scale (″/px) |
|---|---|---|---|---|---|---|
| Stingray | 15× ZWO ASI1600MM Pro | 135 | 75 | 7.6 × 5.8 | 114 × 5.8 | 5.9 |
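The Stingray field of regard (FOR) in the table is consistent with 15 cameras tiled edge to edge in a single row: 15 × 7.6° = 114° wide, while the height stays at one camera's 5.8°. The sketch below expresses that tiling and the inverse question of how many units cover a target width. The `overlap_deg` parameter (overlap per seam between adjacent cameras) is a hypothetical addition for illustration, and the function names are not from the source.

```python
import math


def field_of_regard(n_units: int, fov_x_deg: float, fov_y_deg: float,
                    overlap_deg: float = 0.0) -> tuple:
    """FOR of n identical cameras tiled side by side in one row.

    overlap_deg is a HYPOTHETICAL per-seam overlap; 0 means edge-to-edge tiling.
    """
    width = n_units * fov_x_deg - (n_units - 1) * overlap_deg
    return width, fov_y_deg


def units_required(target_width_deg: float, fov_x_deg: float,
                   overlap_deg: float = 0.0) -> int:
    """Smallest n with n*fov - (n-1)*overlap >= target width."""
    if overlap_deg >= fov_x_deg:
        raise ValueError("overlap must be smaller than the per-camera FOV")
    if target_width_deg <= fov_x_deg:
        return 1
    n = (target_width_deg - overlap_deg) / (fov_x_deg - overlap_deg)
    # Subtract a tiny epsilon so float noise (e.g. 114/7.6 -> 15.000000000000002)
    # does not push an exact fit up to the next integer.
    return math.ceil(n - 1e-9)


w, h = field_of_regard(15, 7.6, 5.8)
print(f"{w:.1f} x {h:.1f} deg")  # prints "114.0 x 5.8 deg"
```

Any real overlap between neighbouring cameras would shrink the 114° figure, so the edge-to-edge case is an upper bound for a given unit count.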

| Satellite | Error in Right Ascension, arcsec (px) | Error in Declination, arcsec (px) |
|---|---|---|
| SES-15 | () | () |
| GALAXY-30 | () | () |
| Eutelsat 117W B | () | () |
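The astrometric error table reports each residual both in arcseconds and in pixels, which are related through the Stingray pixel scale of 5.9″/px. A minimal sketch of that bookkeeping follows, assuming measured and reference positions are available as (RA, Dec) pairs in degrees; what the reference positions are (for example, predicted ephemerides) is an assumption here, as are the function names. Right-ascension differences are multiplied by cos δ so both axes are true on-sky angles; whether the original analysis applied this factor is not stated.

```python
import math

PIXEL_SCALE = 5.9  # arcsec per pixel, Stingray pixel scale from the system table


def residuals_arcsec(meas, ref):
    """Per-point on-sky residuals (dRA*cos(dec), dDec) in arcsec.

    meas, ref: sequences of (ra_deg, dec_deg); the reference source is hypothetical.
    """
    out = []
    for (ra_m, dec_m), (ra_r, dec_r) in zip(meas, ref):
        dra = (ra_m - ra_r + 180.0) % 360.0 - 180.0  # wrap RA difference to [-180, 180)
        out.append((dra * math.cos(math.radians(dec_r)) * 3600.0,
                    (dec_m - dec_r) * 3600.0))
    return out


def rms(values):
    """Root-mean-square of a non-empty sequence."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def to_pixels(arcsec, scale=PIXEL_SCALE):
    """Convert an angular error in arcsec to detector pixels."""
    return arcsec / scale
```

Applying `rms` separately to the RA and Dec columns, then `to_pixels`, yields the "arcsec (px)" pairs in the format the table uses.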

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Campbell, T.; Battle, A.; Gray, D.; Chabra, O.; Tucker, S.; Reddy, V.; Furfaro, R. Stingray Sensor System for Persistent Survey of the GEO Belt. Sensors 2024, 24, 2596. https://doi.org/10.3390/s24082596


Chicago/Turabian Style: Campbell, Tanner, Adam Battle, Dan Gray, Om Chabra, Scott Tucker, Vishnu Reddy, and Roberto Furfaro. 2024. "Stingray Sensor System for Persistent Survey of the GEO Belt." Sensors 24, no. 8: 2596. https://doi.org/10.3390/s24082596