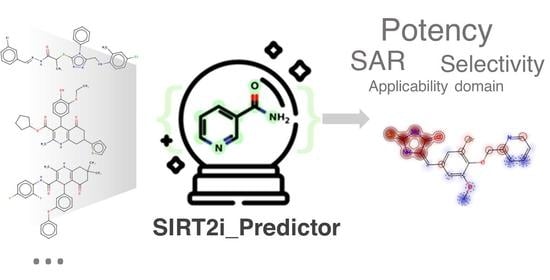

SIRT2i_Predictor: A Machine Learning-Based Tool to Facilitate the Discovery of Novel SIRT2 Inhibitors

Abstract

1. Introduction

2. Results and Discussion

2.1. Datasets for Modelling

2.2. Model Development and Validation

2.2.1. Regression Models

2.2.2. Binary Classification Models

2.2.3. Multiclass Classification Models

2.3. SIRT2i_Predictor’s Framework for Discovery of Novel Inhibitors

2.4. Benchmarking SIRT2i_Predictor against the Structure-Based VS Approach

3. Materials and Methods

3.1. Dataset Preparation

3.2. Calculation of Molecular Features and Feature Selection

3.3. Model Building and Evaluation

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| | Dataset 1 | Dataset 2 | Dataset 3 | Dataset 4 |
|---|---|---|---|---|
| No. of compounds | 1002 | 1797 | 984 | 612 |
| Expressed activity | pIC50 | pIC50 and Inh% | Inh% | Inh% |
| Activity towards | SIRT2 | SIRT2 | SIRT1, SIRT2 | SIRT2, SIRT3 |
| Encoded activity | pIC50 | Active, inactive | Selective, nonselective, inactive | Selective, nonselective, inactive |

| ML Algorithm | Molecular Feature | | RMSEext | | | | | | CCC |
|---|---|---|---|---|---|---|---|---|---|
| RF | Descriptors | 0.7 | 0.55 | 0.52 | 0.27 | 0.7 | 0.7 | 0.7 | 0.81 |
| | ECFP4 | 0.75 | 0.5 | 0.6 | 0.23 | 0.75 | 0.75 | 0.75 | 0.85 a |
| | MACCS | 0.71 | 0.53 | 0.55 | 0.26 | 0.71 | 0.71 | 0.71 | 0.82 |
| | ECFP6 | 0.77 | 0.48 | 0.62 | 0.21 | 0.77 | 0.77 | 0.76 | 0.86 a |
| SVR | Descriptors | 0.62 | 0.61 | 0.44 | 0.31 | 0.62 | 0.62 | 0.62 | 0.77 |
| | ECFP4 | 0.74 | 0.51 | 0.63 | 0.13 * | 0.74 | 0.74 | 0.73 | 0.84 |
| | MACCS | 0.68 | 0.57 | 0.55 | 0.21 | 0.68 | 0.68 | 0.68 | 0.81 |
| | ECFP6 | 0.74 | 0.51 | 0.63 | 0.18 * | 0.74 | 0.74 | 0.74 | 0.86 a |
| XGBoost | Descriptors | 0.67 | 0.58 | 0.53 | 0.25 | 0.68 | 0.68 | 0.68 | 0.82 |
| | ECFP4 | 0.75 (0.79) b | 0.5 (0.46) b | 0.64 (0.7) b | 0.17 (0.17) *,b | 0.74 (0.75) b | 0.74 (0.75) b | 0.74 (0.74) b | 0.86 (0.86) a,b |
| | MACCS | 0.71 | 0.53 | 0.58 | 0.24 | 0.7 | 0.7 | 0.7 | 0.82 |
| | ECFP6 | 0.73 | 0.52 | 0.62 | 0.2 | 0.73 | 0.73 | 0.73 | 0.87 a |
| KNN | Descriptors | 0.68 | 0.56 | 0.56 | 0.23 | 0.68 | 0.68 | 0.68 | 0.86 a |
| | ECFP4 | 0.74 | 0.51 | 0.64 | 0.13 * | 0.74 | 0.74 | 0.74 | 0.87 a |
| | MACCS | 0.6 | 0.63 | 0.47 | 0.16 * | 0.6 | 0.6 | 0.6 | 0.79 |
| | ECFP6 | 0.76 (0.77) b | 0.49 (0.48) b | 0.66 (0.68) b | 0.12 (0.11) *,b | 0.76 (0.76) b | 0.76 (0.76) b | 0.76 (0.76) b | 0.87 (0.87) a,b |
| DNN | Descriptors | 0.66 | 0.58 | 0.57 | 0.03 * | 0.66 | 0.66 | 0.66 | 0.81 |
| | ECFP4 | 0.74 | 0.51 | 0.63 | 0.18 * | 0.73 | 0.73 | 0.73 | 0.84 |
| | MACCS | 0.68 | 0.56 | 0.56 | 0.16 * | 0.68 | 0.68 | 0.67 | 0.80 |
| | ECFP6 | 0.73 | 0.52 | 0.63 | 0.17 * | 0.73 | 0.73 | 0.73 | 0.81 |
| Criteria | | >0.6 | | >0.5 | <0.2 | >0.7 | >0.7 | >0.7 | >0.85 |
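
The RMSEext and CCC columns above can be reproduced from observed and predicted activities with a few lines of Python. The sketch below is a minimal illustration, not the authors' implementation: it assumes that CCC denotes Lin's concordance correlation coefficient, and the function names and pIC50 values are hypothetical.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between observed and predicted values."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def ccc(y_true, y_pred):
    """Concordance correlation coefficient (assumed here: Lin's definition).

    CCC = 2 * cov(t, p) / (var(t) + var(p) + (mean(t) - mean(p))**2),
    with population (1/n) variances and covariance.
    """
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    var_t = sum((t - mean_t) ** 2 for t in y_true) / n
    var_p = sum((p - mean_p) ** 2 for p in y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred)) / n
    return 2 * cov / (var_t + var_p + (mean_t - mean_p) ** 2)


# Hypothetical pIC50 values for three external-set compounds (illustration only).
observed = [5.0, 6.0, 7.0]
predicted = [6.0, 7.0, 8.0]  # perfectly correlated, but shifted by one log unit

print(round(rmse(observed, predicted), 3))  # 1.0
print(round(ccc(observed, predicted), 3))   # 0.571
```

Identical vectors give CCC = 1, whereas a constant offset lowers CCC even though the two series remain perfectly correlated, which is why the metric is stricter than a plain correlation coefficient.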

| ML Algorithm | Molecular Feature | BA | MCC | ROC_AUC | Precision a | Recall a | F1 a |
|---|---|---|---|---|---|---|---|
| RF | Descriptors | 0.88 | 0.74 | 0.94 | 0.86 | 0.88 | 0.87 |
| | ECFP4 | 0.84 | 0.66 | 0.92 | 0.82 | 0.84 | 0.83 |
| | MACCS | 0.82 | 0.62 | 0.91 | 0.8 | 0.82 | 0.81 |
| | ECFP6 | 0.85 | 0.68 | 0.92 | 0.83 | 0.85 | 0.84 |
| SVR | Descriptors | 0.88 | 0.74 | 0.95 | 0.87 | 0.88 | 0.87 |
| | ECFP4 | 0.81 | 0.63 | 0.9 | 0.82 | 0.81 | 0.82 |
| | MACCS | 0.8 | 0.59 | 0.87 | 0.79 | 0.8 | 0.79 |
| | ECFP6 | 0.79 | 0.62 | 0.9 | 0.83 | 0.79 | 0.81 |
| XGBoost | Descriptors | 0.86 | 0.72 | 0.94 | 0.85 | 0.86 | 0.85 |
| | ECFP4 | 0.81 | 0.62 | 0.91 | 0.8 | 0.81 | 0.81 |
| | MACCS | 0.8 | 0.6 | 0.9 | 0.8 | 0.8 | 0.8 |
| | ECFP6 | 0.81 | 0.62 | 0.91 | 0.81 | 0.81 | 0.81 |
| KNN | Descriptors | 0.79 | 0.56 | 0.88 | 0.77 | 0.79 | 0.77 |
| | ECFP4 | 0.82 | 0.62 | 0.9 | 0.8 | 0.82 | 0.81 |
| | MACCS | 0.82 | 0.62 | 0.88 | 0.8 | 0.82 | 0.81 |
| | ECFP6 | 0.84 | 0.65 | 0.91 | 0.81 | 0.84 | 0.82 |
| DNN | Descriptors | 0.89 | 0.75 | 0.94 | 0.85 | 0.86 | 0.86 |
| | ECFP4 | 0.83 | 0.65 | 0.91 | 0.8 | 0.81 | 0.8 |
| | MACCS | 0.8 | 0.58 | 0.89 | 0.8 | 0.8 | 0.8 |
| | ECFP6 | 0.82 | 0.64 | 0.9 | 0.79 | 0.82 | 0.8 |
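
For readers unfamiliar with the threshold-based columns above (BA, MCC, precision, recall, F1), the following sketch derives them from a binary confusion matrix. It is an illustration under stated assumptions rather than the authors' code: the labels are hypothetical (1 = active, 0 = inactive), the helper name `binary_metrics` is invented, and precision, recall, and F1 are reported for the active class only. The meaning of footnote "a" is not preserved in this excerpt, so the table's values may be averaged differently.

```python
import math


def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics for 0/1 labels (1 = active, 0 = inactive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    recall = tp / (tp + fn)          # sensitivity, true-positive rate
    specificity = tn / (tn + fp)     # true-negative rate
    precision = tp / (tp + fp)
    return {
        # Balanced accuracy: mean of sensitivity and specificity.
        "BA": (recall + specificity) / 2,
        # Matthews correlation coefficient.
        "MCC": (tp * tn - fp * fn)
        / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)),
        "Precision": precision,
        "Recall": recall,
        "F1": 2 * precision * recall / (precision + recall),
    }


# Hypothetical labels for ten compounds (illustration only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

metrics = binary_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

The sketch does not guard against empty classes or the absence of predicted actives; a production version would need to handle those zero-division cases.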

| ML Algorithm | Molecular Feature | BA | MCC | ROC_AUC | Precision a | Recall a | F1 a | EF0.5% | EF1% | EF2% | EF5% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RF | Descriptors | 0.68 | 0.09 | 0.87 | 0.51 | 0.68 | 0.35 | 0.63 | 0.67 | 0.68 | 0.73 |
| | ECFP4 | 0.81 (0.9) b | 0.19 (0.52) b | 0.87 (0.89) b | 0.53 (0.67) b | 0.81 (0.9) b | 0.49 (0.73) b | 0.74 (0.74) b | 0.74 (0.74) b | 0.76 (0.76) b | 0.77 (0.8) b |
| | MACCS | 0.66 | 0.08 | 0.82 | 0.51 | 0.66 | 0.35 | 0.55 | 0.56 | 0.59 | 0.62 |
| | ECFP6 | 0.75 | 0.14 | 0.87 | 0.52 | 0.75 | 0.43 | 0.72 | 0.74 | 0.76 | 0.78 |
| SVR | Descriptors | 0.69 | 0.1 | 0.89 | 0.51 | 0.69 | 0.36 | 0.43 | 0.56 | 0.62 | 0.71 |
| | ECFP4 | 0.46 | −0.06 | 0.8 | 0.48 | 0.46 | 0.05 | 0.75 | 0.75 | 0.75 | 0.76 |
| | MACCS | 0.62 | 0.06 | 0.83 | 0.51 | 0.62 | 0.32 | 0.39 | 0.61 | 0.68 | 0.74 |
| | ECFP6 | 0.47 | −0.07 | 0.8 | 0.47 | 0.47 | 0.03 | 0.76 | 0.76 | 0.77 | 0.77 |
| XGBoost | Descriptors | 0.71 | 0.11 | 0.85 | 0.51 | 0.71 | 0.39 | 0.41 | 0.44 | 0.48 | 0.54 |
| | ECFP4 | 0.74 | 0.13 | 0.87 | 0.52 | 0.74 | 0.42 | 0.35 | 0.39 | 0.43 | 0.52 |
| | MACCS | 0.64 | 0.07 | 0.73 | 0.51 | 0.64 | 0.32 | 0 | 0 | 0.02 | 0.2 |
| | ECFP6 | 0.71 | 0.11 | 0.85 | 0.51 | 0.71 | 0.39 | 0.37 | 0.38 | 0.44 | 0.5 |
| KNN | Descriptors | 0.66 | 0.08 | 0.76 | 0.51 | 0.66 | 0.37 | 0.09 | 0.23 | 0.26 | 0.29 |
| | ECFP4 | 0.72 | 0.12 | 0.8 | 0.52 | 0.72 | 0.41 | 0 | 0 | 0 | 0 |
| | MACCS | 0.64 | 0.07 | 0.75 | 0.51 | 0.64 | 0.33 | 0 | 0 | 0 | 0 |
| | ECFP6 | 0.72 | 0.11 | 0.8 | 0.52 | 0.72 | 0.41 | 0 | 0 | 0 | 0 |
| DNN | Descriptors | 0.72 | 0.12 | 0.8 | 0.51 | 0.71 | 0.38 | 0 | 0 | 0 | 0 |
| | ECFP4 | 0.73 | 0.13 | 0.84 | 0.52 | 0.73 | 0.43 | 0.1 | 0.25 | 0.32 | 0.41 |
| | MACCS | 0.69 | 0.1 | 0.79 | 0.51 | 0.62 | 0.29 | 0.04 | 0.08 | 0.17 | 0.23 |
| | ECFP6 | 0.67 | 0.09 | 0.81 | 0.51 | 0.67 | 0.38 | 0.17 | 0.25 | 0.34 | 0.43 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Djokovic, N.; Rahnasto-Rilla, M.; Lougiakis, N.; Lahtela-Kakkonen, M.; Nikolic, K. SIRT2i_Predictor: A Machine Learning-Based Tool to Facilitate the Discovery of Novel SIRT2 Inhibitors. Pharmaceuticals 2023, 16, 127. https://doi.org/10.3390/ph16010127
