Effects of Random Values for Particle Swarm Optimization Algorithm

Abstract

1. Introduction

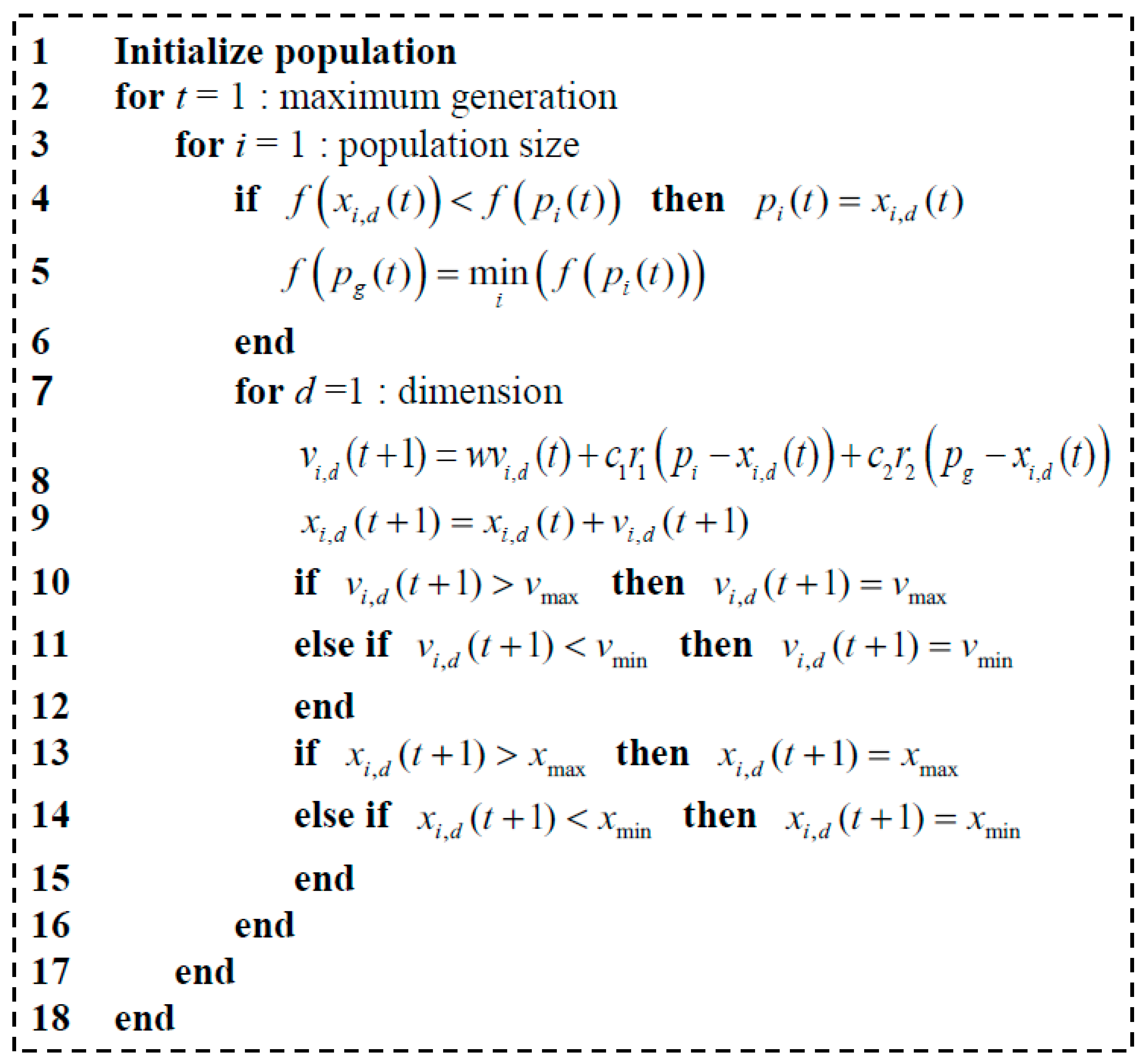

2. Standard and Modified Particle Swarm Optimization Algorithms

2.1. Standard Particle Swarm Optimization Algorithm

2.2. Modifications for Particle Swarm Optimization Algorithm

2.2.1. Constant or Random Inertia Weight Strategies
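The sketch below shows the two simplest inertia-weight choices. The first is a fixed weight; 0.7 is an arbitrary example value. The second is the random inertia weight w = 0.5 + r/2 with r ~ U[0, 1], which Eberhart and Shi proposed for tracking dynamic systems and whose mean is 0.75. The function names are hypothetical.

```python
import random


def constant_inertia(w: float = 0.7) -> float:
    """Constant strategy: the same weight at every iteration (0.7 is an example)."""
    return w


def random_inertia(rng: random.Random) -> float:
    """Random strategy: w = 0.5 + r/2 with r ~ U[0, 1], so 0.5 <= w <= 1."""
    return 0.5 + rng.random() / 2.0


rng = random.Random(0)
samples = [random_inertia(rng) for _ in range(10_000)]
print(min(samples) >= 0.5, max(samples) <= 1.0)  # True True
print(sum(samples) / len(samples))               # close to the expected mean 0.75
```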

2.2.2. Time-Varying Inertia Weight Strategies
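The best-known time-varying strategy is the linearly decreasing inertia weight (LDIW) behind LDIW-PSO: w(t) = w_max − (w_max − w_min)·t/T, where T is the final iteration. The sketch below assumes the frequently cited bounds w_max = 0.9 and w_min = 0.4. The values actually used in the experiments may differ.

```python
def ldiw(t: int, t_max: int, w_max: float = 0.9, w_min: float = 0.4) -> float:
    """Linearly decreasing inertia weight at iteration t, for 0 <= t <= t_max."""
    return w_max - (w_max - w_min) * t / t_max


print(ldiw(0, 100), ldiw(50, 100), ldiw(100, 100))  # 0.9 0.65 0.4
```

A large early weight favors global exploration. The shrinking weight then gradually shifts the swarm toward local refinement.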

2.2.3. Adaptive Inertia Weight Strategies
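Adaptive strategies adjust w using feedback about the state of the search. The rule below is one illustrative assumption, similar in spirit to success-rate-based schemes in the literature. It maps the fraction Ps of particles that improved their personal best in the last iteration linearly onto [w_min, w_max]: w = w_min + (w_max − w_min)·Ps. The helper names and the default bounds [0, 1] are hypothetical.

```python
from typing import Sequence


def success_rate(prev_pbest: Sequence[float], new_pbest: Sequence[float]) -> float:
    """Fraction of particles whose personal-best fitness improved (minimization)."""
    improved = sum(1 for old, new in zip(prev_pbest, new_pbest) if new < old)
    return improved / len(prev_pbest)


def adaptive_inertia(ps: float, w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Map the success rate ps in [0, 1] linearly onto [w_min, w_max]."""
    return w_min + (w_max - w_min) * ps


ps = success_rate([5.0, 3.0, 2.0, 8.0], [4.0, 3.0, 1.5, 8.0])  # 2 of 4 improved
print(ps, adaptive_inertia(ps))  # 0.5 0.5
```

A high success rate raises w and favors exploration. A low one shrinks w, so the swarm contracts around the best positions found so far.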

3. Particle Swarm Optimization Algorithm with Different Types of Random Values

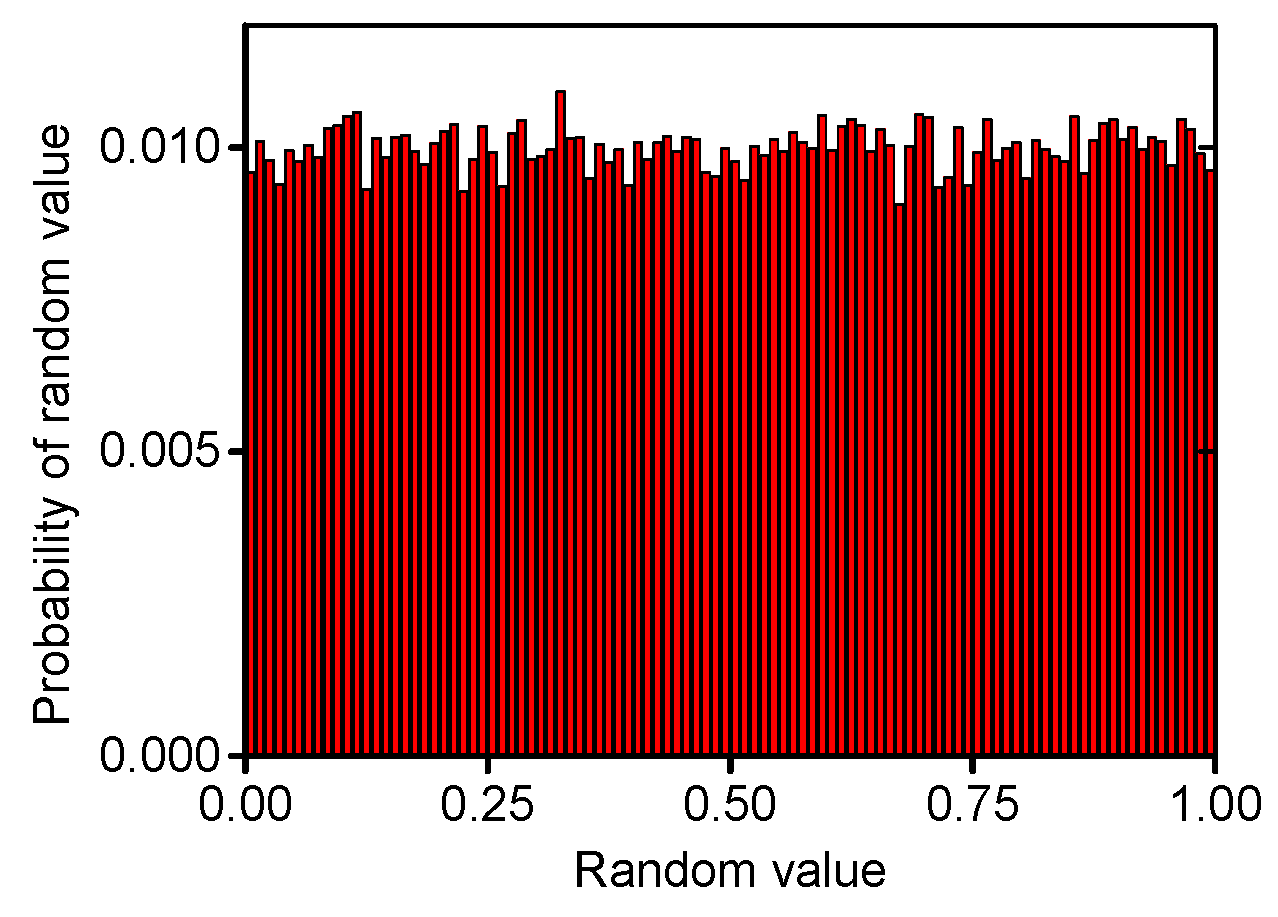
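The comparison in this section varies only the distribution of r1 and r2. Below is a compact sketch of a global-best PSO with a pluggable generator. The generator labels mirror the subsection headings below, plus the constant 0.5 that appears in the result-table headers. The following choices are assumptions rather than the paper's settings: population size, iteration count, coefficients, search bound, position clamping and the asynchronous global-best update.

```python
import random
from typing import Callable, Dict, List

# Random-value generators for r1, r2; the keys are illustrative labels.
GENERATORS: Dict[str, Callable[[random.Random], float]] = {
    "0.5": lambda rng: 0.5,                         # deterministic variant
    "U[0,1]": lambda rng: rng.random(),             # uniform on [0, 1)
    "U[-1,1]": lambda rng: rng.uniform(-1.0, 1.0),  # uniform on [-1, 1]
    "G(0,1)": lambda rng: rng.gauss(0.0, 1.0),      # Gaussian, mean 0, std 1
}


def sphere(x: List[float]) -> float:
    return sum(xi * xi for xi in x)


def pso(f: Callable[[List[float]], float], dim: int, kind: str,
        n_particles: int = 20, iters: int = 200, bound: float = 5.0,
        v_max: float = 1.0, w: float = 0.7, c1: float = 2.0, c2: float = 2.0,
        seed: int = 0) -> float:
    """Global-best PSO (minimization); returns the best objective value found."""
    rng = random.Random(seed)
    draw = GENERATORS[kind]
    xs = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pval = [f(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pval[i])
    gbest, gval = pbest[g][:], pval[g]
    for _ in range(iters):
        for i in range(n_particles):
            x, v = xs[i], vs[i]
            for d in range(dim):
                r1, r2 = draw(rng), draw(rng)
                v[d] = (w * v[d] + c1 * r1 * (pbest[i][d] - x[d])
                        + c2 * r2 * (gbest[d] - x[d]))
                v[d] = max(-v_max, min(v_max, v[d]))          # velocity clamping
                x[d] = max(-bound, min(bound, x[d] + v[d]))  # stay in the search space
            fx = f(x)
            if fx < pval[i]:                  # update personal best
                pbest[i], pval[i] = x[:], fx
                if fx < gval:                 # asynchronous global-best update
                    gbest, gval = x[:], fx
    return gval


for kind in GENERATORS:
    print(kind, pso(sphere, 10, kind))
```

A single seeded run per generator gives only an informal comparison. It is not a substitute for statistics over repeated independent runs.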

3.1. Random Values with Uniform Distribution in the Range of [0, 1]

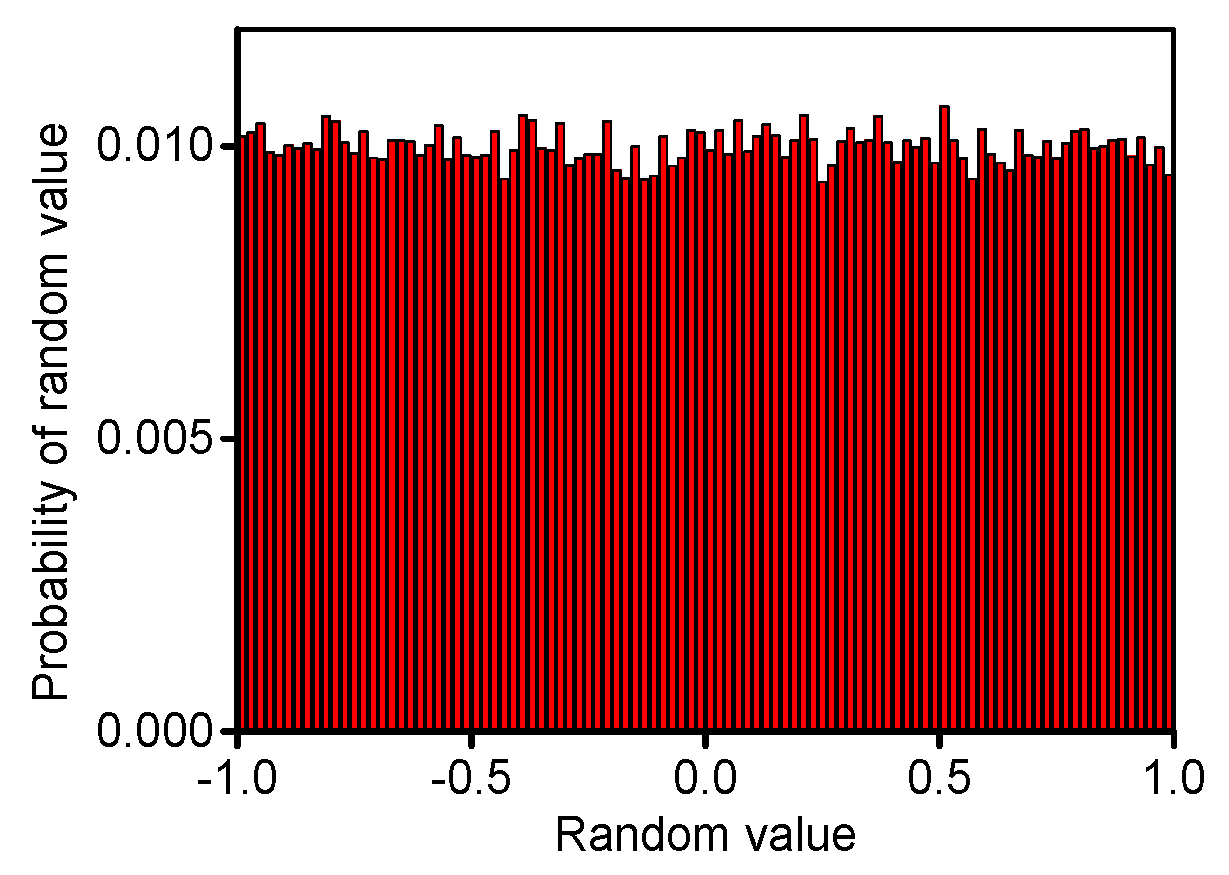
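Consider an attractor p (the personal or global best). With r ~ U[0, 1], the attraction term c·r·(p − x) always has the sign of p − x, and its magnitude is at most c·|p − x|. The numerical check below illustrates this property, using arbitrary example values for p, x and c.

```python
import random

rng = random.Random(1)
p, x, c = 3.0, 1.0, 2.0  # example attractor, position and acceleration coefficient
steps = [c * rng.random() * (p - x) for _ in range(10_000)]

# Every step has the sign of (p - x) and magnitude at most c * |p - x|.
print(all(s >= 0.0 for s in steps))       # True
print(max(steps) <= c * abs(p - x))        # True
```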

3.2. Random Values with Uniform Distribution in the Range of [−1, 1]

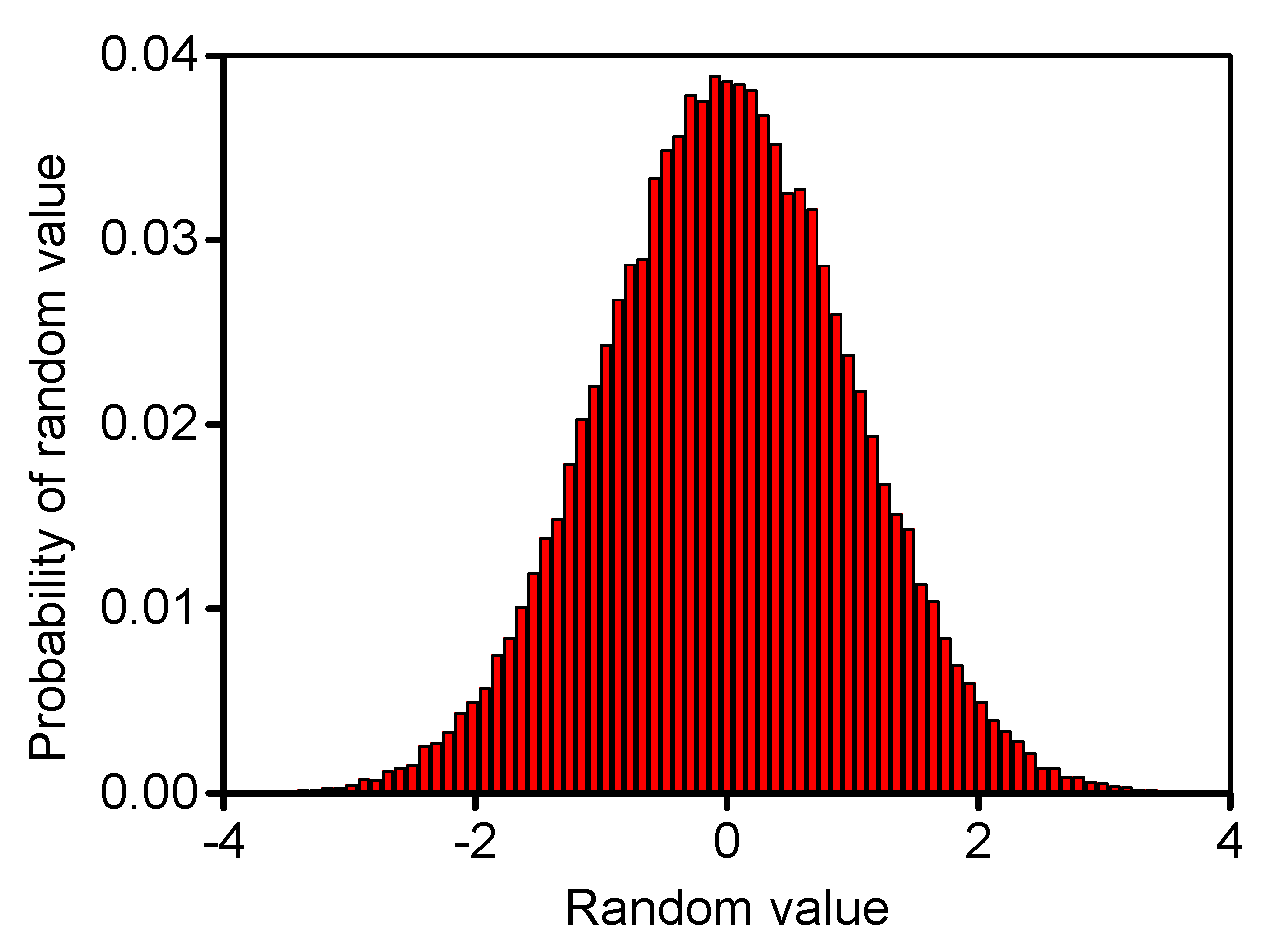
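Drawing r from U[−1, 1] lets the attraction term change sign. A particle is then sometimes pushed away from an attractor rather than toward it, while the step magnitude stays bounded by c·|p − x|. The check below uses arbitrary illustrative values for p, x and c and estimates how often a step points away from the attractor.

```python
import random

rng = random.Random(2)
p, x, c = 3.0, 1.0, 2.0  # example attractor, position and acceleration coefficient
steps = [c * rng.uniform(-1.0, 1.0) * (p - x) for _ in range(10_000)]

away = sum(1 for s in steps if s < 0.0) / len(steps)  # steps pointing away from p
print(away)                                           # close to 0.5
print(all(abs(s) <= c * abs(p - x) for s in steps))   # True: still bounded
```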

3.3. Random Values with Gauss Distribution
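A standard Gaussian G(0, 1) takes both signs and, unlike the uniform cases, has unbounded magnitude. About 31.7% of draws satisfy |r| > 1, because P(|Z| > 1) = 1 − erf(1/√2). Unclamped steps can therefore exceed anything possible with uniform draws, which makes a velocity limit correspondingly more important. The check below compares a sample estimate of P(|r| > 1) with the exact value.

```python
import math
import random

rng = random.Random(3)
draws = [rng.gauss(0.0, 1.0) for _ in range(100_000)]

frac_large = sum(1 for r in draws if abs(r) > 1.0) / len(draws)
exact = 1.0 - math.erf(1.0 / math.sqrt(2.0))  # P(|Z| > 1) for Z ~ N(0, 1)
print(round(exact, 4))   # 0.3173
print(frac_large)        # sample estimate, close to the exact value
```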

4. Experiments and Analysis

4.1. Experimental Setup

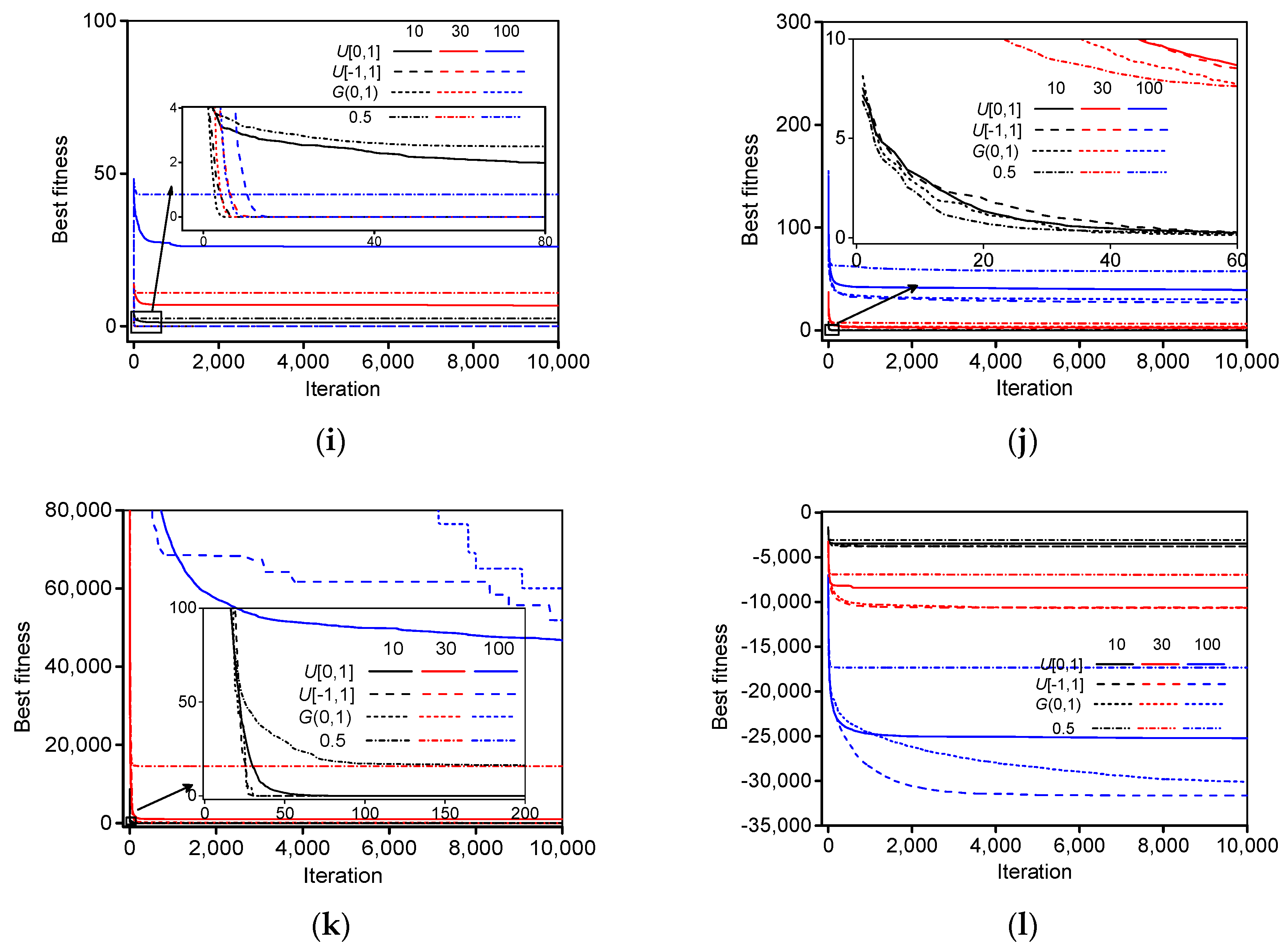

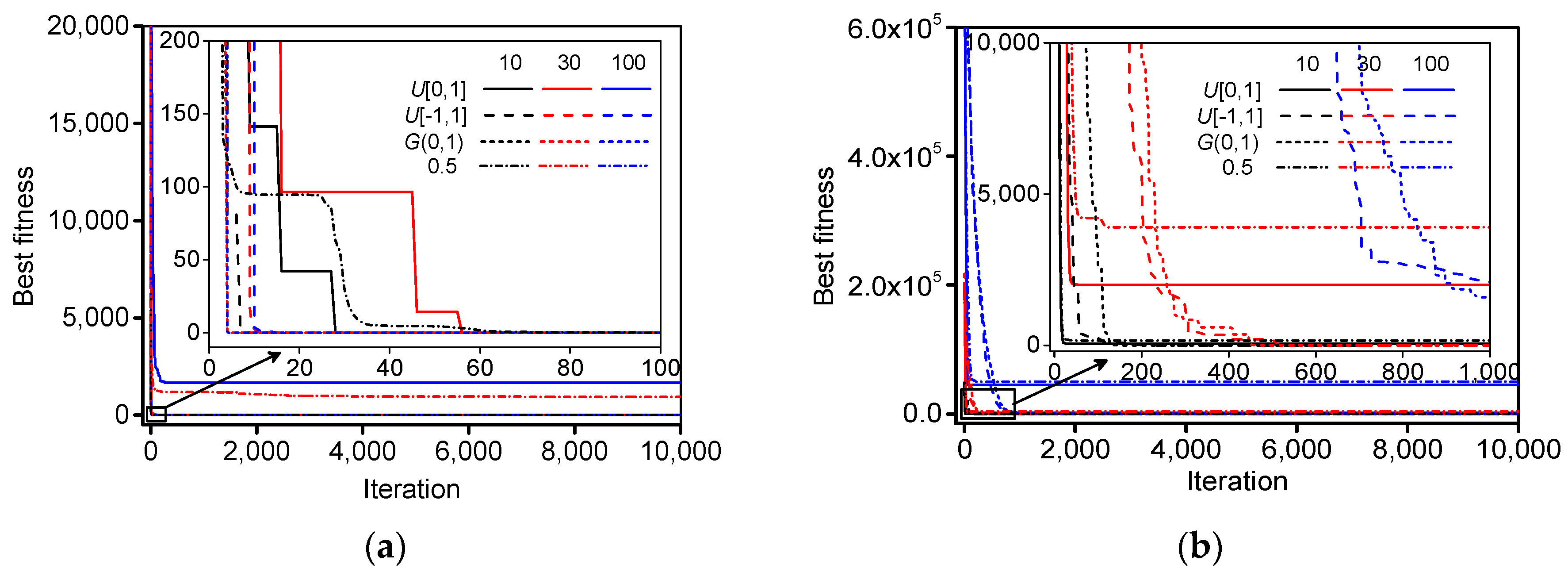

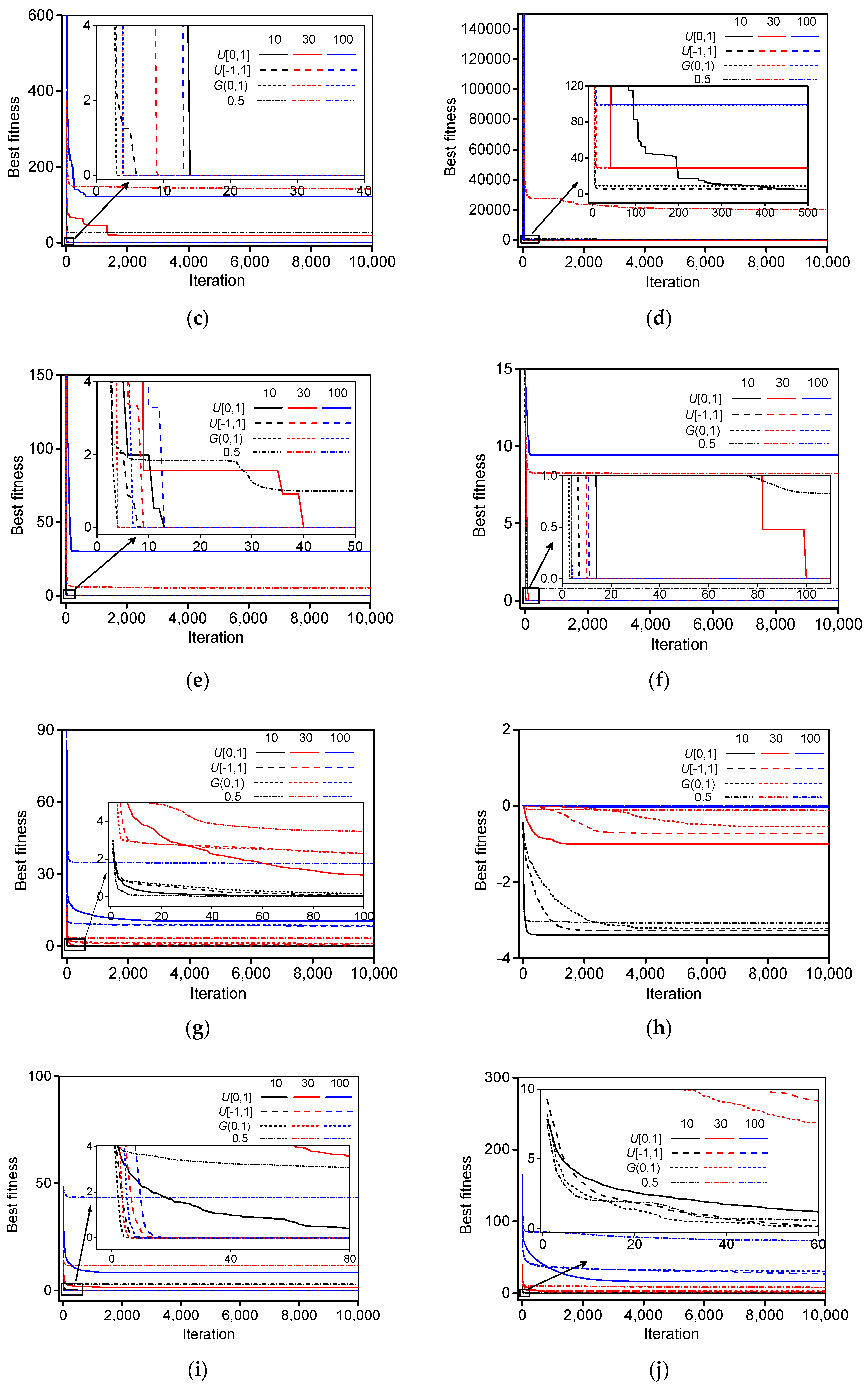
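The result tables summarize each configuration by four statistics of the final objective values over repeated runs: the average, standard deviation, worst and best. The sketch below computes that summary for a minimization problem. The text does not state whether the population or the sample standard deviation was used, so the population form here is an assumption.

```python
import statistics
from typing import Dict, Sequence


def summarize(final_values: Sequence[float]) -> Dict[str, float]:
    """Table-style summary of the best value found in each run (minimization)."""
    return {
        "Average solution": statistics.mean(final_values),
        # Population standard deviation (divides by n); statistics.stdev
        # would give the sample version (divides by n - 1).
        "Standard deviation": statistics.pstdev(final_values),
        "The worst solution": max(final_values),
        "The best solution": min(final_values),
    }


print(summarize([0.0, 0.0, 600.0]))
```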

4.2. Experimental Results and Comparisons

4.3. Application and Analysis

4.3.1. Application in Engineering Problem
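This sketch assumes the engineering problem is the classic pressure-vessel design benchmark. That benchmark minimizes material, forming and welding cost over four variables: shell thickness Ts, head thickness Th, inner radius R and length L. Its commonly cited cost function and constraints are shown below. The static quadratic penalty and its coefficient `rho` are illustrative assumptions. The constraint-handling method and variable bounds used in the study may differ.

```python
import math
from typing import List, Sequence


def vessel_cost(x: Sequence[float]) -> float:
    ts, th, r, length = x  # shell thickness, head thickness, inner radius, length
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def vessel_constraints(x: Sequence[float]) -> List[float]:
    """Constraint values g_i(x); the design is feasible when every g_i <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,
        -th + 0.00954 * r,
        -math.pi * r ** 2 * length - (4.0 / 3.0) * math.pi * r ** 3 + 1_296_000.0,
        length - 240.0,
    ]


def penalized(x: Sequence[float], rho: float = 1e6) -> float:
    """Cost plus a static quadratic penalty on constraint violations (illustrative)."""
    violation = sum(max(0.0, g) ** 2 for g in vessel_constraints(x))
    return vessel_cost(x) + rho * violation


design = [1.0, 0.5, 50.0, 200.0]
print(all(g <= 0.0 for g in vessel_constraints(design)))  # True
```

With this wrapper, `penalized` can be minimized like any unconstrained benchmark function.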

4.3.2. Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Function Name | Test Function | Search Space | The Range of Particle Velocity | The Best Solution | The Best Result |
|---|---|---|---|---|---|
| Sphere1 | | | | | 0 |
| Sphere2 | | | | | 0 |
| Rastrigin | | | | | 0 |
| Rosenbrock | | | | | 0 |
| Griewank | | | | | 0 |
| Ackley | | | | | 0 |
| Levy and Montalvo 2 | | | | | 0 |
| Sinusoidal | | | | | −3.5 |
| Rotated Expanded Schaffer | | | | | 0 |
| Alpine | | | | | 0 |
| Moved axis parallel hyper-ellipsoid | | | | | 0 |
| Schwefel | | | | | |
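For reference, textbook definitions of six of the listed functions are sketched below. These are the standard unshifted, unrotated forms, which may differ in detail from the variants used in the experiments. Each has a global minimum of 0, except Schwefel. Schwefel's minimum is about −418.98 per dimension, reached near x_i ≈ 420.97, which gives about −4189.83 in 10 dimensions. That value matches the best 10-D Schwefel results in the result tables.

```python
import math
from typing import Sequence


def sphere(x: Sequence[float]) -> float:
    return sum(xi * xi for xi in x)


def rastrigin(x: Sequence[float]) -> float:
    return 10.0 * len(x) + sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x)


def rosenbrock(x: Sequence[float]) -> float:
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def griewank(x: Sequence[float]) -> float:
    s = sum(xi * xi for xi in x) / 4000.0
    p = math.prod(math.cos(xi / math.sqrt(i + 1)) for i, xi in enumerate(x))
    return 1.0 + s - p


def ackley(x: Sequence[float]) -> float:
    n = len(x)
    a = -20.0 * math.exp(-0.2 * math.sqrt(sum(xi * xi for xi in x) / n))
    b = -math.exp(sum(math.cos(2.0 * math.pi * xi) for xi in x) / n)
    return a + b + 20.0 + math.e


def schwefel(x: Sequence[float]) -> float:
    return -sum(xi * math.sin(math.sqrt(abs(xi))) for xi in x)


print(round(schwefel([420.9687] * 10), 2))  # -4189.83
```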

| Function | r1, r2 | 0.5 | | | |
|---|---|---|---|---|---|
| | Dimension | 10/30/100 | 10/30/100 | 10/30/100 | 10/30/100 |
| Sphere1 | Average solution | 0/19.90/4203.68 | 0.74/1390.12/34,069.52 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0/12.37/4400.60 | 1.62/575.44/10,343.18 | 0/0/0 | 0/0/0 |
| | The worst solution | 0/48.87/13,080.62 | 7.90/2730.92/53,024.46 | 0/0/0 | 0/0/0 |
| | The best solution | 0/4.13/303.05 | 0/443.08/17,579.98 | 0/0/0 | 0/0/0 |
| Sphere2 | Average solution | 0/1726.67/9386.74 | 130.34/5076.33/56,870.86 | 0/0/183.33 | 0/0/220 |
| | Standard deviation | 0/466.04/6716.49 | 180.26/9114.11/75,788.47 | 0/0/159.92 | 0/0/174.99 |
| | The worst solution | 0/2600/44,700 | 600/38,700/323,027.84 | 0/0/600 | 0/0/600 |
| | The best solution | 0/900.00/6400.80 | 0.01/2319.72/9236.02 | 0/0/0 | 0/0/0 |
| Rastrigin | Average solution | 1.03/52.83/357.43 | 17.01/116.61/810.69 | 0/0/0 | 0/0/0 |
| | Standard deviation | 1.60/21.87/81.84 | 9.70/33.22/76.28 | 0/0/0 | 0/0/0 |
| | The worst solution | 4.97/100.77/499.92 | 42.81/215.88/932.59 | 0/0/0 | 0/0/0 |
| | The best solution | 0/3.62/186.01 | 4.98/62.26/670.46 | 0/0/0 | 0/0/0 |
| Rosenbrock | Average solution | 0.40/462.38/308,982.29 | 250.36/63,215/13,665,740.29 | 1.93/28.86/98.92 | 0.98/28.90/98.94 |
| | Standard deviation | 1.22/292.83/182,460.39 | 663.05/59,859/6,367,792.97 | 2.86/0.08/0.05 | 2.43/0.07/0.03 |
| | The worst solution | 3.99/1383.17/874,057.21 | 3515.43/260,355.55/27,517,029.65 | 8.93/28.96/98.98 | 8.75/28.97/98.98 |
| | The best solution | 0/165.84/36,093.44 | 5.86/1614.69/5,391,184.05 | 0.00/28.67/98.77 | 1.63 × 10⁻⁶/28.69/98.86 |
| Griewank | Average solution | 0.19/1.13/17.27 | 0.22/12.88/289.00 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0.15/0.15/9.06 | 0.12/5.64/84.70 | 0/0/0 | 0/0/0 |
| | The worst solution | 0.51/1.51/39.44 | 0.59/26.48/519.72 | 0/0/0 | 0/0/0 |
| | The best solution | 0/0.90/3.45 | 0.08/3.34/123.68 | 0/0/0 | 0/0/0 |
| Ackley | Average solution | 1.46/6.07/11.12 | 1.64/9.00/15.63 | 0/0/0 | 0/0/0 |
| | Standard deviation | 1.22/1.50/2.26 | 1.02/1.25/1.10 | 0/0/0 | 0/0/0 |
| | The worst solution | 3.22/8.90/15.17 | 3.58/12.06/17.46 | 0/0/0 | 0/0/0 |
| | The best solution | 0/2.73/3.44 | 0.02/6.98/13.44 | 0/0/0 | 0/0/0 |
| Levy and Montalvo 2 | Average solution | 0/0.14/12.83 | 0.01/1.31/20.28 | 0/0.23/8.63 | 0/1.34/8.78 |
| | Standard deviation | 0/0.17/2.31 | 0.01/0.54/4.57 | 0/0.46/0.48 | 0/0.64/0.44 |
| | The worst solution | 0/0.81/17.84 | 0.06/2.78/34.78 | 0/1.60/9.42 | 0/2.49/9.57 |
| | The best solution | 0/0.01/9.09 | 0.00/0.28/14.28 | 0/0/7.38 | 0/0/7.89 |
| Sinusoidal | Average solution | −3.43/−1.02/−0.11 | −3.43/−0.41/0 | −3.50/−1.59/−0.09 | −3.18/−1/0 |
| | Standard deviation | 0.29/1.14/0.31 | 0.14/0.46/0 | 0/1.56/0.32 | 0.74/1.18/0 |
| | The worst solution | −2.12/−0.01/0 | −2.93/−0.01/0 | −3.50/−0.01/0 | −0.87/−0.01/0 |
| | The best solution | −3.50/−3.45/−1.66 | −3.50/−1.47/0 | −3.50/−3.50/−1.75 | −3.50/−3.50/0 |
| Rotated Expanded Schaffer | Average solution | 1.27/6.77/26.10 | 2.58/10.92/43.13 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0.74/1.96/5.54 | 0.47/1.18/1.59 | 0/0/0 | 0/0/0 |
| | The worst solution | 2.60/9.24/34.49 | 3.56/13.11/45.81 | 0/0/0 | 0/0/0 |
| | The best solution | 0/1.92/13.45 | 1.73/8.63/39.45 | 0/0/0 | 0/0/0 |
| Alpine | Average solution | 0/2.67/39.15 | 0.12/6.36/57.50 | 0/2.14/27.04 | 0/3.36/30.12 |
| | Standard deviation | 0/1.70/8.56 | 0.34/1.81/8.48 | 0/1.17/3.96 | 0/1.19/3.65 |
| | The worst solution | 0/7.30/62.51 | 1.81/10.83/75.846 | 0/5.71/34.30 | 0/6.04/38.17 |
| | The best solution | 0/0.27/17.71 | 0/2.94/44.30 | 0/0/16.87 | 0/1/23.11 |
| Moved axis parallel hyper-ellipsoid | Average solution | 0/976.19/102,196.49 | 10.66/14,535.57/714,363.54 | 0/0/56,727.35 | 0/0/44,270.31 |
| | Standard deviation | 0/2276.36/46,700.85 | 21.97/6358.06/125,266.58 | 0/0/54,460.61 | 0/0/70,284.76 |
| | The worst solution | 0/8532.57/196,643.04 | 105.74/33,237.39/967,098.52 | 0/0/195,537.54 | 0/0/235,721.53 |
| | The best solution | 0/7.13/21,301.13 | 0.01/5625.85/484,347.13 | 0/0/0 | 0/0/0 |
| Schwefel | Average solution | −3472.55/−8400.88/−25,222.99 | −3072.44/−6957.38/−17,338.78 | −3772.47/−10,596.14/−31,640.44 | −3772.47/−10,651.80/−30,094.87 |
| | Standard deviation | 301.97/862.61/2717.54 | 402.88/941.95/2511.55 | 229.58/745.71/2489.84 | 233.82/635.86/1790.82 |
| | The worst solution | −2865.61/−6560.66/−20,423.87 | −2151.57/−4665.91/−12,121.78 | −3355.12/−9101.35/−25,385.62 | −3235.87/−9101.35/−26,155.70 |
| | The best solution | −4070.58/−9946.11/−31,017.18 | −3733.40/−8648.21/−21,643.45 | −4189.83/−11,854.02/−37,370.87 | −4189.83/−11,854.01/−32,695.87 |

| Function | r1, r2 | 0.5 | | | |
|---|---|---|---|---|---|
| | Dimension | 10/30/100 | 10/30/100 | 10/30/100 | 10/30/100 |
| Sphere1 | Average solution | 0/0/1666.67 | 0/937.89/62,668.95 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0/0/4611.33 | 0.01/1954.53/19,933.81 | 0/0/0 | 0/0/0 |
| | The worst solution | 0/0/20,000 | 0.04/8616.07/110,110.64 | 0/0/0 | 0/0/0 |
| | The best solution | 0/0/0 | 0/62.99/35,629.65 | 0/0/0 | 0/0/0 |
| Sphere2 | Average solution | 56.67/2033.33/46,393.33 | 151.13/3905.81/49,939.96 | 0/0/293.33 | 0/0/236.6667 |
| | Standard deviation | 67.89/256.41/35,977.84 | 122.87/6591.85/40,171.84 | 0/0/228.84 | 0/0/225.1181 |
| | The worst solution | 200/2600/189,200 | 500.38/38,800/153,700 | 0/0/900 | 0/0/700 |
| | The best solution | 0/1600/8600 | 0.03/2361.29/9103.90 | 0/0/0 | 0/0/0 |
| Rastrigin | Average solution | 0/18.90/130.24 | 26.30/142.34/865.88 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0/22.91/83.07 | 11.70/31.11/82.15 | 0/0/0 | 0/0/0 |
| | The worst solution | 0/82.72/335.59 | 48.75/193.01/1096.66 | 0/0/0 | 0/0/0 |
| | The best solution | 0/0/28.92 | 7.97/67.63/737.39 | 0/0/0 | 0/0/0 |
| Rosenbrock | Average solution | 0.27/26.04/98.15 | 379.22/20,307.62/33,690,571.22 | 0.47/28.81/98.87 | 2.39/28.90/98.93 |
| | Standard deviation | 1.01/0.54/0.08 | 845.50/34,257.18/41,367,235.20 | 1.54/0.09/0.04 | 3.33/0.06/0.04 |
| | The worst solution | 3.99/27.30/98.24 | 3032.11/107,129.05/231,274,237.32 | 7.48/28.94/98.94 | 8.95/28.98/98.99 |
| | The best solution | 0/25.04/97.89 | 4.96/1698.53/6,545,833.08 | 0/28.59/98.77 | 1.29 × 10⁻⁸/28.76/98.79 |
| Griewank | Average solution | 0/0/28.04 | 0.19/5.28/478.06 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0/0/42.54 | 0.18/3.19/155.18 | 0/0/0 | 0/0/0 |
| | The worst solution | 0/0/90.93 | 0.84/14.45/941.53 | 0/0/0 | 0/0/0 |
| | The best solution | 0/0/0 | 0.05/1.70/203.77 | 0/0/0 | 0/0/0 |
| Ackley | Average solution | 0/0/9.64 | 0.80/8.25/18.27 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0/0/6.53 | 0.85/2.90/0.79 | 0/0/0 | 0/0/0 |
| | The worst solution | 0/0/19.97 | 2.81/16.67/19.44 | 0/0/0 | 0/0/0 |
| | The best solution | 0/0/0 | 0/4.40/16.92 | 0/0/0 | 0/0/0 |
| Levy and Montalvo 2 | Average solution | 0/0/10.48 | 0/3.35/34.54 | 0/0.36/8.28 | 0/1.08/8.64 |
| | Standard deviation | 0/0/3.20 | 0/1.84/8.01 | 0/0.50/0.62 | 0/0.68/0.37 |
| | The worst solution | 0/0/17.73 | 0/7.62/49.10 | 0/1.51/9.37 | 0/2.20/9.32 |
| | The best solution | 0/0/5.40 | 0/0.70/18.90 | 0/0/6.31 | 0/0/7.70 |
| Sinusoidal | Average solution | −3.38/−1/−0.03 | −3.07/−0.12/0 | −3.27/−0.72/−0.05 | −3.21/−0.54/0 |
| | Standard deviation | 0.44/1.13/0.09 | 0.53/0.16/0 | 0.61/1.02/0.18 | 0.66/0.80/0 |
| | The worst solution | −1.75/0/0 | −1.75/0/0 | −1.75/0/0 | −1.75/0/0 |
| | The best solution | −3.50/−3.50/−0.44 | −3.50/−0.70/0 | −3.50/−3.50/−0.87 | −3.50/−3.50/0 |
| Rotated Expanded Schaffer | Average solution | 0.07/1.44/8.33 | 3/11.74/43.49 | 0/0/0 | 0/0/0 |
| | Standard deviation | 0.25/1.49/3.53 | 0.47/1.03/1.02 | 0/0/0 | 0/0/0 |
| | The worst solution | 1/5.98/14.94 | 3.70/12.97/46.10 | 0/0/0 | 0/0/0 |
| | The best solution | 0/0/0 | 1.42/8.57/41.56 | 0/0/0 | 0/0/0 |
| Alpine | Average solution | 0/0.91/16.46 | 0.31/8.41/73.22 | 0/2.45/27.24 | 0/2.93/30.70 |
| | Standard deviation | 0/1.94/7.65 | 0.76/3.95/13.39 | 0/1.23/6.95 | 0/1.44/4.19 |
| | The worst solution | 0/8.46/34.94 | 2.85/15.54/96.89 | 0/5.02/36.40 | 0/6.16/37.74 |
| | The best solution | 0/0.02/5.71 | 0/1.08/48.66 | 0/0/0 | 0/1/22.49 |
| Moved axis parallel hyper-ellipsoid | Average solution | 0/1383.33/102,196.49 | 20.64/9487.68/714,363.54 | 0/265.79/56,727.35 | 0/132.76/44,270.31 |
| | Standard deviation | 0/2215.48/46,700.85 | 90.81/5798.05/125,266.58 | 0/865.18/54,460.61 | 0/727.13/70,284.76 |
| | The worst solution | 0/7000/196,643.04 | 500/24,372.80/967,098.52 | 0/3982.80/195,537.54 | 0/3982.65/235,721.53 |
| | The best solution | 0/0/21,301.13 | 0/2221.47/484,347.13 | 0/0/0 | 0/0/0 |
| Schwefel | Average solution | −3764.52/−9823.92/−26,540.45 | −3134.09/−7401.16/−18,304.47 | −3764.52/−10,431.89/−30,589.24 | −3732.73/−10,492.05/−29,986.84 |
| | Standard deviation | 142.42/1344.37/3360.98 | 450.61/794.06/2000.45 | 243.94/662.12/2767.91 | 226.00/698.81/2431.96 |
| | The worst solution | −3474.36/−6944.90/−20,227.96 | −2057.33/−5583.79/−14,272.03 | −3235.87/−8743.62/−25,650.73 | −3235.87/−8624.37/−25,743.44 |
| | The best solution | −3951.34/−11,854.02/−31,935.70 | −3832.10/−9056.25/−22,371.46 | −4189.83/−11,496.29/−37,956.42 | −4189.83/−11,948.07/−34,477.94 |

| Type | r1, r2 | 0.5 | | | |
|---|---|---|---|---|---|
| SPSO | Average solution | 5975.93 | 5975.93 | 5975.93 | 5975.94 |
| | Standard deviation | 0.00 | 0.00 | 0.01 | 0.01 |
| | The worst solution | 5975.93 | 5975.93 | 5975.96 | 5975.99 |
| | The best solution | 5975.93 | 5975.93 | 5975.93 | 5975.93 |
| LDIW-PSO | Average solution | 5975.93 | 5975.93 | 6001.34 | 6026.76 |
| | Standard deviation | 0.00 | 0.00 | 139.18 | 193.41 |
| | The worst solution | 5975.93 | 5975.93 | 6738.24 | 6738.26 |
| | The best solution | 5975.93 | 5975.93 | 5975.93 | 5975.93 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dai, H.-P.; Chen, D.-D.; Zheng, Z.-S. Effects of Random Values for Particle Swarm Optimization Algorithm. Algorithms 2018, 11, 23. https://doi.org/10.3390/a11020023
