Evaluating Typical Algorithms of Combinatorial Optimization to Solve Continuous-Time Based Scheduling Problem

Abstract

1. Introduction

- Enumerating techniques

- Different kinds of relaxation

- Artificial intelligence techniques (neural networks, genetic algorithms, agents, etc.)

2. Materials and Methods

2.1. Notions and Base Data for Scheduling

- The enterprise's operating process uses resources of different types (for instance, machines, personnel, and rigging). These resources form a set; an individual resource is denoted r.

- The manufacturing procedure of the enterprise is formalized as a set of operations J linked by precedence relations. The precedence relations are collected in a square matrix indexed by pairs of operations i, j ∈ J. An element of the matrix equals one iff operation j follows operation i, and zero otherwise.

- Each operation i is described by its duration. The durations of all operations i ∈ J form the vector of operation durations.

- Each operation i has a list of resources that it uses while running. These resource requirements are represented by a matrix indexed by operation i and resource r. An element of the matrix equals one iff operation i of the manufacturing process allocates resource r, and zero otherwise.

- The enterprise's incoming orders are treated as manufacturing tasks for a given amount of end product and are collected in a set F. Each order f ∈ F is characterized by its end-product amount and its deadline. The elements of F are sorted by ascending deadline.
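The base data listed above can be held in a few plain containers. The following is a minimal sketch, not the authors' implementation: the names `Order`, `durations`, `precedence`, `usage`, `successors`, and `operations_on` are hypothetical, and the toy values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    amount: float    # end-product amount requested by the order
    deadline: float  # time by which the order must be finished


# Toy instance with 3 operations and 2 resources (illustrative values only).
durations = [4.0, 2.0, 3.0]  # duration of each operation i

# precedence[i][j] == 1 iff operation j follows operation i, else 0
precedence = [
    [0, 1, 0],
    [0, 0, 1],
    [0, 0, 0],
]

# usage[i][r] == 1 iff operation i allocates resource r, else 0
usage = [
    [1, 0],
    [0, 1],
    [1, 0],
]

# The set F is kept sorted by ascending deadline.
orders = sorted([Order(10, 40.0), Order(5, 25.0)], key=lambda o: o.deadline)


def successors(i):
    """Operations that directly follow operation i."""
    return [j for j, flag in enumerate(precedence[i]) if flag == 1]


def operations_on(r):
    """Operations that allocate resource r."""
    return [i for i, row in enumerate(usage) if row[r] == 1]
```

Resources with more than one entry in `operations_on(r)` are the ones on which operations compete for position, which is the case both procedures in Section 2.3 test for.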

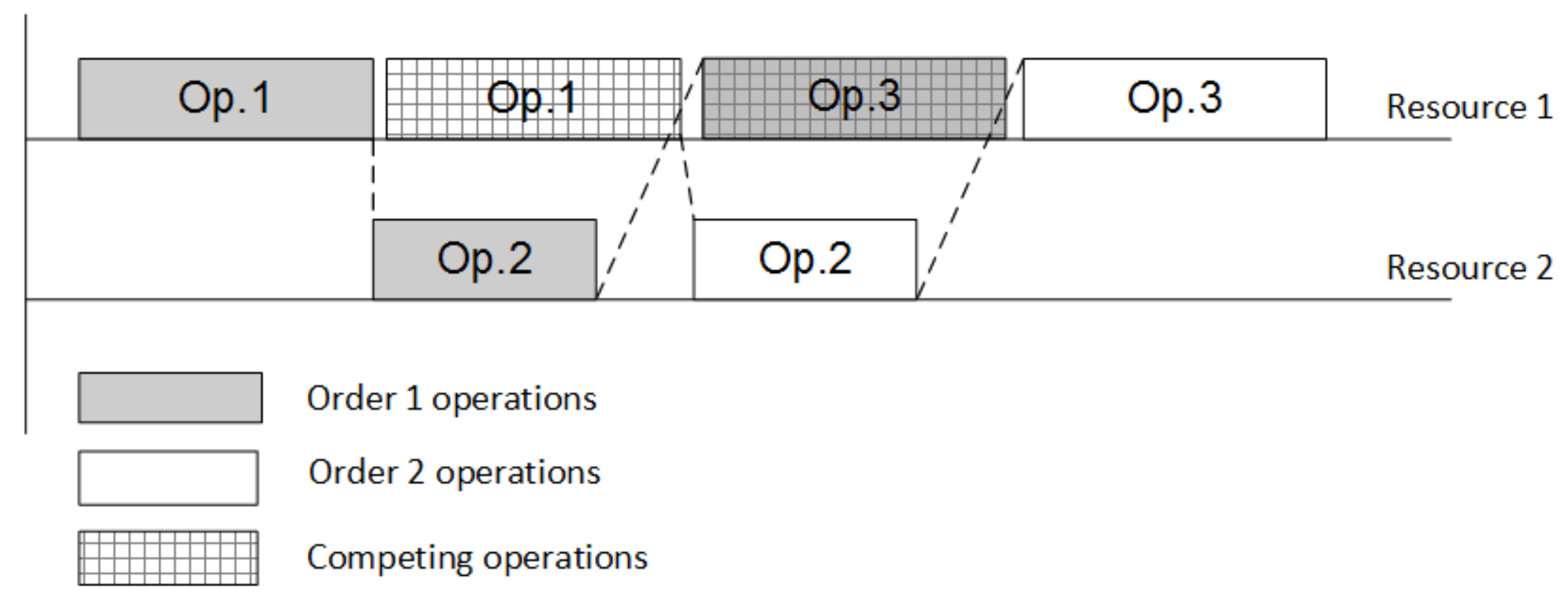

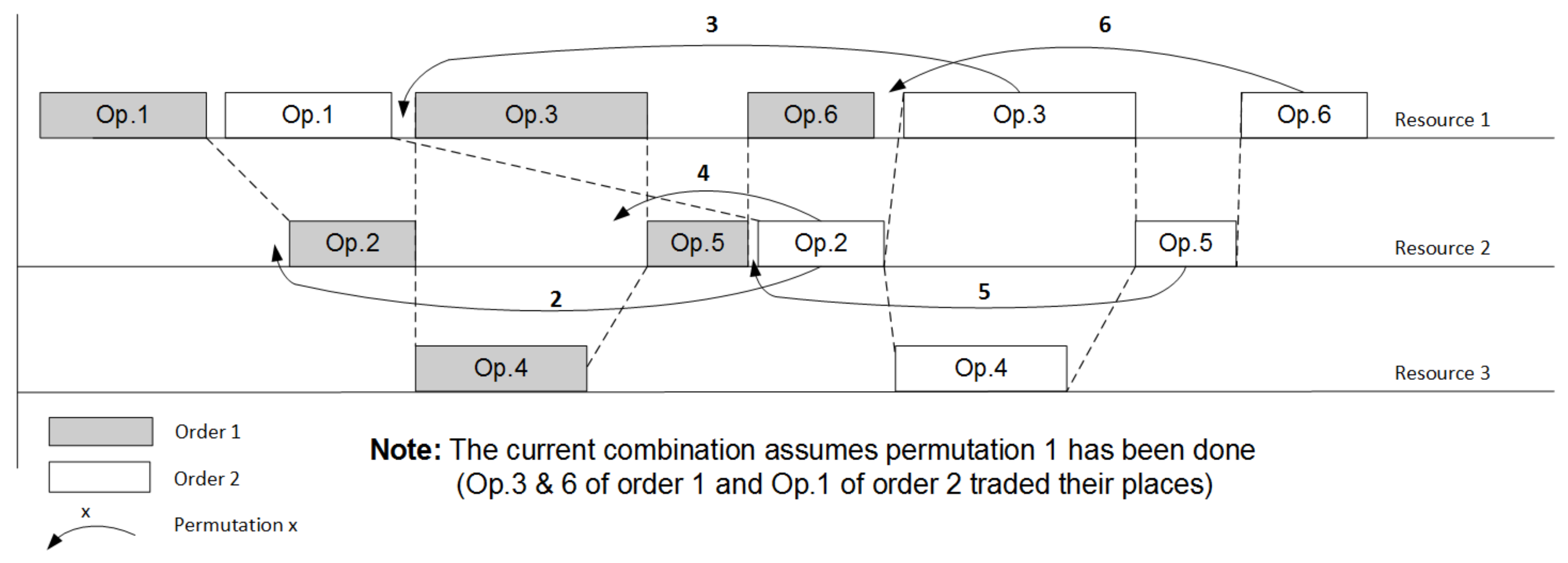

2.2. Continuous-Time Problem Setting

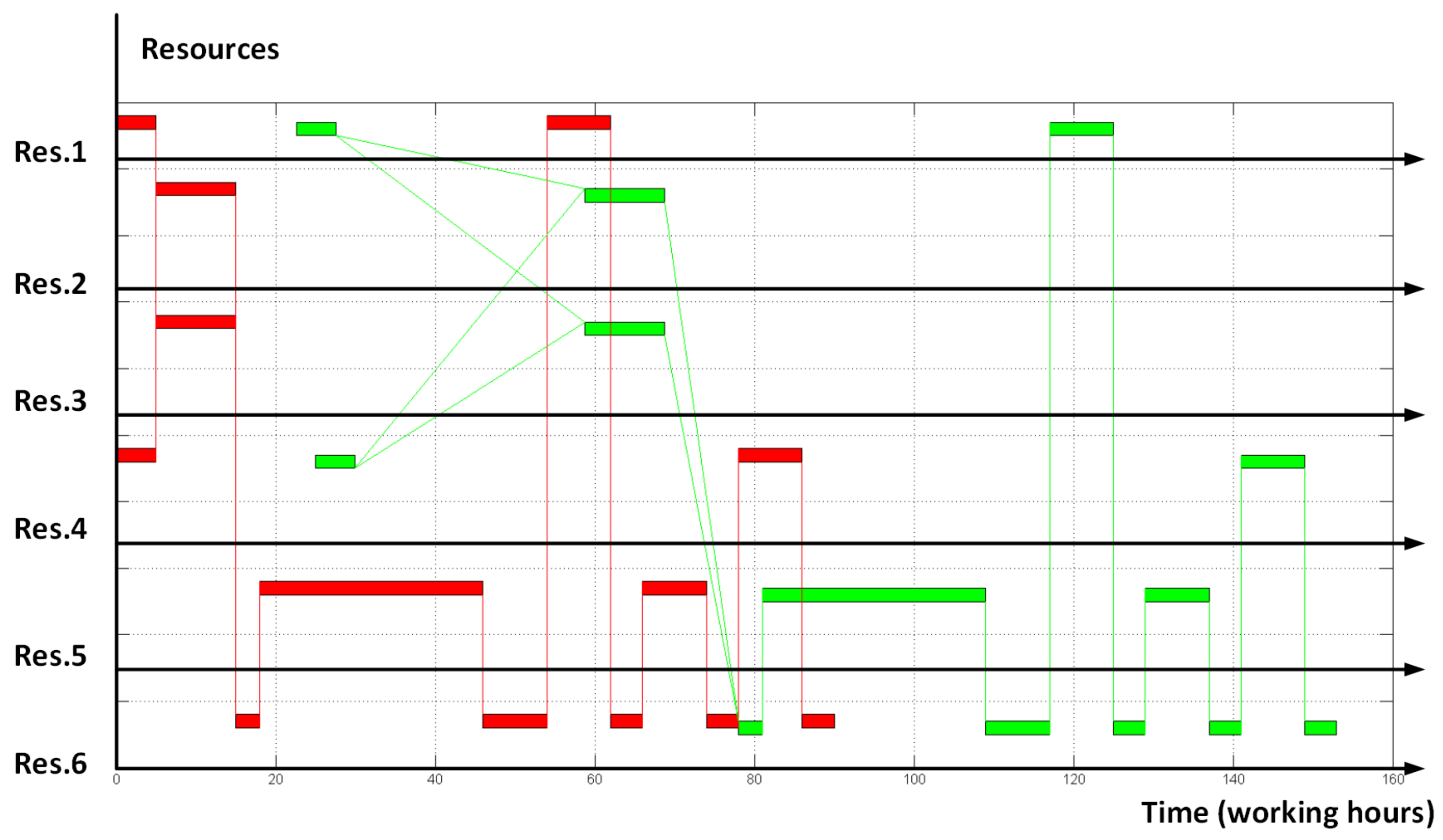

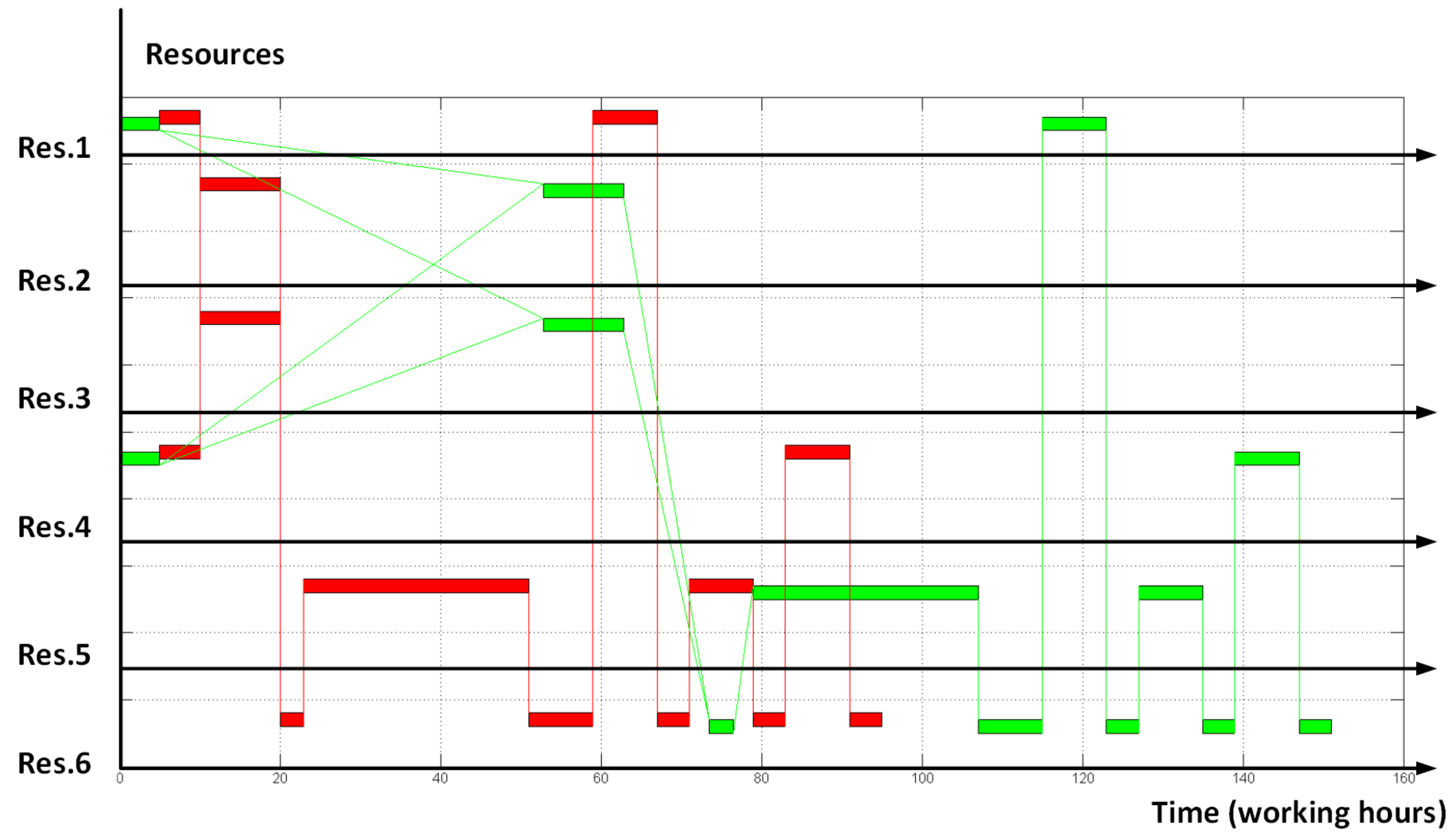

- Precedence graph of the manufacturing procedure:

- Meeting deadlines for all orders

2.3. Combinatorial Optimization Techniques

2.3.1. Enumeration and Branch-and-Bound Approach

Procedure 1: branch-and-bound

1. Find the initial solution of the LP problem (1)–(3) for the initial combination, which corresponds to the case when all orders are in a row (the first operation of each following order starts only after the last operation of the preceding order is completed).
2. Remember the solution and keep the value of the objective function as the temporary best result.
3. Select the last order.
4. Select the resource that is used by the last operation of the precedence graph G of the manufacturing process.
5. The branch is now formulated.
6. Condition: does the selected resource r have more than one operation in the manufacturing procedure that allocates it?
   - 6.1. If yes, begin evaluating the branch:
     - Shift operation i one competing position to the left.
     - Find the solution of the LP problem (1)–(3) for the current combination and calculate the objective function.
     - Condition: is the solution feasible?
       - If feasible: if the objective function value is better than the temporary best result, save the current solution as the new best result. Then switch to the preceding operation of the currently selected order f on the currently selected resource r, go to step 5, and start evaluating the new branch.
       - If not feasible: stop evaluating the branch, switch to the preceding resource, go to step 5, and start evaluating the new branch.
7. Switch to the preceding order.
8. Repeat steps 4–7 until no more branches are available.
9. Repeat steps 3–8 until no more feasible shifts are possible for all operations in all branches.
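The core idea of Procedure 1 (shift an operation left, re-evaluate, keep the incumbent, abandon a branch once it becomes infeasible) can be sketched as follows. This is a simplified reading, not the authors' code: it prunes on infeasibility only and computes no lower bound, the LP (1)–(3) is replaced by a toy earliest-start evaluator, and the objective (sum of order finish times) is an assumption. The names `Op`, `Combination`, `make_evaluator`, `shift_left`, `freeze`, and `branch_and_bound` are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Op = Tuple[int, int]               # (order index, operation index), hypothetical encoding
Combination = Dict[str, List[Op]]  # resource name -> processing sequence


def make_evaluator(durations, deadlines):
    """Toy stand-in for solving LP (1)-(3): earliest-start times for a fixed
    sequence on every resource; returns None when the schedule is infeasible."""
    def evaluate(comb: Combination) -> Optional[float]:
        pos = {r: 0 for r in comb}
        free = {r: 0.0 for r in comb}
        finish: Dict[Op, float] = {}
        total = sum(len(seq) for seq in comb.values())
        while len(finish) < total:
            progressed = False
            for r, seq in comb.items():
                if pos[r] == len(seq):
                    continue
                f, i = seq[pos[r]]
                if i > 0 and (f, i - 1) not in finish:
                    continue  # predecessor within the same order is not scheduled yet
                start = max(free[r], finish.get((f, i - 1), 0.0))
                finish[(f, i)] = free[r] = start + durations[f][i]
                pos[r] += 1
                progressed = True
            if not progressed:
                return None  # sequences contradict the precedence relations
        ends = [max(t for (f, _), t in finish.items() if f == k)
                for k in range(len(deadlines))]
        if any(e > d for e, d in zip(ends, deadlines)):
            return None  # a deadline is violated
        return float(sum(ends))
    return evaluate


def shift_left(comb: Combination, r: str, op: Op) -> Combination:
    """Copy of comb with op moved one position earlier on resource r."""
    seq = comb[r][:]
    k = seq.index(op)
    seq[k - 1], seq[k] = seq[k], seq[k - 1]
    new = dict(comb)
    new[r] = seq
    return new


def freeze(comb: Combination):
    return tuple((r, tuple(seq)) for r, seq in sorted(comb.items()))


def branch_and_bound(initial: Combination,
                     evaluate: Callable[[Combination], Optional[float]]):
    best_val = evaluate(initial)
    if best_val is None:
        raise ValueError("the initial combination must be feasible")
    best, seen, stack = initial, {freeze(initial)}, [initial]
    while stack:
        comb = stack.pop()
        for r, seq in comb.items():
            for op in seq[1:]:              # the leftmost operation cannot shift
                child = shift_left(comb, r, op)
                key = freeze(child)
                if key in seen:
                    continue
                seen.add(key)
                val = evaluate(child)
                if val is None:
                    continue                # infeasible: stop evaluating this branch
                if val < best_val:
                    best, best_val = child, val
                stack.append(child)         # feasible: keep branching from here
    return best, best_val


# Two orders, each with one operation on resource A followed by one on B.
evaluate = make_evaluator(durations=[[5, 1], [1, 1]], deadlines=[7, 10])
initial = {"A": [(0, 0), (1, 0)], "B": [(0, 1), (1, 1)]}
best, best_val = branch_and_bound(initial, evaluate)
```

In this toy instance the initial combination scores 13, and the search ends with order 1 placed first on both resources for a score of 9. The combination that swaps only resource B misses the first deadline and is discarded without further branching.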

2.3.2. Gradient-Alike Algorithm

Procedure 2: gradient algorithm

1. Find the initial solution of the LP problem (1)–(3) for the initial combination, which corresponds to the case when all orders are in a row (the first operation of each following order starts only after the last operation of the preceding order is completed).
2. Remember the solution and keep the value of the objective function as the temporary best result.
3. Select the last order.
4. Select the resource that is used by the first operation of the sequence graph of the manufacturing process.
5. Select the current optimization variable for gradient optimization: the position of operation i of order f on resource r.
6. Condition: does the selected resource r have more than one operation in the manufacturing procedure that allocates it?
   - 6.1. If yes, begin optimizing the position:
     - 6.1.1. Set the step of shifting the operation to the maximum (shifting to the leftmost position).
     - 6.1.2. Find the ‘derivative’ of shifting operation i to the left: shift operation i one position to the left, find the solution of the LP problem (1)–(3) for the current combination, and calculate the objective function.
       - Condition A: is the solution feasible?
         - If feasible, Condition B: is the objective function value better than the temporary best result?
           - If yes: an optimization direction for the position has been found; proceed to step 6.1.3.
           - If not: there is no optimization direction for the current position. Stop optimizing the position, switch to the next operation, go to step 6, and repeat the search for its position.
         - If not feasible: there is no optimization direction for the current position. Stop optimizing the position, switch to the next operation, go to step 6, and repeat the search for its position.
     - 6.1.3. Define the maximum possible optimization step for the current position, starting from the initial step value. Shift operation i left using the step. Find the solution of the LP problem (1)–(3) for the current combination and calculate the objective function.
       - Condition C: is the solution feasible and the objective function value better than the temporary best result?
         - If yes: save the current solution as the new best result, stop optimizing the position, switch to the next operation, go to step 6, and repeat the search for its position.
         - If not: halve the step and repeat the operations starting from step 6.1.3.
     - Switch to the next operation, go to step 6, and optimize its position.
7. Switch to the preceding resource.
8. Repeat steps 5–7 for the currently selected resource.
9. Switch to the preceding order.
10. Repeat steps 4–9 for the currently selected order.
11. Repeat steps 3–10 until no improvements and/or no more feasible solutions exist.
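Steps 6.1.1–6.1.3 amount to a one-dimensional search over the position of a single operation: probe a one-position shift, and if it helps, try the largest shift and halve the step until a feasible improvement is found. The sketch below illustrates this on a single resource. It is an assumption-laden toy, not the authors' code: `shift_left`, `optimize_position`, and `evaluate` are hypothetical names, and the evaluator (sum of completion times on one machine, with deadlines) stands in for the LP (1)–(3).

```python
from typing import Callable, List, Optional, Tuple


def shift_left(seq: List[str], k: int, step: int) -> List[str]:
    """Copy of seq with the element at index k moved `step` positions left."""
    new = seq[:]
    op = new.pop(k)
    new.insert(k - step, op)
    return new


def optimize_position(seq: List[str], k: int,
                      evaluate: Callable[[List[str]], Optional[float]],
                      best_val: float) -> Tuple[List[str], float]:
    """Gradient-alike search for the position of the operation at index k."""
    if k == 0:
        return seq, best_val                 # already leftmost
    # Step 6.1.2: the 'derivative' is a probe shift by one position.
    probe = evaluate(shift_left(seq, k, 1))
    if probe is None or probe >= best_val:
        return seq, best_val                 # no optimization direction
    # Steps 6.1.1 and 6.1.3: start from the maximum step, halve on failure.
    step = k
    while step >= 1:
        cand = shift_left(seq, k, step)
        val = evaluate(cand)
        if val is not None and val < best_val:
            return cand, val                 # feasible and better: accept
        step //= 2
    return seq, best_val                     # not reached: step 1 equals the probe


# Toy single-machine evaluator (illustrative values only).
durations = {"a": 4, "b": 3, "c": 3, "d": 2, "e": 1}
deadlines = {"a": 4, "b": 100, "c": 100, "d": 100, "e": 100}


def evaluate(seq: List[str]) -> Optional[float]:
    t, total = 0, 0
    for job in seq:
        t += durations[job]
        if t > deadlines[job]:
            return None                      # deadline missed: infeasible
        total += t
    return float(total)


start = ["a", "b", "c", "d", "e"]
result = optimize_position(start, 4, evaluate, evaluate(start))
```

Here the probe improves the objective from 46 to 45, the maximum step of 4 is infeasible because job `a` would miss its deadline, and the halved step of 2 is accepted with an objective of 43. The accepted position is not necessarily the best one (a step of 3 is never tried), which reflects the heuristic nature of the step-halving rule.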

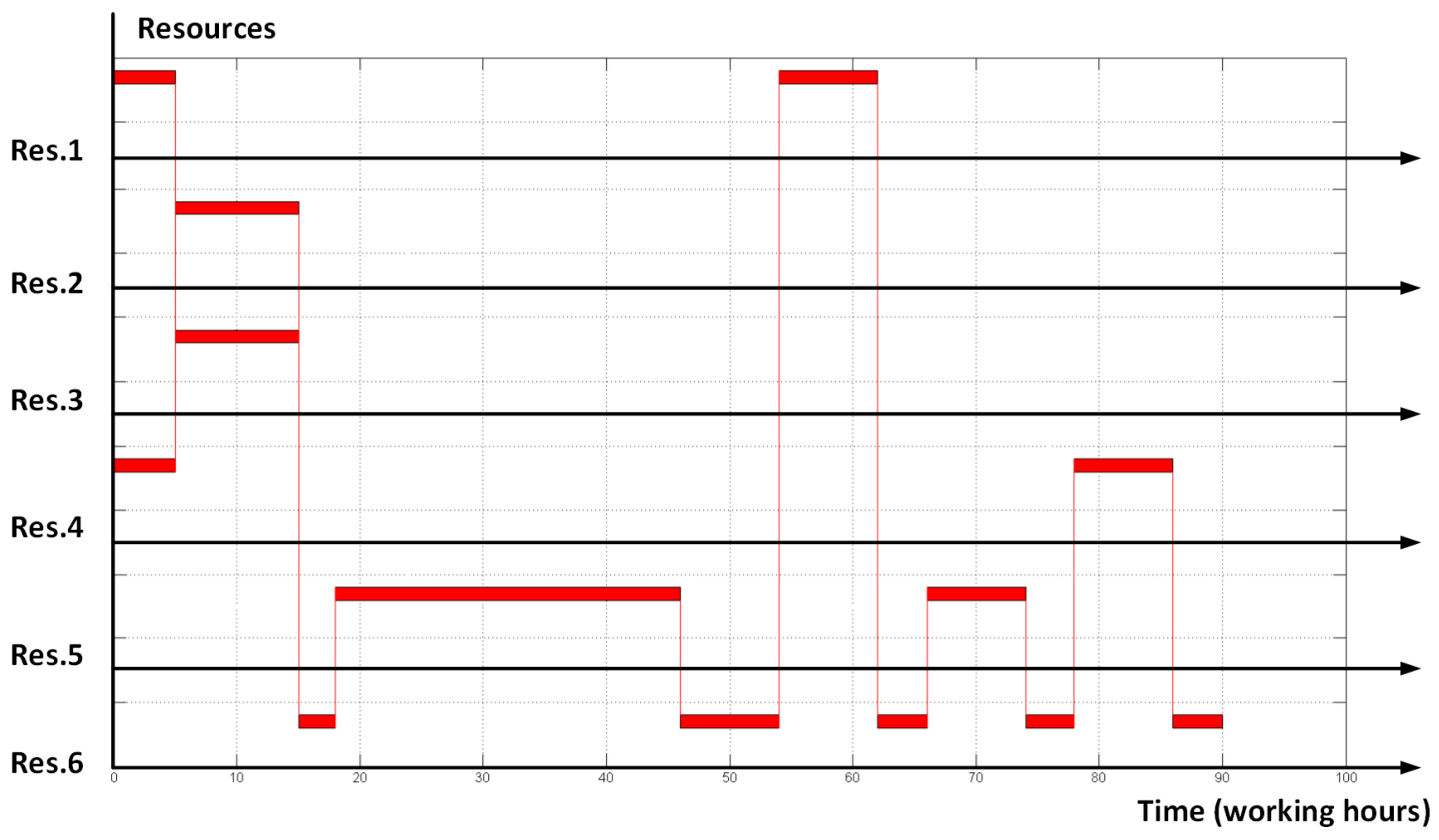

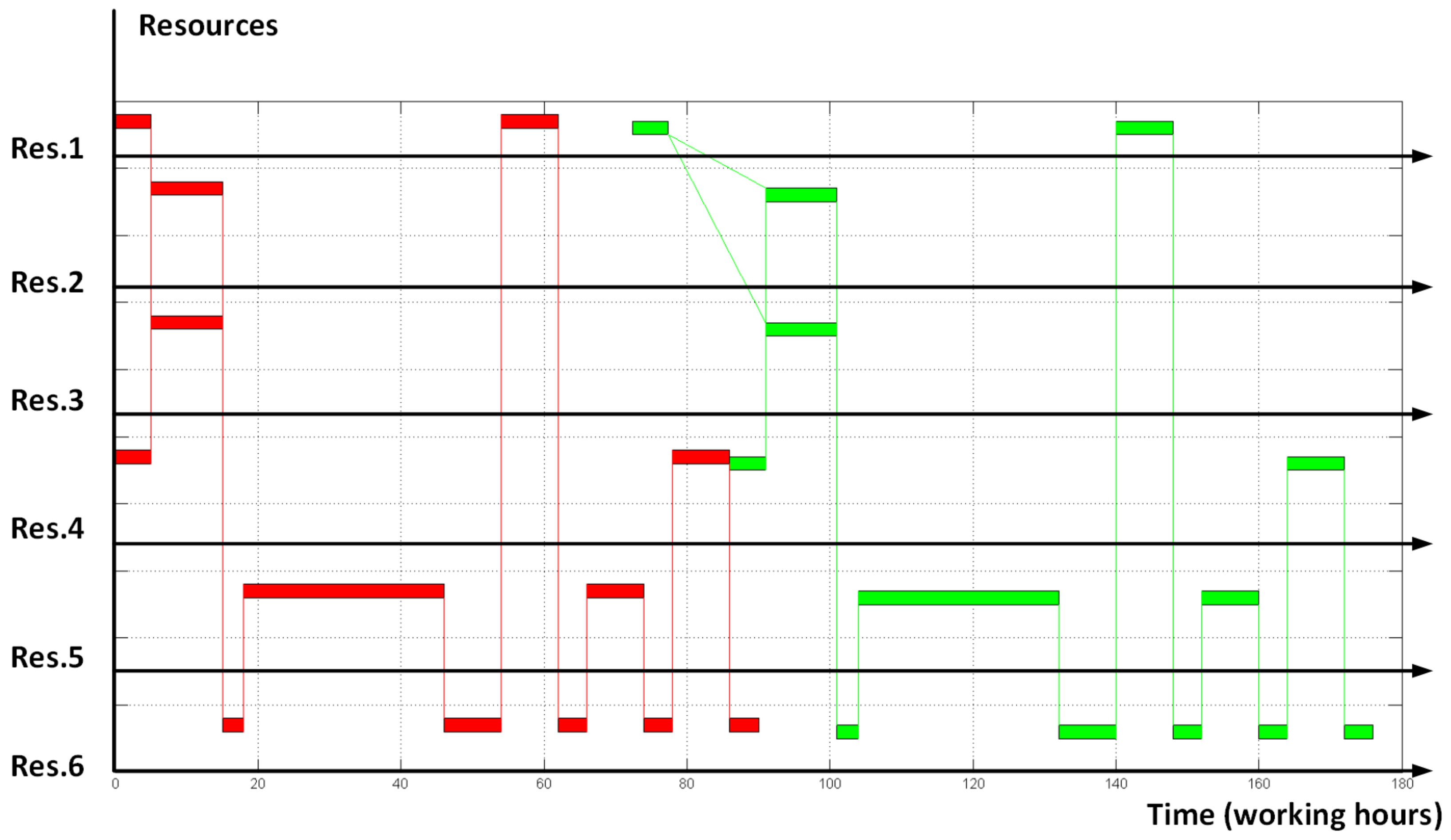

3. Results of the Computational Experiment

4. Discussion

4.1. Random Search as an Effort to Find Global Optimum

5. Conclusions

- Genetic algorithms. At first glance, evolutionary algorithms [15] seem well suited to the scheduling problem (1)–(3). The combinatorial vector of permutations appears to lend itself naturally to binary crossover [15], while the narrow feasible region of the optimization problem should contribute to fast convergence of the breeding procedure. We leave this question for further research and discussion.

- Dynamic programming. Dynamic programming algorithms [16] have a wide range of applications in global optimization, and in the resource-constrained project scheduling problem (RCPSP) in particular. Owing to limits on space and time, we do not cover this approach here but will return to it in future papers.

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Artigues, C.; Demassey, S.; Néron, E.; Sourd, F. Resource-Constrained Project Scheduling Models, Algorithms, Extensions and Applications; Wiley-Interscience: Hoboken, NJ, USA, 2008.

2. Meyer, H.; Fuchs, F.; Thiel, K. Manufacturing Execution Systems. Optimal Design, Planning, and Deployment; McGraw-Hill: New York, NY, USA, 2009.

3. Jozefowska, J.; Weglarz, J. Perspectives in Modern Project Scheduling; Springer: New York, NY, USA, 2006.

4. Manne, A.S. On the Job-Shop Scheduling Problem. Oper. Res. 1960, 8, 219–223.

5. Jones, A.; Rabelo, L.C. Survey of Job Shop Scheduling Techniques. In Wiley Encyclopedia of Electrical and Electronics Engineering; National Institute of Standards and Technology: Gaithersburg, MD, USA, 1999. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.37.1262&rep=rep1&type=pdf (accessed on 10 April 2017).

6. Taravatsadat, N.; Napsiah, I. Application of Artificial Intelligent in Production Scheduling: A critical evaluation and comparison of key approaches. In Proceedings of the 2011 International Conference on Industrial Engineering and Operations Management, Kuala Lumpur, Malaysia, 22–24 January 2011; pp. 28–33.

7. Hao, P.C.; Lin, K.T.; Hsieh, T.J.; Hong, H.C.; Lin, B.M.T. Approaches to simplification of job shop models. In Proceedings of the 20th Working Seminar of Production Economics, Innsbruck, Austria, 19–23 February 2018.

8. Trevisan, L. Combinatorial Optimization: Exact and Approximate Algorithms; Stanford University: Stanford, CA, USA, 2011.

9. Wilf, H.S. Algorithms and Complexity; University of Pennsylvania: Philadelphia, PA, USA, 1994.

10. Jacobson, J. Branch and Bound Algorithms: Principles and Examples; University of Copenhagen: Copenhagen, Denmark, 1999.

11. Erickson, J. Models of Computation; University of Illinois: Champaign, IL, USA, 2014.

12. Ruder, S. An Overview of Gradient Descent Optimization Algorithms; NUI Galway: Dublin, Ireland, 2016.

13. Cormen, T.H.; Leiserson, C.E.; Rivest, R.L.; Stein, C. Introduction to Algorithms, 3rd ed.; Massachusetts Institute of Technology: London, UK, 2009.

14. Kalitkyn, N.N. Numerical Methods; Chislennye Metody; Nauka: Moscow, Russia, 1978. (In Russian)

15. Haupt, R.L.; Haupt, S.E. Practical Genetic Algorithms, 2nd ed.; Wiley-Interscience: Hoboken, NJ, USA, 2004.

16. Mitchell, I. Dynamic Programming Algorithms for Planning and Robotics in Continuous Domains and the Hamilton-Jacobi Equation; University of British Columbia: Vancouver, BC, Canada, 2008.

17. Miner, D.; Shook, A. MapReduce Design Patterns: Building Effective Algorithms and Analytics for Hadoop and Other Systems; O’Reilly Media: Sebastopol, CA, USA, 2013.

| Algorithm | Resulting “Makespan” Objective Function | Finish Time, Order 1 | Finish Time, Order 2 | Full Number of Operation Permutations (Including Non-Feasible) | Number of Iterated Permutations | Number of Iterated Non-Feasible Permutations | Calculation Time, Seconds |
|---|---|---|---|---|---|---|---|
| B&B | 235 | 86 | 149 | 318 | 25 | 11 | 3.6 |
| Gradient | 238 | 91 | 147 | 318 | 18 | 4 | 2.8 |

| Number of Operations | Algorithm | Resulting “Makespan” Objective Function | Finish Time, Order 1 | Finish Time, Order 2 | Number of Iterated Permutations | Number of Iterated Non-Feasible Permutations | Calculation Time, Seconds |
|---|---|---|---|---|---|---|---|
| 25 | B&B | 348 | 134 | 214 | 71 | 29 | 5.4 |
| 25 | Gradient | 358 | 139 | 219 | 36 | 9 | 3.1 |
| 50 | B&B | 913 | 386 | 527 | 201 | 59 | 25 |
| 50 | Gradient | 944 | 386 | 558 | 84 | 22 | 3.7 |
| 65 | B&B | 1234 | 488 | 746 | 490 | 70 | 112 |
| 65 | Gradient | 1296 | 469 | 826 | 126 | 66 | 11.2 |
| 100 | B&B | 1735 | 656 | 1079 | 809 | 228 | 288 |
| 100 | Gradient | 1761 | 669 | 1092 | 677 | 83 | 237 |
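The trade-off between solution quality and run time for 25 to 100 operations can be made explicit with a little arithmetic. The snippet below is only an illustration: the objective values and calculation times are copied from the table rows for 25, 50, 65, and 100 operations, while `rows`, `relative_gap`, and `speedup` are hypothetical names.

```python
# (operations, B&B objective, gradient objective, B&B time in s, gradient time in s)
rows = [
    (25, 348, 358, 5.4, 3.1),
    (50, 913, 944, 25.0, 3.7),
    (65, 1234, 1296, 112.0, 11.2),
    (100, 1735, 1761, 288.0, 237.0),
]


def relative_gap(bb: float, grad: float) -> float:
    """How much worse the gradient objective is than B&B, in percent."""
    return 100.0 * (grad - bb) / bb


def speedup(bb_time: float, grad_time: float) -> float:
    """How many times faster the gradient run was than the B&B run."""
    return bb_time / grad_time


for n, bb, grad, t_bb, t_grad in rows:
    print(f"{n:>3} operations: gap {relative_gap(bb, grad):.2f}%, "
          f"speed-up x{speedup(t_bb, t_grad):.1f}")
```

On these four instances the gradient result stays within roughly 1.5% to 5% of the B&B result, while the time advantage varies widely from one instance to the next.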

| Algorithm | Resulting “Makespan” Objective Function | Finish Time, Order 1 | Finish Time, Order 2 | Full Number of Operation Permutations (Including Non-Feasible) | Number of Iterated Permutations | Number of Iterated Non-Feasible Permutations | Calculation Time |
|---|---|---|---|---|---|---|---|
| B&B | 4909 | 1886 | 3023 | Unknown | ≈1200 (manually stopped) | Unknown (≈10% of total) | Manually stopped after ≈7 h |
| Gradient | 4944 | 1888 | 3056 | Unknown | ≈550 | Unknown (≈30% of total) | ≈3 h |
| Random (gradient extension) | An attempt to implement the randomized algorithm led to a high computational load on the PC with no reasonable estimate of the calculation time. Reaching a feasible starting point for the first gradient iteration required enumerating more than 1000 variants. | | | | | | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lazarev, A.A.; Nekrasov, I.; Pravdivets, N. Evaluating Typical Algorithms of Combinatorial Optimization to Solve Continuous-Time Based Scheduling Problem. Algorithms 2018, 11, 50. https://doi.org/10.3390/a11040050
