Windthrow Detection in European Forests with Very High-Resolution Optical Data

Abstract

1. Introduction

- windthrow areas ≥0.5 ha, and

- groups consisting of only a few fallen trees (both tree-fall gaps and freestanding groups).

2. Materials and Methods

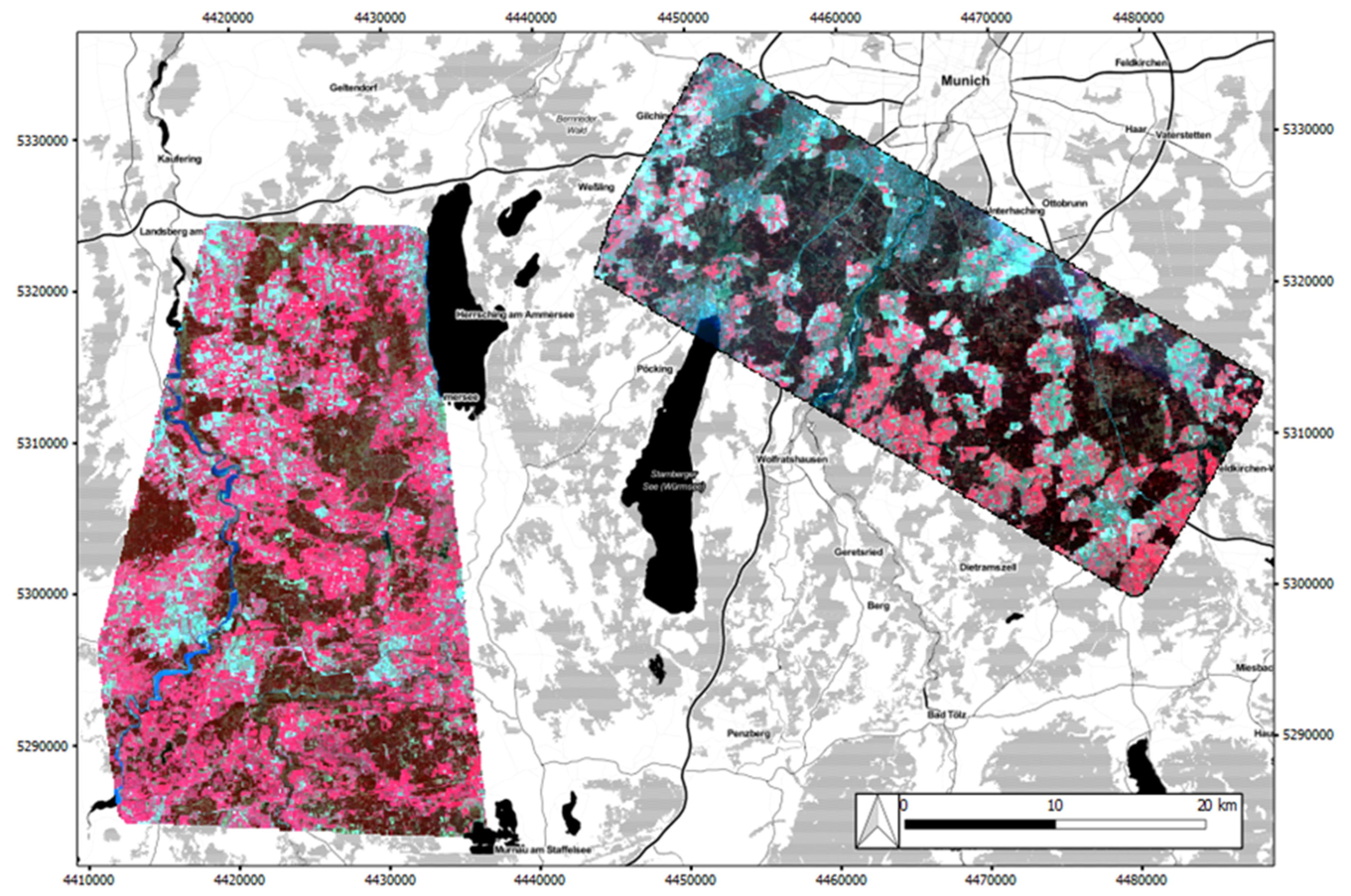

2.1. Storm Niklas and Study Area

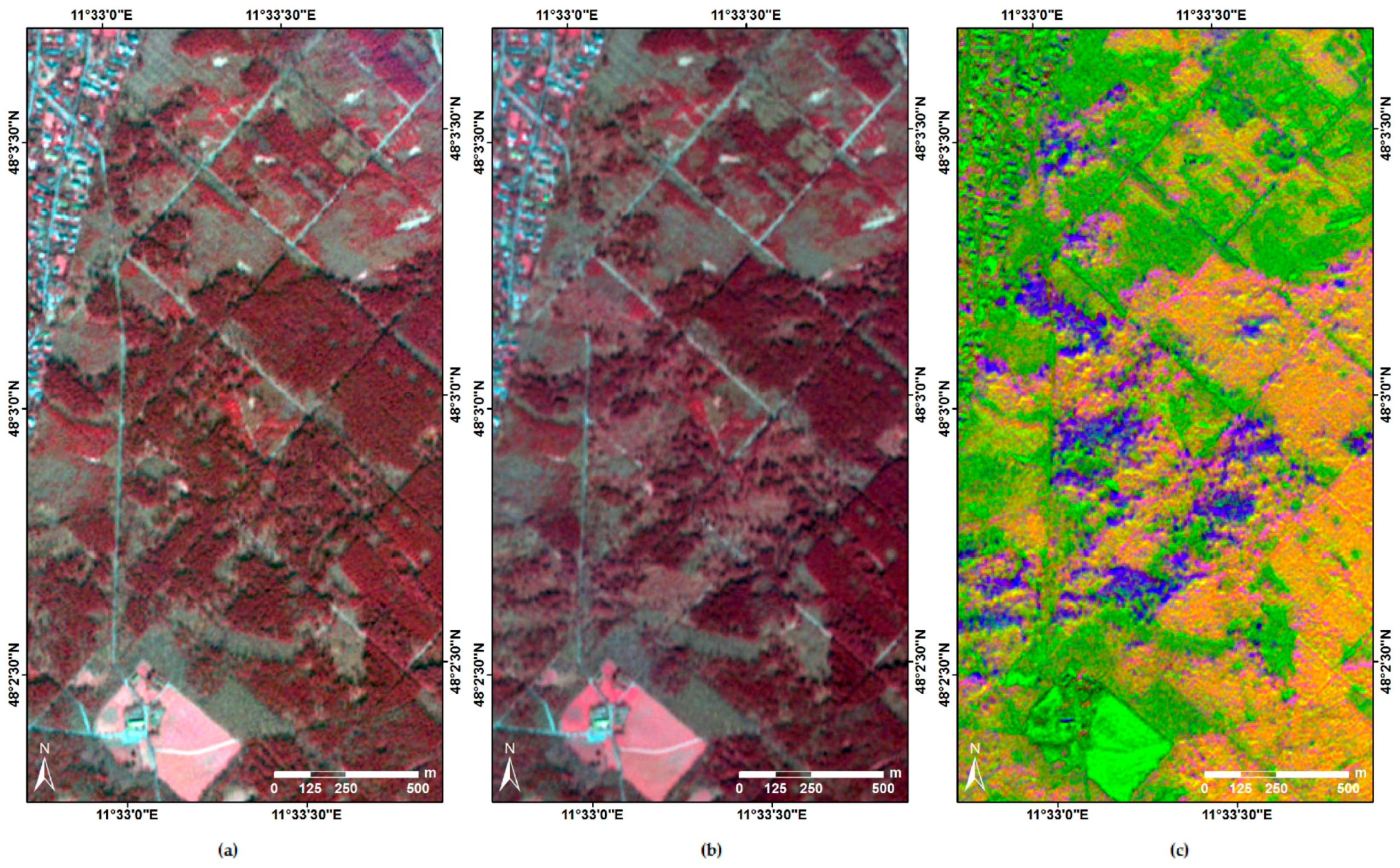

2.2. RapidEye Data Set

2.3. Reference Data

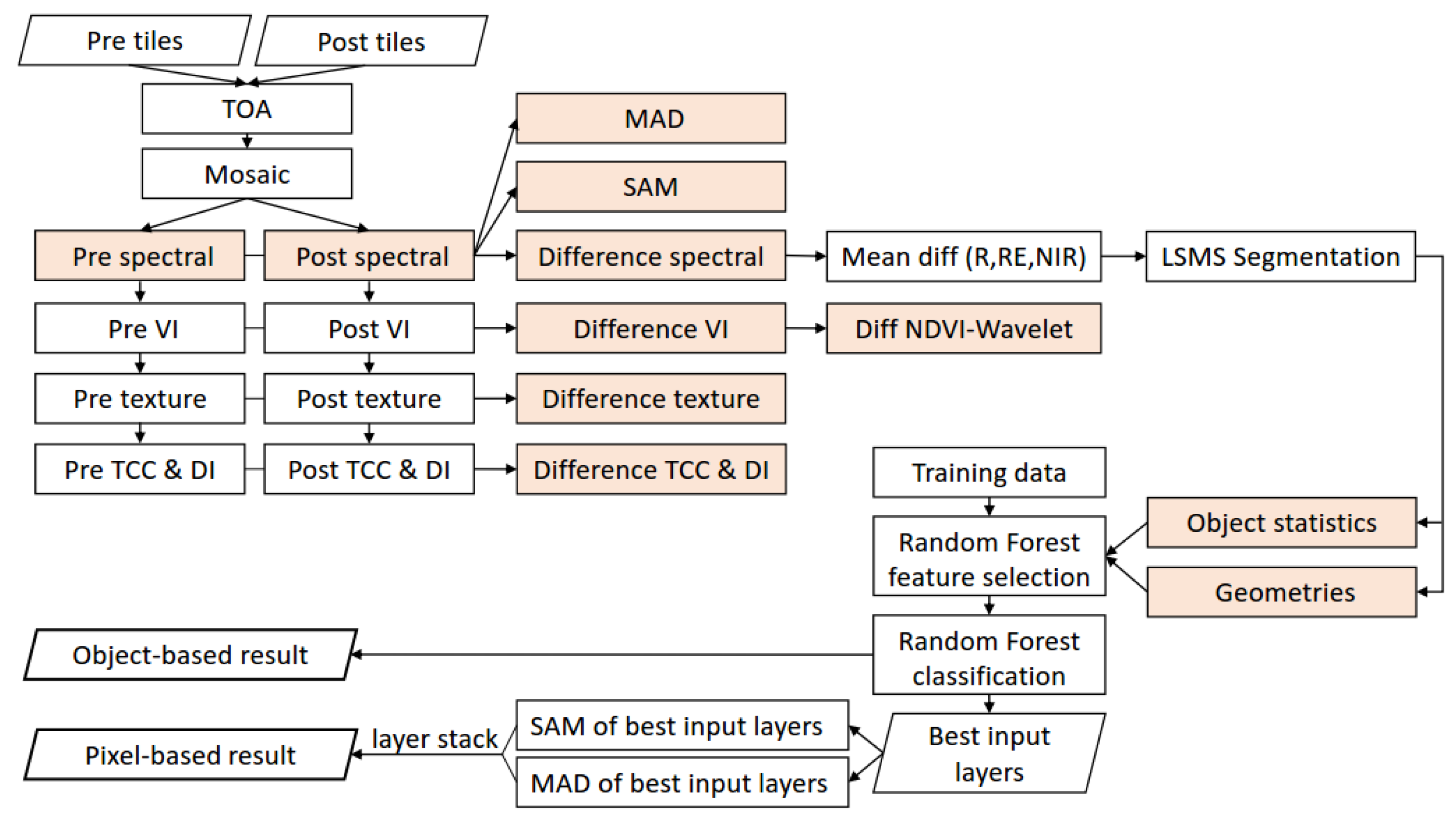

2.4. Method/Approach

- calculation of input layers,

- image segmentation,

- RF feature selection and object-based change classification, and

- development of a pixel-based approach.

2.4.1. Input Variables

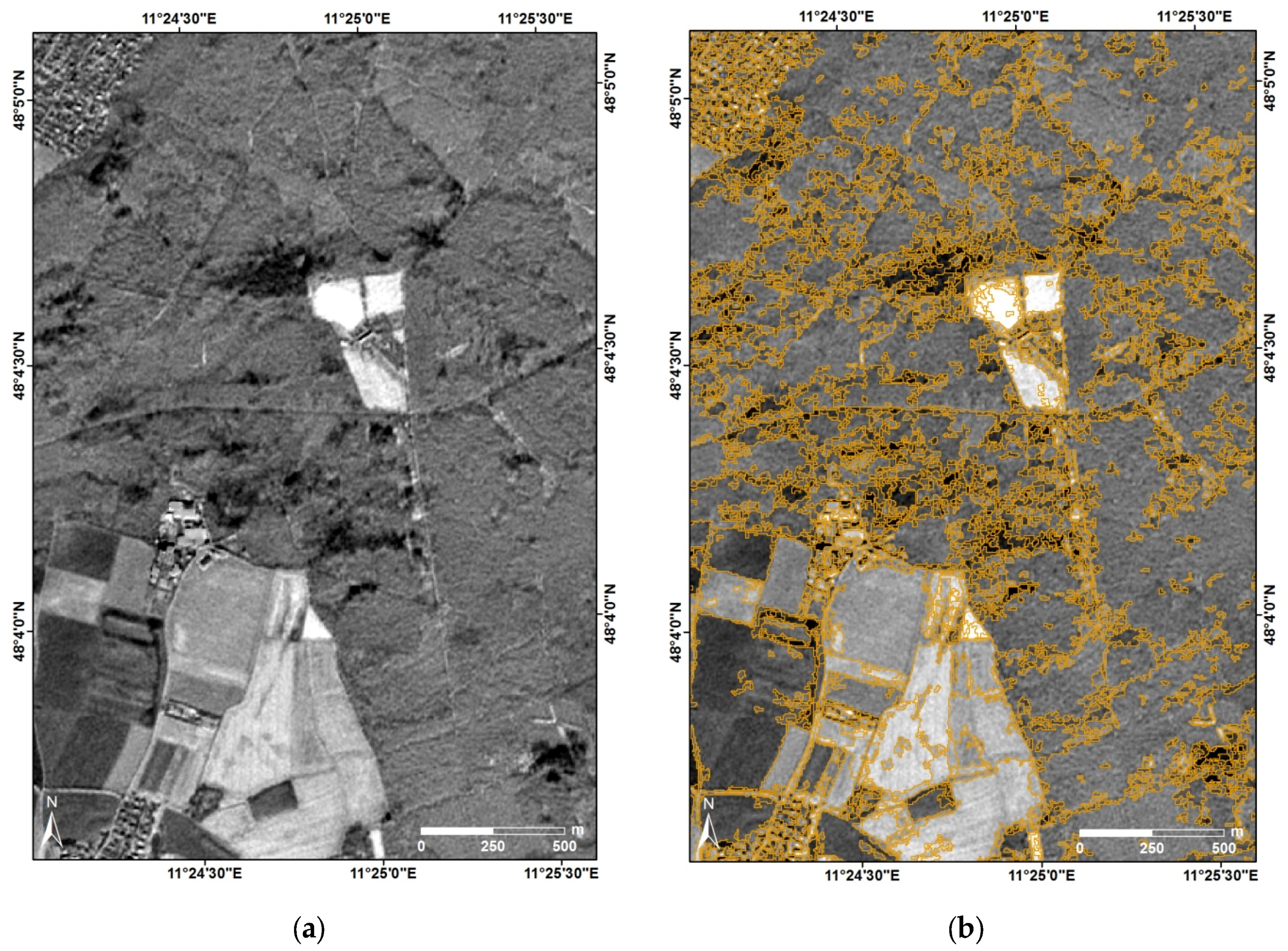

2.4.2. Segmentation

- stacked pre- and post-storm TOA reflectances (10 layers)

- difference images of the TOA reflectances (5 layers)

- difference images of the three spectral channels R, RE, and NIR (3 layers)

- mean of the three difference images (R, RE, and NIR) (1 layer)
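The four candidate inputs listed above can be assembled from two co-registered five-band images. The following is a minimal sketch, not the authors' implementation: the function name `build_segmentation_inputs`, the band order B, G, R, RE, NIR, and the post-minus-pre sign convention are assumptions made for illustration.

```python
import numpy as np

# Assumed band order of a 5-band RapidEye image (hypothetical for this sketch).
BANDS = ("B", "G", "R", "RE", "NIR")


def build_segmentation_inputs(pre, post):
    """Return the four candidate layer stacks for segmentation.

    pre, post: float arrays of shape (5, rows, cols) holding TOA reflectance.
    """
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    if pre.shape != post.shape or pre.shape[0] != len(BANDS):
        raise ValueError("expected two co-registered 5-band images")

    # 1) stacked pre- and post-storm reflectances (10 layers)
    stacked = np.concatenate([pre, post], axis=0)

    # 2) band-wise difference images (5 layers); post minus pre is an assumed convention
    diff_all = post - pre

    # 3) difference images of R, RE and NIR only (3 layers)
    idx = [BANDS.index(name) for name in ("R", "RE", "NIR")]
    diff_r_re_nir = diff_all[idx]

    # 4) mean of the three R/RE/NIR difference images (1 layer)
    diff_mean = diff_r_re_nir.mean(axis=0, keepdims=True)

    return {
        "stacked": stacked,
        "diff_all": diff_all,
        "diff_r_re_nir": diff_r_re_nir,
        "diff_mean": diff_mean,
    }
```

Each returned array keeps the layer axis first, so any of the four stacks can be passed to a segmentation routine that expects a (layers, rows, cols) image.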

2.5. Object-Based Change Detection for Detecting Windthrow Areas ≥0.5 ha

2.5.1. Random Forest Classifier

2.5.2. Training Data

2.5.3. Feature Selection

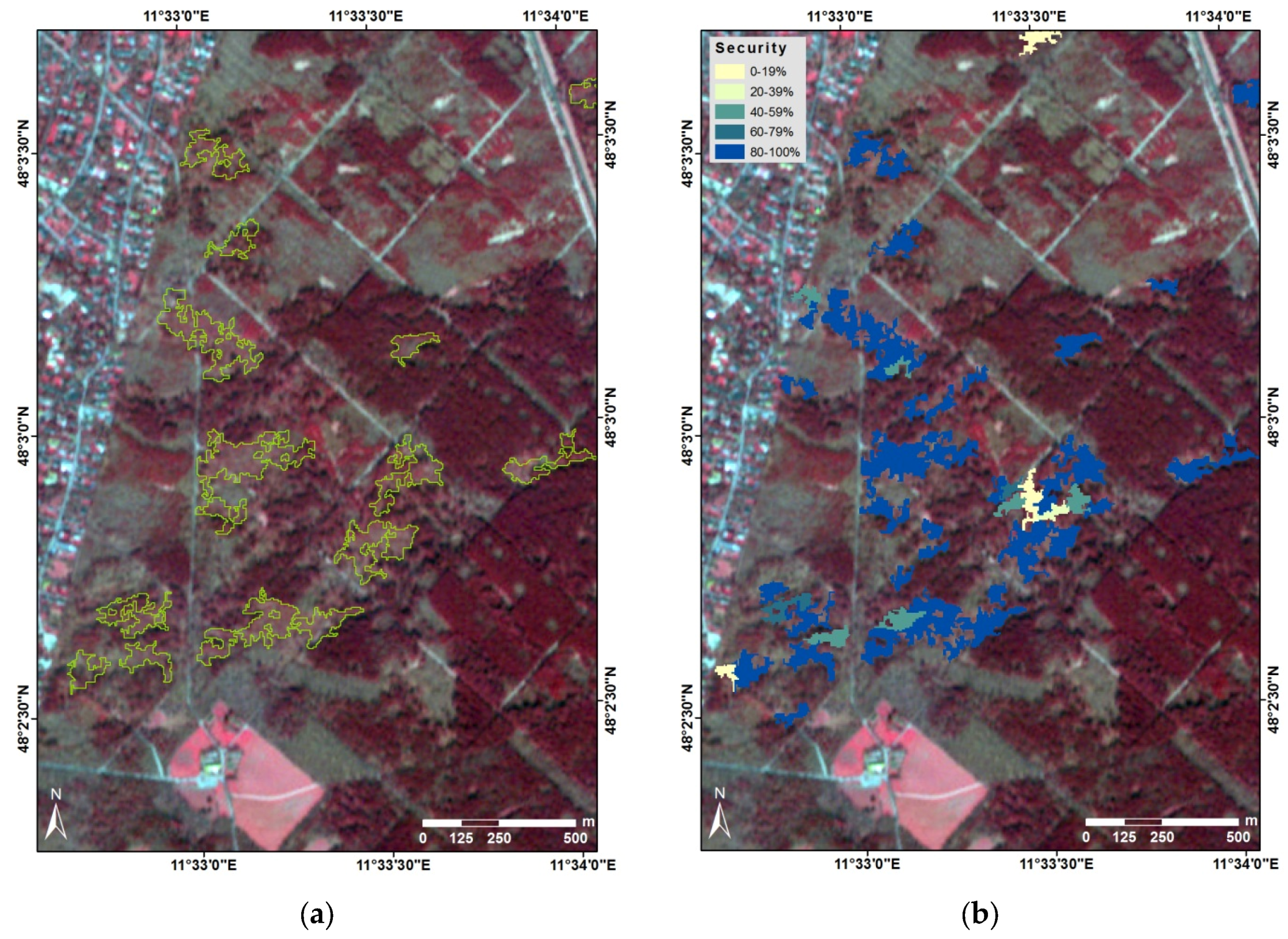

2.5.4. Classification Margin

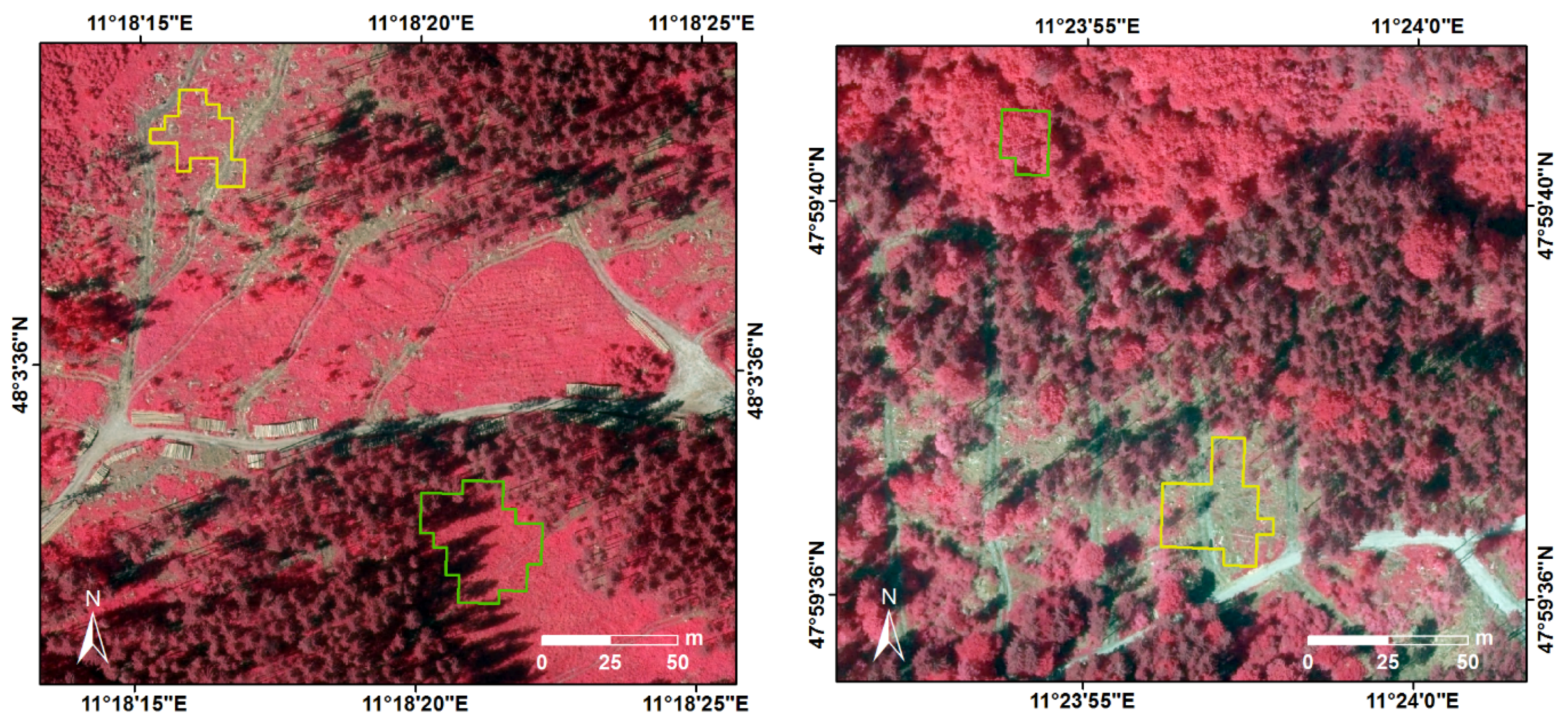

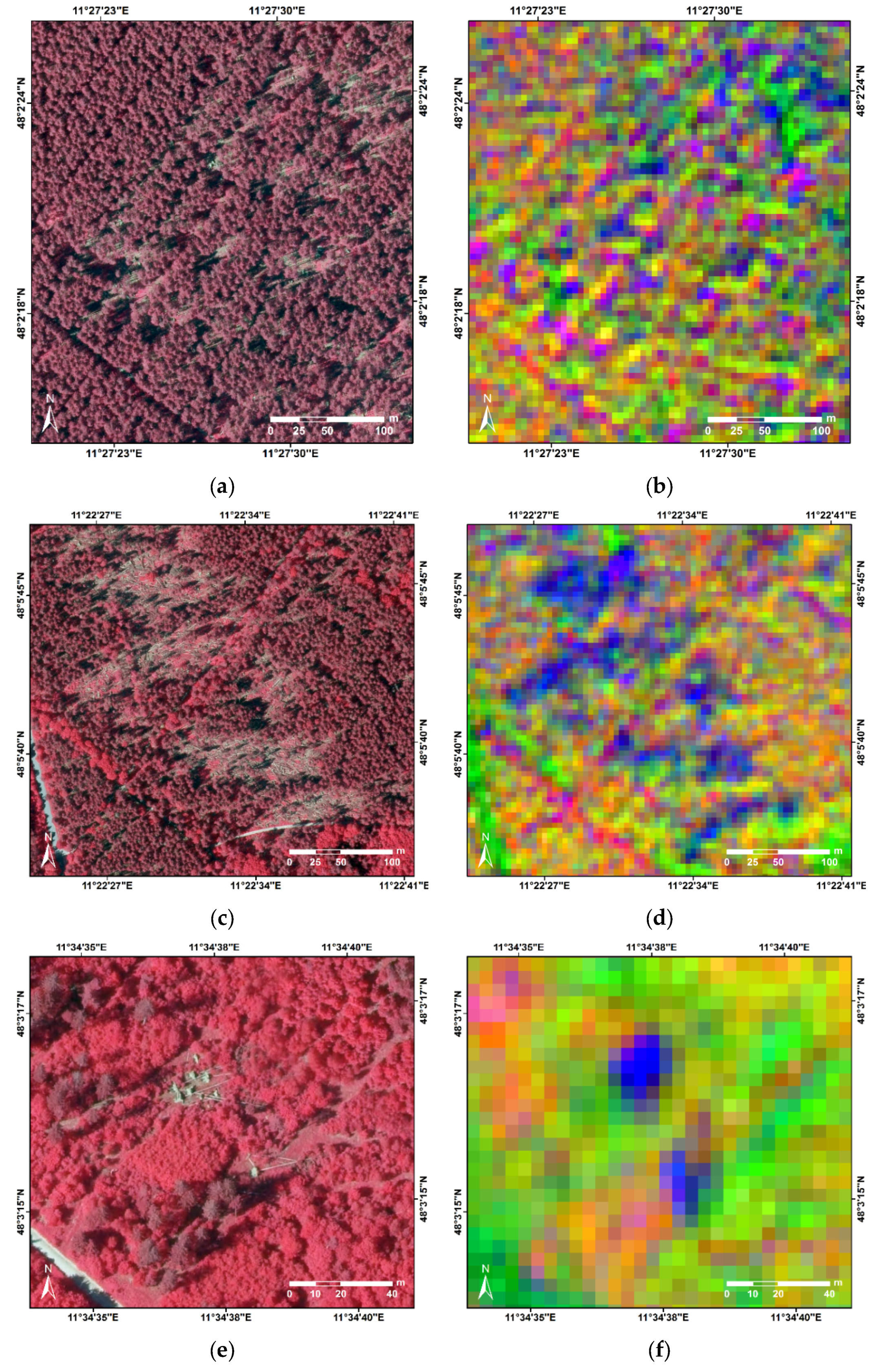

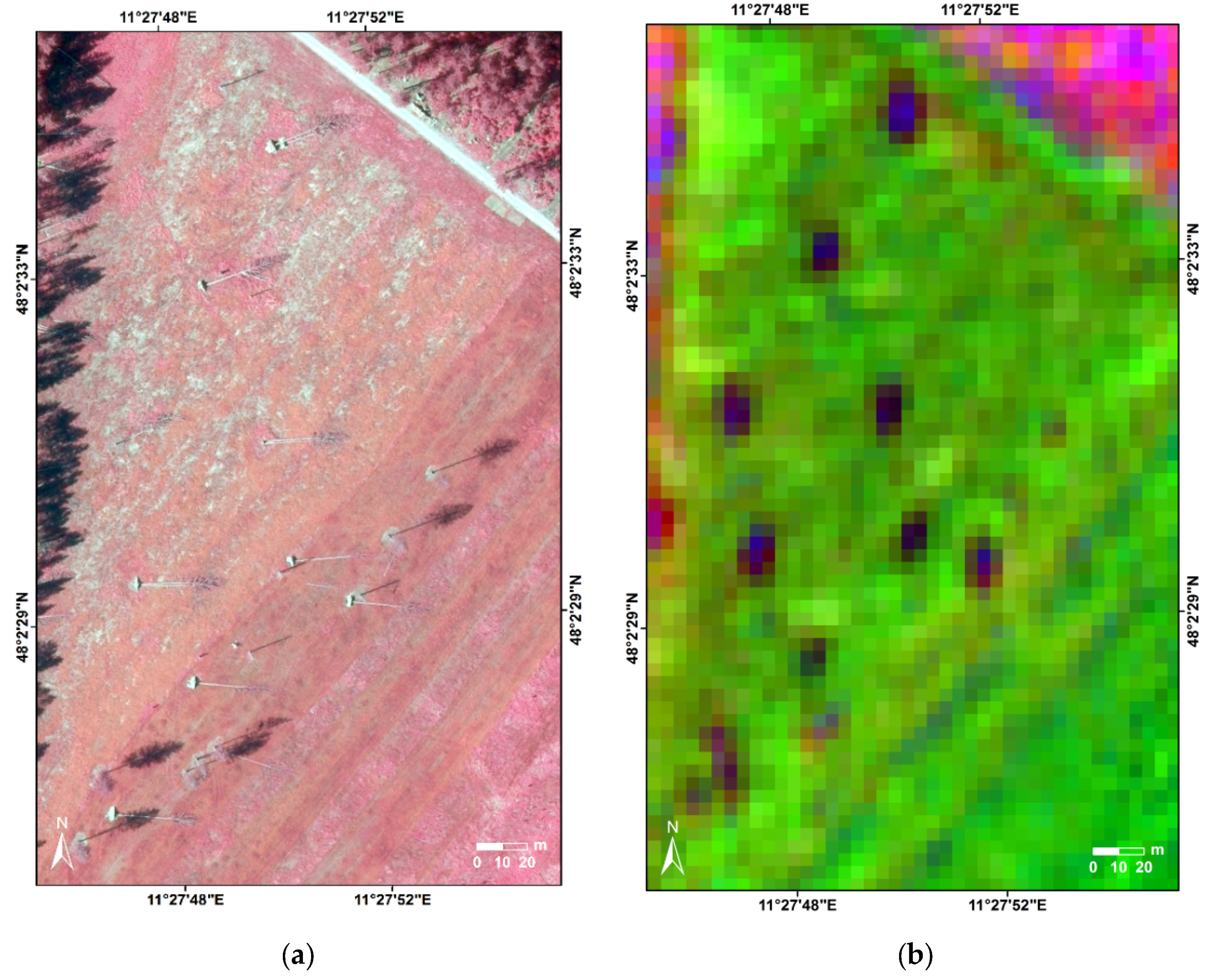

2.6. Pixel-Based Change Detection for Identifying Smaller Groups of Fallen Trees

2.7. Validation

- True positives (tp): windthrow areas are correctly detected

- False positives (fp): unchanged objects are incorrectly flagged as “windthrow”

- True negatives (tn): unchanged objects are correctly identified as “no change”

- False negatives (fn): windthrow areas are incorrectly flagged as “no change”
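The four outcome counts above translate into two ratios commonly reported for a detected class. The sketch below is illustrative only: the function name and the labels "user's accuracy" and "producer's accuracy" are choices made here, not terms taken from the extracted text. True negatives do not enter either ratio.

```python
def detection_scores(tp, fp, fn):
    """Return (user's accuracy, producer's accuracy) for the windthrow class.

    tp: windthrow areas correctly detected
    fp: unchanged objects flagged as windthrow
    fn: windthrow areas flagged as no change
    """
    if tp + fp == 0 or tp + fn == 0:
        raise ValueError("scores are undefined without detections or reference areas")
    users_accuracy = tp / (tp + fp)      # share of detections that are real windthrow
    producers_accuracy = tp / (tp + fn)  # share of reference windthrow that was found
    return users_accuracy, producers_accuracy
```

For example, `detection_scores(8, 2, 2)` uses hypothetical counts in which eight of ten detections are correct and eight of ten reference areas are found.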

3. Results and Discussion

3.1. Detection of Windthrow Areas ≥0.5 ha Using Object-Based Classification

3.1.1. Classification Results

3.1.2. Selected Features

3.2. Detection of Smaller Groups of Fallen Trees Using Pixel-Based Change Detection

3.3. Quantification of Forest Loss

3.4. Comparison with Other Studies

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

Appendix A.1. Vegetation Indices

| Index | Equation | Reference |
|---|---|---|
| Atmospherically Resistant Vegetation Index | | [71] |
| Difference Difference Vegetation Index | | [72] |
| Difference Vegetation Index | | [73] |
| Enhanced Vegetation Index | | [74] |
| Green Atmospherically Resistant Vegetation Index | | [75] |
| Green Normalized Difference Vegetation Index | | [76] |
| Infrared Percentage Vegetation Index | | [77] |
| 2nd Modified Soil Adjusted Vegetation Index | | [78] |
| Normalized Difference Red Edge Index | | [79,80] |
| Normalized Difference Greenness Index | | [81] |
| Normalized Difference Red Edge Blue Index | | developed for this study |
| Normalized Difference Vegetation Index | | [82] |
| Normalized Near Infrared | | [83] |
| Plant Senescence Reflectance Index | | [65] |
| Red Edge Normalized Difference Vegetation Index | | [84] |
| Red Edge Ratio Index 1 | | [85] |
| Ratio Vegetation Index | | [86,87] |
| Soil Adjusted Vegetation Index | | [88] |
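The equations of this table are not available in this version of the text. As an illustration only, the sketch below computes four of the listed indices from their widely used textbook definitions (DVI = NIR − R, NDVI, GNDVI, and SAVI with a soil factor L = 0.5) and then differences them between two dates. The exact formulations used in the study may differ; all function names and the post-minus-pre convention are assumptions.

```python
import numpy as np


def safe_ratio(num, den):
    """Divide element-wise, returning NaN where the denominator is zero."""
    num = np.asarray(num, dtype=float)
    den = np.asarray(den, dtype=float)
    out = np.full(np.broadcast(num, den).shape, np.nan)
    np.divide(num, den, out=out, where=den != 0)
    return out


def vegetation_indices(green, red, nir, soil_factor=0.5):
    """Compute a few standard vegetation indices from reflectance bands."""
    green = np.asarray(green, dtype=float)
    red = np.asarray(red, dtype=float)
    nir = np.asarray(nir, dtype=float)
    return {
        "DVI": nir - red,
        "NDVI": safe_ratio(nir - red, nir + red),
        "GNDVI": safe_ratio(nir - green, nir + green),
        # Common SAVI form with L = soil_factor (0.5 is the usual default)
        "SAVI": (1 + soil_factor) * safe_ratio(nir - red, nir + red + soil_factor),
    }


def index_difference(pre, post):
    """Difference each index between two dates (post minus pre, an assumed convention)."""
    return {name: post[name] - pre[name] for name in pre}
```

Returning NaN for a zero denominator keeps no-data pixels from turning into infinite values in the difference layers.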

Appendix A.2. Tasseled Cap Transformation and Disturbance Index

Appendix A.3. Image Differencing

Appendix A.4. Spectral Angle Mapper
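As a rough illustration of the spectral angle idea in a bi-temporal setting, the sketch below computes the angle between the pre- and post-storm spectrum of every pixel. It shows only the generic per-pixel angle; neighbourhood-based variants (such as the radius-based layers listed in the input table) are not reproduced, and the function name is hypothetical.

```python
import numpy as np


def spectral_angle(pre, post):
    """Per-pixel angle (in radians) between pre- and post-storm spectra.

    pre, post: arrays of shape (bands, rows, cols).
    Pixels with an all-zero spectrum in either image yield NaN.
    """
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    dot = (pre * post).sum(axis=0)
    norms = np.linalg.norm(pre, axis=0) * np.linalg.norm(post, axis=0)
    cos = np.full(dot.shape, np.nan)
    np.divide(dot, norms, out=cos, where=norms > 0)
    # Clipping guards against rounding slightly outside [-1, 1]; NaN stays NaN.
    return np.arccos(np.clip(cos, -1.0, 1.0))
```

Because the angle ignores vector length, a pixel that only became uniformly brighter or darker between the two dates gives an angle near zero, while a change in spectral shape gives a larger angle.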

Appendix A.5. Multivariate Alteration Detection

Appendix A.6. Textural Features

| Haralick | Reference | Grey-Level Run-Length Matrix | Reference |
|---|---|---|---|
| Energy, Entropy, Correlation, Inverse Difference Moment, Inertia, Cluster Shade, Cluster Prominence, Haralick Correlation | [98] | Short Run Emphasis, Long Run Emphasis, Grey-Level Nonuniformity, Run Length Nonuniformity, Run Percentage | [99] |
| | | Low Grey-Level Run Emphasis, High Grey-Level Run Emphasis | [100] |
| | | Short Run Low Grey-Level Emphasis, Short Run High Grey-Level Emphasis, Long Run Low Grey-Level Emphasis, Long Run High Grey-Level Emphasis | [101] |

Appendix A.7. Geometrical Characteristics

References

1. Schelhaas, M.-J.; Nabuurs, G.-J.; Schuck, A. Natural disturbances in the European forests in the 19th and 20th Centuries. Glob. Chang. Biol. 2003, 9, 1620–1633.

2. Seidl, R.; Schelhaas, M.-J.; Rammer, W.; Verkerk, P.J. Increasing forest disturbances in Europe and their impact on carbon storage. Nat. Clim. Chang. 2014, 4, 806–810.

3. Seidl, R.; Rammer, W. Climate change amplifies the interactions between wind and bark beetle disturbances in forest landscapes. Landsc. Ecol. 2016, 1–14.

4. Coppin, P.R.; Bauer, M.E. Digital change detection in forest ecosystems with remote sensing imagery. Remote Sens. Rev. 1996, 13, 207–234.

5. Vogelmann, J.E.; Tolk, B.; Zhu, Z. Monitoring forest changes in the southwestern United States using multitemporal Landsat data. Remote Sens. Environ. 2009, 113, 1739–1748.

6. Wang, F.; Xu, Y.J. Comparison of remote sensing change detection techniques for assessing hurricane damage to forests. Environ. Monit. Assess. 2010, 162, 311–326.

7. Rich, R.L.; Frelich, L.; Reich, P.B.; Bauer, M.E. Detecting wind disturbance severity and canopy heterogeneity in boreal forest by coupling high-spatial resolution satellite imagery and field data. Remote Sens. Environ. 2010, 114, 299–308.

8. Jonikavičius, D.; Mozgeris, G. Rapid assessment of wind storm-caused forest damage using satellite images and stand-wise forest inventory data. iForest 2013, 6, 150–155.

9. Baumann, M.; Ozdogan, M.; Wolter, P.T.; Krylov, A.; Vladimirova, N.; Radeloff, V.C. Landsat remote sensing of forest windfall disturbance. Remote Sens. Environ. 2014, 143, 171–179.

10. Immitzer, M.; Atzberger, C. Early detection of bark beetle infestation in Norway Spruce (Picea abies, L.) using WorldView-2 data. Photogramm. Fernerkund. Geoinform. 2014, 2014, 351–367.

11. Latifi, H.; Fassnacht, F.E.; Schumann, B.; Dech, S. Object-based extraction of bark beetle (Ips typographus L.) infestations using multi-date LANDSAT and SPOT satellite imagery. Prog. Phys. Geogr. 2014, 38, 755–785.

12. Chehata, N.; Orny, C.; Boukir, S.; Guyon, D.; Wigneron, J.P. Object-based change detection in wind storm-damaged forest using high-resolution multispectral images. Int. J. Remote Sens. 2014, 35, 4758–4777.

13. Arnett, J.T.T.R.; Coops, N.C.; Gergel, S.E.; Falls, R.W.; Baker, R.H. Detecting stand replacing disturbance using RapidEye imagery: A tasseled cap transformation and modified disturbance index. Can. J. Remote Sens. 2014, 40, 1–14.

14. Elatawneh, A.; Wallner, A.; Manakos, I.; Schneider, T.; Knoke, T. Forest cover database updates using multi-seasonal RapidEye data—Storm event assessment in the Bavarian forest national park. Forests 2014, 5, 1284–1303.

15. Schwarz, M.; Steinmeier, C.; Holecz, F.; Stebler, O.; Wagner, H. Detection of Windthrow in mountainous regions with different remote sensing data and classification methods. Scand. J. For. Res. 2003, 18, 525–536.

16. Healey, S.; Cohen, W.; Zhiqiang, Y.; Krankina, O. Comparison of Tasseled Cap-based Landsat data structures for use in forest disturbance detection. Remote Sens. Environ. 2005, 97, 301–310.

17. Desclée, B.; Bogaert, P.; Defourny, P. Forest change detection by statistical object-based method. Remote Sens. Environ. 2006, 102, 1–11.

18. Dyukarev, E.A.; Pologova, N.N.; Golovatskaya, E.A.; Dyukarev, A.G. Forest cover disturbances in the South Taiga of West Siberia. Environ. Res. Lett. 2011, 6, 035203.

19. Zhu, Z.; Woodcock, C.E.; Olofsson, P. Continuous monitoring of forest disturbance using all available Landsat imagery. Remote Sens. Environ. 2012, 122, 75–91.

20. Negrón-Juárez, R.; Baker, D.B.; Zeng, H.; Henkel, T.K.; Chambers, J.Q. Assessing hurricane-induced tree mortality in U.S. Gulf Coast forest ecosystems. J. Geophys. Res. Biogeosci. 2010, 115, G04030.

21. Hermosilla, T.; Wulder, M.A.; White, J.C.; Coops, N.C.; Hobart, G.W. Regional detection, characterization, and attribution of annual forest change from 1984 to 2012 using Landsat-derived time-series metrics. Remote Sens. Environ. 2015, 170, 121–132.

22. Chen, G.; Hay, G.J.; Carvalho, L.M.T.; Wulder, A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

23. Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

24. Hecheltjen, A.; Thonfeld, F.; Menz, G. Recent advances in remote sensing change detection—A review. In Land Use and Land Cover Mapping in Europe; Manakos, I., Braun, M., Eds.; Springer: Dordrecht, The Netherlands, 2014; pp. 145–178.

25. Bovolo, F.; Bruzzone, L. The time variable in data fusion: A change detection perspective. IEEE Geosci. Remote Sens. Mag. 2015, 3, 8–26.

26. Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

27. Li, X.; Cheng, X.; Chen, W.; Chen, G.; Liu, S. Identification of forested landslides using LiDAR data, object-based image analysis, and machine learning algorithms. Remote Sens. 2015, 7, 9705–9726.

28. Wu, X.; Yang, F.; Roly, L. Land cover change detection using texture analysis. J. Comput. Sci. 2010, 6, 92–100.

29. Immitzer, M.; Vuolo, F.; Atzberger, C. First experience with Sentinel-2 data for crop and tree species classifications in Central Europe. Remote Sens. 2016, 8, 1–27.

30. Schumacher, P.; Mislimshoeva, B.; Brenning, A.; Zandler, H.; Brandt, M.; Samimi, C.; Koellner, T. Do red edge and texture attributes from high-resolution satellite data improve wood volume estimation in a Semi-arid mountainous region? Remote Sens. 2016, 8, 540.

31. Ramoelo, A.; Skidmore, A.K.; Cho, M.A.; Schlerf, M.; Mathieu, R.; Heitkönig, I.M.A. Regional estimation of savanna grass nitrogen using the red-edge band of the spaceborne RapidEye sensor. Int. J. Appl. Earth Obs. Geoinform. 2012, 19, 151–162.

32. Clevers, J.G.P.W.; Kooistra, L. Retrieving canopy chlorophyll content of potato crops using Sentinel-2 bands. In Proceedings of ESA Living Planet Symposium, Edinburgh, UK, 9–13 September 2012; pp. 1–8.

33. Haeseler, S.; Lefebvre, C. Hintergrundbericht: Orkantief NIKLAS wütet am 31. März 2015 über Deutschland 2015; Deutscher Wetterdienst (DWD), Climate Data Center (CDC): Offenbach am Main, Germany, 2015.

34. Preuhsler, T. Sturmschäden in einem Fichtenbestand der Münchener Schotterebene. Allg. Forstz. 1990, 46, 1098–1103.

35. BlackBridge. Satellite Imagery Product Specifications; Version 6.1; BlackBridge: Berlin, Germany, 2015.

36. Vicente-Serrano, S.; Pérez-Cabello, F.; Lasanta, T. Assessment of radiometric correction techniques in analyzing vegetation variability and change using time series of Landsat images. Remote Sens. Environ. 2008, 112, 3916–3934.

37. Kauth, J.; Thomas, G.S. The tasselled cap—A graphic description of the spectral-temporal development of agricultural crops as seen by LANDSAT. In Symposium on Machine Processing of Remotely Sensed Data; Institute of Electrical and Electronics Engineers: West Lafayette, IN, USA, 1976; pp. 41–51.

38. Schönert, M.; Weichelt, H.; Zillmann, E.; Jürgens, C. Derivation of tasseled cap coefficients for RapidEye data. In Earth Resources and Environmental Remote Sensing/GIS Applications V, 92450Q; Michel, U., Schulz, K., Eds.; SPIE: Bellingham, WA, USA, 2014; Volume 9245, pp. 1–11.

39. Nielsen, A.A.; Conradsen, K.; Simpson, J.J. Multivariate Alteration Detection (MAD) and MAF Postprocessing in Multispectral, Bitemporal Image Data: New approaches to change detection studies. Remote Sens. Environ. 1998, 64, 1–19.

40. Kruse, F.A.F.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The spectral image processing system (SIPS)-interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

41. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

42. Michel, J.; Youssefi, D.; Grizonnet, M. Stable mean-shift algorithm and its application to the segmentation of arbitrarily large remote sensing images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 952–964.

43. Orfeo ToolBox (OTB) Development Team. The Orfeo ToolBox Cookbook, A Guide for Non-Developers; Updated for OTB-5.2.1; CNES: Paris, France, 2016.

44. Fukunaga, K.; Hostetler, L. The estimation of the gradient of a density function, with applications in pattern recognition. IEEE Trans. Inf. Theory 1975, 21, 32–40.

45. Boukir, S.; Jones, S.; Reinke, K. Fast mean-shift based classification of very high resolution images: Application to forest cover mapping. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Melbourne, Australia, 25 August–1 September 2012; Volume I-7, pp. 293–298.

46. Arbeitsgemeinschaft der Vermessungsverwaltung der Länder der Bundesrepublik Deutschland (AdV). Amtliches Topographisch-Kartographisches Informationssystem (ATKIS). Available online: http://www.adv-online.de/AAA-Modell/ATKIS/ (accessed on 7 January 2016).

47. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

48. Immitzer, M.; Stepper, C.; Böck, S.; Straub, C.; Atzberger, C. Use of WorldView-2 stereo imagery and National Forest Inventory data for wall-to-wall mapping of growing stock. For. Ecol. Manag. 2016, 359, 232–246.

49. Ng, W.-T.; Meroni, M.; Immitzer, M.; Böck, S.; Leonardi, U.; Rembold, F.; Gadain, H.; Atzberger, C. Mapping Prosopis spp. with Landsat 8 data in arid environments: Evaluating effectiveness of different methods and temporal imagery selection for Hargeisa, Somaliland. Int. J. Appl. Earth Obs. Geoinform. 2016, 53, 76–89.

50. Schultz, B.; Immitzer, M.; Formaggio, A.R.; Sanches, I.D.A.; Luiz, A.J.B.; Atzberger, C. Self-guided segmentation and classification of multi-temporal Landsat 8 images for crop type mapping in Southeastern Brazil. Remote Sens. 2015, 7, 14482–14508.

51. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: New York, NY, USA, 2009.

52. Genuer, R.; Poggi, J.-M.; Tuleau-Malot, C. Variable selection using random forests. Pattern Recognit. Lett. 2010, 31, 2225–2236.

53. Toscani, P.; Immitzer, M.; Atzberger, C. Texturanalyse mittels diskreter Wavelet Transformation für die objektbasierte Klassifikation von Orthophotos. Photogramm. Fernerkund. Geoinform. 2013, 2, 105–121.

54. Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

55. Immitzer, M.; Atzberger, C.; Koukal, T. Tree species classification with Random Forest using very high spatial resolution 8-band WorldView-2 satellite data. Remote Sens. 2012, 4, 2661–2693.

56. Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

57. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2016.

58. Granitto, P.M.; Furlanello, C.; Biasioli, F.; Gasperi, F. Recursive feature elimination with random forest for PTR-MS analysis of agroindustrial products. Chemom. Intell. Lab. Syst. 2006, 83, 83–90.

59. Vuolo, F.; Atzberger, C. Improving land cover maps in areas of disagreement of existing products using NDVI time series of MODIS—Example for Europe. Photogramm. Fernerkund. Geoinform. 2014, 393–407.

60. Rosin, P.L.; Ioannidis, E. Evaluation of global image thresholding for change detection. Pattern Recognit. Lett. 2003, 24, 2345–2356.

61. Radke, R.J.; Andra, S.; Al-Kofahi, O.; Roysam, B. Image change detection algorithms: A systematic survey. IEEE Trans. Image Process. 2005, 14, 294–307.

62. Dorko, G.; Schmid, C. Selection of scale-invariant parts for object class recognition. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 634–640.

63. Google Inc. Google Earth 2015. Available online: https://google.com/earth/ (accessed on 10 April 2015).

64. Peterson, C.J. Catastrophic wind damage to North American forests and the potential impact of climate change. Sci. Total Environ. 2000, 262, 287–311.

65. Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

66. Ollinger, S.V. Sources of variability in canopy reflectance and the convergent properties of plants. New Phytol. 2011, 189, 375–394.

67. Ruiz, L.A.; Fernández-Sarría, A.; Recio, J.A. Texture feature extraction for classification of remote sensing data using wavelet decomposition: A comparative study. In Proceedings of the 20th ISPRS Congress, Istanbul, Turkey, 12–23 July 2004; pp. 1109–1114.

68. Carvalho, O.A.J.; Guimarães, R.F.; Gillespie, A.R.; Silva, N.C.; Gomes, R.A.T. A new approach to change vector analysis using distance and similarity measures. Remote Sens. 2011, 3, 2473–2493.

69. Elatawneh, A.; Rappl, A.; Schneider, T.; Knoke, T. A semi-automated method of forest cover losses detection using RapidEye images: A case study in the Bavarian forest National Park. In Proceedings of the 4th RESA Workshop, Neustrelitz, Germany, 21–22 March 2012; Borg, E., Ed.; GITO Verlag für industrielle Informationstechnik und Organisation: Neustrelitz, Germany, 2012; pp. 183–200.

70. Baig, M.H.A.; Zhang, L.; Shuai, T.; Tong, Q. Derivation of a tasselled cap transformation based on Landsat 8 at-satellite reflectance. Remote Sens. Lett. 2014, 5, 423–431.

71. Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

72. Jackson, R.D.; Slater, P.N.; Pinter, P.J. Discrimination of growth and water stress in wheat by various vegetation indices through clear and turbid atmospheres. Remote Sens. Environ. 1983, 13, 187–208.

73. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

74. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

75. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

76. Gitelson, A.A.; Merzlyak, M.N. Signature analysis of leaf reflectance spectra: Algorithm development for remote sensing of chlorophyll. J. Plant Physiol. 1996, 148, 494–500.

77. Crippen, R. Calculating the vegetation index faster. Remote Sens. Environ. 1990, 34, 71–73.

78. Qi, J.; Kerr, Y.; Chehbouni, A. External factor consideration in vegetation index development. In Proceedings of the 6th Symposium on Physical Measurements and Signatures in Remote Sensing, Val D’Isere, France, 17–21 January 1994; pp. 723–730.

79. Gitelson, A.; Merzlyak, M.N. Spectral reflectance changes associated with autumn senescence of Aesculus hippocastanum L. and Acer platanoides L. leaves. Spectral features and relation to chlorophyll estimation. J. Plant Physiol. 1994, 143, 286–292.

80. Barnes, E.M.E.; Clarke, T.R.T.; Richards, S.E.S.; Colaizzi, P.D.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of crop water stress, nitrogen status and canopy density using ground-based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000; Robert, P.C., Rust, R.H., Larson, W.E., Eds.; American Society of Agronomy: Madison, WI, USA, 2000.

81. Chamard, P.; Courel, M.-F.; Guenegou, M.; Lerhun, J.; Levasseur, J.; Togola, M. Utilisation des bandes spectrales du vert et du rouge pour une meilleure évaluation des formations végétales actives. In Télédétection et Cartographie; AUPELF-UREF, Réseau Télédétection Journées scientifiques; Presses de l’université du Québec: Québec, CA, 1991; pp. 203–209.

82. Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the Third ERTS Symposium; National Aeronautics and Space Administration: Washington, DC, USA, 1974; Volume 351, pp. 309–317.

83. Sripada, R.P.; Heiniger, R.W.; White, J.G.; Meijer, A.D. Aerial color infrared photography for determining early in-season nitrogen requirements in corn. Agron. J. 2006, 98, 968–977.

84. ApolloMapping. Using RapidEye 5-meter Imagery for Vegetation Analysis; ApolloMapping: Boulder, CO, USA, 2012.

85. Ehammer, A.; Fritsch, S.; Conrad, C.; Lamers, J.; Dech, S. Statistical derivation of fPAR and LAI for irrigated cotton and rice in arid Uzbekistan by combining multi-temporal RapidEye data and ground measurements. In Remote Sensing for Agriculture, Ecosystems, and Hydrology XII; Neale, C.M.U., Maltese, A., Eds.; SPIE: Bellingham, WA, USA, 2010; Volume 7824, pp. 1–10.

86. Jordan, C.F. Derivation of leaf area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

87. Pearson, R.; Miller, L. Remote mapping of standing crop biomass for estimation of the productivity of the short-grass Prairie, Pawnee National Grasslands, Colorado. In Proceedings of the 8th International Symposium on Remote Sensing of Environment, Ann Arbor, MI, USA, 2–6 October 1972; pp. 1357–1381.

88. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

89. Crist, E.P.; Cicone, R.C. A physically-based transformation of Thematic Mapper data—The TM Tasseled Cap. IEEE Trans. Geosci. Remote Sens. 1984, GE-22, 256–263.

90. Yarbrough, L.; Easson, G.; Kuszmaul, J.S. Tasseled cap coefficients for the QuickBird2 sensor: A comparison of methods and development. In Proceedings of the PECORA 16 Conference on Global priorities in land remote sensing, American Society for Photogrammetry and Remote Sensing, Sioux Falls, SD, USA, 23–27 October 2005; pp. 23–27.

91. Singh, A. Review Article Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

92. Cho, M.A.; Debba, P.; Mathieu, R.; Naidoo, L.; van Aardt, J.; Asner, G.P. Improving discrimination of savanna tree species through a multiple-endmember spectral angle mapper approach: Canopy-level analysis. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4133–4142.

93. Einzmann, K.; Ng, W.-T.; Immitzer, M.; Pinnel, N.; Atzberger, C. Method analysis for collecting and processing in-situ hyperspectral needle reflectance data for monitoring Norway Spruce. Photogramm. Fernerkund. Geoinform. 2014, 5, 423–434.

94. Carvalho, O.A.J.; Guimarães, R.F.; Silva, N.C.; Gillespie, A.R.; Gomes, R.A.T.; Silva, C.R.; De Carvalho, A.P. Radiometric normalization of temporal images combining automatic detection of pseudo-invariant features from the distance and similarity spectral measures, density scatterplot analysis, and robust regression. Remote Sens. 2013, 5, 2763–2794.

95. Schlerf, M.; Hill, J.; Bärisch, S.; Atzberger, C. Einfluß der spektralen und räumlichen Auflösung von Fernerkundungsdaten bei der Nadelwaldklassifikation. Photogramm. Fernerkund. Geoinform. 2003, 2003, 25–34.

96. Tuominen, S.; Pekkarinen, A. Performance of different spectral and textural aerial photograph features in multi-source forest inventory. Remote Sens. Environ. 2005, 94, 256–268.

97. Beguet, B.; Guyon, D.; Boukir, S.; Chehata, N. Automated retrieval of forest structure variables based on multi-scale texture analysis of VHR satellite imagery. ISPRS J. Photogramm. Remote Sens. 2014, 96, 164–178.

98. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

99. Galloway, M.M. Texture analysis using gray level run lengths. Comput. Graph. Image Process. 1975, 4, 172–179.

100. Chu, A.; Sehgal, C.M.; Greenleaf, J.F. Use of gray value distribution of run lengths for texture analysis. Pattern Recognit. Lett. 1990, 11, 415–420.

101. Dasarathy, B.V.; Holder, E.B. Image characterizations based on joint gray level-run length distributions. Pattern Recognit. Lett. 1991, 12, 497–502.

102. Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

103. Ouma, Y.O.; Ngigi, T.G.; Tateishi, R. On the optimization and selection of wavelet texture for feature extraction from high-resolution satellite imagery with application towards urban-tree delineation. Int. J. Remote Sens. 2006, 27, 73–104.

104. The MathWorks Inc. MATLAB; Version 8.2.0.29 (r2013b); The MathWorks Inc.: Natick, MA, USA, 2013.

105. Klonus, S.; Tomowski, D.; Ehlers, M.; Reinartz, P.; Michel, U. Combined edge segment texture analysis for the detection of damaged buildings in crisis areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1118–1128.

106. Osserman, R.; Yau, S.T. The Isoperimetric inequality. Bull. Am. Math. Soc. 1978, 84, 1182–1238.

107. Krummel, J.R.; Gardner, R.H.; Sugihara, G.; O’Neill, R.V.; Coleman, P.R. Landscape patterns in a disturbed environment. Oikos 1987, 48, 321–324.

| Topic | Area (km²) | Region | Sensor | Approach | References |
|---|---|---|---|---|---|
| Forest cover change detection | 421 | Minnesota, United States | Landsat TM | Detection of canopy disturbances with vegetation indices, standardized image differencing, and principal component analysis | Coppin and Bauer [4] |
| Monitoring of forest changes with multi-temporal data | 166 | New Mexico, United States | Landsat TM/ETM+ | Spectral trends of time series data sets to capture forest changes | Vogelmann et al. [5] |
| Detection of forest damage resulting from wind storm | 19,600 | Lithuania | Landsat TM | Image differencing and classification with k-Nearest Neighbor | Jonikavičius and Mozgeris [8] |
| Forest windfall disturbance detection | 33,600; 17,100 | European Russia; Minnesota, United States | Landsat TM/ETM+ | Separation of windfalls and clear cuts with Forestness Index, Disturbance Index, and Tasseled Cap Transformation | Baumann et al. [9] |
| Object-based change detection to assess wind storm damage | 60 | Southwest France | Formosat-2 | Automated feature selection process followed by bi-temporal classification to detect wind storm damage in forests | Chehata et al. [12] |
| Windthrow detection in mountainous regions | 221 | Switzerland | Ikonos, SPOT-4, Landsat ETM+, AeS-1, E-SAR, ERS-1/2 | Comparison of active and passive data as well as pixel- and object-based approaches for detecting windthrow | Schwarz et al. [15] |
| Forest disturbance detection | 3810; 4200; 5000 | Washington State, United States; St. Petersburg region, Russia | Landsat TM/ETM+ | Assessing forest disturbances in multi-temporal data with Tasseled Cap Transformation and Disturbance Index | Healey et al. [16] |
| Clear-cut detection | 1800 | Eastern Belgium | SPOT | Object-based image segmentation, image differencing, stochastic analysis of the multispectral signal for clear-cut detection | Desclée et al. [17] |
| Forest cover disturbance detection | 32,150 | West Siberia, Russia | Landsat TM/ETM+ | Unsupervised classification of Tasseled Cap Indices to detect changes caused by forest harvesting and windthrow | Dyukarev et al. [18] |
| Continuous forest disturbance monitoring | 3600 | Georgia, United States | Landsat | High temporal forest monitoring with the Continuous Monitoring of Forest Disturbance Algorithm (CMFDA) | Zhu et al. [19] |
| Assessing tree damage caused by hurricanes | | Gulf Coast, United States | Landsat, MODIS | Estimating large-scale disturbances by combining satellite data, field data, and modeling | Negrón-Juárez et al. [20] |
| Spectral trend analysis to detect forest changes | 375,000 | Saskatchewan, Canada | Landsat TM/ETM+ | Breakpoint analysis of spectral trends with changed objects being attributed to a certain change type | Hermosilla et al. [21] |

| Study Area | Pre-Storm Image | Post-Storm Image | Area |
|---|---|---|---|
| Munich South | 18 March 2015 | 10 April 2015 | 720 km² |
| Landsberg | 18 March 2015 | 19 April 2015 | 840 km² |

| Study Area | Spruce, Pure Stand | Spruce, Mixed Forest | Mixed Forest | Total Number | Total Area (in ha) |
|---|---|---|---|---|---|
| Munich South | 6 | 8 | - | 14 | 27.5 |
| Landsberg | 27 | - | 4 | 31 | 16.6 |

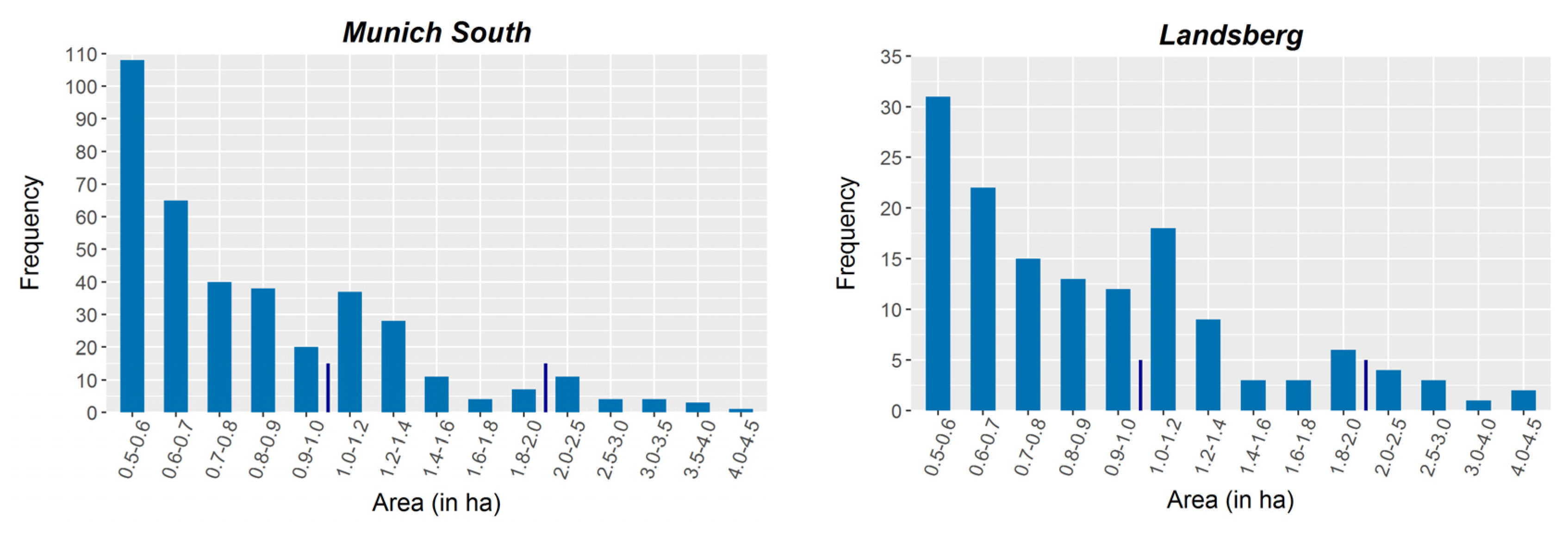

| | Munich South | Landsberg |
|---|---|---|
| Number of polygons | 307 | 135 |
| Size between 0.5 and 1 ha | 176 | 93 |
| Size between 1 and 2 ha | 79 | 32 |
| Size between 2 and 5 ha | 46 | 10 |
| Size between 5 and 10 ha | 5 | - |
| Size > 10 ha | 1 | - |
| Largest area (in ha) | 11.5 | 4.4 |
| Mean size (in ha) | 1.3 | 1 |
| Total area (in ha) | 400.8 | 138.6 |

| Spectral Input Layers | | | | Transformation-Based Input Layers | | | Textural Input Layers | | |
|---|---|---|---|---|---|---|---|---|---|
| Pre-Storm | Post-Storm | Difference | VI Difference | sTCC + DI Difference | MAD | SAM | Wavelet Difference | Haralick Difference | GLRLM Difference |
| B, G, R, RE, NIR | B, G, R, RE, NIR | B, G, R, RE, NIR | 18 × VIs | 3 × sTCC (Arnett); 3 × sTCC (Schönert); 2 × DI; 2 × sTCB-sTCG | 5 × 1 layer | 8 × 1 layer | 4 scales × 6 (5 spectral and NDVI) mean directions | 5 × 8 Haralick features | 5 × 11 GLRLM features |
| 5 | 5 | 5 | 18 | 10 | 5 | 8 | 24 | 40 | 55 |

| Statistics | Geometry |
|---|---|
| Minimum | Area |
| Maximum | Perimeter |
| Median | Compactness |
| Mean | Shape Index |
| Standard Deviation | Fractal Dimension |
| 12 percentiles (1 to 99) | |
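The statistics column of this table can be sketched as a per-segment summary of one input layer. The example below is a hedged illustration: the function name is hypothetical, and the exact set of 12 percentiles is an assumption. Ten of the values (1, 5, 10, 15, 20, 25, 75, 80, 90, 99) appear in the feature-importance tables of this article; 85 and 95 are added here only to reach twelve.

```python
import numpy as np

# Ten of these percentiles occur in the importance tables; 85 and 95 are assumed.
PERCENTILES = (1, 5, 10, 15, 20, 25, 75, 80, 85, 90, 95, 99)


def object_statistics(layer, labels):
    """Summarise one input layer per segment.

    layer:  2-D array of pixel values.
    labels: 2-D integer array of segment ids with the same shape.
    Returns {segment_id: {statistic_name: value}}.
    """
    layer = np.asarray(layer, dtype=float)
    labels = np.asarray(labels)
    if layer.shape != labels.shape:
        raise ValueError("layer and labels must have the same shape")

    stats = {}
    for seg_id in np.unique(labels):
        values = layer[labels == seg_id]
        row = {
            "min": values.min(),
            "max": values.max(),
            "median": np.median(values),
            "mean": values.mean(),
            "std": values.std(),
        }
        for p, v in zip(PERCENTILES, np.percentile(values, PERCENTILES)):
            row[f"p{p}"] = v
        stats[int(seg_id)] = row
    return stats
```

With five summary statistics and twelve percentiles, each input layer contributes 17 candidate features per object under these assumptions.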

| Study Area | Standard Deviation | Spatial Radius hs | Range Radius hr | Minimum Size ms |
|---|---|---|---|---|
| Munich South | 29 | 14.5 | 7.25 | 10 (250 m²) |
| Landsberg | 22.57 | 7.5 | 3.75 | 5 (125 m²) |

| Class | Security (in %) |
|---|---|
| 1 | 0–19 |
| 2 | 20–39 |
| 3 | 40–59 |
| 4 | 60–79 |
| 5 | 80–100 |
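The binning in this table can be expressed as a small mapping from a security value in percent to classes 1 to 5. The sketch below additionally assumes that the security value is a classification margin computed as the difference between the two largest vote fractions of an ensemble classifier; that definition and both function names are illustrative assumptions, not taken from the text.

```python
import numpy as np


def classification_margin(vote_fractions):
    """Difference between the largest and second-largest vote fraction.

    vote_fractions: array of shape (..., n_classes) with n_classes >= 2.
    """
    votes = np.sort(np.asarray(vote_fractions, dtype=float), axis=-1)
    return votes[..., -1] - votes[..., -2]


def security_class(security_percent):
    """Map security values in percent (0 to 100) to classes 1 to 5.

    Bins follow the table: 0-19 -> 1, 20-39 -> 2, 40-59 -> 3, 60-79 -> 4, 80-100 -> 5.
    Non-integer values fall into the bin of their integer part.
    """
    s = np.asarray(security_percent, dtype=float)
    if np.any((s < 0) | (s > 100)):
        raise ValueError("security values must lie between 0 and 100")
    return np.minimum(s // 20, 4).astype(int) + 1
```

A call such as `security_class(100 * classification_margin(votes))` would chain the two steps for an array of per-object vote fractions.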

| | Munich South | | Landsberg | |
|---|---|---|---|---|
| | Reference: Windthrow | Reference: No Windthrow | Reference: Windthrow | Reference: No Windthrow |
| Classification result: windthrow detected | 295 (tp) | 24 (fp) | 88 (tp) | 1 (fp) |
| Classification result: windthrow not detected | 21 (fn) | not applicable | 4 (fn) | not applicable |
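As a worked illustration, the counts in this table give the following detection rate, tp / (tp + fn), and correctness of detections, tp / (tp + fp). These ratios are derived here from the tabulated counts and rounded to three decimals; they are not quoted from the article.

```latex
\begin{align*}
\text{Munich South:}\quad \frac{tp}{tp + fn} &= \frac{295}{295 + 21} \approx 0.934, &\quad \frac{tp}{tp + fp} &= \frac{295}{295 + 24} \approx 0.925 \\
\text{Landsberg:}\quad \frac{tp}{tp + fn} &= \frac{88}{88 + 4} \approx 0.957, &\quad \frac{tp}{tp + fp} &= \frac{88}{88 + 1} \approx 0.989
\end{align*}
```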

| Indicator | Object Statistics | MDA |
|---|---|---|
| Plant Senescence Reflectance Index difference | 15th percentile | 18.5 |
| Plant Senescence Reflectance Index difference | 5th percentile | 16.2 |
| Plant Senescence Reflectance Index difference | 20th percentile | 15.2 |
| Near Infrared of post-storm image | 1st percentile | 14.3 |
| Plant Senescence Reflectance Index difference | 10th percentile | 14.1 |
| Red Edge difference | 25th percentile | 14.0 |
| Near Infrared of post-storm image | Minimum | 13.8 |
| Normalized Difference Red Edge Blue Index difference | 20th percentile | 13.6 |
| Haralick Correlation of Green difference | 10th percentile | 13.2 |

| Indicator | Object Statistics | MDA |
|---|---|---|
| Red Edge of pre-storm image | Maximum | 21 |
| Normalized Difference Red Edge Blue Index difference | Mean | 19.4 |
| Red Edge difference | 99th percentile | 18.4 |
| Normalized Difference Red Edge Blue Index difference | 75th percentile | 17.7 |
| Normalized Difference Red Edge Blue Index difference | Median | 17.1 |
| 2nd Modified Soil Adjusted Vegetation Index difference | 90th percentile | 16.8 |
| Plant Senescence Reflectance Index difference | 75th percentile | 15.7 |
| Spectral Angle Mapper with radius of 5 neighboring pixels | 80th percentile | 15.3 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Einzmann, K.; Immitzer, M.; Böck, S.; Bauer, O.; Schmitt, A.; Atzberger, C. Windthrow Detection in European Forests with Very High-Resolution Optical Data. Forests 2017, 8, 21. https://doi.org/10.3390/f8010021