Securing Resource-Constrained IoT Nodes: Towards Intelligent Microcontroller-Based Attack Detection in Distributed Smart Applications

Abstract

1. Introduction

2. Background Literature Review

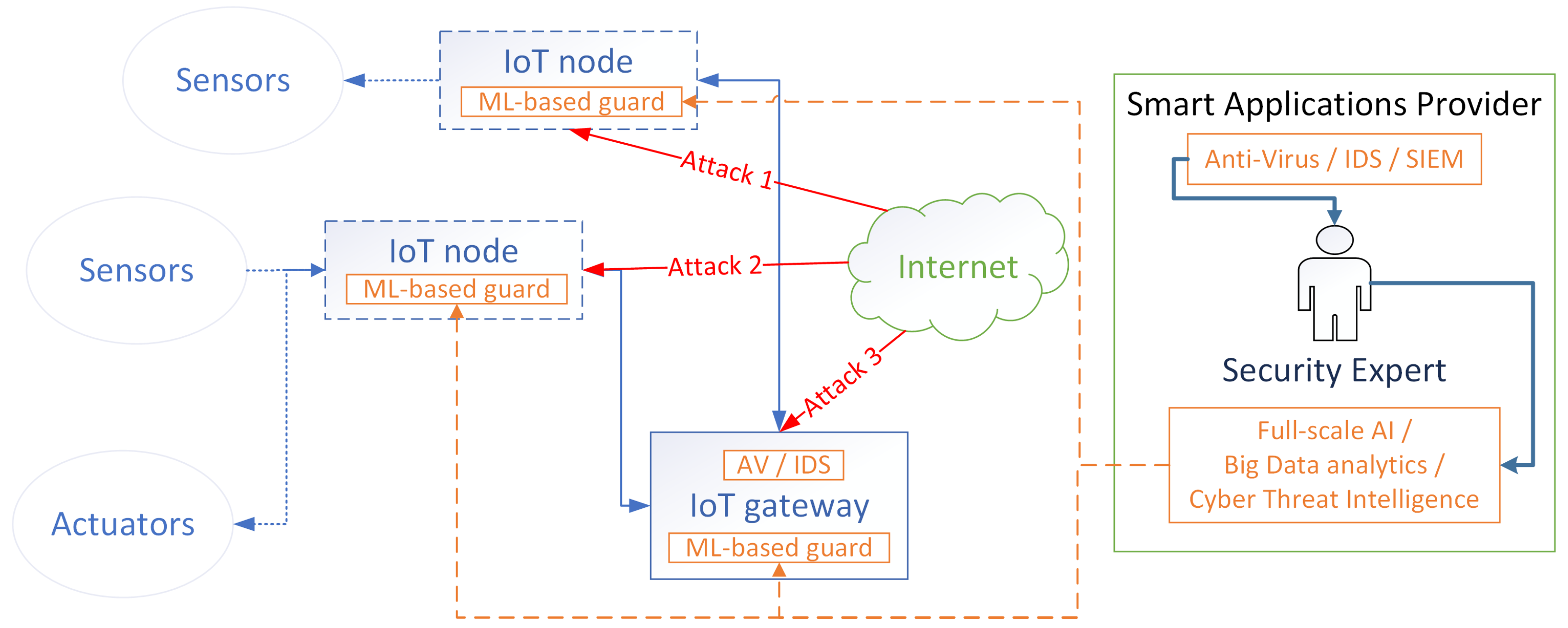

2.1. Security Concerns in Smart Applications

2.2. Data-Related Capabilities of the IoT Ecosystem Components

3. Machine Learning on the Internet of Things: State of the Art and Implications

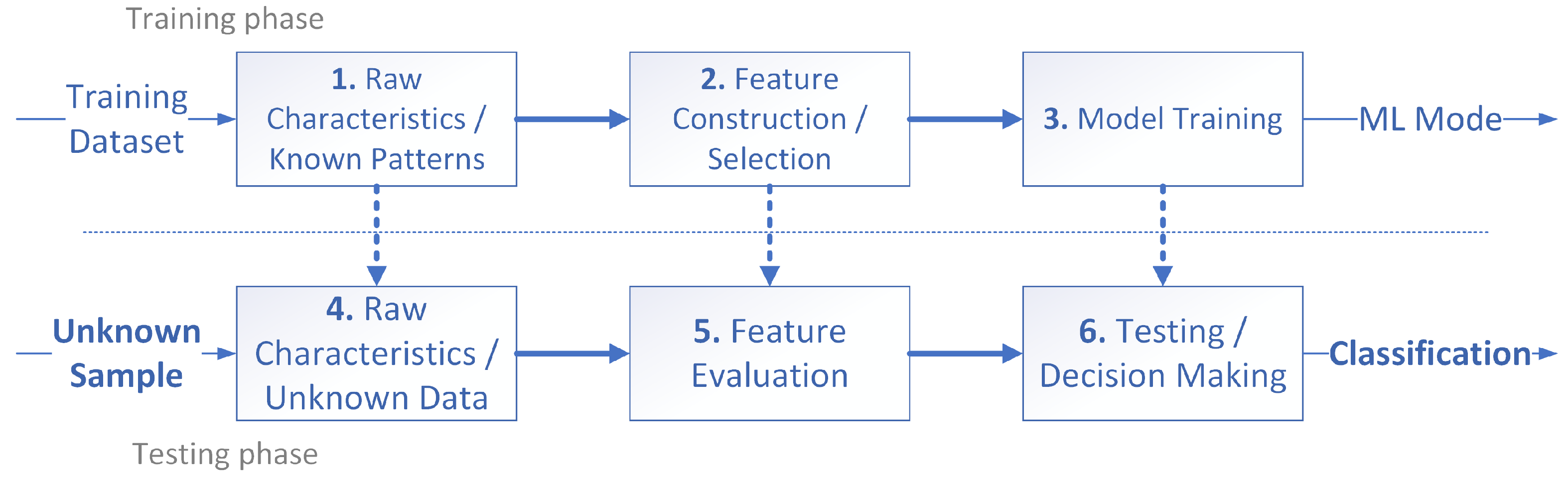

- Training. This phase builds the intelligent classifier used for similarity-based attack classification and detection:

  - Data Pre-processing. Raw characteristics, such as the static and dynamic properties of files or network traffic packets, have to be harvested in a methodical, reproducible manner.

  - Feature Construction. Relevant numerical indicators are extracted, and the ones that best differentiate between input patterns are selected. The quality of the features determines the efficiency and effectiveness of the whole model.

  - Model Training. The selected Machine Learning method is trained on the constructed features.

- Testing. This phase determines the class (e.g., malicious or benign) of a data item to be classified, such as a file or a network traffic packet:

  - Pre-processing. Raw characteristics are aggregated in the same way as in the Training: Data Pre-processing step.

  - Feature Measurement. The raw characteristics are extracted according to the previously defined feature properties.

  - Classification/Decision Making. Similarity-based identification using the model constructed in the Training: Model Training step.
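The two phases above can be sketched end to end in a few lines of Python. This is only an illustration under stated assumptions, not the implementation evaluated in the paper: the record fields (`length`, `duration`, `label`), the scaling constants, the toy data, and the single-neuron logistic model are all hypothetical stand-ins chosen to keep the example small.

```python
import math
import random

# Hypothetical toy records: two raw characteristics per packet plus a label
# (0 = benign, 1 = malicious). Values are made up for illustration only.
TRAINING_RECORDS = [
    {"length": 100, "duration": 0.5, "label": 0},
    {"length": 200, "duration": 1.0, "label": 0},
    {"length": 1400, "duration": 8.0, "label": 1},
    {"length": 1200, "duration": 9.0, "label": 1},
]


def preprocess(raw_records):
    """Data pre-processing: drop records that lack the expected raw fields."""
    required = ("length", "duration")
    return [r for r in raw_records if all(k in r for k in required)]


def extract_features(record):
    """Feature construction / measurement: scale raw values to roughly [0, 1]."""
    return [record["length"] / 1500.0, record["duration"] / 10.0]


def _score(weights, bias, features):
    """Sigmoid output of a single neuron."""
    z = sum(w * v for w, v in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(records, epochs=500, lr=0.5, seed=0):
    """Model training: stochastic gradient descent on a logistic neuron."""
    rng = random.Random(seed)
    samples = [(extract_features(r), r["label"]) for r in records]
    weights = [rng.uniform(-0.1, 0.1) for _ in samples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = _score(weights, bias, features) - label
            weights = [w - lr * error * v for w, v in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def classify(model, record, threshold=0.5):
    """Testing: same feature measurement as in training, then a decision."""
    weights, bias = model
    score = _score(weights, bias, extract_features(record))
    return 1 if score >= threshold else 0


model = train(preprocess(TRAINING_RECORDS))
print(classify(model, {"length": 150, "duration": 0.8}))
print(classify(model, {"length": 1300, "duration": 8.5}))
```

The point of the sketch is the symmetry between the phases: `classify` reuses exactly the same `extract_features` function as `train`, which mirrors the requirement that testing-time pre-processing and feature measurement be identical to those used in training.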

3.1. Community-Accepted Machine Learning Models

3.2. Human Factor in Cyberattacks Detection in Smart Cities

3.3. Existing ML Implementations for IoT

4. Methodology: Distributed ML-Aided Cyberattacks Detection on IoT Nodes

4.1. Use Case and Suggested Model Overview

4.2. Bounding Complexity for Neural Network on IoT

5. Experimental Design and Analysis of Results

5.1. Training–Building a Model

5.2. Testing–Attack Detection Phase

6. Conclusions & Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristics | Arduino Uno Rev3 | Orange Pi One |
|---|---|---|
| CPU frequency | 16 MHz | 4-core 1 GHz |
| Flash memory | 32 KBytes | None |
| RAM | 2 KBytes | 512 MB DDR3 |
| EEPROM | 1 KByte | None |
| Operating voltage | 3.3–5 V | 5 V |
| SD card extension | Possible | Yes |
| NAS/USB HDD | No | Yes |
| Network | Possible | Ethernet |

| Space Complexity | Flash, Bytes (%) | SRAM, Bytes (%) |
|---|---|---|
| Full memory | 32,256 (100%) | 2048 (100%) |
| 2 training packets (9 features) | 8276 (25%) | 970 (47%) |
| 20 training packets (9 features) | 8960 (27%) | 1690 (82%) |
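The two SRAM measurements in the table above allow a rough estimate of the per-packet memory cost. The sketch below assumes that SRAM usage grows linearly with the number of stored training packets; the function name `sram_budget` is illustrative and not taken from the paper.

```python
def sram_budget(total, used_small, n_small, used_large, n_large):
    """Linear extrapolation of SRAM use from two measurements (an assumption)."""
    # Extra bytes consumed per additional training packet.
    per_packet = (used_large - used_small) // (n_large - n_small)
    # Usage that remains when no training packets are stored.
    fixed = used_small - n_small * per_packet
    # Largest number of packets that still fits into the total SRAM.
    max_packets = (total - fixed) // per_packet
    return per_packet, fixed, max_packets


# Values from the table: 2048 bytes of SRAM, 970 bytes with 2 packets,
# 1690 bytes with 20 packets.
per_packet, fixed, max_packets = sram_budget(2048, 970, 2, 1690, 20)
print(per_packet, fixed, max_packets)  # prints: 40 890 28
```

Under this linear model, each packet costs 40 bytes, which would be consistent with ten 4-byte values per packet (for instance nine features plus one target), although the table itself does not confirm that layout. The upper bound of 28 packets ignores the stack and any temporary buffers needed at run time, so the practical limit is likely lower.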

| Epoch ID | 1 | 10 | 16 |
|---|---|---|---|
| Output Error | 2.08758 | 0.01587 | 0.00946 |
| Time (per epoch), s | 28,752 | 29,808 | 29,756 |

| ID | Target (1st epoch) | Output (1st epoch) | Time, s (1st epoch) | Target (16th epoch) | Output (16th epoch) | Time, s (16th epoch) |
|---|---|---|---|---|---|---|
| 1 | 0 | 0.39667 | 1196 | 0 | 0.03863 | 1388 |
| 2 | 0 | 0.39667 | 1896 | 0 | 0.03863 | 1932 |
| 3 | 1 | 0.70681 | 1752 | 1 | 0.97040 | 1836 |
| 4 | 0 | 0.27704 | 1176 | 0 | 0.02999 | 1176 |
| 5 | 0 | 0.32847 | 1668 | 0 | 0.02803 | 1668 |
| 6 | 1 | 0.75069 | 1792 | 1 | 0.98339 | 1856 |
| 7 | 1 | 0.70681 | 1752 | 1 | 0.97040 | 1836 |
| 8 | 1 | 0.70512 | 1756 | 1 | 0.96993 | 1828 |
| 9 | 1 | 0.70606 | 1752 | 1 | 0.97020 | 1820 |
| 10 | 1 | 0.70662 | 1740 | 1 | 0.97035 | 1836 |
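To make the table above easier to interpret, the following sketch turns the continuous network outputs into class decisions and compares the two epochs. The 0.5 decision threshold and the use of mean absolute error are assumptions made for illustration; the table does not state which threshold or error measure was applied.

```python
# Targets and network outputs copied from the table above.
TARGETS = [0, 0, 1, 0, 0, 1, 1, 1, 1, 1]
OUTPUTS_EPOCH_1 = [0.39667, 0.39667, 0.70681, 0.27704, 0.32847,
                   0.75069, 0.70681, 0.70512, 0.70606, 0.70662]
OUTPUTS_EPOCH_16 = [0.03863, 0.03863, 0.97040, 0.02999, 0.02803,
                    0.98339, 0.97040, 0.96993, 0.97020, 0.97035]


def accuracy(targets, outputs, threshold=0.5):
    """Share of samples whose thresholded output matches the target."""
    hits = sum(1 for t, o in zip(targets, outputs)
               if (1 if o >= threshold else 0) == t)
    return hits / len(targets)


def mean_absolute_error(targets, outputs):
    """Average distance between the raw output and the target value."""
    return sum(abs(t - o) for t, o in zip(targets, outputs)) / len(targets)


for name, outputs in (("epoch 1", OUTPUTS_EPOCH_1),
                      ("epoch 16", OUTPUTS_EPOCH_16)):
    print(name,
          "accuracy:", accuracy(TARGETS, outputs),
          "MAE:", round(mean_absolute_error(TARGETS, outputs), 5))
```

With a 0.5 threshold, all ten samples are already on the correct side after the first epoch; what the later epochs change is the margin, since the outputs move much closer to the targets of 0 and 1.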

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shalaginov, A.; Azad, M.A. Securing Resource-Constrained IoT Nodes: Towards Intelligent Microcontroller-Based Attack Detection in Distributed Smart Applications. Future Internet 2021, 13, 272. https://doi.org/10.3390/fi13110272
