1. Introduction

Clouds are one of the most important macroscopic parameters in climate change research and meteorological services [1,2]. Currently, clouds are studied both from satellites and from the ground. Much work has been done on classifying clouds in satellite images. Ebert [3] proposed a pattern recognition algorithm that classifies eighteen surface and cloud types in high-latitude advanced very high resolution radiometer (AVHRR) imagery using several spectral and textural features. More recently, Musial et al. [4] proposed a probabilistic approach to cloud and snow detection in AVHRR imagery. Lamei et al. [5] investigated a texture-based representation of satellite images built on 2-D Gabor functions. Costa et al. [6] proposed a cloud detection and classification method based on multi-spectral satellite data. Lee and Lin [7] proposed a threshold-free cloud detection method for optical satellite images based on a support vector machine (SVM). Neural network approaches to cloud detection in satellite images have also been proposed [8,9]. Satellite-based observation analyzes cloud tops in order to study global atmospheric motion, which makes it suitable for large-scale climate research. Ground-based observation, in contrast, excels at monitoring a local area: it characterizes cloud bases and thereby provides information on cloud type [10]. At present, ground-based cloud classification receives considerable attention. It is still mainly performed by experienced human observers, which demands extensive human effort and may suffer from ambiguity because different observers apply different standards. Hence, automatic techniques for ground-based cloud classification are greatly needed.

Many ground-based cloud imaging devices have been developed, such as the whole sky imager (WSI) [11], the all sky imager (ASI) [12], the infrared cloud imager (ICI) [13], and the whole-sky infrared cloud-measuring system (WSICMS) [14]. Based on the images captured by these devices, researchers have proposed many methods [15,16,17,18] for automatic ground-based cloud classification. These methods achieve promising performance under the assumption that training and test images come from the same database. Concretely, they assume that training and test images share the same feature space and follow the same distribution, i.e., that they lie in the same domain. However, they cannot handle cloud images from different domains, because such images differ in capturing location, image resolution, illumination, camera settings, occlusion and so on.

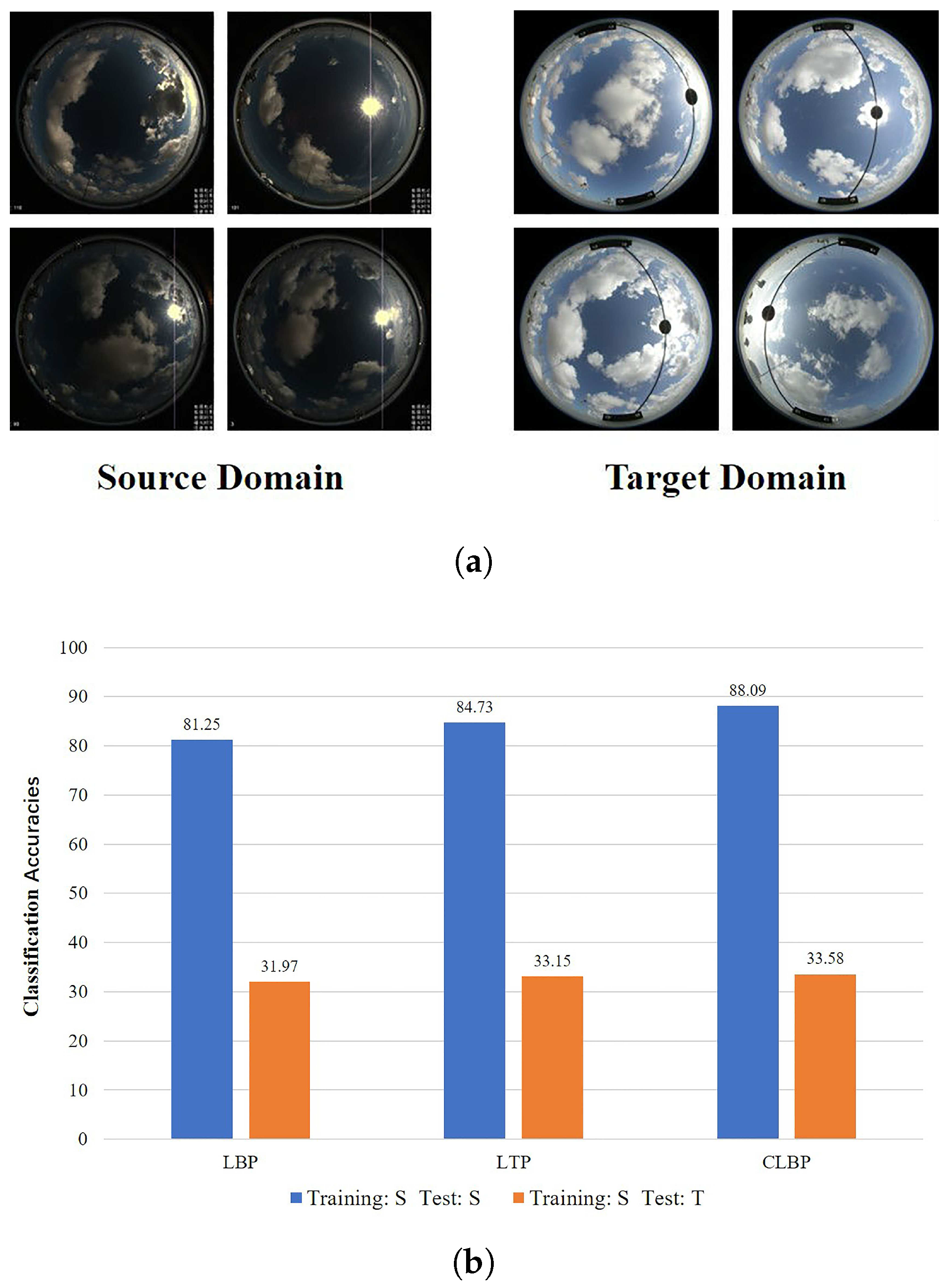

Hence, we wish to train a classifier in one domain (the source domain) and perform classification in another domain (the target domain). We call this problem cross-domain ground-based cloud classification. Solving it would be a welcome innovation in this field, and we argue that addressing it is essential for two reasons. First, China has about 2424 weather stations, and the cloud images they capture vary widely, as shown in Figure 1a. Existing methods are unsuitable for cross-domain ground-based cloud classification. As shown in Figure 1b, three representative methods, i.e., LBP, LTP and CLBP, generally achieve promising results when cloud samples are trained and tested in the same domain, but their performance degrades significantly when they are trained in the source domain and tested in the target domain. Hence, a generalized classifier is needed to recognize cross-domain cloud images. Second, some weather stations possess a large number of labelled cloud images, while labelled images are scarce at others. New weather stations will inevitably be established to obtain more complete cloud information, and labelling their images imposes a heavy human-resource burden. We therefore aim to use both the many labelled cloud images from existing stations and the few labelled images from a new station to train a classification model that can then be applied at new weather stations with only a few labelled cloud images. To our knowledge, no published literature has addressed the cross-domain ground-based cloud classification problem.

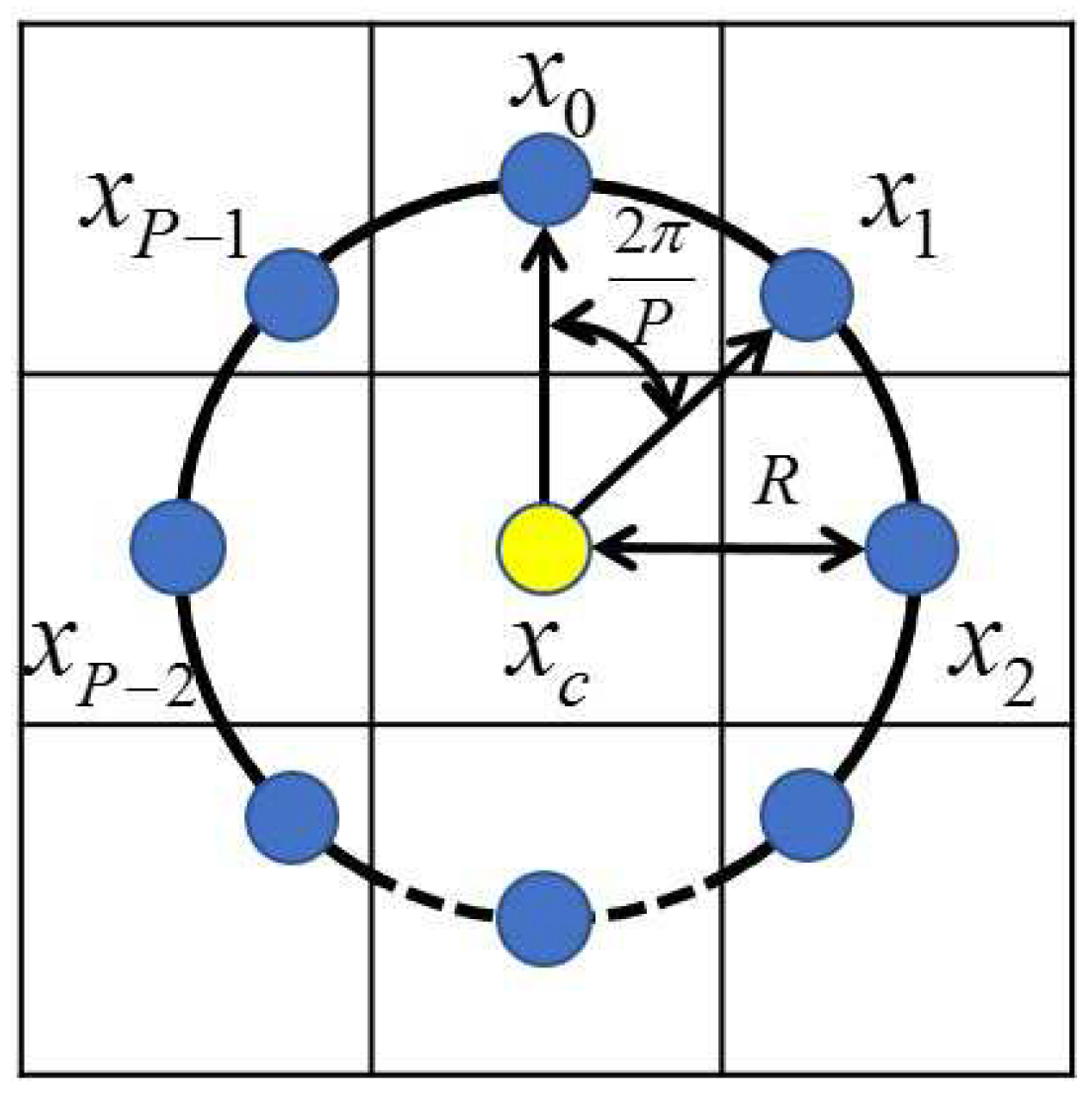

Two fundamental problems are essential for cross-domain ground-based cloud classification: feature representation and similarity measurement. The first aims to obtain a stable feature representation. Because clouds can be regarded as a kind of natural texture [19], many existing methods use texture descriptors to represent cloud appearance. Isosalo et al. [20] adopted local texture information, i.e., LBP and local edge patterns (LEP), to recognize cloud images and classify them into five sky conditions. Xiao et al. [21] further extracted raw visual descriptors from the perspectives of texture, structure and color in a densely sampled manner. Liu et al. [22,23] proposed two texture descriptors: the illumination-invariant completed local ternary pattern (ICLTP) and the salient local binary pattern (SLBP). The ICLTP is effective under illumination variations, and the SLBP captures discriminative information that makes it robust to noise. Huertas-Tato et al. [24] reported that an additional ceilometer and the blue color band were required to obtain comparable cloud classification accuracies. However, these features cannot adapt to domain variation. The second problem is to learn a similarity measurement that evaluates the similarity between two feature vectors. Existing measurements include the Euclidean distance [25], the chi-square metric [22], the Quadratic-Chi (QC) metric [23], and metric learning [26,27]. The first three are predefined metrics and therefore cannot represent the desired topology. Metric learning is a desirable alternative that can replace these predefined metrics. Its key idea is to construct a Mahalanobis distance (quadratic Gaussian metric) over the input space in place of the Euclidean distance. Equivalently, it can be viewed as a linear transformation of the original inputs followed by the Euclidean distance in the projected feature space. Xing et al. [26] learned a distance metric using side information: the learned metric minimizes the distance between all pairs of similar points while maximizing the distance between all pairs of dissimilar points. However, the algorithm is computationally expensive and unsuitable for large or high-dimensional databases. To address this, Alipanahi et al. [28] proposed a closed-form solution to the metric learning problem that does not require semidefinite programming. Metric learning has also been successfully applied to many problems in remote sensing and image processing [29,30,31,32]. However, its major limitation is that it considers only the relationship between sample pairs and ignores the relationship among cloud classes, which carries high-level semantic information.
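The equivalence between a Mahalanobis distance and a Euclidean distance after a linear projection can be checked numerically. The following NumPy sketch assumes the learned metric is parameterized as M = W^T W for a projection matrix W (the function names are illustrative, not taken from the paper) and confirms that the distance under M equals the squared Euclidean distance between Wx and Wy:

```python
import numpy as np

def mahalanobis_sq(x, y, M):
    """Squared Mahalanobis distance (x - y)^T M (x - y) for a PSD matrix M."""
    d = x - y
    return float(d @ M @ d)

def projected_sq(x, y, W):
    """Squared Euclidean distance after the linear map z = W x."""
    d = W @ x - W @ y
    return float(d @ d)

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))   # projects 5-D features to a 3-D space
M = W.T @ W                   # induced PSD matrix of rank <= 3
x, y = rng.normal(size=5), rng.normal(size=5)

print(np.isclose(mahalanobis_sq(x, y, M), projected_sq(x, y, W)))  # True
```

Learning W directly, rather than M, keeps the metric positive semidefinite by construction and lets the number of rows of W set the projected dimension.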

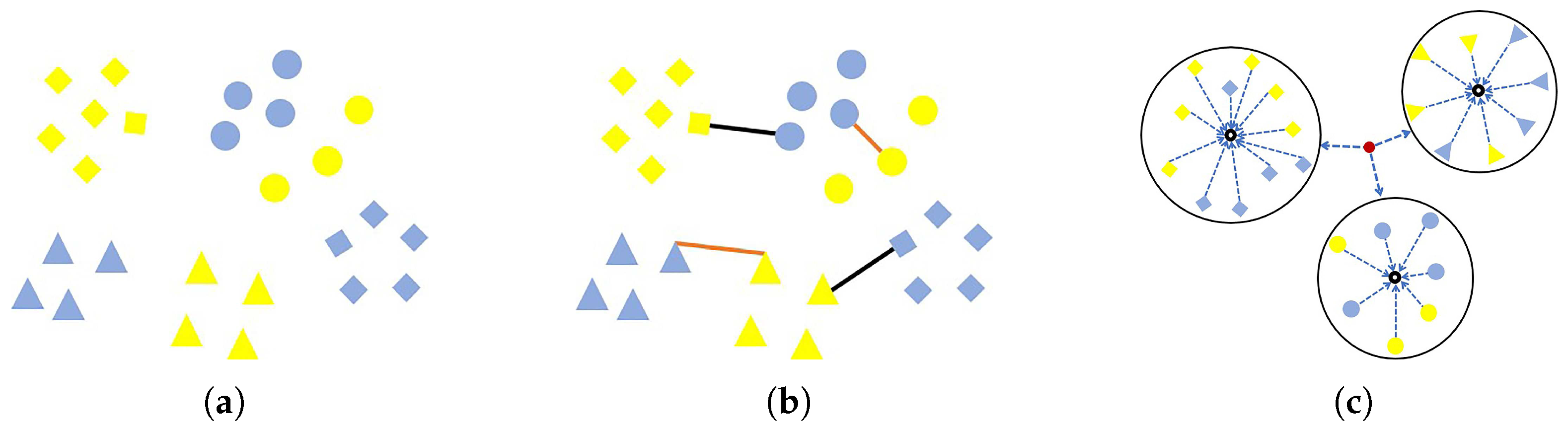

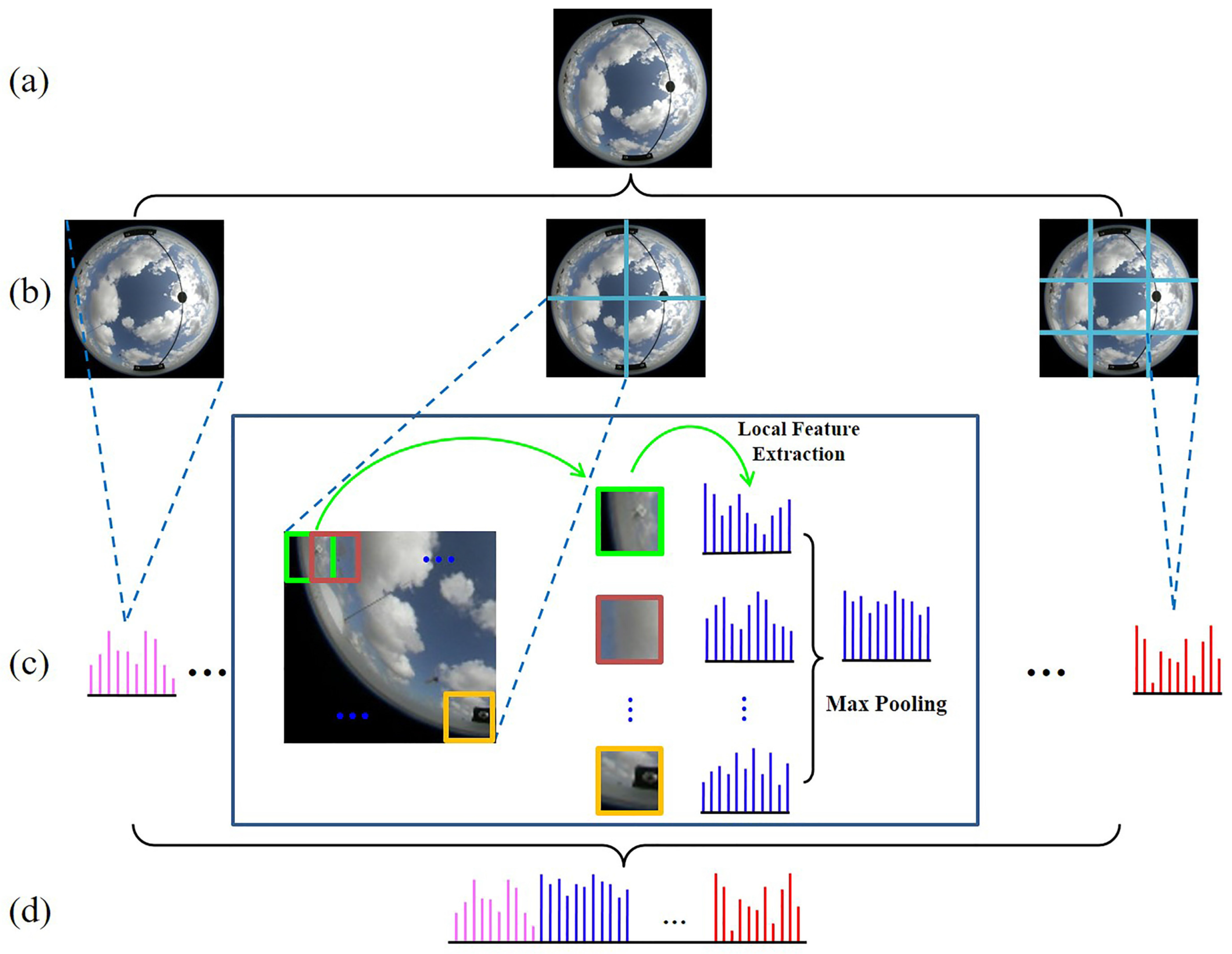

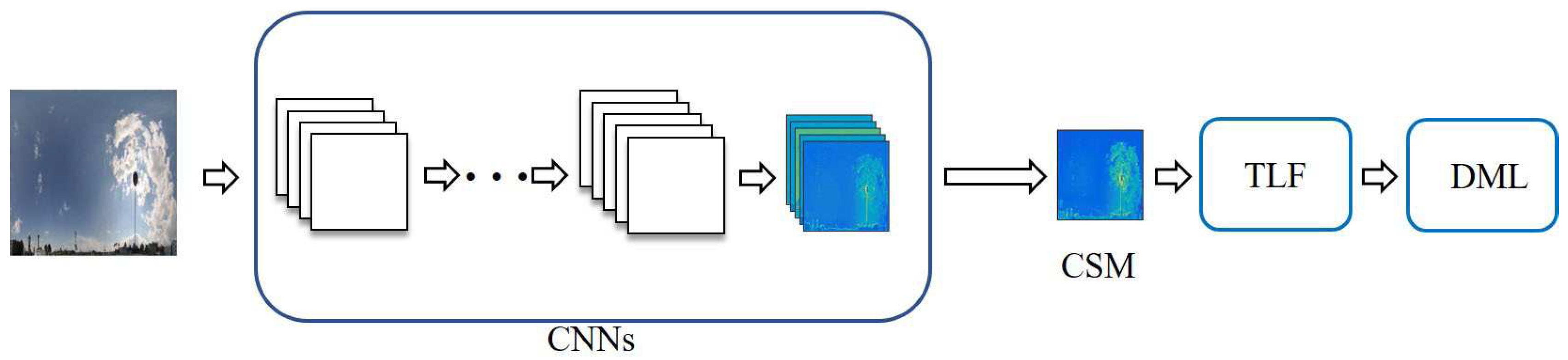

In this paper, we propose a novel representation framework called Transfer of Local Features (TLF) and a novel distance metric called Discriminative Metric Learning (DML). TLF is a robust representation framework that adapts to domain variation and can integrate different kinds of local features, e.g., LBP, LTP and CLBP. TLF mines the maximum response within regions to obtain a representation that is stable under domain variation: by max pooling across different image regions, the pooled feature is salient and more robust to local transformations. We use max pooling for two reasons. First, Riesenhuber and Poggio advocated max pooling as a more appropriate pooling mechanism for higher-level visual processing such as object recognition, and max pooling achieves invariance to image-plane transformations such as translation and scale by building position- and scale-tolerant complex (C1) cell units [33,34]. Second, Boureau et al. [35] provided a detailed theoretical analysis of why max pooling is well suited to classification tasks. DML not only uses sample pairs to learn the distance metric but also considers the relationship among cloud classes. Specifically, we force feature vectors of the same class to be close to their class mean vector, while keeping the mean vectors of different classes away from the total mean vector. The key idea of DML is to learn a transformation matrix that satisfies these considerations. Here, a sample pair consists of a sample from the source domain (yellow) and a sample from the target domain (blue), as illustrated in Figure 2a. The input for learning the transformation matrix is a set of labelled pairs, comprising similar pairs (orange lines) and dissimilar pairs (black lines) (see Figure 2b). Meanwhile, the relationship among cloud classes, expressed through the class mean vectors, is taken into account, as shown in Figure 2c. The output is the learned transformation matrix. The key property of DML is that it yields intra-class compactness while maintaining discrimination among classes. Furthermore, to improve the practicability of the proposed method, we replace the original cloud images with convolutional activation maps that are fed into the TLF framework, which yields significant improvements.
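The exact DML objective is defined in the method section and is not reproduced here, so the following is only a rough sketch of the two ingredients just described, under our own assumptions. It poses a Fisher-style generalized eigenproblem in which a "compactness" scatter collects similar pairs plus each sample's deviation from its class mean, and a "separation" scatter collects dissimilar pairs plus each class mean's deviation from the total mean. The function `learn_projection`, the regularizer `reg` and the equal weighting of all terms are hypothetical and should not be read as the authors' algorithm.

```python
import numpy as np

def learn_projection(X, labels, sim_pairs, dis_pairs, m, reg=1e-3):
    """Return an (m, d) matrix W maximizing separation relative to compactness.

    X: (n, d) features from both domains; labels: (n,) class ids;
    sim_pairs / dis_pairs: lists of (i, j) index pairs.
    Hypothetical Fisher-style stand-in for DML, not the paper's objective.
    """
    n, d = X.shape
    mu = X.mean(axis=0)                        # total mean vector
    S_c = np.zeros((d, d))                     # compactness scatter
    S_s = np.zeros((d, d))                     # separation scatter
    for c in np.unique(labels):
        Xc = X[labels == c]
        mc = Xc.mean(axis=0)
        D = Xc - mc                            # samples relative to their class mean
        S_c += D.T @ D
        S_s += len(Xc) * np.outer(mc - mu, mc - mu)  # class mean vs. total mean
    for i, j in sim_pairs:                     # similar pairs should be close
        v = X[i] - X[j]
        S_c += np.outer(v, v)
    for i, j in dis_pairs:                     # dissimilar pairs should be far apart
        v = X[i] - X[j]
        S_s += np.outer(v, v)
    S_c += reg * np.eye(d)                     # keep S_c positive definite
    # Solve S_s w = lambda S_c w by whitening with the Cholesky factor of S_c.
    L = np.linalg.cholesky(S_c)
    Linv = np.linalg.inv(L)
    evals, evecs = np.linalg.eigh(Linv @ S_s @ Linv.T)
    top = evecs[:, np.argsort(evals)[::-1][:m]]   # m largest eigenvalues
    return (Linv.T @ top).T                    # map back to original coordinates

# Toy usage: two classes in 6-D (domains are ignored in this toy example).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (20, 6)), rng.normal(3.0, 1.0, (20, 6))])
labels = np.array([0] * 20 + [1] * 20)
sim_pairs = [(0, 1), (20, 21)]
dis_pairs = [(0, 20), (1, 21)]
W = learn_projection(X, labels, sim_pairs, dis_pairs, m=2)
print(W.shape)  # (2, 6)
```

The rows of W act as the transformation matrix; distances are then Euclidean distances between projected vectors Wx, and m plays the role of the projected dimension examined in Section 3.6.2.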

3. Results and Discussion

In this section, we first introduce the databases and the experimental setup. Note that all cloud images were captured by RGB color cameras. Second, we verify the effects of TLF, DML and CSM on three databases: the CAMS database, the IAP database and the MOC database. Then, we compare the proposed method with other state-of-the-art methods. Finally, to better understand the proposed method, we analyze three aspects: the role of max pooling, the influence of the projected feature space dimension, and the role of the fraction of cloud images from the target domain.

3.1. Databases and Experimental Setup

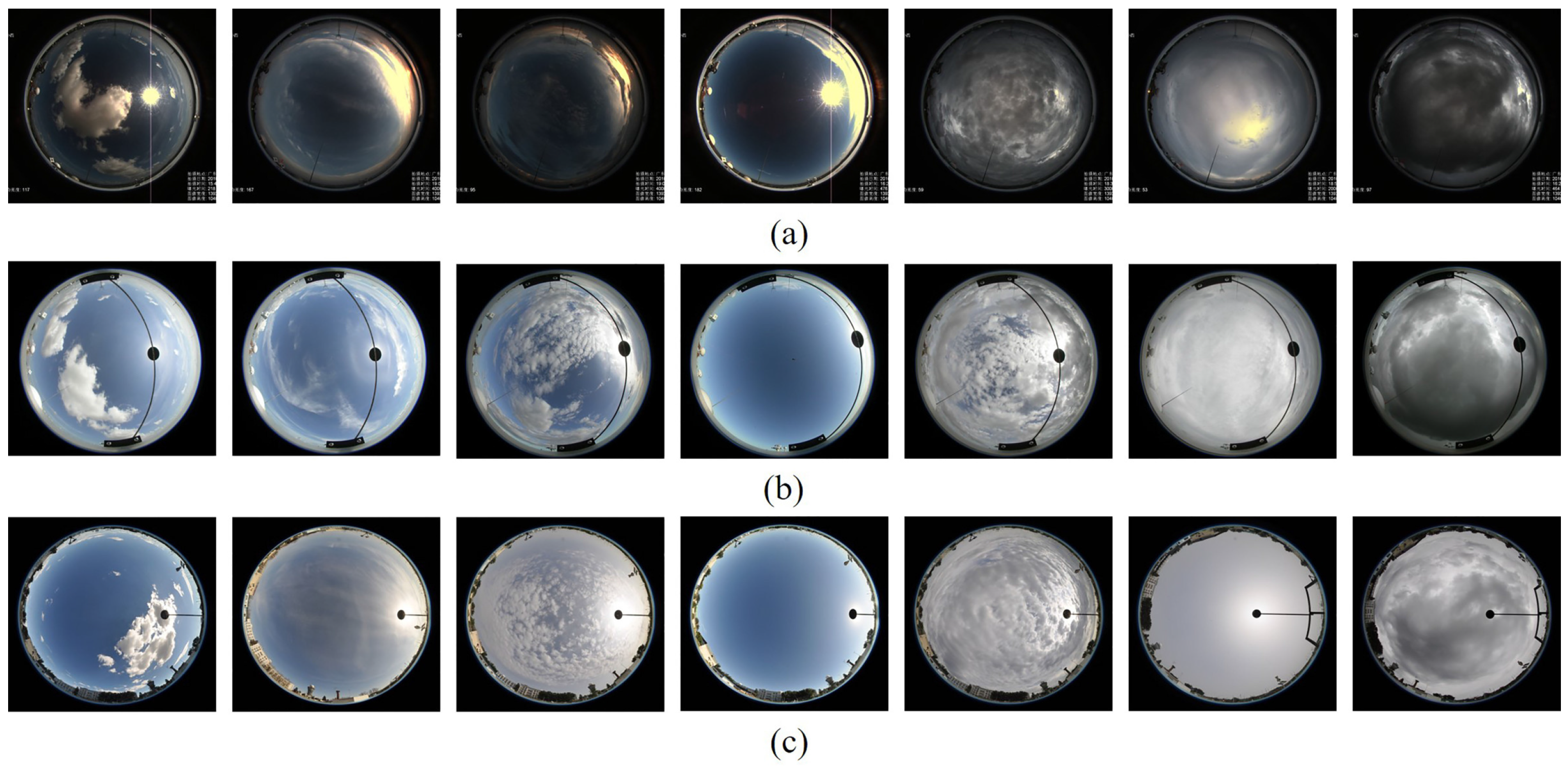

The first cloud database is the CAMS database, provided by the Chinese Academy of Meteorological Sciences. Following the international cloud classification system published by the World Meteorological Organization (WMO), the database is divided into seven classes. Note that the clear-sky class includes not only images without clouds but also images with cloudiness below 10%. The number of samples differs between classes, and the total is 1600, as listed in Table 1. The cloud images in this database were captured in Yangjiang, Guangdong Province, China, and have

pixels. The cloud images have weak illumination and no occlusion. The sun disk is treated as a virtual cloud in the CAMS database. Samples of each class are shown in Figure 7a.

The second cloud database is the IAP database, provided by the Institute of Atmospheric Physics, Chinese Academy of Sciences. This database is also divided into seven classes. The number of samples differs between classes, and the total is 1518, as listed in Table 1. The cloud images in this database were captured at the same location as those of the CAMS database, but with a different acquisition device. The cloud images in the IAP database have

pixels, strong illumination and occlusion. Note that the occlusion is caused by part of the camera. Samples of each class are shown in Figure 7b.

The third cloud database is the MOC database, provided by the Meteorological Observation Centre, China Meteorological Administration. It is likewise divided into seven classes. The total number of samples is 1397, and the number for each class is listed in Table 1. Unlike the first two databases, the cloud images in this database were taken in Wuxi, Jiangsu Province, China. Moreover, the cloud images have

pixels, with strong illumination and occlusion. Samples of each class are shown in Figure 7c.

Cloud images from the three databases clearly vary in location, illumination, occlusion and resolution. Hence, they lie in three different domains; the differences are listed in Table 2.

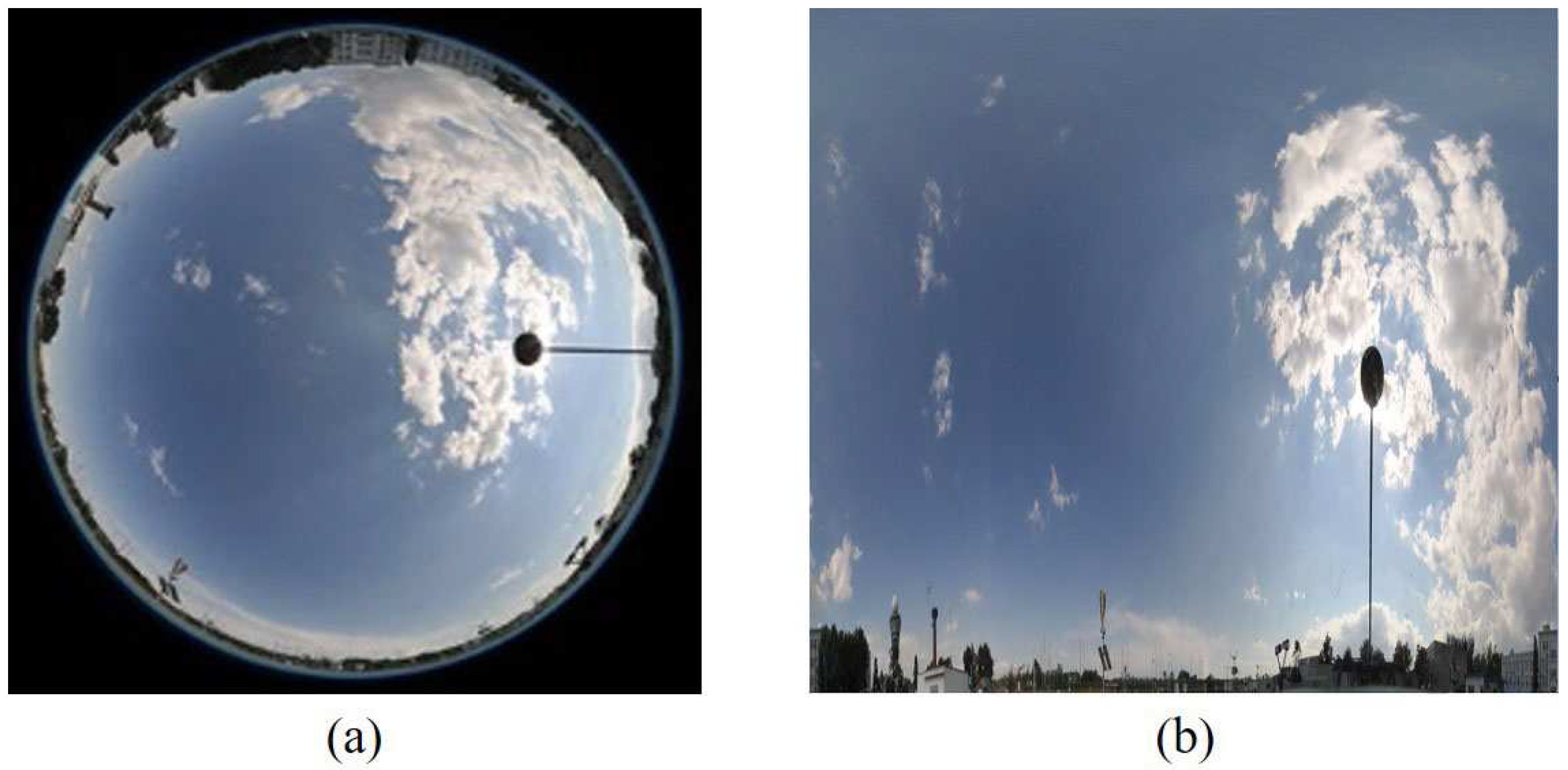

All images from the three databases were scaled to

pixels, and the intensity of each cloud image was then normalized to a mean of 128 and a standard deviation of 20. This normalization reduces the effect of illumination variation across images. Finally, we adjusted the geometry of the cloud images to a uniform representation; a sample image is shown in Figure 8. Furthermore, we applied a feature normalization (FN) step to the image representation. For LBP, LTP and CLBP, the feature vectors were normalized to zero-mean, unit-variance vectors and then concatenated. For TLBP, TLTP and TCLBP, the feature vector of each region was normalized to a zero-mean, unit-variance vector, and the region vectors were then concatenated. For training, we used all cloud images from the source domain together with a randomly selected half of the cloud images in each class of the target domain; the remaining target-domain images were used for testing. This procedure was repeated independently 10 times, and the reported results are the average accuracies over these 10 random splits. We implemented our algorithm in Matlab 2013b on a desktop PC with an Intel Xeon CPU E5-2660 v2 @ 2.20 GHz and 64 GB of memory. LBP requires 35.2 s per cloud image, while TCLBP requires 56.8 s per cloud image.
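A minimal NumPy sketch of the two normalization steps above. It assumes a single-channel (gray-level) image and applies no clipping to [0, 255]. It also reads "zero-mean unit-variance" as normalizing each vector across its own bins; normalizing each dimension over the training set is another plausible reading. The function names are illustrative.

```python
import numpy as np

TARGET_MEAN, TARGET_STD = 128.0, 20.0   # values stated in Section 3.1

def normalize_intensity(img):
    """Shift and scale a gray-level image to mean 128 and std 20 (no clipping)."""
    img = img.astype(np.float64)
    std = img.std()
    if std == 0:                         # flat image: only the mean can be matched
        return np.full_like(img, TARGET_MEAN)
    return (img - img.mean()) / std * TARGET_STD + TARGET_MEAN

def normalize_regions(region_feats, eps=1e-12):
    """Z-normalize each region's feature vector, then concatenate (FN for TLF)."""
    out = []
    for f in region_feats:
        f = np.asarray(f, dtype=np.float64)
        out.append((f - f.mean()) / (f.std() + eps))
    return np.concatenate(out)
```

For LBP, LTP and CLBP the same `normalize_regions` call applies with one vector per scale or operator instead of one per region.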

A nearest-neighbor classifier was used to classify the cloud images. The metrics used to evaluate the goodness of fit between two histograms include a predefined metric, metric learning (ML) and the proposed distance metric (DML). Because the key idea of metric learning is to project the original inputs into another feature space and then compute the Euclidean distance there, we chose the Euclidean distance metric (Euclid) as the predefined metric in the following comparative experiments.
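The classification step reduces to a 1-nearest-neighbor rule. In this sketch (function name hypothetical), a learned metric is applied by first projecting features with a transformation matrix W, consistent with the projection view of metric learning; with W omitted it is the plain Euclid baseline.

```python
import numpy as np

def nn_classify(train_X, train_y, test_X, W=None):
    """1-nearest-neighbor labels under (optionally projected) Euclidean distance.

    If W (m x d) is given, features are first mapped as z = W x, which is how
    a learned metric such as ML or DML would be applied (assumed here).
    """
    if W is not None:
        train_X, test_X = train_X @ W.T, test_X @ W.T
    # Squared distances between every test vector and every training vector.
    d2 = ((test_X[:, None, :] - train_X[None, :, :]) ** 2).sum(axis=2)
    return train_y[np.argmin(d2, axis=1)]

train_X = np.array([[0.0, 0.0], [10.0, 10.0]])
train_y = np.array([0, 1])
test_X = np.array([[1.0, 1.0], [9.0, 8.0]])
print(nn_classify(train_X, train_y, test_X))  # [0 1]
```

The pairwise-difference tensor is simple but memory-hungry; for thousands of images one would compute distances in batches.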

3.2. Effect of TLF

We compared TLBP, TLTP and TCLBP with LBP, LTP and CLBP, respectively. Specifically, we extracted LBP features with (P, R) equal to (8, 1), (16, 2) and (24, 3), and concatenated the histograms of the three scales to form a feature vector for each cloud image, so the final feature vector has 10 + 18 + 26 = 54 dimensions. LTP can be split into two LBPs, a positive LBP and a negative LBP, whose histograms are concatenated into one histogram, so a cloud image is finally represented as a 2 × 54 = 108-dimensional feature vector. For CLBP, the three operators CLBP_C, CLBP_S and CLBP_M can be combined in a hybrid manner. Specifically, a 2D joint histogram “CLBP_S/C” is built first and converted to a 1D histogram, which is then concatenated with CLBP_M to generate a joint histogram. Its dimension is (10 × 2 + 10) + (18 × 2 + 18) + (26 × 2 + 26) = 162. When the three features are applied within TLF, the dimension of TLBP is 54 × 1 + 54 × 4 + 54 × 9 = 756; likewise, the dimensions of TLTP and TCLBP are 1512 and 2268, respectively.
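The dimensions quoted above follow from counting bins. The sketch assumes rotation-invariant uniform (riu2) LBP, which gives P + 2 bins for P neighbors and matches the 10/18/26 counts, and an l × l grid of regions at each scale l = 1, 2, 3; the grid is our assumption, consistent with 756/54 = 14 = 1 + 4 + 9.

```python
# (P, R) settings from Section 3.2; riu2 LBP has P + 2 bins per scale.
scales = [(8, 1), (16, 2), (24, 3)]
lbp_dim = sum(P + 2 for P, _ in scales)                      # 10 + 18 + 26
ltp_dim = 2 * lbp_dim                                        # positive + negative LBP
clbp_dim = sum(2 * (P + 2) + (P + 2) for P, _ in scales)     # CLBP_S/C + CLBP_M
n_regions = sum(l * l for l in (1, 2, 3))                    # l x l grid per scale (assumed)

print(lbp_dim, ltp_dim, clbp_dim)                            # 54 108 162
print(lbp_dim * n_regions, ltp_dim * n_regions, clbp_dim * n_regions)  # 756 1512 2268
```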

The experimental results are listed in Table 3. In each bracket, the first number is the basic result, and the remaining two numbers are the results with image geometric correction (IGC) and with both IGC and feature normalization (FN), respectively. The basic results show that the proposed TLBP, TLTP and TCLBP achieve higher accuracies than LBP, LTP and CLBP, respectively, and that TCLBP performs best in all six situations. This is because TLBP, TLTP and TCLBP are extracted by dense sampling, which captures more stable and complete cloud information in local regions. We further apply max pooling to all local features within each region to obtain features that are more robust to local transformations. Hence, applying TLF to local features is a good way to adapt to domain shift. Furthermore, taking the MOC database as the source domain and the CAMS database as the target domain gives the poorest performance among all combinations of source and target domains. The reason is that the MOC database differs greatly from the CAMS database in illumination, capturing location, occlusion and image resolution. With IGC, the accuracies improve by about 4%, and with both IGC and FN they improve by about a further 3%.

3.3. Effect of DML

We compared the proposed DML with the Euclidean distance metric (Euclid) and ML for classifying cloud images with the six kinds of features. The experimental results are listed in Table 4 and Table 5. In each bracket, the first number is the basic result, and the remaining two numbers are the results with IGC and with both IGC and FN, respectively. Several conclusions can be drawn from the basic results. First, performance improves with ML. This is because ML is a data-driven method that learns the intrinsic topological structure between the source and target domains, which indicates that ML is better suited to evaluating the similarity between sample pairs. Second, for both traditional and transferred features, the classification accuracies increase significantly with DML, in every case by over 3% compared with ML. This demonstrates that considering the relationship among cloud classes when learning DML boosts performance. Third, the transferred features outperform the traditional ones, which further confirms the effectiveness of the proposed TLF with both the predefined metric and the learned metrics. In particular, the combination of TCLBP and DML outperforms the other compared methods in all situations. The MOC to CAMS domain shift still yields the lowest classification accuracy in all situations. For example, compared with TCLBP + Euclid and TCLBP + ML, the accuracy of TCLBP + DML rises by 2.85% and 4.35%, respectively. For the other domain shifts, the improvements are smaller than for the MOC to CAMS shift, which further verifies that DML can handle classification when the two domains differ greatly. With IGC, the accuracies improve by about 4%, and with both IGC and FN they improve by about a further 3%.

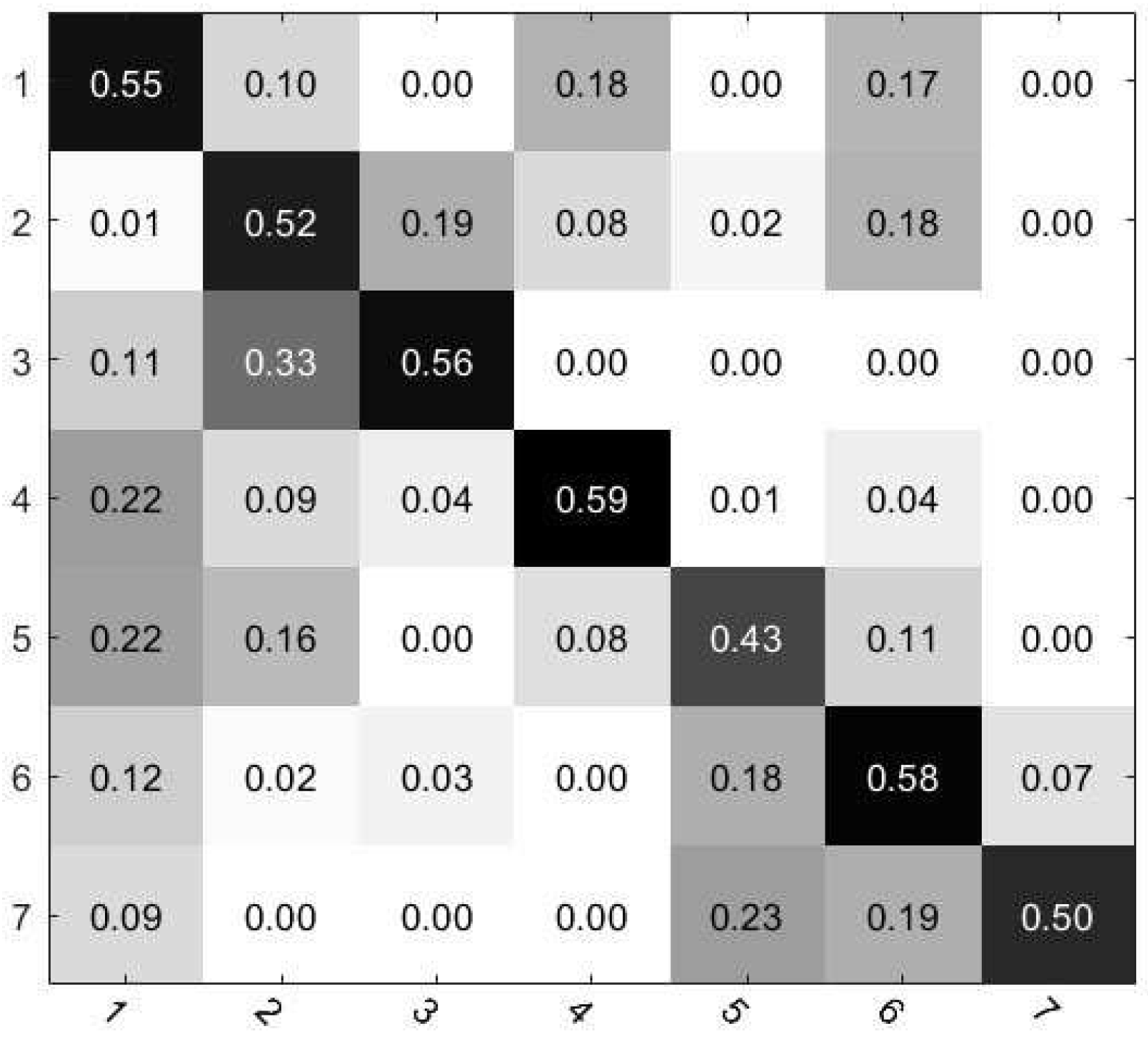

To further analyze the effectiveness of the proposed TCLBP + DML (without IGC and FN), we use a confusion matrix to show the per-category performance for the IAP to MOC domain shift, as shown in Figure 9. The element in row i and column j of the confusion matrix is the percentage of images of the i-th cloud class that are recognized as the j-th cloud class. The proposed method performs best on ‘Clear sky’. ‘Cirrocumulus and altocumulus’ tends to be misclassified as ‘Cirrus and cirrostratus’, and ‘Cumulonimbus and nimbostratus’ tends to be misclassified as ‘Stratocumulus’. The rate at which ‘Cumulus’ is misclassified as ‘Clear sky’ is relatively high, for the following reason. Some ‘Cumulus’ images in the IAP database contain only a few cumulus clouds, with cloudiness just above 10% (e.g., 15%), while some ‘Clear sky’ images in the MOC database include not only cloudless skies but also skies with cloudiness below 10%. Hence, some images of the two classes look similar and are easily confused.
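The row-normalized confusion matrix described above can be computed as follows (a NumPy sketch assuming integer class labels 0 to n_classes - 1; the function name is illustrative):

```python
import numpy as np

def confusion_percent(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix in percent.

    Entry (i, j) is the percentage of class-i test images predicted as class j,
    so each non-empty row sums to 100; rows for absent classes stay zero.
    """
    C = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        C[t, p] += 1
    row = C.sum(axis=1, keepdims=True)
    return 100.0 * np.divide(C, row, out=np.zeros_like(C), where=row > 0)

print(confusion_percent([0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 1], 2))
```

Here three of the four class-0 images are correct, so the first row is 75% and 25%, and the second row is 0% and 100%.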

3.4. Effect of CSM

In this section, all experiments use cloud images after IGC and FN. First, we concatenated TLBP, TLTP and TCLBP to form a feature vector for each cloud image; Table 6 shows the classification accuracies with different metrics. Compared with TCLBP + DML, the classification accuracies increase by over 7% in all situations. Second, we took fixed-size

RGB cloud images, with all three (red, green and blue) bands, as the input to the CNNs, and used the convolutional activation maps of the eighth convolutional layer, which has 256 kernels of size

. Then, we concatenated TLBP, TLTP and TCLBP with CSM. The experimental results are shown in Table 7. With CSM, the classification accuracies increase by about a further 15% in all cases.

3.5. Comparison to the State-Of-The-Art Methods

We compared the proposed TCLBP + DML with two state-of-the-art methods, bag of words (BoW) [45] and multiple random projections (MRP) [46]. For BoW, we first extracted patch features from each cloud image. Each patch feature is an 81-dimensional vector formed by stretching the 9 × 9 neighborhood around each pixel. All patch vectors were normalized using Weber’s law [47]. Then, we applied K-means clustering [48] to the patch vectors to learn a dictionary. The dictionary size for each class was set to 300, which results in a 2100-dimensional vector for each cloud image. Finally, the feature vectors of all cloud images were fed into a support vector machine (SVM) classifier with a radial basis function (RBF) kernel for classification. MRP is a patch-based method. We selected a patch size of

, and followed the procedure in [46]. For a fair comparison, all three methods used the same experimental setting as described in Section 3.1.
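The BoW baseline can be sketched with NumPy as below. This is an illustrative reimplementation under stated assumptions, not the code used in the experiments. The Weber's-law step uses the common contrast-normalization form F ← F · log(1 + ‖F‖/0.03)/‖F‖, where the constant 0.03 is an assumption, and the K-means is a plain Lloyd iteration. The per-class dictionaries (300 words each in the paper) would be stacked into one 2100-word dictionary before encoding. The RBF-kernel SVM stage is omitted; scikit-learn's `sklearn.svm.SVC(kernel="rbf")` is one readily available option.

```python
import numpy as np

def extract_patches(img, size=9):
    """Stretch the size x size neighborhood of every interior pixel into a vector."""
    H, W = img.shape
    r = size // 2
    return np.array([img[i - r:i + r + 1, j - r:j + r + 1].ravel()
                     for i in range(r, H - r) for j in range(r, W - r)], dtype=float)

def weber_normalize(P, k=0.03):
    """Contrast normalization F <- F * log(1 + ||F|| / k) / ||F|| (k assumed)."""
    n = np.linalg.norm(P, axis=1, keepdims=True)
    return P * (np.log1p(n / k) / np.where(n > 0, n, 1.0))

def kmeans(X, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns the k cluster centers."""
    rng = np.random.default_rng(seed)
    C = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(iters):
        labels = np.argmin(((X[:, None] - C[None]) ** 2).sum(-1), axis=1)
        for c in range(k):
            if np.any(labels == c):          # leave empty clusters unchanged
                C[c] = X[labels == c].mean(axis=0)
    return C

def bow_histogram(P, dictionary):
    """Assign each patch to its nearest word and return a normalized histogram."""
    words = np.argmin(((P[:, None] - dictionary[None]) ** 2).sum(-1), axis=1)
    h = np.bincount(words, minlength=len(dictionary)).astype(float)
    return h / h.sum()

rng = np.random.default_rng(1)
img = rng.integers(0, 256, size=(20, 20)).astype(float)
P = weber_normalize(extract_patches(img))    # (144, 81)
D = kmeans(P, k=5)                           # toy dictionary
h = bow_histogram(P, D)
print(P.shape, h.shape)                      # (144, 81) (5,)
```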

The experimental results are listed in Table 8. The sixth column shows the results of TCLBP + DML with IGC, and the seventh column shows the results of TCLBP + DML with both IGC and FN. Clearly, BoW and MRP do not adapt well to cross-domain ground-based cloud classification. BoW and MRP are learning-based methods that encode cloud images with a learned dictionary, but they take raw pixel intensities as features, which are not robust to local transformations. In contrast, we use a stable feature representation to address domain shift, and we learn a discriminative metric that takes both sample pairs and the relationship among cloud classes into account.

3.6. Discussion of the Proposed Method

Using the basic results, we analyzed three aspects of the proposed method: the role of max pooling, the influence of the projected feature space dimension, and the role of the fraction of cloud images from the target domain. We take CLBP and TCLBP as examples.

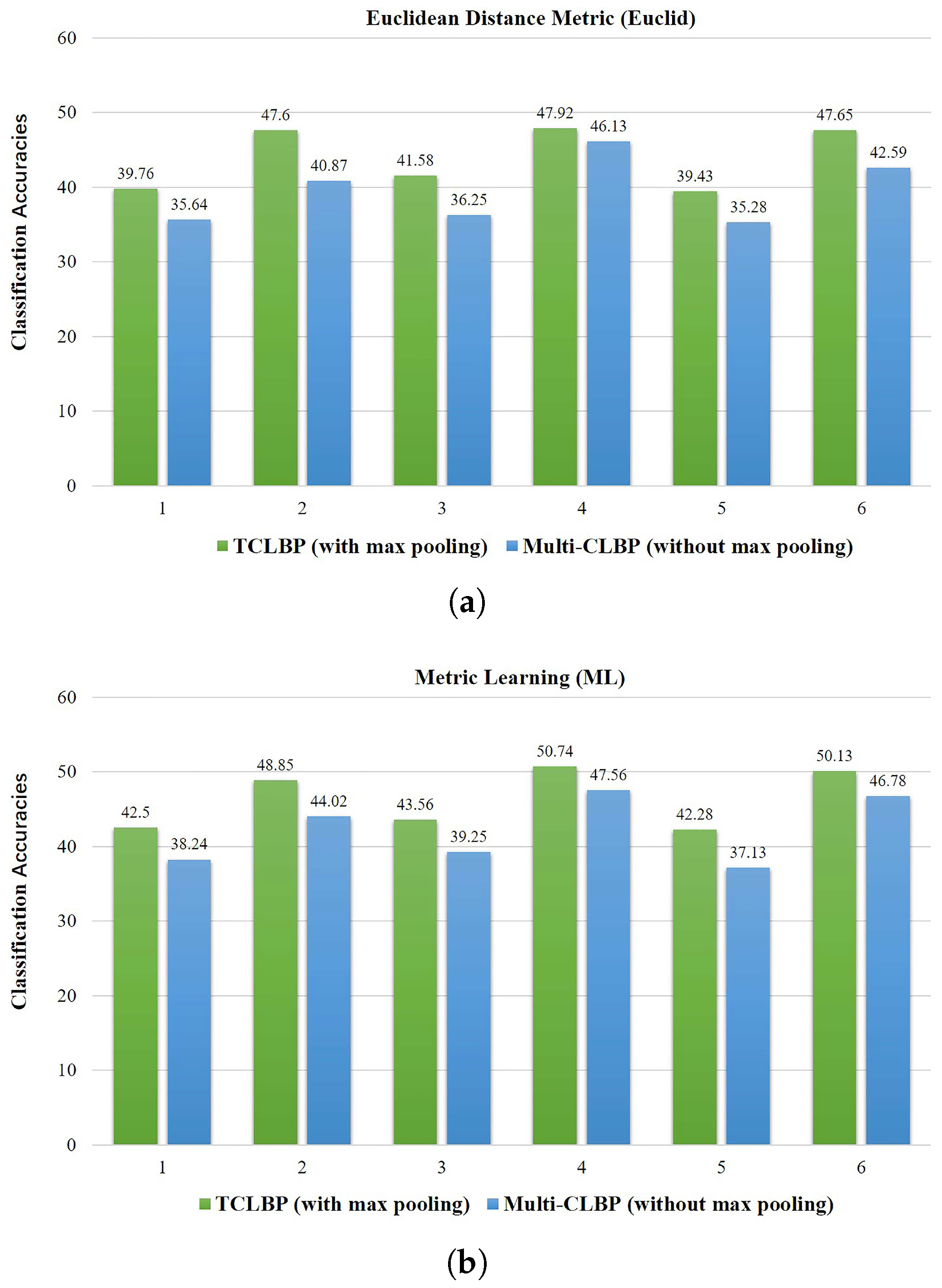

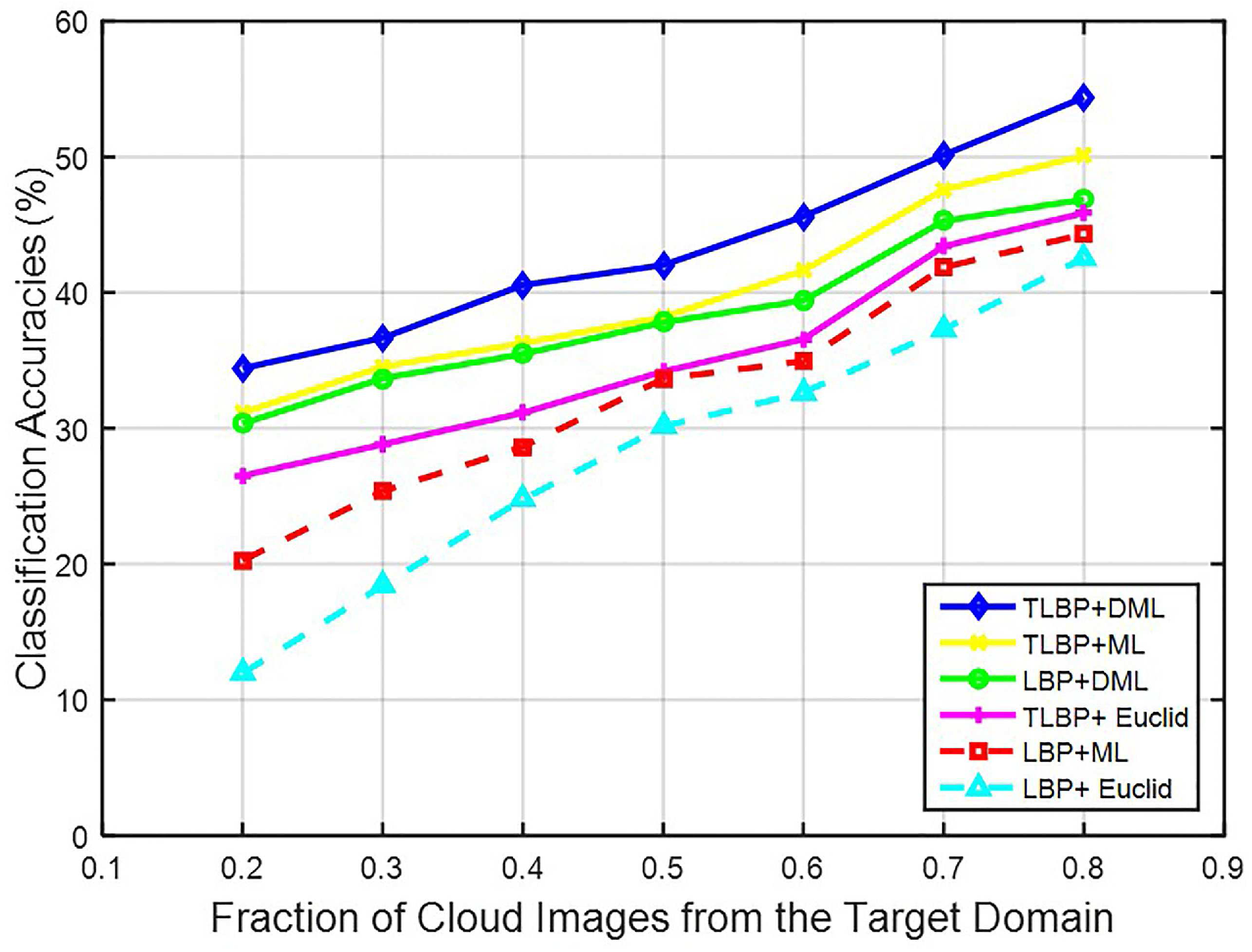

3.6.1. Role of Max Pooling

Cross-domain ground-based cloud classification is strongly affected by changes in image resolution, illumination and occlusion, which should be addressed in both feature representation and similarity measurement. Applying max pooling in TLF is an effective strategy to overcome these changes. For a fair comparison, we partitioned a cloud image into

regions with different scales

l = 1, 2, 3, and utilized a subwindow with the size of

to densely sample overlapping local patches with a step of 5 pixels. We extracted the CLBP feature from each patch and aggregated all features in each region using average pooling, which preserves the average response of each histogram bin over all histograms. Each cloud image is thus also represented as a 2268-dimensional feature vector, which we call multi-CLBP. Comparing multi-CLBP (without max pooling) and TCLBP under different metrics shows that max pooling does improve cross-domain ground-based cloud classification, as illustrated in Figure 10: with max pooling, the classification results improve by about 5% in all situations and with all metrics.
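A NumPy sketch of the region pooling in TLF and of the average-pooling variant (multi-CLBP) used as the baseline here. It takes already-computed patch descriptors and their patch centers. The l × l partition at each scale, the zero vector for empty regions, and the function name `tlf_pool` are our assumptions.

```python
import numpy as np

def tlf_pool(patch_feats, centers, img_shape, levels=(1, 2, 3), mode="max"):
    """Pool densely sampled patch features over an l x l grid at each level.

    patch_feats: (n, d) descriptors (e.g. CLBP histograms), one per patch.
    centers: (n, 2) integer row/col patch centers.
    Returns the concatenation of sum(l * l) pooled d-dimensional vectors.
    """
    H, W = img_shape
    pooled = []
    for l in levels:
        rows = np.minimum((centers[:, 0] * l) // H, l - 1)
        cols = np.minimum((centers[:, 1] * l) // W, l - 1)
        for r in range(l):
            for c in range(l):
                mask = (rows == r) & (cols == c)
                if not mask.any():                         # empty region: zeros
                    pooled.append(np.zeros(patch_feats.shape[1]))
                elif mode == "max":                        # TLF: maximum response
                    pooled.append(patch_feats[mask].max(axis=0))
                else:                                      # average-pooling baseline
                    pooled.append(patch_feats[mask].mean(axis=0))
    return np.concatenate(pooled)

rng = np.random.default_rng(0)
height = width = 100
ys, xs = np.meshgrid(np.arange(8, height - 8, 5), np.arange(8, width - 8, 5), indexing="ij")
centers = np.stack([ys.ravel(), xs.ravel()], axis=1)       # sampling step of 5 pixels
feats = rng.random((len(centers), 162))                    # stand-in CLBP histograms
print(tlf_pool(feats, centers, (height, width)).shape)     # (2268,)
```

Passing `mode="mean"` gives the average-pooling variant; with 162-dimensional CLBP histograms and 14 regions, both variants produce 2268-dimensional vectors, which matches the dimension quoted for TCLBP.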

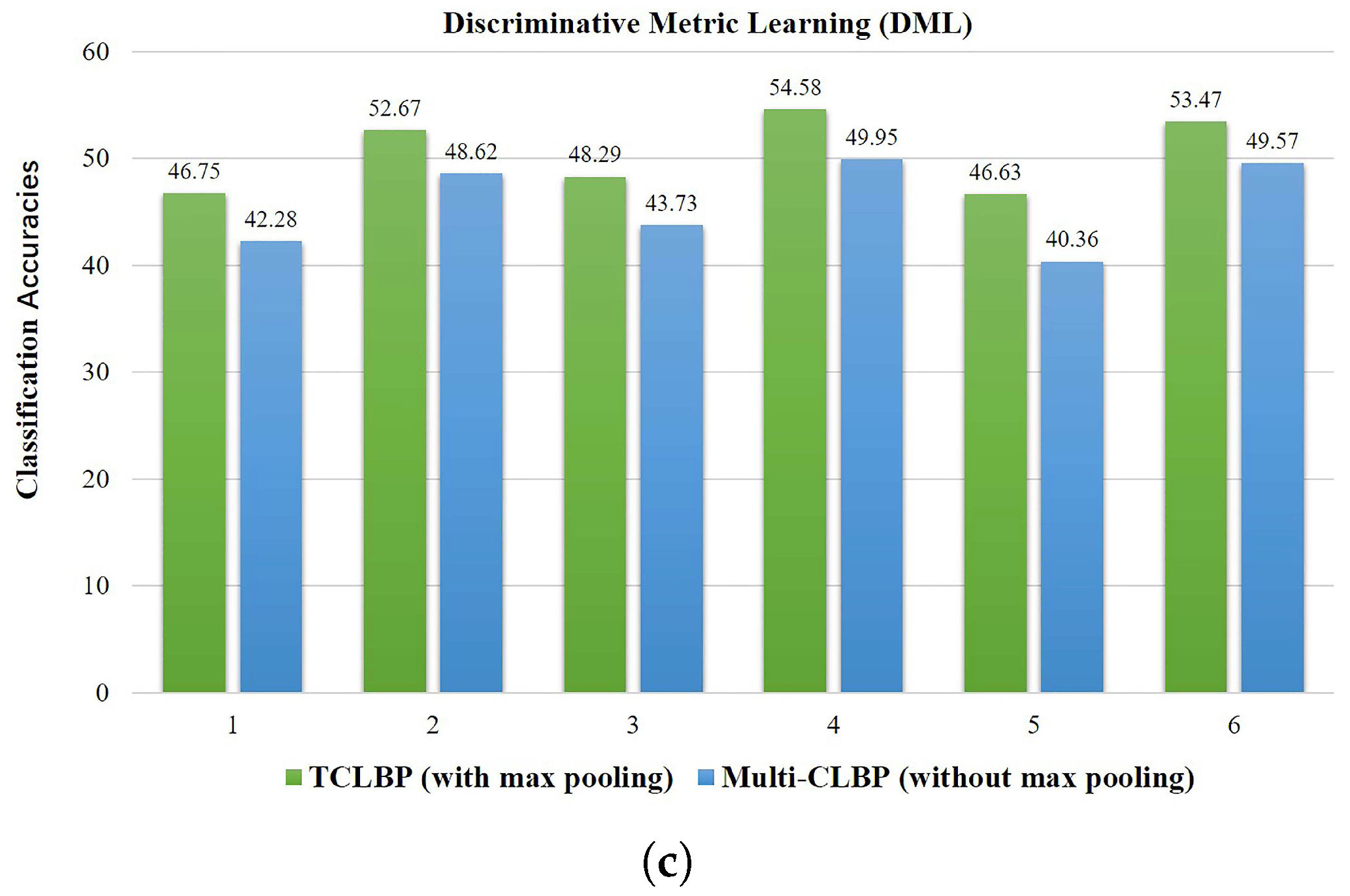

3.6.2. Influence of Parameter Variances

For the proposed DML, the dimension of the projected space influences performance. In other words, the parameter m in Equation (24) controls the dimension of W and thus affects classification accuracy. This influence is shown in Figure 11, obtained from experiments on the IAP to MOC domain shift. Performance roughly increases with the dimension up to 200 and decreases beyond it; the best result is obtained with m equal to 200.

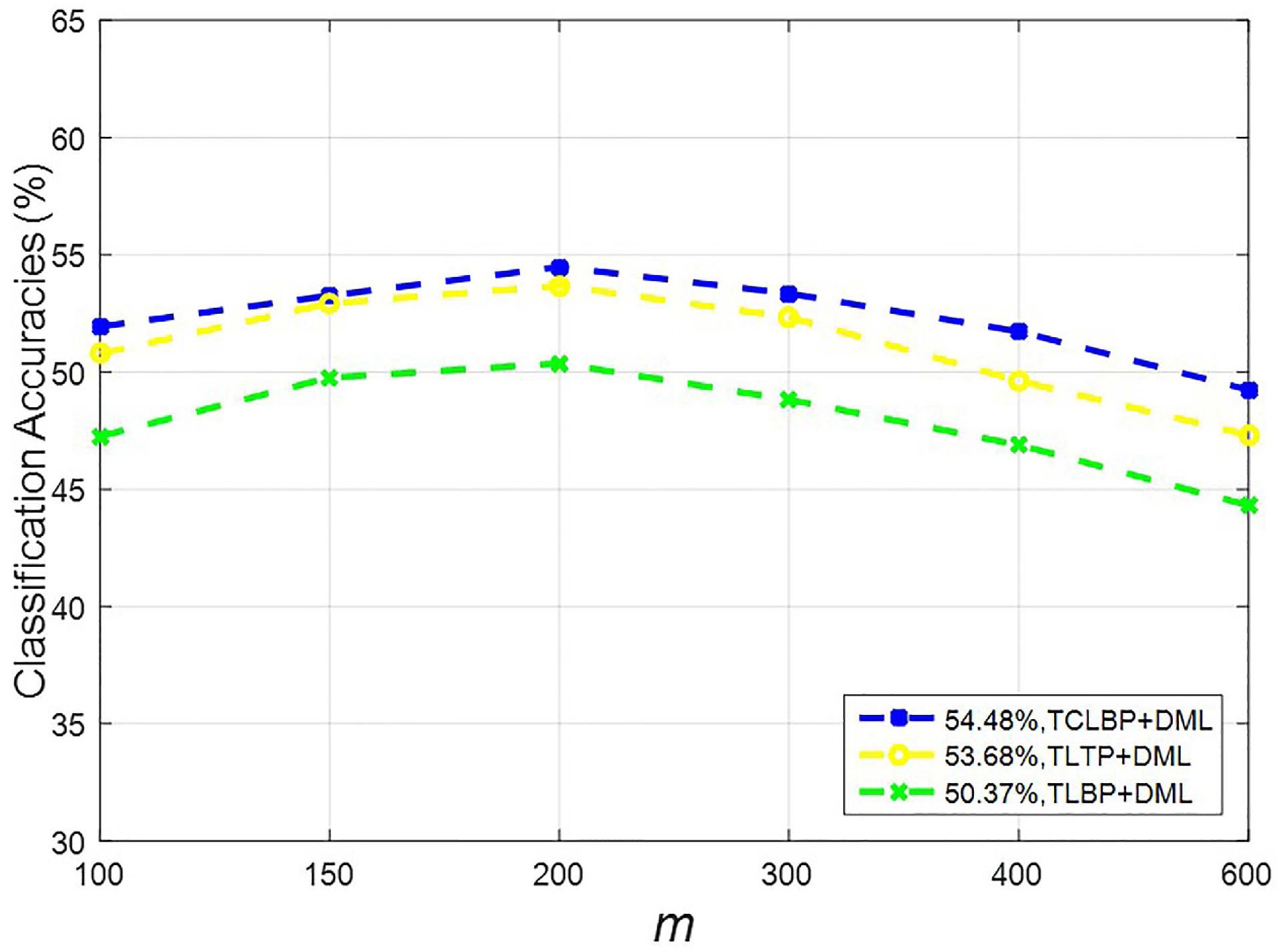

3.6.3. Role of the Fraction of Cloud Images from the Target Domain

We varied the fraction of cloud images taken from each target-domain class from 20% to 80% in increments of 20%. The training images consisted of two parts: all cloud images from the source domain and a fraction of the cloud images from the target domain. We take the MOC to CAMS domain shift as an example. The average recognition accuracies for different fractions are shown in Figure 12. The classification performance of the compared methods drops significantly as the number of target-domain training images decreases. In contrast, our method outperforms the other approaches and maintains tolerable accuracy, owing to its stable feature representation and discriminative metric.
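The evaluation protocol can be sketched as follows: all source-domain images go to training, a class-wise random fraction of the target domain is added to training, the rest of the target domain is used for testing, and accuracies are averaged over 10 random splits. The `evaluate` callback is hypothetical; it stands for training the classifier on the given indices and returning the test accuracy.

```python
import numpy as np

def cross_domain_split(tgt_y, frac=0.5, seed=0):
    """Split target-domain indices class by class into train and test parts.

    Every source-domain image is always used for training, so only the
    target domain is split here: `frac` of each class goes to training.
    """
    rng = np.random.default_rng(seed)
    train_tgt, test_tgt = [], []
    for c in np.unique(tgt_y):
        idx = rng.permutation(np.flatnonzero(tgt_y == c))
        k = int(round(frac * len(idx)))
        train_tgt.extend(idx[:k])
        test_tgt.extend(idx[k:])
    return np.array(train_tgt), np.array(test_tgt)

def mean_accuracy(evaluate, tgt_y, frac, runs=10):
    """Average evaluate(train_idx, test_idx) over independent random splits."""
    return float(np.mean([evaluate(*cross_domain_split(tgt_y, frac, seed=s))
                          for s in range(runs)]))
```

Setting `frac` to 0.2, 0.4, 0.6 or 0.8 corresponds to the sweep in this section, and `frac=0.5` to the default setting of Section 3.1.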