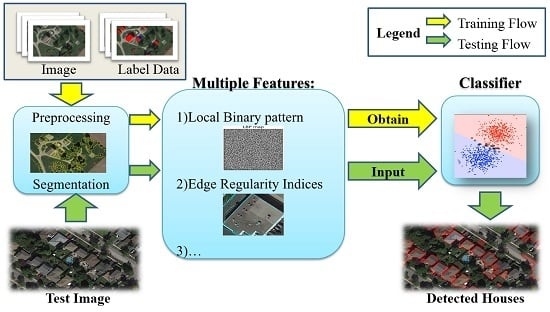

Feature descriptors strongly affect the recognition of ground objects. Good features should remain unambiguous under changes in viewing angle, illumination, and scale. Many types of features have been developed for classification and object detection, including color, texture, and geometric features. In this section, we present the feature descriptors used in our work, together with some implementation details.

3.4.1. Color Descriptors

Color is one of the most common features used to characterize image regions. The images used in our experiments contain only three channels, so objects can be distinguished only by their red, green, and blue values. In general, the color feature of a region is represented by the mean color of all the pixels inside it. Because color varies within a region, however, the mean alone cannot fully capture its color characteristics. To measure the color distribution within an image region, we therefore describe the color feature with color moments. In our experiments, the first- and second-order moments are used, defined as follows:

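$$\mu_i = \frac{1}{N}\sum_{j=1}^{N} p_{ij}, \qquad \sigma_i = \left(\frac{1}{N}\sum_{j=1}^{N}\left(p_{ij}-\mu_i\right)^{2}\right)^{1/2},$$

where $p_{ij}$ is the value of the $j$-th pixel of the object in the $i$-th color channel; $N$ is the total number of pixels; $\mu_i$ is the mean value (first-order moment) in the $i$-th channel; and $\sigma_i$ is the standard deviation (second-order moment).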

In our work, color moments are computed in both the RGB and the HSV (hue, saturation, and value) color spaces. For each object, two values, the mean $\mu_i$ and the standard deviation $\sigma_i$, are computed in each color channel. Each color space therefore yields a 6-dimensional feature vector consisting of the three channel means and the three channel standard deviations, giving one RGB descriptor and one HSV descriptor.
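A minimal sketch of the color-moment computation, assuming each region is given as a list of 8-bit (R, G, B) tuples. HSV values come from Python's standard `colorsys` module, which expects and returns channels in [0, 1]; the helper names and the output ordering (three means, then three standard deviations) are assumptions for illustration. Hue is an angle, so its plain mean ignores wrap-around near red; this sketch does not correct for that.

```python
import colorsys
import math


def color_moments(pixels):
    """First- and second-order color moments of one region.

    pixels: list of 3-tuples (one value per channel).
    Returns [mu_1, mu_2, mu_3, sigma_1, sigma_2, sigma_3] (ordering is an assumption).
    """
    n = len(pixels)
    means = [sum(p[i] for p in pixels) / n for i in range(3)]
    # Population standard deviation (divide by N), matching the 1/N in the formula.
    stds = [math.sqrt(sum((p[i] - means[i]) ** 2 for p in pixels) / n)
            for i in range(3)]
    return means + stds


def region_color_features(rgb_pixels):
    """Return (RGB moments, HSV moments) for the 8-bit RGB pixels of one region."""
    # Scale to [0, 1], the range colorsys.rgb_to_hsv expects.
    rgb = [tuple(c / 255.0 for c in p) for p in rgb_pixels]
    hsv = [colorsys.rgb_to_hsv(*p) for p in rgb]
    return color_moments(rgb), color_moments(hsv)


if __name__ == "__main__":
    f_rgb, f_hsv = region_color_features([(0, 0, 0), (255, 255, 255)])
    print(f_rgb)  # [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
    print(f_hsv)  # [0.0, 0.0, 0.5, 0.0, 0.0, 0.5]
```

Concatenating the two returned lists would give the two 6-dimensional color descriptors used later in the hybrid descriptor.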

3.4.2. Texture Descriptors

Local binary patterns (LBP) were first described by Ojala et al. [46] and have proven to be a powerful feature for texture classification. Owing to its discriminative power and computational simplicity, LBP has been regarded as a unifying approach to the traditional statistical and structural models of texture analysis. The original LBP operates as illustrated in Figure 4a. Each pixel value is compared with those of its neighbors: neighbors with values greater than that of the central pixel are assigned bit 1, and neighbors with smaller or equal values are assigned bit 0. Concatenating the bits of all neighbors in clockwise order yields a binary string, which is then converted to a decimal number, the LBP code of the central pixel. Processing all pixels in this way produces an LBP map, and the histogram of the LBP map can be used as a descriptor of the original image.
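The original 3 × 3 LBP described above can be sketched in a few lines of plain Python, assuming a grayscale image stored as a list of rows. The clockwise order starts at the top-left neighbor, which is an assumption since the text only specifies "clockwise"; the bit rule uses the strict "greater than" comparison described above.

```python
# Neighbor offsets (row, col) in clockwise order, starting at the top-left
# neighbor (the starting neighbor is an assumption).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """Original 3x3 LBP code of pixel (r, c): bit 1 if a neighbor > center."""
    center = img[r][c]
    code = 0
    for dr, dc in OFFSETS:
        bit = 1 if img[r + dr][c + dc] > center else 0
        code = (code << 1) | bit  # the first neighbor ends up as the most significant bit
    return code


def lbp_map(img):
    """LBP codes of all interior pixels (border pixels lack a full 3x3 neighborhood)."""
    h, w = len(img), len(img[0])
    return [[lbp_code(img, r, c) for c in range(1, w - 1)] for r in range(1, h - 1)]


def lbp_histogram(img):
    """256-bin histogram of the LBP map, usable as a texture descriptor."""
    hist = [0] * 256
    for row in lbp_map(img):
        for code in row:
            hist[code] += 1
    return hist


if __name__ == "__main__":
    patch = [[5, 9, 1],
             [4, 6, 7],
             [2, 3, 8]]
    print(lbp_map(patch))  # [[88]]  (bits 0, 1, 0, 1, 1, 0, 0, 0)
```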

Such an LBP defined on a 3 × 3 neighborhood does not discriminate well; therefore, a rotation-invariant uniform LBP, denoted as $LBP_{P,R}^{riu2}$, was developed [47]. $LBP_{P,R}^{riu2}$ is calculated on points resampled along $P$ symmetric directions on a circle of radius $R$ around the central pixel. Circularly right-shifting the $P$-bit LBP code yields different values, of which the minimum is selected as the final LBP code; this makes the code independent of which neighbor comes first, and hence of image rotation. In Figure 4b, the rotation-invariant uniform LBP map (middle) is computed from an image of a tree canopy (left) for fixed values of $P$ and $R$, and the corresponding histogram of the LBP map is shown on the right.
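A minimal sketch of $LBP_{P,R}^{riu2}$, assuming the standard definition of Ojala et al.: a pattern is uniform if its circular bit string has at most two 0/1 transitions; uniform patterns are labeled by their number of 1 bits, and all other patterns share the label $P + 1$, giving $P + 2$ bins. Circle points are bilinearly interpolated. The helper names, the sampling start angle, and skipping pixels closer than $\lceil R \rceil$ to the border are assumptions. The bit rule keeps the ">" comparison described in the text (Ojala et al. use "≥"). `rotation_invariant_min` isolates the minimum-over-rotations step on its own.

```python
import math


def sample_circle(img, r, c, P, R):
    """Gray values at P points evenly spaced on a circle of radius R around (r, c)."""
    h, w = len(img), len(img[0])
    values = []
    for p in range(P):
        angle = 2.0 * math.pi * p / P
        y = r - R * math.sin(angle)  # rows grow downward
        x = c + R * math.cos(angle)
        # Clamp so tiny floating-point errors never index outside the image.
        y0 = min(max(math.floor(y), 0), h - 1)
        x0 = min(max(math.floor(x), 0), w - 1)
        y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
        dy, dx = y - y0, x - x0
        # Bilinear interpolation between the four surrounding pixels.
        values.append((1 - dy) * (1 - dx) * img[y0][x0] + (1 - dy) * dx * img[y0][x1]
                      + dy * (1 - dx) * img[y1][x0] + dy * dx * img[y1][x1])
    return values


def rotation_invariant_min(code, P):
    """Minimum over all P circular right shifts of a P-bit code."""
    mask = (1 << P) - 1
    best = code
    for _ in range(P - 1):
        code = ((code >> 1) | ((code & 1) << (P - 1))) & mask
        best = min(best, code)
    return best


def riu2_label(values, center):
    """Number of 1 bits for uniform patterns (<= 2 transitions), else P + 1."""
    bits = [1 if v > center else 0 for v in values]
    P = len(bits)
    transitions = sum(bits[k] != bits[(k + 1) % P] for k in range(P))
    return sum(bits) if transitions <= 2 else P + 1


def riu2_histogram(img, P=8, R=1.0):
    """(P + 2)-bin histogram of LBP^riu2_{P,R} over pixels at least ceil(R) from the border."""
    m = math.ceil(R)
    h, w = len(img), len(img[0])
    hist = [0] * (P + 2)
    for r in range(m, h - m):
        for c in range(m, w - m):
            hist[riu2_label(sample_circle(img, r, c, P, R), img[r][c])] += 1
    return hist
```

For example, `rotation_invariant_min(0b11000000, 8)` returns 3, since every rotation of the two adjacent 1 bits is at least `0b00000011`.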

3.4.4. Zernike Moments

Moments are a typical kind of region-based shape descriptor widely used in object recognition. Among the commonly used region-based descriptors, Zernike moments are an excellent set of shape descriptors based on the theory of orthogonal polynomials [48]. The two-dimensional Zernike moment of order $p$ with repetition $q$ for an image function $f(\rho, \theta)$ in polar coordinates is defined as follows:

$$Z_{pq} = \frac{p+1}{\pi}\int_{0}^{2\pi}\int_{0}^{1} f(\rho,\theta)\, V_{pq}^{*}(\rho,\theta)\, \rho \, d\rho \, d\theta,$$

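where $V_{pq}(\rho,\theta)$ is a Zernike polynomial; these polynomials form a complete orthogonal set over the interior of the unit disc $x^2 + y^2 \le 1$. $V_{pq}^{*}(\rho,\theta)$ is the complex conjugate of $V_{pq}(\rho,\theta)$. In polar coordinates, $V_{pq}(\rho,\theta)$ is expressed as follows:

$$V_{pq}(\rho,\theta) = R_{pq}(\rho)\, e^{jq\theta},$$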

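where $j = \sqrt{-1}$; $p$ is a non-negative integer; $q$ is an integer subject to the constraints that $p - |q|$ is even and $|q| \le p$; $\rho = \sqrt{x^2 + y^2}$ is the length of the vector from the pixel $(x, y)$ to the origin $(0, 0)$; and $\theta$ is the angle between this vector and the $x$ axis, measured counterclockwise. $R_{pq}(\rho)$ is the Zernike radial polynomial defined as follows:

$$R_{pq}(\rho) = \sum_{s=0}^{(p-|q|)/2} (-1)^{s}\, \frac{(p-s)!}{s!\left(\frac{p+|q|}{2}-s\right)!\left(\frac{p-|q|}{2}-s\right)!}\, \rho^{\,p-2s}.$$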

The magnitudes of the Zernike moments are rotation invariant, but the moments are not invariant to scale or translation. To achieve scale and translation invariance, the regular moments, shown as follows, are used:

$$m_{pq} = \sum_{x}\sum_{y} x^{p}\, y^{q}\, f(x, y).$$

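Translation invariance is achieved by transforming the original binary image $f(x, y)$ into a new image $f(x + \bar{x}, y + \bar{y})$, where $(\bar{x}, \bar{y})$ is the center location of the original image computed by Equation (13), with $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$, and $m_{00}$ is the mass (or area) of the image. Scale invariance is accomplished by normalizing the original image into a unit disk, which can be done using $f(x/a, y/a)$ with $a = \sqrt{\beta / m_{00}}$, where $\beta$ is a predetermined value of the normalized area. Combining the two steps, the original image is transformed by Equation (14) before the Zernike moments are computed:

$$g(x, y) = f\left(\frac{x}{a} + \bar{x},\ \frac{y}{a} + \bar{y}\right). \tag{14}$$

After this transformation, the magnitudes of the moments computed on the image $g(x, y)$ are invariant to scale, translation, and rotation.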
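The normalized Zernike descriptor can be sketched in plain Python, assuming a binary region mask given as a list of rows. The centroid comes from the regular moments, the area is rescaled to a fixed value $\beta$ through $a = \sqrt{\beta / m_{00}}$, and the integral is approximated by a sum over pixels that fall inside the unit disk. $\beta = 0.5$ is a hypothetical choice, as is using orders $p \le 8$ with $q \ge 0$, which happens to produce exactly 25 magnitudes, the dimension given in Section 3.4.7. Because image rows grow downward, angles are measured clockwise here; this only conjugates $Z_{pq}$ and leaves $|Z_{pq}|$ unchanged.

```python
import cmath
import math


def radial_poly(p, q, rho):
    """Zernike radial polynomial R_pq(rho) for p >= |q| with p - |q| even."""
    q = abs(q)
    total = 0.0
    for s in range((p - q) // 2 + 1):
        num = (-1) ** s * math.factorial(p - s)
        den = (math.factorial(s) * math.factorial((p + q) // 2 - s)
               * math.factorial((p - q) // 2 - s))
        total += num / den * rho ** (p - 2 * s)
    return total


def zernike_moment(mask, p, q, beta=0.5):
    """Discrete Z_pq of a binary mask after centroid and area normalization.

    beta: hypothetical normalized area; the value used in the paper is not given.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = len(pts)                        # mass (area) of the binary image
    x_bar = sum(x for x, _ in pts) / m00  # m10 / m00
    y_bar = sum(y for _, y in pts) / m00  # m01 / m00
    a = math.sqrt(beta / m00)             # after scaling, the area equals beta
    z = 0j
    for x, y in pts:
        # Pixel (x, y) of f lands at (a(x - x_bar), a(y - y_bar)) in g.
        u, v = a * (x - x_bar), a * (y - y_bar)
        rho = math.hypot(u, v)
        if rho > 1.0:  # only the interior of the unit disk contributes
            continue
        theta = math.atan2(v, u)
        z += radial_poly(p, q, rho) * cmath.exp(-1j * q * theta)  # f * conj(V_pq)
    # a * a is the area of one pixel in normalized coordinates.
    return (p + 1) / math.pi * z * a * a


def zernike_descriptor(mask, max_order=8, beta=0.5):
    """|Z_pq| for 0 <= q <= p <= max_order with p - q even (25 values for order 8)."""
    return [abs(zernike_moment(mask, p, q, beta))
            for p in range(max_order + 1) for q in range(p % 2, p + 1, 2)]
```

With this normalization, $|Z_{00}| = \beta / \pi$ for any shape that fits inside the unit disk, because the rescaled area always equals $\beta$.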

3.4.5. Edge Regularity Indices

Compared with other objects, anthropogenic objects such as houses, roads, parking lots, and airports are more likely to be surrounded by parallel and perpendicular lines. To measure how strongly these lines are perpendicular or parallel to each other, we developed a group of indices, called edge regularity indices (ERI), that describe the spatial relations between the lines. The procedure consists of two steps.

(1) Local Line Segment Detection

The first step is local line segment detection, in which line segments are extracted within each candidate region. The Hough transform (HT) is the most common method for detecting line segments. Unlike previous methods, which apply the HT to the whole image, we apply it only to each local region. A local region is defined by the minimum bounding rectangle (MBR) of each segmented region.

Figure 5b shows examples of the MBRs of the candidate regions in Figure 5a. Before the local HT, a binary edge image is computed with the Canny edge detector (Figure 5c). Two parameters of the local HT are set: a minimum segment length of 15 pixels and a maximum fill gap of 2 pixels. The first means that only line segments longer than 15 pixels are extracted; the second means that a gap of 2 pixels or less between two line segments on the same line is filled, so that the two segments are connected. One of the local line segment detection results is shown in Figure 5d; line segments along the house boundaries and on the roofs are detected and delineated in cyan.
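The local HT can be sketched as follows, assuming the Canny edge pixels of one MBR are already available as (x, y) tuples. `hough_peak` votes in a (ρ, θ) accumulator with 1-pixel and 1° bins and returns only the strongest line; `segments_on_line` then applies the two parameters described above. Both helper names, the bin sizes, and the definition of a gap as the number of missing pixels between consecutive on-line pixels are assumptions; a fuller implementation would examine several peaks with non-maximum suppression.

```python
import math
from collections import defaultdict


def hough_peak(edge_pixels, theta_step=1):
    """(rho, theta_deg) bin with the most votes; rho is rounded to whole pixels."""
    votes = defaultdict(int)
    for x, y in edge_pixels:
        for theta in range(0, 180, theta_step):
            t = math.radians(theta)
            votes[(round(x * math.cos(t) + y * math.sin(t)), theta)] += 1
    return max(votes, key=votes.get)


def segments_on_line(edge_pixels, rho, theta, fill_gap=2, min_length=15):
    """Split the edge pixels of one Hough line into segments.

    Gaps of at most `fill_gap` missing pixels are bridged, and only segments
    longer than `min_length` pixels are kept.
    """
    t = math.radians(theta)
    c, s = math.cos(t), math.sin(t)
    on_line = [(x, y) for x, y in edge_pixels if abs(x * c + y * s - rho) <= 0.5]
    if not on_line:
        return []
    # Order pixels by their position along the line direction (-sin t, cos t).
    on_line.sort(key=lambda p: -p[0] * s + p[1] * c)
    pos = [-x * s + y * c for x, y in on_line]
    groups, current = [], [on_line[0]]
    for k in range(1, len(on_line)):
        # Too many missing pixels (small tolerance for rounding): start a new segment.
        if pos[k] - pos[k - 1] - 1 > fill_gap + 1e-9:
            groups.append(current)
            current = []
        current.append(on_line[k])
    groups.append(current)
    segments = []
    for g in groups:
        (x0, y0), (x1, y1) = g[0], g[-1]
        if math.hypot(x1 - x0, y1 - y0) > min_length:
            segments.append(tuple(sorted((g[0], g[-1]))))
    return segments
```

For a horizontal run of edge pixels at y = 5 with two missing pixels in the middle, the two pieces are merged into one segment; with three missing pixels they are split, and the shorter piece is dropped by the length test.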

(2) Calculation of Indices

The second step is to calculate the ERI values. Two scalar values, the perpendicularity index and the parallelity index, measure the degree to which the line segments are perpendicular or parallel to each other, and three statistical values describe the statistical characteristics of the line segments.

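(a) The perpendicularity index, $I_{\perp}$, describes how strongly the line segments are perpendicular to each other. Take one pair of line segments as an example (Figure 6a). The angle between the two line segments is defined as follows (only the acute angle between them is considered):

$$\theta_{ij} = \arccos\frac{\left|\mathbf{l}_i \cdot \mathbf{l}_j\right|}{\left\|\mathbf{l}_i\right\| \left\|\mathbf{l}_j\right\|}, \qquad 0^\circ \le \theta_{ij} \le 90^\circ,$$

where $\mathbf{l}_i$ and $\mathbf{l}_j$ are the $i$th and $j$th line segments, respectively, represented as vectors. For a local region with $n$ line segments, there are $N = n(n-1)/2$ such angles. If $\theta_{ij}$ is close to $90^\circ$, that is, exceeds a preset angular threshold $\theta_{\perp}$, the $i$th and $j$th line segments are considered perpendicular to each other. The number of such approximate right angles is then

$$N_{\perp} = \sum_{i<j} \mathbf{1}\left[\theta_{ij} > \theta_{\perp}\right],$$

where $\mathbf{1}[\cdot]$ equals 1 if its condition holds and 0 otherwise. The ratio of $N_{\perp}$ to $N$ is defined as the perpendicularity index of the region, $I_{\perp} = N_{\perp}/N$.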

Take the line segments in Figure 6b as an example. There are 5 line segments, and 6 pairs of them are perpendicular to each other. The total number of pairs is 5 × 4 / 2 = 10, so the perpendicularity index is 6/10 = 0.6.

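(b) The parallelity index, $I_{\parallel}$, is similar to the perpendicularity index but describes how strongly the line segments are parallel to each other. Two line segments are considered parallel only when the angle between them is less than 20 degrees. Thus, the parallelity index of a group of $n$ line segments is computed as follows:

$$I_{\parallel} = \frac{1}{N}\sum_{i<j} \mathbf{1}\left[\theta_{ij} < 20^\circ\right], \qquad N = \frac{n(n-1)}{2},$$

where $\theta_{ij}$ is the acute angle between the $i$th and $j$th line segments and $\mathbf{1}[\cdot]$ equals 1 if its condition holds and 0 otherwise. For example, Figure 6b contains 4 pairs of parallel line segments, so its parallelity index is 4/10 = 0.4.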

(c) The remaining three indices are statistics of the line segments, computed from the lengths of all the line segments, denoted as $\ell_1, \ell_2, \ldots, \ell_n$.
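The two angle-based indices can be sketched as follows, assuming each segment is given by its two endpoints ((x1, y1), (x2, y2)) and has non-zero length. The 20° parallel tolerance comes from the text; the perpendicular threshold of 70° is a hypothetical value chosen only for illustration, since the text does not state it. Returning (0.0, 0.0) for fewer than two segments is also an assumption.

```python
import math
from itertools import combinations


def acute_angle_deg(seg_a, seg_b):
    """Acute angle (0 to 90 degrees) between two segments ((x1, y1), (x2, y2))."""
    ax, ay = seg_a[1][0] - seg_a[0][0], seg_a[1][1] - seg_a[0][1]
    bx, by = seg_b[1][0] - seg_b[0][0], seg_b[1][1] - seg_b[0][1]
    cos_val = abs(ax * bx + ay * by) / (math.hypot(ax, ay) * math.hypot(bx, by))
    return math.degrees(math.acos(min(1.0, cos_val)))  # clamp guards against rounding above 1


def eri_angle_indices(segments, perp_threshold=70.0, para_threshold=20.0):
    """(perpendicularity index, parallelity index) over all n(n-1)/2 pairs.

    perp_threshold is hypothetical; para_threshold = 20 follows the text.
    """
    pairs = list(combinations(segments, 2))
    if not pairs:
        return 0.0, 0.0
    angles = [acute_angle_deg(a, b) for a, b in pairs]
    n_perp = sum(1 for t in angles if t > perp_threshold)
    n_para = sum(1 for t in angles if t < para_threshold)
    return n_perp / len(pairs), n_para / len(pairs)
```

For two horizontal segments and one vertical segment, two of the three pairs are perpendicular and one is parallel, so the function returns (2/3, 1/3); a configuration like Figure 6b, with 6 perpendicular and 4 parallel pairs among 5 segments, would give (0.6, 0.4).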

3.4.6. Shadow Line Indices

In most cases, buildings are accompanied by adjacent shadows (Figure 7b). Shadow clues therefore provide useful prior knowledge for building detection and are commonly exploited by researchers [23,49]. Unlike previous works, we do not extract buildings directly from shadow clues. Instead, we extract shadow line indices (SLI) from shadows and use them in the training process. Furthermore, we found that the edges between a building and its shadow often appear as straight lines: the more numerous and the longer the straight lines in the area adjacent to an object, the more likely the object is to be a building. A feature descriptor that captures the geometric characteristics of shadows can therefore help discriminate buildings from other objects. The SLI extraction process consists of two steps.

The first step is to detect straight line segments along the edges of the shadows in the binary mask image (the red pixels in Figure 7a). Note that these edges are not the edges of the whole image but only the boundaries of the shadows, as shown in Figure 7b.
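Restricting line detection to shadow edges can be sketched by first extracting the boundary pixels of the binary shadow mask, assuming the mask is given as a list of rows with 1 for shadow pixels. Treating a shadow pixel as a boundary pixel when any 4-neighbor is non-shadow or lies outside the image is an assumption made for this sketch; the resulting pixels would then be passed to a line detector such as the local HT used for ERI.

```python
def shadow_boundary(mask):
    """Boundary pixels (row, col) of a binary shadow mask.

    A shadow pixel is on the boundary if any of its 4-neighbors is non-shadow
    or lies outside the image, so edges of non-shadow areas are never returned.
    """
    h, w = len(mask), len(mask[0])

    def is_shadow(r, c):
        return 0 <= r < h and 0 <= c < w and bool(mask[r][c])

    return [(r, c) for r in range(h) for c in range(w)
            if mask[r][c] and not all(is_shadow(r + dr, c + dc)
                                      for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)))]
```

For a 3 × 3 shadow block, the eight outer pixels are returned and the fully enclosed center pixel is not.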

After the line segments in each region have been extracted, the SLI can be calculated from them. In this study, we select and design 5 scalar values to represent the SLI. Let $n$ denote the number of shadow line segments and $\ell_1, \ell_2, \ldots, \ell_n$ their lengths; the five SLI values are computed from these lengths. In these computations, $d$ is the diameter of a circle with the same area as the current region (i.e., $d = 2\sqrt{A/\pi}$ for a region of area $A$), and one of the indices represents the ratio between the length of the shadow lines and the region size.
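The equivalent diameter and the length-to-size ratio can be sketched as below. The exact SLI formulas are not reproduced in this text, so `shadow_line_ratio` uses a hypothetical form, the total shadow-line length divided by $d$; only the equivalent-diameter formula $d = 2\sqrt{A/\pi}$ follows directly from its definition.

```python
import math


def equivalent_diameter(area):
    """Diameter of the circle whose area equals `area`: d = 2 * sqrt(A / pi)."""
    return 2.0 * math.sqrt(area / math.pi)


def shadow_line_ratio(lengths, region_area):
    """Hypothetical length-to-size index: total shadow-line length divided by d."""
    return sum(lengths) / equivalent_diameter(region_area)
```

A region with area $25\pi$ has $d = 10$, so shadow lines of lengths 5 and 15 would give a ratio of 2.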

3.4.7. Hybrid Descriptors

As mentioned above, different features are extracted from each candidate region. The color features in the RGB and HSV color spaces form two 6-dimensional descriptors. The LBP texture descriptor uses 8 neighbors and has 8 dimensions. The Zernike moments form a 25-dimensional shape descriptor. ERI is a 5-dimensional descriptor consisting of the perpendicularity index, the parallelity index, and three length statistics. SLI is likewise a 5-dimensional descriptor. Together with other simple geometric indices, including area, eccentricity, solidity, convexity, rectangularity, circularity, and shape roughness, all these descriptors are concatenated into a long hybrid descriptor, which enhances the discrimination ability and improves the accuracy of the classification.
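The concatenation into a hybrid descriptor can be sketched as follows, assuming each component has already been computed per region. The component dimensions are those stated above; the concatenation order, the dictionary keys, and treating the seven listed geometric indices as the complete set are assumptions for illustration.

```python
# Dimensions stated in the text; the concatenation order is an assumption.
EXPECTED_DIMS = {"rgb": 6, "hsv": 6, "lbp": 8, "zernike": 25, "eri": 5, "sli": 5}
# Geometric indices listed in the text (assumed here to be the complete set).
GEOMETRIC_KEYS = ["area", "eccentricity", "solidity", "convexity",
                  "rectangularity", "circularity", "shape_roughness"]


def hybrid_descriptor(parts, geometry):
    """Concatenate per-region descriptors into one flat feature vector.

    parts: dict mapping each key of EXPECTED_DIMS to a sequence of floats.
    geometry: dict mapping each key of GEOMETRIC_KEYS to a float.
    """
    vector = []
    for name, dim in EXPECTED_DIMS.items():
        values = list(parts[name])
        if len(values) != dim:  # catch mismatched component sizes early
            raise ValueError(f"{name}: expected {dim} values, got {len(values)}")
        vector.extend(values)
    vector.extend(float(geometry[k]) for k in GEOMETRIC_KEYS)
    return vector
```

Under these assumptions the hybrid descriptor has 6 + 6 + 8 + 25 + 5 + 5 + 7 = 62 values per region.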