Mean Composite Fire Severity Metrics Computed with Google Earth Engine Offer Improved Accuracy and Expanded Mapping Potential

Abstract

1. Introduction

2. Materials and Methods

2.1. Processing in Google Earth Engine

2.2. Validation

2.3. Google Earth Engine Implementation and Code

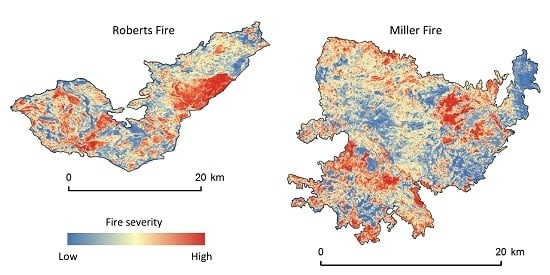

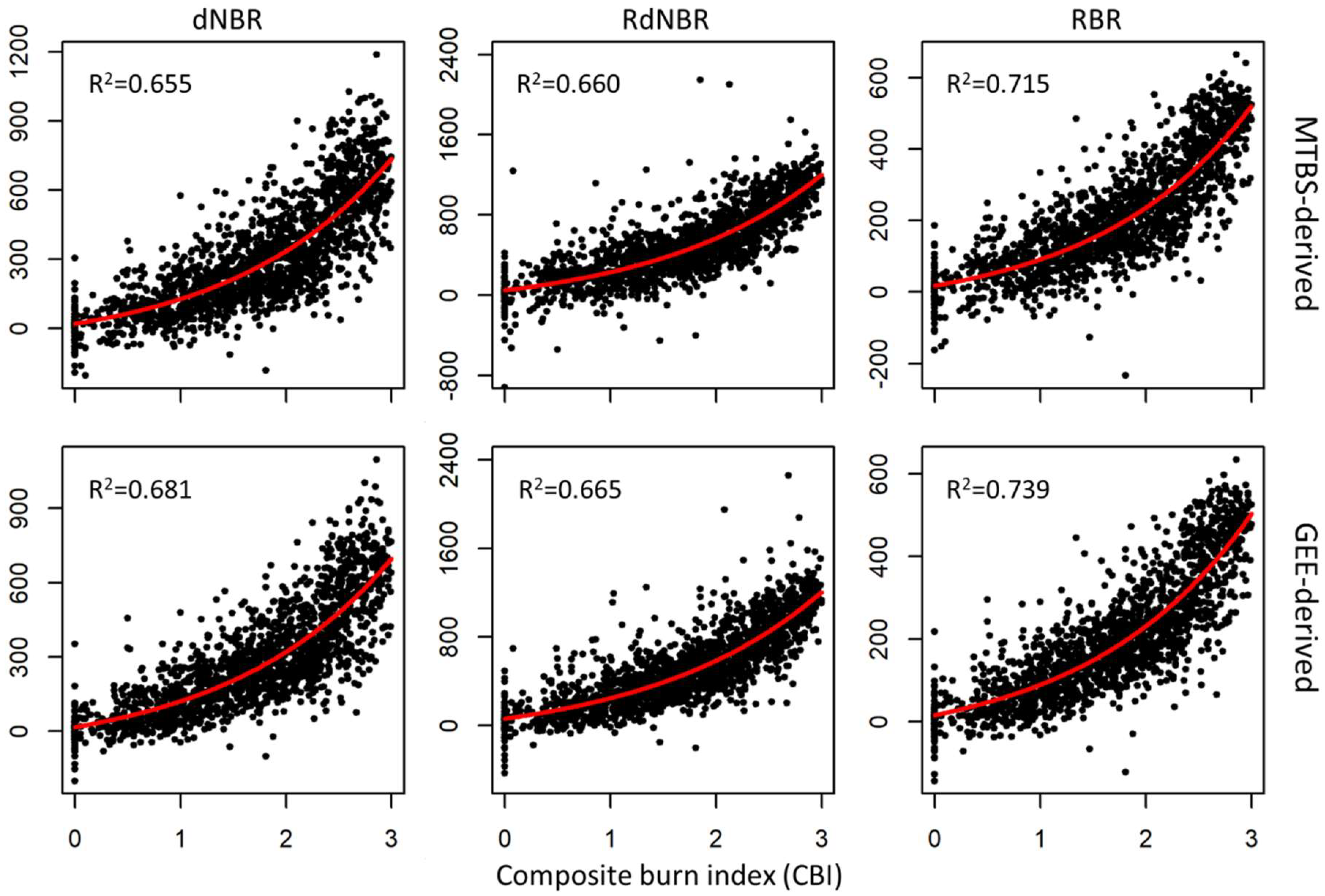

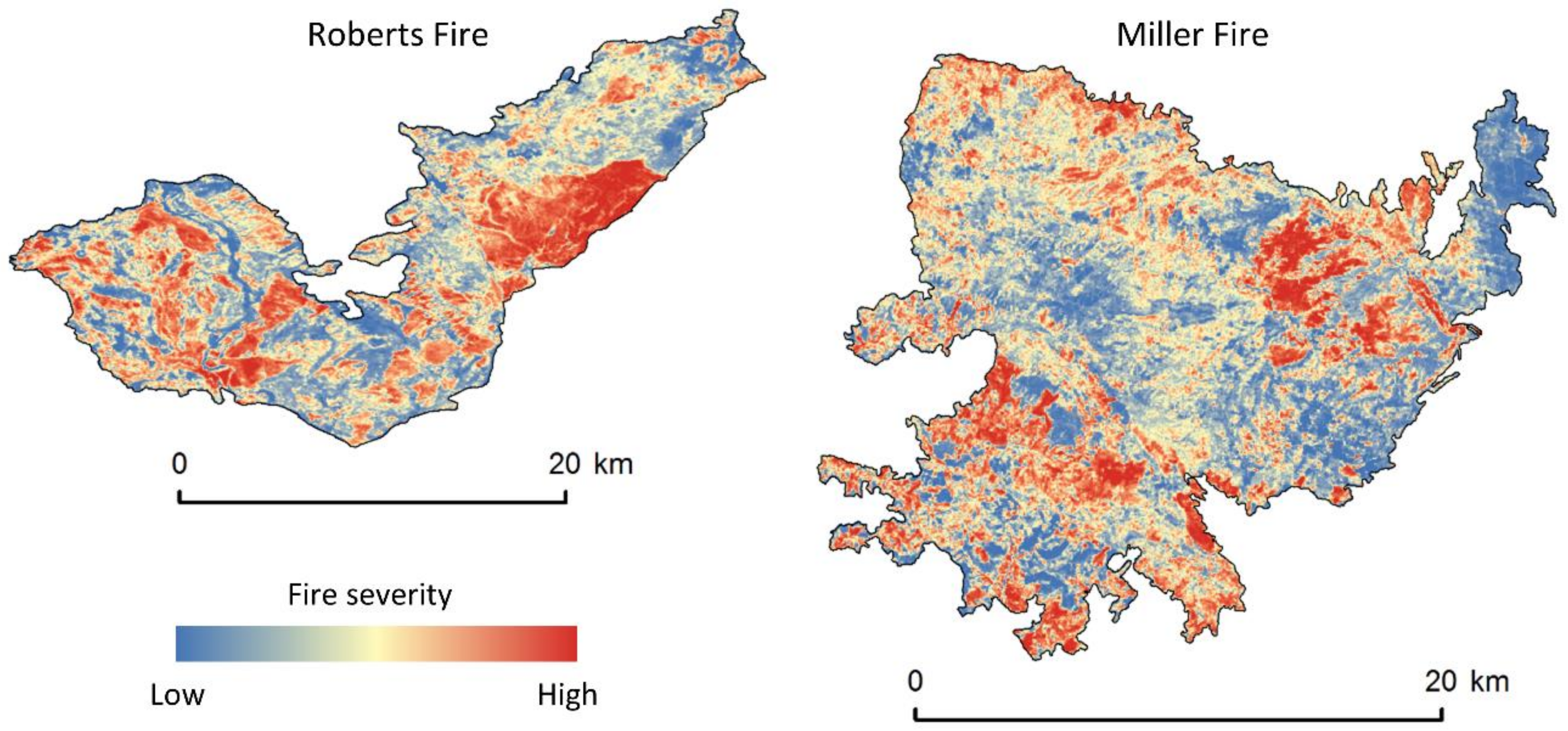

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Code Availability

References

- [1] Parks, S.A.; Parisien, M.A.; Miller, C.; Dobrowski, S.Z. Fire activity and severity in the western US vary along proxy gradients representing fuel amount and fuel moisture. PLoS ONE 2014, 9, e99699.

- [2] Dillon, G.K.; Holden, Z.A.; Morgan, P.; Crimmins, M.A.; Heyerdahl, E.K.; Luce, C.H. Both topography and climate affected forest and woodland burn severity in two regions of the western US, 1984 to 2006. Ecosphere 2011, 2, 130.

- [3] Veraverbeke, S.; Lhermitte, S.; Verstraeten, W.W.; Goossens, R. The temporal dimension of differenced Normalized Burn Ratio (dNBR) fire/burn severity studies: The case of the large 2007 Peloponnese wildfires in Greece. Remote Sens. Environ. 2010, 114, 2548–2563.

- [4] Fernández-García, V.; Santamarta, M.; Fernández-Manso, A.; Quintano, C.; Marcos, E.; Calvo, L. Burn severity metrics in fire-prone pine ecosystems along a climatic gradient using Landsat imagery. Remote Sens. Environ. 2018, 206, 205–217.

- [5] Fang, L.; Yang, J.; White, M.; Liu, Z. Predicting Potential Fire Severity Using Vegetation, Topography and Surface Moisture Availability in a Eurasian Boreal Forest Landscape. Forests 2018, 9, 130.

- [6] Key, C.H.; Benson, N.C. Landscape assessment (LA). In FIREMON: Fire Effects Monitoring and Inventory System; General Technical Report RMRS-GTR-164-CD; U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station: Fort Collins, CO, USA, 2006.

- [7] Miller, J.D.; Thode, A.E. Quantifying burn severity in a heterogeneous landscape with a relative version of the delta Normalized Burn Ratio (dNBR). Remote Sens. Environ. 2007, 109, 66–80.

- [8] Parks, S.A.; Dillon, G.K.; Miller, C. A new metric for quantifying burn severity: The relativized burn ratio. Remote Sens. 2014, 6, 1827–1844.

- [9] Holden, Z.A.; Morgan, P.; Evans, J.S. A predictive model of burn severity based on 20-year satellite-inferred burn severity data in a large southwestern US wilderness area. For. Ecol. Manag. 2009, 258, 2399–2406.

- [10] Wimberly, M.C.; Reilly, M.J. Assessment of fire severity and species diversity in the southern Appalachians using Landsat TM and ETM+ imagery. Remote Sens. Environ. 2007, 108, 189–197.

- [11] Veraverbeke, S.; Lhermitte, S.; Verstraeten, W.W.; Goossens, R. Evaluation of pre/post-fire differenced spectral indices for assessing burn severity in a Mediterranean environment with Landsat Thematic Mapper. Int. J. Remote Sens. 2011, 32, 3521–3537.

- [12] Soverel, N.O.; Perrakis, D.D.B.; Coops, N.C. Estimating burn severity from Landsat dNBR and RdNBR indices across western Canada. Remote Sens. Environ. 2010, 114, 1896–1909.

- [13] Beck, P.S.A.; Goetz, S.J.; Mack, M.C.; Alexander, H.D.; Jin, Y.; Randerson, J.T.; Loranty, M.M. The impacts and implications of an intensifying fire regime on Alaskan boreal forest composition and albedo. Glob. Chang. Biol. 2011, 17, 2853–2866.

- [14] Mallinis, G.; Mitsopoulos, I.; Chrysafi, I. Evaluating and comparing Sentinel 2A and Landsat-8 Operational Land Imager (OLI) spectral indices for estimating fire severity in a Mediterranean pine ecosystem of Greece. GISci. Remote Sens. 2018, 55, 1–18.

- [15] Fang, L.; Yang, J.; Zu, J.; Li, G.; Zhang, J. Quantifying influences and relative importance of fire weather, topography, and vegetation on fire size and fire severity in a Chinese boreal forest landscape. For. Ecol. Manag. 2015, 356, 2–12.

- [16] Cansler, C.A.; McKenzie, D. How robust are burn severity indices when applied in a new region? Evaluation of alternate field-based and remote-sensing methods. Remote Sens. 2012, 4, 456–483.

- [17] Key, C.H. Ecological and sampling constraints on defining landscape fire severity. Fire Ecol. 2006, 2, 34–59.

- [18] Picotte, J.J.; Peterson, B.; Meier, G.; Howard, S.M. 1984–2010 trends in fire burn severity and area for the conterminous US. Int. J. Wildl. Fire 2016, 25, 413–420.

- [19] Eidenshink, J.C.; Schwind, B.; Brewer, K.; Zhu, Z.-L.; Quayle, B.; Howard, S.M. A project for monitoring trends in burn severity. Fire Ecol. 2007, 3, 3–21.

- [20] Kane, V.R.; Cansler, C.A.; Povak, N.A.; Kane, J.T.; McGaughey, R.J.; Lutz, J.A.; Churchill, D.J.; North, M.P. Mixed severity fire effects within the Rim fire: Relative importance of local climate, fire weather, topography, and forest structure. For. Ecol. Manag. 2015, 358, 62–79.

- [21] Stevens-Rumann, C.; Prichard, S.; Strand, E.; Morgan, P. Prior wildfires influence burn severity of subsequent large fires. Can. J. For. Res. 2016, 46, 1375–1385.

- [22] Prichard, S.J.; Kennedy, M.C. Fuel treatments and landform modify landscape patterns of burn severity in an extreme fire event. Ecol. Appl. 2014, 24, 571–590.

- [23] Parks, S.A.; Holsinger, L.M.; Panunto, M.H.; Jolly, W.M.; Dobrowski, S.Z.; Dillon, G.K. High-severity fire: Evaluating its key drivers and mapping its probability across western US forests. Environ. Res. Lett. 2018, 13, 044037.

- [24] Keyser, A.; Westerling, A. Climate drives inter-annual variability in probability of high severity fire occurrence in the western United States. Environ. Res. Lett. 2017, 12, 065003.

- [25] Arkle, R.S.; Pilliod, D.S.; Welty, J.L. Pattern and process of prescribed fires influence effectiveness at reducing wildfire severity in dry coniferous forests. For. Ecol. Manag. 2012, 276, 174–184.

- [26] Wimberly, M.C.; Cochrane, M.A.; Baer, A.D.; Pabst, K. Assessing fuel treatment effectiveness using satellite imagery and spatial statistics. Ecol. Appl. 2009, 19, 1377–1384.

- [27] Parks, S.A.; Miller, C.; Abatzoglou, J.T.; Holsinger, L.M.; Parisien, M.-A.; Dobrowski, S.Z. How will climate change affect wildland fire severity in the western US? Environ. Res. Lett. 2016, 11, 035002.

- [28] Miller, J.D.; Safford, H.D.; Crimmins, M.; Thode, A.E. Quantitative evidence for increasing forest fire severity in the Sierra Nevada and southern Cascade Mountains, California and Nevada, USA. Ecosystems 2009, 12, 16–32.

- [29] Whitman, E.; Parisien, M.-A.; Thompson, D.K.; Hall, R.J.; Skakun, R.S.; Flannigan, M.D. Variability and drivers of burn severity in the northwestern Canadian boreal forest. Ecosphere 2018, 9.

- [30] Ireland, G.; Petropoulos, G.P. Exploring the relationships between post-fire vegetation regeneration dynamics, topography and burn severity: A case study from the Montane Cordillera Ecozones of Western Canada. Appl. Geogr. 2015, 56, 232–248.

- [31] Foga, S.; Scaramuzza, P.L.; Guo, S.; Zhu, Z.; Dilley, R.D.; Beckmann, T.; Schmidt, G.L.; Dwyer, J.L.; Hughes, M.J.; Laue, B. Cloud detection algorithm comparison and validation for operational Landsat data products. Remote Sens. Environ. 2017, 194, 379–390.

- [32] R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2016; Available online: https://www.r-project.org/ (accessed on 1 July 2017).

- [33] Rollins, M.G. LANDFIRE: A nationally consistent vegetation, wildland fire, and fuel assessment. Int. J. Wildl. Fire 2009, 18, 235–249.

- [34] Kuhn, M. Caret package. J. Stat. Softw. 2008, 28, 1–26.

- [35] Hijmans, R.J.; van Etten, J.; Cheng, J.; Mattiuzzi, M.; Sumner, M.; Greenberg, J.A.; Lamigueiro, O.P.; Bevan, A.; Racine, E.B.; Shortridge, A.; et al. Package ‘Raster’; R. Package, 2015.

- [36] Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- [37] Kennedy, R.E.; Yang, Z.; Gorelick, N.; Braaten, J.; Cavalcante, L.; Cohen, W.B.; Healey, S. Implementation of the LandTrendr Algorithm on Google Earth Engine. Remote Sens. 2018, 10, 691.

- [38] Robinson, N.P.; Allred, B.W.; Jones, M.O.; Moreno, A.; Kimball, J.S.; Naugle, D.E.; Erickson, T.A.; Richardson, A.D. A Dynamic Landsat Derived Normalized Difference Vegetation Index (NDVI) Product for the Conterminous United States. Remote Sens. 2017, 9, 863.

- [39] Lydersen, J.M.; Collins, B.M.; Brooks, M.L.; Matchett, J.R.; Shive, K.L.; Povak, N.A.; Kane, V.R.; Smith, D.F. Evidence of fuels management and fire weather influencing fire severity in an extreme fire event. Ecol. Appl. 2017, 27, 2013–2030.

- [40] Reilly, M.J.; Dunn, C.J.; Meigs, G.W.; Spies, T.A.; Kennedy, R.E.; Bailey, J.D.; Briggs, K. Contemporary patterns of fire extent and severity in forests of the Pacific Northwest, USA (1985–2010). Ecosphere 2017, 8, e01695.

- [41] Stevens, J.T.; Collins, B.M.; Miller, J.D.; North, M.P.; Stephens, S.L. Changing spatial patterns of stand-replacing fire in California conifer forests. For. Ecol. Manag. 2017, 406, 28–36.

- [42] Whitman, E.; Batllori, E.; Parisien, M.-A.; Miller, C.; Coop, J.D.; Krawchuk, M.A.; Chong, G.W.; Haire, S.L. The climate space of fire regimes in north-western North America. J. Biogeogr. 2015, 42, 1736–1749.

- [43] Fernandes, P.M.; Loureiro, C.; Magalhães, M.; Ferreira, P.; Fernandes, M. Fuel age, weather and burn probability in Portugal. Int. J. Wildl. Fire 2012, 21, 380–384.

- [44] Trigo, R.M.; Sousa, P.M.; Pereira, M.G.; Rasilla, D.; Gouveia, C.M. Modelling wildfire activity in Iberia with different atmospheric circulation weather types. Int. J. Climatol. 2016, 36, 2761–2778.

- [45] Parisien, M.-A.; Miller, C.; Parks, S.A.; Delancey, E.R.; Robinne, F.-N.; Flannigan, M.D. The spatially varying influence of humans on fire probability in North America. Environ. Res. Lett. 2016, 11, 075005.

- [46] Price, O.F.; Penman, T.D.; Bradstock, R.A.; Boer, M.M.; Clarke, H. Biogeographical variation in the potential effectiveness of prescribed fire in south-eastern Australia. J. Biogeogr. 2015, 42, 2234–2245.

- [47] Fox, D.M.; Carrega, P.; Ren, Y.; Caillouet, P.; Bouillon, C.; Robert, S. How wildfire risk is related to urban planning and Fire Weather Index in SE France (1990–2013). Sci. Total Environ. 2018, 621, 120–129.

- [48] Villarreal, M.L.; Haire, S.L.; Iniguez, J.M.; Montaño, C.C.; Poitras, T.B. Distant Neighbors: Recent wildfire patterns of the Madrean Sky Islands of Southwestern United States and Northwestern México. Fire Ecol. 2018, in press.

- [49] Kolden, C.A.; Rogan, J. Mapping wildfire burn severity in the Arctic tundra from downsampled MODIS data. Arct. Antarct. Alp. Res. 2013, 45, 64–76.

| Fire Name | Year | Number of plots | Overstory species (in order of prevalence) | Historical fire regime [33]: Surface | Historical fire regime [33]: Mixed | Historical fire regime [33]: Replace |
|---|---|---|---|---|---|---|
| Tripod Cx (Spur Peak) 1 | 2006 | 328 | Douglas-fir, ponderosa pine, subalpine fir, Engelmann spruce | 80–90% | <5% | 5–10% |
| Tripod Cx (Tripod) 1 | 2006 | 160 | Douglas-fir, ponderosa pine, subalpine fir, Engelmann spruce | >90% | <5% | <5% |
| Robert 2 | 2003 | 92 | Subalpine fir, Engelmann spruce, lodgepole pine, Douglas-fir, grand fir, western red cedar, western larch | 5–10% | 30–40% | 40–50% |
| Falcon 3 | 2001 | 42 | Subalpine fir, Engelmann spruce, lodgepole pine, whitebark pine | 0% | 30–40% | 60–70% |
| Green Knoll 3 | 2001 | 54 | Subalpine fir, Engelmann spruce, lodgepole pine, Douglas-fir, aspen | 0% | 20–30% | 70–80% |
| Puma 4 | 2008 | 45 | Douglas-fir, white fir, ponderosa pine | 20–30% | 70–80% | 0% |
| Dry Lakes Cx 3 | 2003 | 49 | Ponderosa pine, Arizona pine, Emory oak, alligator juniper | >90% | 0% | 0% |
| Miller 5 | 2011 | 94 | Ponderosa pine, Arizona pine, Emory oak, alligator juniper | 80–90% | 5–10% | 0% |
| Outlet 6 | 2000 | 54 | Subalpine fir, Engelmann spruce, lodgepole pine, ponderosa pine, Douglas-fir, white fir | 30–40% | 5–10% | 50–60% |
| Dragon Cx WFU 6 | 2005 | 51 | Ponderosa pine, Douglas-fir, white fir, aspen, subalpine fir, lodgepole pine | 60–70% | 20–30% | 5–10% |
| Long Jim 6 | 2004 | 49 | Ponderosa pine, Gambel oak | >90% | 0% | 0% |
| Vista 6 | 2001 | 46 | Douglas-fir, white fir, ponderosa pine, aspen, subalpine fir | 20–30% | 70–80% | 0% |
| Walhalla 6 | 2004 | 47 | Douglas-fir, white fir, ponderosa pine, aspen, subalpine fir, lodgepole pine | 60–70% | 20–30% | <5% |
| Poplar 6 | 2003 | 108 | Douglas-fir, white fir, ponderosa pine, aspen, subalpine fir, lodgepole pine | 20–30% | 20–30% | 40–50% |
| Power 7 | 2004 | 88 | Ponderosa/Jeffrey pine, white fir, mixed conifers, black oak | >90% | 0% | 0% |
| Cone 7 | 2002 | 59 | Ponderosa/Jeffrey pine, mixed conifers | 80–90% | <5% | <5% |
| Straylor 7 | 2004 | 75 | Ponderosa/Jeffrey pine, western juniper | >90% | 0% | <5% |
| McNally 7 | 2002 | 240 | Ponderosa/Jeffrey pine, mixed conifers, interior live oak, scrub oak, black oak | 70–80% | 10–20% | 0% |

| Metric | Mean R² without dNBRoffset, MTBS-derived | Mean R² without dNBRoffset, GEE-derived | Mean R² with dNBRoffset, MTBS-derived | Mean R² with dNBRoffset, GEE-derived |
|---|---|---|---|---|
| dNBR | 0.761 | 0.768 | 0.761 | 0.768 |
| RdNBR | 0.736 | 0.764 | 0.751 | 0.759 |
| RBR | 0.784 | 0.791 | 0.784 | 0.790 |

| Metric | R² (standard error) without dNBRoffset, MTBS-derived | R² (standard error) without dNBRoffset, GEE-derived | R² (standard error) with dNBRoffset, MTBS-derived | R² (standard error) with dNBRoffset, GEE-derived |
|---|---|---|---|---|
| dNBR | 0.630 (0.026) | 0.660 (0.025) | 0.655 (0.026) | 0.682 (0.025) |
| RdNBR | 0.616 (0.026) | 0.692 (0.025) | 0.661 (0.026) | 0.669 (0.026) |
| RBR | 0.683 (0.025) | 0.722 (0.024) | 0.714 (0.025) | 0.739 (0.024) |

| Metric | Source | Accuracy (%) without dNBRoffset | 95% CI without dNBRoffset | Accuracy (%) with dNBRoffset | 95% CI with dNBRoffset |
|---|---|---|---|---|---|
| dNBR | MTBS-derived | 69.6 | 67.3–71.8 | 70.2 | 68.0–72.4 |
| dNBR | GEE-derived | 71.3 | 69.0–73.4 | 71.7 | 69.5–73.9 |
| RdNBR | MTBS-derived | 71.4 | 69.2–73.5 | 73.6 | 71.4–75.6 |
| RdNBR | GEE-derived | 73.4 | 71.2–75.5 | 73.1 | 71.0–75.3 |
| RBR | MTBS-derived | 72.4 | 71.1–74.5 | 73.5 | 71.4–75.6 |
| RBR | GEE-derived | 73.5 | 71.4–75.6 | 74.1 | 72.0–76.2 |

| | Reference CBI class | | | | | Reference CBI class | | | |
|---|---|---|---|---|---|---|---|---|---|
| Classified using MTBS-derived dNBR | Low | Mod. | High | UA | Classified using GEE-derived dNBR | Low | Mod. | High | UA |
| Low | 401 | 159 | 18 | 69.4 | Low | 407 | 139 | 13 | 72.8 |
| Mod. | 91 | 412 | 114 | 66.8 | Mod. | 87 | 438 | 123 | 67.6 |
| High | 5 | 124 | 357 | 73.5 | High | 3 | 118 | 353 | 74.5 |
| PA | 80.7 | 59.3 | 73.0 | | PA | 81.9 | 63.0 | 72.2 | |
| Classified using MTBS-derived RdNBR | Low | Mod. | High | UA | Classified using GEE-derived RdNBR | Low | Mod. | High | UA |
| Low | 366 | 142 | 7 | 71.1 | Low | 385 | 136 | 5 | 73.2 |
| Mod. | 119 | 451 | 99 | 67.4 | Mod. | 105 | 465 | 100 | 69.4 |
| High | 12 | 102 | 383 | 77.1 | High | 7 | 94 | 384 | 79.2 |
| PA | 73.6 | 64.9 | 78.3 | | PA | 77.5 | 66.9 | 78.5 | |
| Classified using MTBS-derived RBR | Low | Mod. | High | UA | Classified using GEE-derived RBR | Low | Mod. | High | UA |
| Low | 380 | 127 | 12 | 73.2 | Low | 403 | 130 | 9 | 74.4 |
| Mod. | 113 | 462 | 102 | 68.2 | Mod. | 90 | 464 | 111 | 69.8 |
| High | 4 | 106 | 375 | 77.3 | High | 4 | 101 | 369 | 77.8 |
| PA | 76.5 | 66.5 | 76.7 | | PA | 81.1 | 66.8 | 75.5 | |
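The UA and PA figures in the matrix above can be reproduced from the cell counts. The following is a minimal sketch, assuming UA and PA denote user's and producer's accuracy in the usual confusion-matrix sense (rows are classified classes, columns are reference CBI classes). The function name `accuracy_summary` is hypothetical and is not taken from the authors' code. It uses the MTBS-derived dNBR counts from the first matrix.

```python
# Counts copied from the MTBS-derived dNBR matrix.
# Rows: classified class (Low, Mod., High).
# Columns: reference CBI class (Low, Mod., High).
mtbs_dnbr = [
    [401, 159, 18],
    [91, 412, 114],
    [5, 124, 357],
]


def accuracy_summary(matrix):
    """Return (overall, user's, producer's) accuracies in percent.

    Hypothetical helper: assumes a square matrix with classified
    classes as rows and reference classes as columns.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    overall = 100.0 * correct / total
    # User's accuracy: correct / row total (all plots classified as that class).
    users = [100.0 * matrix[i][i] / sum(matrix[i]) for i in range(n)]
    # Producer's accuracy: correct / column total (all reference plots of that class).
    producers = [
        100.0 * matrix[i][i] / sum(matrix[r][i] for r in range(n))
        for i in range(n)
    ]
    return overall, users, producers


overall, users, producers = accuracy_summary(mtbs_dnbr)
print(round(overall, 1))                 # overall accuracy (%)
print([round(u, 1) for u in users])      # UA for Low, Mod., High
print([round(p, 1) for p in producers])  # PA for Low, Mod., High
```

Under these assumptions the overall value rounds to 69.6%, which agrees with the accuracy listed for MTBS-derived dNBR without dNBRoffset, and the per-class values round to the UA and PA entries in the matrix.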

| | Reference CBI class | | | | | Reference CBI class | | | |
|---|---|---|---|---|---|---|---|---|---|
| Classified using MTBS-derived dNBR | Low | Mod. | High | UA | Classified using GEE-derived dNBR | Low | Mod. | High | UA |
| Low | 397 | 156 | 13 | 70.1 | Low | 402 | 141 | 10 | 72.7 |
| Mod. | 98 | 425 | 118 | 66.3 | Mod. | 92 | 451 | 126 | 67.4 |
| High | 2 | 114 | 358 | 75.5 | High | 3 | 103 | 353 | 76.9 |
| PA | 79.9 | 61.2 | 73.2 | | PA | 80.9 | 64.9 | 72.2 | |
| Classified using MTBS-derived RdNBR | Low | Mod. | High | UA | Classified using GEE-derived RdNBR | Low | Mod. | High | UA |
| Low | 378 | 133 | 5 | 73.3 | Low | 390 | 137 | 5 | 73.3 |
| Mod. | 112 | 467 | 92 | 69.6 | Mod. | 101 | 460 | 104 | 69.2 |
| High | 7 | 95 | 392 | 79.4 | High | 6 | 98 | 380 | 78.5 |
| PA | 76.1 | 67.2 | 80.2 | | PA | 78.5 | 66.2 | 77.7 | |
| Classified using MTBS-derived RBR | Low | Mod. | High | UA | Classified using GEE-derived RBR | Low | Mod. | High | UA |
| Low | 390 | 135 | 6 | 73.4 | Low | 386 | 123 | 7 | 74.8 |
| Mod. | 105 | 460 | 97 | 69.5 | Mod. | 107 | 481 | 103 | 69.6 |
| High | 2 | 100 | 386 | 79.1 | High | 4 | 91 | 379 | 80.0 |
| PA | 78.5 | 66.2 | 78.9 | | PA | 77.7 | 69.2 | 77.5 | |

| dNBRoffset | Metric | MTBS-derived: Low | MTBS-derived: Moderate | MTBS-derived: High | GEE-derived: Low | GEE-derived: Moderate | GEE-derived: High |
|---|---|---|---|---|---|---|---|
| Excludes dNBRoffset | dNBR | ≤186 | 187–429 | ≥430 | ≤185 | 186–417 | ≥418 |
| Excludes dNBRoffset | RdNBR | ≤337 | 338–721 | ≥722 | ≤338 | 339–726 | ≥727 |
| Excludes dNBRoffset | RBR | ≤134 | 135–303 | ≥304 | ≤135 | 136–300 | ≥301 |
| Includes dNBRoffset | dNBR | ≤165 | 166–440 | ≥411 | ≤159 | 160–392 | ≥393 |
| Includes dNBRoffset | RdNBR | ≤294 | 295–690 | ≥691 | ≤312 | 313–706 | ≥707 |
| Includes dNBRoffset | RBR | ≤118 | 119–289 | ≥289 | ≤115 | 116–282 | ≥283 |
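As an illustration of how the thresholds in the preceding table might be applied, the sketch below assigns a severity class to a single metric value. It uses the GEE-derived RBR thresholds that exclude dNBRoffset (≤135 low, 136–300 moderate, ≥301 high). The function name `classify_severity` and its parameters are hypothetical, and treating any value strictly between the low and high bounds as moderate (including non-integer values) is an assumption rather than something the table states.

```python
def classify_severity(value, low_max, high_min):
    """Map a severity metric value to 'low', 'moderate', or 'high'.

    Hypothetical helper: low_max is the upper bound of the low class
    and high_min is the lower bound of the high class, as read from
    the thresholds table. Anything in between is treated as moderate.
    """
    if value <= low_max:
        return "low"
    if value >= high_min:
        return "high"
    return "moderate"


# GEE-derived RBR thresholds, excluding dNBRoffset (from the table).
RBR_LOW_MAX = 135
RBR_HIGH_MIN = 301

for rbr in (90, 135, 136, 300, 301, 450):
    print(rbr, classify_severity(rbr, RBR_LOW_MAX, RBR_HIGH_MIN))
```

Passing the two bounds as arguments keeps the same function usable for dNBR or RdNBR, or for the MTBS-derived thresholds, by swapping in the corresponding row of the table.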

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Parks, S.A.; Holsinger, L.M.; Voss, M.A.; Loehman, R.A.; Robinson, N.P. Mean Composite Fire Severity Metrics Computed with Google Earth Engine Offer Improved Accuracy and Expanded Mapping Potential. Remote Sens. 2018, 10, 879. https://doi.org/10.3390/rs10060879
