Development of Stereo Visual Odometry Based on Photogrammetric Feature Optimization

Abstract

1. Introduction

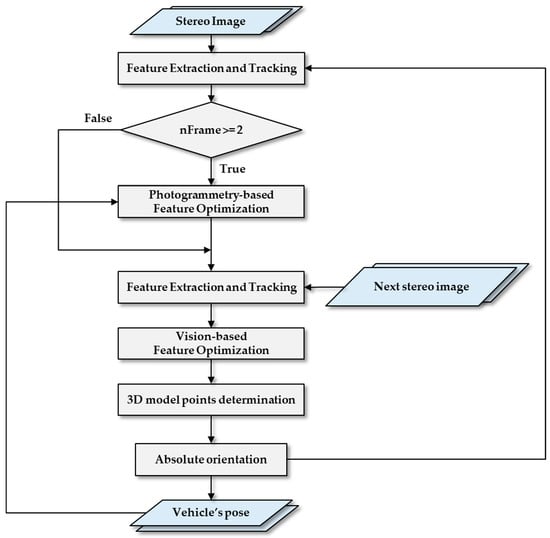

2. Materials and Methods

2.1. Feature Extraction and Matching

2.2. Corresponding Point Optimization

2.3. Absolute Orientation for Pose Estimation

3. Results

3.1. Corresponding Point Optimization Result

3.2. Pose Estimation Result for Three Cases

3.3. Estimation Results for Ten Sequences in KITTI Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Detector | Descriptor | Matcher |
|---|---|---|
| Scale-invariant feature transform (SIFT) | SIFT | Brute-Force |
| Speeded-up robust features (SURF) | SURF | Brute-Force |
| Features from accelerated segment test (FAST) | Binary robust invariant scalable keypoints (BRISK) | Fast library for approximate nearest neighbors (FLANN) |
| FAST | Oriented FAST and rotated binary robust independent elementary features (ORB) | FLANN |
| FAST | Fast retina keypoint (FREAK) | FLANN |

| Extractor | Tracker |
|---|---|
| FAST | Kanade–Lucas–Tomasi tracker |
| Shi–Tomasi corner | Kanade–Lucas–Tomasi tracker |

| | Rotation Error Rate (deg/m) | Translation Error Rate (%) |
|---|---|---|
| Before optimization | 12.8637 | 0.0354 |
| After optimization | 3.9254 | 0.0178 |

| Sequence | Rotation Error Rate (deg/m) | Translation Error Rate (%) | Processing Time Per Frame (s) |
|---|---|---|---|
| (a) | 0.0127 | 2.3817 | 0.0269 |
| (b) | 0.0108 | 2.6987 | 0.0347 |
| (c) | 0.0162 | 2.3109 | 0.0324 |
| Average | 0.0132 | 2.4638 | 0.0313 |
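
The "Average" row of the table above can be cross-checked with a few lines of Python. This is a minimal sketch that assumes the row is an unweighted arithmetic mean over sequences (a) to (c), rounded to four decimals; the names `rows`, `reported_average`, and `column_means` are illustrative and do not come from the article. It also converts the reported mean processing time into an approximate frame rate.

```python
# Per-sequence values copied from the table above, in column order:
# (rotation error rate in deg/m, translation error rate in %, time per frame in s)
rows = {
    "(a)": (0.0127, 2.3817, 0.0269),
    "(b)": (0.0108, 2.6987, 0.0347),
    "(c)": (0.0162, 2.3109, 0.0324),
}
reported_average = (0.0132, 2.4638, 0.0313)


def column_means(table):
    """Unweighted mean of each column, rounded to four decimals like the table.

    Assumption: the article's "Average" row is a plain mean over sequences,
    not weighted by sequence length.
    """
    columns = zip(*table.values())
    return tuple(round(sum(col) / len(table), 4) for col in columns)


means = column_means(rows)

# Reciprocal of the mean time per frame gives an approximate throughput.
frames_per_second = 1.0 / reported_average[2]

print("recomputed means:", means)
print("matches reported row:", means == reported_average)
print(f"approximate throughput: {frames_per_second:.1f} frames/s")
```

Under that assumption the recomputed means agree with the reported row, and the mean time per frame corresponds to roughly 32 frames per second.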

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yoon, S.-J.; Kim, T. Development of Stereo Visual Odometry Based on Photogrammetric Feature Optimization. Remote Sens. 2019, 11, 67. https://doi.org/10.3390/rs11010067
