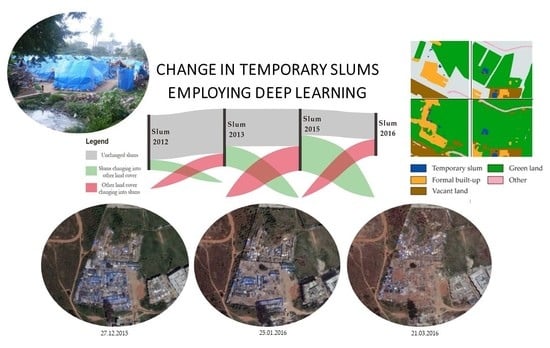

The Temporal Dynamics of Slums Employing a CNN-Based Change Detection Approach

Abstract

1. Introduction

2. Materials and Methods

2.1. The Study Area and Data Sets

2.2. Data Preparation and Pre-Processing

- Tiles have to be covered by all image data from 2012 to 2016.

- Slums are present in the selected tiles.

- Slums in the selected tiles have changed between 2012 and 2016.
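The three selection criteria above can be read as a simple filter over candidate tiles. The sketch below is only an illustration under stated assumptions: the `Tile` record, its fields, and the use of per-year slum area as a proxy for "changed" are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass

YEARS = (2012, 2013, 2015, 2016)  # acquisition years of the four images


@dataclass
class Tile:
    """Hypothetical per-tile summary used only for this illustration."""
    name: str
    covered_years: frozenset  # years in which the tile has image coverage
    slum_area: dict           # year -> slum area in the tile (any consistent unit)


def is_candidate(tile):
    """Apply the three criteria: full coverage, slums present, slums changed."""
    covered = all(year in tile.covered_years for year in YEARS)
    areas = [tile.slum_area.get(year, 0) for year in YEARS]
    has_slum = any(area > 0 for area in areas)
    # Simplification: a differing area between dates is taken as evidence of change.
    changed = len(set(areas)) > 1
    return covered and has_slum and changed


tiles = [
    Tile("a", frozenset(YEARS), {2012: 0, 2013: 120, 2015: 300, 2016: 80}),
    Tile("b", frozenset({2012, 2013}), {2012: 50, 2013: 70}),
    Tile("c", frozenset(YEARS), {2012: 0, 2013: 0, 2015: 0, 2016: 0}),
]
selected = [tile.name for tile in tiles if is_candidate(tile)]
print(selected)
```

Only tile `a` passes here: tile `b` lacks coverage for 2015 and 2016, and tile `c` never contains a slum.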

2.3. Training and Testing Data

- The training tiles cover all the land-use classes.

- Every slum change trajectory is included in the training tiles.
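Both training-tile conditions are coverage checks, so they can be verified with set operations. The sketch below assumes each training tile is summarised by the set of land-use classes and the set of slum change classes it contains; that dictionary layout and the function name are hypothetical, while the class names follow the class tables in this article.

```python
LAND_USE_CLASSES = {
    "temporary slum", "green land", "vacant land", "formally built-up", "other",
}
SLUM_CHANGES = {"increased slum", "decreased slum", "unchanged slum"}


def training_set_is_complete(training_tiles):
    """True if the tiles jointly contain every land-use class and slum change."""
    seen_classes = set().union(*(tile["classes"] for tile in training_tiles))
    seen_changes = set().union(*(tile["changes"] for tile in training_tiles))
    return LAND_USE_CLASSES <= seen_classes and SLUM_CHANGES <= seen_changes


example_tiles = [
    {"classes": {"temporary slum", "green land", "other"},
     "changes": {"increased slum", "unchanged slum"}},
    {"classes": {"vacant land", "formally built-up"},
     "changes": {"decreased slum"}},
]
print(training_set_is_complete(example_tiles))      # both tiles together
print(training_set_is_complete(example_tiles[:1]))  # first tile alone
```

The two tiles together satisfy both conditions, whereas the first tile alone misses two land-use classes and the decreased-slum trajectory.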

2.4. Change Detection

2.4.1. Proposed FCNs

2.4.2. Post-Classification Change Detection

2.4.3. Change-Detection Net

2.4.4. Noise Reduction for Land-Use Classification

2.5. Accuracy Assessment

3. Results

3.1. FCN-Based Land-Use Classification

3.1.1. Comparing the Performance of 5 × 5 Networks and 3 × 3 Networks

3.1.2. Noise Reduction for Land-Use Classification

3.2. Change Detection

3.2.1. Performance of 5 × 5 Networks and 3 × 3 Networks

3.2.2. Accuracy Assessment by Confusion Matrix

3.2.3. Accuracy Assessment by Trajectory Error Matrix

3.2.4. Change Detection Maps

4. Discussion

4.1. Temporal Dynamics of Slums in Bangalore

4.2. The Pattern of Slum Changing

4.3. Methodological Advantages and Disadvantages

4.4. Accuracy Assessment

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Satellite | Resolution | Band Number | Acquisition Date (DD.MM.YYYY) |
|---|---|---|---|
| WorldView 2 | 0.5 × 0.5 m (multispectral); 2.0 × 2.0 m (panchromatic) | 8 bands | 01.12.2012; 24.04.2013 |
| WorldView 3 | 0.3 × 0.3 m (multispectral); 1.2 × 1.2 m (panchromatic) | 8 bands | 16.02.2015; 06.01.2016 |

| Class | Description | Label | Count |
|---|---|---|---|
| Temporary slum | Tents with blue plastic sheeting and small unit size | 1 | 1,328,901 |
| Green land | Open land covered by vegetation | 2 | 4,843,864 |
| Vacant land | Bare soil land | 3 | 3,687,606 |
| Formally built-up | Formal buildings, roads | 4 | 10,984,295 |
| Other | Car park, water body, etc. | 5 | 488,007 |

| Class | Description | Land-Use in T1 | Land-Use in T2 | Label |
|---|---|---|---|---|
| Increased slum | Temporary slum did not exist in T1 but appeared in T2. | Green land, Vacant land, Formally built-up, Other | Temporary slum | 1 |
| Decreased slum | Temporary slum existed in T1 but disappeared in T2. | Temporary slum | Green land, Vacant land, Formally built-up, Other | 2 |
| Unchanged slum | Temporary slum stayed unchanged between T1 and T2. | Temporary slum | Temporary slum | 3 |
| Other | Other land use | Green land, Vacant land, Formally built-up, Other | Green land, Vacant land, Formally built-up, Other | 4 |

T1: an earlier year; T2: a later year.

| Layer | Module Type | Dimension | Dilation | Stride | Pad |
|---|---|---|---|---|---|
| DK1 | convolution | 5 × 5 × 8 × 16 | 1 | 1 | 2 |
| | lReLUs | | | | |
| DK2 | convolution | 5 × 5 × 16 × 32 | 2 | 1 | 4 |
| | lReLUs | | | | |
| DK3 | convolution | 5 × 5 × 32 × 32 | 3 | 1 | 6 |
| | lReLUs | | | | |
| DK4 | convolution | 5 × 5 × 32 × 32 | 4 | 1 | 8 |
| | lReLUs | | | | |
| DK5 | convolution | 5 × 5 × 32 × 32 | 5 | 1 | 10 |
| | lReLUs | | | | |
| DK6 | convolution | 5 × 5 × 32 × 32 | 6 | 1 | 12 |
| | lReLUs | | | | |
| Class. | convolution | 1 × 1 × 32 × 5 | 1 | 1 | 0 |
| | softmax | | | | |

| Layer | Module Type | Dimension | Dilation | Stride | Pad |
|---|---|---|---|---|---|
| DK1 | convolution | 3 × 3 × 8 × 16 | 1 | 1 | 1 |
| | lReLUs | | | | |
| | convolution | 3 × 3 × 16 × 16 | 1 | 1 | 1 |
| | lReLUs | | | | |
| DK2 | convolution | 3 × 3 × 16 × 32 | 2 | 1 | 2 |
| | lReLUs | | | | |
| | convolution | 3 × 3 × 32 × 32 | 2 | 1 | 2 |
| | lReLUs | | | | |
| DK3 | convolution | 3 × 3 × 32 × 32 | 3 | 1 | 3 |
| | lReLUs | | | | |
| | convolution | 3 × 3 × 32 × 32 | 3 | 1 | 3 |
| | lReLUs | | | | |
| DK4 | convolution | 3 × 3 × 32 × 32 | 4 | 1 | 4 |
| | lReLUs | | | | |
| | convolution | 3 × 3 × 32 × 32 | 4 | 1 | 4 |
| | lReLUs | | | | |
| DK5 | convolution | 3 × 3 × 32 × 32 | 5 | 1 | 5 |
| | lReLUs | | | | |
| | convolution | 3 × 3 × 32 × 32 | 5 | 1 | 5 |
| | lReLUs | | | | |
| DK6 | convolution | 3 × 3 × 32 × 32 | 6 | 1 | 6 |
| | lReLUs | | | | |
| | convolution | 3 × 3 × 32 × 32 | 6 | 1 | 6 |
| | lReLUs | | | | |
| Class. | convolution | 1 × 1 × 32 × 5 | 1 | 1 | 0 |
| | softmax | | | | |
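The two layer tables can be cross-checked with a little arithmetic. The sketch below is not code from the paper. It takes the kernel size, dilation, and padding of every convolution listed above and checks two things: whether each padding equals dilation × (kernel − 1) / 2, which keeps the spatial size unchanged at stride 1, and how large a receptive field each stack reaches. The function names are illustrative.

```python
# (kernel size, dilation, pad) per convolution, transcribed from the two tables.
# The 5 x 5 network has one convolution per DK block, the 3 x 3 network has two.
NET_5X5 = [(5, d, 2 * d) for d in range(1, 7)] + [(1, 1, 0)]
NET_3X3 = [(3, d, d) for d in range(1, 7) for _ in range(2)] + [(1, 1, 0)]


def preserves_size(layers):
    """True if every stride-1 convolution keeps height and width unchanged."""
    return all(2 * pad == dilation * (kernel - 1) for kernel, dilation, pad in layers)


def receptive_field(layers):
    """Side length, in pixels, of the input window seen by one output pixel."""
    field = 1
    for kernel, dilation, _pad in layers:
        field += (kernel - 1) * dilation
    return field


for name, net in (("5 x 5", NET_5X5), ("3 x 3", NET_3X3)):
    print(name, preserves_size(net), receptive_field(net))
```

Both stacks print `True 85`: every layer preserves the tile size, and the paired 3 × 3 convolutions reach the same 85 × 85 pixel context as the single 5 × 5 convolutions.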

| Year | Temporary Slum | Green Land | Vacant Land | Formal Built-Up | Other |
|---|---|---|---|---|---|
| 2012 | 1 | 2 | 3 | 4 | 5 |
| 2013 | 10 | 20 | 30 | 40 | 50 |
| 2015 | 100 | 200 | 300 | 400 | 500 |
| 2016 | 1000 | 2000 | 3000 | 4000 | 5000 |

Cell values are the land-use class labels assigned in each year.
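The labels in this table grow by a factor of ten per year, which suggests that the four classified maps can be added pixel by pixel so that each digit of the sum records the class in one year. The sketch below assumes that reading. It is an illustration rather than the authors' code, and the function names are hypothetical. It also applies the slum change classes defined earlier (increased, decreased, unchanged, other) to a pair of dates.

```python
YEARS = (2012, 2013, 2015, 2016)
YEAR_FACTOR = {2012: 1, 2013: 10, 2015: 100, 2016: 1000}  # multipliers from the table
SLUM = 1  # base label of the temporary slum class


def trajectory_code(labels_by_year):
    """Sum the year-specific labels of one pixel into a single four-digit code."""
    return sum(labels_by_year[year] * YEAR_FACTOR[year] for year in YEARS)


def decode(code):
    """Recover the base class label (1 to 5) of each year from a trajectory code."""
    return {year: (code // YEAR_FACTOR[year]) % 10 for year in YEARS}


def slum_change(label_t1, label_t2):
    """Change class between an earlier (T1) and a later (T2) date."""
    if label_t1 != SLUM and label_t2 == SLUM:
        return "increased slum"
    if label_t1 == SLUM and label_t2 != SLUM:
        return "decreased slum"
    if label_t1 == SLUM and label_t2 == SLUM:
        return "unchanged slum"
    return "other"


# A pixel that is vacant land in 2012, slum in 2013 and 2015, formal built-up in 2016.
pixel = {2012: 3, 2013: 1, 2015: 1, 2016: 4}
code = trajectory_code(pixel)
print(code)  # 4113
print([slum_change(pixel[a], pixel[b]) for a, b in zip(YEARS, YEARS[1:])])
```

Read from right to left, the code 4113 gives the class in 2012, 2013, 2015, and 2016, so a single raster can hold the full trajectory of every pixel.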

| Group | Classification Situation | Interpretation |
|---|---|---|
| S1 | Correct | Correctly detected as non-changed with the correct classification |
| S2 | Correct | Correctly detected as a changed slum with the correct trajectory |
| S3 | Incorrect | Correctly detected as non-changed with an incorrect classification |
| S4 | Incorrect | Incorrectly detected as changed slum |
| S5 | Incorrect | Incorrectly detected as non-changed |
| S6 | Incorrect | Correctly detected as a changed slum with an incorrect trajectory |
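To make the six groups concrete, the sketch below assigns one pixel to S1 through S6 by comparing its reference trajectory with its classified trajectory. It assumes that a trajectory is a tuple of class labels, one per date, and that "non-changed" means all labels in the tuple are equal. It also treats any class change as a change, whereas the table is worded for slum changes. The function names are hypothetical.

```python
from collections import Counter


def is_changed(trajectory):
    """A trajectory counts as changed if it contains more than one class."""
    return len(set(trajectory)) > 1


def tem_group(reference, classified):
    """Return the group (S1 to S6) for one pixel's pair of trajectories."""
    ref_changed = is_changed(reference)
    cls_changed = is_changed(classified)
    if not ref_changed and not cls_changed:
        return "S1" if reference == classified else "S3"
    if not ref_changed and cls_changed:
        return "S4"
    if ref_changed and not cls_changed:
        return "S5"
    return "S2" if reference == classified else "S6"


# (reference trajectory, classified trajectory) for six example pixels.
pairs = [
    ((2, 2, 2, 2), (2, 2, 2, 2)),  # unchanged, same class
    ((3, 1, 1, 4), (3, 1, 1, 4)),  # changed, same trajectory
    ((2, 2, 2, 2), (3, 3, 3, 3)),  # unchanged, wrong class
    ((2, 2, 2, 2), (2, 1, 1, 2)),  # false change
    ((3, 1, 1, 4), (3, 3, 3, 3)),  # missed change
    ((3, 1, 1, 4), (3, 3, 1, 4)),  # changed, wrong trajectory
]
groups = [tem_group(reference, classified) for reference, classified in pairs]
print(groups)
print(Counter(groups))
```

Counting the groups over all assessed pixels gives the totals from which trajectory-based accuracy indices can then be derived.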

| Year | 5 × 5 Networks: Precision | 5 × 5 Networks: Recall | 5 × 5 Networks: F1-Score | 3 × 3 Networks: Precision | 3 × 3 Networks: Recall | 3 × 3 Networks: F1-Score |
|---|---|---|---|---|---|---|
| 2012 | 85.57% | 97.04% | 90.85% | 85.79% | 96.99% | 90.95% |
| 2013 | 84.20% | 97.00% | 90.03% | 84.32% | 96.02% | 89.55% |
| 2015 | 81.55% | 85.76% | 83.29% | 84.41% | 89.69% | 86.82% |
| 2016 | 74.40% | 85.76% | 81.97% | 79.44% | 89.69% | 86.58% |
| In total | 81.10% | 93.19% | 86.32% | 83.30% | 96.55% | 88.38% |

| Year | Original Classification | Majority Analysis | Classification Clumping |
|---|---|---|---|
| 2012 | 90.95% | 89.38% | 87.39% |
| 2013 | 89.55% | 89.19% | 86.43% |
| 2015 | 86.82% | 88.03% | 86.21% |
| 2016 | 86.58% | 86.80% | 84.23% |
| In total | 88.38% | 88.35% | 86.06% |
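Majority analysis replaces each pixel's label with the most frequent label in a window around it, which removes isolated misclassified pixels. The sketch below is a minimal pure-Python version that assumes a 3 × 3 window and keeps the centre label on ties. Both the window size and the tie rule are assumptions, since the settings behind the table above are not given in this section.

```python
from collections import Counter


def majority_filter(labels, radius=1):
    """Smooth a 2-D list of class labels with a square window of side 2*radius+1."""
    rows, cols = len(labels), len(labels[0])
    smoothed = [row[:] for row in labels]
    for r in range(rows):
        for c in range(cols):
            # Collect the labels in the window, clipped at the image border.
            window = [
                labels[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            counts = Counter(window)
            best = max(counts.values())
            # Keep the original label when it ties for the most frequent one.
            if counts[labels[r][c]] < best:
                smoothed[r][c] = counts.most_common(1)[0][0]
    return smoothed


# One isolated "temporary slum" pixel (label 1) inside formal built-up (label 4).
noisy = [
    [4, 4, 4, 4],
    [4, 1, 4, 4],
    [4, 4, 4, 4],
]
cleaned = majority_filter(noisy)
print(cleaned)
```

The isolated pixel is relabelled to 4. The same smoothing can also erase small but genuine slum patches, so it does not necessarily raise accuracy.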

| Period | 5 × 5 Networks: Precision | 5 × 5 Networks: Recall | 5 × 5 Networks: F1-Score | 3 × 3 Networks: Precision | 3 × 3 Networks: Recall | 3 × 3 Networks: F1-Score |
|---|---|---|---|---|---|---|
| 2012–2013 | 13.85% | 42.26% | 20.25% | 12.75% | 40.42% | 18.31% |
| 2013–2015 | 34.79% | 42.31% | 36.01% | 31.87% | 52.59% | 37.88% |
| 2015–2016 | 22.41% | 47.46% | 28.76% | 31.52% | 54.17% | 36.49% |
| In total | 23.68% | 44.01% | 28.34% | 25.38% | 49.06% | 30.89% |

| Period | Post-Classification | Change-Detection Networks |
|---|---|---|
| 2012–2013 | 43.69% | 49.69% |
| 2013–2015 | 61.52% | 60.66% |
| 2015–2016 | 55.95% | 50.96% |
| In total | 53.80% | 53.68% |

| Tile | Post-Classification: 2012–2013 | Post-Classification: 2013–2015 | Post-Classification: 2015–2016 | Change-Detection Networks: 2012–2013 | Change-Detection Networks: 2013–2015 | Change-Detection Networks: 2015–2016 |
|---|---|---|---|---|---|---|
| 1 | 36.67% | 38.42% | 11.92% | 22.69% | 19.89% | 3.86% |
| 2 | 37.19% | 55.15% | 55.00% | 19.31% | 51.32% | 40.37% |
| 3 | 41.66% | 70.22% | 51.31% | 78.46% | 89.30% | 73.27% |
| 4 | 28.87% | 63.24% | 42.71% | 17.79% | 54.50% | 24.37% |
| 5 | 54.70% | 73.69% | 70.20% | 91.54% | 94.97% | 91.29% |
| 6 | 36.13% | 57.11% | 47.94% | 23.66% | 36.65% | 39.20% |
| 7 | 62.28% | 82.82% | 92.63% | 91.29% | 95.20% | 96.93% |
| 8 | * | * | 73.58% | * | * | 48.65% |
| 9 | 62.58% | 72.98% | 63.93% | 84.70% | 86.48% | 75.51% |
| 10 | 33.11% | 40.03% | 50.31% | 17.78% | 17.68% | 16.12% |

Tiles 3, 5, 7, and 9 are training tiles. * No changes in this tile.

| Tile | Method | 2012–2013 | 2013–2015 | 2015–2016 | In Total |
|---|---|---|---|---|---|
| Training | Post-classification | 55.30% | 74.93% | 69.52% | 66.58% |
| Training | Change-detection networks | 86.50% | 91.49% | 84.25% | 87.41% |
| Testing | Post-classification | 34.39% | 50.79% | 46.91% | 44.21% |
| Testing | Change-detection networks | 20.25% | 36.01% | 28.76% | 28.37% |

| Indices | Post-Classification | Change-Detection Networks |
|---|---|---|
| overall accuracy (AT) | 76.36% | 72.30% |
| change/no change accuracy (AC/N) | 89.60% | 80.12% |
| overall accuracy difference (OAD) | 13.24% | 7.82% |
| accuracy difference of no change trajectory (ADICN) | 100.00% | 100.00% |
| accuracy difference of change trajectory (ADICC) | 67.18% | 74.17% |
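The overall accuracy difference in this table is consistent with being the gap between the change/no-change accuracy and the overall accuracy, that is, OAD = AC/N − AT. The short check below reproduces both reported OAD values from the other two rows. The dictionary layout is only for illustration.

```python
# Values in percent, copied from the table above.
indices = {
    "post-classification": {"AT": 76.36, "AC/N": 89.60, "OAD": 13.24},
    "change-detection networks": {"AT": 72.30, "AC/N": 80.12, "OAD": 7.82},
}

computed = {}
for method, values in indices.items():
    # Difference between detecting change at all and getting the trajectory right.
    computed[method] = round(values["AC/N"] - values["AT"], 2)
    matches = abs(computed[method] - values["OAD"]) < 0.005
    print(method, computed[method], matches)
```

Under this reading, a larger OAD means that more pixels were correctly flagged as changed or unchanged but were given the wrong class or trajectory.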

| Area (m²) | 2012–2013: Increase | 2012–2013: Decrease | 2013–2015: Increase | 2013–2015: Decrease | 2015–2016: Increase | 2015–2016: Decrease |
|---|---|---|---|---|---|---|
| Reference data | 8873 | 4047 | 12,614 | 9652 | 7203 | 19,860 |
| Post-classification | 7981 | 6377 | 15,205 | 12,471 | 10,030 | 21,980 |
| Change-detection networks | 4826 | 2612 | 9313 | 13,403 | 5654 | 13,364 |

| Increased: Trajectory | Increased: Proportion | Increased: Changing Rate (m²/Year) | Decreased: Trajectory | Decreased: Proportion | Decreased: Changing Rate (m²/Year) |
|---|---|---|---|---|---|
| other → slum | 0.64% | 22 | slum → green land | 42.64% | 2250 |
| formally built-up → slum | 24.11% | 819 | slum → vacant land | 36.71% | 1937 |
| green land → slum | 32.68% | 1111 | slum → formally built-up | 20.51% | 1083 |
| vacant land → slum | 42.57% | 1447 | slum → other | 0.14% | 7 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, R.; Kuffer, M.; Persello, C. The Temporal Dynamics of Slums Employing a CNN-Based Change Detection Approach. Remote Sens. 2019, 11, 2844. https://doi.org/10.3390/rs11232844
