A Hierarchical Machine Learning Approach for Multi-Level and Multi-Resolution 3D Point Cloud Classification

Abstract

1. Introduction

Aim and Structure of the Paper

2. State of the Art

3. Case Studies

3.1. Milan Cathedral

3.1.1. 3D Survey

3.1.2. Classification Needs

- Derivation of measurements and 2D representations from anywhere in the Cathedral.

- Identification, counting and visualisation of single architectural elements.

- A better interpretation of the architectural structures at point cloud level, avoiding long and tedious modelling processes.

- Keeping track of every restoration activity, treating the point cloud as a complete 3D navigable information system where it is possible to reference information, data, and a catalogue of archive documents.

- Generation of a BIM-like web-based information system platform, usable in the field within a mixed-reality system.

3.2. Pomposa Abbey

3.2.1. 3D Survey

3.2.2. Classification Needs

- Derivation of measurements and 2D representations.

- Monitoring the building behaviour over time, by verifying masonries, columns and roof roto-translations: a comparison of 3D point clouds acquired over time can be considered a valuable solution to exactly and completely describe the whole fabric.

- Geometric quality check of the individual elements belonging to the same class, highlighting possible alterations in the structural composition of the building.

- Quantification of the building from a material and functional point of view, a crucial step for both a conservative intervention and damage evaluation (e.g., after a destructive event).

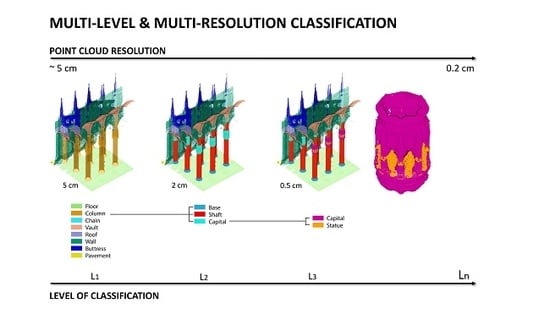

4. Developed Methodology

- The size of the dataset and the number of geometric features to be extracted make the computation challenging. The number of features can be contained by selecting only the essential ones; subsampling the acquired point cloud would help to handle the high number of points, but it would also reduce the level of detail visible in the dataset.

- The large number of semantic classes to be identified might induce misclassification issues: initial experiments have shown that with a higher number of classes a lower accuracy of the classification is achieved (Section 7.1.1 and Section 7.2.1).

- The full-resolution point cloud is subsampled at various geometric levels.

- Given manually annotated areas corresponding to the classes of interest of the first geometric level, a Random Forest (RF) model is trained and then all classes are predicted on the entire point cloud.

- Classification results from this first step are back interpolated (BI) onto a higher-resolution version of the dataset using a nearest neighbour algorithm. To do so, the class value assigned to each point is transferred to a certain number of neighbouring points evaluated inside a specific cluster; the number of nearest neighbours to be evaluated depends on the point cloud resolution: the higher the resolution, the higher the number of points in the neighbourhood.

- The next geometric level is considered with its new classes (e.g., columns are divided into base, shaft and capital) and a new classification procedure is applied.

- Classification results are again back interpolated on a higher resolution version of the point cloud until the full geometric resolution is reached.
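The subsampling and back-interpolation steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `voxel_subsample` and `back_interpolate` are hypothetical helper names, the subsampling keeps one point per voxel, the level-1 labels come from a simple threshold standing in for the RF prediction, and the label transfer is simplified to a brute-force search in which each higher-resolution point takes the class of its single nearest classified point. A real pipeline would more likely rely on a spatial index such as a k-d tree.

```python
import numpy as np


def voxel_subsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Keep one point per occupied voxel (hypothetical helper)."""
    # Integer voxel index of every point.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # return_index gives the first occurrence of each distinct voxel key.
    _, first_idx = np.unique(keys, axis=0, return_index=True)
    return points[np.sort(first_idx)]


def back_interpolate(low_pts, low_labels, high_pts, chunk=1024):
    """Give each high-resolution point the label of its nearest low-resolution point."""
    out = np.empty(len(high_pts), dtype=low_labels.dtype)
    low_sq = (low_pts ** 2).sum(axis=1)
    for start in range(0, len(high_pts), chunk):
        block = high_pts[start:start + chunk]
        # Squared distances via |a - b|^2 = |a|^2 - 2 a.b + |b|^2.
        d2 = (block ** 2).sum(axis=1)[:, None] - 2.0 * block @ low_pts.T + low_sq[None, :]
        out[start:start + chunk] = low_labels[d2.argmin(axis=1)]
    return out


# Toy data in metres: a "floor" (z close to 0) and a "wall" (x close to 0).
rng = np.random.default_rng(0)
n = 2000
floor = np.column_stack([rng.uniform(1, 5, n), rng.uniform(0, 5, n), rng.uniform(0, 0.01, n)])
wall = np.column_stack([rng.uniform(0, 0.01, n), rng.uniform(0, 5, n), rng.uniform(1, 5, n)])
full_cloud = np.vstack([floor, wall])

level1 = voxel_subsample(full_cloud, voxel_size=0.05)  # 5 cm level
# Stand-in for the classifier output at level 1: 0 = floor, 1 = wall.
level1_labels = (level1[:, 2] > 0.5).astype(np.int64)

full_labels = back_interpolate(level1, level1_labels, full_cloud)
print(len(full_cloud), "points subsampled to", len(level1))
print("points per class after back interpolation:", np.bincount(full_labels))
```

In a multi-level workflow the same transfer would be repeated at each level, with the transferred labels selecting the points (e.g., only the columns) that are passed to the next classifier.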

5. Semantic Classes and Classification Levels

5.1. Milan Cathedral

5.2. Pomposa Abbey

6. Data Processing

6.1. Geometric Features Extraction

- Anisotropy: allows the recognition of 3D elements such as columns, buttresses and spires over 2.5D elements such as walls and vaults.

- Planarity: highlights linear and planar items such as chains and floors, as well as their vertical counterparts such as column shafts and walls.

- Linearity: similarly to planarity, it helps in identifying linear structures.

- Surface variation: it emphasises changes in shape, making it possible, for example, to detect corners or edges.

- Sphericity: similarly to surface variation, it helps in identifying spherical and cylindrical elements, such as columns.

- Verticality: it points out vertical and horizontal surfaces allowing the recognition of walls and floors as well as column shafts.
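The features listed above are typically derived from the eigenvalues of the covariance matrix of each point's local neighbourhood. The sketch below uses the commonly used eigenvalue-based definitions, with eigenvalues ordered so that l1 >= l2 >= l3 and verticality taken as 1 minus the absolute z-component of the local normal. These formulas are an assumption here, since the text does not spell them out; `covariance_features` is a hypothetical helper, and a real pipeline would evaluate it for every point at one or more search radii.

```python
import numpy as np


def covariance_features(neighbourhood: np.ndarray) -> dict:
    """Eigenvalue-based features of one local neighbourhood (N x 3 array)."""
    centred = neighbourhood - neighbourhood.mean(axis=0)
    cov = centred.T @ centred / len(neighbourhood)
    # eigh returns eigenvalues in ascending order, so unpack as l3 <= l2 <= l1.
    eigvals, eigvecs = np.linalg.eigh(cov)
    l3, l2, l1 = np.maximum(eigvals, 0.0)
    normal = eigvecs[:, 0]  # eigenvector of the smallest eigenvalue
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
        "anisotropy": (l1 - l3) / l1,
        "surface_variation": l3 / (l1 + l2 + l3),
        "verticality": 1.0 - abs(normal[2]),
    }


rng = np.random.default_rng(0)
n = 500


def thin() -> np.ndarray:
    """Small measurement noise (2 mm standard deviation) along one axis."""
    return rng.normal(0.0, 0.002, n)


# Synthetic neighbourhoods in metres: horizontal patch, vertical patch, vertical rod.
floor = np.column_stack([rng.uniform(0, 1, n), rng.uniform(0, 1, n), thin()])
wall = np.column_stack([rng.uniform(0, 1, n), thin(), rng.uniform(0, 1, n)])
shaft = np.column_stack([thin(), thin(), rng.uniform(0, 3, n)])

for name, pts in [("floor", floor), ("wall", wall), ("thin shaft", shaft)]:
    feats = covariance_features(pts)
    print(name, {k: round(float(v), 2) for k, v in feats.items()})
```

On these toy shapes the floor and the wall both score high on planarity but differ in verticality, while the thin shaft scores high on linearity, which is the kind of separation the feature descriptions above rely on.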

6.2. Training and Classification
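As a rough illustration of the training and prediction step summarised in Section 4 (an RF model trained on manually annotated areas and then applied to the entire point cloud), the sketch below uses scikit-learn's `RandomForestClassifier`. The choice of library, the hyperparameters, the two toy classes and the synthetic feature values are all assumptions made for the example and are not taken from the paper.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
feature_names = ["anisotropy", "planarity", "linearity",
                 "surface_variation", "sphericity", "verticality"]

# Synthetic stand-in for per-point geometric features (not real survey data):
# two toy classes whose feature values cluster around different means.
n_points = 2000
labels_true = rng.integers(0, 2, n_points)  # 0 and 1 are placeholder classes
features = rng.normal(loc=0.3 + 0.4 * labels_true[:, None], scale=0.05,
                      size=(n_points, len(feature_names)))

# Pretend that only a small part of the cloud was manually annotated.
annotated = rng.choice(n_points, size=200, replace=False)

# Hyperparameters are illustrative defaults, not the authors' settings.
model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(features[annotated], labels_true[annotated])

# Predict a class for every point of the cloud.
predicted = model.predict(features)
accuracy = (predicted == labels_true).mean()
print(f"accuracy on all points: {accuracy:.3f}")

# Feature importances hint at which geometric features drive the prediction.
for name, importance in zip(feature_names, model.feature_importances_):
    print(f"{name:>18}: {importance:.2f}")
```

Inspecting `feature_importances_` is one possible way to keep only the essential features, in line with the computational concern raised in Section 4.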

7. Results and Discussion

7.1. Milan Cathedral

7.1.1. One-Step Classification

7.1.2. MLMR Classification

7.2. Pomposa Abbey

7.2.1. One-Step Classification

7.2.2. MLMR Classification

8. Conclusions

- The use of machine learning techniques to quickly classify large and complex 3D architectures without the need for large training datasets.

- The definition of general rules (e.g., identification of geometric features), replicable in various heritage scenarios, in terms of relations among classification levels, point cloud resolution and minimum/maximum feature search radii.

- The hierarchical segmentation (down to single instances) of 3D surveying data, which could facilitate HBIM processes.

- The speed of the process: once training and validation sets are defined, the prediction to the entire dataset is achieved in a few minutes.

- The objectivity of the classification procedure: objective rules are applied uniformly throughout the entire process, making the process repeatable and independent from subjective choices of an operator.

- Better investigation of the relationship between classification levels, point cloud resolution and feature search radii: it is necessary to understand whether automatic classification with specific features can be generalised with respect to data density, or whether it is case dependent.

- Verification of the usefulness of the classification process for the scan-to-BIM process, checking if the extracted semantic structures and instances facilitate the preparatory work for the construction of BIM models.

- Checking if the semantically segmented point clouds could facilitate the generation of polygonal meshes.

- Extension of the instance segmentation, not only to repeated and separated elements but also to those classes that present differences in composition or material, even if contiguous and similar in shape (e.g., walls).

- Creation of a more user-friendly classification framework to be used by non-experts in the sector.

- Testing the possibility to automatically process the data acquired on-site with mobile scanner instruments for real-time monitoring applications.

- Improvement of the classification detail by integrating information from images, which generally feature a higher resolution and hence allow a better identification and distinction of small elements (e.g., classification of each single marble block composing the Milan Cathedral).

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Architectural Elements | Method | # Acquisitions | # 3D Points | Mean Resol. (mm) | Subsampling (cm) |
|---|---|---|---|---|---|
| External Areas | Photogrammetry, Leica RTC360 | 16,080 photos, 54 scans | 1.5 billion | 5 | 2–5 |
| Internal Spaces | Leica HDS 7000, Leica C10 | 238 scans | 2.1 billion | 5 | 2–5 |

| Architectural Elements | Instruments | # Acquisitions | # 3D Points | Mean Resol. (mm) | Subsampling (cm) |
|---|---|---|---|---|---|
| External Areas | Leica C10 | 21 | 161 million | 5, 20 | 2, 5 |
| Internal Spaces | Faro Focus X 120 | 31 | 580 million | 10 | 2, 5 |

| | Level 1: Milan Cathedral (Exteriors) | Level 1: Pomposa Abbey | Level 2: Milan Cathedral (Pillars) | Level 2: Pomposa Abbey (Columns) | Level 3: Milan Cathedral (Capitals) | Level 3: Pomposa Abbey (Central Roof) |
|---|---|---|---|---|---|---|
| # of Training Points | 2,580,368 | 115,444 | 1,011,994 | 21,389 | 3,309,947 | 62,258 |
| Training Time (s) | 363 | 5 | 17 | 0.65 | 142 | 4.25 |
| # of Classified Points | 12,680,681 | 1,102,569 | 14,645,986 | 47,006 | 47,651,835 | 832,549 |
| Classification Time (s) | 43.5 | 2.7 | 12.62 | 0.15 | 174.89 | 29.8 |

| | Level 1 (5 cm) | Level 2 (2 cm) | Level 3 (0.5 cm) |
|---|---|---|---|
| F1 (%) | 93.75 | 99.35 | 91.80 |

| | Chains | Choir | Walls | Floor | Columns | Vaults | AVERAGE | WEIGHTED AVERAGE |
|---|---|---|---|---|---|---|---|---|
| PREC. (%) | 67.52 | 85.19 | 94.08 | 98.03 | 90.15 | 98.28 | 88.88 | 93.05 |
| RECALL (%) | 89.96 | 85.41 | 93.42 | 94.37 | 94.85 | 94.54 | 92.09 | 93.05 |
| F1 (%) | 77.14 | 85.30 | 93.75 | 96.17 | 92.45 | 96.37 | 90.46 | 93.02 |
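The relation between the rows of the table above can be checked with a few lines of arithmetic. The sketch assumes the standard definition of F1 as the harmonic mean of precision and recall and an unweighted mean for the AVERAGE column; `f1_from_pr` is a hypothetical helper name. The WEIGHTED AVERAGE column cannot be reproduced here because it depends on the number of points per class, which the table does not report.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Precision per class (%), copied from the table above in column order:
# Chains, Choir, Walls, Floor, Columns, Vaults.
precision = [67.52, 85.19, 94.08, 98.03, 90.15, 98.28]

# Unweighted (macro) mean, to compare with the AVERAGE column (88.88).
macro_precision = sum(precision) / len(precision)
print(f"macro precision: {macro_precision:.3f}")

# F1 for the Walls class from its precision and recall (table value: 93.75).
walls_f1 = f1_from_pr(94.08, 93.42)
print(f"Walls F1: {walls_f1:.2f}")
```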

| | Buttresses | Walls | Street | Roofs | AVERAGE | WEIGHTED AVERAGE |
|---|---|---|---|---|---|---|
| PREC. (%) | 92.75 | 97.34 | 99.66 | 99.03 | 97.19 | 96.44 |
| RECALL (%) | 92.62 | 97.47 | 99.06 | 98.42 | 96.89 | 96.44 |
| F1 (%) | 92.68 | 97.40 | 99.36 | 98.72 | 97.04 | 96.44 |

| | Level 1 (5 cm) | Level 2 (2 cm) | Level 3 (2 cm) |
|---|---|---|---|
| F1 (%) | 95.1 | 97.8 | 94.6 |

| | Purlin | Panel | Wall Plate | Tie Beam | Principal Rafter (Right) | Principal Rafter (Left) | Crown Post | AVERAGE | WEIGHTED AVERAGE |
|---|---|---|---|---|---|---|---|---|---|
| PREC. (%) | 76.66 | 86.99 | 95.71 | 97.21 | 90.11 | 94.54 | 86.44 | 89.67 | 88.05 |
| RECALL (%) | 61.53 | 92.29 | 95.09 | 97.64 | 92.10 | 93.12 | 89.41 | 88.74 | 89.04 |
| F1 (%) | 68.27 | 89.56 | 95.40 | 97.43 | 91.09 | 93.82 | 87.90 | 89.20 | 88.39 |

| | Panel | Purlin | Crown Post | Principal Rafter (Left) | Tie Beam | Principal Rafter (Right) | AVERAGE | WEIGHTED AVERAGE |
|---|---|---|---|---|---|---|---|---|
| PREC. (%) | 96.86 | 97.13 | 83.87 | 67.36 | 99.57 | 87.52 | 88.72 | 95.77 |
| RECALL (%) | 98.47 | 93.70 | 92.24 | 68.63 | 99.36 | 87.87 | 90.05 | 95.79 |
| F1 (%) | 97.66 | 95.38 | 87.86 | 67.99 | 99.46 | 87.70 | 89.34 | 95.77 |

| | Panel | Principal Rafter | Purlin | AVERAGE | WEIGHTED AVERAGE |
|---|---|---|---|---|---|
| PREC. (%) | 97.25 | 98.41 | 97.70 | 97.79 | 97.46 |
| RECALL (%) | 100.00 | 96.16 | 85.23 | 93.80 | 97.45 |
| F1 (%) | 98.61 | 97.27 | 91.04 | 95.64 | 97.38 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Teruggi, S.; Grilli, E.; Russo, M.; Fassi, F.; Remondino, F. A Hierarchical Machine Learning Approach for Multi-Level and Multi-Resolution 3D Point Cloud Classification. Remote Sens. 2020, 12, 2598. https://doi.org/10.3390/rs12162598
