Forest Vertical Structure Mapping Using Two-Seasonal Optic Images and LiDAR DSM Acquired from UAV Platform through Random Forest, XGBoost, and Support Vector Machine Approaches

Abstract

1. Introduction

2. Study Area and Data

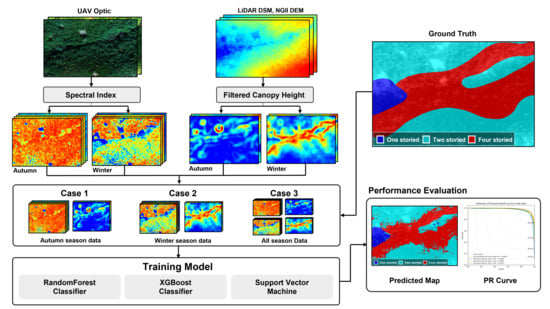

3. Methodology

3.1. Generation of the Normalized Input Data

3.1.1. Spectral Index Maps

3.1.2. Canopy Height Maps

3.2. Classification with Machine Learning Techniques

3.2.1. Random Forest

3.2.2. XGBoost

3.2.3. Support Vector Machine

3.3. Performance Evaluation

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Band | Center Wavelength | Bandwidth |
|---|---|---|
| Blue | 475 nm | 32 nm |
| Green | 560 nm | 27 nm |
| Red | 668 nm | 16 nm |
| Red Edge | 717 nm | 12 nm |
| Near infrared | 842 nm | 57 nm |

| Name | Acronym | Equation |
|---|---|---|
| Normalized Difference Vegetation Index | NDVI | |
| Green Normalized Difference Vegetation Index | GNDVI | |
| Normalized Difference Red Edge Index | NDRE | |
| Structure Insensitive Pigment Index | SIPI | |
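The equation column of the index table did not survive extraction. As a minimal sketch, the four indices can be computed from the five bands listed above using their commonly cited definitions. These formulas are an assumption and may differ in detail from the ones used in the study. The function names and the reflectance values are hypothetical and chosen only for illustration.

```python
# Commonly cited definitions (assumed, not taken from the source table):
#   NDVI  = (NIR - Red)     / (NIR + Red)
#   GNDVI = (NIR - Green)   / (NIR + Green)
#   NDRE  = (NIR - RedEdge) / (NIR + RedEdge)
#   SIPI  = (NIR - Blue)    / (NIR - Red)

def normalized_difference(a, b):
    """Return (a - b) / (a + b)."""
    return (a - b) / (a + b)

def ndvi(nir, red):
    return normalized_difference(nir, red)

def gndvi(nir, green):
    return normalized_difference(nir, green)

def ndre(nir, red_edge):
    return normalized_difference(nir, red_edge)

def sipi(nir, blue, red):
    return (nir - blue) / (nir - red)

# Hypothetical surface reflectances for one vegetated pixel.
pixel = {"blue": 0.05, "green": 0.10, "red": 0.10, "red_edge": 0.30, "nir": 0.50}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "GNDVI": gndvi(pixel["nir"], pixel["green"]),
    "NDRE": ndre(pixel["nir"], pixel["red_edge"]),
    "SIPI": sipi(pixel["nir"], pixel["blue"], pixel["red"]),
}
print(indices)
```

The functions use only arithmetic operators, so they should also apply element-wise to NumPy arrays holding whole band rasters. Pixels where a denominator is zero would need to be masked first.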

| Model | Hyperparameter | Case 1 | Case 2 | Case 3 |
|---|---|---|---|---|
| RF | max_depth | 962 | 137 | 979 |
| RF | n_estimators | 319 | 465 | 292 |
| XGBoost | learning_rate | 0.13 | 0.39 | 0.10 |
| XGBoost | max_depth | 581 | 139 | 765 |
| XGBoost | n_estimators | 438 | 463 | 583 |
| SVM | C | 8506 | 5517 | 7030 |
| SVM | gamma | 0.97 | 0.86 | 0.90 |
| SVM | kernel | rbf | rbf | rbf |
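The hyperparameter names in the table match keyword arguments of scikit-learn's `RandomForestClassifier` and `SVC` and of XGBoost's `XGBClassifier`. The following is a sketch of how the tabulated values could be passed to those classes. That the study used these particular libraries is an assumption. The `HYPERPARAMS` dictionary and the `build_model` helper are hypothetical names introduced here.

```python
# Tabulated hyperparameters, keyed by model and case.
HYPERPARAMS = {
    "RF": {
        "Case 1": {"max_depth": 962, "n_estimators": 319},
        "Case 2": {"max_depth": 137, "n_estimators": 465},
        "Case 3": {"max_depth": 979, "n_estimators": 292},
    },
    "XGBoost": {
        "Case 1": {"learning_rate": 0.13, "max_depth": 581, "n_estimators": 438},
        "Case 2": {"learning_rate": 0.39, "max_depth": 139, "n_estimators": 463},
        "Case 3": {"learning_rate": 0.10, "max_depth": 765, "n_estimators": 583},
    },
    "SVM": {
        "Case 1": {"C": 8506, "gamma": 0.97, "kernel": "rbf"},
        "Case 2": {"C": 5517, "gamma": 0.86, "kernel": "rbf"},
        "Case 3": {"C": 7030, "gamma": 0.90, "kernel": "rbf"},
    },
}

def build_model(model, case):
    """Instantiate an unfitted classifier for one model/case pair.

    Imports are deferred so that only the library actually requested
    needs to be installed (assumed: scikit-learn and xgboost).
    """
    params = HYPERPARAMS[model][case]
    if model == "RF":
        from sklearn.ensemble import RandomForestClassifier
        return RandomForestClassifier(**params)
    if model == "XGBoost":
        from xgboost import XGBClassifier
        return XGBClassifier(**params)
    if model == "SVM":
        from sklearn.svm import SVC
        return SVC(**params)
    raise ValueError(f"unknown model: {model}")
```

For example, `build_model("SVM", "Case 3")` would return an unfitted RBF-kernel `SVC` with `C=7030` and `gamma=0.90`, which can then be trained with `fit(X, y)` on the input features for that case.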

| Model | Case | Evaluation Metric | One-Storied | Two-Storied | Four-Storied | Macro Average |
|---|---|---|---|---|---|---|
| RF | Case 1 | Precision | 0.76 | 0.78 | 0.73 | 0.76 |
| RF | Case 1 | Recall | 0.28 | 0.82 | 0.74 | 0.61 |
| RF | Case 1 | False Alarm Rate | 0.24 | 0.22 | 0.27 | 0.24 |
| RF | Case 1 | F1 score | 0.41 | 0.80 | 0.74 | 0.65 |
| RF | Case 2 | Precision | 0.82 | 0.89 | 0.84 | 0.85 |
| RF | Case 2 | Recall | 0.63 | 0.87 | 0.87 | 0.79 |
| RF | Case 2 | False Alarm Rate | 0.18 | 0.11 | 0.16 | 0.15 |
| RF | Case 2 | F1 score | 0.71 | 0.88 | 0.85 | 0.81 |
| RF | Case 3 | Precision | 0.94 | 0.93 | 0.88 | 0.92 |
| RF | Case 3 | Recall | 0.81 | 0.92 | 0.91 | 0.88 |
| RF | Case 3 | False Alarm Rate | 0.06 | 0.07 | 0.12 | 0.08 |
| RF | Case 3 | F1 score | 0.87 | 0.92 | 0.90 | 0.90 |
| XGBoost | Case 1 | Precision | 0.68 | 0.79 | 0.73 | 0.73 |
| XGBoost | Case 1 | Recall | 0.40 | 0.81 | 0.75 | 0.65 |
| XGBoost | Case 1 | False Alarm Rate | 0.32 | 0.21 | 0.27 | 0.27 |
| XGBoost | Case 1 | F1 score | 0.50 | 0.80 | 0.74 | 0.68 |
| XGBoost | Case 2 | Precision | 0.79 | 0.89 | 0.84 | 0.84 |
| XGBoost | Case 2 | Recall | 0.69 | 0.87 | 0.88 | 0.81 |
| XGBoost | Case 2 | False Alarm Rate | 0.21 | 0.11 | 0.16 | 0.16 |
| XGBoost | Case 2 | F1 score | 0.74 | 0.88 | 0.86 | 0.83 |
| XGBoost | Case 3 | Precision | 0.94 | 0.94 | 0.91 | 0.93 |
| XGBoost | Case 3 | Recall | 0.90 | 0.93 | 0.92 | 0.92 |
| XGBoost | Case 3 | False Alarm Rate | 0.06 | 0.06 | 0.09 | 0.07 |
| XGBoost | Case 3 | F1 score | 0.92 | 0.94 | 0.92 | 0.92 |
| SVM | Case 1 | Precision | 0.62 | 0.76 | 0.71 | 0.70 |
| SVM | Case 1 | Recall | 0.29 | 0.80 | 0.71 | 0.60 |
| SVM | Case 1 | False Alarm Rate | 0.38 | 0.24 | 0.29 | 0.30 |
| SVM | Case 1 | F1 score | 0.40 | 0.78 | 0.71 | 0.63 |
| SVM | Case 2 | Precision | 0.68 | 0.88 | 0.81 | 0.79 |
| SVM | Case 2 | Recall | 0.69 | 0.84 | 0.86 | 0.79 |
| SVM | Case 2 | False Alarm Rate | 0.32 | 0.12 | 0.19 | 0.21 |
| SVM | Case 2 | F1 score | 0.68 | 0.85 | 0.83 | 0.79 |
| SVM | Case 3 | Precision | 0.85 | 0.92 | 0.89 | 0.88 |
| SVM | Case 3 | Recall | 0.92 | 0.91 | 0.89 | 0.91 |
| SVM | Case 3 | False Alarm Rate | 0.15 | 0.08 | 0.11 | 0.12 |
| SVM | Case 3 | F1 score | 0.88 | 0.91 | 0.89 | 0.90 |
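The columns of the results table are related arithmetically. In every row the tabulated false alarm rate equals one minus the precision, the F1 score is the harmonic mean of precision and recall, and the macro average is the unweighted mean over the three classes. The sketch below checks these relations on the RF, Case 1 rows. The helper names are hypothetical. Because the inputs are the two-decimal values from the table, the recomputed F1 scores can differ from the tabulated ones in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def macro_average(values):
    """Unweighted mean over classes."""
    return sum(values) / len(values)

# RF, Case 1 rows: one-storied, two-storied, four-storied.
precision = [0.76, 0.78, 0.73]
recall = [0.28, 0.82, 0.74]

# In the table, false alarm rate = 1 - precision for every class.
false_alarm = [round(1 - p, 2) for p in precision]

f1 = [f1_score(p, r) for p, r in zip(precision, recall)]

macro_precision = macro_average(precision)
macro_recall = macro_average(recall)
macro_f1 = macro_average(f1)

print("false alarm rate:", false_alarm)
print("F1 per class:", [round(v, 3) for v in f1])
print("macro P/R/F1:", round(macro_precision, 3), round(macro_recall, 3), round(macro_f1, 3))
```

The low one-storied recall (0.28) pulls that class's F1 well below its precision, which is why the macro-averaged F1 for this case sits below the macro-averaged precision.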

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, J.-W.; Yoon, Y.-W.; Baek, W.-K.; Jung, H.-S. Forest Vertical Structure Mapping Using Two-Seasonal Optic Images and LiDAR DSM Acquired from UAV Platform through Random Forest, XGBoost, and Support Vector Machine Approaches. Remote Sens. 2021, 13, 4282. https://doi.org/10.3390/rs13214282
