Evaluation of Decision Fusions for Classifying Karst Wetland Vegetation Using One-Class and Multi-Class CNN Models with High-Resolution UAV Images

Abstract

1. Introduction

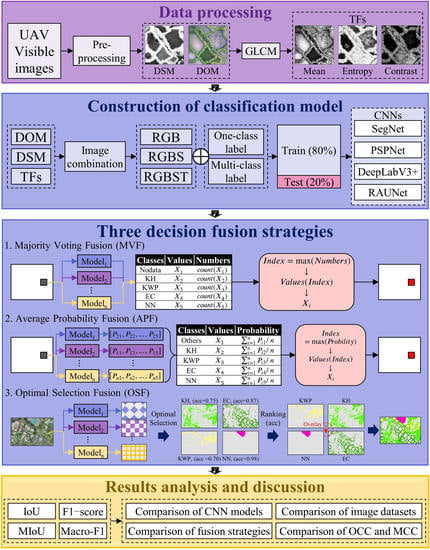

- We constructed three UAV image datasets by combining DOM, DSM, and TFs to explore the impact of different feature combinations on karst wetland vegetation mapping.

- We constructed several OCC and MCC models based on four CNN algorithms (SegNet, PSPNet, DeepLabV3+, and RAUNet) and compared the classification results of OCC and MCC models to demonstrate the advantages of OCC for classifying karst wetland vegetation communities.

- We used three decision fusion strategies (Majority Voting Fusion, Average Probability Fusion, and Optimal Selection Fusion) to fuse multiple OCC and MCC models, respectively, and evaluated the identification abilities of one-class FCMs and multi-class FCMs to demonstrate the advantages of multiple OCC models’ fusion for karst wetland vegetation mapping.

- We compared the differences in classification accuracy between FCMs and single CNN models to evaluate the effects of different decision fusion strategies on the classification of karst wetland vegetation.
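As a rough illustration of two of the fusion strategies named above, the following sketch fuses per-pixel predictions from several models. It assumes each model outputs either a flat list of integer class labels (for Majority Voting Fusion) or a flat list of per-class probability vectors (for Average Probability Fusion). The function names, the data layout, and the tie-breaking rule are assumptions made for illustration, not the authors' implementation. Optimal Selection Fusion is not sketched because its selection rule is not described in this section.

```python
from collections import Counter


def majority_vote(label_maps):
    """Fuse per-pixel labels from several models by majority vote.

    label_maps[m][p] is the label that model m assigns to pixel p.
    Ties go to the label seen first, i.e. the earliest model in the list
    (an assumed tie-breaking rule).
    """
    fused = []
    for votes in zip(*label_maps):
        counts = Counter(votes)
        fused.append(max(counts, key=counts.get))
    return fused


def average_probability(prob_maps):
    """Fuse per-pixel class probabilities by averaging across models.

    prob_maps[m][p][c] is the probability that model m assigns to
    class c at pixel p. The fused label is the class with the highest
    mean probability.
    """
    n_models = len(prob_maps)
    fused = []
    for per_model in zip(*prob_maps):
        mean = [sum(column) / n_models for column in zip(*per_model)]
        fused.append(max(range(len(mean)), key=mean.__getitem__))
    return fused


# Three hypothetical models, four pixels, hard labels.
labels = [[0, 1, 1, 2], [0, 1, 2, 2], [1, 1, 2, 0]]
print(majority_vote(labels))

# Three hypothetical models, two pixels, two classes, soft outputs.
probs = [
    [[0.9, 0.1], [0.4, 0.6]],
    [[0.4, 0.6], [0.4, 0.6]],
    [[0.4, 0.6], [0.9, 0.1]],
]
print(average_probability(probs))
```

In the second example, two of the three models prefer class 1 at each pixel, yet the averaged probabilities favour class 0. This is the kind of case in which the two strategies can disagree.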

2. Materials and Methods

2.1. Study Area

2.2. Data Source

2.2.1. UAV Data Acquisition and Processing

2.2.2. Field Investigation and Semantic Label Creation

2.3. Methods

2.3.1. CNNs-Based Wetland Vegetation Classification

2.3.2. One-Class and Multi-Class Classification Models of Karst Wetland Vegetation Using CNN Algorithms and UAV Images

2.3.3. Fusion Classification Models Based on Three Fusion Strategies

2.3.4. Accuracy Assessment

3. Results

3.1. One-Class and Multi-Class Classifications Based on CNN Models

3.2. Fusion of CNN-Based Classification

- For KH, the difference values of IoU and F1-score were both greater than 0 when using the RGBS image dataset. The RGBS image dataset combined with the APF strategy produced the maximum difference values of IoU and F1-score (22.09% and 8.41%, respectively), while the RGB image dataset combined with the MVF strategy produced the minimum difference values (−10.99% and −21.71%, respectively). These results indicate that OC-FCM identified KH better than MC-FCM when using the RGBS image dataset, and that the pixel-level gap between the two was largest under the APF strategy. With the RGB image dataset and the MVF strategy, by contrast, MC-FCM identified KH better than OC-FCM.

- For KWP, the difference values of IoU and F1-score were both greater than 0 in all cases, and the RGBS image dataset combined with the APF strategy again produced the maximum difference values of IoU and F1-score (16.88% and 6.62%, respectively). These results indicate that OC-FCM identified KWP better than MC-FCM, and that the gap between the two was largest when using the RGBS image dataset and the APF strategy.

- For EC, the difference values of IoU and F1-score were both greater than 0 when using the RGB image dataset. With this dataset, the APF strategy produced the maximum difference value of IoU (3.31%) and the MVF strategy produced the maximum difference value of F1-score (5.22%). The RGBST image dataset combined with the OSF strategy produced the minimum difference values of IoU and F1-score (−3.9% and −0.15%, respectively). These results indicate that OC-FCM identified EC better than MC-FCM when using the RGB image dataset, with the largest pixel-level and attribute-level gaps under the APF and MVF strategies, respectively. With the RGBST image dataset and the OSF strategy, MC-FCM identified EC better than OC-FCM.

- For NN, as for KWP, the difference value of IoU was greater than 0 in all cases. The RGB image dataset combined with the MVF strategy gave the largest difference value of IoU (6.42%), while the RGBS image dataset combined with either the MVF or the APF strategy gave the largest difference value of F1-score (1.96%). These results indicate that OC-FCM outperformed MC-FCM in identifying NN at the pixel level, with the largest pixel-level gap when using the RGB image dataset and the MVF strategy. At the attribute level, OC-FCM outperformed MC-FCM when using the RGBS image dataset with the MVF or APF strategy.
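The difference values discussed above can be read as the OC-FCM score minus the MC-FCM score for one class, expressed in percentage points, so that a positive value favours OC-FCM. The sketch below computes IoU and F1-score for a single class from binary masks and then takes that difference. The flat 0/1 mask layout and the function names are assumptions for illustration. The exact evaluation protocol, including how the attribute-level F1-score is computed, is not given in this section.

```python
def iou_and_f1(pred, truth):
    """Return (IoU, F1) for one class from flat binary masks (1 = class present)."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    return iou, f1


def difference_values(oc_pred, mc_pred, truth):
    """OC-FCM minus MC-FCM, in percentage points, for IoU and F1-score."""
    oc_iou, oc_f1 = iou_and_f1(oc_pred, truth)
    mc_iou, mc_f1 = iou_and_f1(mc_pred, truth)
    return (oc_iou - mc_iou) * 100, (oc_f1 - mc_f1) * 100


# Toy masks for one vegetation class (hypothetical values).
truth = [1, 1, 1, 1, 0, 0, 0, 0]
oc_fcm = [1, 1, 1, 0, 0, 0, 0, 0]
mc_fcm = [1, 1, 0, 0, 1, 0, 0, 0]
d_iou, d_f1 = difference_values(oc_fcm, mc_fcm, truth)
print(f"IoU difference: {d_iou:.2f}%, F1 difference: {d_f1:.2f}%")
```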

3.3. Fusion of Different Images Datasets Classifications

- For KH, the difference values of IoU were all greater than 0, while the difference values of the F1-score were also greater than 0 when using the SegNet and PSPNet algorithms. Among them, the SegNet algorithm combined with the OSF strategy resulted in the difference values of IoU and F1-score reaching the maximum of 18.81% and 10.36%, respectively.

- For KWP, OC-FCM identified the class better than MC-FCM when using the MVF and APF strategies, and the difference values of IoU and F1-score reached their maxima (15.11% and 5.06%, respectively) with the SegNet algorithm and the APF strategy.

- For EC, the variation trends of the difference values of F1-score and IoU were similar (the difference values were greater than 0), where the RAUNet algorithm combined with the OSF strategy resulted in the maximum difference values of both F1-score and IoU, which were 2.31% and 9.90%, respectively.

- For NN, the difference values of IoU were all greater than 0, and the RAUNet algorithm combined with the APF strategy produced the maximum difference value of IoU (8.89%). In the attribute-level evaluation, the difference value of F1-score fell below 0 in two cases (the SegNet algorithm combined with the MVF strategy and the RAUNet algorithm combined with the OSF strategy) and reached its maximum of 1.96% in two cases (the RAUNet algorithm combined with the MVF or the APF strategy).

3.4. Fusion of CNNs-Based and Image Datasets-Based Classifications

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Training Curve

| Image Datasets | Combination | Descriptions |
|---|---|---|
| RGB | DOM | Blue, Green, and Red |
| RGBS | DOM + DSM | Blue, Green, Red, and DSM |
| RGBST | DOM + DSM + TFs | Blue, Green, Red, DSM, Mean, Contrast, and Entropy |
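The band composition in the table above determines the number of input channels each model receives (3, 4, and 7). A minimal sketch of that bookkeeping follows. The dictionary layout and the helper names `band_names` and `stack_pixel` are hypothetical, and the band order simply follows the order listed in the table.

```python
# Bands contributed by each data source, in the order listed in the table.
SOURCE_BANDS = {
    "DOM": ["Blue", "Green", "Red"],
    "DSM": ["DSM"],
    "TFs": ["Mean", "Contrast", "Entropy"],
}

# Data sources combined in each image dataset.
DATASETS = {
    "RGB": ["DOM"],
    "RGBS": ["DOM", "DSM"],
    "RGBST": ["DOM", "DSM", "TFs"],
}


def band_names(dataset):
    """Return the ordered list of bands that make up an image dataset."""
    return [band for source in DATASETS[dataset] for band in SOURCE_BANDS[source]]


def stack_pixel(dataset, values_by_source):
    """Concatenate per-source values for one pixel into a feature vector."""
    vector = []
    for source in DATASETS[dataset]:
        values = values_by_source[source]
        if len(values) != len(SOURCE_BANDS[source]):
            raise ValueError(f"{source} expects {len(SOURCE_BANDS[source])} values")
        vector.extend(values)
    return vector


for name in DATASETS:
    print(name, len(band_names(name)), band_names(name))
```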

| Classes | KRL | KH | PF | KWP | EC | NN | BSA | Total |
|---|---|---|---|---|---|---|---|---|
| Number of samples | 50 | 45 | 15 | 55 | 35 | 25 | 15 | 240 |
| Number of pixels | 3,508,776 | 3,177,450 | 530,202 | 4,420,458 | 1,592,524 | 591,233 | 94,083 | 13,887,251 |

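| Models | Groups | Algorithms | Image Datasets | Scenarios |
|---|---|---|---|---|
| One-class classification | I | SegNet | RGB | 1 |
| One-class classification | I | SegNet | RGBS | 2 |
| One-class classification | I | SegNet | RGBST | 3 |
| One-class classification | II | PSPNet | RGB | 4 |
| One-class classification | II | PSPNet | RGBS | 5 |
| One-class classification | II | PSPNet | RGBST | 6 |
| One-class classification | III | DeepLabV3+ | RGB | 7 |
| One-class classification | III | DeepLabV3+ | RGBS | 8 |
| One-class classification | III | DeepLabV3+ | RGBST | 9 |
| One-class classification | IV | RAUNet | RGB | 10 |
| One-class classification | IV | RAUNet | RGBS | 11 |
| One-class classification | IV | RAUNet | RGBST | 12 |
| Multi-class classification | V | SegNet | RGB | 13 |
| Multi-class classification | V | SegNet | RGBS | 14 |
| Multi-class classification | V | SegNet | RGBST | 15 |
| Multi-class classification | VI | PSPNet | RGB | 16 |
| Multi-class classification | VI | PSPNet | RGBS | 17 |
| Multi-class classification | VI | PSPNet | RGBST | 18 |
| Multi-class classification | VII | DeepLabV3+ | RGB | 19 |
| Multi-class classification | VII | DeepLabV3+ | RGBS | 20 |
| Multi-class classification | VII | DeepLabV3+ | RGBST | 21 |
| Multi-class classification | VIII | RAUNet | RGB | 22 |
| Multi-class classification | VIII | RAUNet | RGBS | 23 |
| Multi-class classification | VIII | RAUNet | RGBST | 24 |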
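The 24 scenarios in the table above are the full cross of two model types, four CNN algorithms, and three image datasets. The grid can be enumerated programmatically, for example to drive a training loop. The sketch below is one hypothetical way to do so. The `build_scenarios` helper and the dictionary keys are illustrative names, not part of the original workflow.

```python
from itertools import product

MODELS = ["One-class classification", "Multi-class classification"]
ALGORITHMS = ["SegNet", "PSPNet", "DeepLabV3+", "RAUNet"]
DATASETS = ["RGB", "RGBS", "RGBST"]
GROUPS = ["I", "II", "III", "IV", "V", "VI", "VII", "VIII"]


def build_scenarios():
    """Enumerate scenarios 1-24 in the same order as the table."""
    rows = []
    combos = product(MODELS, ALGORITHMS, DATASETS)
    for number, (model, algorithm, dataset) in enumerate(combos, start=1):
        # Each group covers one (model, algorithm) pair, i.e. three datasets.
        group = GROUPS[(number - 1) // len(DATASETS)]
        rows.append({
            "scenario": number,
            "model": model,
            "group": group,
            "algorithm": algorithm,
            "dataset": dataset,
        })
    return rows


for row in build_scenarios():
    print(row["scenario"], row["group"], row["algorithm"], row["dataset"])
```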

| Strategies | Models | RGB (Macro-F1/MIoU) | RGBS (Macro-F1/MIoU) | RGBST (Macro-F1/MIoU) |
|---|---|---|---|---|
| MVF | OC-FCM | 0.8949/0.6965 | 0.9595/0.7788 | 0.9684/0.7653 |
| MVF | MC-FCM | 0.9340/0.6882 | 0.9463/0.6949 | 0.9433/0.7119 |
| APF | OC-FCM | 0.9070/0.7063 | 0.9650/0.7864 | 0.9684/0.7751 |
| APF | MC-FCM | 0.9114/0.6712 | 0.9161/0.6782 | 0.9335/0.6970 |
| OSF | OC-FCM | 0.9039/0.7185 | 0.9640/0.7894 | 0.9390/0.7630 |
| OSF | MC-FCM | 0.9255/0.6903 | 0.9287/0.7017 | 0.9339/0.7269 |

| Strategies | Models | SegNet (Macro-F1/MIoU) | PSPNet (Macro-F1/MIoU) | DeepLabV3+ (Macro-F1/MIoU) | RAUNet (Macro-F1/MIoU) |
|---|---|---|---|---|---|
| MVF | OC-FCM | 0.9516/0.7490 | 0.9595/0.7872 | 0.9604/0.7592 | 0.9337/0.7521 |
| MVF | MC-FCM | 0.9184/0.6692 | 0.9287/0.7107 | 0.9350/0.6758 | 0.9329/0.6894 |
| APF | OC-FCM | 0.9617/0.7498 | 0.9613/0.7862 | 0.9597/0.7605 | 0.9432/0.7539 |
| APF | MC-FCM | 0.9158/0.6556 | 0.9314/0.7091 | 0.9480/0.6748 | 0.9411/0.6804 |
| OSF | OC-FCM | 0.9685/0.7630 | 0.9640/0.7894 | 0.9496/0.7545 | 0.9380/0.7753 |
| OSF | MC-FCM | 0.9344/0.6748 | 0.9384/0.7112 | 0.9451/0.6965 | 0.9112/0.7087 |

| Strategies | Models | |||

|---|---|---|---|---|

| OC-FCM | MC-FCM | |||

| Macro-F1 | MIoU | Macro-F1 | MIoU | |

| MVF | 0.9660 | 0.7683 | 0.9441 | 0.6929 |

| APF | 0.9660 | 0.7719 | 0.9406 | 0.6892 |

| OSF | 0.9640 | 0.7901 | 0.9343 | 0.7271 |
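To make the gap between the two model families in the table above easier to read, the following sketch computes OC-FCM minus MC-FCM for each strategy. The scores are copied from the table. The `gains` helper and the dictionary layout are illustrative assumptions.

```python
# Scores copied from the table above: (Macro-F1, MIoU) per model family.
SCORES = {
    "MVF": {"OC-FCM": (0.9660, 0.7683), "MC-FCM": (0.9441, 0.6929)},
    "APF": {"OC-FCM": (0.9660, 0.7719), "MC-FCM": (0.9406, 0.6892)},
    "OSF": {"OC-FCM": (0.9640, 0.7901), "MC-FCM": (0.9343, 0.7271)},
}


def gains(scores):
    """Return {strategy: (Macro-F1 gain, MIoU gain)} of OC-FCM over MC-FCM."""
    result = {}
    for strategy, by_model in scores.items():
        oc_f1, oc_miou = by_model["OC-FCM"]
        mc_f1, mc_miou = by_model["MC-FCM"]
        result[strategy] = (round(oc_f1 - mc_f1, 4), round(oc_miou - mc_miou, 4))
    return result


for strategy, (f1_gain, miou_gain) in gains(SCORES).items():
    print(f"{strategy}: Macro-F1 +{f1_gain:.4f}, MIoU +{miou_gain:.4f}")
```

With these values, OC-FCM scores higher than MC-FCM on both metrics under all three strategies.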

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Deng, T.; Fu, B.; Lao, Z.; Yang, W.; He, H.; Fan, D.; He, W.; Yao, Y. Evaluation of Decision Fusions for Classifying Karst Wetland Vegetation Using One-Class and Multi-Class CNN Models with High-Resolution UAV Images. Remote Sens. 2022, 14, 5869. https://doi.org/10.3390/rs14225869
