1. Introduction

Object detection in remote-sensing images (RSIs) answers one of the most basic questions in the remote-sensing (RS) community: what objects (such as ships, vehicles, or aircraft) are in an RSI, and where are they? In general, the objective of object detection is to build models that localize and recognize different ground objects of interest in high-resolution RSIs [1]. Because object detection is a fundamental task for the interpretation of high-resolution RSIs, a great number of methods have been proposed for RSI object detection in the last decade [2].

The traditional RSI object-detection methods focus on constructing effective features for objects of interest and training a classifier on a set of annotated RSIs. They usually acquire object regions with sliding windows and then try to recognize each region. A variety of feature-extraction methods, e.g., bag-of-words (BOW) [3], the scale-invariant feature transform [4], and their extensions, have been explored for representing objects. Then, feature fusion and dimension processing were conducted to further improve the representation capability of multiple features. Finally, efficient and well-designed classifiers were trained to recognize objects. For example, Sun et al. [5] proposed an RSI detection framework based on spatial sparse coding bag-of-words (SSCBOW), which adopted a rotation-invariant spatial-mapping strategy and sparse coding to decrease the reconstruction error. Cheng et al. [6] explored a part-based RSI object-detection method built on a collection of part detectors (COPD), which used linear support-vector machines (SVMs) as part models for detecting objects or recurring patterns. These methods could be adapted to more complicated tasks, but the hand-crafted feature-extraction approaches significantly restricted the detection performance.

As the detection performance of methods based on hand-crafted features and inefficient region-proposal strategies became saturated, it was hard to make substantial progress in object detection until the emergence of deep convolutional neural networks (CNNs) [7]. Relying on the ability of CNNs to extract high-level and robust features, Girshick et al. [8,9] proposed region-CNN (R-CNN) and Fast R-CNN, which achieved attractive detection performance. These methods used a CNN to classify and locate objects from a set of generated region proposals (bounding-box candidates). Subsequently, numerous researchers explored RSI object-detection methods based on the R-CNN framework. Cheng et al. [10] inserted a fully connected layer at the tail of the backbone network of the R-CNN framework and constrained the inserted layer with a regularization term that minimizes rotation variation, thereby constructing a rotation-invariant CNN (RICNN). Afterward, a Fisher discrimination regularized layer was appended to construct an enhanced RICNN, i.e., the RIFD-CNN [11]. Inspired by the region-proposal network (RPN) in Faster R-CNN [12], Li et al. [13] presented multi-angle anchors for establishing a rotation-insensitive RPN, and a double-channel network was used for contextual feature fusion. The RPN greatly reduced the time spent on region proposal and achieved near-real-time speed. Additionally, to enhance the detection performance for small objects in RSIs, some researchers began to develop RSI object-detection methods based on multi-scale feature operations. Inspired by the feature-pyramid network (FPN) [14], Zhang et al. [15] presented a double multi-scale FPN framework and studied several multi-scale training and inference strategies. Deng et al. [16] and Guo et al. [17] focused on multi-scale object-proposal networks that generated candidate regions from the features of different intermediate layers, and multi-scale object-detection networks then made predictions on the obtained regions. The R-CNN-based RSI object-detection methods made great progress in detection performance, but they still suffered from insufficient inference speed caused by redundant computations.

Methods based on the R-CNN framework first obtain region proposals and then predict categories and refine coordinates; therefore, they are called two-stage RSI object-detection algorithms. In contrast, many researchers explored methods that complete the whole detection in a single step, which are called one-stage RSI object-detection algorithms [2]. Many of these methods were based on one of the most representative studies in the field of object detection, You Only Look Once (YOLO) [18], an extremely fast object-detection paradigm. YOLO discards the search for region proposals and directly predicts both bounding-box coordinates and categories, which dramatically accelerates inference [18,19,20]. Pham et al. [21] proposed YOLO-fine, which performs finer regression to enhance the recognition of small objects and tackles domain adaptation by investigating its robustness to various backgrounds. Alganci et al. [22] compared YOLO-v3 with other CNN-based detectors on RSIs and concluded that YOLO provided the most balanced trade-off between detection accuracy and computational efficiency. Additionally, a few studies shared ideas with the single-shot multibox detector [23]. Zhuang et al. [24] applied a single-shot framework that focused on multi-scale feature fusion and improved the performance of detecting small objects. In general, one-stage RSI detection methods are more appropriate for real-time object-detection tasks. However, CNN-based methods, whether one-stage or two-stage, appear to have reached a performance bottleneck.

Recently, the attention-based Transformer presented by Vaswani et al. [25] has become the standard model for machine translation. Numerous studies have demonstrated that the Transformer can also be effective for image-processing tasks, and several have achieved breakthroughs. The Transformer is able to capture long-range relationships in images [26,27,28], which addresses the difficulty CNN-based methods have in capturing global features. Therefore, a number of successful studies in the RS community have focused on Transformer-based models. Inspired by the Vision Transformer [26], He et al. [29] proposed a Transformer-based hyperspectral image-classification method. They introduced the spatial-spectral Transformer, using a CNN to extract spatial features of hyperspectral images and a densely connected Transformer to learn spectral relationships. Hong et al. [30] presented a flexible backbone network for hyperspectral images named SpectralFormer, which exploits the spectral-wise sequence attributes of hyperspectral images to feed them sequentially into the Transformer. Zhang et al. [31] proposed a Transformer-based remote-sensing scene-classification method, which designed a new bottleneck based on multi-head self-attention (MHSA) for image embedding and cascaded encoder blocks to enhance accuracy. These methods all achieved state-of-the-art performance, which shows the potential of the Transformer for various RSI-processing tasks. However, for RSI object detection, the number of Transformer-based studies is still limited. Zheng et al. [32] proposed an adaptive, dynamically refined one-stage detector based on the feature-pyramid Transformer, which embeds a Transformer in the FPN to enhance its feature-fusion capacity. Xu et al. [33] proposed a local-perception backbone based on the Swin Transformer for RSI object detection and instance segmentation, and they investigated the performance of their backbone in different detection frameworks. In these studies, the Transformer works as a feature-interaction module, i.e., a backbone or feature-fusion component, which is adaptable to various detection frameworks. Overall, since the Transformer has enormous potential to unify the architectures of various tasks in artificial intelligence, it is essential to further explore Transformer-based RSI object detectors.

In this paper, we investigate a novel Transformer-based remote-sensing object-detection (TRD) framework. The proposed TRD is inspired by the detection Transformer [28], which takes features obtained from a CNN backbone as the input and directly outputs a set of detected objects. The existing Transformer-based RSI object detectors [32,33] still depend heavily on existing detection frameworks composed of various surrogate-task components, such as duplicate-prediction elimination. The proposed TRD abandons this complicated conventional structure in favor of an independent, more end-to-end framework. Additionally, the CNN backbone in the TRD is trained with transfer learning. To reduce the discrepancy between the source domain and the target domain, the T-TRD is proposed, which adjusts the pre-trained CNN with an attention mechanism for better transfer. Moreover, since the quantity of reliable training samples for RSI object detection is usually insufficient for training a Transformer-based model, the T-TRD-DA explores data augmentation composed of sample expansion and multiple-sample fusion to enrich the training samples and prevent overfitting. We hope that our research will inspire the development of Transformer-based RSI object-detection components.

In summary, the following are the main contributions of this study.

(1) An end-to-end Transformer-based RSI object-detection framework, TRD, is proposed, in which the Transformer is remolded to efficiently integrate features from global spatial positions and capture relationships between feature embeddings and object instances. Additionally, the deformable attention module is introduced as an essential component of the proposed TRD; it attends only to a sparse set of sampled features, which mitigates the problem of high computational complexity. Hence, the TRD can process RSIs at multiple scales and recognize objects of interest in them.

(2) A pre-trained CNN is used as the backbone for feature extraction. Furthermore, to mitigate the difference between the two data sets (i.e., ImageNet and the RSI data set), an attention mechanism is used in the T-TRD to re-weight the features, which further improves the RSI detection performance. As a result, the pre-trained backbone transfers better and obtains discriminative pyramidal features.

(3) Data augmentations, including sample expansion and multiple-sample fusion, are used to enrich the diversity of orientations, scales, and backgrounds of training samples. In the proposed T-TRD-DA, the impact of using insufficient training samples for Transformer-based RSI object detection is alleviated.

2. The Proposed Transformer-Based RSI Object-Detection Framework

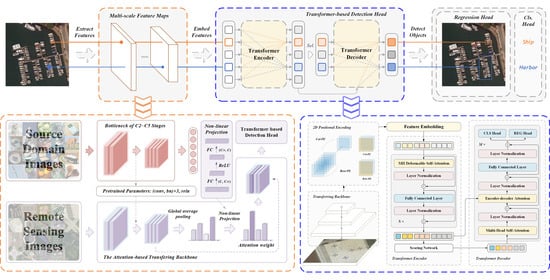

Figure 1 shows the overall architecture of the proposed Transformer-based RSI object-detection framework. First, a CNN backbone with attention-based transfer learning is used to extract multi-scale feature maps of the RSIs. The feature maps from the shallower layers have higher resolutions, which benefits the detection of small object instances, while the high-level features have wide receptive fields and are appropriate for large-object detection and global spatial-information fusion. The features of all levels are embedded together in a sequence. The sequence of embedded features passes through the encoder and decoder of the Transformer-based detection head and is transformed into a set of predictions with categories and locations. As the figure shows, a point in the input embeddings from the high-resolution (low-level) feature map tends to recognize a small instance, while one from the low-resolution (high-level) map tends to recognize a large instance. The framework is described below, starting with the framework of the proposed TRD and the deformable attention module in its Transformer, followed by the attention-based transferring backbone and the data augmentation.

2.1. The Framework of the Proposed TRD

Figure 2 shows the framework of the proposed TRD. A CNN backbone is first used to extract pyramidal multi-scale feature maps from an RSI. They are then embedded with the 2D positional encoding and converted into a sequence that can be fed into the Transformer. The Transformer is remolded to process the sequence of image embeddings and predict the detected object instances.

The feature pyramid of the proposed TRD can be obtained by a well-designed CNN; in this study, a detection backbone based on ResNet [34] is adopted. The convolutional backbone takes an RSI $I$ of arbitrary size $H_0 \times W_0$ as the input and generates hierarchical feature maps. Specifically, the ResNet generates hierarchical maps from the outputs of its last three stages, which are denoted as $C_3$, $C_4$, and $C_5$. The outputs of the other stages are not included because of their restricted receptive fields and the additional computational complexity they would bring. Then, the feature map at each level undergoes $1 \times 1$ convolutions, mapping its channel number $D_l$ to a smaller, uniform dimension $d$.

Hence, a three-level feature pyramid is obtained, which is denoted as $P_3$, $P_4$, and $P_5$. Additionally, a lower-resolution feature map $P_6$ is acquired by an additional convolution on the top-level map.
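A $1 \times 1$ convolution is simply the same linear projection of the channel vector applied at every pixel, which is why it can bring pyramid levels with different channel counts to one common width $d$. The toy sketch below illustrates this in plain Python; the function name, the tiny hand-picked weights, and the channel counts are hypothetical and stand in for the real backbone widths.

```python
def conv1x1(fmap, weight, bias):
    """1 x 1 convolution: the same linear map R^{D_in} -> R^d applied at every pixel.
    fmap: H x W grid of D_in-dim vectors; weight: d x D_in; bias: d."""
    return [[[sum(w * x for w, x in zip(w_row, pixel)) + b
              for w_row, b in zip(weight, bias)]
             for pixel in row]
            for row in fmap]

# Two toy levels with different channel counts (3 and 2) projected to the same d = 2.
level_a = [[[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]]   # 1 x 2 map, D_in = 3
level_b = [[[1.0, 1.0]], [[2.0, -1.0]]]          # 2 x 1 map, D_in = 2
proj_a = conv1x1(level_a, weight=[[1.0, 0.0, 0.0], [1.0, 1.0, 1.0]], bias=[0.0, 1.0])
proj_b = conv1x1(level_b, weight=[[1.0, 0.0], [0.0, 1.0]], bias=[0.0, 0.0])
print(proj_a, proj_b)
```

Spatial size is untouched; only the channel dimension changes, so every level ends up with $d$-dimensional feature points.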

The feature pyramid is further processed before being fed into the Transformer. The MHSA in the Transformer aggregates the elements of its input without distinguishing their positions; hence, the Transformer is permutation-invariant. To compensate, spatial information must be embedded in the feature maps. Therefore, after the $L$-level feature pyramid ($L = 4$ here) is extracted from the convolutional backbone, 2D positional encodings are added at each level. Specifically, the sine and cosine positional encoding of the original Transformer is extended to a row encoding and a column encoding. Each is computed along one spatial dimension (rows or columns) using half of the channels and then duplicated along the other spatial dimension. The final positional encoding is the concatenation of the two.
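The 2D encoding described above can be sketched in plain Python as follows. This is a minimal sketch, assuming integer (unnormalized) row and column indices, the usual temperature of 10000 from the original Transformer, and a row-then-column concatenation order; the function names are hypothetical, and in the detector the resulting codes would be added to the feature maps of each level.

```python
import math

def sine_encoding_1d(pos, dim, temperature=10000.0):
    """Sinusoidal encoding of one scalar position into `dim` channels, following
    PE(pos, 2i) = sin(pos / T^(2i/dim)), PE(pos, 2i+1) = cos(pos / T^(2i/dim))."""
    enc = []
    for i in range(0, dim, 2):          # i plays the role of 2i in the formula
        freq = temperature ** (i / dim)
        enc.append(math.sin(pos / freq))
        enc.append(math.cos(pos / freq))
    return enc

def positional_encoding_2d(height, width, d):
    """Return a height x width grid of d-dim codes: [row code (d/2) | column code (d/2)]."""
    if d % 4 != 0:
        raise ValueError("d must be divisible by 4 so each half holds sin/cos pairs")
    half = d // 2
    row_codes = [sine_encoding_1d(y, half) for y in range(height)]  # varies along rows
    col_codes = [sine_encoding_1d(x, half) for x in range(width)]   # varies along columns
    # Each 1D code is duplicated along the other spatial axis, then the halves are concatenated.
    return [[row_codes[y] + col_codes[x] for x in range(width)] for y in range(height)]

pe = positional_encoding_2d(height=3, width=4, d=8)
print(len(pe), len(pe[0]), len(pe[0][0]))  # 3 4 8
```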

The Transformer expects a sequence of elements of equal dimension as its input. Therefore, the multi-scale position-encoded feature maps are flattened in the spatial dimensions, turning the map at level $l$, of size $H_l \times W_l$, into a sequence of length $H_l W_l$. The input sequence is obtained by concatenating the sequences from all $L$ levels and consists of $N = \sum_{l=1}^{L} H_l W_l$ tokens of the uniform channel dimension $d$. Each pixel in the feature pyramid is thus treated as an element of the sequence. The Transformer then models the interactions among the feature points and recognizes object instances of interest from the sequence.
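The flattening and concatenation can be sketched as follows, assuming each level is stored as a height × width grid of d-dimensional vectors (nested Python lists) and flattened in row-major order. Recording where each level starts (`level_start`) is an assumption borrowed from common multi-scale implementations rather than something stated in the text, and the function name is hypothetical.

```python
def flatten_pyramid(feature_maps):
    """Flatten L feature maps (each an H_l x W_l grid of d-dim vectors) into one sequence.

    Returns the token sequence, the (H_l, W_l) shape of each level, and the index at
    which each level's tokens start, so a token can be traced back to its level."""
    tokens, shapes, level_start = [], [], []
    for fmap in feature_maps:
        h, w = len(fmap), len(fmap[0])
        level_start.append(len(tokens))
        shapes.append((h, w))
        for row in fmap:                 # row-major order: index = start + y * W_l + x
            tokens.extend(row)
    return tokens, shapes, level_start

# Toy pyramid with two levels (2 x 3 and 1 x 2); each "feature" is just [level, y, x].
maps = [[[[lvl, y, x] for x in range(w)] for y in range(h)]
        for lvl, (h, w) in enumerate([(2, 3), (1, 2)])]
tokens, shapes, starts = flatten_pyramid(maps)
print(len(tokens), shapes, starts)  # 8 [(2, 3), (1, 2)] [0, 6]
```

The sequence length is the sum of the per-level pixel counts, and the shapes and start indices are what a multi-scale attention layer would need to map a token back to its level and position.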

The original Transformer adopted an encoder–decoder structure using stacked self-attention and point-wise fully connected layers, and its decoder was auto-regressive, generating one element at a time and appending it to the input sequence for the next generation step [25]. In contrast, the Transformer here replaces the MHSA layers of the encoder with deformable attention layers, which are better suited to modeling the relationships between feature points because of their much lower computational and memory complexity. Besides, the decoder adopts a non-autoregressive structure, which decodes all elements in parallel. The details are as follows:

The encoder takes the sequence of feature embeddings as the input and outputs a sequence of spatially aware elements. The encoder consists of cascaded encoder layers. In each encoder layer, the sequence passes through a deformable multi-head attention layer and a feed-forward layer, each accompanied by layer normalization and a residual connection, and the layer outputs a sequence of the same length and element dimension. The deformable attention layers aggregate features at adaptively chosen positions, capturing long-range relationships. The resulting feature points are used to compose the input sequence of the decoder. To reduce computational complexity, the feature points are fed into a scoring network, specifically a three-layer FFN with a softmax layer, which acts as a binary foreground/background classifier. The highest-scoring points constitute a fixed-length sequence, which is fed into the decoder. In this way, the encoder endows the multi-scale feature maps with global spatial information and then selects a fixed number of spatially aware feature points, which are easier to use for detecting object instances.
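The selection step at the end of the encoder can be sketched as below. The three-layer FFN itself is not implemented: its two-class (background, foreground) outputs are passed in directly as hypothetical logits, and the fixed sequence length `k` is a free parameter here because its value is not given in this section. Function names are hypothetical.

```python
import math

def foreground_prob(logits):
    """Two-class softmax over (background, foreground) logits; returns P(foreground)."""
    bg, fg = logits
    m = max(bg, fg)                              # subtract the max for numerical stability
    e_bg, e_fg = math.exp(bg - m), math.exp(fg - m)
    return e_fg / (e_bg + e_fg)

def select_top_k(encoder_tokens, token_logits, k):
    """Keep the k encoder outputs with the highest foreground probability (decoder input)."""
    scores = [foreground_prob(lg) for lg in token_logits]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return [encoder_tokens[i] for i in order], order

tokens = ["t0", "t1", "t2", "t3"]
logits = [(0.0, 1.0), (2.0, -1.0), (0.0, 3.0), (0.0, 0.0)]  # stand-ins for the FFN output
kept, idx = select_top_k(tokens, logits, k=2)
print(kept, idx)  # ['t2', 't0'] [2, 0]
```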

The decoder takes the sequence of selected feature points as the input and outputs a sequence of object-aware elements in parallel. The decoder also contains cascaded decoder layers, each consisting of an MHSA layer, an encoder–decoder attention layer, and a feed-forward layer, each followed by layer normalization and a residual connection. The MHSA layers capture interactions between pairs of feature points, which helps enforce constraints related to object instances, such as preventing duplicate predictions. Each encoder–decoder attention layer takes the elements from the previous decoder layer as queries and the output of the last encoder layer as memory keys and values. This enables the feature points to attend to feature contexts at different scale levels and global spatial positions. The output embeddings of each decoder layer are fed into a layer normalization and the prediction heads, which share a common set of parameters across layers.

The prediction heads further decode the output embeddings from the decoder into object categories and bounding-box coordinates. Similar to most modern end-to-end object-detection architectures, the prediction head is divided into a classification branch and a regression branch. In the classification branch, a linear projection with a softmax function predicts the category of each embedding. A special ‘background’ category is appended to the classes, meaning that no object of interest is detected from the query. In the regression branch, a three-layer fully connected network with ReLU activations produces the normalized coordinates of the bounding boxes. In total, the heads generate a set of predictions, each consisting of a class and the corresponding box position. The final detection results are obtained by removing the predictions classified as ‘background’.
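A minimal sketch of this final step, turning per-query outputs into detections by discarding ‘background’ predictions, is given below. It assumes, as in DETR-style detectors, that boxes are normalized (cx, cy, w, h) and that the last class index is ‘background’; both conventions, the conversion to pixel corners, and the function names are assumptions rather than details given in the text.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def postprocess(class_logits, boxes, image_w, image_h):
    """class_logits: per query, (num_classes + 1) logits, LAST index = 'background'.
    boxes: per query, normalized (cx, cy, w, h) in [0, 1] (assumed box format).
    Returns (class_id, score, (x1, y1, x2, y2)) in pixels for non-background queries."""
    detections = []
    for logits, (cx, cy, w, h) in zip(class_logits, boxes):
        probs = softmax(logits)
        cls = max(range(len(probs)), key=probs.__getitem__)
        if cls == len(probs) - 1:       # 'background': no object of interest for this query
            continue
        x1, y1 = (cx - w / 2) * image_w, (cy - h / 2) * image_h
        x2, y2 = (cx + w / 2) * image_w, (cy + h / 2) * image_h
        detections.append((cls, probs[cls], (x1, y1, x2, y2)))
    return detections

dets = postprocess(
    class_logits=[[2.0, 0.0, 0.0], [0.0, 0.0, 5.0], [0.0, 3.0, 1.0]],  # 2 classes + background
    boxes=[(0.5, 0.5, 0.25, 0.5), (0.1, 0.1, 0.1, 0.1), (0.25, 0.75, 0.5, 0.5)],
    image_w=100, image_h=100,
)
print([(c, box) for c, _, box in dets])
```

Because every query yields exactly one prediction, no non-maximum suppression appears here; the second query is simply dropped as ‘background’.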

The proposed TRD takes full advantage of the relationship-capturing capacity of the Transformer and rebuilds the original structure and embedding scheme. It explores a Transformer-based paradigm for RSI object detection.

2.2. The Deformable Attention Module

To enhance the detection performance for small object instances, multi-scale feature maps are used, since the low-level, high-resolution feature maps are conducive to recognizing small objects. However, high-resolution feature maps result in high computational and memory complexity for the conventional MHSA-based Transformer, because the MHSA layers measure the compatibility of every pair of elements. In contrast, the deformable attention module attends only to a small, fixed number of key sampling points at adaptive positions around the reference point, which greatly decreases the computational and memory complexity. Thus, the Transformer can be effectively extended to the aggregation of multi-scale features of RSIs.
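To make the complexity argument concrete, the arithmetic below counts query-key interactions per attention head in one layer for a hypothetical 800 × 800 input. It assumes strides of 8, 16, and 32 for the last three ResNet stages (the standard ResNet strides) and 64 for the extra lower-resolution level, four levels, and K = 4 sampling points per level (the default of Deformable DETR, not a value stated in this section).

```python
import math

def tokens_per_level(image_h, image_w, strides=(8, 16, 32, 64)):
    """Number of feature points on each pyramid level for a given input size."""
    return [math.ceil(image_h / s) * math.ceil(image_w / s) for s in strides]

def sampled_keys_per_layer(n_tokens, n_levels=4, n_points=4):
    """Query-key interactions per attention head in one layer."""
    dense = n_tokens * n_tokens                  # MHSA: every query vs. every key
    deformable = n_tokens * n_levels * n_points  # deformable: L * K sampled keys per query
    return dense, deformable

per_level = tokens_per_level(800, 800)
n = sum(per_level)
dense, deformable = sampled_keys_per_layer(n)
print(per_level, n, dense, deformable)
```

Under these assumptions the input holds 13,294 feature points, so dense MHSA would score about 1.8 × 10^8 query-key pairs per head and layer, against about 2.1 × 10^5 sampled keys for deformable attention, roughly 830 times fewer.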

Figure 3 shows the diagram of the deformable attention module. The module generates a specific quantity of sampling offsets and attention weights for each element in each scale level. The features at the sampling positions of maps in different levels are aggregated to a spatial- and scale-aware element.

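The input sequence of the embedded feature elements is denoted as $Z = \{z_q\}_{q=1}^{N}$, and the input feature maps are denoted as $\{x^l\}_{l=1}^{L}$. In each level, the normalized location of the $q$-th feature element is denoted as $\hat{p}_q \in [0, 1]^2$, which can be re-scaled to the practical coordinates at the $l$-th level with a mapping function $\phi_l(\cdot)$. For each element $z_q$, a $3LK$-channel linear projection is used to obtain $LK$ sampling offsets $\Delta p_{lqk}$ and attention weights $A_{lqk}$, where the attention weights are normalized so that $\sum_{l=1}^{L} \sum_{k=1}^{K} A_{lqk} = 1$. Then, the features of the $LK$ sampling points $x^l\big(\phi_l(\hat{p}_q) + \Delta p_{lqk}\big)$ are calculated from the input feature maps by bilinear interpolation. They are aggregated by multiplying them with the attention weights $A_{lqk}$, generating a spatial- and scale-aware element. Therefore, the output sequence of the deformable attention module is calculated with (1):

$$\mathrm{DeformAttn}\big(Z, \hat{P}, \{x^l\}_{l=1}^{L}\big) = \sum_{l=1}^{L} \sum_{k=1}^{K} A_{lk} \cdot x^l\big(\phi_l(\hat{P}) + \Delta P_{lk}\big) \qquad (1)$$

where $l$ indexes the $L$ feature levels and $k$ indexes the $K$ sampled points for keys and values, respectively. $\phi_l(\hat{P})$ is the sequence of the practical coordinates $\{\phi_l(\hat{p}_q)\}$, and $\Delta P_{lk}$ indicates the sequence of the $k$-th sampling offsets $\{\Delta p_{lqk}\}$ at level $l$. $A_{lk}$ is composed of the normalized attention weights $\{A_{lqk}\}$.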
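Equation (1) can be made concrete for a single query with the plain-Python sketch below. It follows a single-head reading of Eq. (1); the mapping φ_l(p̂) = (p̂_x · W_l - 0.5, p̂_y · H_l - 0.5) (pixel centres at integer coordinates), offsets expressed in pixels of each level, zero padding outside the map, and a joint softmax over all L·K weight logits are assumptions. In the real module the offsets and weight logits would come from the linear projection of z_q rather than being passed in, and the function names are hypothetical.

```python
import math

def bilinear(fmap, x, y):
    """Sample an H x W grid of d-dim vectors at continuous pixel coordinates (x, y).
    Integer coordinates are pixel centres; neighbours outside the map contribute zero."""
    h, w, d = len(fmap), len(fmap[0]), len(fmap[0][0])
    x0, y0 = math.floor(x), math.floor(y)
    out = [0.0] * d
    for yi, wy in ((y0, y0 + 1 - y), (y0 + 1, y - y0)):
        for xi, wx in ((x0, x0 + 1 - x), (x0 + 1, x - x0)):
            if 0 <= yi < h and 0 <= xi < w:
                for c in range(d):
                    out[c] += wy * wx * fmap[yi][xi][c]
    return out

def deform_attn_query(ref_point, maps, offsets, weight_logits):
    """Single-head Eq. (1) for one query.
    ref_point: normalized (px, py) in [0, 1]^2; maps: L feature maps (H_l x W_l x d);
    offsets[l][k]: (dx, dy) in pixels of level l; weight_logits[l][k]: raw scores
    that are softmax-normalized jointly over all L * K sampling points."""
    flat = [s for level in weight_logits for s in level]
    m = max(flat)
    z = sum(math.exp(s - m) for s in flat)
    out = [0.0] * len(maps[0][0][0])
    for l, fmap in enumerate(maps):
        h, w = len(fmap), len(fmap[0])
        # phi_l: normalized location -> pixel coordinates on level l
        bx, by = ref_point[0] * w - 0.5, ref_point[1] * h - 0.5
        for k, (dx, dy) in enumerate(offsets[l]):
            a = math.exp(weight_logits[l][k] - m) / z   # A_{lqk}; all weights sum to 1
            v = bilinear(fmap, bx + dx, by + dy)         # x^l(phi_l(p_q) + dp_{lqk})
            for c in range(len(out)):
                out[c] += a * v[c]
    return out

fmap = [[[0.0], [1.0]], [[2.0], [3.0]]]                  # one 2 x 2 level, d = 1
out = deform_attn_query((0.5, 0.5), [fmap],
                        offsets=[[(-0.5, -0.5), (0.5, 0.5)]],
                        weight_logits=[[0.0, 0.0]])
print(out)  # [1.5]
```

Each query touches only L·K interpolated samples, independent of the number of feature points, which is where the savings over dense MHSA come from.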

The deformable attention mechanism resolves the high cost of applying self-attention to dense spatial features. It is therefore well suited to Transformers in computer-vision tasks, and it is adopted in the proposed TRD detector.

2.3. The Attention-Based Transferring Backbone

In general, deep CNNs can extract discriminative features of RSIs for object detection. However, because RSI object-detection tasks usually have limited training samples and deep models contain numerous parameters, deep-learning-based RSI object-detection methods usually face the problem of overfitting.

To address the overfitting issue, transfer learning is used in this study. In the proposed T-TRD detector, a pre-trained CNN model is used as the backbone for RSI feature extraction, and the Transformer-based detection head then completes the object-detection task. In CNNs, the first few convolutional layers extract low-level and mid-level features such as blobs, corners, and edges, which are generic across image domains [35].

In RSI object detection, proper reuse of low-level and mid-level representations can significantly improve detection performance. However, because the spatial resolution and imaging conditions of ImageNet and RSIs are quite different, an attention mechanism is used in this study to adapt the pre-trained model for better RSI object detection.

In the original attention mechanism, more attention is paid to the important regions of an image, and the selected regions are assigned different weights. Such attention mechanisms have been shown to be effective for textual entailment and sentence representation [36,37].

Motivated by the attention mechanism, we re-weight the feature maps to reduce the difference between the two data sets (i.e., RSI and ImageNet). Specifically, the feature maps in the pre-trained model are re-weighted and then transferred to the backbone for RSI object detection. The higher the attention score of a feature map, the more important that transferred feature is for subsequent feature extraction.

Figure 4 shows the framework of the proposed attention-based transferring backbone. As shown, the model pre-trained on the source-domain image data set is transferred to the backbone of the T-TRD. The attention weights are obtained with global average pooling and a non-linear projection. Finally, the feature maps are re-weighted according to the attention weights. The detailed steps are as follows.

First, the feature maps in a convolutional layer are converted into channel-wise statistics by a global average pooling layer. Specifically, the spatial dimension $H \times W$ of each feature map is squeezed by the following formula:

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j)$$

where $u_c$ refers to the $c$-th input feature map and $z_c$ indicates the aggregated information of the whole feature map.

Next, to capture the relative importance of different feature maps, a neural network consisting of two fully connected (FC) layers and a ReLU operation is used. To limit model complexity, the first FC layer maps the total number of feature maps to a fixed value (i.e., 128), followed by a non-linear ReLU operation. The second FC layer then restores the number of feature maps to its initial dimension. By learning the parameters of this network through backpropagation, the interdependencies that reflect the importance of different feature maps can be captured.

Finally, the attention values of the different feature maps are produced by a sigmoid function, which restricts them to the range between zero and one. Each feature map is multiplied by its attention value, which distinguishes the degree of importance of the different feature maps.

These steps make up the proposed attention-based transferring backbone. Re-weighting the features transferred from ImageNet to RSIs by the attention values can boost their discriminability, reducing the difference between the two data sets by emphasizing the more important transferred features and weakening the less important ones.
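The three steps closely resemble a squeeze-and-excitation block. The plain-Python sketch below implements them with hand-set toy weights; the bottleneck width is 1 instead of the 128 used in the text only to keep the example small, and the bias terms and function name are assumptions.

```python
import math

def channel_attention(fmaps, w1, b1, w2, b2):
    """Squeeze-and-excitation style re-weighting of C feature maps.
    fmaps: C maps, each H x W (nested lists); w1: r x C, b1: r (first FC, r = 128 in the
    text); w2: C x r, b2: C (second FC restores C). Returns re-weighted maps and weights."""
    # Squeeze: global average pooling, z_c = mean of map c over its H x W positions
    z = [sum(sum(row) for row in fm) / (len(fm) * len(fm[0])) for fm in fmaps]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one attention value in (0, 1) per map
    hidden = [max(0.0, sum(a * b for a, b in zip(row, z)) + bias) for row, bias in zip(w1, b1)]
    s = [1.0 / (1.0 + math.exp(-(sum(a * b for a, b in zip(row, hidden)) + bias)))
         for row, bias in zip(w2, b2)]
    # Re-weight: every value of map c is multiplied by its attention value s_c
    return [[[s_c * v for v in row] for row in fm] for s_c, fm in zip(s, fmaps)], s

maps = [[[2.0, 2.0], [2.0, 2.0]], [[4.0, 4.0], [4.0, 4.0]]]   # C = 2 maps of size 2 x 2
out, s = channel_attention(maps, w1=[[0.5, 0.0]], b1=[0.0],   # toy r = 1 bottleneck
                           w2=[[0.0], [0.0]], b2=[0.0, math.log(3.0)])
print([round(v, 3) for v in s])  # [0.5, 0.75]
```

In a trained backbone the FC weights are learned by backpropagation, so the attention values adapt to which pre-trained feature maps are useful for RSIs.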

2.4. Data Augmentation for RSI Object Detection

As has been reported, Transformer-based vision models are more likely to overfit than CNNs of equivalent computational complexity on limited data sets [26]. However, RSI data sets for object detection usually contain too few training samples. Additionally, objects in an RSI sample are usually sparsely distributed, which makes training the proposed Transformer-based detection models inefficient. Hence, a data-augmentation method composed of sample expansion and multiple-sample fusion is incorporated into the training strategy of the T-TRD to improve the detection performance.

Let $X$ denote the set of training samples. We define a set $\mathcal{R}$ of four right-angle rotation transformations and another set $\mathcal{F}$ of two horizontal flip transformations. Both sets are applied to all the training samples, generating an extended sample set $X'$.
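The sample expansion can be sketched as follows. The text does not say whether the rotations and flips are applied separately or composed; this sketch assumes they are composed (identity or horizontal flip, followed by a 0°, 90°, 180°, or 270° rotation), which gives eight variants per sample. Images are nested lists of rows, boxes are assumed to be axis-aligned (x1, y1, x2, y2) in pixel-edge coordinates, and the function names are hypothetical.

```python
def rot90_ccw(image, boxes):
    """Rotate an H x W image (list of rows) 90 degrees counter-clockwise.
    Boxes are (x1, y1, x2, y2) in pixel-edge coordinates of the input image."""
    h, w = len(image), len(image[0])
    new_image = [[image[j][w - 1 - i] for j in range(h)] for i in range(w)]  # now W x H
    new_boxes = [(y1, w - x2, y2, w - x1) for x1, y1, x2, y2 in boxes]
    return new_image, new_boxes

def hflip(image, boxes):
    """Mirror the image left-right and update the box x-coordinates."""
    w = len(image[0])
    return [row[::-1] for row in image], [(w - x2, y1, w - x1, y2) for x1, y1, x2, y2 in boxes]

def expand(samples):
    """Compose {identity, horizontal flip} with the four right-angle rotations (8 variants)."""
    out = []
    for image, boxes in samples:
        for flip in (False, True):
            img, bxs = hflip(image, boxes) if flip else (image, boxes)
            for _ in range(4):               # 0, 90, 180, 270 degrees
                out.append((img, bxs))
                img, bxs = rot90_ccw(img, bxs)
    return out

image = [[0, 1, 2], [3, 4, 5]]
variants = expand([(image, [(2, 0, 3, 1)])])   # a 1-pixel box around the value 2
print(len(variants))  # 8
```

Tracking a one-pixel box is a quick way to check that the box transforms stay consistent with the image transforms: in every variant the box should still cover the pixel holding the value 2.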

For each sample in the extended set, three further samples are randomly chosen from the set, and the four samples are blended into a larger, fixed-size sample. The samples are concatenated at the top-left, top-right, bottom-left, and bottom-right of an intersection point. Afterward, a blank canvas of the fusion-image size is generated with gray padding. Then, the normalized coordinates of the intersection point are randomly generated within the range of 0.25 to 0.75. The concatenated sample is pasted onto the canvas by aligning the intersection points. The parts of the composite image and the boxes that fall outside the canvas border are cropped.
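The geometry of the multiple-sample fusion can be sketched as below; only the box bookkeeping is shown, while image pixels, the gray padding value, and the final random scale and crop are left out. The square canvas, the placement of each sample with its inner corner at the intersection point, and dropping boxes that are cropped away entirely are assumptions, and the function name is hypothetical.

```python
import random

def mosaic_boxes(sizes, boxes, canvas, u=None, v=None, rng=random):
    """Place four samples around an intersection point on a square gray canvas.

    sizes[i] = (w_i, h_i) and boxes[i] = [(x1, y1, x2, y2), ...] for sample i, in the
    order top-left, top-right, bottom-left, bottom-right. (u, v) are the normalized
    intersection coordinates, drawn from [0.25, 0.75] when not given."""
    u = rng.uniform(0.25, 0.75) if u is None else u
    v = rng.uniform(0.25, 0.75) if v is None else v
    cx, cy = u * canvas, v * canvas
    (w0, h0), (_, h1), (w2, _), _ = sizes
    # Paste offset of each sample: the corner facing the centre touches (cx, cy).
    offsets = [(cx - w0, cy - h0), (cx, cy - h1), (cx - w2, cy), (cx, cy)]
    kept = []
    for (ox, oy), sample_boxes in zip(offsets, boxes):
        for x1, y1, x2, y2 in sample_boxes:
            # Shift into canvas coordinates, then crop at the canvas border.
            nx1, nx2 = (min(max(x + ox, 0), canvas) for x in (x1, x2))
            ny1, ny2 = (min(max(y + oy, 0), canvas) for y in (y1, y2))
            if nx2 > nx1 and ny2 > ny1:        # drop boxes that were cropped away
                kept.append((nx1, ny1, nx2, ny2))
    return (cx, cy), kept

sizes = [(60, 60)] * 4
boxes = [[(10, 10, 20, 20)], [(0, 0, 30, 30)], [(0, 0, 5, 5)], [(50, 50, 60, 60)]]
centre, kept = mosaic_boxes(sizes, boxes, canvas=100, u=0.5, v=0.5)
print(centre, kept)
```

In this example the top-right box is partly cropped at the top border, while the bottom-left and bottom-right boxes fall entirely outside the canvas and are removed.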

Figure 5 shows several examples of composite RSI samples. Finally, random scale and crop augmentations are applied to the composite samples.

With the data augmentation, the problem of insufficient training samples is mitigated. The proposed T-TRD-DA trains a Transformer-based detection model on an enhanced training data set with more diversity in scale, orientation, background, etc., which helps prevent the proposed deep model from overfitting.

5. Conclusions

In this study, Transformer-based frameworks were explored for RSI object detection. The Transformer was found to be good at capturing long-range relationships; therefore, it could capture global spatial- and scale-aware features of RSIs and detect objects of interest. The proposed TRD used a pre-trained CNN to extract local discriminative features, and the Transformer was modified to process the feature pyramid of an RSI and predict the categories and box coordinates of the objects in an end-to-end manner. The experimental results demonstrated in several respects that, by combining the advantages of the CNN and the Transformer, the TRD achieved impressive RSI object-detection performance on objects of different scales, especially small objects.

There was still much room for improvement in the TRD. On the one hand, the pre-trained CNN faced the problem of data-set shift (i.e., the source data set and the target data set were quite different). On the other hand, there were insufficient training samples for RSI object detection to train a Transformer-based model. Hence, to further improve the performance of the TRD, an attention-based transferring backbone and data augmentation were combined with the TRD to form the T-TRD-DA. The ablation experiments on the TRD, T-TRD, TRD-DA, and T-TRD-DA showed that both improvements, as well as their combination, were effective. The T-TRD-DA was shown to be a state-of-the-art RSI object-detection framework.

Compared with the CNN-based frameworks, the proposed T-TRD-DA was demonstrated to be a better detection architecture. The proposed frameworks use no anchors, non-maximum suppression, or FPN; nevertheless, the T-TRD-DA outperformed YOLO-v3 and Faster R-CNN with FPN in detecting small objects. As an early-stage Transformer-based detection method, the T-TRD-DA showed the potential of Transformer-based RSI object-detection methods. However, the proposed Transformer-based frameworks suffer from low inference speed, which is another topic for further research.

Some very recent modifications of the Transformer, including the self-training Transformer and the transferring Transformer, could be investigated for RSI object detection in the near future.

The findings reported in this study have some implications for effective RSI object detection, which show that Transformer-based methods have huge research value in the area of RSI object detection.