Simultaneous Vehicle Localization and Roadside Tree Inventory Using Integrated LiDAR-Inertial-GNSS System

Abstract

1. Introduction

- (1) Tree canopies extending over long distances, buildings, and overpasses obstruct GNSS signals, significantly reducing the accuracy of satellite navigation. Inertial navigation systems, which rely on inertial measurement units (IMUs) for vehicle positioning, accumulate errors over time.

- (2) Running SLAM algorithms and tree extraction algorithms separately incurs a high computational cost. Moreover, the feature extraction used by existing SLAM algorithms differs significantly from that used by tree extraction algorithms, which makes it difficult to adopt a unified algorithm for simultaneous vehicle positioning and real-time tree inventory creation.

- (3) In regions with complex road conditions, fast tree detection algorithms that use shared SLAM feature information are prone to false positives due to environmental interference.

- (1)

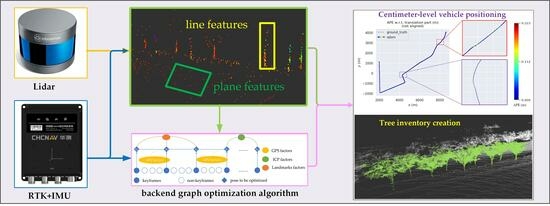

- We introduce a positioning and mapping scheme suitable for long-distance occlusion scenarios. This scheme presents a front-end odometry based on an error-state kalman filter (ESKF) and a back-end optimization framework based on factor graphs. The updated poses from the back-end are used for establishing point-to-plane residual constraints for the front-end in the local map.

- (2) We adopt a two-stage approach to minimize global mapping errors, in which accumulated mapping errors are refined through GNSS-assisted registration.

- (3) We propose an approach that builds the tree inventory from the feature extraction and data preprocessing results shared with SLAM. This reduces the computational cost of the system while achieving vehicle positioning and tree detection simultaneously.

- (4) Additionally, we introduce a method that uses azimuth angle feature information to further mitigate false positives.

- (5) The system is evaluated extensively in urban and suburban areas. The results demonstrate its accuracy and robustness, and show that it handles positioning and tree inventory creation effectively in various scenarios.
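The point-to-plane constraint named in contribution (1) can be illustrated with a short sketch. It assumes that the plane for each scan point is fit by least squares (via SVD) to its nearest local-map points and that the pose is given as a rotation matrix `R` and a translation `t`; the function names and the neighbour selection are hypothetical and are not taken from the paper.

```python
import numpy as np


def fit_plane(neighbors: np.ndarray):
    """Least-squares plane through an (N, 3) array of local-map points.

    Returns a unit normal n and offset d such that n @ p + d = 0 on the plane.
    """
    centroid = neighbors.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the plane normal.
    _, _, vt = np.linalg.svd(neighbors - centroid)
    normal = vt[-1]
    d = -float(normal @ centroid)
    return normal, d


def point_to_plane_residual(point_body: np.ndarray, R: np.ndarray,
                            t: np.ndarray, normal: np.ndarray, d: float) -> float:
    """Signed distance from a scan point, transformed into the map frame, to the plane."""
    point_map = R @ point_body + t
    return float(normal @ point_map + d)
```

In an ESKF front-end, residuals of this form would be stacked over all matched scan points and used in the measurement update; how the authors select neighbours, weight residuals, or iterate the update is not specified here.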

2. Related Work

3. Materials and Methods

3.1. Feature Extraction

3.1.1. Candidate Point Calculation

3.1.2. Feature Point Selection

3.2. Tree Detection

3.3. Front-End Odometry

3.4. Back-End Optimization

3.4.1. GPS Factors

3.4.2. ICP Factors

- (1) Loop closure detection: When a new keyframe is added to the factor graph, the historical keyframe closest to it in Euclidean space is searched for. An ICP factor is added to the factor graph only when the two keyframes are within both a spatial distance threshold and a temporal threshold. In the experiments, the spatial threshold is usually set to 2 m and the temporal threshold to 15 s.

- (2) Low-speed stationary state: In degenerate scenarios, the IMU bias estimated by the front-end odometry can develop significant errors over long periods, causing the front-end odometry to drift when the vehicle is moving slowly or standing still. Additional constraints are therefore needed to prevent pose drift during prolonged stops. When the vehicle is stopped and the surrounding point cloud features are relatively abundant, these features provide sufficient geometric information for solving ICP constraints. When the system detects a low-speed or stationary state, it caches every keyframe acquired during that state. Each time a new keyframe $x_{i+1}$ is added to the factor graph, a constraint is established between $x_{i+1}$ and the cached keyframe $x_k$ that is furthest in time from the current moment.
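The two triggers for ICP factors can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `Keyframe` record, the function and class names, and the 0.1 m/s low-speed limit are hypothetical. The 2 m and 15 s defaults come from the text. One further assumption concerns the temporal threshold: it is read here as a minimum time separation, the usual convention for loop closure, because otherwise the nearest keyframe would almost always be the immediately preceding one. If the opposite reading is intended, the comparison should be flipped.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

import numpy as np


@dataclass
class Keyframe:
    index: int
    stamp: float          # acquisition time [s]
    position: np.ndarray  # (3,) position in the map frame [m]


def find_loop_candidate(new_kf: Keyframe, history: List[Keyframe],
                        dist_thresh: float = 2.0,
                        time_thresh: float = 15.0) -> Optional[Keyframe]:
    """Return the nearest eligible past keyframe for an ICP loop factor, or None."""
    # Assumption: keyframes recorded less than `time_thresh` seconds ago are skipped.
    eligible = [kf for kf in history if new_kf.stamp - kf.stamp >= time_thresh]
    if not eligible:
        return None
    dists = [float(np.linalg.norm(kf.position - new_kf.position)) for kf in eligible]
    best = int(np.argmin(dists))
    return eligible[best] if dists[best] <= dist_thresh else None


class StationaryConstraintBuilder:
    """Pairs each new keyframe with the oldest keyframe cached during a stop."""

    def __init__(self, speed_thresh: float = 0.1):
        self.speed_thresh = speed_thresh  # hypothetical low-speed limit [m/s]
        self.cache: List[Keyframe] = []

    def on_keyframe(self, kf: Keyframe,
                    speed: float) -> Optional[Tuple[Keyframe, Keyframe]]:
        if speed > self.speed_thresh:
            self.cache.clear()  # vehicle is moving again; the stop is over
            return None
        # The first cached keyframe is the one furthest in time from now (x_k).
        pair = (self.cache[0], kf) if self.cache else None
        self.cache.append(kf)
        return pair
```

Each pair returned by either routine would then be registered with ICP and the resulting relative pose added to the factor graph as a between-factor. Anchoring every stationary keyframe to the same oldest keyframe keeps the constraints from chaining small errors together during a long stop.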

3.4.3. Landmark Factors

3.5. Map Update and Canopy Detection

4. Experimental Results and Discussion

4.1. Testing Platform

4.2. Test Results

4.3. Results and Analysis

4.3.1. Localization Accuracy Evaluation

4.3.2. Evaluation of Tree Detection Accuracy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Area | ID | dXc | dYc | dDBH | dCBH | dCD | dCW | dDRE | dTH |
|---|---|---|---|---|---|---|---|---|---|
| Urban Area | 1 | 11 | 9 | 4 | 23 | 16 | 35 | 6 | 55 |
| | 2 | 6 | 6 | 2 | 16 | 12 | 51 | 10 | 83 |
| | 3 | 8 | 12 | 2 | 21 | 9 | 26 | 9 | 75 |
| | 4 | 8 | 7 | 4 | 21 | 13 | 33 | 12 | 93 |
| | 5 | 7 | 11 | 5 | 14 | 11 | 36 | 5 | 21 |
| | 6 | 13 | 12 | 2 | 25 | 9 | 47 | 6 | 45 |
| | 7 | 9 | 10 | 3 | 18 | 13 | 29 | 8 | 67 |
| | 8 | 10 | 6 | 6 | 16 | 12 | 30 | 9 | 38 |
| | 9 | 6 | 7 | 2 | 13 | 11 | 44 | 9 | 56 |
| | 10 | 7 | 8 | 4 | 21 | 14 | 28 | 6 | 38 |
| Suburban Area | 1 | 7 | 8 | 3 | 12 | 11 | 37 | 8 | 53 |
| | 2 | 8 | 13 | 4 | 15 | 7 | 18 | 9 | 22 |
| | 3 | 12 | 4 | 5 | 16 | 22 | 31 | 11 | 92 |
| | 4 | 5 | 6 | 3 | 8 | 13 | 19 | 6 | 34 |
| | 5 | 6 | 9 | 2 | 22 | 9 | 28 | 8 | 41 |
| | 6 | 12 | 10 | 6 | 16 | 12 | 52 | 11 | 97 |
| | 7 | 11 | 8 | 5 | 22 | 21 | 38 | 9 | 68 |
| | 8 | 8 | 9 | 3 | 17 | 15 | 29 | 8 | 102 |
| | 9 | 6 | 11 | 4 | 15 | 16 | 37 | 7 | 34 |
| | 10 | 10 | 12 | 5 | 19 | 21 | 34 | 9 | 56 |
| Overall | min | 5 | 4 | 2 | 8 | 7 | 18 | 5 | 21 |
| Overall | max | 13 | 13 | 6 | 25 | 22 | 52 | 12 | 102 |
| Overall | avg | 9 | 9 | 4 | 18 | 13 | 34 | 8 | 59 |
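The min, max and avg rows of the table above can be reproduced from the twenty per-tree rows. The sketch assumes that avg is the arithmetic mean over all twenty trees rounded half-up to an integer, which matches every column (for example, dXc sums to 170, and 170 / 20 = 8.5 is reported as 9). Python's built-in `round` rounds halves to even and would give 8 here, so `decimal.ROUND_HALF_UP` is used instead.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-tree errors transcribed from the table: urban IDs 1-10, then suburban IDs 1-10.
ERRORS = {
    "dXc":  [11, 6, 8, 8, 7, 13, 9, 10, 6, 7, 7, 8, 12, 5, 6, 12, 11, 8, 6, 10],
    "dYc":  [9, 6, 12, 7, 11, 12, 10, 6, 7, 8, 8, 13, 4, 6, 9, 10, 8, 9, 11, 12],
    "dDBH": [4, 2, 2, 4, 5, 2, 3, 6, 2, 4, 3, 4, 5, 3, 2, 6, 5, 3, 4, 5],
    "dCBH": [23, 16, 21, 21, 14, 25, 18, 16, 13, 21,
             12, 15, 16, 8, 22, 16, 22, 17, 15, 19],
    "dCD":  [16, 12, 9, 13, 11, 9, 13, 12, 11, 14,
             11, 7, 22, 13, 9, 12, 21, 15, 16, 21],
    "dCW":  [35, 51, 26, 33, 36, 47, 29, 30, 44, 28,
             37, 18, 31, 19, 28, 52, 38, 29, 37, 34],
    "dDRE": [6, 10, 9, 12, 5, 6, 8, 9, 9, 6, 8, 9, 11, 6, 8, 11, 9, 8, 7, 9],
    "dTH":  [55, 83, 75, 93, 21, 45, 67, 38, 56, 38,
             53, 22, 92, 34, 41, 97, 68, 102, 34, 56],
}


def summarize(values):
    """Return (min, max, mean rounded half-up to an integer)."""
    mean = Decimal(sum(values)) / Decimal(len(values))
    avg = int(mean.quantize(Decimal("1"), rounding=ROUND_HALF_UP))
    return min(values), max(values), avg


for name, values in ERRORS.items():
    print(name, summarize(values))
```

Using exact `Decimal` arithmetic avoids binary floating-point surprises at the .5 boundaries (dXc 8.5, dCBH 17.5, dTH 58.5), where the rounding rule decides the reported value.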

| TNT | PP | FP | TP | FN | TPR | ACC |
|---|---|---|---|---|---|---|
| 1376 | 1178 | 19 | 1159 | 217 | 0.84 | 0.83 |
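The counts in this table are internally consistent, and the derived columns can be recomputed from TP, FP and FN. In the sketch below, TNT is read as the total number of reference trees (TP + FN) and PP as the number of predicted positives (TP + FP); these expansions are an assumption. The reported ACC matches TP / (TP + FP + FN), a common accuracy measure for detection tasks that have no true negatives, so that definition is assumed as well.

```python
def detection_metrics(tp: int, fp: int, fn: int):
    """Derive the remaining columns of the detection table from TP, FP and FN."""
    tnt = tp + fn              # reference trees: detected plus missed
    pp = tp + fp               # trees reported by the detector
    tpr = tp / tnt             # true positive rate (recall)
    acc = tp / (tp + fp + fn)  # accuracy without a true-negative term
    return tnt, pp, tpr, acc


tnt, pp, tpr, acc = detection_metrics(tp=1159, fp=19, fn=217)
print(tnt, pp, f"{tpr:.2f}", f"{acc:.2f}")  # 1376 1178 0.84 0.83
```

The low FP count (19) relative to FN (217) means the detector errs mainly by missing trees rather than by reporting spurious ones, which is consistent with the false-positive suppression described in contribution (4).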

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, X.; Chen, Z.; Liu, P.; Pan, W. Simultaneous Vehicle Localization and Roadside Tree Inventory Using Integrated LiDAR-Inertial-GNSS System. Remote Sens. 2023, 15, 5057. https://doi.org/10.3390/rs15205057
