1. Introduction

Most advanced seismic signal processing methods, such as reverse time migration (RTM) [

1], full waveform inversion (FWI) [

2], and surface-related multiple elimination (SRME) [

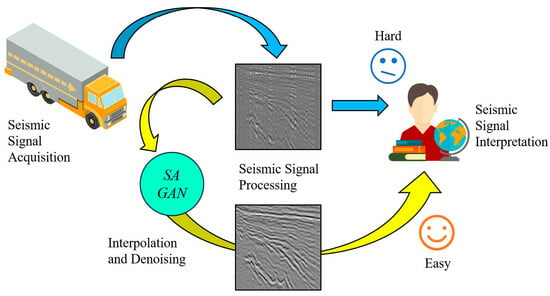

3], benefit from high-quality seismic signals. Based on high-quality seismic signals, we can also map geological structures and explore mineral resources. However, in field seismic exploration, the signal is often missing or contains noise due to the harsh acquisition environment and poor construction conditions. The presence of incomplete signals tainted by noise not only leads to the forfeiture of crucial information but also exerts detrimental effects on subsequent processing stages. Hence, the interpolation and denoising of seismic signals emerge as a pivotal endeavor in the realm of seismic exploration [

4,

5,

6,

7], holding profound significance in ensuring the fidelity and integrity of the data employed in this field.

Investigations into seismic signal interpolation and denoising methods can be broadly categorized into two primary methodologies: traditional approaches founded on model-driven principles and deep learning techniques rooted in data-driven paradigms.

Sparse transformation methods within traditional approaches have been extensively employed in the domain of seismic signal interpolation and denoising. For instance, Hindriks and Duijndam [8] introduced the concept of minimum norm Fourier reconstruction, employing Fourier transformation on non-uniformly sampled data to derive Fourier coefficients and subsequently utilizing inverse Fourier transformation to yield a uniform time domain signal. In a similar vein, Satish et al. [9] pioneered a novel methodology to compute the time–frequency map of non-stationary signals via continuous Wavelet transform. Additionally, Jones and Levy [10] harnessed the K-L transform to reconstruct seismic signals and suppress multiples within CMP or CDP datasets. These methods typically rely on iterative schemes built on a sparse transform, and the transforms in current use mostly rely on fixed basis functions. A persisting challenge therefore lies in selecting basis functions that yield a sparser representation of the data, and optimizing this selection remains an ongoing concern within this domain.

Artificial neural networks have garnered significant traction in the realm of seismic data processing. Liu et al. [11], for instance, proposed a method centered on neural networks for denoising seismic signals, demonstrating the efficacy of this approach. Similarly, Kundu et al. [12] leveraged artificial neural networks for seismic phase identification, showcasing the versatility of these networks within seismic research. Despite their widespread application, traditional artificial neural networks exhibit certain drawbacks. The challenges encompass an excessive number of weight variables, necessitating a substantial computational load and protracted training durations. These limitations pose considerable hurdles, prompting researchers to explore alternative methodologies to circumvent these issues and enhance the efficiency of neural network-based seismic data processing.

Recent strides in convolutional neural networks (CNNs) have markedly influenced the broader landscape of signal and image processing [13,14]. Groundbreaking algorithms rooted in deep learning have been cultivated within various image processing domains, fostering sophisticated methodologies for signal interpolation and denoising. Given the multi-dimensional matrix nature of seismic signals, akin to a distinctive form of imagery, CNNs emerge as a compelling tool for addressing the intricate task of seismic signal interpolation and denoising. These techniques, as a result of sustained scholarly exploration, have become pivotal in geophysical exploration, particularly in the comprehensive study of interpolation and denoising challenges. A seminal work by Mandelli et al. [15] marks the pioneering introduction of CNNs, enabling seismic interpolation amidst randomly missing seismic traces, an unprecedented achievement. Similarly, Park et al. [16] harnessed the UNet architecture to achieve the complete interpolation of seismic signals afflicted by regularly missing traces, unveiling its potential in signal restoration. Wang et al. [17] extended this, solidifying CNN’s efficacy in reconstructing both regular and irregular signals through rigorous comparative analysis. Furthermore, Gao and Zhang [18] proposed leveraging trained denoising neural networks to reconstruct noisy models, albeit necessitating input network interpolation. Notably, exceptional performance has been attained across these tasks by leveraging advanced network architectures like residual neural networks [19,20], generative adversarial networks [21], and attention mechanisms, further affirming the versatility and effectiveness of these methodologies in addressing seismic signal processing intricacies.

While the performance of deep learning methods in seismic interpolation and denoising remains commendable, the need for the separate training of missing and noisy seismic signals adds significant processing time, advocating the necessity for intelligent seismic signal processing technologies in the future. Hence, the exploration of concurrent interpolation and denoising techniques for seismic signals emerges as a promising research avenue. Vineela et al. [22] ingeniously amalgamated attention mechanisms with Wavelet transforms, introducing an innovative method employing attention-based Wavelet convolutional neural networks (AWUN). This pioneering approach showcased remarkable results, particularly validated through robust field data experiments. Additionally, Li et al. [23] optimized the nonsubsampled contourlet transform by refining iterative functions, significantly expediting convergence rates. The efficacy of this refined method was substantiated through comprehensive experiments demonstrating seismic signal interpolation and denoising. Furthermore, Cao et al. [24] proposed a novel threshold method grounded in L1 norm regularization to elevate the quality of simultaneous interpolation and denoising processes. Iqbal [25] proposed a new noise reduction network based on intelligent deep CNN. The network demonstrates the adaptive capability for capturing noisy seismic signals. Zhang and Baan [26] introduced an advanced methodology encompassing a dual convolutional neural network coupled with a low-rank structure extraction module. This approach is designed to reconstruct signals that are obscured by intricate field noise. These seminal contributions underscore the ongoing pursuit of more efficient and integrated methodologies in the realm of seismic signal processing.

In recent years, attention mechanisms have emerged as foundational models capable of capturing global dependencies [27,28,29,30,31]. Specifically, self-attention [32,33], also referred to as intra-attention, calculates the response at a position in a sequence by attending to all elements within the same sequence. Vaswani et al. [34] demonstrated the remarkable potential of using only a self-attention model, showcasing the ability of translation models to achieve cutting-edge results. In a groundbreaking stride, Parmar et al. [35] introduced an image transformer model that seamlessly integrated self-attention into an autoregressive framework for picture generation. Addressing the intricate spatial–temporal interdependencies inherent in video sequences, Wang et al. [36] formalized self-attention as a non-local operation, introducing a novel paradigm for representing these relationships. Despite these advancements, self-attention within the context of generative adversarial networks (GANs) has remained largely unexplored. Xu et al. [37] pioneered the fusion of attention with GANs, directing the network’s focus towards the word embeddings within an input sequence while not considering its internal model states.

The superiority of generative adversarial networks (GANs) over traditional probabilistic generative models lies in their ability to circumvent the learning mechanism of Markov chains. GANs present a versatile framework where diverse types of loss functions can be integrated, establishing a robust algorithmic foundation for crafting unsupervised natural data generation networks [38]. Mirza and Osindero [39] pioneered the conditional generative adversarial network, augmenting the GAN by introducing a generation condition within the network to facilitate the production of specific desired data. Radford et al. [40] proposed the deep convolutional GAN, which merges a GAN with a CNN, enhancing the authenticity and naturalness of the generated data. Exploring further innovations, Pathak et al. [41] introduced the Context Encoders network, employing noise coding on missing images as input for a deep convolutional GAN. This inventive methodology was tailored for image restoration purposes, showcasing its efficacy in image repair. In a similar vein, Isola et al. [42] leveraged deep convolutional GANs and conditional GANs to process images, demonstrating the versatility of GAN architectures in image manipulation and enhancement.

The emergence of attention mechanism models and generative adversarial networks (GANs) represents recent advancements in neural networks, showcasing significant accomplishments in signal and image processing domains. Exploring their potential application in seismic signal interpolation and denoising stands as a valuable and compelling research trajectory [43,44]. Investigating the adaptation of these cutting-edge neural network paradigms within the context of seismic data holds promise for enhancing interpolation and denoising techniques in this specialized field.

To efficiently and accurately reconstruct and denoise seismic signals, this paper proposes a novel approach that combines the self-attention mechanism with the generative adversarial network for interpolation and denoising. The novelty of this method is that the self-attention mechanism widens the receptive field and, combined with the generative adversarial network, can better generate high-quality seismic signals. It also provides theoretical support for subsequent seismic signal interpolation and denoising methods based on deep learning. The contributions of this paper can be summarized as follows:

- (1) Based on the characteristics of seismic signals, a novel network is proposed by combining a self-attention mechanism with a generative adversarial network. The self-attention module can effectively capture long-range dependencies. Therefore, SAGAN can comprehensively synthesize different event information from the seismic signals and is not limited to local areas. This is critical for the interpolation of missing trace seismic signals, where global factors need to be considered.

- (2) The proposed method can simultaneously reconstruct and denoise seismic signals without training two networks separately, saving time and improving efficiency.

- (3) This method has a good generalization ability, can be used for 2D and 3D seismic signals, and has achieved competitive results.

The rest of this article is organized as follows. Section 2 introduces the proposed method and the training process of the network. Section 3 introduces the seismic signal dataset, analyzes the results obtained from interpolation and denoising experiments, and compares the proposed method with other methods to demonstrate its effectiveness. Section 4 is the conclusion of this article.

2. Methods

2.1. SAGAN Network Design

Convolutional layers are used to construct the majority of GAN-based models [40,45,46] for data generation. It is computationally inefficient to describe long-distance dependencies in multidimensional signals using only convolutional layers, since convolution only analyzes information in a limited neighborhood. In this section, self-attention is added to the GAN framework using an adaptation of the non-local model, allowing both the generator and the discriminator to effectively describe interactions between spatial areas that are far apart. Because of its self-attention module, we refer to the network as the self-attention generative adversarial network (SAGAN). SAGAN was introduced as a method for rapidly discovering internal signal representations that have global and long-range dependencies, and its specific architecture is shown in Figure 1.

To compute the attention map, the data features from the preceding hidden layer $x \in \mathbb{R}^{C \times N}$ are initially transformed into two feature spaces, $f$ and $g$, where $f(x) = W_f x$ and $g(x) = W_g x$:

$$\beta_{j,i} = \frac{\exp(s_{ij})}{\sum_{i=1}^{N} \exp(s_{ij})}, \quad s_{ij} = f(x_i)^{T} g(x_j), \qquad (1)$$

and $\beta_{j,i}$ denotes the degree to which the model pays attention to the $i$th location while synthesizing the $j$th area. $C$ is the number of channels in this example, while the number of feature locations from the preceding hidden layer is $N$. The attention layer’s output is $o = (o_1, o_2, \ldots, o_j, \ldots, o_N) \in \mathbb{R}^{C \times N}$, where

$$o_j = v\left(\sum_{i=1}^{N} \beta_{j,i}\, h(x_i)\right), \quad h(x_i) = W_h x_i, \quad v(x_i) = W_v x_i. \qquad (2)$$

In the above formulation, $W_g$, $W_f$, $W_h$, and $W_v$ are the weight matrices; they are implemented as convolutions. After a few training epochs on our dataset, we found that there was no appreciable performance drop when we changed the channel number from $C$ to $\bar{C} = C/k$, where $k$ = 1, 2, 4, 8. In all of our trials, we chose $k = 8$ ($\bar{C} = C/8$) for memory efficiency.

Additionally, we scale the output of the attention layer by a scale parameter and add back the input feature map. Consequently, the final result is given by

$$y_i = \gamma\, o_i + x_i, \qquad (3)$$

where $\gamma$ is a learnable scalar that is initially set to 0. Adding the learnable $\gamma$ enables the network to depend primarily on cues in the immediate neighborhood at first, as this is simpler, and then progressively learn to give greater weight to non-local evidence. The aim is to learn the simple task first and then gradually increase its difficulty. Both the generator and the discriminator in SAGAN are equipped with the proposed attention module and are trained alternately by minimizing the hinge version of the adversarial loss. The loss functions of the generator and the discriminator are shown in Formula (4).
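The attention computation described above can be illustrated with a small NumPy sketch. It is a minimal illustration, not the authors' implementation: the function name `self_attention`, the use of plain matrix products in place of convolutions, and the random weights are all assumptions made here. Features are stored as a `(C, N)` array, and the reduced channel count `C_bar = C // k` follows the channel reduction mentioned in the text.

```python
import numpy as np


def softmax(z, axis):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, W_f, W_g, W_h, W_v, gamma):
    """Self-attention over N feature locations (illustrative sketch).

    x     : (C, N) features from the preceding hidden layer
    W_f, W_g, W_h : (C_bar, C) projections; W_v : (C, C_bar)
            (assumed shapes; pointwise convolutions written as matrix products)
    gamma : learnable scalar, initialised to 0 in the text
    """
    f = W_f @ x                  # (C_bar, N)
    g = W_g @ x                  # (C_bar, N)
    h = W_h @ x                  # (C_bar, N)
    s = f.T @ g                  # s[i, j] = f(x_i)^T g(x_j), shape (N, N)
    beta = softmax(s, axis=0)    # normalise over i; column j sums to 1
    o = W_v @ (h @ beta)         # column j: v(sum_i beta[i, j] * h(x_i))
    return gamma * o + x, beta   # residual connection scaled by gamma


rng = np.random.default_rng(0)
C, N, k = 16, 64, 8
C_bar = C // k
x = rng.standard_normal((C, N))
W_f, W_g, W_h = (0.1 * rng.standard_normal((C_bar, C)) for _ in range(3))
W_v = 0.1 * rng.standard_normal((C, C_bar))
y, beta = self_attention(x, W_f, W_g, W_h, W_v, gamma=0.0)
```

With `gamma = 0.0` the layer returns its input unchanged, which matches the described behaviour of relying on local cues at the start of training; as `gamma` grows, the non-local term contributes more.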

2.2. SAGAN Network Training

We constructed a training set of 14,416 seismic signals to train the network. The dataset used was the internal dataset of the research group. First, five types of seismic signals with clearly identifiable events were selected, and these five large seismic signals were then cropped to obtain a large number of seismic signal patches, each with a size of 256 × 256. These seismic signals include both synthetic seismic signals and field seismic signals. The training set is therefore made up of five groups of seismic signals, each of which covers eight levels of damage. The ratio of the training set to the validation set to the test set is 8:1:1. To verify the performance of SAGAN, we select the commonly used Wavelet, CNN, and UNet approaches as the comparison methods. To better demonstrate the training process, Figure 2 shows the training curves of the SAGAN network: the generator’s adversarial loss, the discriminator’s adversarial loss, and the overall loss functions of the generator and the discriminator. The loss value of one epoch is obtained by averaging over multiple batches of seismic data. The number of epochs used in this experiment is 100, which was verified through multiple interpolation and denoising experiments.
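The patch extraction and the 8:1:1 split can be sketched as follows. This is an illustrative sketch only: the helper names `crop_patches` and `split_indices`, the non-overlapping stride, the random shuffle, and the example section size are assumptions, since the text does not state how the large sections were cut or how samples were assigned to each subset.

```python
import numpy as np


def crop_patches(section, size=256, stride=256):
    """Cut a 2D section (samples x traces) into size x size patches."""
    rows, cols = section.shape
    return [
        section[r:r + size, c:c + size]
        for r in range(0, rows - size + 1, stride)
        for c in range(0, cols - size + 1, stride)
    ]


def split_indices(n, ratios=(8, 1, 1), seed=0):
    """Shuffle n sample indices and split them by the given ratios."""
    idx = np.random.default_rng(seed).permutation(n)
    total = sum(ratios)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


# Hypothetical large section used only to exercise the helpers.
section = np.zeros((512, 768))
patches = crop_patches(section)
train_idx, val_idx, test_idx = split_indices(1000)
```

A smaller stride would produce overlapping patches and therefore more training samples from the same sections; whether the original dataset used overlap is not stated.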

The parameter settings used during the training of the SAGAN method are as follows. The input size of the network is set to 192 × 192, the size of the inference input is 256 × 256, the training batch size is set to 4, the epoch runs from 0 to 100, and the pre-trained weight models GB2A and DA are loaded. The GB2A model contains 14,776,866 parameters; the DA model contains 9,314,753 parameters. The learning rate of the generator is set to 0.0002, and the learning rate of the discriminator is set to 0.001. The Adam optimizer is used, with beta1 = 0.5 and beta2 = 0.999. The adversarial loss is the least squares loss. The output of the network is divided into three parts, namely the input value, the interpolated and denoised value, and the label value.
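Section 2.1 mentions the hinge version of the adversarial loss, while the settings above name the least squares loss. The sketch below writes out the commonly used forms of both so the two can be compared; it is an assumption that these standard forms correspond to what was implemented, and the function names and the 0/1 least-squares targets are illustrative choices. `d_real` and `d_fake` stand for discriminator scores on clean signals and on generator outputs.

```python
import numpy as np


def hinge_d_loss(d_real, d_fake):
    """Discriminator hinge loss: penalise real scores below 1 and fake scores above -1."""
    return float(np.mean(np.maximum(0.0, 1.0 - d_real))
                 + np.mean(np.maximum(0.0, 1.0 + d_fake)))


def hinge_g_loss(d_fake):
    """Generator hinge loss: raise the discriminator score of generated samples."""
    return float(-np.mean(d_fake))


def lsgan_d_loss(d_real, d_fake):
    """Least-squares discriminator loss with targets 1 (real) and 0 (fake)."""
    return float(0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2)))


def lsgan_g_loss(d_fake):
    """Least-squares generator loss: push fake scores towards the real target 1."""
    return float(0.5 * np.mean((d_fake - 1.0) ** 2))


# Toy discriminator scores, used only to exercise the functions.
d_real = np.array([1.5, 0.5])
d_fake = np.array([-2.0, 0.0])
```

The hinge loss stops penalising samples once they are on the correct side of the margin, whereas the least-squares loss keeps pulling every score towards its target value.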

Figure 3 displays the training process of the SAGAN and UNet networks, in which Figure 3a is the curve of PSNR versus epoch, and Figure 3b is the curve of SSIM versus epoch. Figure 3a,b demonstrate that the PSNR curves of SAGAN and UNet are both increasing, but the performance of SAGAN is better than that of UNet; the same holds for the SSIM curves. It can be seen that when the number of epochs reaches 100, the curves begin to converge.

The specific steps for reconstructing and denoising experiments using the SAGAN method are as follows:

- (1) Input seismic signals with noise and missing traces into the SAGAN network.

- (2) The input seismic signal is represented in matrix form, and the self-attention feature map is then obtained through the convolutional feature map decomposition operation of the self-attention mechanism.

- (3) Next, within the GAN, the seismic signal produced by the generator is treated as the fake seismic signal, and the clean seismic signal is treated as the true seismic signal. The parameters of the generative adversarial network are then trained iteratively.

- (4) Finally, the reconstructed and denoised seismic signal is output.

2.3. The Innovation of SAGAN Method

The incorporation of self-attention modules within convolutions represents a synergy aimed at capturing extensive, multi-level dependencies across various regions within images. Leveraging self-attention empowers the generator to craft images meticulously, orchestrating intricate fine details in each area to harmonize seamlessly with distant portions of the image. Beyond this, the discriminator benefits by imposing intricate geometric constraints more accurately onto the global structure of the image. Consequently, recognizing the potential and applicability of these attributes in seismic signal processing, a tailored SAGAN method has been proposed to optimize the interpolation and denoising processes within this specialized domain.

The selection of UNet, CNN, and Wavelet transform methods for comparison in subsequent experiments stems from their distinct structural and functional attributes. UNet’s architecture stands out for its ability to amalgamate information from diverse receptive fields and scales. However, each layer in UNet, despite its capacity to incorporate varied multi-scale information, operates within limited constraints, primarily focusing on localized information during convolutional operations. Even with different receptive fields, the convolutional unit confines its attention to the immediate neighborhood, overlooking the potential contributions from distant pixels to the current area. In contrast, the inherent capability of the attention mechanism, as incorporated in SAGAN, lies in its aptitude to capture extensive, long-range relationships; factor in global considerations; and synthesize information across multiple scales without confining itself solely to local regions. This stands as a distinctive advantage over methods like UNet, enabling a more holistic perspective when processing seismic signals. Furthermore, the inclusion of CNN serves as a benchmark, representing a classic deep learning methodology. Meanwhile, the utilization of Wavelet transform method adds a classical, traditional approach to the comparative analysis. This diverse selection of methodologies ensures a comprehensive evaluation, juxtaposing modern deep learning techniques with conventional yet established methods, enriching the exploration of seismic signal processing techniques.

3. Simultaneous Interpolation and Denoising Experiments

The seismic signal datasets used in this paper for training, verification, and testing are two-dimensional (2D) synthetic seismic signals, 2D marine seismic signals, three-dimensional (3D) land seismic signals, and 3D field seismic signals. The data sets utilized in this experiment have been sourced from the internal repositories of our research group, ensuring a controlled and specialized collection for our investigations. However, the availability of public data sets plays a pivotal role in advancing research in seismic exploration. Fortunately, repositories like https://wiki.seg.org/wiki/Open_data (accessed on 10 May 2020) offer valuable public data sets, although a standardized, widely accepted public data set remains an ongoing aspiration for scholars within the seismic exploration domain.

The pursuit of a standardized public data set stands as a shared goal among scholars in this field. Such a resource would not only facilitate benchmarking and comparison among various methodologies but also foster collaboration and innovation within the seismic exploration community. As researchers continue to commit their efforts to this pursuit, the creation of a standard public data set remains a collective ambition, promising significant advancements in the field.

In this section, the performance validation of the SAGAN method is undertaken through comprehensive simultaneous interpolation and denoising experiments. The experiments are meticulously divided into four distinct parts, each dedicated to the interpolation and denoising of specific types of seismic signals: 2D synthetic seismic signals, 2D marine seismic signals, 3D land seismic signals, and 3D field seismic signals. This structured approach allows for a comprehensive evaluation of the SAGAN method’s efficacy across diverse seismic signal types, ensuring a robust assessment of its performance in varying signal complexities and domains.

The damage process of seismic signals is divided into two steps. First, Gaussian white noise is artificially added, and the specific method is as follows:

$$y = x + b \qquad (5)$$

where $x$ is the original seismic signal, $b$ is the added Gaussian white noise, sigma is the variance of $b$, also known as the noise level, and $y$ is the noisy seismic signal. Because the noise contained in seismic signals is mostly random noise, noise is added as Gaussian white noise with different noise levels (variances), the same strategy adopted in [47,48].

The second step is to create the missing traces. The damaged seismic signal is obtained by applying the sampling matrix S to the noisy signal y. Four sampling rates are set in the experiments, namely 30%, 40%, 50%, and 60%, each of which represents the missing rate.
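The two-step damage process can be sketched in NumPy as below. Several points are assumptions made for illustration: the function name `damage`, the layout with traces stored as columns, random (rather than regular) selection of missing traces, and the treatment of the noise level as a standard deviation. The text calls sigma the variance, in which case its square root would be passed instead.

```python
import numpy as np


def damage(x, noise_std, missing_rate, seed=0):
    """Add Gaussian white noise, then zero out randomly chosen traces.

    x            : clean section, shape (samples, traces)
    noise_std    : standard deviation of the added noise (assumed meaning of the noise level)
    missing_rate : fraction of traces to remove, e.g. 0.3 for 30%
    """
    rng = np.random.default_rng(seed)
    y = x + rng.normal(0.0, noise_std, size=x.shape)   # step 1: y = x + b
    n_traces = x.shape[1]
    n_missing = int(round(missing_rate * n_traces))
    missing = rng.choice(n_traces, size=n_missing, replace=False)
    mask = np.ones(n_traces)
    mask[missing] = 0.0                                 # 0 marks a missing trace
    return y * mask[np.newaxis, :], mask                # step 2: apply the sampling mask


clean = np.zeros((256, 256))
damaged, mask = damage(clean, noise_std=10.0, missing_rate=0.3)
```

Writing the sampling operator as a per-trace mask is equivalent to multiplying by a diagonal 0/1 sampling matrix, which keeps the sketch short without forming the full matrix.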

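The interpolation and denoising performance metrics are RMSE, PSNR, and SSIM. The definitions of the three metrics are shown in Formulas (7)–(9).

$$\mathrm{RMSE} = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x_{ij} - \hat{x}_{ij}\right)^{2}} \qquad (7)$$

$$\mathrm{PSNR} = 10\log_{10}\frac{x_{\max}^{2}}{\mathrm{MSE}}, \quad \mathrm{MSE} = \mathrm{RMSE}^{2} \qquad (8)$$

$$\mathrm{SSIM} = \frac{\left(2\mu_{x}\mu_{\hat{x}} + c_{1}\right)\left(2\sigma_{x\hat{x}} + c_{2}\right)}{\left(\mu_{x}^{2} + \mu_{\hat{x}}^{2} + c_{1}\right)\left(\sigma_{x}^{2} + \sigma_{\hat{x}}^{2} + c_{2}\right)} \qquad (9)$$

where $M$ and $N$ are the numbers of traces and sampling points of the seismic signal, respectively; $x$ represents the original seismic signal; $\hat{x}$ is the interpolated and denoised seismic signal; $x_{\max}$ is the maximum value in the original seismic signal; $\mu_{x}$ and $\mu_{\hat{x}}$, respectively, represent the means of $x$ and $\hat{x}$; $c_{1}$ and $c_{2}$ are constants; $\sigma_{x}^{2}$ and $\sigma_{\hat{x}}^{2}$, respectively, represent the variances of $x$ and $\hat{x}$; and $\sigma_{x\hat{x}}$ represents the covariance of $x$ and $\hat{x}$.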

The smaller the value of RMSE, the better the interpolation and denoising effect. The higher the value of PSNR, the better the interpolation and denoising effect. The closer the value of SSIM is to 1, the better the interpolation and denoising effect. When the MSE value approaches 0, the PSNR value tends to infinity, but this situation is almost impossible to achieve.
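A small NumPy sketch of the three metrics follows. It assumes the standard textbook definitions; in particular, SSIM is computed here once over the whole section rather than over local windows, and the default constants `c1` and `c2` are placeholder values, since the text does not specify them.

```python
import numpy as np


def rmse(x, x_hat):
    """Root mean square error between the original and recovered signals."""
    return float(np.sqrt(np.mean((x - x_hat) ** 2)))


def psnr(x, x_hat):
    """Peak signal-to-noise ratio in dB, using the maximum of the original signal."""
    mse = np.mean((x - x_hat) ** 2)
    if mse == 0:
        return float("inf")          # identical signals: PSNR is unbounded
    return float(10.0 * np.log10(np.max(x) ** 2 / mse))


def ssim_global(x, x_hat, c1=1e-4, c2=9e-4):
    """Single-window SSIM over the whole section (c1, c2 are assumed values)."""
    mu_x, mu_y = x.mean(), x_hat.mean()
    var_x, var_y = x.var(), x_hat.var()
    cov = np.mean((x - mu_x) * (x_hat - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


original = np.array([[0.0, 1.0], [2.0, 3.0]])
recovered = original + 0.5
print(rmse(original, recovered), psnr(original, recovered), ssim_global(original, recovered))
```

The explicit check for a zero MSE mirrors the remark above that PSNR tends to infinity as the error vanishes.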

3.1. Two-Dimensional Synthetic Seismic Signal Interpolation and Denoising

In the experimental setup, a 2D synthetic seismic signal is intentionally subjected to eight distinct levels of damage. These levels are defined by two factors: a noise level of 10 or 20, combined with one of four sampling rates (30%, 40%, 50%, or 60%). This controlled setting aims to simulate diverse scenarios of signal degradation. To restore and denoise these impaired seismic signals, the Wavelet, CNN, UNet, and SAGAN methods are employed. The results of the signal recovery across all eight damage degrees are presented in Figure 4 and subsequent displays, providing a comprehensive visual representation of the recovery outcomes for each scenario. Figure 4a shows the clean 2D synthetic seismic signal, which comprises three distinct events. This signal has 256 traces, each containing 256 sampling points, and forms the basis for the subsequent comparative analyses of signal restoration and denoising efficacy.
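The eight damage levels are simply the combinations of the two noise levels with the four missing rates. A short enumeration, with variable names chosen here for illustration, makes the experimental grid explicit:

```python
from itertools import product

noise_levels = (10, 20)
missing_rates = (0.30, 0.40, 0.50, 0.60)

# Every (noise level, missing rate) pair is one damage level.
damage_levels = list(product(noise_levels, missing_rates))

for noise, rate in damage_levels:
    print(f"noise level {noise}, missing rate {rate:.0%}")
```

The same grid is reused for the 2D marine, 3D land, and 3D field experiments described in the following subsections.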

Upon scrutinizing the subgraphs of Figure 4, a notable observation arises. Specifically, in Figure 4k, where the noise level is set at 10 and the missing rate at 30%, a discernible discrepancy surfaces: the error map derived from the SAGAN method displays minimal residuals, contrasting starkly with the conspicuous residual evident in the error map generated by the Wavelet transform method across three events. Delving deeper into Figure 4m,o, a compelling trend emerges: at a constant missing rate, the escalation in noise level exerts a tangible influence on both interpolation and denoising outcomes. Of note, as the missing rate ascends, the performance of CNN in interpolation and denoising gradually deteriorates. In stark contrast, SAGAN exhibits a consistent ability to sustain continuity amidst escalating missing rates, underscoring its resilience in preserving continuous events.

Observations from Figure 4 elucidate a crucial aspect: at a 60% degree of missingness, the method exhibits certain limitations attributed to substantial contiguous missing regions. Nonetheless, it is notable that in most instances, the method proposed in this manuscript excels in simultaneous interpolation and denoising. Furthermore, the efficacy of SAGAN becomes evident, particularly as the extent of damage intensifies, owing to its adaptive utilization of the attention mechanism in recovering seismic signals. To better discern the recovery performance across the four approaches, Table 1 encapsulates the RMSE, PSNR, and SSIM values. These metrics aim to provide a comprehensive evaluation framework, allowing a nuanced comparison of the performance among these methodologies.

From the data in Table 1, it becomes apparent that at specific conditions, such as a noise level of 10 and a missing rate of 30% or a noise level of 10 with a missing rate of 40%, the recovery efficacy of UNet surpasses that of SAGAN. However, as the extent of damage escalates, SAGAN exhibits its strengths and advantages over UNet. To enhance the visual representation of these results, we organized the RMSE and PSNR values from Table 1 into box plots for improved visualization.

Figure 5 comprises two box plots. In Figure 5a, the median line of the SAGAN method sits below that of the comparative methods; since a smaller RMSE is better, this highlights SAGAN’s superior performance. In Figure 5b, the median line of the SAGAN method exceeds that of the comparative methods; since a higher PSNR is better, this likewise indicates superior performance.

3.2. Two-Dimensional Marine Seismic Signal Interpolation and Denoising

For the 2D marine seismic signal, eight distinct damage degrees were intentionally induced. Each setting combined a noise level of 10 or 20 with a sampling rate of 30%, 40%, 50%, or 60%. Employing the Wavelet, CNN, UNet, and SAGAN methods, the seismic signal underwent a process of interpolation and denoising. Figure 6 illustrates the resulting recovery outcomes across varying sampling rates. Figure 6a depicts the original 2D marine seismic signal. This intricate signal comprises multiple discernible events, with 256 traces and 256 sampling points per trace, encapsulating the complexity inherent in such seismic signals.

In Figure 6m, a notable observation emerges: the error map derived from the Wavelet transform method exhibits the most pronounced residuals. Notably, on distinct seismic traces, the performance of SAGAN outshines that of UNet and CNN, showcasing superior error reduction. Continuing the observation with Figure 6n, it becomes apparent that consecutively missing traces are challenging for all methods to reconstruct, and portions of the missing traces remain unreconstructed across methodologies. However, even under these demanding conditions, the interpolation and denoising outcomes achieved by SAGAN maintain a noticeable superiority over other methodologies.

In scrutinizing Figure 6, a discernible trend emerges: when confronted with lower damage degrees, both methods exhibit commendable restoration of the seismic signal. However, as the damage degree escalates, the advantage of SAGAN becomes increasingly pronounced. SAGAN’s adept use of adaptive attention mechanisms enables a more discerning observation of events within seismic signals, facilitating more effective recovery. This characteristic positions SAGAN as a superior choice for seismic signal interpolation and denoising when compared to Wavelet, CNN, and UNet. To facilitate a clearer comparison of the recovery effects among the four approaches, Table 2 compiles the RMSE, PSNR, and SSIM values. These metrics serve as quantitative indicators to discern and evaluate the performance discrepancies across these methodologies.

The data in Table 2 reveal a pertinent trend: at lower damage degrees in the seismic signal, the PSNR and SSIM values of SAGAN only marginally exceed those of UNet. However, as the damage degree intensifies, SAGAN consistently outperforms UNet, aligning with our prior theoretical analysis.

Figure 7 presents two box plots, offering additional insights. In Figure 7a, the median line representing the SAGAN method sits below that of the UNet, CNN, and Wavelet methods, which, for an error metric such as RMSE, indicates a smaller recovery error. In Figure 7b, the median line for the SAGAN method surpasses that of the UNet, CNN, and Wavelet methods, signaling its superior performance. These visualizations further corroborate the performance disparities across methodologies under varying degrees of damage in seismic signals.

To further verify the performance of SAGAN, 3D seismic signals are recovered below.

3.3. Three-Dimensional Land Seismic Signal Interpolation and Denoising

The investigation extends the experiments initially conducted in a 2D setting to 3D land and field seismic signals. To delineate the impact of varied damage intensities, the 3D land seismic signals were subjected to eight distinct damage degrees. Specifically, these degrees were configured with two noise levels, 10 and 20, and sampling rates of 30%, 40%, 50%, and 60% for each noise level. Employing the Wavelet, CNN, UNet, and SAGAN methodologies, the seismic signals underwent interpolation and denoising procedures. Figure 8 depicts the outcomes of the recovery process under different sampling rates. Figure 8a presents a slice of the original 3D land seismic signal, which comprises a multitude of events. This slice contains 256 traces, each with 256 sampling points, manifesting the intricacies inherent in the seismic signal.

Figure 8l distinctly highlights the discernible presence of artifacts, particularly evident when employing the UNet method. This observation underscores the inherent challenges encountered during the intricate process of reconstructing and denoising three-dimensional seismic signals. Analogous to their two-dimensional counterparts, the complexity amplifies when confronted with uninterrupted missing traces. However, the culmination of comprehensive experimentation and comparative analysis among various methodologies unequivocally demonstrates that the SAGAN method excels in achieving optimal interpolation efficacy.

Observing

Figure 8, it becomes apparent that for lower degrees of damage, all four methods restore the seismic signal well. However, as the damage escalates, the advantage in recovery quality tilts distinctly in favor of the SAGAN approach. This disparity is attributed to SAGAN’s adaptive use of attention mechanisms, tailored to the recovery of seismic signals. Visual inspection of the recovery results alone cannot fully capture the differences between the methods. To compare the recovery effects of the four approaches more precisely,

Table 3 meticulously presents the RMSE, PSNR, and SSIM values corresponding to each methodology.

Table 3 distinctly portrays a substantial disparity in the PSNR and SSIM values, showcasing the marked superiority of SAGAN over the UNet, CNN, and Wavelet methods. Notably, this pronounced performance divergence persists consistently when the missing rate of the seismic signal stands at 50%, irrespective of the noise level. Even at a missing rate of 60%, SAGAN retains a notable edge in the recovery process, aligning with the anticipated outcomes derived from theoretical analysis. These objective performance metrics, thus, validate and reinforce the empirical findings, affirming the advantageous proficiency of the SAGAN methodology in seismic signal recovery.
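For readers who want to reproduce such comparisons, the three metrics can be sketched as follows. This is a minimal sketch under assumptions: the peak value in PSNR is taken as the amplitude range of the reference signal, and `global_ssim` is a simplified single-window SSIM computed over the whole slice, whereas the standard SSIM averages over local windows. The function names are hypothetical, and the exact definitions behind the tables may differ.

```python
import numpy as np


def rmse(ref, rec):
    """Root-mean-square error between reference and recovered signals."""
    return float(np.sqrt(np.mean((ref - rec) ** 2)))


def psnr(ref, rec, data_range=None):
    """Peak signal-to-noise ratio in dB (peak = reference range, assumed)."""
    if data_range is None:
        data_range = float(ref.max() - ref.min())
    err = rmse(ref, rec)
    if err == 0.0:
        return float("inf")
    return float(20.0 * np.log10(data_range / err))


def global_ssim(ref, rec, data_range=None):
    """Simplified SSIM using one window that covers the whole slice."""
    if data_range is None:
        data_range = float(ref.max() - ref.min())
    c1 = (0.01 * data_range) ** 2  # usual stabilizing constants
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = ref.mean(), rec.mean()
    var_x, var_y = ref.var(), rec.var()
    cov = np.mean((ref - mu_x) * (rec - mu_y))
    num = (2.0 * mu_x * mu_y + c1) * (2.0 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


# Usage on placeholder data: a lower RMSE and a higher PSNR and SSIM
# indicate a better recovery.
rng = np.random.default_rng(0)
reference = rng.standard_normal((256, 256))
recovered = reference + 0.1 * rng.standard_normal((256, 256))
print(rmse(reference, recovered),
      psnr(reference, recovered),
      global_ssim(reference, recovered))
```

RMSE is better when lower, while PSNR and SSIM are better when higher, which is why the favorable direction differs between metrics.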

Figure 9 shows two box plots. The median line of the SAGAN method in

Figure 9a is lower than those of the comparison methods, while the median line of the SAGAN method in

Figure 9b is higher than those of the comparison methods.

The culminating segment of the experimentation process involves the recovery of the 3D field seismic signal, presenting the most formidable challenge within this experimental phase.

3.4. Three-Dimensional Field Seismic Signal Interpolation and Denoising

The complexity of 3D field seismic signals makes their interpolation and denoising particularly demanding. As for the land data, eight damage degrees were assigned to the 3D field seismic signal: two noise levels, 10 and 20, each combined with sampling rates of 30%, 40%, 50%, and 60%. Interpolation and denoising were then carried out with the Wavelet, CNN, UNet, and SAGAN methods. In

Figure 10, the interpolation and denoising results under diverse sampling rates are shown.

Figure 10a shows the original 3D field seismic signal. The slice consists of complex events and contains 256 traces with 256 sampling points each.

A discernible trend emerges from

Figure 10k,o, highlighting that at a missing rate of 30%, multiple methodologies exhibit superior efficacy in reconstructing seismic signals. However, as the missing rate escalates to 40%, 50%, and 60%, the effectiveness of interpolation gradually diminishes across the methods. Notwithstanding, amidst these escalating missing rates, the SAGAN method consistently maintains its superior performance in both interpolation and denoising, outperforming its counterparts. Notably, a keen observation from

Figure 10m,q reveals that heightened noise levels predominantly impact the inclined events within the seismic signal. Specifically, at a noise level of 20, there is a discernible increase in residual tilted events, signifying the nuanced impact of noise amplification on the intricate components of the seismic data.

Figure 10 presents a comprehensive overview, indicating that all methods demonstrate a foundational capacity to undertake the intricate tasks of reconstructing and denoising seismic signals. Nevertheless, the distinctive advantage of SAGAN becomes evident, particularly in handling seismic signals plagued by higher missing rates, showcasing its superior recovery performance in such scenarios. These nuances are further validated through the RMSE, PSNR, and SSIM values meticulously presented in

Table 4. Additionally,

Figure 11, depicted through two box plots, accentuates these disparities; in

Figure 11a, the median line attributed to the SAGAN method conspicuously rests below those of the UNet, CNN, and Wavelet methodologies. Conversely, in

Figure 11b, the median line associated with the SAGAN method notably surpasses those of the comparative UNet, CNN, and Wavelet methods. These visual representations offer compelling evidence supporting the pronounced advantages of the SAGAN approach in effectively handling seismic signal recovery across diverse conditions.

Table 4 shows that the performance advantage of the SAGAN method is less pronounced than when recovering synthetic seismic signals, which indicates that all methods recover seismic signals comparatively well in the actual seismic signal recovery task. However, when high-quality seismic signals are pursued in order to achieve better results in subsequent seismic signal interpretation tasks, the SAGAN method retains obvious advantages for both 2D and 3D seismic signal recovery.

The four experiments above all indicate that the SAGAN method has significant advantages in the objective performance metrics. However, neither the subjective interpolation and denoising maps nor the objective performance metrics should be considered in isolation; combining the two better illustrates the generalization of the method. Because high-quality seismic signals are provided so that geologists can better interpret them, the subjective maps are also crucial. In the above experiments, the subjective maps of the SAGAN method are also the clearest among the four methods in terms of preserving the events of the original seismic signals.

3.5. Interpolation Experiments with Various Noises

Next, we add two types of noise to the 3D field seismic signal, namely colored noise and Gaussian white noise, both of which are common in seismic signals. The above interpolation and denoising experiments are then repeated using the different methods. In order to simplify the layout,

Figure 12 only shows the added noise and does not show the interpolation and denoising results of different methods.

Table 5 gives the corresponding performance indicators.

It can be seen in

Table 5 that after adding two kinds of noise, the performance index of the SAGAN method is the best regardless of the missing rate, which once again verifies the advantages of multi-scale SAGAN.
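One way to generate such a noise mixture is sketched below. The text does not state how the colored noise was produced, so this sketch assumes a common construction: white Gaussian noise is shaped in the frequency domain so that its power spectrum falls off as 1/f^beta along the time axis. The function names and the parameters `beta`, `gaussian_level`, and `colored_scale` are hypothetical.

```python
import numpy as np


def colored_noise(shape, beta, rng):
    """Unit-variance noise with power spectrum ~ 1/f**beta along axis 0.

    Assumed construction (not from the paper): spectral shaping of white
    Gaussian noise, applied independently to every trace (column).
    """
    n = shape[0]
    white = rng.standard_normal(shape)
    spectrum = np.fft.rfft(white, axis=0)
    freqs = np.fft.rfftfreq(n)
    freqs[0] = freqs[1]  # avoid division by zero at the DC bin
    scale = freqs ** (-beta / 2.0)  # amplitude scaling gives 1/f**beta power
    shaped = np.fft.irfft(spectrum * scale[:, np.newaxis], n=n, axis=0)
    return shaped / shaped.std()  # normalize to unit standard deviation


def mixed_noise(shape, gaussian_level, colored_scale, beta, rng):
    """Sum of Gaussian white noise and scaled colored noise."""
    white = gaussian_level * rng.standard_normal(shape)
    colored = colored_scale * colored_noise(shape, beta, rng)
    return white + colored


rng = np.random.default_rng(0)
noise = mixed_noise((256, 256), gaussian_level=10.0,
                    colored_scale=10.0, beta=1.0, rng=rng)
print(noise.shape, float(noise.std()))
```

Setting `beta` to 0 leaves the noise white, while larger values concentrate the energy at low frequencies. Increasing `colored_scale` gives a stronger colored component.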

Finally, we increase the intensity of the colored noise and continue the interpolation and denoising experiments of 3D field seismic signals. The added noise images are shown in

Figure 13.

Table 6 lists the performance indicators of seismic signal interpolation and denoising using different methods under different circumstances.

Observing

Figure 13 and analyzing

Table 6, it can be found that when 1.5 times the colored noise is added and the Gaussian noise level is 10, the superposition of the two noises may produce a neutralizing effect that weakens the overall noise, so the performance metrics after interpolation and denoising are generally better than without colored noise. However, when the noise level is 20, the overall effect is not as good as when there is only Gaussian noise, which suggests that the difficulty of interpolation and denoising increases when multiple noises coexist. Overall, the proposed SAGAN method still has advantages.

3.6. Comparison of Running Times of Different Methods

In the last part of the experiments, we analyze the computational efficiency by comparing the testing time of the different methods, provided that the accuracy of interpolation and denoising is ensured. If the testing time is also advantageous, then the long training time is a worthwhile cost.

Table 7 lists the running time results after using different methods to interpolate and denoise different types of seismic signals.

We analyze the data in

Table 7. For the two-dimensional synthetic seismic signal, the running time of the Wavelet transform is 4.13 s; relative to this, the running time increases by 4.8% for CNN, decreases by 34.1% for UNet, and decreases by 36.1% for SAGAN. Compared with UNet, the running time of SAGAN is reduced by 2.9%. For the three-dimensional field seismic signal, the Wavelet transform takes 4.94 s. Compared with the Wavelet transform, the CNN running time is reduced by 18.2%, the UNet running time is reduced by 33.6%, and the SAGAN running time is reduced by 41.7%. Compared with UNet, the running time of SAGAN is reduced by 12.2%. It can be found from

Table 7 that although the deep learning methods require a lot of time to train the network parameters in the early stage, once the network training is completed, their test time generally has an advantage over the traditional method, and the interpolation and denoising accuracy is also improved. Therefore, deep learning methods have attracted more and more attention from scholars in the field of seismic signal processing. Each method has its own unique contribution to seismic signal processing and has accelerated the intelligent development of seismic exploration.
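The percentage comparisons in this subsection can be cross-checked with simple arithmetic. The sketch below uses only the Wavelet running times and the percentage changes quoted in the text; the running times it derives for the other methods are implied values, not the entries of the table, and the helper names are hypothetical.

```python
# Wavelet running times (s) and changes relative to Wavelet, as quoted above.
wavelet_time = {"2D synthetic": 4.13, "3D field": 4.94}
change_vs_wavelet = {
    "2D synthetic": {"CNN": +0.048, "UNet": -0.341, "SAGAN": -0.361},
    "3D field": {"CNN": -0.182, "UNet": -0.336, "SAGAN": -0.417},
}


def implied_times(base, changes):
    """Running times implied by a baseline and relative changes."""
    return {method: base * (1.0 + change) for method, change in changes.items()}


def relative_reduction(t_old, t_new):
    """Fractional reduction of t_new with respect to t_old."""
    return 1.0 - t_new / t_old


times = {
    signal: implied_times(wavelet_time[signal], change_vs_wavelet[signal])
    for signal in wavelet_time
}

for signal, t in times.items():
    drop = relative_reduction(t["UNet"], t["SAGAN"])
    print(f"{signal}: SAGAN vs UNet reduction = {drop:.1%}")
```

Recomputed from the rounded percentages, the reduction of SAGAN relative to UNet is about 3.0% for the 2D synthetic signal and about 12.2% for the 3D field signal. The small difference from the quoted 2.9% is consistent with rounding of the reported values.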