1. Introduction

Grains are the foundation of social development, and efficient and accurate classification of agricultural lands can facilitate the control of crop production for social stability. According to statistics published by the Food and Agriculture Organization of the United Nations, among various grains, rice (Oryza sativa L.) accounts for 20% of the world’s dietary energy and is the staple food of >50% of the world’s population [1]. However, frequent natural disasters such as typhoons, heavy rains, and droughts hinder rice production and can cause substantial financial losses for smallholder farmers [2,3,4,5], particularly in intensive agricultural practice areas such as Taiwan.

Many countries have implemented compensatory measures for agricultural losses caused by natural disasters [6,7]. Currently, in situ disaster assessment of agricultural lands is mostly conducted manually worldwide. According to the Implementation Rules of Agricultural Natural Disaster Relief in Taiwan, township offices must perform a preliminary disaster assessment within 3 days of a disaster and complete a comprehensive disaster investigation within 7 days. After reporting to the county government, township offices must conduct sampling reviews within 2 weeks. A sampled agricultural paddy with ≥20% lodging is considered a disaster area; to be eligible for cash and project assistance for rapidly restoring damaged agricultural land, a sampling accuracy of ≥90% is required. All assessments are conducted through estimation and random sampling because of the vast land area involved and labor constraints. Consequently, assessments frequently yield inaccurate and overdue loss reports. In addition, local authorities often deliberately overreport losses in order to obtain generous subsidies from the central government and gain favor from local communities. Such overreporting in turn affects disaster control and relief policies. Moreover, in irregularly shaped damaged fields, directly estimating the damaged area with the unaided eye is difficult. Furthermore, the affected farmers are required to preserve evidence of the damage during assessment; thus, they are not allowed to resume cultivation for at least 2 weeks, which considerably affects their livelihood. Therefore, to provide a quantitative assessment method and rapidly alleviate farmers’ burdens, developing a comprehensive and efficient agricultural disaster assessment approach to accelerate the disaster relief process is imperative.

Remote sensing has been broadly applied to disaster assessment [8,9,10,11]. To reduce compensation disputes over the interpretation of crop lodging after an agricultural disaster, many remote sensing techniques have been applied to agricultural disaster assessment [12,13,14]. For example, satellite images captured through synthetic aperture radar (SAR) have been widely used for agricultural management, classification, and disaster assessment [15]. However, limited by the fixed capturing time and spatial resolution, satellite images often cannot provide accurate real-time data for disaster interpretation [16]. In addition, SAR requires constant retracking during imaging because of the fixed baseline length, resulting in low spatial and temporal consistency levels and thus reducing the applicability of SAR images in disaster interpretation [15].

Unmanned aerial vehicles (UAVs), which have been rapidly developed in the past few years, exhibit advantages of low cost and easy operation [17,18,19,20]. UAVs fly at lower heights than satellites and can instantly capture bird’s-eye view images with a high subdecimeter spatial resolution by flying designated routes according to demands. Owing to the advanced techniques of computer vision and digital photogrammetry, UAV images can be used to produce comprehensive georectified image mosaics, three-dimensional (3D) point cloud data [21], and digital surface models (DSMs) through many techniques and image-based modeling (IBM) algorithms such as Structure-from-Motion (SfM), multiview stereo (MVS), scale-invariant feature transform (SIFT), and speeded-up robust features (SURF) [22,23,24]. Therefore, UAVs have been widely applied in production forecasting for agricultural lands [25,26,27,28,29], carbon stock estimation in forests [30], agricultural land classification, and agricultural disaster assessment [31,32,33,34,35]. In addition, height data derived from UAV image-generated DSMs have received considerable attention because studies have revealed that height data contribute critically to classification and can improve classification accuracy compared with the use of UAV images only [36,37].

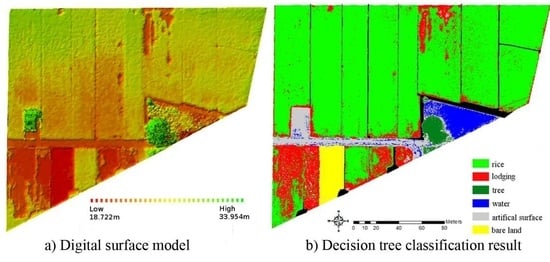

This study proposes a comprehensive and efficient rice lodging interpretation method entailing the application of a spatial and spectral hybrid image classification technique to UAV imagery. The study site was an approximately 306-ha crop field that had recently experienced agricultural losses in southern Taiwan. Specifically, spatial information including height data derived from a UAV image-generated DSM and textural features of the site was gathered. In addition to the original spectral information regarding the site, single feature probability (SFP), representing the spectral characteristics of each pixel of the UAV images, was computed to signify the probability metric based on the pixel value and training samples. Through the incorporation of the spatial and spectral information, the classification accuracy was assessed using maximum likelihood classification (MLC) [38,39] and decision tree classification (DTC) [40]. Finally, the proposed hybrid image classification technique was applied to the damaged paddies within the study site to interpret the rice lodging ratio.

2. Materials and Methods

Figure 1 depicts the flowchart of the study protocol, starting with UAV imaging. DSM specifications were formulated by applying IBM 3D reconstruction algorithms to UAV images. Moreover, texture analysis was conducted followed by image combination to produce spatial and spectral hybrid images. After training samples were selected from the site, the SFP value was computed, which was later used as the threshold value in the DTC process. Finally, image classification accuracy was evaluated using MLC and DTC, and the rice lodging ratio at the study site was interpreted.

2.1. Study Site

The study site is located in Taibao City on the Chianan Plain in Chiayi County, which has a rice production area of approximately 12,000 ha, the second largest of any county in Taiwan (Figure 2).

Farmers in Taibao City harvest rice twice a year during June–July and September–November; however, they frequently experience rice lodging caused by heavy rains and strong storms associated with weather fronts and typhoons.

On 3 June 2014, a record-breaking rainfall of 235.5 mm/h in Chiayi County associated with a frontal event caused considerable agricultural losses of approximately US$ 80,000. The Chiayi County Government executed an urgent UAV mission to assess the area of rice lodging on 7 June 2014. In total, 424 images were acquired across 306 ha by using an Avian-S fixed-wing UAV with a spatial resolution of 5.5 cm/pixel at a flight height of 233 m, with approximately 3 ha of the rice field used as the study site. The UAV was equipped with a lightweight Samsung NX200 (Samsung Electronics Co., Ltd., Yongin, South Korea) digital camera with a 20.3-megapixel APS-C CMOS sensor, an image size of 23.5 mm × 15.7 mm, and a focal length of 16 mm. The camera recorded data in the visible spectrum by using an RGB color filter. The weather condition was sunny with 10-km/h winds at ground level.

To improve the geometric accuracy of the UAV images in this study, nine ground control points (GCPs) were deployed at highly distinguishable features such as the edges of paddies or road lines. A GeoXH GPS handheld with real-time H-Star technology for subfoot (30 cm) nominal accuracy was placed on the ground to acquire the coordinates of the nine GCPs (Figure 3). The rice lodging images and ground truth information were obtained through field surveys. A Trimble M3 Total Station was employed to measure the height difference between the lodged and healthy rice (Figure 4). The study site covers approximately 3 ha and features six land cover types: rice, lodging, tree, water body, artificial surface, and bare land. According to the field survey, the cultivar in the study site is TaiKeng 2 (O. sativa L. c.v. Taiken 2, TK 2). With excellent consumption quality, TK 2 is one of the most popular japonica rice varieties in Taiwan with a plant height of 111.2–111.3 cm [41]. A quick observation revealed the lodged rice to be at least 20 cm lower than the healthy rice (Figure 4b), resulting in the plant height being lower than 90 cm (Figure 5).

2.2. Image-Based Modeling

IBM is a new trend of photometric modeling for generating realistic and accurate virtual 3D models of the environment. Through the use of the invariant feature points of images detected through SIFT, the SfM technique was adopted to simulate the moving tracks of cameras and to identify the target objects in a 3D environment, and the feature points were matched in 3D coordinates. Subsequently, the SfM-generated weakly supported surfaces with a low point cloud density were reinforced using multiview reconstruction software, CMPMVS, to complete 3D model reconstructions. In brief, IBM can be divided into three major steps, namely SIFT, SfM, and CMPMVS; detailed procedures were provided by Yang et al. [42].

SIFT entails matching the feature points to the local features on images that are invariant to image rotation and scaling and partially invariant to changes in illumination and 3D camera viewpoint [43]. SIFT produces highly distinctive features for object and scene recognition by searching for stable features across all possible scales by using a continuous scale function known as scale space [43,44,45,46].
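For orientation, the scale space referred to above is commonly written as follows in the standard formulation of SIFT. The symbols are notation introduced here for illustration and are not used elsewhere in this paper: I is the input image, G is a Gaussian kernel, σ is the scale, and k is a constant ratio between adjacent scales.

```latex
L(x, y, \sigma) = G(x, y, \sigma) * I(x, y), \qquad
G(x, y, \sigma) = \frac{1}{2\pi\sigma^{2}}
  \exp\!\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)

D(x, y, \sigma) = L(x, y, k\sigma) - L(x, y, \sigma)
```

Here * denotes convolution. Candidate feature points are the local extrema of the difference-of-Gaussians function D over both position and scale, which is what makes the detected features stable under changes in image scale.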

Then, SfM determines the spatial structure according to the motion of the camera and 3D coordinates of the objects by matching identical feature points obtained using SIFT on different images [47,48]. The exchangeable image file format (EXIF) metadata recorded by digital cameras is used to obtain the basic image attributes for estimating motion tracking. Subsequently, the camera position is estimated using the epipolar geometry of the feature points. The relationship between the corresponding feature points of the two images is then identified in the trajectory of the feature points. Through the optimization of the estimated point positions on multiple overlapping images by applying bundle adjustment, the coordinates are calculated by intersection and resection to determine the interior and exterior orientation elements of the camera and the point positions. According to the coordinates of the corresponding positions, a point cloud comprising 3D coordinates and RGB color data is formed.
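The bundle adjustment step can be summarized by the usual reprojection-error objective. This is the textbook form, given as a sketch only; the notation is an assumption introduced here and is not taken from the software used in this study.

```latex
\min_{\{P_i\},\,\{X_j\}} \;
\sum_{i=1}^{m} \sum_{j=1}^{n}
v_{ij} \, \bigl\lVert x_{ij} - \pi(P_i, X_j) \bigr\rVert^{2}
```

In this expression, P_i collects the interior and exterior orientation parameters of image i, X_j is the 3D position of feature point j, x_ij is the observed image position of point j in image i, π is the camera projection function, and v_ij equals 1 when point j is visible in image i and 0 otherwise. Minimizing the sum jointly refines the camera parameters and the 3D point coordinates.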

Finally, CMPMVS, a multiview reconstruction program based on clustering views for multiview stereo and patch-based multiview stereo algorithms, is used to reinforce the SfM-generated weakly supported surfaces of low-textured, transparent, or reflective objects with low point cloud density (e.g., greenhouses and ponds in agricultural fields). CMPMVS can be used to generate a textured mesh and reconstruct the surface of the final 3D model by using a multiview stereo application [49,50].

2.3. Texture Analysis

Texture analysis is considered an important method of measuring the spatial heterogeneity of remotely sensed images, including pattern variability, shape, and size [51]. By measuring the frequency of gray-tone changes or color-space correlation, texture analysis can describe image details and determine the relationship between pixels [52,53].

Texture analysis approaches are typically grouped into four categories, namely structural, statistical, model-based, and transform approaches [54], of which the statistical approach indirectly represents the texture by using the nondeterministic properties that govern the distributions and relationships between the gray tones of an image; this approach has been demonstrated to outperform the transform-based and structural methods. Regarding the measurement level, the statistical approach can be categorized into first-order statistics, such as mean and variance, and second-order statistics, such as angular second moment (ASM), entropy, contrast, correlation, dissimilarity, and homogeneity [55,56,57,58]. For second-order statistics, the spatial distribution of spectral values is considered and measured using a gray-level co-occurrence matrix, which presents the texture information of an image in adjacency relationships between specific gray tones. According to a study on human texture discrimination, the textures in gray-level images are spontaneously discriminated only if they differ in second-order moments. Therefore, six second-order statistics, namely ASM, entropy, contrast, correlation, dissimilarity, and homogeneity, were employed in this study to measure the texture characteristics. In addition, two first-order statistics, namely mean and variance, were evaluated for comparison.
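The six second-order statistics named above can be illustrated with a minimal sketch that builds a gray-level co-occurrence matrix for one pixel offset and evaluates the commonly used definitions. The function names, the single horizontal offset, the symmetric counting, and the natural logarithm in the entropy are all assumptions made for illustration; the window size, quantization, and offsets used in this study are not specified here.

```python
import math

def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one pixel offset.

    `image` is a list of rows of integer gray levels in [0, levels).
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1.0
                counts[j][i] += 1.0  # count each pair in both directions
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for the requested offset")
    return [[v / total for v in row] for row in counts]

def texture_measures(p):
    """Six second-order statistics of a normalized, symmetric GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    # The matrix is symmetric, so row and column means/variances coincide.
    mu = sum(i * v for i, _, v in cells)
    var = sum((i - mu) ** 2 * v for i, _, v in cells)
    measures = {
        "asm": sum(v * v for _, _, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }
    # Correlation is undefined for a constant window; 1.0 is used by convention.
    measures["correlation"] = (
        sum((i - mu) * (j - mu) * v for i, j, v in cells) / var
        if var > 0 else 1.0
    )
    return measures

# Hypothetical two-level windows: a striped one and a uniform one.
striped = texture_measures(glcm([[0, 1, 0, 1], [0, 1, 0, 1]], levels=2))
uniform = texture_measures(glcm([[0, 0, 0, 0], [0, 0, 0, 0]], levels=2))
```

In practice such measures are evaluated in a moving window so that each pixel receives a texture value. The striped window yields high contrast and low homogeneity, and the uniform window yields the opposite, which is the kind of difference a classifier can exploit.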

2.4. Single Feature Probability

SFP, a pixel-based Bayesian classifier, is used to compute a probability metric (with a value ranging between 0 and 1) for each pixel of the input image based on the pixel value and training samples. The Bayesian network is appropriate because of its ability to handle both continuous and discrete variables, learn from training samples, and return a query metric for candidate pixels demonstrating a goodness of fit to the training samples. Within the selected training areas, representative pixels of land use types are used for computing pixel cue metrics to train the pixel classifier. Candidate pixels from images are then evaluated by the pixel classifier to quantify the degree to which they resemble the training pixels. The pixel cue metrics can include human visual attributes, such as color/tone, texture, and site/situation, and also visually undetectable information, such as spectral transforms or vegetation indices. Higher probability values are assigned to pixels whose values are similar to the training samples, and lower values to pixels whose values differ from them [59,60,61]. Subsequently, the feature probability layer (ranging between 0 and 1) is outputted, with each pixel value representing the probability of being the object of interest [62].
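To make the idea of a per-pixel probability metric concrete, the following sketch computes a posterior probability for a single feature value from target and background training samples. It is a simplified two-class Gaussian Bayes stand-in and is not the SFP implementation used in this study, whose internal details are not given here; the function names, the Gaussian assumption, and the equal prior are all assumptions.

```python
import math

def gaussian_pdf(x, mean, std):
    """Normal density at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def fit(samples):
    """Sample mean and standard deviation (floored to avoid division by zero)."""
    mean = sum(samples) / len(samples)
    var = sum((s - mean) ** 2 for s in samples) / (len(samples) - 1)
    return mean, max(math.sqrt(var), 1e-6)

def feature_probability(pixel_value, target_samples, background_samples, prior=0.5):
    """Posterior probability, in [0, 1], that a pixel resembles the target samples."""
    t_mean, t_std = fit(target_samples)
    b_mean, b_std = fit(background_samples)
    p_target = gaussian_pdf(pixel_value, t_mean, t_std) * prior
    p_background = gaussian_pdf(pixel_value, b_mean, b_std) * (1.0 - prior)
    if p_target + p_background == 0.0:
        return 0.5  # both densities underflowed; the evidence is uninformative
    return p_target / (p_target + p_background)

# Hypothetical digital numbers for one band.
target = [100, 110, 120]
background = [10, 20, 30]
probability_layer = [feature_probability(v, target, background) for v in (20, 65, 110)]
```

Applying the function to every pixel of a band produces a layer with values between 0 and 1, analogous in form to the feature probability layer described above: values near the target training samples score close to 1 and values near the background samples score close to 0.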

2.5. Image Classification

Various classification algorithms have been applied to remotely sensed data for terrain pattern recognition [8,63]. Two supervised classification algorithms, namely MLC and DTC, were employed in the current study, and the classification accuracy levels were assessed.

2.5.1. Maximum Likelihood Classification

The maximum likelihood decision rule is centered on probability [64]. In MLC, the mean vector and covariance matrix in each training set class are calculated, under the assumption that all characteristic values are normally distributed. Subsequently, the probability of belonging is calculated for the unknown pixels, and the pixels are categorized into the training set class with the highest probability [65,66,67]. Because MLC is a supervised classifier, the classification highly depends on the centroid and variation of training sets. Therefore, the selection of training data is crucial in MLC; in this study, two selection criteria were followed: representativeness and efficiency.
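The decision rule described above can be sketched for the two-feature case, where the covariance matrix is 2 × 2 and can be inverted by hand. The sketch assumes equal prior probabilities for all classes; the feature pair (a band value and a height in meters) and all training values are hypothetical and are not data from this study.

```python
import math

def class_stats(samples):
    """Mean vector and 2x2 sample covariance (sxx, sxy, syy) of a class."""
    n = len(samples)
    mx = sum(s[0] for s in samples) / n
    my = sum(s[1] for s in samples) / n
    sxx = sum((s[0] - mx) ** 2 for s in samples) / (n - 1)
    syy = sum((s[1] - my) ** 2 for s in samples) / (n - 1)
    sxy = sum((s[0] - mx) * (s[1] - my) for s in samples) / (n - 1)
    return (mx, my), (sxx, sxy, syy)

def log_likelihood(pixel, mean, cov):
    """Log of the bivariate normal density at `pixel`."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy  # must be positive (non-degenerate samples)
    dx, dy = pixel[0] - mean[0], pixel[1] - mean[1]
    # Squared Mahalanobis distance using the closed-form 2x2 inverse.
    mahal = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
    return -math.log(2.0 * math.pi) - 0.5 * math.log(det) - 0.5 * mahal

def classify(pixel, training):
    """Assign the pixel to the class with the highest likelihood."""
    stats = {name: class_stats(samples) for name, samples in training.items()}
    return max(stats, key=lambda name: log_likelihood(pixel, *stats[name]))

# Hypothetical training pixels: (band value, height in m).
training = {
    "rice": [(120, 1.10), (125, 1.05), (118, 1.15), (122, 1.00)],
    "lodging": [(150, 0.60), (155, 0.70), (148, 0.55), (160, 0.75)],
}
label_a = classify((121, 1.08), training)
label_b = classify((152, 0.62), training)
```

Because the class means and covariances are estimated directly from the training pixels, a poorly chosen training set shifts the class centroid or inflates its spread, which is why the selection of training data matters so much for this classifier.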

2.5.2. Decision Tree Classification

DTC, comprising internal and external nodes connected by branches, is a hierarchical model composed of decision rules that recursively split independent variables into homogeneous zones [68]. Each internal node is associated with a decision rule, whereas each external node indicates the classification results. The tree-structured DTC, a widely used classification technique in machine learning, has been extensively applied to various data analysis systems such as electronic sensors [69] and land cover classification [70]. This study used the classification and regression tree (CART) algorithm to construct binary trees, with appropriate SFP information employed as the decision rule for each internal node.
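A binary tree whose internal nodes test SFP values against thresholds can be sketched as follows. The node order, the threshold values, and the choice of lodging as the default final leaf are hypothetical and chosen only for illustration; they are not the tree fitted in this study.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Leaf:
    label: str  # external node: the land cover class assigned

@dataclass
class Node:
    feature: str      # which SFP layer this internal node tests
    threshold: float  # hypothetical SFP cutoff
    if_true: Union["Node", Leaf]
    if_false: Union["Node", Leaf]

def build_cascade(rules, default):
    """Chain (feature, threshold) rules into a binary tree ending in `default`."""
    tree = Leaf(default)
    for feature, threshold in reversed(rules):
        tree = Node(feature, threshold, Leaf(feature), tree)
    return tree

def classify(tree, sfp):
    """Follow the decision rules from the root to an external node."""
    while isinstance(tree, Node):
        tree = tree.if_true if sfp[tree.feature] >= tree.threshold else tree.if_false
    return tree.label

# Hypothetical rule order and thresholds for the six land cover types.
TREE = build_cascade(
    [("water body", 0.8), ("tree", 0.7), ("artificial surface", 0.7),
     ("bare land", 0.7), ("rice", 0.6)],
    default="lodging",
)

pixel = {"water body": 0.1, "tree": 0.2, "artificial surface": 0.1,
         "bare land": 0.3, "rice": 0.9}
label = classify(TREE, pixel)
```

Each internal node applies one rule and each leaf returns a class, matching the node roles described above. In a fitted tree, the split variables and thresholds would be learned from the training samples rather than set by hand.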

4. Discussion

In practice, the identification of healthy and lodged rice within paddy fields is the most critical task for disaster relief compensation schemes, which are based on the precise evaluation of the proportion of lodged rice. Because strict decision criteria were adopted in DTC and lodged rice was the last external node and target object in the decision tree, lodged rice had a comparatively high commission error in this study. To minimize the commission error in rice/lodging identification, two additional image processing steps, extracting and thresholding, were employed to enhance the proposed approach under realistic conditions.

First, paddy fields were extracted from the whole image by using a cadastral boundary map. The boundaries of the paddy fields were distinguished, and the area outside the paddy fields was excluded by a mask layer and exempted from further classification and analysis. Second, considering that the healthy TK2 rice crop typically has a height of 1.1 m and considering the domino effect of lodging [4], the lodging area should be larger than 1 m². Therefore, a threshold of 1 m² was adopted to exclude scattering noise, a common effect of pixel-based classification on fine spatial resolution imagery, from the lodging area. Under these two practical constraints, the rice paddy fields were extracted, and the scattering noise of lodged rice was reduced (Figure 13).
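The two constraints can be sketched as a masking step followed by removal of small connected patches. The sketch below is an illustration under stated assumptions: 4-connectivity, patches strictly smaller than the pixel equivalent of 1 m² being discarded, and boolean masks stored as lists of rows. The function names are hypothetical, and the 5.5 cm pixel size is the spatial resolution reported for the UAV images.

```python
import math
from collections import deque

def min_pixels_for_area(area_m2, pixel_size_m):
    """Smallest pixel count whose ground area reaches area_m2."""
    return math.ceil(area_m2 / (pixel_size_m ** 2))

def remove_small_patches(mask, min_pixels):
    """Clear 4-connected groups of True cells smaller than min_pixels."""
    rows, cols = len(mask), len(mask[0])
    cleaned = [row[:] for row in mask]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            patch, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:  # breadth-first flood fill of one patch
                y, x = queue.popleft()
                patch.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(patch) < min_pixels:
                for y, x in patch:
                    cleaned[y][x] = False
    return cleaned

def lodging_ratio(lodged, paddy):
    """Share of paddy pixels classified as lodged (paddy acts as the mask)."""
    inside = sum(p for row in paddy for p in row)
    hits = sum(l and p for lr, pr in zip(lodged, paddy) for l, p in zip(lr, pr))
    return hits / inside if inside else 0.0

threshold_pixels = min_pixels_for_area(1.0, 0.055)  # 1 m² at 5.5 cm/pixel
```

At a 5.5 cm pixel size, 1 m² corresponds to 331 pixels after rounding up, so isolated lodging detections of only a few pixels are discarded. The resulting ratio per paddy can then be compared against the ≥20% lodging criterion used in the relief rules.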

In the future, establishing photo-identifiable GCPs and checkpoints (CPs) at locations with invariant properties is essential to providing calibration information for geometrically rectifying UAV images. Invariant locations such as the edge of a concrete facility, a manhole cover along a public road, or the central separation islands of public roads are adequate candidates for GCPs and CPs. With a priori establishment of GCPs and CPs at invariant locations, the geometric correction of UAV images can be improved so as to further enhance image applications. Moreover, establishing an agricultural UAV monitoring system to provide regular inventories and environmental surveys of crops can benefit farmers and government agencies in agricultural disaster relief compensation.