StandFood: Standardization of Foods Using a Semi-Automatic System for Classifying and Describing Foods According to FoodEx2

Abstract

1. Introduction

2. Materials and Methods

2.1. FoodEx2 Data

2.2. StandFood

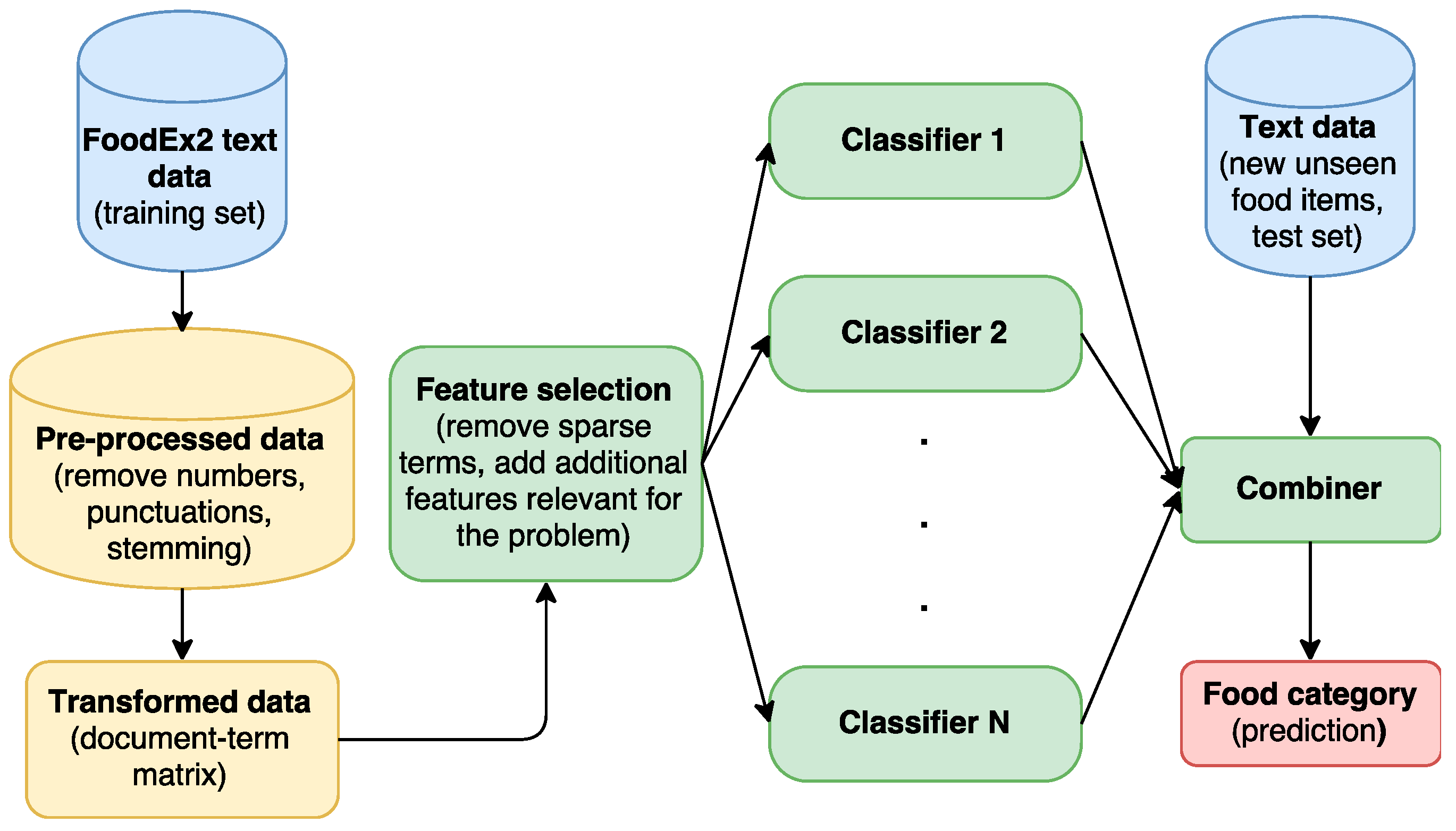

2.2.1. Classification Part

- Pre-processing of the instances (food items names)

- Feature selection (building a document-term matrix and adding more relevant features)

- Model training
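
The first two steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the exact pre-processing operations are not listed here, so lowercasing and keeping alphabetic tokens are assumed, and `preprocess` and `document_term_matrix` are hypothetical helper names. Model training is left out because it depends on the chosen classifier.

```python
import re
from collections import Counter


def preprocess(name):
    """Lowercase a food item name and keep only alphabetic tokens (assumed steps)."""
    return re.findall(r"[a-z]+", name.lower())


def document_term_matrix(names):
    """Return (vocabulary, rows), where rows[i][j] counts term j in name i."""
    tokenized = [preprocess(name) for name in names]
    # The vocabulary is the sorted set of all tokens seen in the food item names.
    vocabulary = sorted({token for tokens in tokenized for token in tokens})
    rows = []
    for tokens in tokenized:
        counts = Counter(tokens)
        rows.append([counts[term] for term in vocabulary])
    return vocabulary, rows


# Food item names taken from the example table of categories in this article.
names = ["Barley grains", "Buckwheat flour", "Oat flakes", "Mushroom soup"]
vocabulary, dtm = document_term_matrix(names)
```

Each row of `dtm` is the feature vector of one food item name; additional features would be appended as extra columns before a classifier is trained.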

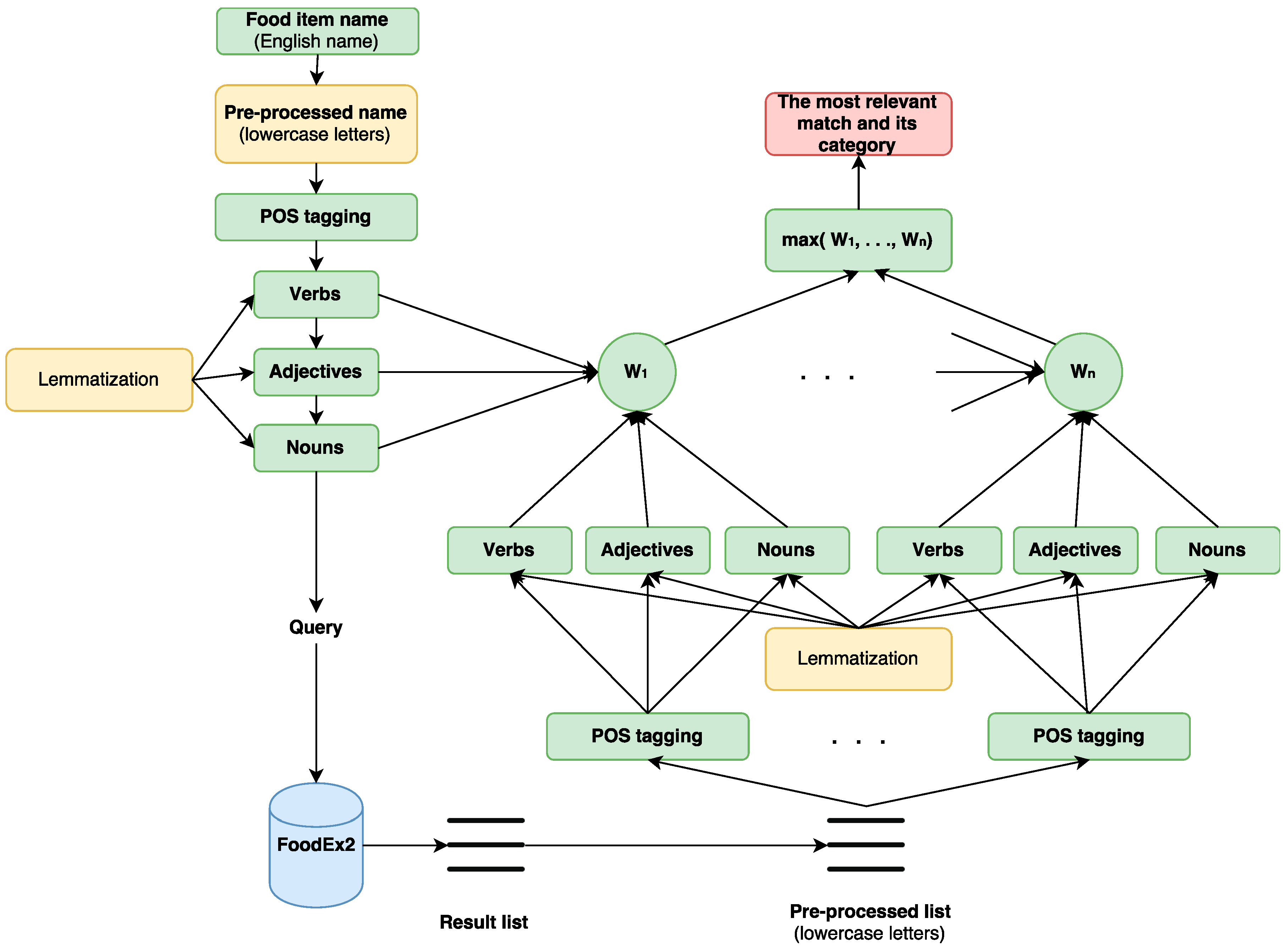

2.2.2. Description Part

Ai = {adjectives extracted from Di},

Vi = {verbs extracted from Di},
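
A minimal sketch of how such sets could be built is given below. It assumes that Di is the i-th food item name, that its tokens have already been part-of-speech tagged, and that the tags follow the Penn Treebank convention (adjective tags start with `JJ`, verb tags with `VB`). The function name `extract_sets` and the hand-assigned tags in the example are hypothetical.

```python
def extract_sets(tagged_tokens):
    """Build the adjective set A_i and the verb set V_i from (word, tag) pairs.

    Assumes Penn Treebank-style tags: JJ* for adjectives, VB* for verbs.
    """
    adjectives = {word.lower() for word, tag in tagged_tokens if tag.startswith("JJ")}
    verbs = {word.lower() for word, tag in tagged_tokens if tag.startswith("VB")}
    return adjectives, verbs


# Hand-tagged example (tags are illustrative, not the output of a real tagger).
tagged = [("Cabbage", "NN"), ("Chinese", "JJ"), ("boiled", "VBN")]
A_i, V_i = extract_sets(tagged)
```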

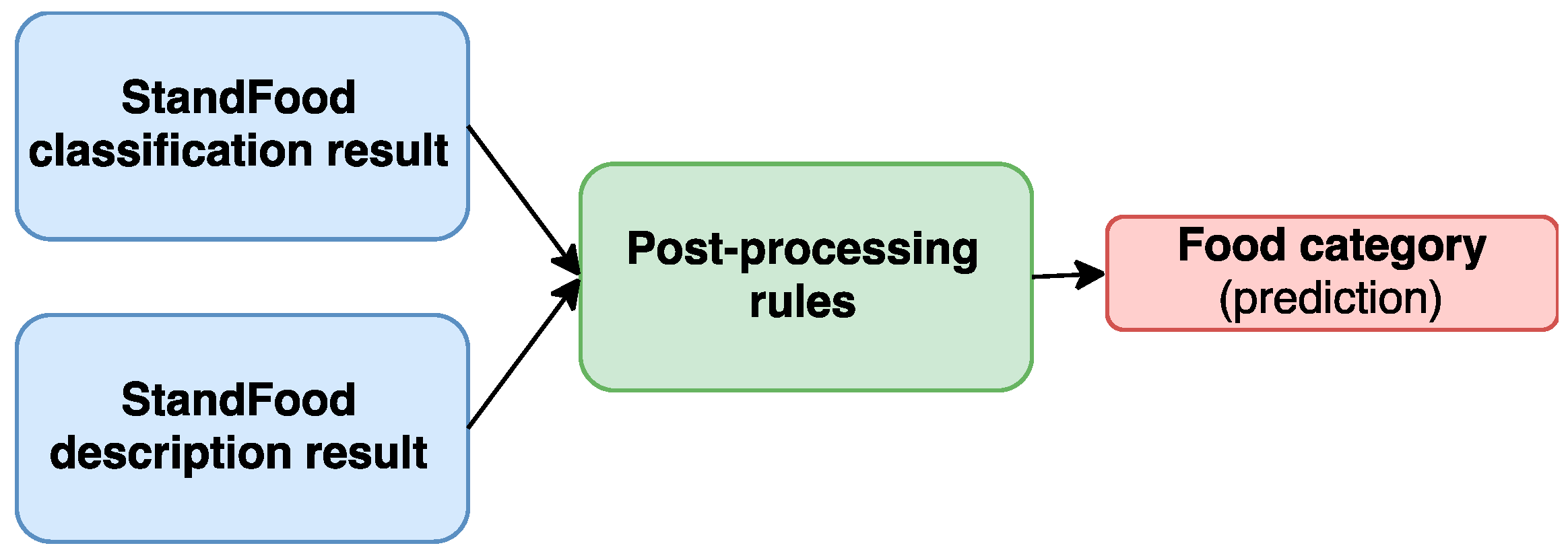

2.2.3. Post-Processing Rules to Improve the Accuracy of the Classification Part

- The first rule uses processes that change the nature of a food product. Their definitions are given in the FoodEx2 technical report and include, for example, canning, smoking, frying, and baking. Lemmatization is applied to each process in the list so that different word forms appearing in food item names are matched. If a food item is classified as raw (r) by the StandFood classification model but the lemmas of its adjectives and verbs include at least one process that changes its nature, it is automatically changed to a derivative (d).

- In the second rule, if a food item is classified as either raw (r) or a derivative (d) and the description part of StandFood returns a composite food (s or c) as the most relevant food item, it is automatically changed to the corresponding composite food category.

- In the third rule, if a food item is classified as a simple composite food (s) and the description part of StandFood returns an aggregated composite food (c) as the most relevant food item, it is automatically changed to an aggregated composite food (c).

- The fourth rule works in reverse: if a food item is classified as an aggregated composite food (c) and the description part of StandFood returns a simple composite food (s) as the most relevant food item, it is automatically changed to a simple composite food (s).
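
The four rules above can be sketched as a single function. This is an illustration, not the authors' implementation: the function name `post_process`, the order in which the rules are applied, and the assumption that adjectives and verbs arrive already lemmatized are all hypothetical. The process list contains only the lemmas of the four examples named in the first rule.

```python
# Lemmas of the example processes named in the first rule (not the full FoodEx2 list).
NATURE_CHANGING_PROCESSES = {"can", "smoke", "fry", "bake"}


def post_process(predicted, lemmas, relevant_category):
    """Apply the four post-processing rules to a predicted category.

    predicted         -- category from the classification part: "r", "d", "s" or "c"
    lemmas            -- set of lemmatized adjectives and verbs of the food item
    relevant_category -- category of the most relevant item from the description part
    """
    category = predicted
    # Rule 1: raw food with a nature-changing process becomes a derivative.
    if category == "r" and lemmas & NATURE_CHANGING_PROCESSES:
        category = "d"
    # Rule 2: raw or derivative becomes composite if the relevant item is composite.
    if category in {"r", "d"} and relevant_category in {"s", "c"}:
        category = relevant_category
    # Rule 3: simple composite becomes aggregated composite.
    elif category == "s" and relevant_category == "c":
        category = "c"
    # Rule 4: aggregated composite becomes simple composite.
    elif category == "c" and relevant_category == "s":
        category = "s"
    return category
```

For example, `post_process("r", {"fry"}, "r")` yields `"d"` under the first rule, while `post_process("s", set(), "c")` yields `"c"` under the third.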

3. Results

3.1. StandFood Classification Results

3.2. StandFood Description Results

3.3. StandFood Post-Processing Rules

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EFSA | European Food Safety Authority |
| FN | False negatives |
| FoodEx1 | Food classification and description system for exposure assessment (version 1) |
| FoodEx2 | Food classification and description system for exposure assessment (version 2) |
| FP | False positives |
| Maxent | Maximum Entropy |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| NNET | Neural Networks |
| RF | Random Forest |
| SLDA | Scaled Linear Discriminant Analysis |
| StandFood | Food Standardization System |
| SVM | Support Vector Machine |
| TN | True negatives |
| TP | True positives |
| TREE | Classification tree |


| Metric | SVM | SLDA | RF | Maxent | Boosting | Bagging | TREE | NNET |
|---|---|---|---|---|---|---|---|---|
| Accuracy (%) | 88.50 | 72.41 | 88.95 | 89.21 | 85.88 | 83.47 | 69.02 | 77.12 |

| Category | Precision | Recall |
|---|---|---|
| r | 0.72 | 0.99 |
| d | 0.81 | 0.81 |
| c | 0.75 | 0.67 |
| s | 0.95 | 0.57 |
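
Per-category precision and recall such as those in the table above follow from the counts of true positives (TP), false positives (FP), and false negatives (FN). A minimal sketch is given below; the function name `precision_recall` is hypothetical, and the label lists are toy data made up for illustration, not the evaluation data of this study.

```python
def precision_recall(true_labels, predicted_labels, category):
    """Compute precision = TP / (TP + FP) and recall = TP / (TP + FN) for one category."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == category and p == category)
    fp = sum(1 for t, p in pairs if t != category and p == category)
    fn = sum(1 for t, p in pairs if t == category and p != category)
    # Return 0.0 when a denominator is zero (no predictions or no true items).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy labels (hypothetical): one derivative is predicted as raw,
# and one aggregated composite food is predicted as simple composite.
true_labels = ["r", "r", "d", "d", "s", "c"]
predicted_labels = ["r", "r", "r", "d", "s", "s"]

precision_d, recall_d = precision_recall(true_labels, predicted_labels, "d")
```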

| Food Item | Category |
|---|---|
| Barley grains | r |
| Mandarins (Citrus reticulata) | r |
| Buckwheat flour | d |
| Oat flakes | d |
| Fruit compote | s |
| Marmalade, mixed fruit | s |
| Rice and vegetables meal | c |
| Mushroom soup | c |

| Food Item | StandFood FoodEx2 Code | StandFood Relevant FoodEx2 Item | Manual FoodEx2 Code |
|---|---|---|---|
| Mushroom soup | A041R | Mushroom soup | A041R |
| Prepared green salad | A042C | Mixed green salad | A042C |
| Meat burger | A03XF | Meat burger no sandwich | A03XF |
| Yeast | A049A | Baking yeast | A049A |
| Brown sauce (gravy, lyonnais sauce) | A043Z | Continental European brown cooked sauce gravy | A043Z |
| Cow milk, <1% fat (skimmed milk) | A02MA | Cow milk skimmed low fat | A02MA |
| Supplements containing special fatty acids (e.g., omega-3, essential fatty acids) | A03SX | Formulations containing special fatty acids (e.g., omega-3 essential fatty acids) | A03SX |
| Durum wheat flour (semola) | A004C | Wheat flour durum | A004F |
| Gingerbread | A00CT | Gingerbread | A009Q$F14.A07GX |
| Cherry, fresh | A01GG | Cherries and similar | A01GK |
| | A01GH | Sour cherries | |
| | A01GK | Cherries sweet | |
| | A0DVN | Nanking cherries | |
| | A0DVP | Cornelian cherries | |
| | A0DVR | Black cherries | |

| Category | Precision | Recall |
|---|---|---|
| r | 0.85 | 0.99 |
| d | 0.90 | 0.84 |
| c | 0.82 | 0.87 |
| s | 0.97 | 0.83 |

| Food Item | Classification Part Category | Post-processing Category |
|---|---|---|
| Cabbage Chinese boiled | r | d |
| Marzipan | r | s |
| Gingerbread | r | c |
| Water, bottled, flavored, citrus | d | s |
| Salad, tuna-vegetable, canned | d | c |
| Multigrain rolls | c | s |
| Croissant, filled with jam | s | c |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Eftimov, T.; Korošec, P.; Koroušić Seljak, B. StandFood: Standardization of Foods Using a Semi-Automatic System for Classifying and Describing Foods According to FoodEx2. Nutrients 2017, 9, 542. https://doi.org/10.3390/nu9060542
