Gesture Recognition Based on a Convolutional Neural Network–Bidirectional Long Short-Term Memory Network for a Wearable Wrist Sensor with Multi-Walled Carbon Nanotube/Cotton Fabric Material

Abstract

1. Introduction

2. Fabrication Procedure

2.1. Structure of the MWCNT/CF Sensor Unit

2.2. Fabrication of the MWCNT/CF Sensor Unit

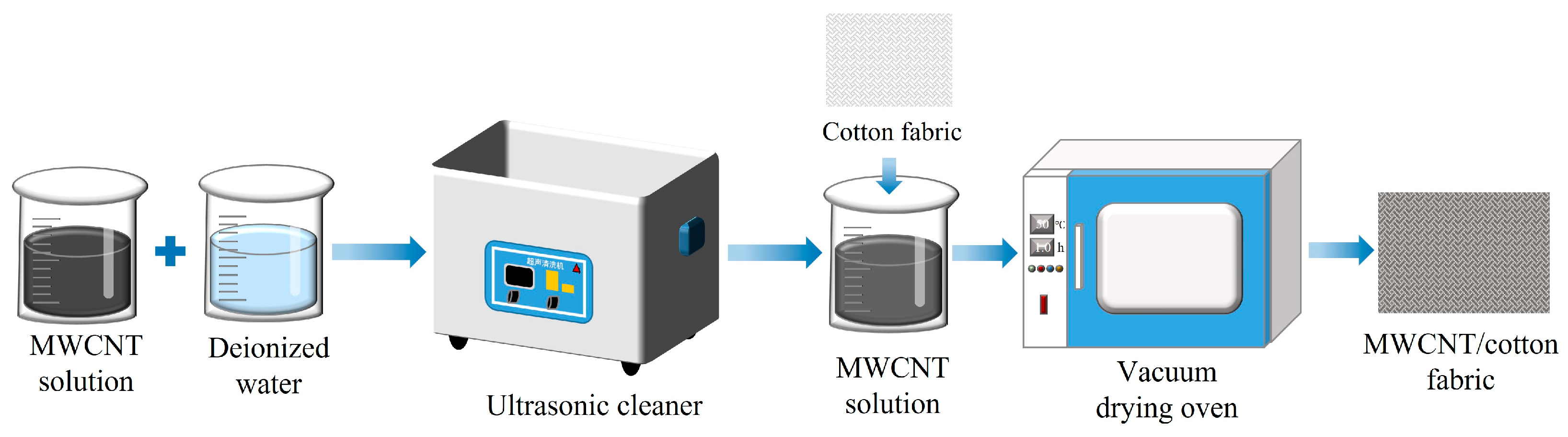

2.2.1. Preparation of the MWCNT/CF Composite

2.2.2. Encapsulation of the MWCNT/CF Sensor Unit

2.3. Performance Testing

2.3.1. Sensitivity Testing

2.3.2. Response Characteristic Testing and Stability Test

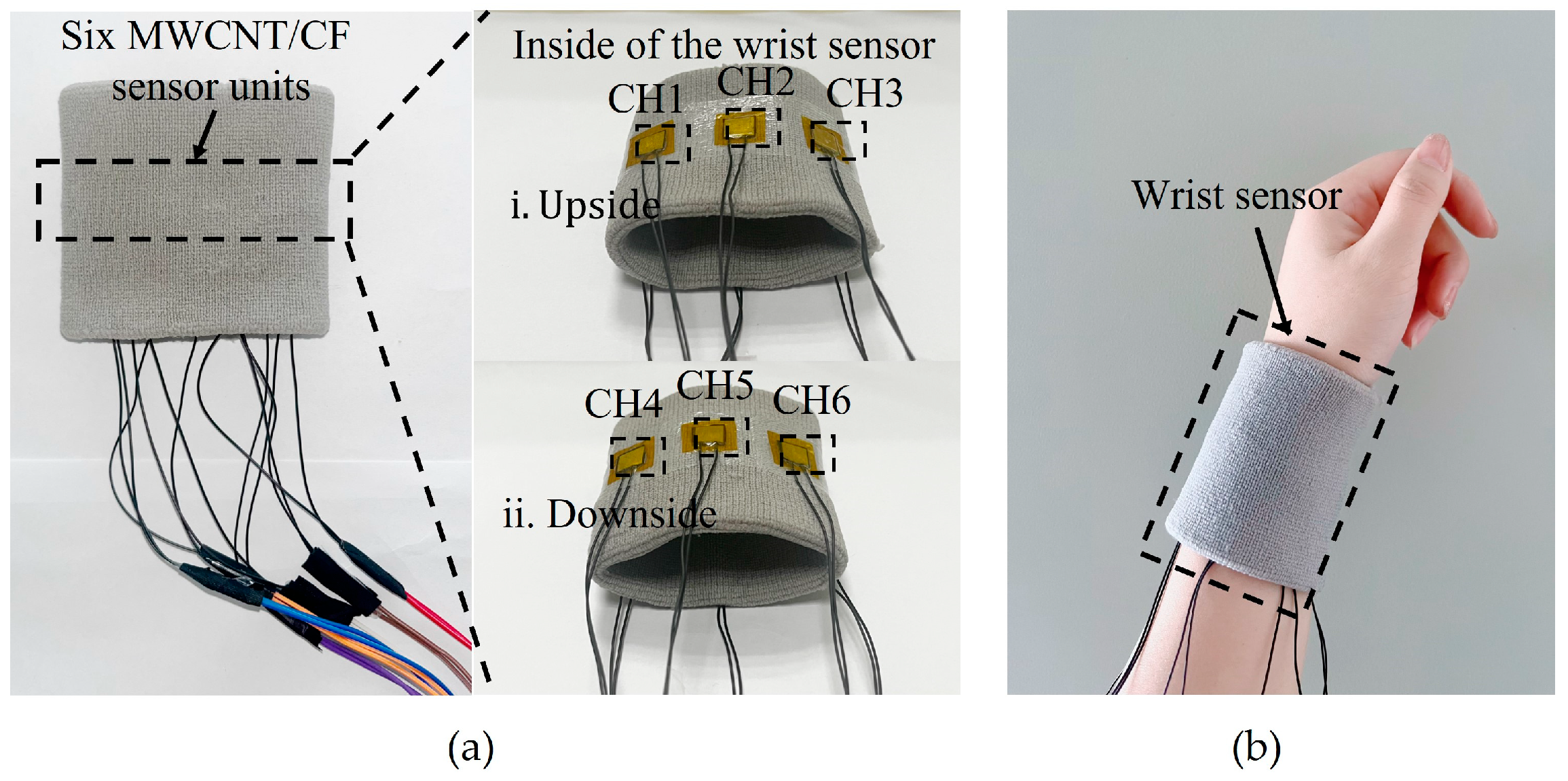

2.4. Construction of the Wrist Sensor

2.4.1. Manufacturing of the Wrist Sensor

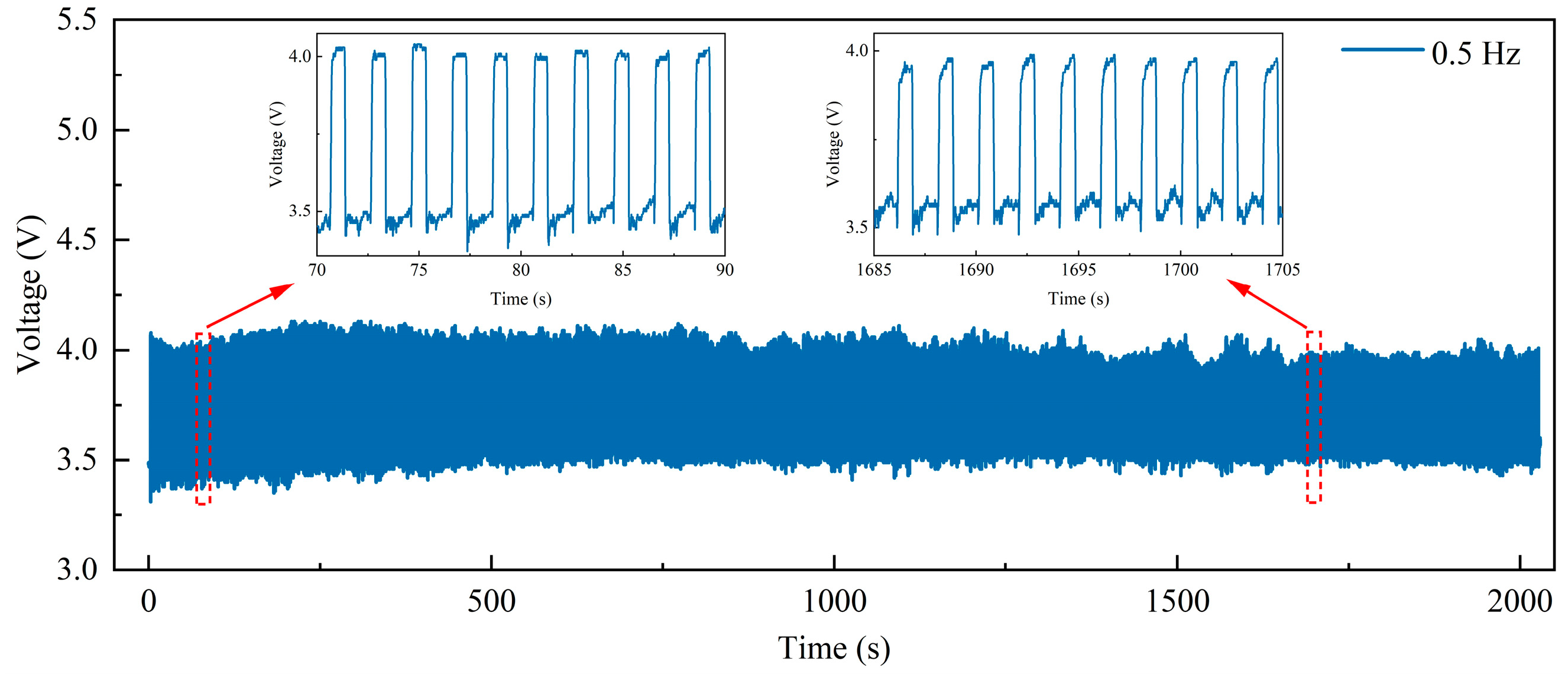

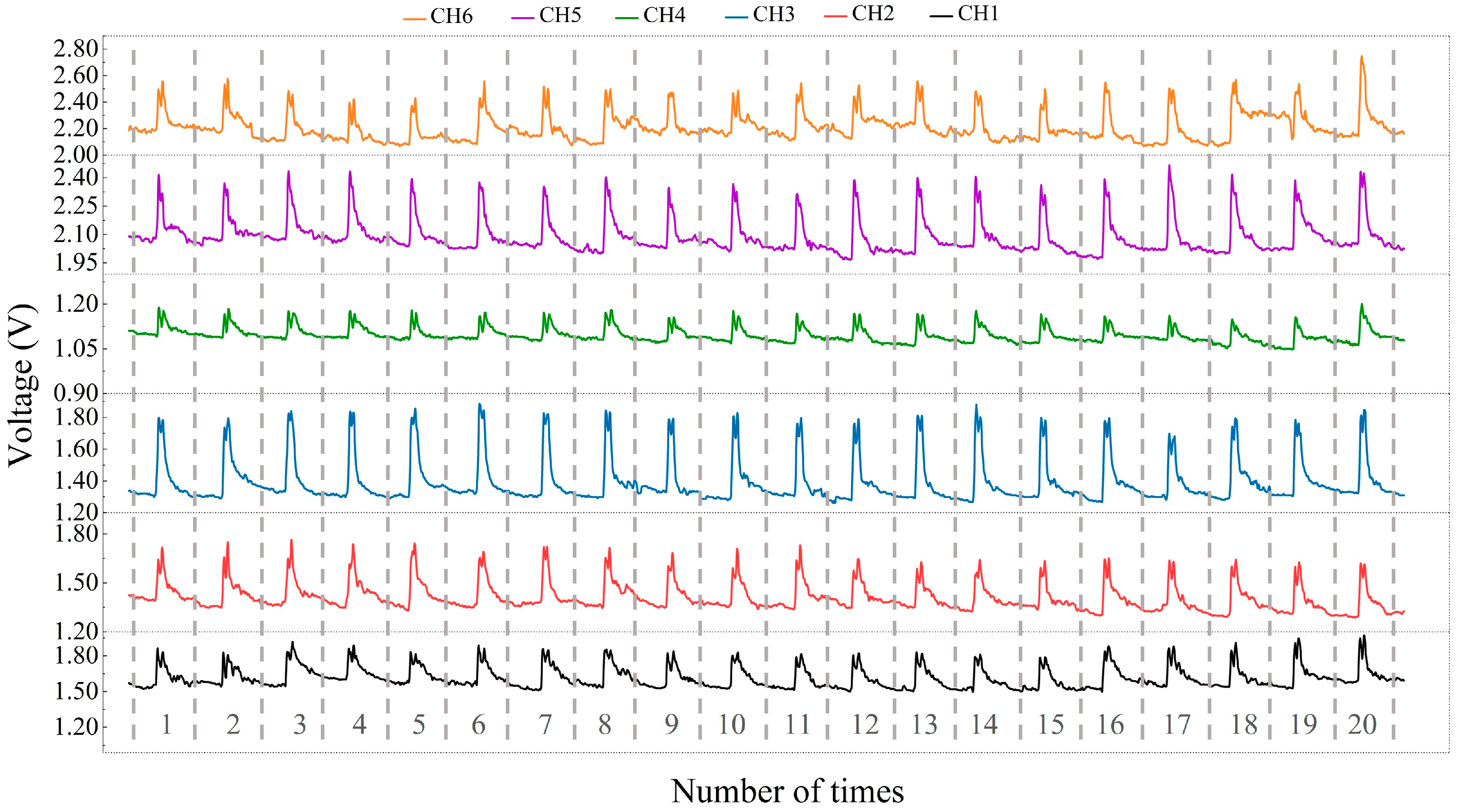

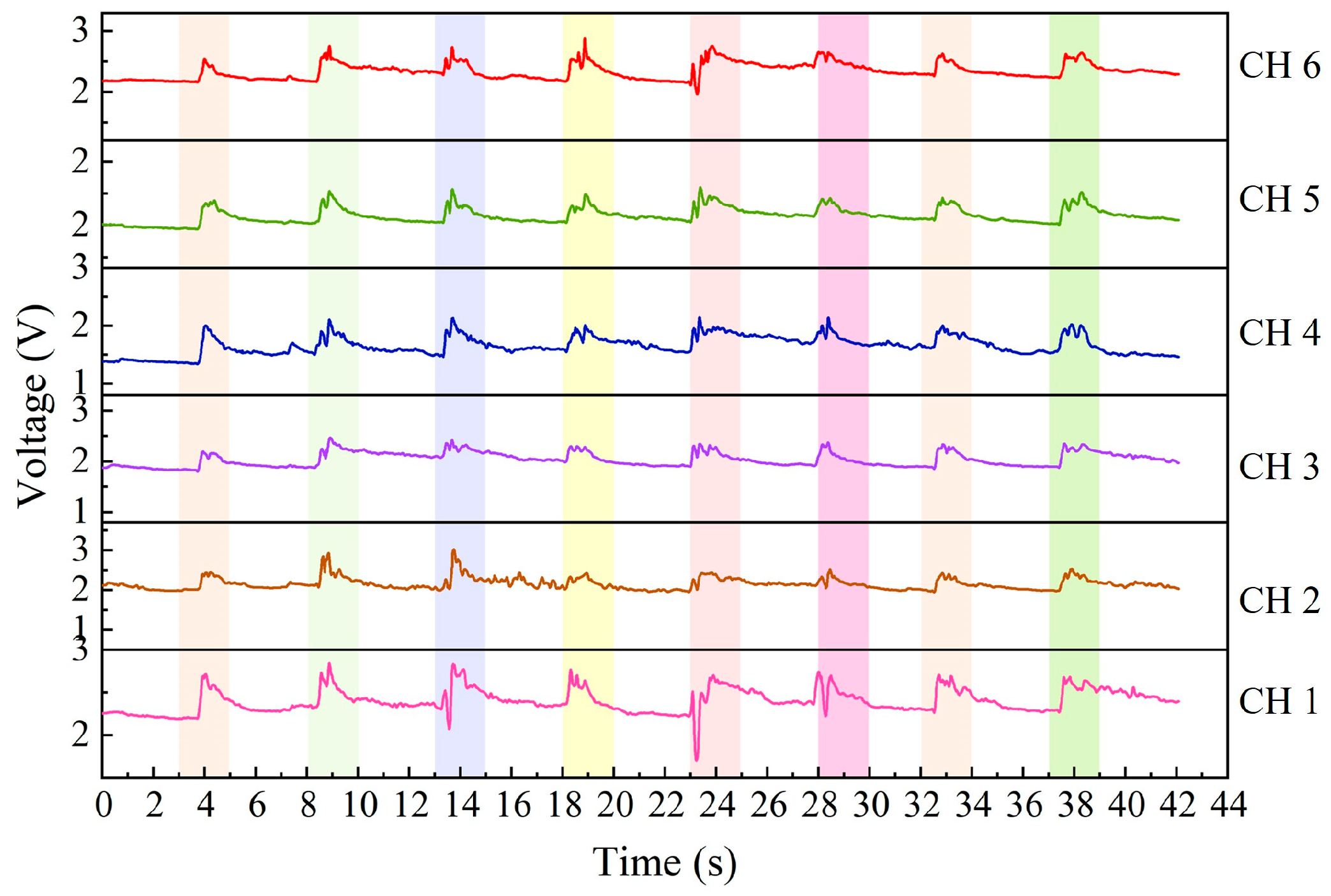

2.4.2. Stability of the Wrist Sensor

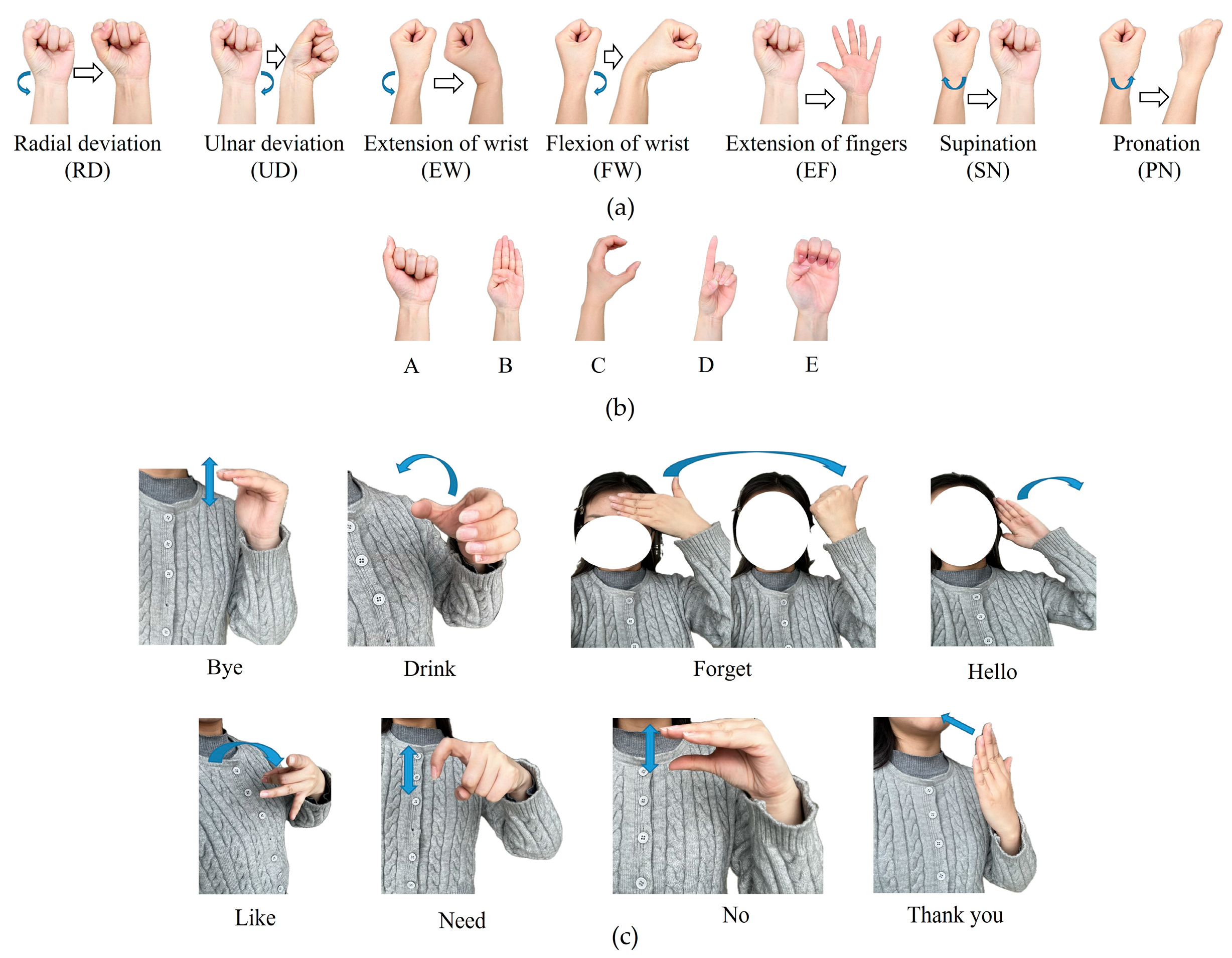

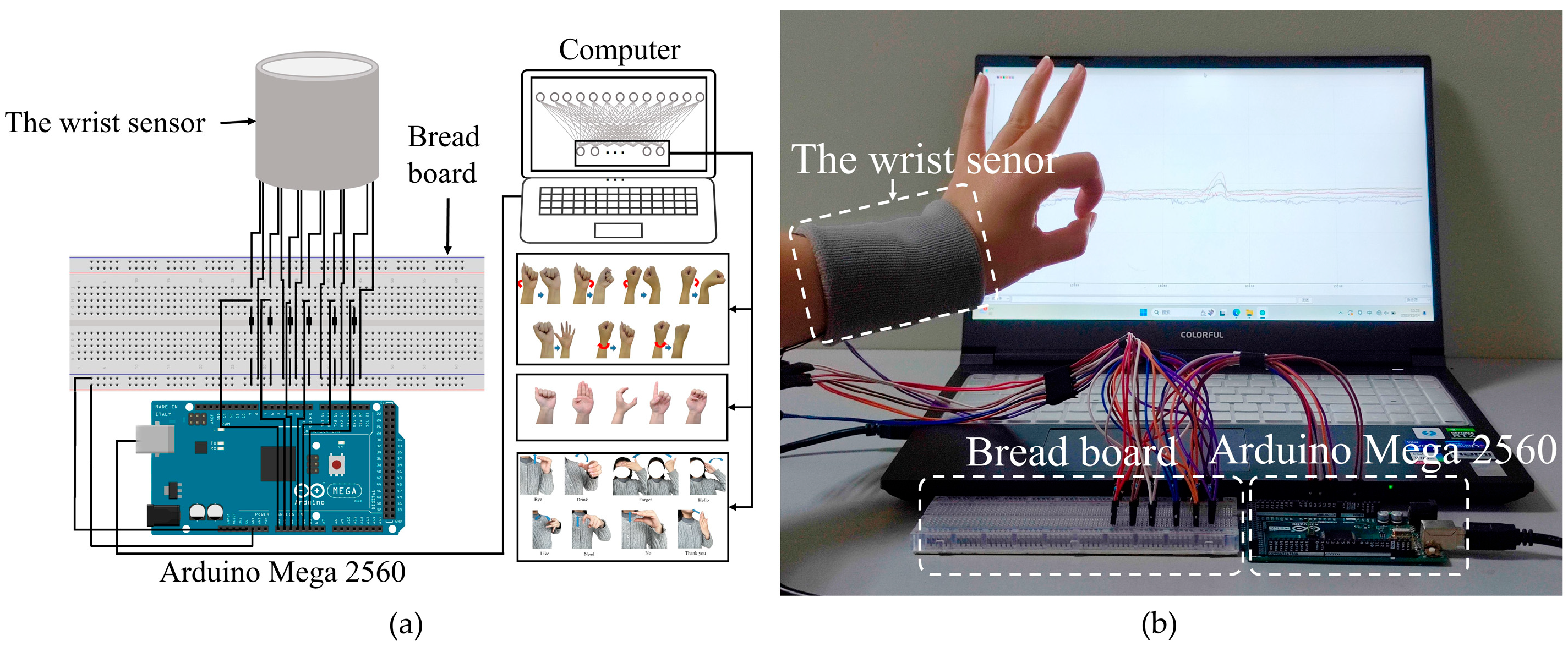

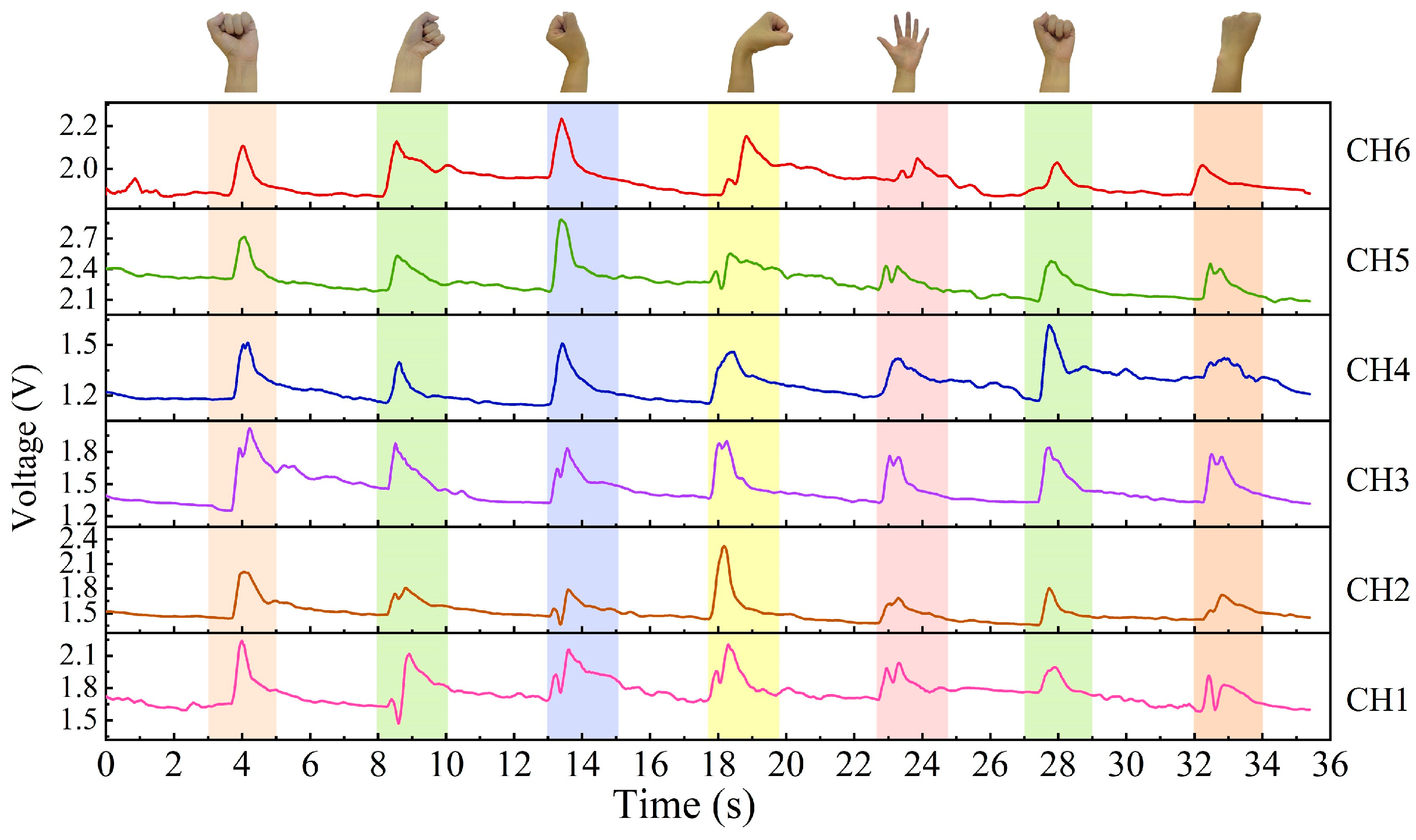

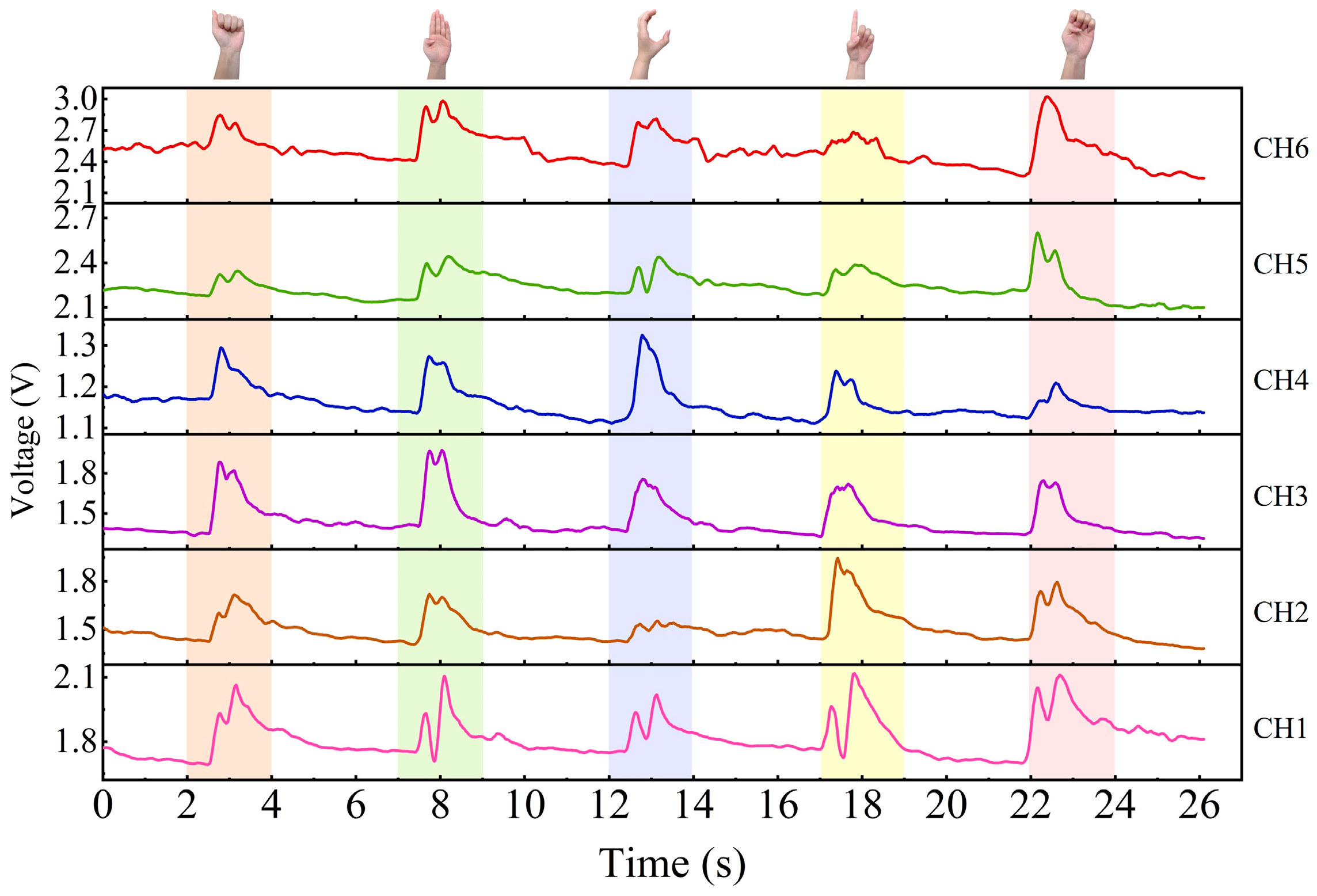

3. Data Acquisition for Different Gestures

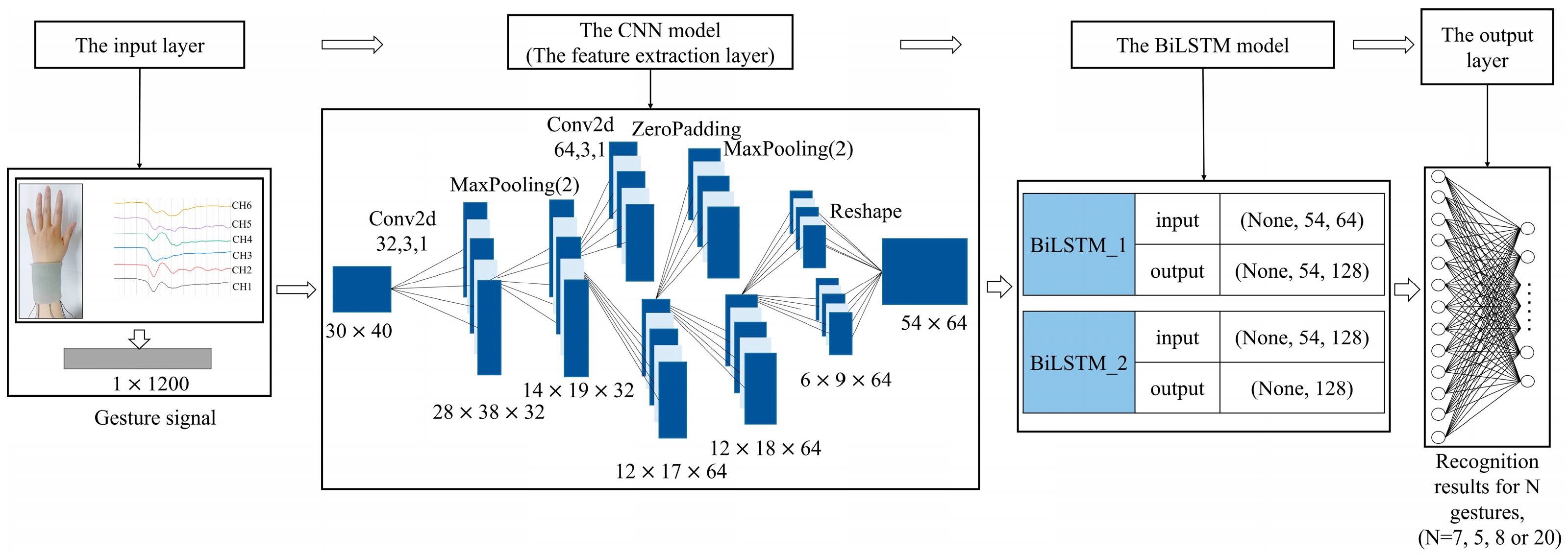

4. Gesture Recognition Based on the CNN-BiLSTM Model

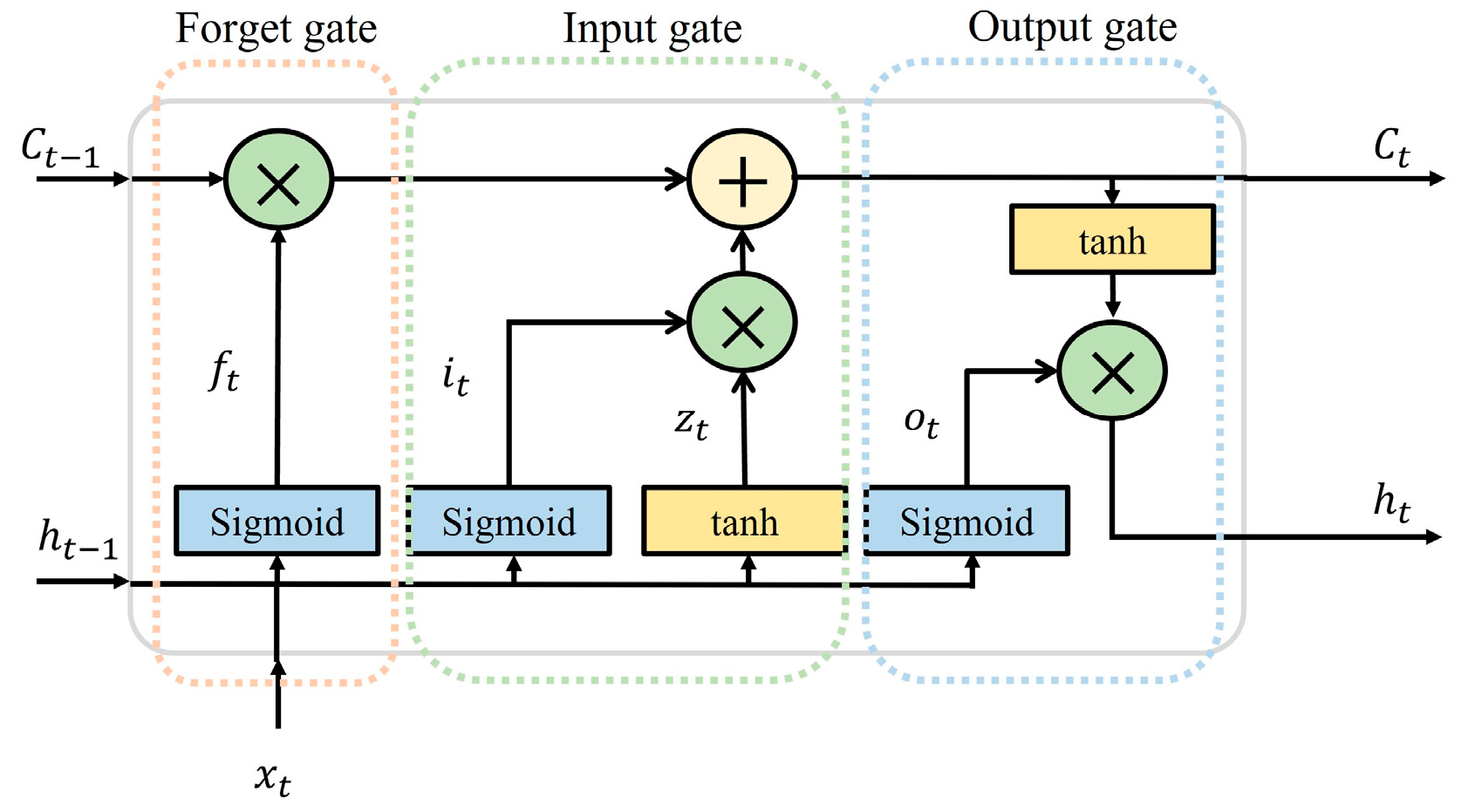

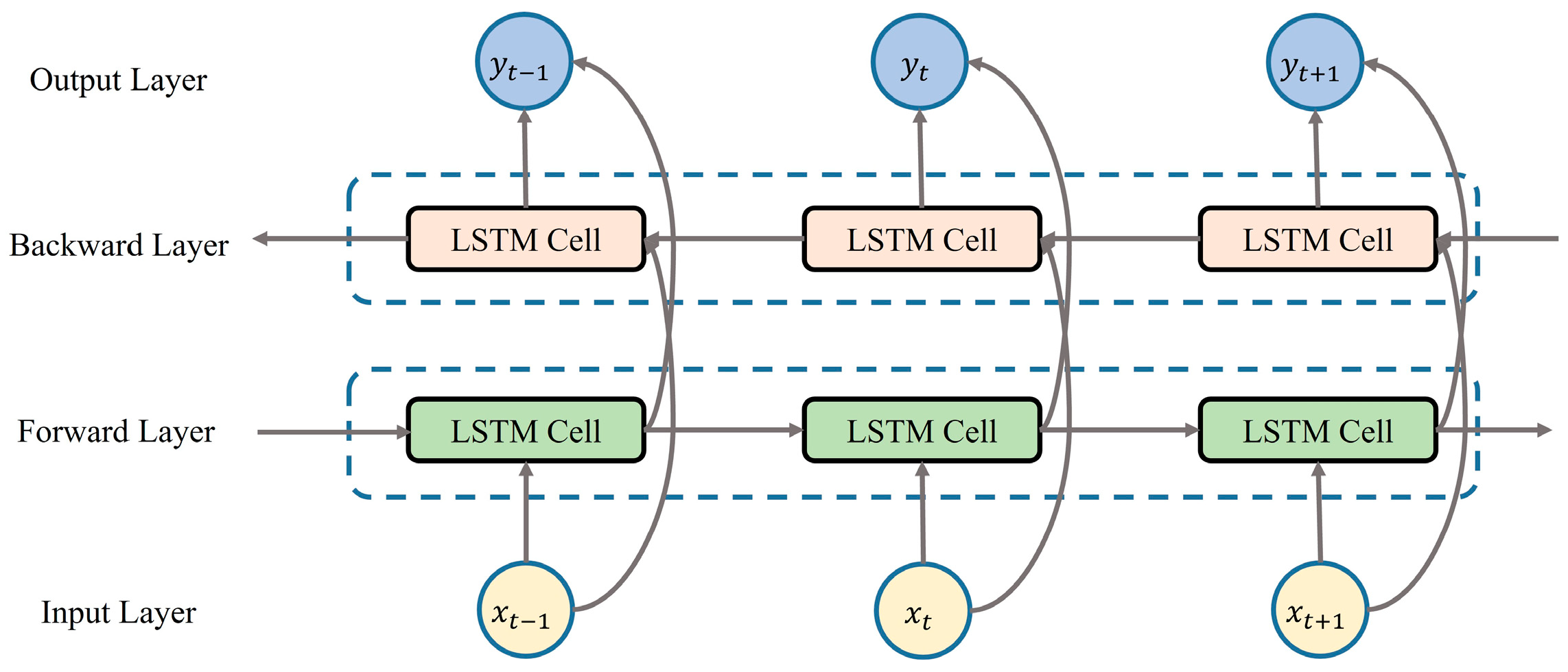

4.1. Principle of the CNN-BiLSTM Algorithm

4.2. Construction of the CNN-BiLSTM Model

4.3. Evaluation Factors

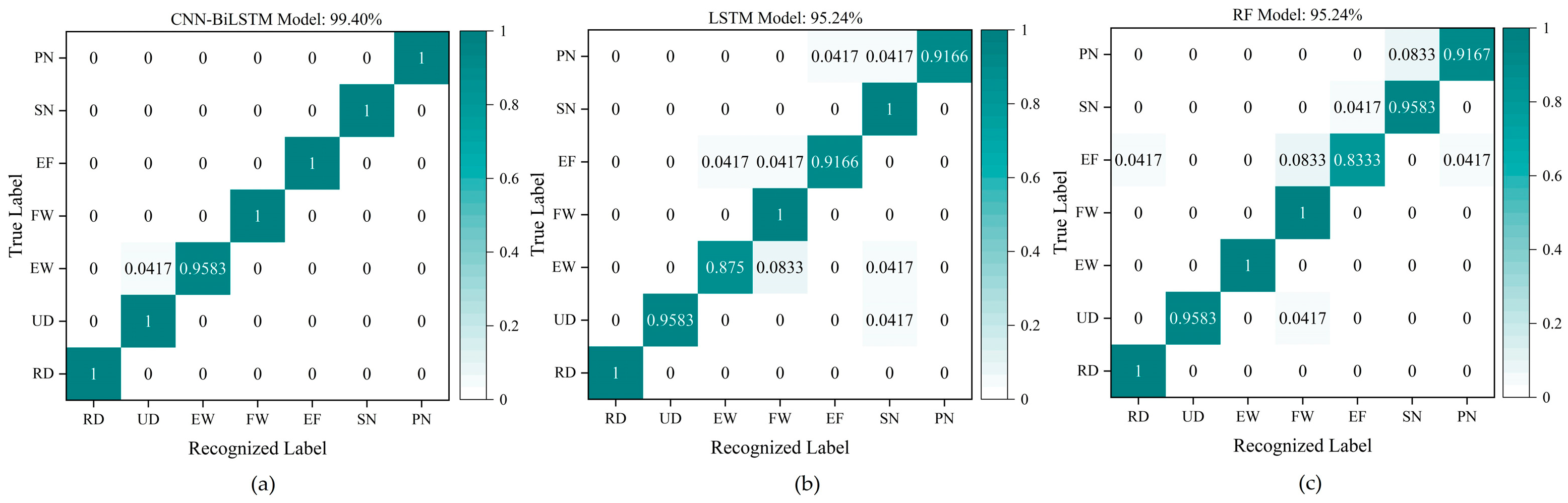

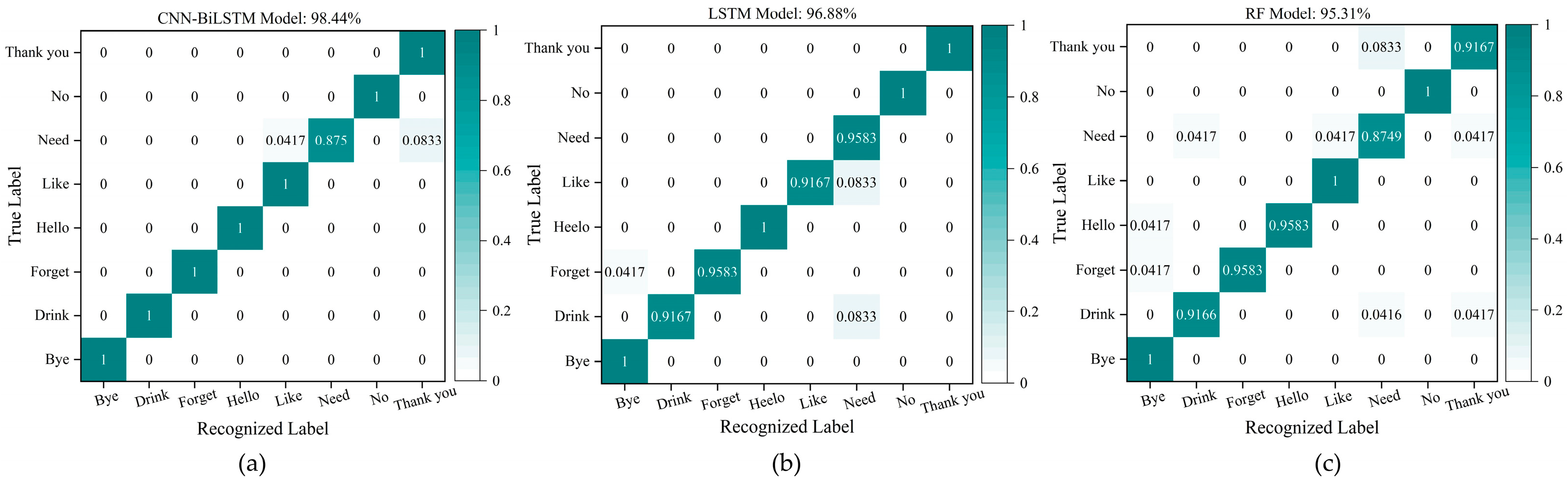

4.4. Analysis and Discussion of the Recognition Results

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Selamneni, V.; Kunchur, A.; Sahatiya, P. Large-Area, Flexible SnS/Paper-Based Piezoresistive Pressure Sensor for Artificial Electronic Skin Application. IEEE Sens. J. 2020, 21, 5143–5150.

2. Li, Q.; Yin, R.; Zhang, D.; Liu, H.; Chen, X.; Zheng, Y.; Guo, Z.; Liu, C.; Shen, C. Flexible conductive MXene/cellulose nano-crystal coated nonwoven fabrics for tunable wearable strain/pressure sensors. J. Mater. Chem. A 2020, 8, 21131–21141.

3. Zhao, P.F.; Song, Y.L.; Xie, P.; Zhang, F.; Xie, T.; Liu, G.; Zhao, J.Y.; Han, S.T.; Zhou, Y. All-Organic Smart Textile Sensor for Deep-Learning-Assisted Multimodal Sensing. Adv. Funct. Mater. 2023, 33, 2301816.

4. Li, Y.; Wu, G.; Song, G.; Lu, S.H.; Wang, Z.; Sun, H.; Zhang, Y.; Wang, X. Soft, Pressure-Tolerant, Flexible Electronic Sensors for Sensing under Harsh Environments. ACS Sens. 2022, 7, 2400–2409.

5. Ma, C.; Wang, M.; Uzabakiriho, P.C.; Zhao, G. High Sensitivity, Broad Working Range, Comfortable, and Biofriendly Wearable Strain Sensor for Electronic Skin. Adv. Mater. Technol. 2022, 7, 2200106.

6. Yu, Q.H.; Su, C.L.; Bi, S.Y.; Huang, Y.L.; Li, J.N.; Shao, H.Q.; Jiang, J.H.; Chen, N.L. Ti3C2TX@nonwoven Fabric Composite: Promising MXene-Coated Fabric for Wearable Piezoresistive Pressure Sensors. ACS Appl. Mater. Interfaces 2022, 14, 9632–9643.

7. Song, Y.; Li, M.K.; Wang, F.L.; Lv, S.N. Contact Pattern Recognition of a Flexible Tactile Sensor Based on the CNN-LSTM Fusion Algorithm. Micromachines 2022, 13, 1053.

8. Yu, J.B.; Xian, S.; Zhang, Z.P.; Hou, X.J.; He, J.; Mu, J.L.; Geng, W.P.; Qiao, X.J.; Zhang, L.; Chou, X.J. Synergistic piezoelectricity enhanced BaTiO3/polyacrylonitrile elastomer-based highly sensitive pressure sensor for intelligent sensing and posture recognition applications. Nano Res. 2023, 16, 5490–5502.

9. Li, T.; Luo, H.; Qin, L.; Wang, X.W.; Xiong, Z.P.; Ding, H.Y.; Gu, Y.; Liu, Z.; Zhang, T. Flexible Capacitive Tactile Sensor Based on Micropatterned Dielectric Layer. Small 2016, 12, 5042–5048.

10. Yu, Q.Y.; Zhang, P.; Chen, Y.C. Human Motion State Recognition Based on Flexible, Wearable Capacitive Pressure Sensors. Micromachines 2021, 12, 1219.

11. Song, Y.; Lv, S.N.; Wang, F.L.; Li, M.K. Hardness-and-Type Recognition of Different Objects Based on a Novel Porous Graphene Flexible Tactile Sensor Array. Micromachines 2023, 14, 10217.

12. Tian, G.L.; Zhan, L.; Deng, J.X.; Liu, H.G.; Li, J.; Ma, J.J.; Jin, X.Y.; Ke, Q.F.; Huang, C. Coating of multi-wall carbon nanotubes (MWCNTs) on three-dimensional, bicomponent nonwovens as wearable and high-performance piezoresistive sensors. Chem. Eng. J. 2021, 425, 130682.

13. Dai, X.Y.; Huang, L.B.; Du, Y.Z.; Han, J.C.; Zheng, Q.Q.; Kong, J.; Hao, J.H. Self-Healing, Flexible, and Tailorable Triboelectric Nanogenerators for Self-Powered Sensors based on Thermal Effect of Infrared Radiation. Adv. Funct. Mater. 2020, 30, 1910723.

14. Gao, F.F.; Zhao, X.; Zhang, Z.; An, L.L.; Xu, L.X.; Xun, X.C.; Zhao, B.; Ouyang, T.; Zhang, Y.; Liao, Q.L.; et al. A stretching-insensitive, self-powered and wearable pressure sensor. Nano Energy 2022, 91, 106695.

15. Chang, S.; Li, J.; He, Y.; Liu, H.; Cheng, B. A high-sensitivity and low-hysteresis flexible pressure sensor based on carbonized cotton fabric. Sens. Actuators A Phys. 2019, 294, 45–53.

16. Cho, H.S.; Yang, J.H.; Lee, J.H.; Lee, J.H. Evaluation of Joint Motion Sensing Efficiency According to the Implementation Method of SWCNT-Coated Fabric Motion Sensor. Sensors 2020, 20, 284.

17. Xu, H.C.; Gao, L.B.; Wang, Y.J.; Cao, K.; Hu, X.K.; Wang, L.; Mu, M.; Liu, M.; Zhang, H.Y.; Wang, W.D.; et al. Flexible Waterproof Piezoresistive Pressure Sensors with Wide Linear Working Range Based on Conductive Fabrics. Nano-Micro Lett. 2020, 12, 13.

18. Chen, F.C.; Liu, H.J.; Xu, M.T.; Ye, J.P.; Li, Z.; Qin, L.Z.; Zhang, T.H. Flexible cotton fabric with stable conductive coatings for piezoresistive sensors. Cellulose 2021, 28, 10025–10038.

19. Zhang, Y.J.; Ren, H.; Chen, H.Q.; Chen, Q.J.; Jin, L.J.; Peng, W.M.; Xin, S.X.; Bai, Y.X. Cotton Fabrics Decorated with Conductive Graphene Nanosheet Inks for Flexible Wearable Heaters and Strain Sensors. ACS Appl. Nano Mater. 2021, 4, 9709–9720.

20. Han, L.; Zhang, T.C.; Lin, C.; Gao, Q.; Liu, Y.L.; Tang, J.L. Long-term application of core spun fabric strain sensor manufactured via dip coating for large deformation monitoring. Polym. Adv. Technol. 2023, 8, 6217.

21. Zhou, Y.; Myant, C.; Stewart, R. Multifunctional and stretchable graphene/textile composite sensor for human motion monitoring. J. Appl. Polym. Sci. 2022, 139, 52755.

22. Yang, S.T.; Li, C.W.; Chen, X.Y.; Zhao, Y.P.; Zhang, H.; Wen, N.X.; Fan, Z.; Pan, L.J. Facile Fabrication of High-Performance Pen Ink-Decorated Textile Strain Sensors for Human Motion Detection. ACS Appl. Mater. Interfaces 2020, 12, 19874–19881.

23. Zheng, Y.J.; Li, Y.L.; Zhou, Y.J.; Dai, K.; Zheng, G.Q.; Zhang, B.; Liu, C.T.; Shen, C.Y. High-Performance Wearable Strain Sensor Based on Graphene/Cotton Fabric with High Durability and Low Detection Limit. ACS Appl. Mater. Interfaces 2020, 12, 1474–1485.

24. Pyo, S.; Lee, J.; Kim, W.; Jo, E.; Kim, J. Multi-Layered, Hierarchical Fabric-Based Tactile Sensors with High Sensitivity and Linearity in Ultrawide Pressure Range. Adv. Funct. Mater. 2019, 29, 1902484.

25. Liu, B.H.; Lin, X.Y.; Zhao, P.X.; He, Y.Q.; Liu, M.X. Robust Polypyrrole@Halloysite Nanotube-Coated Polyurethane Sponge as Multifunctional Flexible Sensors. ACS Sustain. Chem. Eng. 2023, 11, 8753–8763.

26. Zhou, Z.W.; Zhang, W.L.; Zhang, Y.; Yin, X.Y.; Chen, X.Y.; He, B.W. Facile and direct 3D printing of smart glove for gesture monitoring. Microelectron. Eng. 2023, 282, 112102.

27. Seesaard, T.; Wongchoosuk, C. Fabric-based piezoresistive Ti3AlC2/PEDOT:PSS force sensor for wearable E-textile applications. Org. Electron. 2023, 122, 106894.

28. Uno, M.O.; Omori, M.; Morita, S.; Kojitani, T.; Yoshimura, K.; Tsurumi, T.; Ito, K. Moisture-Insensitive Force Sensor Yarns and Fabrics to Monitor Biological Motion. Adv. Mater. Technol. 2022, 8, 2301124.

29. Zhou, Z.W.; Zhang, W.L.; Zhang, J.T.; Zhang, Y.; Yin, X.Y.; He, B.W. Flexible and self-adhesive strain sensor based on GNSs/MWCNTs coated stretchable fabric for gesture monitoring and recognition. Sens. Actuator A Phys. 2023, 349, 114004.

30. Zeng, X.H.; Hu, M.L.; He, P.; Zhao, W.K.; Dong, S.H.; Xu, X.W.; Dai, G.Z.; Sun, J.; Yang, J.L. Highly Conductive Carbon-Based E-Textile for Gesture Recognition. IEEE Electron. Device Lett. 2023, 44, 825–828.

31. Song, X.; Liu, X.T.; Peng, Y.X.; Xu, Z.; Liu, W.M.; Pang, K.; Wang, J.X.; Zhong, L.; Yang, Q.; Meng, J. A graphene-coated silk-spandex fabric strain sensor for human movement monitoring and recognition. Nanotechnology 2021, 32, 215501.

32. Peng, Y.X.; Wang, J.X.; Pang, K.; Liu, W.M.; Meng, J.; Li, B. A Physiology-Based Flexible Strap Sensor for Gesture Recognition by Sensing Tendon Deformation. IEEE Sens. J. 2021, 21, 9449–9456.

33. Wu, X.X.; Niu, F.F.; Zhong, A.; Han, F.; Chen, Y.; Li, J.H.; Zhang, G.P.; Sun, R.; Wong, C.P. Highly sensitive strain sensors based on hollow packaged silver nanoparticle-decorated three-dimensional graphene foams for wearable electronics. RSC Adv. 2019, 9, 39958–39964.

34. Zhao, M.J.; Zhang, W.S.; Wang, D.; Sun, P.P.; Tao, Y.Y.; Xu, L.X.; Shi, L. A Packaged and Reusable Hydrogel Strain Sensor with Conformal Adhesion to Skin for Human Motions Monitoring. Adv. Mater. Interfaces 2022, 9, 2101786.

35. Li, X. Sensor and Testing Technology; China Higher Education Press: Beijing, China, 2004; pp. 75–100.

36. Zhu, H.; Dai, S.P.; Cao, J.; Bai, H.Y.; Zhong, Y.; Zhang, Z.Q.; Cheng, G.G.; Yuan, N.Y.; Ding, J.N. A high-performance textile pressure sensor based on carbon black/carbon nanotube-polyurethane coated fabrics with porous structure for monitoring human motion. Mater. Today Commun. 2022, 33, 104541.

37. Byun, S.W.; Lee, S.P. Implementation of Hand Gesture Recognition Device Applicable to Smart Watch Based on Flexible Epidermal Tactile Sensor Array. Micromachines 2019, 10, 692.

38. Tanaka, T.; Nambu, I.; Maruyama, Y.; Wada, Y. Sliding-Window Normalization to Improve the Performance of Machine-Learning Models for Real-Time Motion Prediction Using Electromyography. Sensors 2022, 22, 5005.

39. Li, X.; Nie, L.S.; Si, X.D.; Ding, R.J.; Zhan, D.C. Enhancing Representation of Deep Features for Sensor-Based Activity Recognition. Mob. Netw. Appl. 2021, 26, 130–145.

40. Gao, W.B.; Zhang, L.; Huang, W.B.; Min, F.H.; He, J.; Song, A.G. Deep Neural Networks for Sensor-Based Human Activity Recognition Using Selective Kernel Convolution. IEEE Trans. Instrum. Meas. 2021, 70, 2512313.

41. Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

42. Lu, Y.H.; Tang, L.Q.; Chen, C.B.; Zhou, L.C.; Liu, Z.J.; Liu, Y.P.; Jiang, Z.Y.; Yang, B. Reconstruction of structural long-term acceleration response based on BiLSTM networks. Eng. Struct. 2023, 285, 116000.

43. Zhou, Z. Machine Learning; Tsinghua University Press: Beijing, China, 2016; pp. 28–37.

| Ref. | Materials | Number of Gestures | Model (Recognition Accuracy) |
|---|---|---|---|
| This work | MWCNT/CF | 20 | CNN-BiLSTM (96.88%) |
| [29] | GNSs/MWCNTs/fabric | 5 | LSTM (95%) |
| [30] | Carbon-based e-textile | 8 | ANN (96.58%) |
| [31] | Graphene-coated silk–spandex fabric | 4 | LeNet-5 model (96.07%) |
| [32] | Graphene aerogel | 12 | Machine learning (84.7%) |

| Channel | Mean Value (V) | Standard Deviation (V) |
|---|---|---|
| CH1 | 1.8719 | 0.0367 |
| CH2 | 1.5908 | 0.0260 |
| CH3 | 1.8025 | 0.0506 |
| CH4 | 1.2206 | 0.0117 |
| CH5 | 2.2579 | 0.0217 |
| CH6 | 2.6071 | 0.0409 |

| Model | Gesture Group | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| CNN-BiLSTM | Group #1 | 99.40% | 99.40% | 99.40% | 99.40% |
| CNN-BiLSTM | Group #2 | 95.00% | 95.00% | 95.20% | 95.10% |
| CNN-BiLSTM | Group #3 | 98.44% | 98.50% | 98.50% | 98.50% |
| LSTM | Group #1 | 95.24% | 95.55% | 95.24% | 95.39% |
| LSTM | Group #2 | 88.33% | 88.94% | 88.33% | 88.39% |
| LSTM | Group #3 | 96.88% | 97.19% | 96.88% | 97.03% |
| RF | Group #1 | 95.24% | 95.40% | 95.24% | 95.32% |
| RF | Group #2 | 90.83% | 91.65% | 90.83% | 90.86% |
| RF | Group #3 | 95.31% | 95.39% | 95.31% | 95.35% |
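The four evaluation factors in the table above (accuracy, precision, recall, F1-score) can all be derived from a confusion matrix. The following is a minimal sketch only: it assumes macro-averaging over gesture classes, which this text does not confirm, and both the function name `classification_metrics` and the three-class matrix are hypothetical illustrations rather than the paper's code or data.

```python
from typing import Dict, List


def classification_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """Compute accuracy and macro-averaged precision, recall and F1.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j (assumed layout, not from the paper).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))

    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)

    return {
        "accuracy": correct / total if total else 0.0,
        "precision": sum(precisions) / n,  # macro average (assumption)
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    # Hypothetical 3-gesture confusion matrix, for illustration only.
    toy = [[9, 1, 0],
           [0, 10, 0],
           [1, 0, 9]]
    for name, value in classification_metrics(toy).items():
        print(f"{name}: {value:.2%}")
```

With balanced classes, accuracy and macro recall coincide, which is consistent with most rows of the table; other averaging schemes (micro or weighted) would give different precision and F1 values.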

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, Y.; Liu, M.; Wang, F.; Zhu, J.; Hu, A.; Sun, N. Gesture Recognition Based on a Convolutional Neural Network–Bidirectional Long Short-Term Memory Network for a Wearable Wrist Sensor with Multi-Walled Carbon Nanotube/Cotton Fabric Material. Micromachines 2024, 15, 185. https://doi.org/10.3390/mi15020185
