CLCD-I: Cross-Language Clone Detection by Using Deep Learning with InferCode

Abstract

1. Introduction

- 1. Is CLCD-I more effective than LSTM autoencoders?

- 2. Is CLCD-I more effective than existing approaches?

2. Related Work

3. Data Preparation

Algorithm 1 Create Clone Pairs

4. Source Code Embedding by Using InferCode

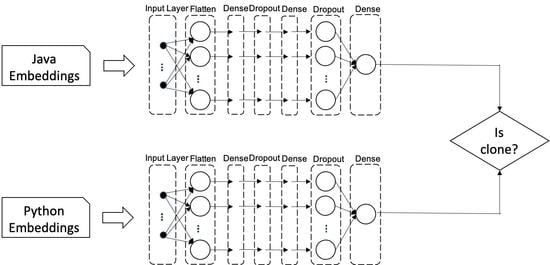

5. CLCD-I: Deep Learning Approach

6. Compared Models

7. Evaluation

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Models | Precision | Recall | F1-Measure | Accuracy |
|---|---|---|---|---|
| CLCD-I | 0.99 | 0.63 | 0.78 | 0.40 |
| Vanilla LSTM | 0.60 | 0.45 | 0.53 | 0.76 |
| LSTM+BA | 0.59 | 0.45 | 0.54 | 0.75 |
| LSTM+LA | 0.62 | 0.53 | 0.56 | 0.74 |
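As a reading aid for the table above, the sketch below recomputes the F1-measure from each model's precision and recall, using the standard harmonic-mean definition F1 = 2PR / (P + R). The function name `f1_score` and the dictionary layout are illustrative choices, not taken from the paper. Values recomputed from the rounded table entries need not match the reported F1 column exactly, for example if the reported figures came from unrounded values or were averaged over runs (an assumption; the table does not say).

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the table above.
reported = {
    "CLCD-I": (0.99, 0.63),
    "Vanilla LSTM": (0.60, 0.45),
    "LSTM+BA": (0.59, 0.45),
    "LSTM+LA": (0.62, 0.53),
}

# Recompute F1 per model from the rounded precision and recall.
recomputed = {name: f1_score(p, r) for name, (p, r) in reported.items()}

for name, f1 in recomputed.items():
    print(f"{name}: F1 = {f1:.2f}")
```

Because F1 is a harmonic mean, it is pulled toward the lower of the two inputs, so a model with very high precision but moderate recall still ends up with an F1 well below its precision.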


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yahya, M.A.; Kim, D.-K. CLCD-I: Cross-Language Clone Detection by Using Deep Learning with InferCode. Computers 2023, 12, 12. https://doi.org/10.3390/computers12010012
