Self-Monitoring of Emotions and Mood Using a Tangible Approach

Abstract

1. Introduction

2. Emotions and Mood

- First, inexperienced users may not be very compliant in tracking their own emotions. This issue is also present in clinical settings, where therapists ask the patient to track her emotions. Users may fail to self-monitor because of lack of motivation, lack of time, or forgetfulness. Moreover, the user may avoid the proposed tracking activity because she finds the entire process onerous: for every record, she has to take out the smartphone, open the app, insert data, close the app, and put the smartphone away. Furthermore, much of the output of self-monitoring devices and mobile health applications, including the data they generate, fails to engage people [32], because these tools are designed on the basis of existing healthcare systems and do not involve end users in the design process, as also indicated by the World Health Organization [33].

- Second, users usually self-report data after the event to be recorded has occurred, since it is often not feasible for the user to interrupt her activity to record what she feels. However, by the time the user is reminded to report the data, it is often too late to recollect the emotional states she actually experienced. In such cases, beliefs take precedence over feelings in the self-reporting of emotions [24]. For example, when the actual experience is relatively inaccessible (i.e., later in time), the reported emotions can be shaped by beliefs about a particular event or situation (e.g., birthdays are considered happy events), by generalized beliefs about the self (e.g., derived from trait measures of extraversion or neuroticism), or by related social stereotypes (e.g., women are more emotional than men). Indeed, memory is reconstructive [34]: with the passage of time, relatively veridical memories give way to relatively schematic or stereotypical ones.

- Make self-monitoring more fun and enjoyable. Over the last 10 years, novel design solutions have been explored to allow serendipitous navigation through data [35,36] or content [37,38,39], and gamification techniques [40,41,42,43,44] have been employed to enhance users' motivation [45] or change individuals' behavior [46,47], envisioning the use of game design elements in the personal informatics context [48]. Building on these attempts to make content and data exploration and management more enjoyable, we propose the use of tangible interaction to involve people in self-reporting their emotional states. Tangible User Interfaces (TUIs) leverage physical representations to connect the digital and physical worlds [49]; interaction with TUIs relies on users' existing skills of interaction with the real world [50], yielding interfaces that are quick to learn and easy to use. Using personal objects as tangible interfaces could be even more straightforward, since users already have a mental model associated with these objects, which makes it easier to understand how to use them [51]. TUIs can remind people to insert data, motivating users to perform tasks usually perceived as repetitive and burdensome. In fact, TUIs engage the user more than Graphical User Interfaces (GUIs) when a task is not appealing enough on its own [52], providing a more engaging experience that can increase how often users repeat the activities [53]. Moreover, using TUIs for self-reporting can make users more physically engaged, providing richer feedback during the interaction [53]. The act of self-reporting becomes a physical activity in which users, by playing with the object, automatically provide information on their emotional state. Earlier studies have shown that data produced by research participants are of more consistent quality if the subjects feel that they have mastered the data tracking equipment [54].
In other words, the better the subjects control the equipment, the less cumbersome it is for them.

- Supporting users in the retrospective reconstruction of emotions. Since people's reports of their emotions reflect whatever information is accessible at the time [24], we aim to provide people with hints that help them recall the experience in which the emotions arose. This would allow the user to connect her emotional states to the places visited, the people met, and the tasks accomplished and, through them, to remember what happened to her during the day and report her emotions more faithfully, as close as possible to how she actually felt (trying to avoid the influence of beliefs).

- Supporting users in tracking their emotions with a low cognitive load. Since a complex self-tracking process may lead users to avoid recording their emotional states, we aim to provide the user with a TUI that helps her self-report them in a simpler and less onerous way. This would allow her to report her emotions immediately, reducing the chance that subsequently formed beliefs bias the reporting process.
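To make the retrospective-reconstruction aim more concrete, the following is a minimal sketch of how contextual hints could be selected, assuming the platform keeps a log of timestamped context events (places visited, people met, tasks). The `ContextEvent` type, the `recall_cues` function, and the 30-minute window are hypothetical illustrations, not part of the system described in this paper.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ContextEvent:
    """Hypothetical logged context item (a place visited, a person met, a task)."""
    timestamp: datetime
    kind: str   # e.g. "place", "person", "task"
    label: str


def recall_cues(events, moment, window=timedelta(minutes=30)):
    """Return the context events within +/- window of moment, oldest first.

    These events would be shown to the user as hints when she reports an
    emotion retrospectively, instead of relying on memory alone.
    """
    nearby = [e for e in events if abs(e.timestamp - moment) <= window]
    return sorted(nearby, key=lambda e: e.timestamp)


day = datetime(2018, 1, 4)
events = [
    ContextEvent(day.replace(hour=9, minute=5), "place", "office"),
    ContextEvent(day.replace(hour=9, minute=20), "person", "colleague"),
    ContextEvent(day.replace(hour=13, minute=0), "place", "gym"),
]
cues = recall_cues(events, day.replace(hour=9, minute=15))
print([c.label for c in cues])  # ['office', 'colleague']
```

A fixed time window is the simplest possible choice; a real deployment might instead rank cues by relevance or let the user widen the window.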

3. Related Work

3.1. Emotional Tracking

3.2. Tangible Interfaces and Intuitive Visualizations

4. Our Conceptual Framework

- It allows the self-reporting of emotions or mood in an amusing (i.e., fostering the use of the device), simple (i.e., requiring no previous knowledge), and appealing (i.e., engaging users) way by means of a tangible interface;

- It automatically collects contextual aspects related to the emotions, including the location, the time, and the people in the surrounding environment when the emotion occurs, which will help users recall their emotions;

- It provides this contextual information to users to help them in remembering their emotions;

- It will provide users with an aggregated picture of their emotions over a period of time or during an experience, as well as correlations among the data.
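As an illustration of the last point, the sketch below shows one way mood records carrying contextual data could be aggregated, assuming each record stores a timestamp, an integer mood value, and optional location and people fields. The `MoodRecord` type, the mood scale, and both functions are hypothetical; averaging mood per location is only a crude first step toward the correlations mentioned above, not a statistical correlation analysis.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Optional, Tuple


@dataclass
class MoodRecord:
    timestamp: datetime
    mood: int                        # hypothetical integer scale, e.g. one value per cube face
    location: Optional[str] = None   # filled in automatically (e.g. from GPS)
    people: Tuple[str, ...] = ()     # people nearby when the mood was reported


def daily_mood(records):
    """Average mood per calendar day, in chronological order."""
    by_day = defaultdict(list)
    for r in records:
        by_day[r.timestamp.date()].append(r.mood)
    return {day: mean(moods) for day, moods in sorted(by_day.items())}


def mood_by_location(records):
    """Average mood per location; records without a location are skipped."""
    by_place = defaultdict(list)
    for r in records:
        if r.location is not None:
            by_place[r.location].append(r.mood)
    return {place: mean(moods) for place, moods in by_place.items()}


records = [
    MoodRecord(datetime(2018, 1, 4, 9), 5, "office"),
    MoodRecord(datetime(2018, 1, 4, 18), 3, "home"),
    MoodRecord(datetime(2018, 1, 5, 10), 4, "office"),
]
print(daily_mood(records))
print(mood_by_location(records))
```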

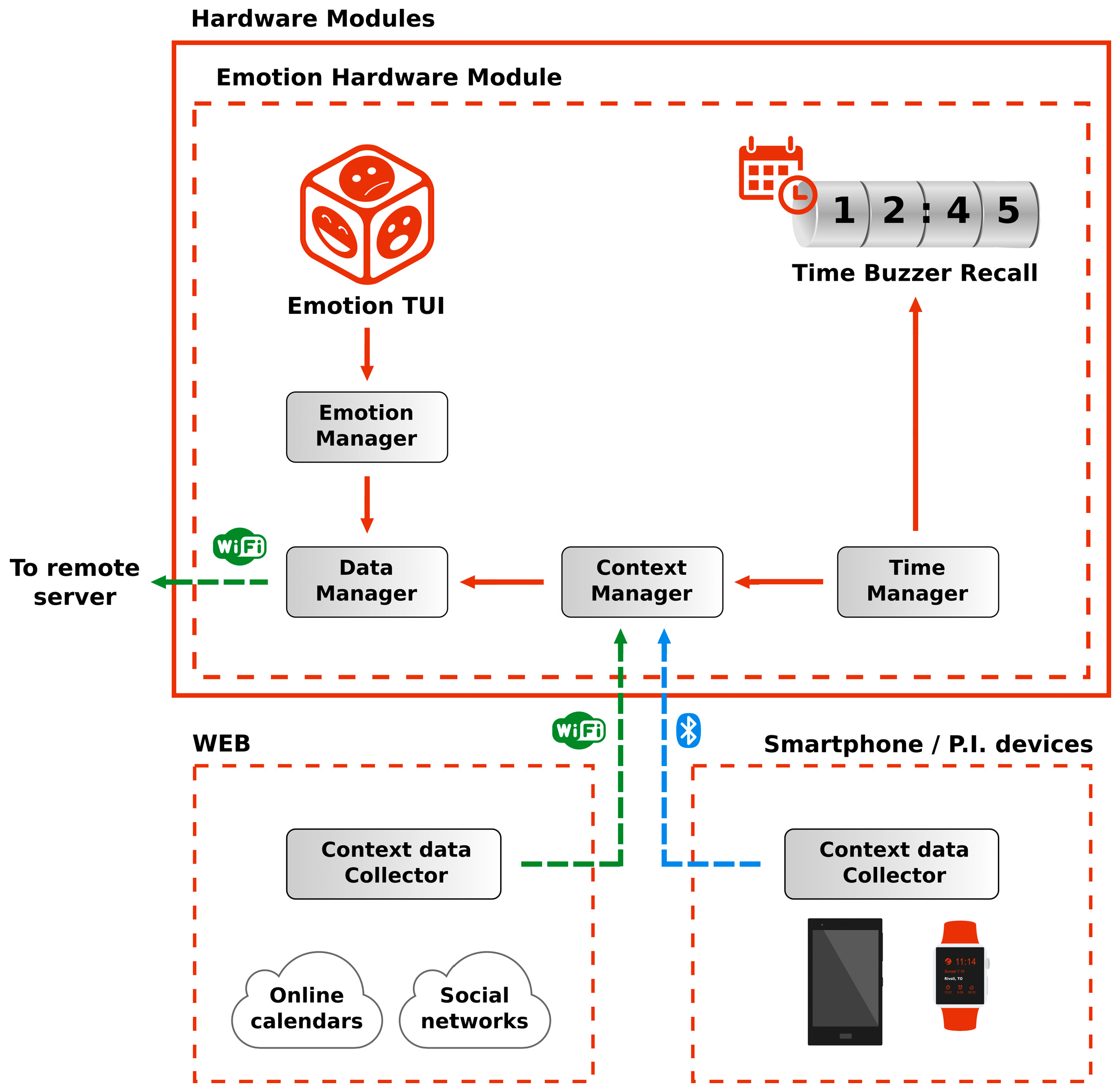

- Emotion TUI, used to provide user emotions;

- Time Buzzer Recall, a buzzer inside the TUI that reminds the user of reporting her emotions.
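The behavior of the Time Buzzer Recall is not specified in detail here; the following is a minimal sketch of one plausible reminder policy, written in Python for readability rather than as device firmware. The three-hour interval, the waking-hours window, and the `should_buzz` function are all hypothetical assumptions. The policy stays silent at night and does not buzz again if the user reported, or was reminded, recently, which keeps reminders from becoming annoying.

```python
from datetime import datetime, time, timedelta


def should_buzz(now, last_report, last_buzz,
                interval=timedelta(hours=3),
                day_start=time(8, 0), day_end=time(22, 0)):
    """Decide whether the buzzer should remind the user to report her mood.

    Hypothetical policy: never buzz outside waking hours, and buzz only if
    neither a report nor a previous reminder happened within `interval`.
    last_report and last_buzz may be None if they never happened.
    """
    if not (day_start <= now.time() <= day_end):
        return False
    latest = max((t for t in (last_report, last_buzz) if t is not None),
                 default=None)
    return latest is None or now - latest >= interval


# 3.5 hours since the last report, during the day: remind the user.
print(should_buzz(datetime(2018, 1, 4, 12, 0), datetime(2018, 1, 4, 8, 30), None))  # True
```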

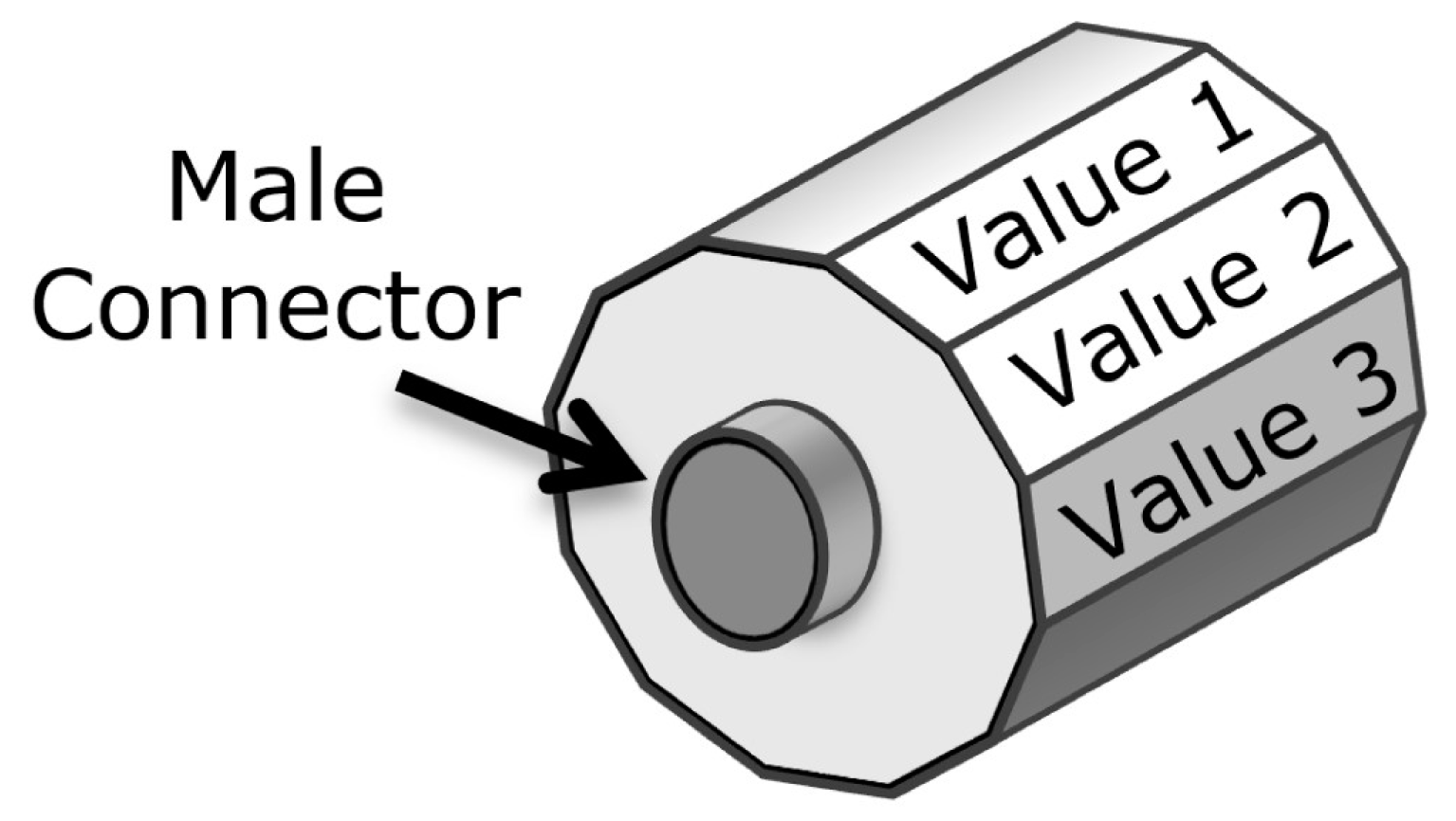

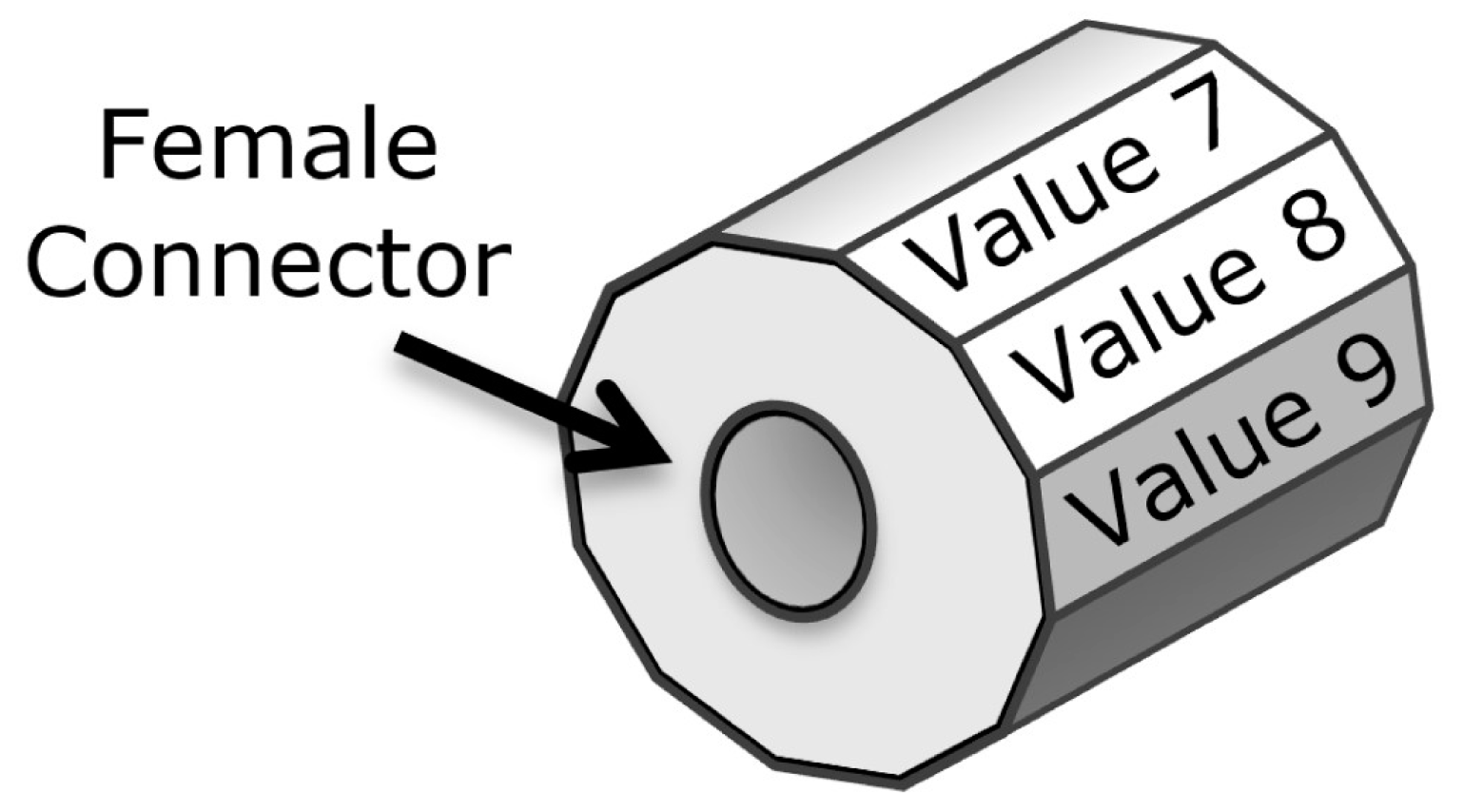

4.1. Instantiation of the Proposal

4.2. Scenario

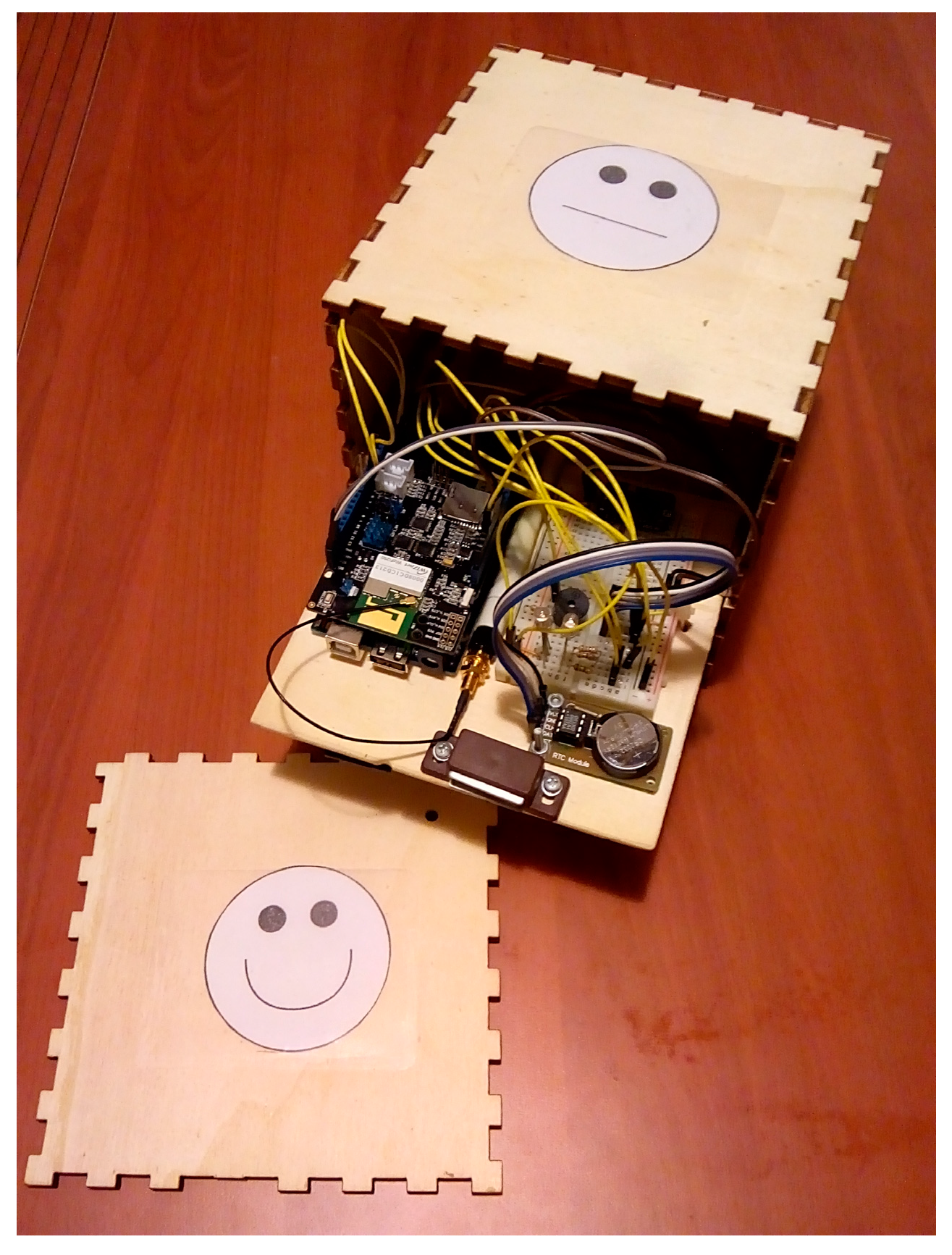

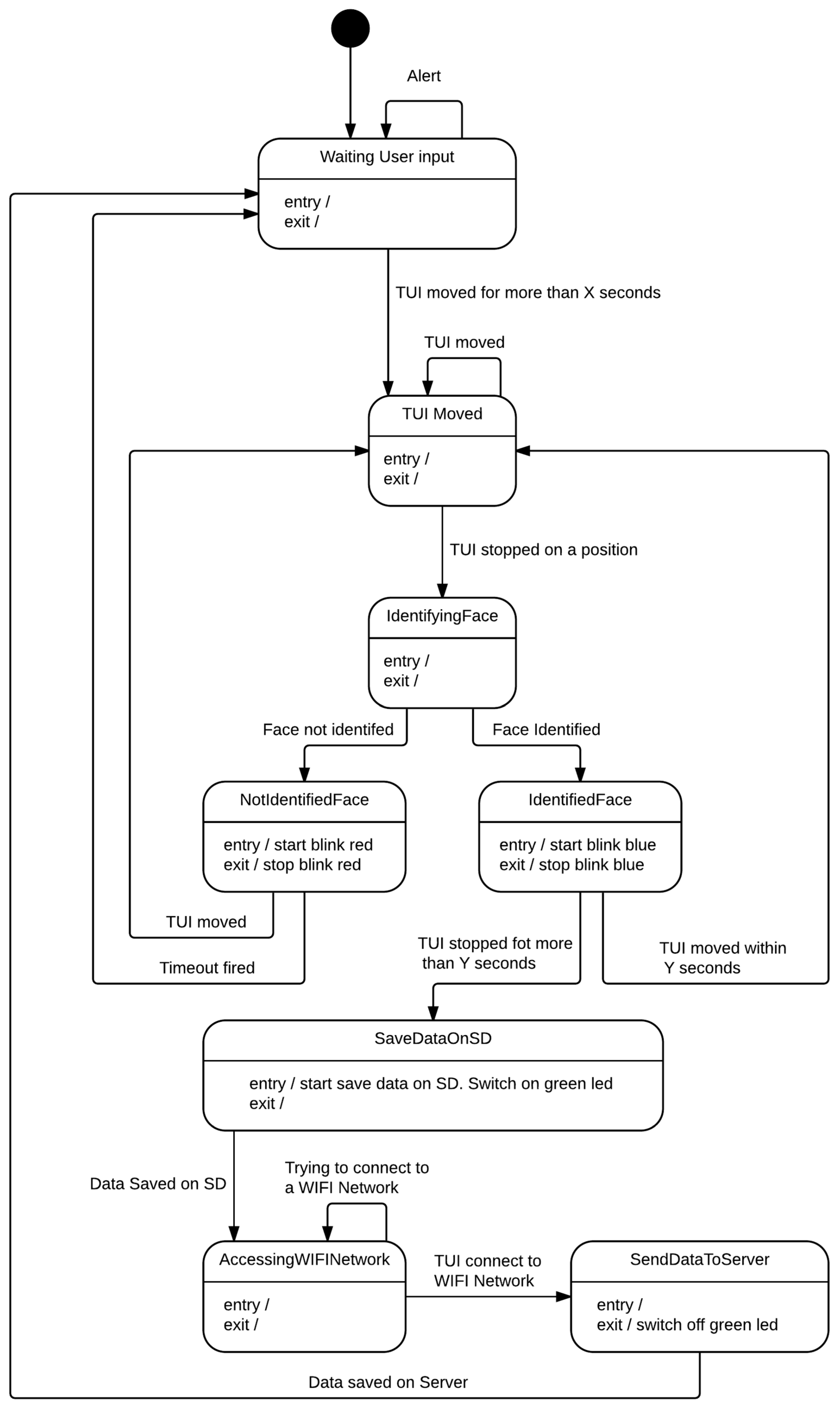

4.3. TUI Implementation

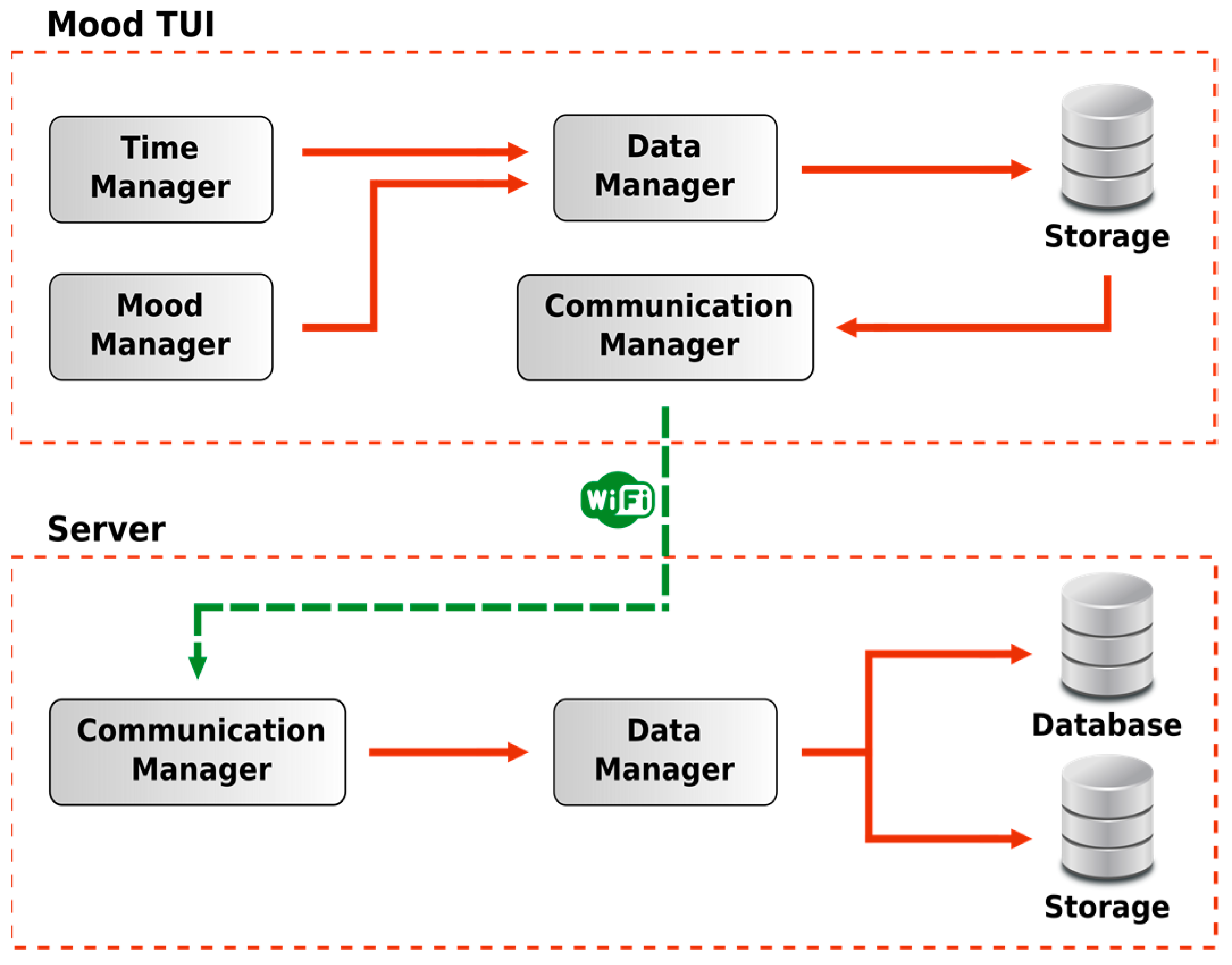

4.3.1. Implementation: Client Side (Mood TUI)

- An inertial measurement unit (IMU) is used to recognize which face of the TUI the user selects. We used an IMU with 10 degrees of freedom (SEN0140 by dfRobot). It integrates an accelerometer (ADXL345), a magnetometer (HMC5883L), a gyroscope (ITG-3205), and a barometric pressure and temperature sensor (BMP085). To communicate with this sensor, we used a dedicated free library, FreeIMU [102];

- A Real Time Clock (RTC) is necessary to obtain the current date and time, so that each mood state can be associated with a particular event or time. It has a backup battery to preserve the time information;

- A secure digital (SD) card reader;

- An SD card that contains the initialization parameters used by the platform (such as the Wi-Fi network name and passphrase, and the server IP address and port) and on which data are stored before being sent to the remote server;

- A Wi-Fi shield enables the wireless connection to the remote server. We used the WizFi250 shield by Seeedstudio;

- A buzzer, used to inform the user that the platform is waiting for her input;

- Red, green and blue (RGB) light-emitting diodes (LEDs) show the state of the platform to the user;

- A global positioning system (GPS) sensor to collect information about the user location.
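The paper does not detail how the IMU readings are turned into a selected face. A common approach, sketched below under stated assumptions, uses only the accelerometer: when the cube rests on a table, the sensor measures roughly 1 g along the axis perpendicular to the table, so the dominant axis and its sign identify the face pointing up. The sketch assumes readings already converted to units of g (raw ADXL345 values would need scaling first); the face labels, thresholds, and the `upward_face` function are hypothetical, and the mapping from faces to mood values would be a separate design choice.

```python
import math

# Hypothetical labels for the face whose outward normal is (axis, sign).
FACES = {
    (0, 1): "+X", (0, -1): "-X",
    (1, 1): "+Y", (1, -1): "-Y",
    (2, 1): "+Z", (2, -1): "-Z",
}


def upward_face(ax, ay, az, tolerance=0.2, min_dominance=0.8):
    """Return the label of the face pointing up, or None if the reading is unstable.

    ax, ay, az are accelerometer readings in g. At rest, the magnitude is
    close to 1 g and almost all of it lies along a single axis.
    """
    components = (ax, ay, az)
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    if abs(magnitude - 1.0) > tolerance:
        return None  # the cube is moving or being shaken
    axis = max(range(3), key=lambda i: abs(components[i]))
    if abs(components[axis]) / magnitude < min_dominance:
        return None  # resting on an edge or tilted: no face clearly selected
    sign = 1 if components[axis] > 0 else -1
    return FACES[(axis, sign)]


print(upward_face(0.02, -0.03, 0.99))  # +Z
```

Requiring a near-1 g magnitude and a clearly dominant axis is a simple way to ignore readings taken while the user is still handling the cube; firmware would typically also require the same face for several consecutive samples before recording a value.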

4.3.2. Implementation: Server Side

5. Evaluation Method

- Generation Z (students aged 14 to 17 years);

- Generation Y (students aged 18 years and over and teachers under the age of 35 years);

- Generation X (teachers aged 35 years and over).
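The grouping criteria above combine role and age, which can be stated compactly as a rule. The sketch below encodes exactly those three criteria; the `generation` function and the "student"/"teacher" role strings are hypothetical names, and participants outside the sampled groups are rejected rather than guessed.

```python
def generation(role, age):
    """Assign a participant to a generational group using the criteria above.

    Students aged 14-17 -> Z; students aged 18+ -> Y;
    teachers under 35 -> Y; teachers aged 35+ -> X.
    """
    if role == "student":
        if 14 <= age <= 17:
            return "Z"
        if age >= 18:
            return "Y"
    elif role == "teacher":
        return "Y" if age < 35 else "X"
    raise ValueError(f"participant outside the sampled groups: {role}, {age}")


print(generation("teacher", 35))  # X
```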

5.1. Sample

5.2. Procedure

- Quantified-self context. We introduced the main concept, discussing several parameters that people would like to track: heartbeat, steps, etc. We introduced some specific terms or acronyms like IoT, wearable devices, and tangible interfaces that we used in the discussion. We asked participants what kind of data would be useful to track and which devices they normally use. Participants discussed the cited parameters, citing apps or devices they use (about 10 min).

- Conceptual framework. We introduced some parameters that are not easy to monitor like food eaten, dreams, and emotion/mood and guided the discussion toward how they could track them (some of them cited the apps used). We introduced our conceptual framework (Section 4) related to monitoring mood (about 5 min).

- Questionnaire. Each participant had to fill in a questionnaire composed of nine questions (about 5 min). Participants could ask for clarification if some questions were not clear. The questionnaire is listed in Appendix A. Results of the questionnaire are presented in Table 4 and Table 5 (the column headings refer to the questions of the questionnaire; see Appendix A).

- Prototype. We introduced our Mood TUI prototype by showing it to participants. Subsequently, we asked each participant to use it; each one chose a value representing her current mood (about 10–15 min).

- Ideas and feedback. A blank sheet was delivered to each participant. We asked them to express their feedback (even in graphic form) on the following themes: what they thought about the device, where they would place it, and what improvements they would suggest to make the device more suitable to their daily habits in terms of design, form, material, tracked parameters (ideas for using the prototype to monitor other parameters), connection to social networks, etc. During this time, each participant could use and/or examine the prototype (about 10 min).

- Discussion. Each participant read her feedback aloud, presenting it to the others. During the discussion, participants were free to intervene and express all their thoughts, enriching the open discussion (about 20–30 min).
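Summing the approximate phase durations listed above gives the expected length of a session. The short sketch below does this arithmetic, treating each "about N min" as a fixed value and each range as a (minimum, maximum) pair; since the source durations are approximate, the total is an estimate rather than a measured figure.

```python
# Approximate phase durations in minutes, as (minimum, maximum), from the procedure above.
PHASES = {
    "Quantified-self context": (10, 10),
    "Conceptual framework": (5, 5),
    "Questionnaire": (5, 5),
    "Prototype": (10, 15),
    "Ideas and feedback": (10, 10),
    "Discussion": (20, 30),
}


def session_length(phases):
    """Return the (shortest, longest) total session duration in minutes."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


print(session_length(PHASES))  # (60, 75)
```

Each session therefore lasted roughly one hour to one hour and a quarter.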

5.3. Data Analysis

6. Results

- A bracelet (U02, U04): mood levels could be represented by buttons positioned along the outer surface. To select a mood level, the user presses the corresponding button. Protection against false inputs (accidental presses) has to be implemented;

- A pendant (U25): a small pendant could be inserted into a necklace, kept anchored by two magnets. When the user wants to insert a mood value, she releases the pendant, shakes it and then presses one of its faces. The pendant might be a cube or have another shape based on the number of states the user wants to handle;

- A smartwatch (U05, U13): similar to the bracelet, mood levels would be represented by buttons placed along the strap. To select a mood level, the user presses one of the buttons. Protection against false inputs has to be implemented (e.g., the user first has to rotate the watch bezel). U19, instead, suggested embedding a force sensor in the strap: when the user wants to enter a mood value, she opens the strap and straightens it, then folds it according to the value she wants to insert. The emoticon related to the mood value could be shown on the smartwatch screen. This design could handle more mood values than the cubic form.
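Several proposals above call for protection against false inputs. One simple way to realize this, sketched here as an assumption rather than as any participant's specification, is to accept a mood press only within a short time after an explicit unlock gesture (such as rotating the bezel), and to require a new unlock for every input. The `GuardedInput` class, its method names, and the five-second window are hypothetical.

```python
class GuardedInput:
    """Accept a mood button press only shortly after an explicit unlock gesture.

    Hypothetical sketch: times are in seconds; one unlock allows one input.
    """

    def __init__(self, unlock_window_s=5.0):
        self.unlock_window_s = unlock_window_s
        self._unlocked_at = None

    def unlock(self, t):
        """Record the unlock gesture (e.g. a bezel rotation) at time t."""
        self._unlocked_at = t

    def press(self, t, mood):
        """Return the mood value if the press is accepted, otherwise None."""
        if self._unlocked_at is None or t - self._unlocked_at > self.unlock_window_s:
            return None  # accidental press: not preceded by a recent unlock
        self._unlocked_at = None  # re-lock so the next press needs a new unlock
        return mood


guard = GuardedInput()
print(guard.press(1.0, 3))   # None: no unlock yet
guard.unlock(10.0)
print(guard.press(12.0, 4))  # 4
```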

7. Discussion

- Tangible interfaces should be designed in a way that allows for the portability of the device, since the monitoring of mood may occur in every situation of an individual’s life.

- Maintenance should be reduced to a minimum, since the monitoring of emotions should not require supplementary actions apart from the act of recording.


- Maintaining engagement over time is difficult, and designers should focus on how to motivate users to use the tangible interface continuously, by experimenting with novel forms of reminders, which should nevertheless not be annoying.

- The playful approach is paramount for users that are not strongly self-motivated in monitoring their emotions (i.e., they do not have a mood disorder); designers should explore how such an approach could be made more effective, for example, by experimenting with gamification techniques that could be paired with a tangible approach to design [112].

7.1. Optimizing Mood TUI

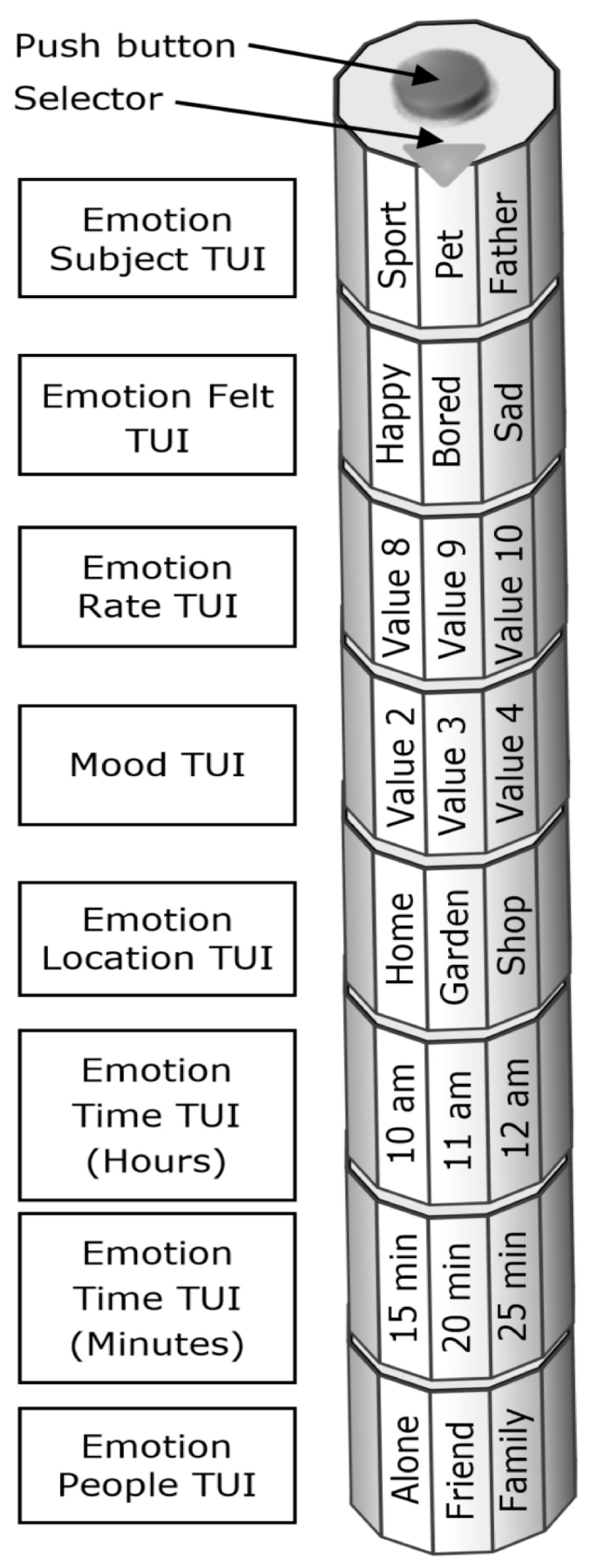

7.2. Tracking Emotions

- The subject related to the emotion felt: sports, family, pets, friends, colleagues, workplace, home, etc. (Emotion Subject TUI);

- The type of emotion felt (stressed, worried, sad, motivated, bored, happy, etc.) (Emotion Felt TUI);

- The rate of the emotions felt (Emotion Rate TUI);

- The mood felt (Mood TUI);

- The location where the emotion was felt (this parameter could be collected directly from the user's smartphone or by the system itself if a GPS sensor is installed) (Emotion Location TUI);

- The time when the emotion was felt (this parameter could be collected directly from the user's smartphone or by the system itself if a Real Time Clock is installed). This TUI is composed of two sub-TUIs: one for the hour and one for the minute (Emotion Time TUI);

- People involved in the context or within the environment (Emotion People TUI).
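The following sketch shows how the inputs of these TUIs might be combined into a single entry, assuming that time and location fall back to the device (smartphone, RTC, or GPS) when the user does not set them through the Emotion Time and Emotion Location TUIs. The `EmotionEntry` type, the `build_entry` function, and the injectable `clock` and `gps` callables are hypothetical; they are passed in as parameters so that any clock or positioning source can be plugged in.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Tuple


@dataclass
class EmotionEntry:
    subject: str                              # Emotion Subject TUI
    emotion: str                              # Emotion Felt TUI
    rate: int                                 # Emotion Rate TUI
    mood: int                                 # Mood TUI
    location: Optional[Tuple[float, float]]   # Emotion Location TUI or GPS
    time: datetime                            # Emotion Time TUI or device clock
    people: Tuple[str, ...] = ()              # Emotion People TUI


def build_entry(subject, emotion, rate, mood, *, hour=None, minute=None,
                location=None, people=(), clock=datetime.now, gps=None):
    """Combine TUI inputs into one entry, falling back to device data.

    If the hour and minute sub-TUIs are both set, they override the current
    time of day (the date comes from the clock); otherwise the clock is used.
    If no location is entered, the optional gps callable supplies one.
    """
    now = clock()
    if hour is not None and minute is not None:
        when = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    else:
        when = now
    if location is None and gps is not None:
        location = gps()
    return EmotionEntry(subject, emotion, rate, mood, location, when, tuple(people))


fixed_clock = lambda: datetime(2018, 1, 4, 18, 30, 12)
entry = build_entry("work", "stressed", 4, 2, hour=9, minute=15, clock=fixed_clock)
print(entry.time)  # 2018-01-04 09:15:00
```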

8. Limitations

9. Conclusions

Conflicts of Interest

Appendix A

- Q1

- Sex (closed question: M or F);

- Q2

- Profession;

- Q3

- Age (numeric value);

- Q4

- On a scale from 1 to 5 (1 = not confident, 5 = very confident), how would you rate your confidence with technological devices? (numeric value);

- Q5

- Smartphone/Mobile phones.

- How many smartphones do you own? (user has to answer with the number of smartphones owned);

- What type of smartphones do you own? (open answer with brand and specific model)

- How many mobile phones do you own? (user has to answer with the number of mobile phones owned);

- What type of mobile phones do you own? (open answer with information about brand and specific model)

- Q6

- How many hours per day do you use the phone:

- to make calls? (numeric value);

- to play? (numeric value)

- to chat? (numeric value)

- to surf the Internet? (numeric value)

- to send/receive mails? (numeric value)

- to take/see photos/videos? (numeric value)

- to listen to music? (numeric value)

- Q7

- How many hours per day do you use the Internet? (numeric value)

- Q8

- How many hours per day do you play videogames? (numeric value)

- Q9

- Wearable devices.

- Have you ever used a wearable device? (closed question: T or F);

- Does it monitor any personal data? (closed question: T or F);

References

- Marcengo, A.; Rapp, A. Visualization of human behavior data: The quantified self. In Innovative Approaches of Data Visualization and Visual Analytics; Huang, M.L., Huang, W., Eds.; IGI Global: Hershey, PA, USA, 2014; pp. 236–265. ISBN 9781466643093. [Google Scholar]

- Rapp, A.; Tirassa, M. Know Thyself: A Theory of the Self for Personal Informatics. Hum.-Comput. Interact. 2017, 32, 335–380. [Google Scholar] [CrossRef]

- Fox, S.; Duggan, M. Health Online 2012; Pew Internet & American Life Project: Washington, DC, USA, 2012. [Google Scholar]

- Jimison, H.; Gorman, P.; Woods, S.; Nygren, P.; Walker, M.; Norris, S.; Hersh, W. Barriers and drivers of health information technology use for the elderly, chronically ill, and underserved. Evid. Rep./Technol. Assess. 2008, 1, 1–1422. [Google Scholar]

- Fox, S. The Social Life of Health Information, 2011; Pew Internet & American Life Project: Washington, DC, USA, 2011. [Google Scholar]

- Fox, S. Is There Hope for SMS Health Alerts? Available online: http://www.pewinternet.org/2012/12/17/is-there-hope-for-sms-health-alerts/ (accessed on 4 January 2018).

- Rapp, A.; Cena, F.; Kay, J.; Kummerfeld, B.; Hopfgartner, F.; Plumbaum, T.; Larsen, J.E. New frontiers of quantified self: Finding new ways for engaging users in collecting and using personal data. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 969–972. [Google Scholar]

- Rapp, A.; Cena, F.; Kay, J.; Kummerfeld, B.; Hopfgartner, F.; Plumbaum, T.; Larsen, J.E.; Epstein, D.A.; Gouveia, R. New frontiers of quantified self 2: Going beyond numbers. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; ACM: New York, NY, USA, 2016; pp. 506–509. [Google Scholar]

- Rapp, A.; Cena, F.; Kay, J.; Kummerfeld, B.; Hopfgartner, F.; Plumbaum, T.; Larsen, J.E.; Epstein, D.A.; Gouveia, R. New frontiers of quantified self 3: Exploring understudied categories of users. In Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 11–15 September 2017; ACM: New York, NY, USA, 2017; pp. 861–864. [Google Scholar]

- Cena, F.; Likavec, S.; Rapp, A. Quantified self and modeling of human cognition. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 1021–1026. [Google Scholar]

- Rapp, A.; Cena, F. Self-monitoring and Technology: Challenges and Open Issues in Personal Informatics. In Universal Access in Human-Computer Interaction. Design for All and Accessibility Practice, Part IV, Proceedings of the 8th International Conference, UAHCI 2014, Held as Part of HCI International 2014, Heraklion, Crete, Greece, 22–27 June 2014; Stephanidis, C., Antona, M., Eds.; Springer: Cham, Switzerland, 2014; pp. 613–622. ISBN 978-3-319-07509-9. [Google Scholar]

- Rapp, A.; Cena, F. Personal informatics for everyday life: How users without prior self-tracking experience engage with personal data. Int. J. Hum.-Comput. Stud. 2016, 94, 1–17. [Google Scholar] [CrossRef]

- Matassa, A.; Rapp, A.; Simeoni, R. Wearable accessories for cycling: Tracking memories in urban spaces. In Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication, Zurich, Switzerland, 8–12 September 2013; ACM: New York, NY, USA, 2013; pp. 415–424. [Google Scholar]

- Banaee, H.; Ahmed, M.U.; Loutfi, A. Data mining for wearable sensors in health monitoring systems: A review of recent trends and challenges. Sensors 2013, 13, 17472–17500. [Google Scholar] [CrossRef] [PubMed]

- Cena, F.; Likavec, S.; Rapp, A.; Marcengo, A. An ontology for quantified self: Capturing the concepts behind the numbers. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; ACM: New York, NY, USA, 2016; pp. 602–604. [Google Scholar]

- Hilviu, D.; Rapp, A. Narrating the quantified self. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 1051–1056. [Google Scholar]

- Nafus, D.; Denman, P.; Durham, L.; Florez, O.; Nachman, L.; Sahay, S.; Savage, E.; Sharma, S.; Strawn, D.; Wouhaybi, R.H. As simple as possible but no simpler: Creating flexibility in personal informatics. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016; pp. 1445–1452. [Google Scholar]

- Rapp, A.; Cena, F. Affordances for self-tracking wearable devices. In Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 141–142. [Google Scholar]

- Rapp, A.; Cena, F.; Hilviu, D.; Tirassa, M. Human body and smart objects. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 939–943. [Google Scholar]

- Cena, F.; Rapp, A.; Likavec, S.; Marcengo, A. Envisioning the Future of Personalization through Personal Informatics: A User Study. Int. J. Mob. Hum. Comput. Interact. (IJMHCI) 2018, 10, 52–66. [Google Scholar] [CrossRef]

- Cabanac, M. What is emotion? Behav. Process. 2002, 60, 69–83. [Google Scholar] [CrossRef]

- Schacter, D.L.; Gilbert, D.T.; Wegner, D.M. Psychology, 2nd ed.; Worth: New York, NY, USA, 2011. [Google Scholar]

- Brave, S.; Nass, C. Emotion in human-computer interaction. In The Human-Computer Interaction Handbook; L. Erlbaum Associates Inc.: Mahwah, NJ, USA, 2002; pp. 81–96. [Google Scholar]

- Clore, G.L.; Robinson, M.D. Knowing our emotions: How do we know what we feel? Handb. Self-Knowl. 2012, 1, 194–209. [Google Scholar]

- Affectiva. Available online: http://www.affectiva.com/technology/ (accessed on 13 August 2017).

- Emotient. Available online: http://www.emotient.com (accessed on 13 August 2016).

- Marcengo, A.; Rapp, A.; Cena, F.; Geymonat, M. The Falsified Self: Complexities in Personal Data Collection. In Proceedings of the HCI International Conference on Universal Access in Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Methods, Techniques, and Best Practices, Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2016; Volume 9737, pp. 351–358. [Google Scholar]

- Wallbott, H.G.; Scherer, K.R. Assessing emotion by questionnaire. Emot. Theory Res. Exp. 1989, 4, 55–82. [Google Scholar]

- Lorr, M. Models and methods for measurement of mood. In Emotion: Theory, Research, and Experience; The Measurement of Emotions; Plutchik, R., Kellerman, H., Eds.; Academic Press: San Diego, CA, USA, 1989; Volume 4, pp. 37–53. [Google Scholar]

- Lang, P.J. The emotion probe: Studies of motivation and attention. Am. Psychol. 1995, 50, 372. [Google Scholar] [CrossRef] [PubMed]

- Plutchik, R. Measuring emotions and their derivatives. Emot. Theory Res. Exp. 1989, 4, 1. [Google Scholar]

- McCurdie, T.; Taneva, S.; Casselman, M.; Yeung, M.; McDaniel, C.; Ho, W.; Cafazzo, J. mHealth Consumer Apps: The Case for User-Centered Design. Biomed. Instrum. Technol. 2012, 46, 49–56. [Google Scholar] [CrossRef] [PubMed]

- Kay, M.; Santos, J.; Takane, M. mHealth: New horizons for health through mobile technologies. World Health Organ. 2011, 3, 66–71. [Google Scholar]

- Bartlett, F.C.; Burt, C. Remembering: A study in experimental and social psychology. Br. J. Educ. Psychol. 1933, 3, 187–192. [Google Scholar] [CrossRef]

- Cardillo, D.; Rapp, A.; Benini, S.; Console, L.; Simeoni, R.; Guercio, E.; Leonardi, R. The art of video MashUp: Supporting creative users with an innovative and smart application. Multimed. Tools Appl. 2011, 53, 1–23. [Google Scholar] [CrossRef] [Green Version]

- Console, L.; Antonelli, F.; Biamino, G.; Carmagnola, F.; Cena, F.; Chiabrando, E.; Cuciti, V.; Demichelis, M.; Fassio, F.; Franceschi, F.; et al. Interacting with social networks of intelligent things and people in the world of gastronomy. ACM Trans. Interact. Intell. Syst. 2013, 3, 1–38. [Google Scholar] [CrossRef]

- Simeoni, R.; Etzler, L.; Guercio, E.; Perrero, M.; Rapp, A.; Montanari, R.; Tesauri, F. Innovative TV: From an old standard to a new concept of Interactive TV—An Italian job. In Proceedings of the International Conference on Human-Computer Interaction, Beijing, China, 22–27 July 2007; HCI Intelligent Multimodal Interaction Environments, Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2007; Volume 4552, pp. 971–980. [Google Scholar] [CrossRef]

- Simeoni, R.; Geymonat, M.; Guercio, E.; Perrero, M.; Rapp, A.; Tesauri, F.; Montanari, R. Where Have You Ended Up Today? Dynamic TV and the Inter-tainment Paradigm. In Proceedings of the European Conference on Interactive Television, Salzburg, Austria, 3–4 July 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 238–247. [Google Scholar]

- Vellar, A.; Simeoni, R.; Montanari, R.; Rapp, A. A parasocial navigation concept for movie discovery. In Proceedings of the Interfaces and Human Computer Interaction (IHCI), Amsterdam, The Netherlands, 25–27 July 2008; pp. 291–296, ISBN 978-972-8924-59-1. [Google Scholar]

- Rapp, A.; Marcengo, A.; Console, L.; Simeoni, R. Playing in the wild: Enhancing user engagement in field evaluation methods. In Proceedings of the 16th International Academic MindTrek Conference, Tampere, Finland, 3–5 October 2012; ACM: New York, NY, USA, 2012; pp. 227–228. [Google Scholar]

- Rapp, A. A Qualitative Investigation of Gamification: Motivational Factors in Online Gamified Services and Applications. Int. J. Technol. Hum. Interact. 2015, 11, 67–82. [Google Scholar] [CrossRef]

- Rapp, A.; Cena, F.; Gena, C.; Marcengo, A.; Console, L. Using game mechanics for field evaluation of prototype social applications: A novel methodology. Behav. Inf. Technol. 2016, 35, 184–195. [Google Scholar] [CrossRef]

- Rapp, A. Beyond Gamification: Enhancing User Engagement through Meaningful Game Elements. In Proceedings of the Foundations of Digital Games, Chania, Greece, 14–17 May 2013; Society for the Advancement of the Science of Digital Games. ISBN 978-0-9913982-0-1. [Google Scholar]

- Rapp, A.; Cena, F.; Hopfgartner, F.; Hamari, J.; Linehan, C. Fictional game elements: Critical perspectives on gamification design. In Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts (CHI PLAY Companion 2016), Austin, TX, USA, 16–19 October 2016; ACM: New York, NY, USA, 2016; pp. 373–377. [Google Scholar]

- Rapp, A. Designing interactive systems through a game lens: An ethnographic approach. Comput. Hum. Behav. 2017, 71, 455–468. [Google Scholar] [CrossRef]

- Rapp, A. Drawing inspiration from World of Warcraft: Gamification design elements for behavior change technologies. Interact. Comput. 2017, 29, 648–678. [Google Scholar] [CrossRef]

- Rapp, A. From games to gamification: A classification of rewards in World of Warcraft for the design of gamified systems. Simul. Gaming 2017, 48, 381–401. [Google Scholar] [CrossRef]

- Rapp, A. Meaningful game elements for personal informatics. In Proceedings of the 2014 ACM International Symposium on Wearable Computers: Adjunct Program, Seattle, WA, USA, 13–17 September 2014; ACM: New York, NY, USA, 2014; pp. 125–130. [Google Scholar]

- Shaer, O.; Hornecker, E. Tangible user interfaces: Past, present, and future directions. Found. Trends Hum.-Comput. Interact. 2010, 3, 1–137. [Google Scholar] [CrossRef] [Green Version]

- Ishii, H.; Ullmer, B. Tangible bits: Towards seamless interfaces between people, bits and atoms. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 22–27 March 1997; ACM: New York, NY, USA, 1997; pp. 234–241. [Google Scholar]

- Mugellini, E.; Rubegni, E.; Gerardi, S.; Khaled, O.A. Using personal objects as tangible interfaces for memory recollection and sharing. In Proceedings of the 1st International Conference on Tangible and Embedded Interaction, Baton Rouge, LA, USA, 15–17 February 2007; ACM: New York, NY, USA, 2007; pp. 231–238. [Google Scholar]

- Xie, L.; Antle, A.N.; Motamedi, N. Are tangibles more fun?: Comparing children's enjoyment and engagement using physical, graphical and tangible user interfaces. In Proceedings of the 2nd International Conference on Tangible and Embedded Interaction, Bonn, Germany, 18–20 February 2008; ACM: New York, NY, USA, 2008; pp. 191–198. [Google Scholar]

- Zuckerman, O.; Gal-Oz, A. To TUI or not to TUI: Evaluating performance and preference in tangible vs. graphical user interfaces. Int. J. Hum.-Comput. Stud. 2013, 71, 803–820. [Google Scholar] [CrossRef]

- Christensen, P.; Mikkelsen, M.R.; Nielsen, T.A.S.; Harder, H. Children, mobility, and space: Using GPS and mobile phone technologies in ethnographic research. J. Mix. Methods Res. 2011, 5, 227–246. [Google Scholar] [CrossRef]

- Track Your Happiness. Available online: https://www.trackyourhappiness.org/ (accessed on 5 July 2016).

- Happy Factor. Available online: http://howhappy.dreamhosters.com/ (accessed on 13 August 2017).

- Mood Panda. Available online: http://www.moodpanda.com/ (accessed on 13 August 2016).

- Matthews, M.; Doherty, G. In the mood: Engaging teenagers in psychotherapy using mobile phones. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; ACM: New York, NY, USA, 2011; pp. 2947–2956. [Google Scholar]

- Mood 24/7. Available online: https://www.mood247.com/ (accessed on 13 August 2017).

- Mood Scope. Available online: https://www.moodscope.com// (accessed on 13 August 2016).

- Mood Tracker. Available online: https://www.moodtracker.com/ (accessed on 13 August 2016).

- Gotta Feeling. Available online: http://gottafeeling.com// (accessed on 13 August 2017).

- Mappiness. Available online: http://www.mappiness.org.uk/ (accessed on 13 August 2016).

- StressEraser. Available online: http://www.stresseraser.com (accessed on 5 July 2016).

- T2 Mood Tracker. Available online: http://www.t2.health.mil/apps/t2-mood-tracker (accessed on 5 July 2016).

- Med Help Mood Tracker. Available online: http://www.medhelp.org/user-trackers/gallery/mood/ (accessed on 13 August 2016).

- Feelytics. Available online: Http://feelytics.me/index.php (accessed on 13 August 2016).

- Li, I. Designing personal informatics applications and tools that facilitate monitoring of behaviors. In Proceedings of the UIST 2009, Victoria, BC, Canada, 4–7 October 2009. [Google Scholar]

- Valenza, G.; Gentili, C.; Lanatà, A.; Scilingo, E.P. Mood recognition in bipolar patients through the PSYCHE platform: Preliminary evaluations and perspectives. Artif. Intell. Med. 2013, 57, 49–58. [Google Scholar] [CrossRef] [PubMed]

- Fractal. Available online: http://www.design.philips.com/about/design/designportfolio/design-futures/fractal.page (accessed on 13 August 2016).

- Davis, F.; Roseway, A.; Carroll, E.; Czerwinski, M. Actuating mood: Design of the textile mirror. In Proceedings of the 7th International Conference on Tangible, Embedded and Embodied Interaction, Barcelona, Spain, 10–13 February 2013; ACM: New York, NY, USA, 2013; pp. 99–106. [Google Scholar]

- BodyMonitor. Available online: http://bodymonitor.de (accessed on 13 August 2017).

- Rationalizer. Available online: http://www.mirrorofemotions.com/ (accessed on 5 July 2016).

- Bretl, D. Emotish. Available online: https://www.appfutura.com/app/emotish-dj (accessed on 4 January 2018).

- Ouellet, S. Real-time emotion recognition for gaming using deep convolutional network features. arXiv 2014, arXiv:1408.3750. [Google Scholar]

- Yu, Z.; Zhang, C. Image based static facial expression recognition with multiple deep network learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; ACM: New York, NY, USA, 2015; pp. 435–442.

- Kim, B.-K.; Roh, J.; Dong, S.-Y.; Lee, S.-Y. Hierarchical committee of deep convolutional neural networks for robust facial expression recognition. J. Multimodal User Interfaces 2016, 10, 173–189.

- Knapton, S. Bad mood? New app senses emotion and suggests food to lift spirits. The Telegraph, 27 November 2016.

- Lathia, N.; Pejovic, V.; Rachuri, K.K.; Mascolo, C.; Musolesi, M.; Rentfrow, P.J. Smartphones for Large-Scale Behavior Change Interventions. IEEE Pervasive Comput. 2013, 12, 66–73.

- Hornecker, E.; Buur, J. Getting a grip on tangible interaction: A framework on physical space and social interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 22–27 April 2006; ACM: New York, NY, USA, 2006; p. 437.

- Klemmer, S.R.; Landay, J.A. Tangible User Interface Input: Tools and Techniques. Ph.D. Thesis, University of California, Berkeley, CA, USA, 2004.

- Klemmer, S.R.; Li, J.; Lin, J.; Landay, J.A. Papier-Mache: Toolkit support for tangible input. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; ACM: New York, NY, USA, 2004; pp. 399–406.

- Kimura, H.; Tokunaga, E.; Okuda, Y.; Nakajima, T. CookieFlavors: Easy Building Blocks for Wireless Tangible Input. In Proceedings of the CHI 2006 Extended Abstracts on Human Factors in Computing Systems (CHI EA 2006), Montréal, QC, Canada, 22–27 April 2006; ACM: New York, NY, USA, 2006; pp. 965–970.

- Jacquemin, C. Pogany: A Tangible Cephalomorphic Interface for Expressive Facial Animation. In Proceedings of the Affective Computing and Intelligent Interaction: Second International Conference, ACII 2007, Lisbon, Portugal, 12–14 September 2007; Paiva, A.C.R., Prada, R., Picard, R.W., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 558–569, ISBN 978-3-540-74889-2.

- Cohen, J.; Withgott, M.; Piernot, P. Logjam: A Tangible Multi-person Interface for Video Logging. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 1999), Pittsburgh, PA, USA, 15–20 May 1999; ACM: New York, NY, USA, 1999; pp. 128–135.

- Terrenghi, L.; Kranz, M.; Holleis, P.; Schmidt, A. A Cube to Learn: A Tangible User Interface for the Design of a Learning Appliance. Pers. Ubiquitous Comput. 2006, 10, 153–158.

- Camarata, K.; Do, E.Y.-L.; Johnson, B.R.; Gross, M.D. Navigational Blocks: Navigating Information Space with Tangible Media. In Proceedings of the 7th International Conference on Intelligent User Interfaces (IUI 2002), San Francisco, CA, USA, 13–16 January 2002; ACM: New York, NY, USA, 2002; pp. 31–38.

- Van Laerhoven, K.; Villar, N.; Schmidt, A.; Kortuem, G.; Gellersen, H. Using an Autonomous Cube for Basic Navigation and Input. In Proceedings of the 5th International Conference on Multimodal Interfaces (ICMI 2003), Vancouver, BC, Canada, 5–7 November 2003; ACM: New York, NY, USA, 2003; pp. 203–210.

- Huron, S.; Carpendale, S.; Thudt, A.; Tang, A.; Mauerer, M. Constructive Visualization. In Proceedings of the 2014 Conference on Designing Interactive Systems (DIS 2014), Vancouver, BC, Canada, 21–25 June 2014; ACM: New York, NY, USA, 2014; pp. 433–442.

- Emotional Tactile Map. Available online: http://vis4me.com/portfolio/emotional-tactile-map/ (accessed on 16 December 2017).

- Inca Quipus. Available online: http://dataphys.org/list/peruvian-quipus/ (accessed on 16 December 2017).

- Pulse: Animated Heart Shows Sentiments. Available online: http://dataphys.org/list/pulse-showing-emotional-responses-on-the-web/ (accessed on 16 December 2017).

- What Made Me. Available online: http://dataphys.org/list/what-made-me-interactive-public-installation/ (accessed on 16 December 2017).

- Physical Visual Sedimentation. Available online: http://dataphys.org/list/physical-visual-sedimentation/ (accessed on 16 December 2017).

- Physical Bar Charts. Available online: http://www.lucykimbell.com/LucyKimbell/PhysicalBarCharts.html (accessed on 16 December 2017).

- The Happy Show. Available online: https://sagmeisterwalsh.com/work/all/the-happy-show/ (accessed on 16 December 2017).

- Arduino. Available online: http://www.arduino.cc (accessed on 13 August 2017).

- Sarzotti, F.; Lombardi, I.; Rapp, A.; Marcengo, A.; Cena, F. Engaging Users in Self-Reporting Their Data: A Tangible Interface for Quantified Self. In International Conference on Universal Access in Human-Computer Interaction; Springer: Cham, Switzerland, 2015; pp. 518–527.

- Cena, F.; Lombardi, I.; Rapp, A.; Sarzotti, F. Self-Monitoring of Emotions: A Novel Personal Informatics Solution for an Enhanced Self-Reporting. In Proceedings of the 22nd Conference on User Modeling, Adaptation and Personalization UMAP 2014, Aalborg, Denmark, 7–11 July 2014; CEUR Workshop Proceedings. 2014; Volume 1181.

- D3.js. Available online: https://d3js.org/ (accessed on 18 December 2017).

- Mapbox. Available online: https://www.mapbox.com/ (accessed on 18 December 2017).

- Varesano, F. FreeIMU: An open hardware framework for orientation and motion sensing. arXiv 2013, arXiv:1303.4949.

- Ishii, H. Tangible User Interfaces. In Human-Computer Interaction: Design Issues, Solutions, and Applications; CRC Press: Boca Raton, FL, USA, 2007; p. 469.

- Howe, N.; Strauss, W. Generations: The History of America’s Future, 1584 to 2069; HarperCollins: New York, NY, USA, 1992; ISBN 978-0-688-11912-6.

- Howe, N.; Strauss, W. Millennials Rising: The Next Great Generation; Vintage: New York, NY, USA, 2009.

- Twenge, J.M. Generation Me-Revised and Updated: Why Today’s Young Americans Are More Confident, Assertive, Entitled—And More Miserable than Ever Before; Simon and Schuster: New York, NY, USA, 2014.

- Strauss, W.; Howe, N. Generations: The History of America’s Future, 1584 to 2069, 1st Quill ed.; Quill: New York, NY, USA, 1991; ISBN 978-0-688-11912-6.

- Woodman, D.; Wyn, J. Youth and Generation: Rethinking Change and Inequality in the Lives of Young People; Sage: Thousand Oaks, CA, USA; London, UK, 2014.

- Takahashi, T. Japanese youth and mobile media. In Deconstructing Digital Natives; Routledge: New York, NY, USA; London, UK, 2011; pp. 67–82.

- Thomas, M. Deconstructing Digital Natives: Young People, Technology, and the New Literacies; Routledge: New York, NY, USA; London, UK, 2011; ISBN 978-0-415-88993-3.

- Guest, G.; MacQueen, K.M.; Namey, E.E. Applied Thematic Analysis; Sage: Los Angeles, CA, USA; London, UK, 2011.

- Rapp, A.; Marino, A.; Simeoni, R.; Cena, F. An ethnographic study of packaging-free purchasing: Designing an interactive system to support sustainable social practices. Behav. Inf. Technol. 2017, 36, 1193–1217.

- Sarzotti, F. A Tangible Personal Informatics System for an Amusing Self-reporting. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, (UbiComp/ISWC 2015), Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 1033–1038.

| Age Range (years) | Participants |
|---|---|
| 14–17 | 15 |
| 18–35 | 12 |
| >35 | 5 |

| Age Range (years) | Generation | Sex | Number of Participants |
|---|---|---|---|
| 14–17 | Gen Z | M | 10 |
| 14–17 | Gen Z | F | 5 |
| 18–35 | Gen Y | M | 9 |
| 18–35 | Gen Y | F | 3 |
| >35 | Gen X | M | 1 |
| >35 | Gen X | F | 4 |

| Age Range (years) | Session Number | Week Number | Number of Participants | Participants |
|---|---|---|---|---|
| 14–17 | S1 | W1 | 5 | U01–U05 |
| 14–17 | S2 | W2 | 5 | U06–U10 |
| 14–17 | S3 | W3 | 5 | U11–U15 |
| 18–35 | S4 | W1 | 5 | U16–U20 |
| 18–35 | S5 | W2 | 7 | U21–U27 |
| >35 | S6 | W3 | 5 | U28–U32 |

| Participant | Q1 | Q3 | Q4 | Q6.a | Q6.b | Q6.c | Q6.d | Q6.e | Q6.f | Q6.g | Q7 | Q8 | Q9.a | Q9.b | Session |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| U01 | F | 14 | 4 | 2 | 0 | 7 | 7 | 1 | 2 | 5 | 7 | 0 | T | T | S1 |
| U02 | F | 15 | 3 | 1 | 1 | 1 | 1 | <1 | 1 | 2 | 1 | 0 | T | T | S1 |
| U03 | M | 15 | 3 | 3 | <1 | <1 | 4 | <1 | <1 | 1 | 2 | 0.5 | F | F | S1 |
| U04 | M | 15 | 4 | 0.2 | 1 | 1 | <1 | 0 | <1 | 4 | 1 | 2 | T | T | S1 |
| U05 | M | 15 | 5 | 0 | 0.5 | 1 | 0 | <1 | 0 | 1 | 1 | 2 | F | F | S1 |
| U06 | M | 14 | 4 | 0.3 | <1 | 2 | 2.5 | 0.3 | 0.5 | 3 | 2 | 3.5 | F | F | S2 |
| U07 | M | 15 | 5 | 0.5 | 0.5 | 1.5 | 2 | <1 | <1 | 3 | 2 | 0.5 | T | T | S2 |
| U08 | M | 14 | 3 | 0.2 | 1 | 3 | 3 | <1 | 0.5 | 1 | 3 | 3 | F | F | S2 |
| U09 | M | 16 | 4 | 0 | 0 | 1 | 1 | 0 | 2 | 0 | 3 | 1 | F | F | S2 |
| U10 | F | 15 | 5 | 1 | 3 | 13 | 12 | 1 | 10 | 3 | 18 | 5.5 | F | F | S2 |
| U11 | M | 16 | 3 | 0 | 1.5 | 1 | 0.5 | 0 | 0.5 | 0.5 | 3 | 2 | T | T | S3 |
| U12 | F | 16 | 3 | 2 | 3 | 3 | 7 | 4 | 5 | 7 | 5 | 2 | F | F | S3 |
| U13 | M | 15 | 4 | <1 | <1 | 1 | 4 | <1 | 0.5 | 0 | 5 | <1 | F | F | S3 |
| U14 | M | 14 | 3 | 0.5 | 1 | 1.5 | 1 | <1 | <1 | 1 | 1 | 1 | T | T | S3 |
| U15 | F | 15 | 5 | 2 | 1 | 5.5 | 1 | 0.5 | 2 | 7 | 7 | 1 | T | T | S3 |
| U16 | M | 18 | 4 | 2.5 | 2 | 3 | 1.5 | 0.2 | 1 | 4 | 3 | 2 | T | T | S4 |
| U17 | F | 22 | 5 | 2 | 2 | 1 | 1.5 | 0.5 | 1 | 2.5 | 3 | 1.5 | F | F | S4 |
| U18 | F | 21 | 4 | 1.5 | 1 | 2.5 | 0.5 | 1 | 1 | 2 | 3.5 | 1 | T | T | S4 |
| U19 | M | 23 | 3 | 0.5 | 0.5 | 4 | 1 | 1.5 | 2 | 1 | 2 | 1 | F | F | S4 |
| U20 | F | 19 | 4 | 1 | 3 | 2 | 1.5 | 1 | 0.5 | 2 | 1 | 2 | T | T | S4 |
| U21 | M | 18 | 3 | 1 | 0 | 3 | 2.5 | <1 | <1 | 7 | 3 | 0 | T | T | S5 |
| U22 | M | 18 | 4 | <1 | <1 | 2 | 1 | 0 | 2 | 2 | 1 | 0 | F | T | S5 |
| U23 | M | 18 | 3 | 2.5 | 0 | 2 | 3 | 0.5 | 2 | 3 | 3 | 0 | T | T | S5 |
| U24 | M | 19 | 5 | 1 | 0.5 | 16 | 10 | 1 | 5 | 9 | 10 | 0.5 | F | F | S5 |
| U25 | M | 32 | 5 | 4.5 | 0 | 11 | 1.5 | 11 | 0.5 | 0.5 | 5.5 | 0 | T | T | S5 |
| U26 | M | 19 | 4 | 0.5 | 0 | 5 | 3 | 0.5 | 1 | 2 | 4 | 0 | F | F | S5 |
| U27 | M | 20 | 4 | 1 | 0 | 3 | 1.5 | 0.5 | 0.5 | 2.5 | 5.5 | 1 | F | F | S5 |
| U28 | F | 52 | 3 | 1 | 0.5 | 1 | 0 | 2 | 0.5 | 0.5 | 1 | 0 | F | F | S6 |
| U29 | F | 41 | 2 | 1.5 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 1 | F | F | S6 |
| U30 | M | 36 | 4 | 2.5 | 0.5 | 2 | 1 | 2.5 | 0 | 0.5 | 1 | 0.5 | F | F | S6 |
| U31 | F | 37 | 3 | 0.5 | 0.5 | 1.5 | 0 | 2.5 | 0.5 | 0 | 1 | 0.5 | T | T | S6 |
| U32 | F | 45 | 3 | 1 | 0 | 2 | 1 | 2 | 0.5 | 0 | 3 | 0 | T | T | S6 |
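As a cross-check, the per-participant rows can be tallied by age range and sex to reproduce the demographic summary tables. The Python sketch below is not part of the original study; it assumes that column Q1 records the participant's sex (F/M) and Q3 the age in years, and the helper names `age_band` and `tally` are hypothetical.

```python
from collections import Counter

# (participant, Q1, Q3) rows transcribed from the per-participant table.
# Assumption: Q1 = sex (F/M), Q3 = age in years.
PARTICIPANTS = [
    ("U01", "F", 14), ("U02", "F", 15), ("U03", "M", 15), ("U04", "M", 15),
    ("U05", "M", 15), ("U06", "M", 14), ("U07", "M", 15), ("U08", "M", 14),
    ("U09", "M", 16), ("U10", "F", 15), ("U11", "M", 16), ("U12", "F", 16),
    ("U13", "M", 15), ("U14", "M", 14), ("U15", "F", 15), ("U16", "M", 18),
    ("U17", "F", 22), ("U18", "F", 21), ("U19", "M", 23), ("U20", "F", 19),
    ("U21", "M", 18), ("U22", "M", 18), ("U23", "M", 18), ("U24", "M", 19),
    ("U25", "M", 32), ("U26", "M", 19), ("U27", "M", 20), ("U28", "F", 52),
    ("U29", "F", 41), ("U30", "M", 36), ("U31", "F", 37), ("U32", "F", 45),
]

BANDS = ("14–17", "18–35", ">35")


def age_band(age: int) -> str:
    """Map an age in years to the age ranges used in the summary tables."""
    if 14 <= age <= 17:
        return "14–17"
    if 18 <= age <= 35:
        return "18–35"
    if age > 35:
        return ">35"
    raise ValueError(f"age {age} falls outside the study's age ranges")


def tally(rows):
    """Count participants per age band, and per (age band, sex) pair."""
    by_band = Counter(age_band(age) for _, _, age in rows)
    by_band_sex = Counter((age_band(age), sex) for _, sex, age in rows)
    return by_band, by_band_sex


if __name__ == "__main__":
    by_band, by_band_sex = tally(PARTICIPANTS)
    for band in BANDS:
        print(band, by_band[band],
              "M:", by_band_sex[(band, "M")],
              "F:", by_band_sex[(band, "F")])
```

Under these assumptions the counts agree with the summary tables: 15 participants aged 14–17 (10 M, 5 F), 12 aged 18–35 (9 M, 3 F), and 5 over 35 (1 M, 4 F).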

| Participant | Profession | Q5.a | Q5.b | Q5.c | Q5.d |
|---|---|---|---|---|---|
| U01 | High School Student | 1 | Apple iPhone 7 | 0 | – |
| U02 | High School Student | 1 | Apple iPhone 6 | 0 | – |
| U03 | High School Student | 1 | Apple iPhone 6 | 0 | – |
| U04 | High School Student | 1 | Samsung Galaxy S6 | 0 | – |
| U05 | High School Student | 1 | Apple iPhone 6 | 0 | – |
| U06 | High School Student | 1 | Samsung Galaxy S6 | 0 | – |
| U07 | High School Student | 1 | Apple iPhone 6 Plus | 0 | – |
| U08 | High School Student | 1 | Samsung Galaxy Core | 0 | – |
| U09 | High School Student | 1 | Samsung Galaxy S3 Neo | 0 | – |
| U10 | High School Student | 1 | Huawei P8 Lite | 0 | – |
| U11 | High School Student | 1 | Wiko Sunset 2 | 0 | – |
| U12 | High School Student | 1 | Samsung Galaxy S5 Mini | 0 | – |
| U13 | High School Student | 1 | Apple iPhone 5S | 0 | – |
| U14 | High School Student | 1 | Apple iPhone 5C | 0 | – |
| U15 | High School Student | 1 | Samsung S6 | 0 | – |
| U16 | High School Student | 1 | Oppo Mirror | 0 | – |
| U17 | Undergraduate | 1 | LG Spirit | 0 | – |
| U18 | Undergraduate | 1 | Apple iPhone 6 | 0 | – |
| U19 | Employee | 1 | OnePlus 3T | 0 | – |
| U20 | High School Student | 1 | Apple iPhone 6 | 0 | – |
| U21 | High School Student | 1 | Apple iPhone 5 | 0 | – |
| U22 | High School Student | 1 | Samsung Galaxy S6 Edge+ | 0 | – |
| U23 | High School Student | 1 | LG K5 | 0 | – |
| U24 | High School Student | 1 | Apple iPhone 6 | 0 | – |
| U25 | PhD Student | 1 | Samsung Galaxy S5 | 0 | – |
| U26 | Undergraduate | 1 | Apple iPhone 6 | 0 | – |
| U27 | Undergraduate | 1 | Samsung Galaxy S5 | 0 | – |
| U28 | Teacher | 1 | Galaxy Note 4 | 0 | – |
| U29 | Teacher | 0 | – | 1 | Nokia 3310 |
| U30 | Secretary | 1 | Samsung Galaxy S5 | 0 | – |
| U31 | Teacher | 0 | – | 1 | Nokia 3510i |
| U32 | Secretary | 1 | Apple iPhone 7 | 0 | – |

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sarzotti, F. Self-Monitoring of Emotions and Mood Using a Tangible Approach. Computers 2018, 7, 7. https://doi.org/10.3390/computers7010007
