Vision-Based Parking-Slot Detection: A Benchmark and A Learning-Based Approach

Abstract

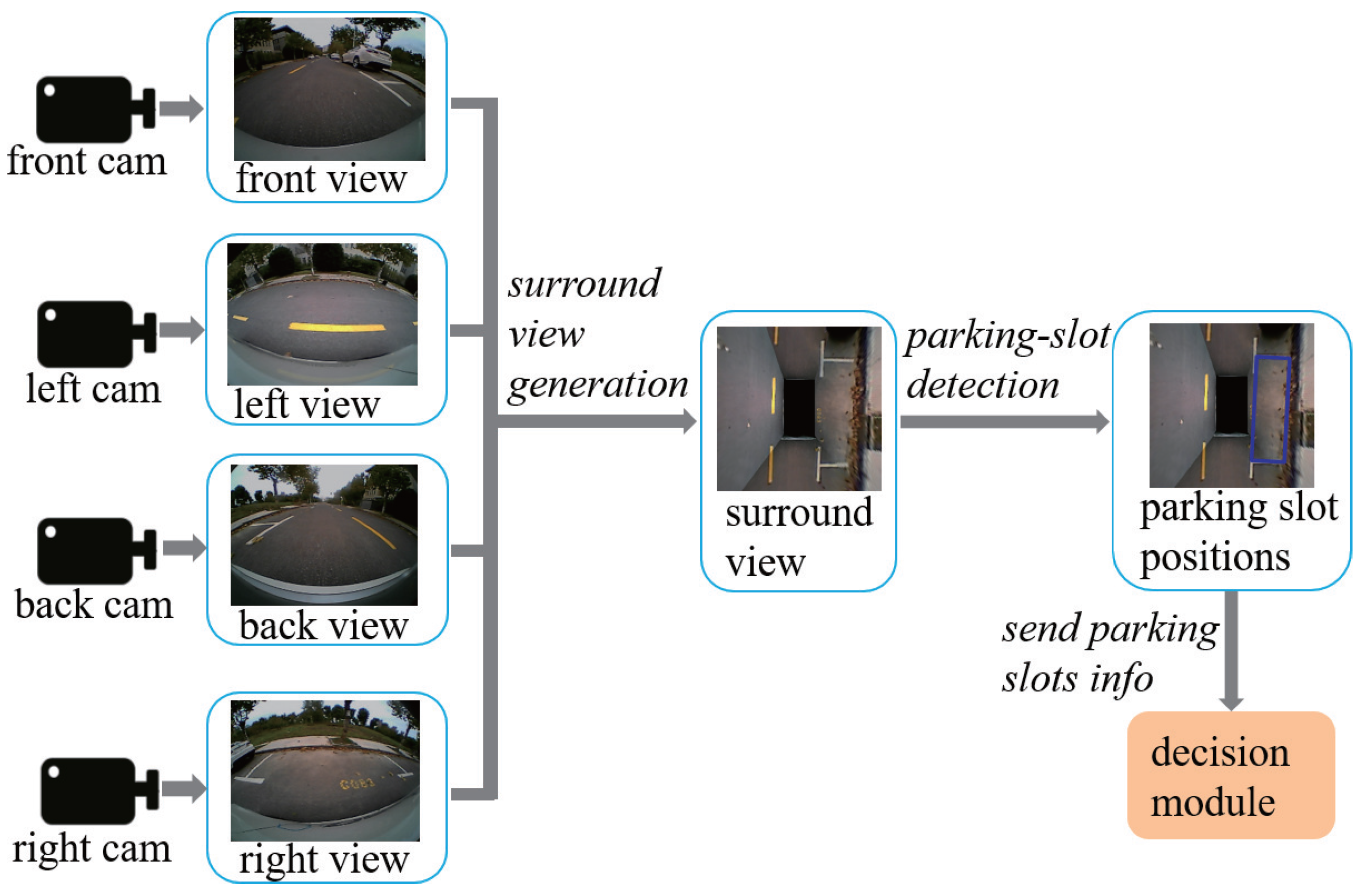

1. Introduction

1.1. Related Work

1.2. Our Motivations and Contributions

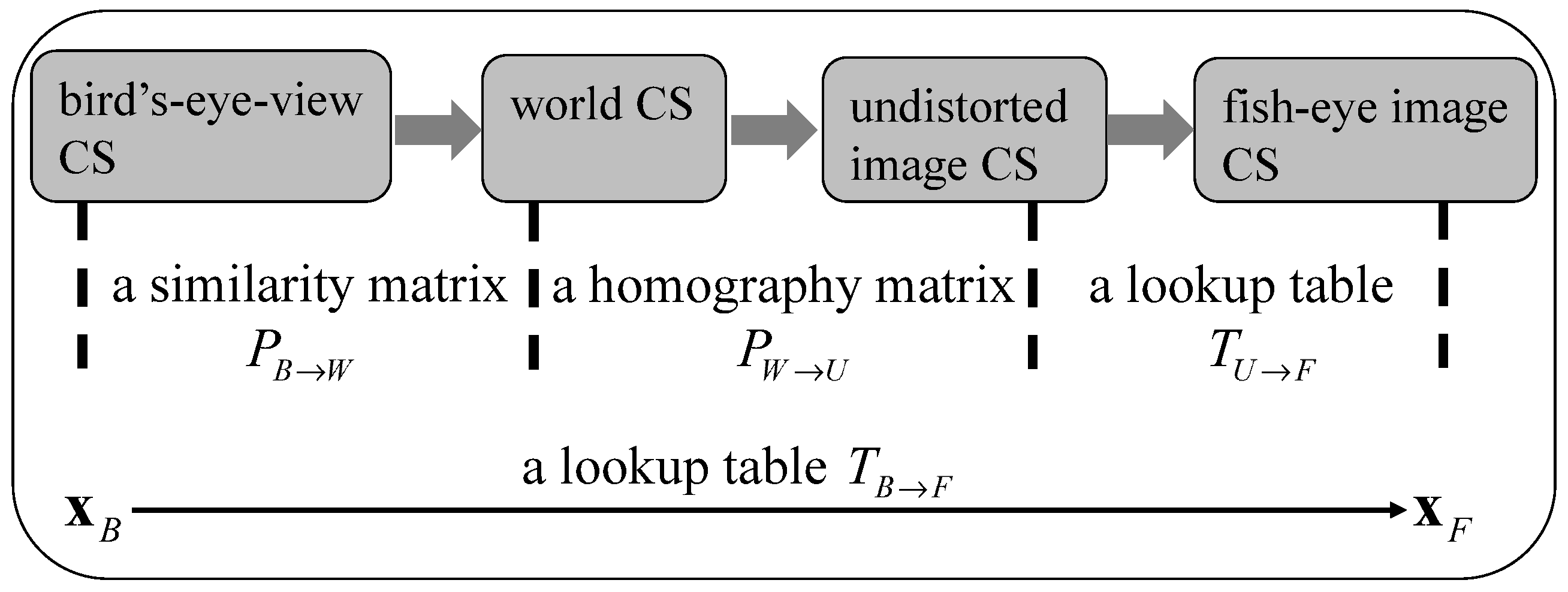

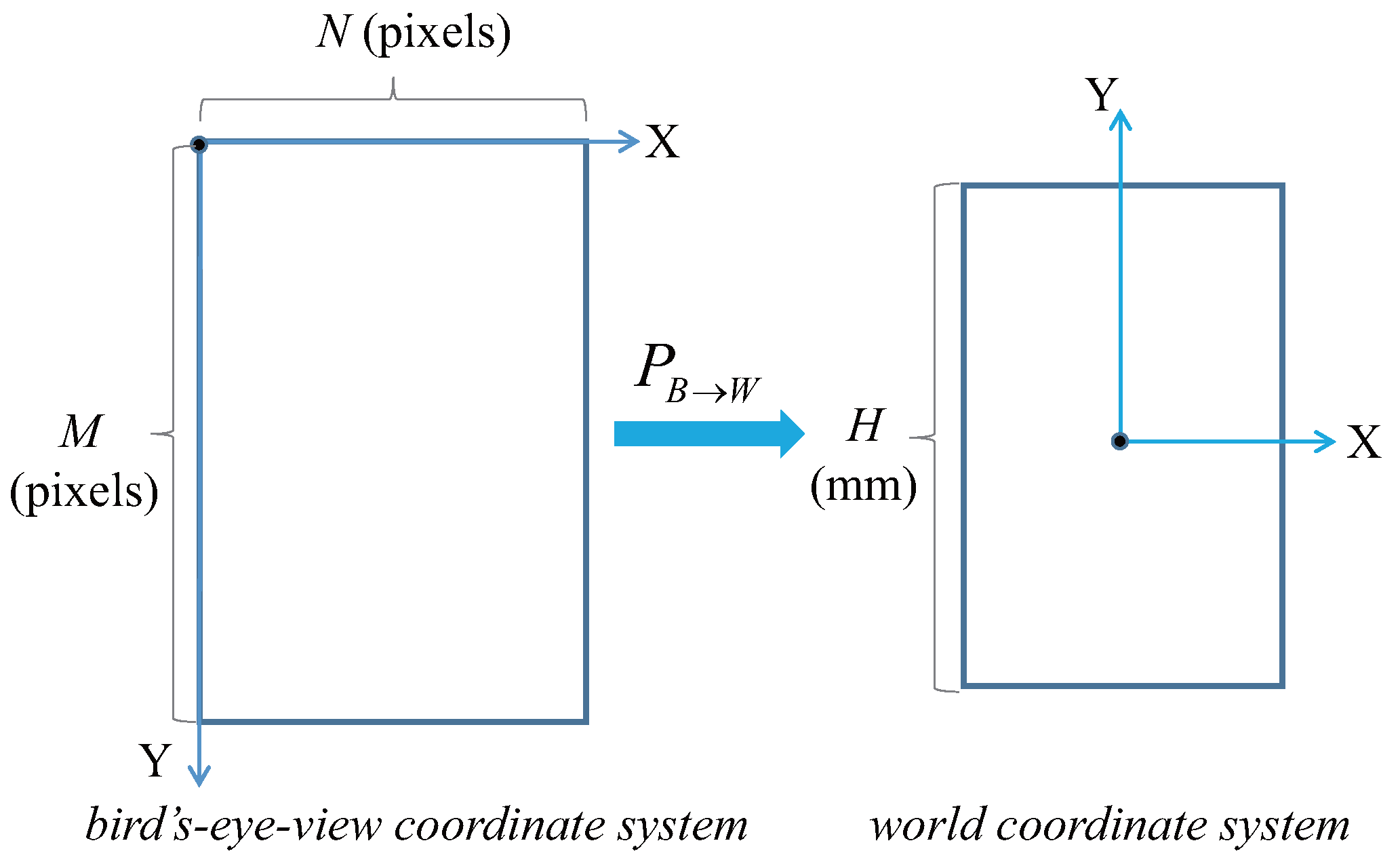

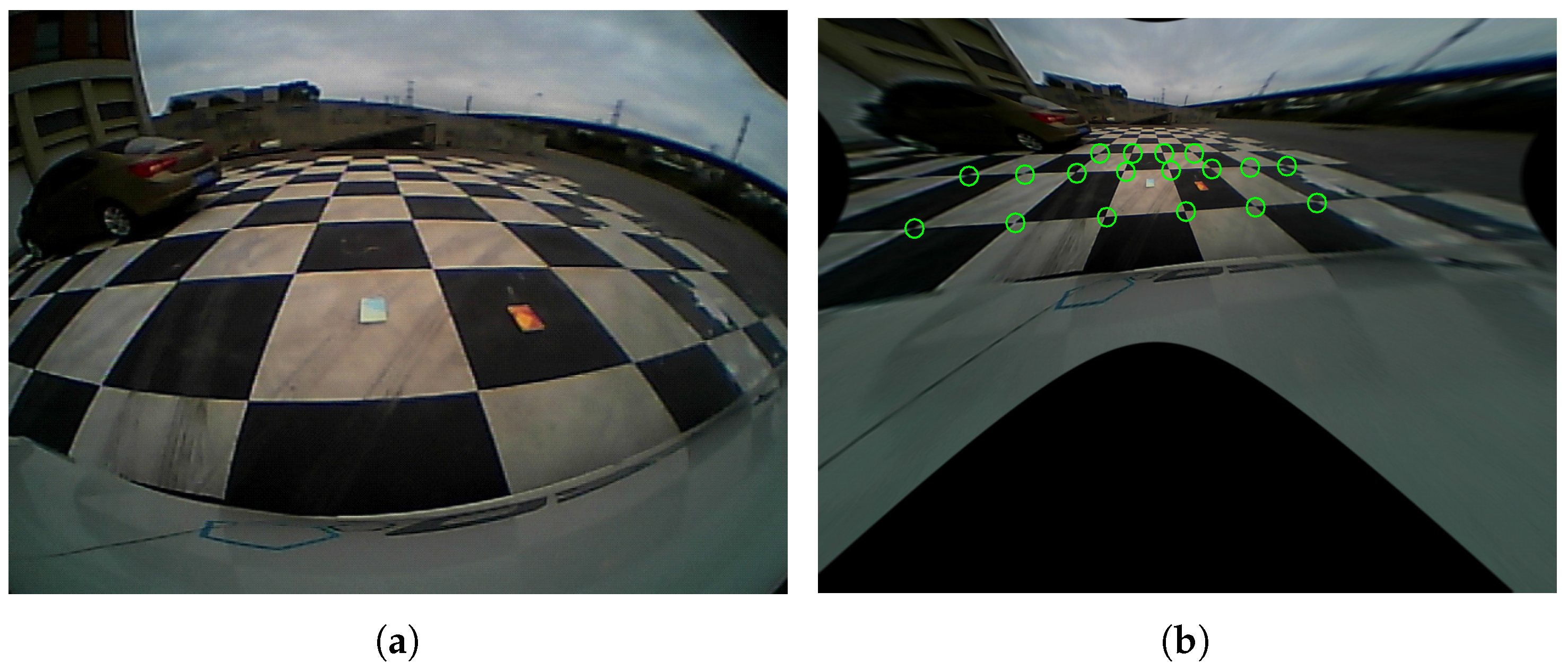

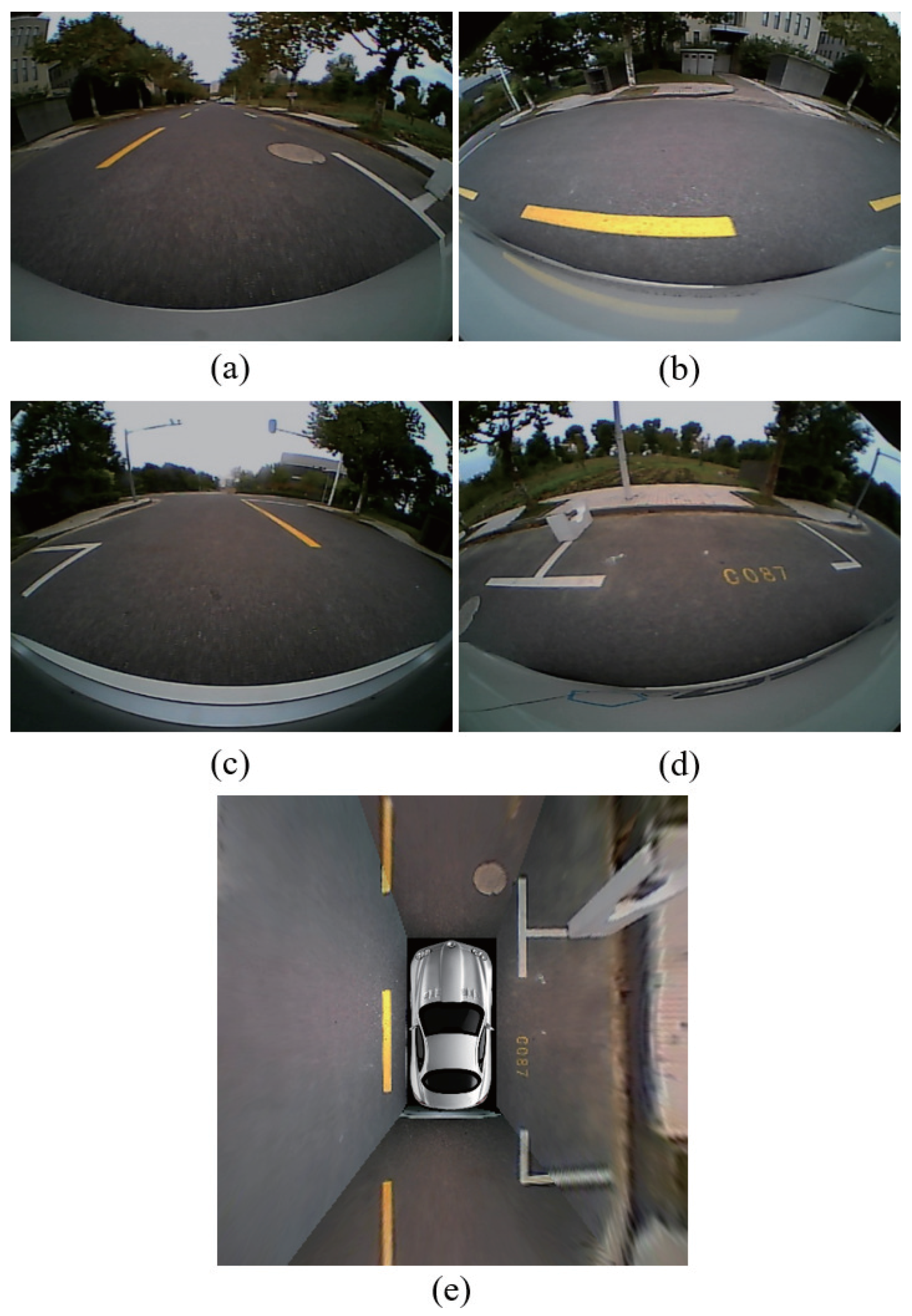

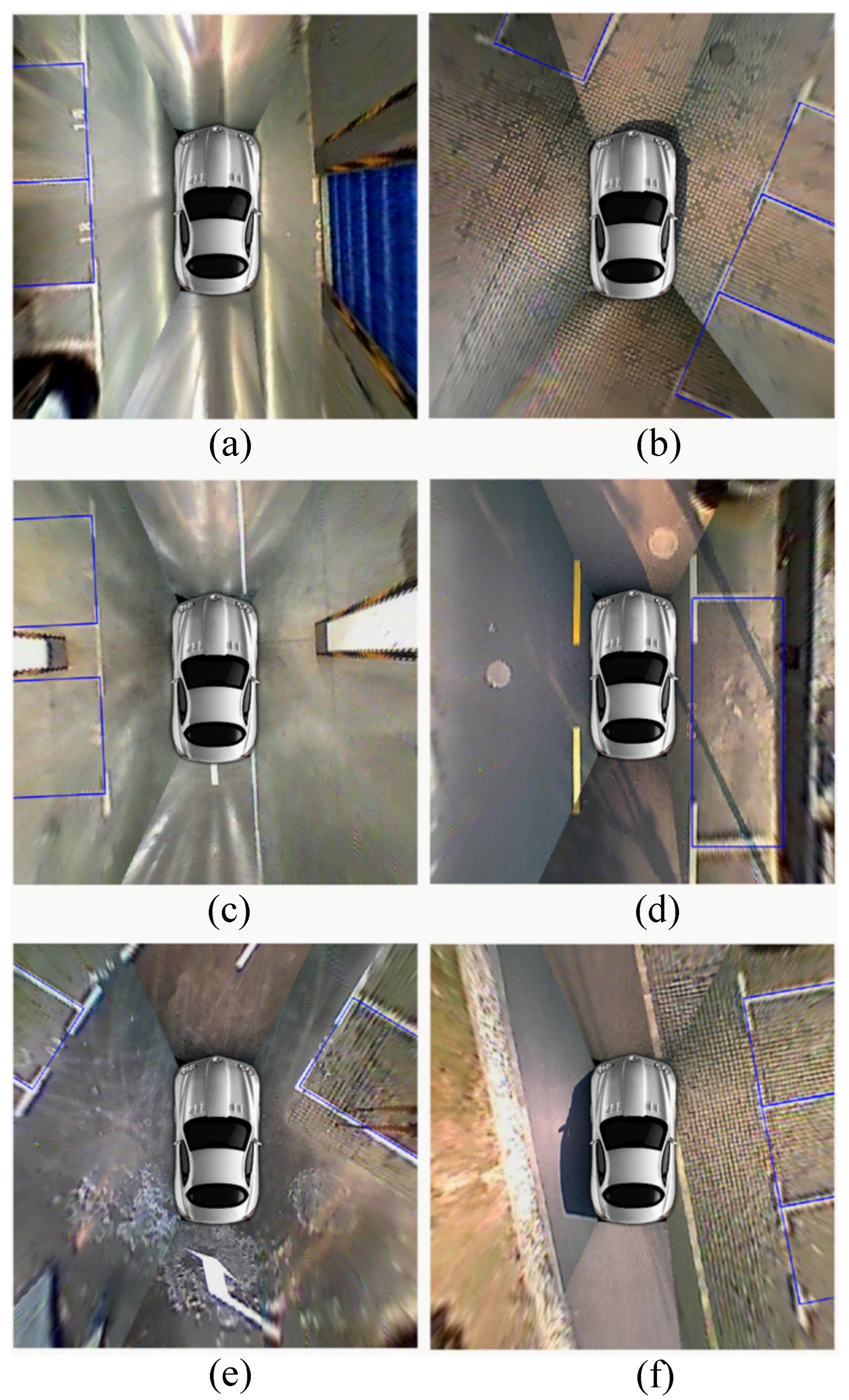

2. Surround-View Generation

3. A Learning-Based Approach for Detecting Parking-Slots

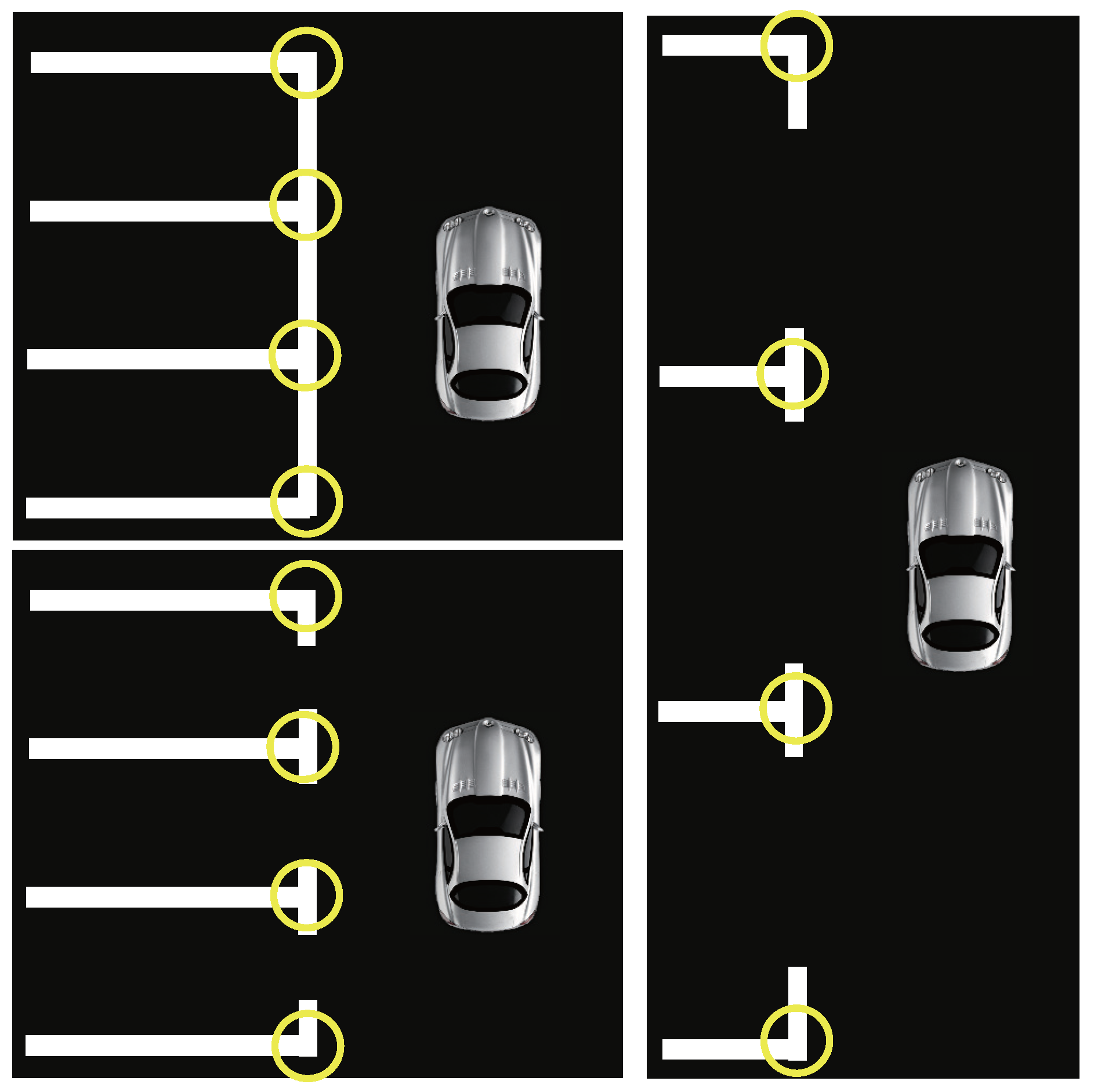

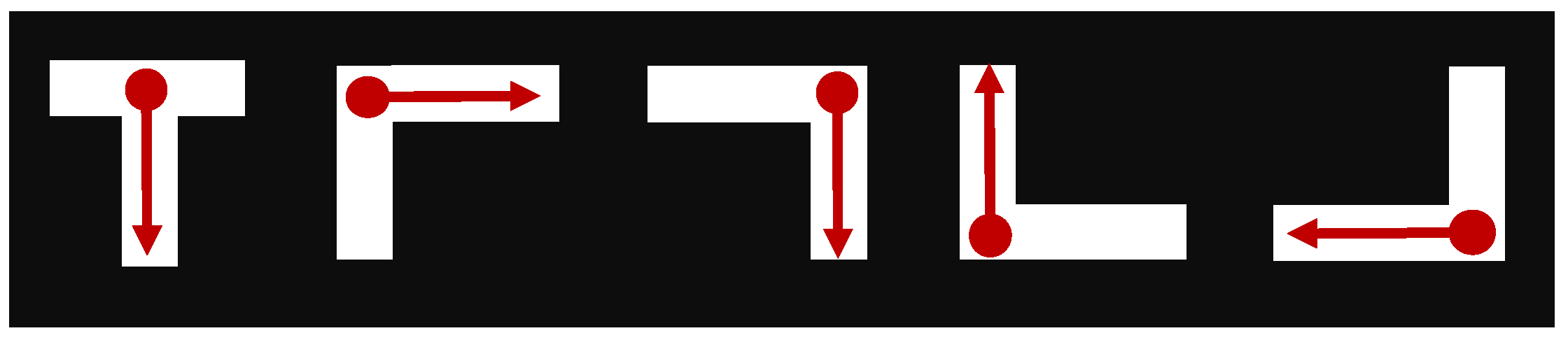

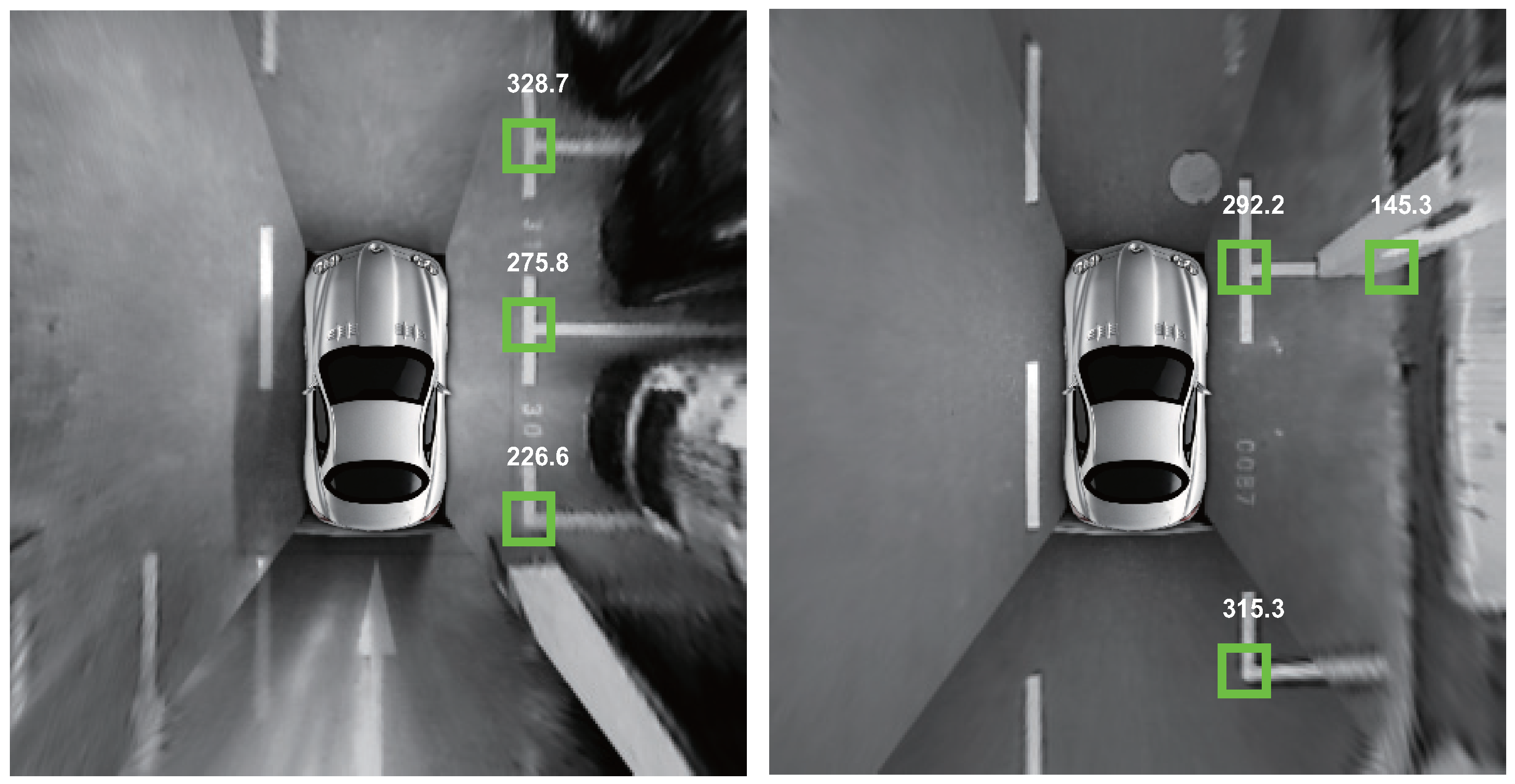

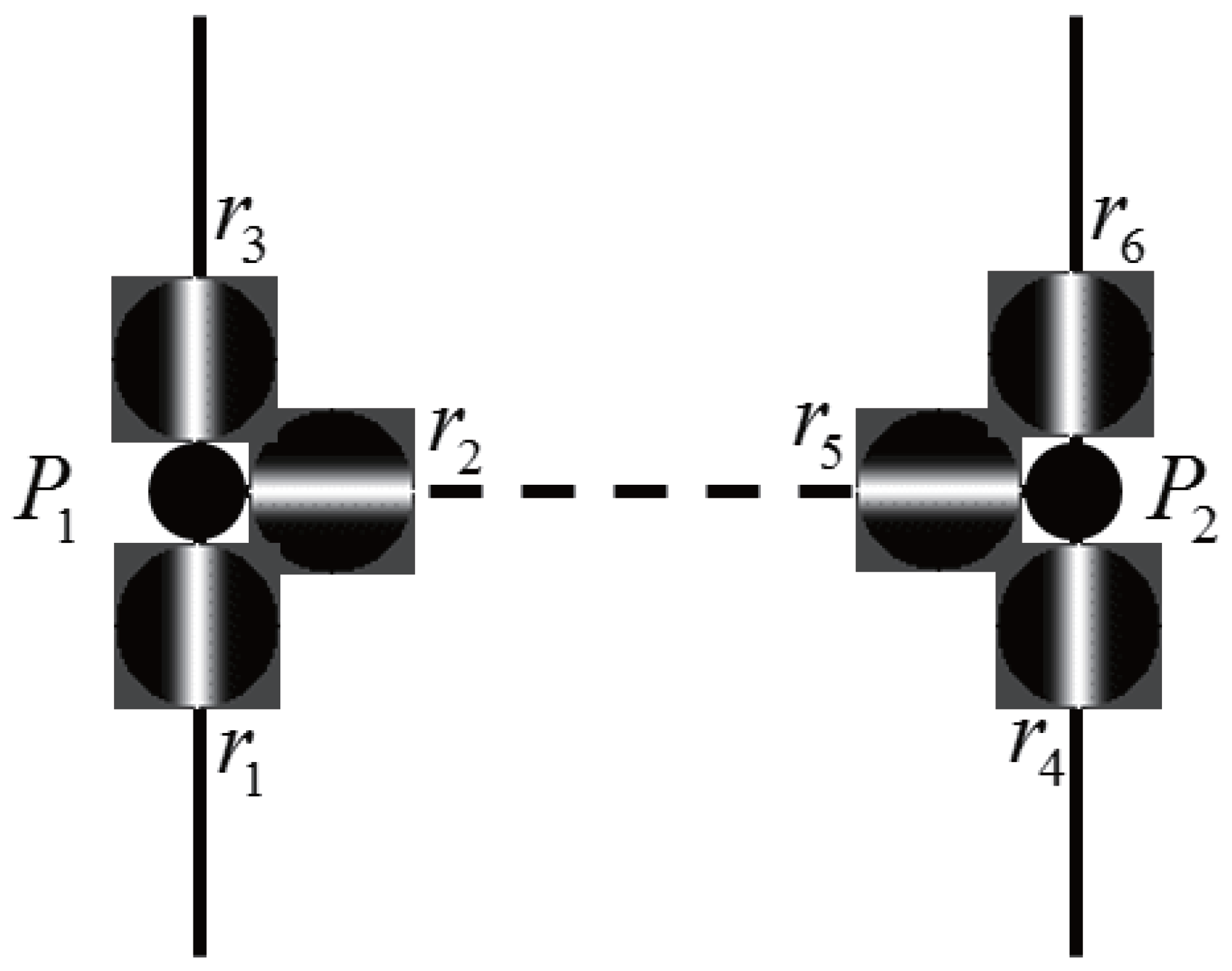

3.1. Marking-Point Detection

3.2. Parking-Slot Inference

Algorithm 1: Checking-Rules for Determining the Validity of for Being an Entrance-Line and the Parking-Slot Orientation
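The steps of Algorithm 1 are not reproduced in this text. The Python sketch below is purely illustrative. It shows one generic way a rule check of this kind could be structured, and it is not the paper's rule set. It assumes a pair of detected marking points is accepted as an entrance-line candidate only if two conditions hold:

- Their distance falls inside one of several allowed ranges.
- No other detected marking point lies close to the segment joining them.

The function names, the pixel distance ranges and the tolerance are hypothetical. The parking-slot orientation decision named in the algorithm's title is not modeled.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def point_to_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the line segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: a and b coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the infinite line, then clamp the parameter to [0, 1]
    # so the closest point stays on the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    cx, cy = ax + t * dx, ay + t * dy
    return math.hypot(px - cx, py - cy)


def is_valid_entrance_line(
    p1: Point,
    p2: Point,
    all_points: List[Point],
    dist_ranges: Sequence[Tuple[float, float]],
    tol: float,
) -> bool:
    """Hypothetical checking rules for a marking-point pair (p1, p2).

    Rule 1: |p1 p2| must lie in one of the allowed [lo, hi] ranges
            (e.g. one range per assumed slot type).
    Rule 2: no other marking point may lie within `tol` of segment p1-p2.
    """
    d = math.hypot(p2[0] - p1[0], p2[1] - p1[1])
    if not any(lo <= d <= hi for lo, hi in dist_ranges):
        return False
    for q in all_points:
        if q == p1 or q == p2:
            continue  # skip the pair's own endpoints
        if point_to_segment_distance(q, p1, p2) < tol:
            return False
    return True
```

Take the hypothetical ranges `[(120, 160), (260, 300)]` px with a 10 px tolerance. Under those values, the pair (0, 0) and (140, 0) passes both rules. The pair (0, 0) and (280, 0) is rejected because the point (140, 0) lies on the segment between them.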

4. Experimental Results

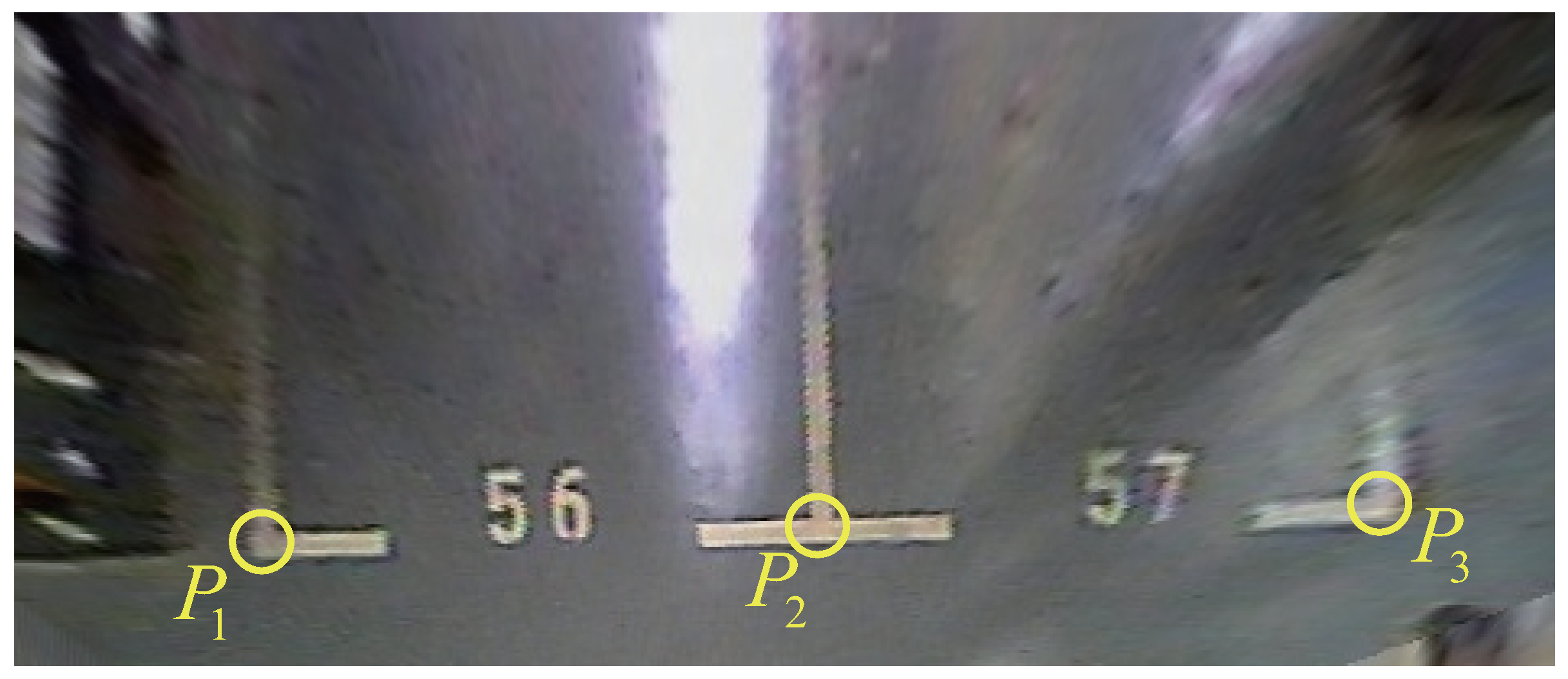

4.1. Benchmark Dataset

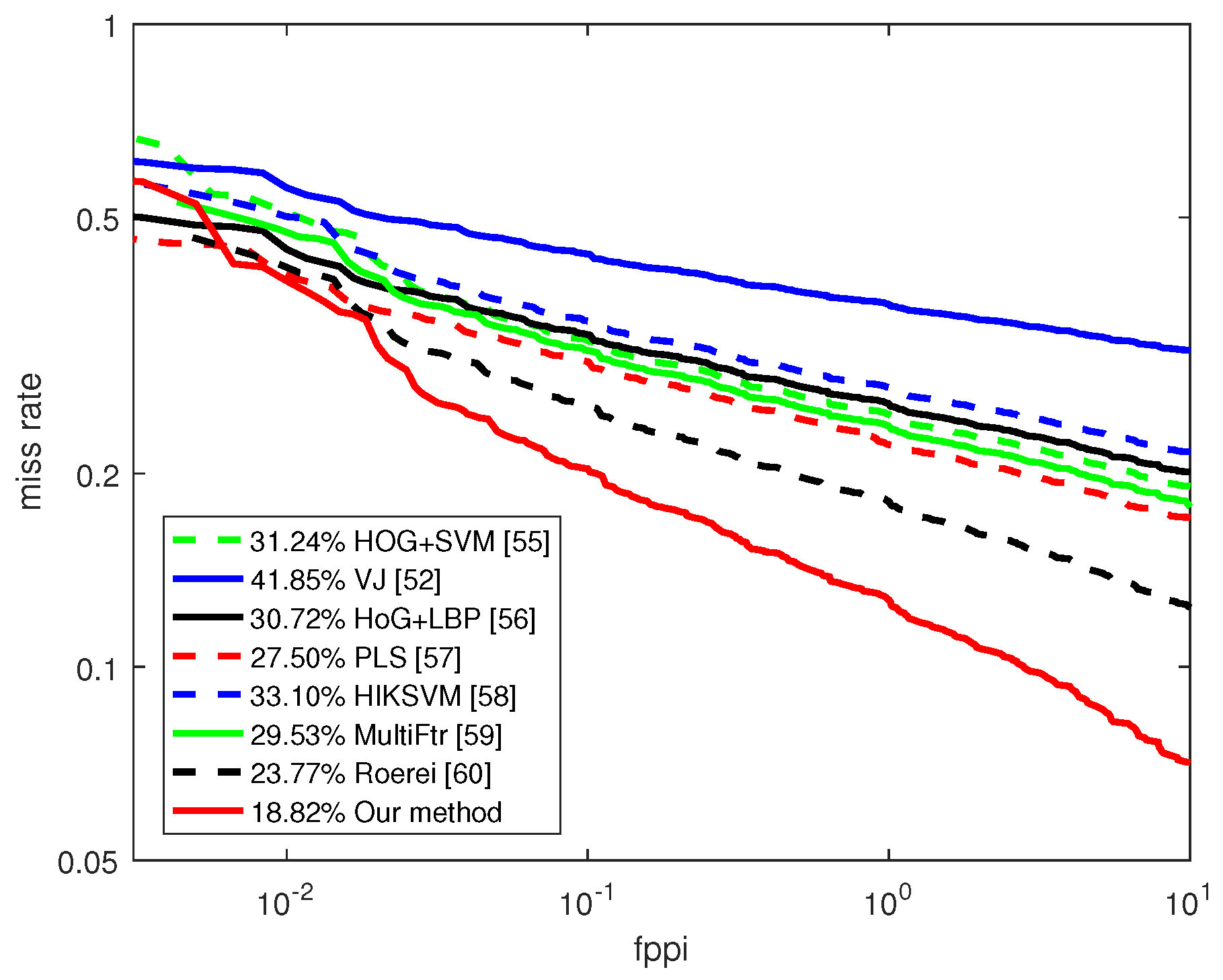

4.2. Evaluating the Performance of Marking-Point Detection

4.3. Evaluating the Performance of Parking-Slot Detection

4.4. Discussion about the Usability of Our Parking-Slot Detection System

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Li, X.; Huang, J.; Shen, Y.; Wang, D. Vision-Based Parking-Slot Detection: A Benchmark and A Learning-Based Approach. Symmetry 2018, 10, 64. https://doi.org/10.3390/sym10030064
