1. Introduction

Recently, personal authentication has become a vital and highly demanded technique that underpins many applications, such as security access systems, time attendance systems, and forensic science [1]. Propelled by the needs of these applications, personal identity authentication has become a topic of great concern. To solve such problems effectively, biometric-based methods, such as fingerprints [2,3], faces [4,5,6,7,8,9], irises [10,11,12,13] and palmprints [14], have drawn increasing attention because of their convenience, safety and high accuracy. Among these biometric identifiers, the palmprint and the palmvein have been investigated extensively owing to their uniqueness, non-intrusiveness and reliability.

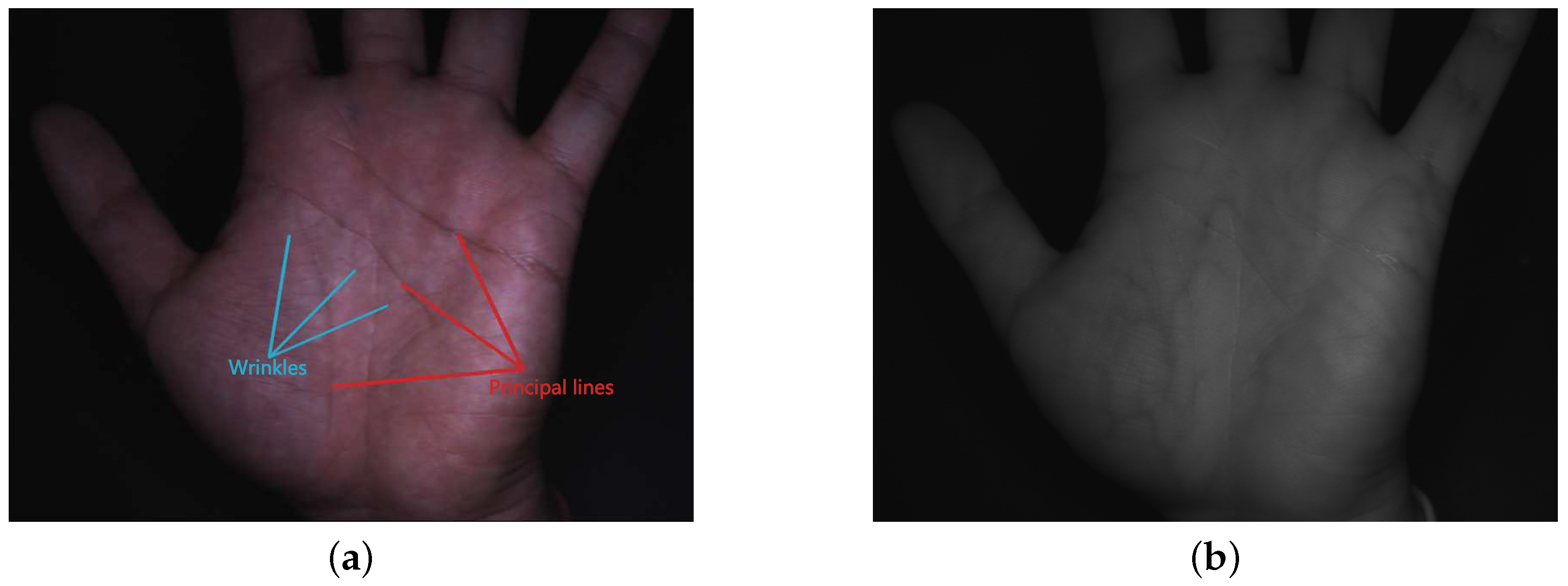

The palmprint (as illustrated in Figure 1a) is a skin pattern on the inner palm surface which comprises mainly two kinds of physiological features: the ridge and valley structures like the fingerprint and the discontinuities in the epidermal ridge patterns [15]. A palmprint image has many unique features that can be used for recognition, such as principal lines, wrinkles, valleys, ridges and minutiae points. As a biometric feature, it can offer highly distinctive and robust information. The palmvein (as illustrated in Figure 1b) refers to the dark line patterns of the palm that appear under near-infrared (NIR) illumination. Because it can only be observed in a living body, it can be exploited for anti-counterfeiting.

Researchers have investigated the problem of palmprint/palmvein recognition extensively in the last decades. The majority of methods in this field can be classified into three categories: line-like feature extraction, subspace feature learning and texture-based coding. However, their results are unsatisfactory and leave much room for improvement. Different from these hand-crafted methods, deep convolutional neural networks (DCNNs) can learn higher-level features from massive training samples via a deep architecture, and they can capture representations of task-specific knowledge. Considering this point, we propose a DCNN-based scheme for palmprint and palmvein recognition using CNNs, which can further extract the deep and valuable information from the input image. The effectiveness and efficiency of the proposed scheme have been validated through experiments performed on benchmark datasets.

1.1. Palmprint/Palmvein Recognition Methods

In this section, considering that palmprint and palmvein recognition often adopt similar solutions, we review some representative work in the field of palmprint recognition. The process of palmprint recognition usually consists of the following stages: image acquisition, region of interest (ROI) extraction, feature extraction, and feature classification (for the problem of identification) or distance measurement (for the problem of verification). Image acquisition requires a certain hardware and software foundation, and we introduce the palmvein acquisition device we developed in detail in Section 4. The key technology lies in the stage of feature extraction. The majority of these methods can be categorized into three classes: line-like feature extraction, subspace feature learning and texture-based coding. These three categories are not mutually exclusive and their combinations are also possible.

Palmprints usually have three principal lines: the heart line, the head line and the life line. These three kinds of lines change little over one’s lifetime. Their appearance and locations on the palm are the most significant physiological traits for palmprint-based personal authentication systems. Wrinkles are much thinner than principal lines and also much more irregular. Creases are minute features that spread over the whole palm, just as the ridges do in a fingerprint. Line-based methods either develop novel edge detectors or use off-the-shelf ones to find palm-lines [16,17,18]. Shu et al. [19] proposed a way to extract features based on principal lines, which uses 12 line detection operators to detect palmprint lines in all directions and approximates them with straight-line segments. Then, features of the palmprint, such as the end points, intercepts, and angles of the straight-line segments, are extracted. Wu et al. [20] used the Canny edge operator [21] to extract palm-lines, and then the directions of the edge points were fed into four membership functions representing four different directions. In their later work, Wu et al. [22] proposed a method that uses the derivative of a Gaussian to extract the lines of palmprint images. Boles et al. resorted to Sobel operators to build binary edge maps [23] and then utilized the Hough transform to obtain the parameters of the six lines with the largest densities in the accumulator array for matching. However, these principal-line-based feature extraction methods have proved to be insufficient because of their sparse nature and the possibility that different individuals have highly similar palm-lines.

Subspace-based approaches are sometimes referred to as appearance-based approaches. They typically resort to principal component analysis (PCA), linear discriminant analysis (LDA) and independent component analysis (ICA) [24,25,26]. These methods can capture the global characteristics of a palmprint image by projecting it onto the most discriminative dimensions. In [25], Lu et al. proposed the EigenPalm method for dimensionality reduction, which globally projects palmprint images to a lower dimensional space defined by PCA. Observing that PCA emphasizes the representation of palmprints rather than their discriminating information, Wu et al. [26] proposed the FisherPalm method, which applies LDA dimensionality reduction on the basis of PCA. The LDA method considers both the intra-class divergence and the inter-class divergence, and it calculates the optimal projection matrix by maximizing the inter-class divergence and minimizing the intra-class divergence. Besides, Jiang et al. [27] fused PCA and LDA features together, and Wang et al. [28] applied the Kernel Fisher discriminant analysis method. In general, subspace-based methods do not depend on any prior knowledge about palmprints. However, these methods have not been proven to be useful in representing palmprint images and demonstrate a low accuracy compared to the state-of-the-art techniques. The underlying cause is that subspaces learned from misaligned palmprints cannot generate an accurate representation for each individual.
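To make the projection step of the subspace methods concrete, the following is a minimal sketch of a PCA projection, assuming tiny feature vectors in place of full palmprint images and using power iteration to find only the leading principal axis. The function names (`mean_vector`, `covariance`, `leading_eigenvector`, `project`) and the toy data are illustrative assumptions and are not taken from the EigenPalm or FisherPalm papers.

```python
import math


def mean_vector(samples):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(samples)
    dim = len(samples[0])
    return [sum(s[j] for s in samples) / n for j in range(dim)]


def covariance(samples, mean):
    """Sample covariance matrix (normalized by n) as a list of lists."""
    n = len(samples)
    dim = len(mean)
    cov = [[0.0] * dim for _ in range(dim)]
    for s in samples:
        centered = [s[j] - mean[j] for j in range(dim)]
        for a in range(dim):
            for b in range(dim):
                cov[a][b] += centered[a] * centered[b] / n
    return cov


def leading_eigenvector(matrix, iterations=200):
    """Power iteration; assumes the start vector is not orthogonal
    to the leading eigenvector (true for the toy data below)."""
    dim = len(matrix)
    vec = [float(i + 1) for i in range(dim)]
    norm = math.sqrt(sum(v * v for v in vec))
    vec = [v / norm for v in vec]
    for _ in range(iterations):
        nxt = [sum(matrix[a][b] * vec[b] for b in range(dim)) for a in range(dim)]
        norm = math.sqrt(sum(v * v for v in nxt))
        if norm == 0.0:
            break
        vec = [v / norm for v in nxt]
    return vec


def project(sample, mean, axis):
    """Coordinate of a sample along one principal axis."""
    return sum((s - m) * a for s, m, a in zip(sample, mean, axis))


# Toy data lying roughly along the diagonal direction (1, 1).
samples = [(0.0, 0.0), (1.0, 1.1), (2.0, 1.9), (3.0, 3.0)]
mean = mean_vector(samples)
axis = leading_eigenvector(covariance(samples, mean))
coords = [project(s, mean, axis) for s in samples]
```

In a real system the same projection would use many axes and much higher-dimensional inputs; LDA would additionally need class labels, which this sketch does not cover.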

Different from the two kinds of methods mentioned above, texture-based coding methods can learn more stable features. Usually, such a method first filters the palmprint image and encodes the responses, and then the feature is stored in the form of bit codes. For matching, binary ‘AND’ or ‘XOR’ operations are used to calculate the similarity of two code maps. The most typical method belonging to this category is Kong et al.’s approach [29]. This method adopted a 2D Gabor filter to convolve the palmprint image and then encoded the real and imaginary responses. In addition, Kong et al. also investigated a series of methods to confirm the advantage of the texture-based coding approaches [30].
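The filter, encode, and XOR-match pipeline described above can be sketched as follows. This is a simplified illustration and not Kong et al.’s exact algorithm: it assumes a single filter orientation, quantizes the real and imaginary responses by their sign, and uses hypothetical parameter values (`size`, `sigma`, `theta`, `frequency`, `step`) chosen only for the toy example.

```python
import cmath
import math


def gabor_kernel(size, sigma, theta, frequency):
    """Complex 2D Gabor kernel (size must be odd) as a list of rows."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            phase = 2.0 * math.pi * frequency * (
                x * math.cos(theta) + y * math.sin(theta)
            )
            row.append(envelope * cmath.exp(1j * phase))
        kernel.append(row)
    return kernel


def filter_response(image, kernel, cy, cx):
    """Complex filter response centered at pixel (cy, cx)."""
    half = len(kernel) // 2
    total = 0j
    for ky, row in enumerate(kernel):
        for kx, weight in enumerate(row):
            total += weight * image[cy + ky - half][cx + kx - half]
    return total


def encode(image, kernel, step):
    """Two bits per sampled position: sign of real and imaginary parts."""
    half = len(kernel) // 2
    bits = []
    for cy in range(half, len(image) - half, step):
        for cx in range(half, len(image[0]) - half, step):
            response = filter_response(image, kernel, cy, cx)
            bits.append(1 if response.real >= 0 else 0)
            bits.append(1 if response.imag >= 0 else 0)
    return bits


def hamming_distance(code_a, code_b):
    """Fraction of differing bits, computed with XOR."""
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    differing = sum(a ^ b for a, b in zip(code_a, code_b))
    return differing / len(code_a)


# Toy 9 x 9 "image" with a diagonal stripe pattern.
image = [[(x + y) % 4 for x in range(9)] for y in range(9)]
kernel = gabor_kernel(size=5, sigma=2.0, theta=0.0, frequency=0.25)
code = encode(image, kernel, step=2)
```

A smaller normalized Hamming distance indicates more similar code maps; practical coding schemes typically also remove the filter’s DC component and handle masks and translations, which are omitted here.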

Besides, there are also some approaches that are difficult to categorize, such as [31,32], because they integrate several different processing methods to extract palmprint features.

1.2. Palmvein Benchmark Datasets Publicly Available

In the past decades, researchers have established several contactless palmvein benchmark datasets and made them publicly available. In [33], Kabacinski et al. collected a palmvein dataset (CIE vein dataset). Its images were taken from 100 hands in three separate sessions. The time interval between two acquisition sessions was at least one week. In each session, four palmvein images were captured from each hand. Volunteers were asked to put their hand on the device to cover the acquisition window and to align their fingers with the lines marked on the device. In total, there are 1200 (100 × 3 × 4) palmvein images in Kabacinski et al.’s dataset. The resolution of these palmvein images is 1280 × 960. Hao et al. [34] also collected a contactless palmvein dataset, whose images were captured from 200 palms in two separate sessions. The time interval between the first and second acquisition sessions was about one month. In each session, there were three samples. Each sample includes six palm images captured under six different spectrums: 460 nm, 630 nm, 700 nm, 850 nm, 940 nm, and white light. So, there are altogether 7200 (200 × 2 × 3 × 6) palm images in Hao et al.’s dataset. Among them, 4800 images are palmprint images (those taken under visible spectrums) and the other 2400 are palmvein images (those taken under IR spectrums). The resolution of these palmvein images is 768 × 576. Ferrer et al. [35] constructed a contactless palmvein dataset, which consists of 10 different acquisitions from 102 persons within two single sessions. The time interval between two acquisition sessions was at least one week. A total of 1020 images were taken from the users’ right hands. The resolution of these palmvein images is 640 × 480. In Section 5, information about these publicly available datasets, along with our newly established dataset, is summarized in Table 1.
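The image counts quoted for the three public datasets follow directly from the stated acquisition protocols, which the short check below reproduces. The helper name `total_images` is hypothetical, and the split of Hao et al.’s six spectrums into four visible and two IR bands is inferred from the 4800/2400 figures given above.

```python
def total_images(*factors):
    """Multiply the protocol factors (subjects, sessions, shots, ...)."""
    total = 1
    for factor in factors:
        total *= factor
    return total


# Kabacinski et al.: 100 hands x 3 sessions x 4 images per session.
cie_total = total_images(100, 3, 4)

# Hao et al.: 200 palms x 2 sessions x 3 samples x 6 spectrums.
hao_total = total_images(200, 2, 3, 6)
# Inferred split: 460 nm, 630 nm, 700 nm and white light counted as
# visible; 850 nm and 940 nm counted as IR.
hao_visible = total_images(200, 2, 3, 4)
hao_infrared = total_images(200, 2, 3, 2)

# Ferrer et al.: 102 persons x 10 acquisitions.
ferrer_total = total_images(102, 10)
```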

The remainder of this paper is organized as follows. Our motivations and contributions are given in Section 2. A DCNN-based palmprint/palmvein recognition scheme is presented in Section 3. We introduce our newly designed contactless palmvein acquisition device in Section 4. Section 5 presents the experimental results. Finally, our conclusions are presented in Section 6.

2. Our Motivations and Contributions

The literature survey shows that, for contactless palmprint and palmvein recognition, there is still considerable room for further study in at least two aspects.

Firstly, though many techniques have been proposed for extracting palmprint/palmvein features, nearly all of them are based on low-level features, such as principal lines and textures detected by hand-crafted algorithms. In fact, these features are generally unstable and unrepresentative. For example, the approach based on line-like features does not make full use of the palm’s discriminant information. The texture-based approach also has an inherent drawback in that it depends on the specific encoding method. Recently, with the development of deep learning, many fields have adopted deep models and made breakthrough progress. Compared with traditional models, deep models can learn richer and more expressive features. Since the 2012 ImageNet competition winning entry “AlexNet” proposed by Krizhevsky et al. [36], DCNN-based solutions have been successfully applied to a large variety of computer vision tasks, for example object detection, segmentation, human pose estimation, and video classification. In particular, DCNN-based models have also been explored in the field of biometrics, such as the models proposed for face recognition [6,7] and for iris recognition [12,13]. These are just a few of the applications in which DCNNs have been applied very successfully. Many ideas have been put forward for improving on AlexNet’s performance. Among them, two proposed recently are particularly prominent: residual connections, introduced by He et al. in [37], and the Inception architecture [38]. In [37], He et al. pointed out that residual connections are of paramount importance for training very deep models. In addition, there have been a series of updates to the Inception structure in [39,40,41]. In particular, in [41], Szegedy et al. combined the Inception structure with residual connections. In this paper, we modify Inception_ResNet_v1 and successfully apply it to the problem of palmprint/palmvein recognition.

Secondly, palmvein benchmark datasets are indispensable for researchers to devise highly efficient recognition algorithms. A good palmvein benchmark dataset should comprise many classes and a large number of samples collected from at least two separate sessions. Unfortunately, such a dataset is still absent for contactless palmvein recognition.

In this paper, we try to fill the aforementioned research gaps to some extent and our contributions made can be briefly summarized as follows.

- (1) A DCNN-based approach for contactless palmprint/palmvein recognition is proposed. It can extract more stable and representative features. Its superiority over the traditional methods has been corroborated in our experiments.

- (2) We have developed a novel device for capturing high-quality contactless palmvein images. With the developed device as the acquisition tool, a large-scale contactless palmvein dataset is established. Our dataset is larger in scale than all the existing benchmark datasets for contactless palmveins. The dataset is now publicly available at http://sse.tongji.edu.cn/linzhang/contactlesspalmvein/index.htm.

3. A DCNN-Based Approach for Palmprint/Palmvein Recognition

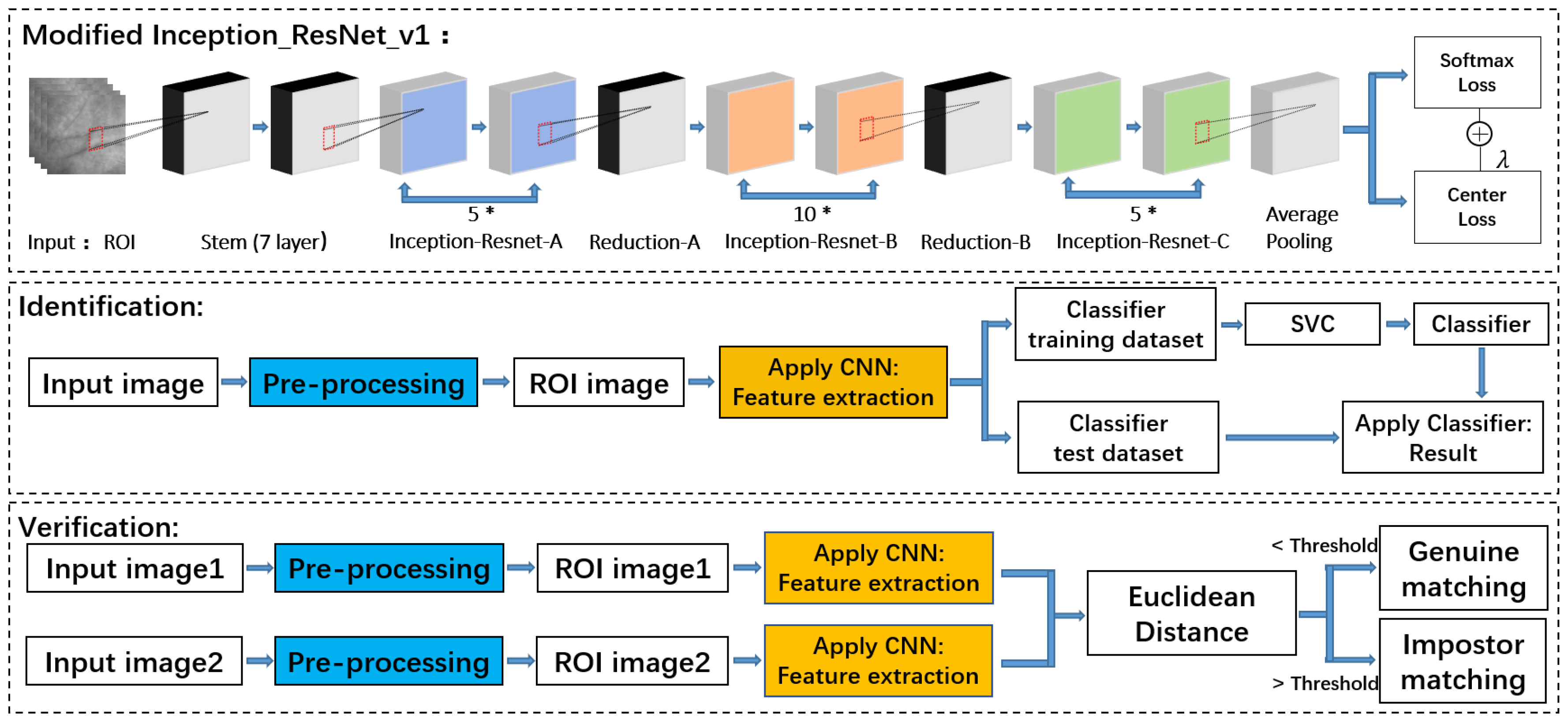

The proposed approach is a universal scheme that can be applied to both palmprint recognition and palmvein recognition. For convenience, we describe our approach using only palmprint images for illustration. Identical operations can be applied to palmvein images. The overview of our scheme is depicted in Figure 2. This scheme mainly includes three parts: our modified Inception_ResNet_v1, the identification approach, and the verification approach.

In this paper, we use a modified Inception_ResNet_v1 network. Specifically, we train the network model on part of the data and then use it as a feature extractor. More details about the network are presented in Section 3.2. Identification classifies an input image into one of a large number of identity classes, while verification determines whether a pair of images belongs to the same identity or not. For identification, we pre-process the dataset, extract the ROI, and then use the trained network model to extract the features. Next, we use half of the data to train an SVM classifier, and the other half is used for testing. For palmprint verification, we also pre-process the input palmprint image and use the ROI detection algorithm to extract the ROI of the palmprint image. Then, the trained modified Inception_ResNet_v1 is used to extract the feature vectors. Finally, the Euclidean distance between the two feature vectors is regarded as their matching distance. From this brief introduction, it can be seen that the scheme mainly involves three aspects: ROI extraction, the modified Inception_ResNet_v1, and identification/verification.

3.1. ROI Extraction

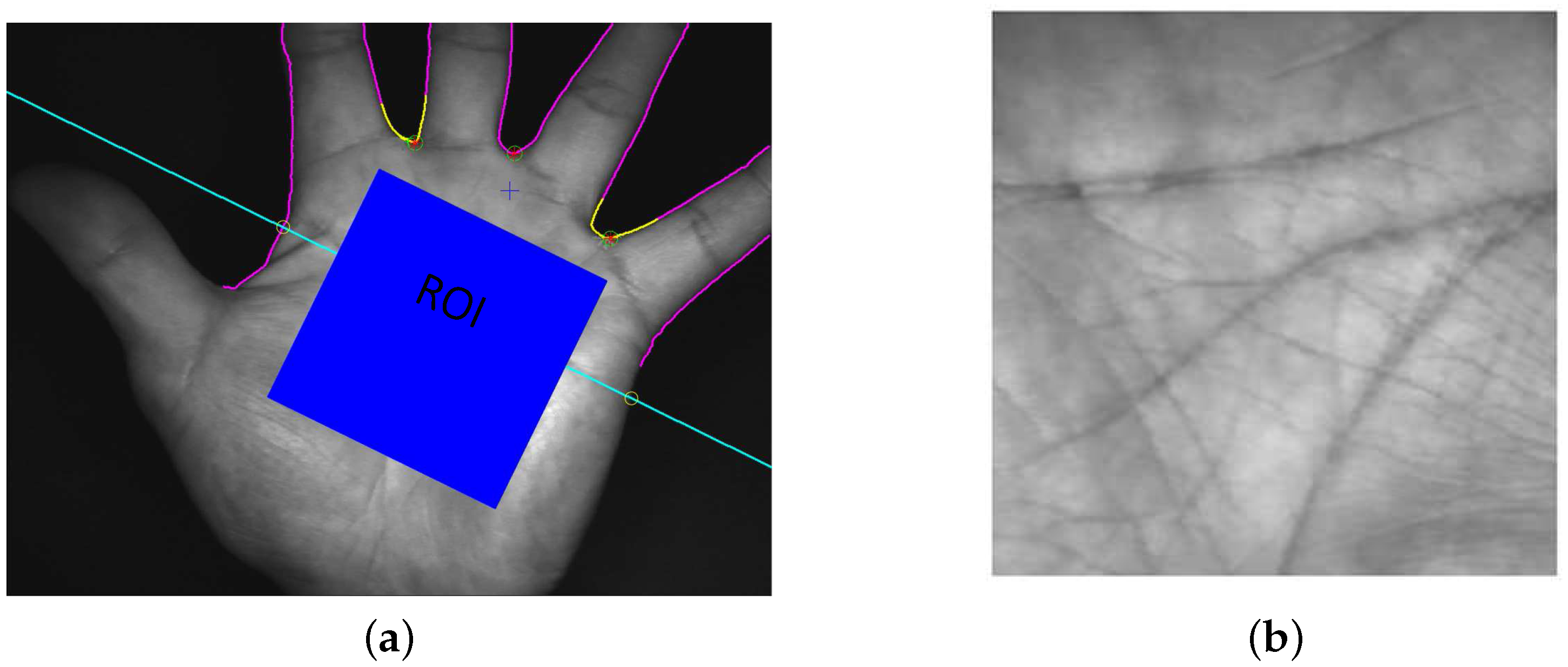

Because two palmprint images generally differ by a global geometric transformation, aligning palmprint images is necessary and critical. ROI extraction is used to align different palmprint images for feature extraction. Usually, ROI extraction algorithms set up a local coordinate system based on the key points between fingers. In this paper, for ROI extraction, we resort to the approach proposed in [42]. This algorithm is robust to the translation, rotation and scaling variations of contactless palmprint images. Figure 3a illustrates the key points detected on the palmprint image and Figure 3b shows the extracted ROI. From each original palmprint image, a 160 × 160 ROI sub-image is extracted. The later feature extraction and classification steps are performed on these ROIs.
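The idea of a key-point-based local coordinate system can be illustrated with the sketch below. It is a generic construction and not the algorithm of [42], whose details are not given here: it assumes that two finger-gap key points are already detected, and the function name `roi_corners` and the parameters `offset_ratio` and `side_ratio` are hypothetical. Because the square is defined relative to the key points, it moves, rotates and scales with the hand.

```python
import math


def roi_corners(p1, p2, offset_ratio=0.2, side_ratio=1.0):
    """Corners of a square ROI in a coordinate system whose x-axis runs
    from key point p1 to key point p2 (both given as (x, y) pixels)."""
    (x1, y1), (x2, y2) = p1, p2
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("key points must be distinct")
    ux, uy = dx / length, dy / length   # local x-axis (unit vector)
    vx, vy = -uy, ux                    # local y-axis, perpendicular to it
    mid_x, mid_y = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    side = side_ratio * length          # ROI side scales with the hand
    shift = offset_ratio * length + side / 2.0
    cx, cy = mid_x + vx * shift, mid_y + vy * shift
    half = side / 2.0
    corners = []
    for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
        corners.append((
            cx + sx * half * ux + sy * half * vx,
            cy + sx * half * uy + sy * half * vy,
        ))
    return corners


# Two key points 100 pixels apart on a horizontal line.
corners = roi_corners((0.0, 0.0), (100.0, 0.0))
```

The square delimited by these corners would then be resampled to a fixed size (160 × 160 in this paper) before feature extraction; which side of the key-point line the palm lies on depends on the hand orientation and image coordinate convention.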

3.2. Modified Inception_ResNet_v1

For feature extraction, we propose a new DCNN by modifying Inception_ResNet_v1. For analysis, we divide the network into two parts. The first half of the network is a seven-layer stem structure comprising traditional convolution and max-pooling operations. Table 2 gives a detailed list of parameter configurations for the stem structure. After the stem, the output is of the dimension 17 × 17 × 256. The second half of the network comprises three Inception-ResNet structures, two Reduction structures, and a pooling layer.

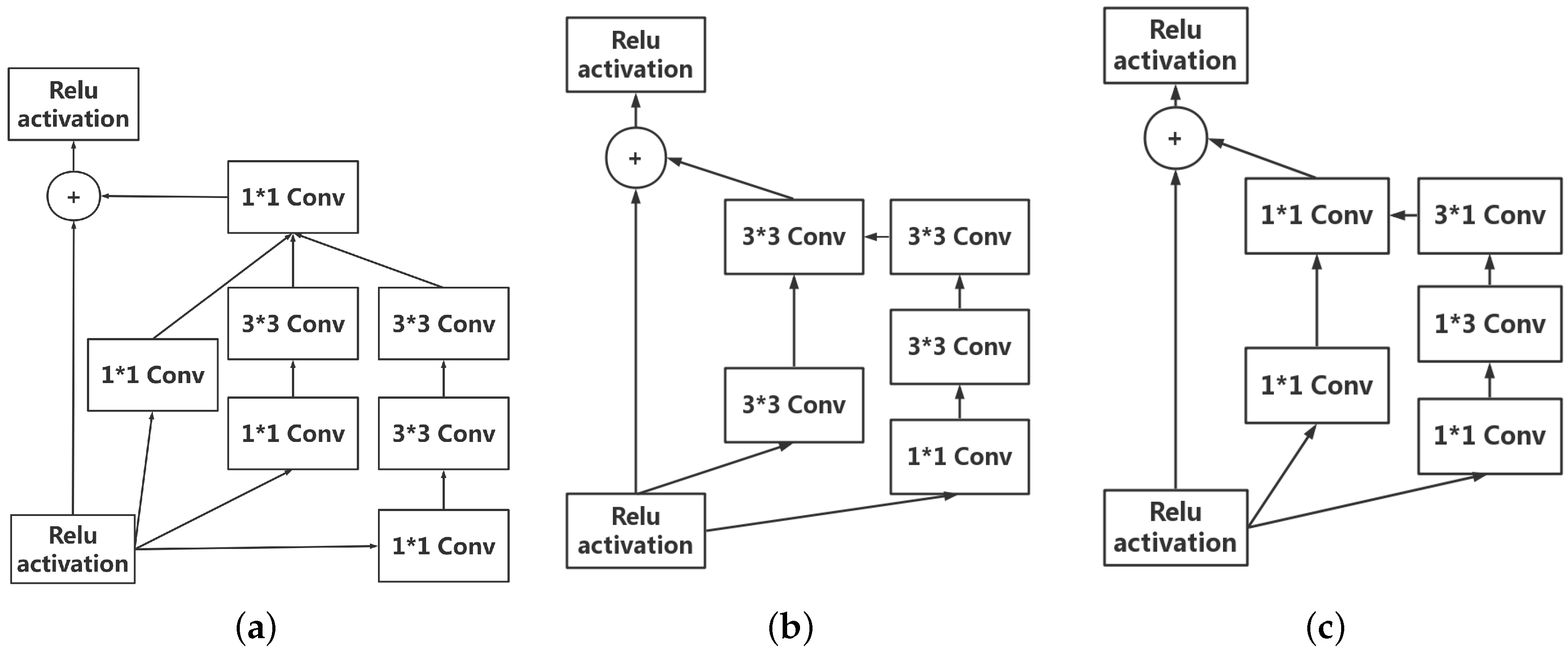

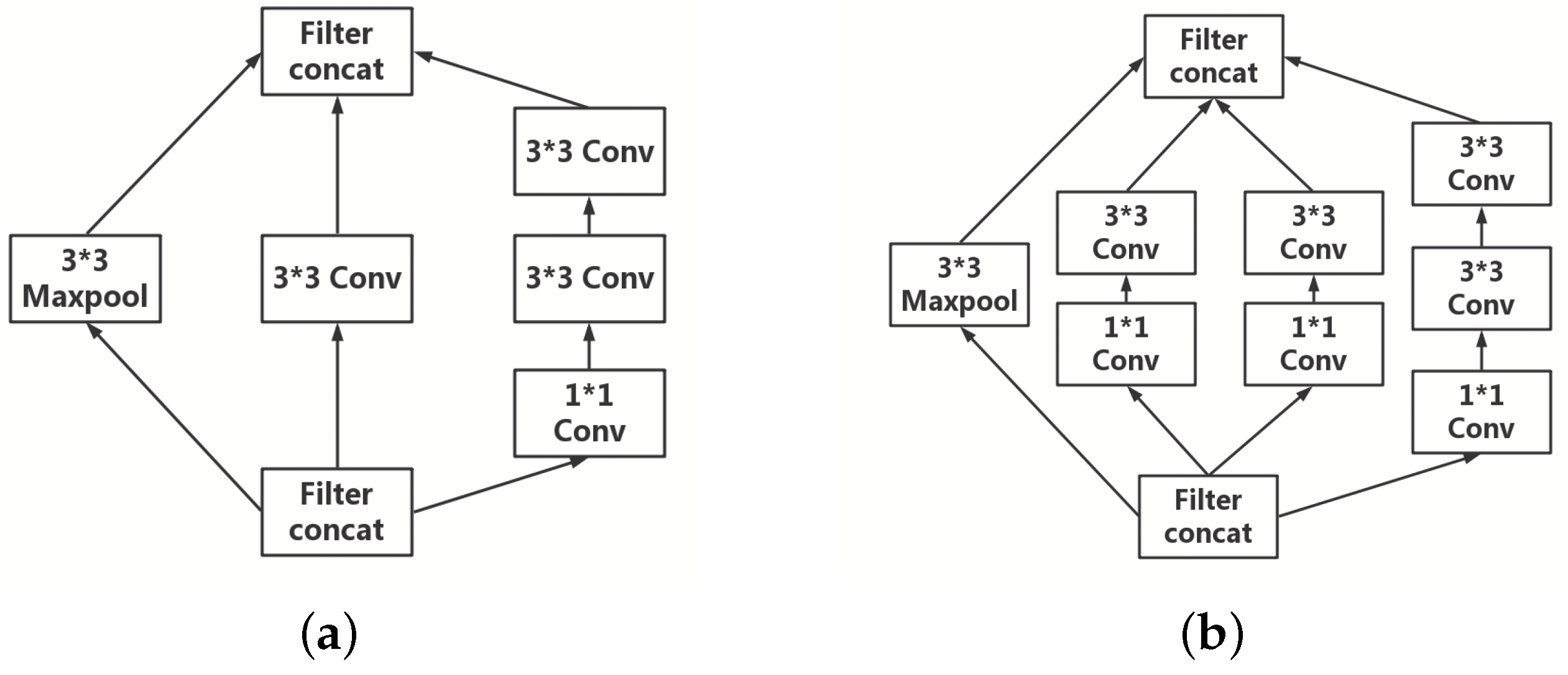

3.2.1. Inception-ResNet Modules

The Inception-ResNet modules combine the Inception architecture with residual connections. They are the most important modules used to deepen the network. Very deep CNNs have been central to the largest improvements in image recognition performance. In particular, the Inception architecture has shown satisfactory performance at relatively low computational cost compared to other deep structures. Meanwhile, the residual connection has also achieved great success in the image recognition field because it can speed up network convergence. So, combining the Inception architecture with residual connections is an extremely worthwhile direction. In this paper, for different stages in the network, the Inception architecture and residual connections are designed as three different modules: Inception_ResNet_A (as shown in Figure 4a), Inception_ResNet_B (as shown in Figure 4b) and Inception_ResNet_C (as shown in Figure 4c). All three modules use a residual connection, so the size of the output of each module is the same as its input size. For example, the feature map size after the stem is 17 × 17 × 256, which is also the input size for Inception_ResNet_A, and the feature map size of Inception_ResNet_A’s output is also 17 × 17 × 256.
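The reason a residual connection forces the output size to equal the input size is that the branch output is added element-wise to the input. The sketch below illustrates this on a flat feature vector rather than a full feature map. The function name `residual_add` and the `scale` factor applied to the branch are assumptions made for illustration and are not taken from the module definitions in Figure 4.

```python
def residual_add(inputs, branch, scale=1.0):
    """Return inputs + scale * branch(inputs), element by element.

    The addition is only defined when the branch preserves the
    dimension, which is why such a module cannot change the size."""
    outputs = branch(inputs)
    if len(outputs) != len(inputs):
        raise ValueError("branch must preserve the feature dimension")
    return [x + scale * y for x, y in zip(inputs, outputs)]


def doubling_branch(values):
    """Stand-in for the Inception branch: any size-preserving mapping."""
    return [2.0 * v for v in values]


features = [1.0, 2.0, 3.0]
result = residual_add(features, doubling_branch, scale=0.5)
identity = residual_add(features, lambda values: [0.0] * len(values))
```

When the branch outputs zeros the module reduces to the identity mapping, which is commonly cited as one reason residual networks are easier to optimize.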

3.2.2. Reduction Modules

As the number of Inception_ResNet modules increases, the network becomes more complex and its computational cost grows accordingly. To address this problem, it is important to use 1 × 1 convolutions to reduce the dimensionality. This can reduce the number of filters and the model complexity without losing the ability to represent features. Our modified Inception_ResNet_v1 network contains two kinds of Reduction modules, Reduction_A (as shown in Figure 5a) and Reduction_B (as shown in Figure 5b). The input dimension for the module Reduction_A is 17 × 17 × 256 and its output is of the size 8 × 8 × 896, which is also the dimension of module Inception_ResNet_B’s inputs. The input to module Reduction_B is of the dimension 8 × 8 × 896 and the output is of the dimension 3 × 3 × 1792, which is the dimension of module Inception_ResNet_C’s inputs. After Inception_ResNet_C, there is an average pooling layer, the output of which is a 1792-dimensional vector. Finally, after a fully connected layer, we get a 128-dimensional feature vector, which represents the input image being examined. Note that, in order to enhance the discriminative power of the learned features, we combine the Softmax loss and the Center loss as the total loss. The center loss learns a center for the features extracted from each class and penalizes the distances between features and their associated class centers (Wen et al. give a detailed description of the advantages of center loss and the necessity of using it in [43]). By adjusting the hyper parameters of the network, including Batch_Size (number of images to process in a batch), Weight_Decay (L2 weight regularization) and the weight of the center loss, we obtain the final model. This final model serves as a feature extractor for subsequent experiments.
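How the two loss terms combine can be sketched as follows. This is a minimal illustration under stated assumptions rather than the training code used in this work: the names `softmax_loss`, `center_loss`, `total_loss` and `center_weight` are hypothetical, both terms are averaged over the batch, and the class centers are simply passed in instead of being updated during training.

```python
import math


def softmax_loss(logits, label):
    """Cross-entropy of the softmax over logits for the true label,
    computed with the log-sum-exp trick for numerical stability."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]


def center_loss(feature, center):
    """Half the squared Euclidean distance to the class center."""
    return 0.5 * sum((f - c) ** 2 for f, c in zip(feature, center))


def total_loss(batch, centers, center_weight):
    """batch: list of (feature, logits, label) triples.
    centers: mapping from label to that class's center vector."""
    count = len(batch)
    soft = sum(softmax_loss(logits, label) for _, logits, label in batch) / count
    cent = sum(center_loss(feat, centers[label]) for feat, _, label in batch) / count
    return soft + center_weight * cent


# Toy batch with 2-D features and two classes.
centers = {0: [0.0, 0.0], 1: [4.0, 0.0]}
batch = [
    ([1.0, 0.0], [2.0, 0.0], 0),
    ([3.0, 0.0], [0.0, 2.0], 1),
]
loss_without_center = total_loss(batch, centers, center_weight=0.0)
loss_with_center = total_loss(batch, centers, center_weight=0.5)
```

A larger `center_weight` pulls features of the same class closer to their center, which reduces intra-class distances at the possible expense of the classification term.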

3.3. Identification and Verification

We utilize the trained modified Inception_ResNet_v1 model as our feature extractor. In this way, each ROI can be represented as a 128-dimensional feature vector.
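The feature map dimensions stated in Section 3.2 can be traced stage by stage, as in the sketch below. The channel counts and the 128-dimensional output are taken from the text, whereas the spatial arithmetic assumes that each Reduction module uses 3 × 3 operations with stride 2 and no padding; that assumption is not stated here (the module details are in Figure 5) but it reproduces the 17 → 8 → 3 sizes. The function names are hypothetical.

```python
def valid_output_size(size, kernel=3, stride=2):
    """Output length of an unpadded convolution or pooling along one axis."""
    return (size - kernel) // stride + 1


def trace_shapes(stem_shape=(17, 17, 256), reduced_channels=(896, 1792),
                 embedding_size=128):
    """List (stage name, output shape) pairs for the second half of the network."""
    height, width, channels = stem_shape
    trace = [("stem", (height, width, channels))]
    # Residual modules keep the shape unchanged.
    trace.append(("Inception_ResNet_A", (height, width, channels)))
    height, width = valid_output_size(height), valid_output_size(width)
    channels = reduced_channels[0]
    trace.append(("Reduction_A", (height, width, channels)))
    trace.append(("Inception_ResNet_B", (height, width, channels)))
    height, width = valid_output_size(height), valid_output_size(width)
    channels = reduced_channels[1]
    trace.append(("Reduction_B", (height, width, channels)))
    trace.append(("Inception_ResNet_C", (height, width, channels)))
    # Global average pooling collapses the spatial axes.
    trace.append(("average_pooling", (channels,)))
    trace.append(("fully_connected", (embedding_size,)))
    return trace


shapes = dict(trace_shapes())
```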

For identification, a given test sample needs to be classified into one of the classes in the training set. To solve such a problem, we train an SVM classifier which takes feature vectors extracted by modified Inception_ResNet_v1 as data samples.

For verification, we need to determine whether the two given images belong to the same palm. When solving such a problem, we regard the Euclidean distance between their feature vectors as the matching distance.
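The verification decision can be sketched as a distance computation followed by a threshold test. The threshold value and the function names `euclidean_distance` and `same_palm` are assumptions for illustration; in practice the threshold would be chosen from the genuine and impostor distance distributions. The SVM used for identification is not shown.

```python
import math


def euclidean_distance(feature_a, feature_b):
    """Matching distance between two feature vectors of equal length."""
    if len(feature_a) != len(feature_b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(feature_a, feature_b)))


def same_palm(feature_a, feature_b, threshold):
    """Accept the pair as genuine when the distance is within the threshold."""
    return euclidean_distance(feature_a, feature_b) <= threshold


# Toy 2-D vectors stand in for the 128-dimensional features.
distance = euclidean_distance([0.0, 0.0], [3.0, 4.0])
accepted = same_palm([0.0, 0.0], [3.0, 4.0], threshold=5.0)
rejected = same_palm([0.0, 0.0], [3.0, 4.0], threshold=4.9)
```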

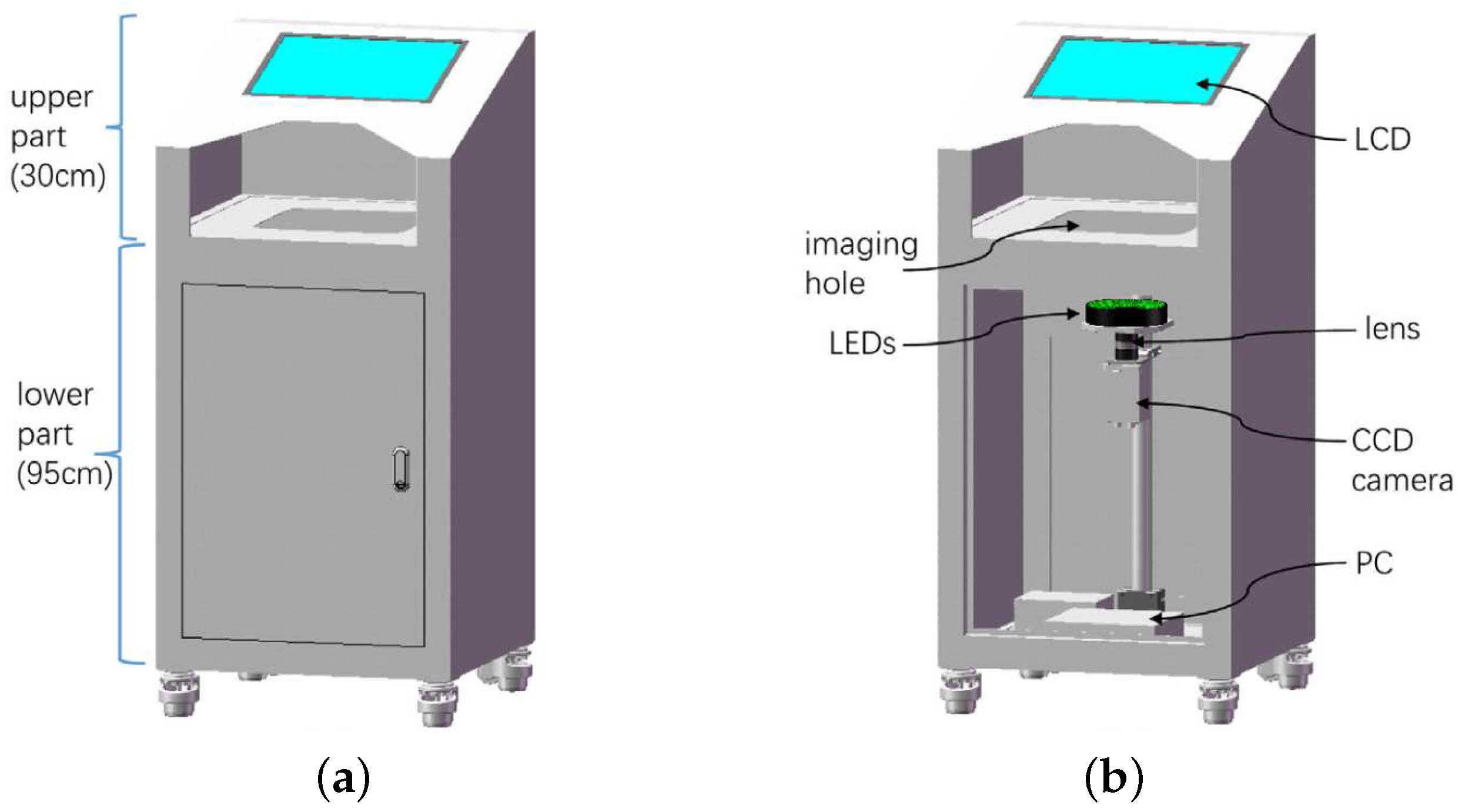

4. Contactless Palmvein Acquisition Device

Good tools are a prerequisite for the successful execution of a job. With the aim of studying the potential discriminating power of contactless palmvein images, a novel contactless palmvein acquisition device is designed and developed in this work. Figure 6a shows its 3D CAD model and Figure 6b shows its internal mechanical structure. The device consists of two parts. The lower part houses a CCD camera, a ring-shaped near-infrared LED light source, a lens, a power regulator, and an industrial computer. The top cover on the lower part of the housing has a square “imaging hole” of the size 230 mm × 230 mm centered on the optical axis of the lens. The upper part of the housing is wedge-shaped and a thin LCD with a touch screen is mounted on it. The device’s overall height is about 950 mm. For palmvein image collection, the subject needs to place their palm over the “imaging hole”. The subject can adjust the posture of the palm by observing the camera’s video stream on the LCD in real time. The intensity of the LED light can be modulated by its power regulator. We carefully adjusted the position and brightness of the LEDs to make the quality of the captured images as high as possible.

Compared to the existing palmvein image acquisition devices, our newly designed one has the following merits. First, it is user-friendly. Since our device is designed in accordance with ergonomics, the user will feel comfortable when using it. Second, because we carefully adjusted the parameters of the imaging sensor, the lens and the near-infrared LED, the captured palmvein images are of quite high quality. Third, the acquired palmvein image has a simple background since it is taken against the dark back surface of the LCD. A simple background simplifies the later data preprocessing steps and consequently can boost the system’s overall robustness. Using the developed device, a large-scale contactless palmvein image dataset has been established, whose details can be found in Section 5.1.

5. Experiments

5.1. A Large-Scale Palmvein Benchmark Dataset

With the self-developed palmvein image acquisition device, we first collected a large-scale contactless palmvein image dataset. Since the developed device is straightforward to use, the only instruction given to the subjects taking part in the data collection was to stretch their hands naturally so that the finger gaps could be observed on the screen. We collected contactless palmvein images from 300 volunteers, including 192 males and 108 females. All volunteers were from Tongji University, Shanghai, China. We collected images in two separate sessions. The average time interval between the first and second acquisition sessions was about two months. In each session, 10 palmvein images were captured from each palm of each volunteer, and in this way 6000 palmvein images were acquired from 600 palms. In total, there are 12,000 (300 × 2 × 10 × 2) high-quality contactless palmvein images in our dataset. In Figure 7, typical palmvein images from Kabacinski et al.’s dataset [33], Hao et al.’s dataset [34], and our dataset are shown.

Information about the publicly available datasets and our newly established one is summarized in Table 1. In Table 1, it can be seen that our dataset is the largest one in scale. In addition, the image quality of our dataset is better than that of the existing datasets. Hence, it can serve as a better benchmark for researchers to develop more advanced contactless palmvein recognition approaches.

5.2. Experiments for Palmprint Recognition

The implementation of our approach is based on TensorFlow with an NVIDIA GTX 980Ti GPU (NVIDIA, Santa Clara, CA, USA). The Tongji University Contactless Palmprint Dataset [42] used in our experiments is publicly available. The resolution of its images is 800 × 600. The ROIs have also been provided by [42]. In all, images of 600 palms (300 left palms and 300 right palms) were collected. There are altogether 12,000 contactless palmprint images.

We partitioned the palmprint dataset into two parts. The first part had 7200 palmprint images (360 palms, 20 palmprint images for each palm), and the second part had 4800 palmprint images (240 palms, 20 palmprint images for each palm). We used the data in the first part to train our modified Inception_ResNet_v1 network. We adjusted the hyper parameters of the network, including Batch_Size, Weight_Decay and the weight of the center loss, and obtained the final model by comparing the accuracy achieved with various hyper parameter settings. We found that increasing the weight of the center loss makes the model better for the verification problem, while its performance on the classification problem becomes worse. This phenomenon shows that adding center loss with a certain weight can reduce the intra-class distance of each class. For each identification method, the parameters were empirically tuned in a greedy manner, and the tuning criterion was that parameter configurations leading to a higher recognition rate would be preferred. In our experiment, in order to ensure satisfactory performance for both identification and verification, key hyper parameters are set as follows, Batch_Size = 24, Weight_Decay = 5 × 10, = 0. This final model serves as a feature extractor for our subsequent experiments of palmprint identification and verification.
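The partition described above is made by palm identity, so that no palm used to train the network appears in the held-out part. The sketch below reproduces the image counts under one simple assumption, namely that palms are assigned in sorted order of a hypothetical integer identifier; how the palms were actually assigned to the two parts is not stated.

```python
def split_by_palm(palm_ids, num_train_palms):
    """Split palm identifiers into disjoint training and held-out groups."""
    ordered = sorted(palm_ids)
    return ordered[:num_train_palms], ordered[num_train_palms:]


def image_count(palms, images_per_palm):
    """Number of images contributed by a group of palms."""
    return len(palms) * images_per_palm


# 600 palms with 20 images each (10 per session over two sessions).
train_palms, held_out_palms = split_by_palm(range(600), num_train_palms=360)
train_images = image_count(train_palms, 20)
held_out_images = image_count(held_out_palms, 20)
```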

(1) Palmprint identification: Identification is a process of comparing one image against many images in the registration dataset. We partitioned the second part of the data into two subsets, the training subset and the test subset. Each subset has 2400 palmprint images (240 palms, 10 palmprint images for each palm). We used the training subset to train an SVM classifier, and the test subset to test the classifier’s performance. Recognition accuracy and the “precision-recall” rate can be calculated to evaluate the efficacy of our method for the task of palmprint identification. We compare our result with the other state-of-the-art methods in this field and the results are reported in Table 3. Our approach can classify all the 2400 palmprint images correctly, which demonstrates that the features learned by our modified Inception_ResNet_v1 are highly representative.

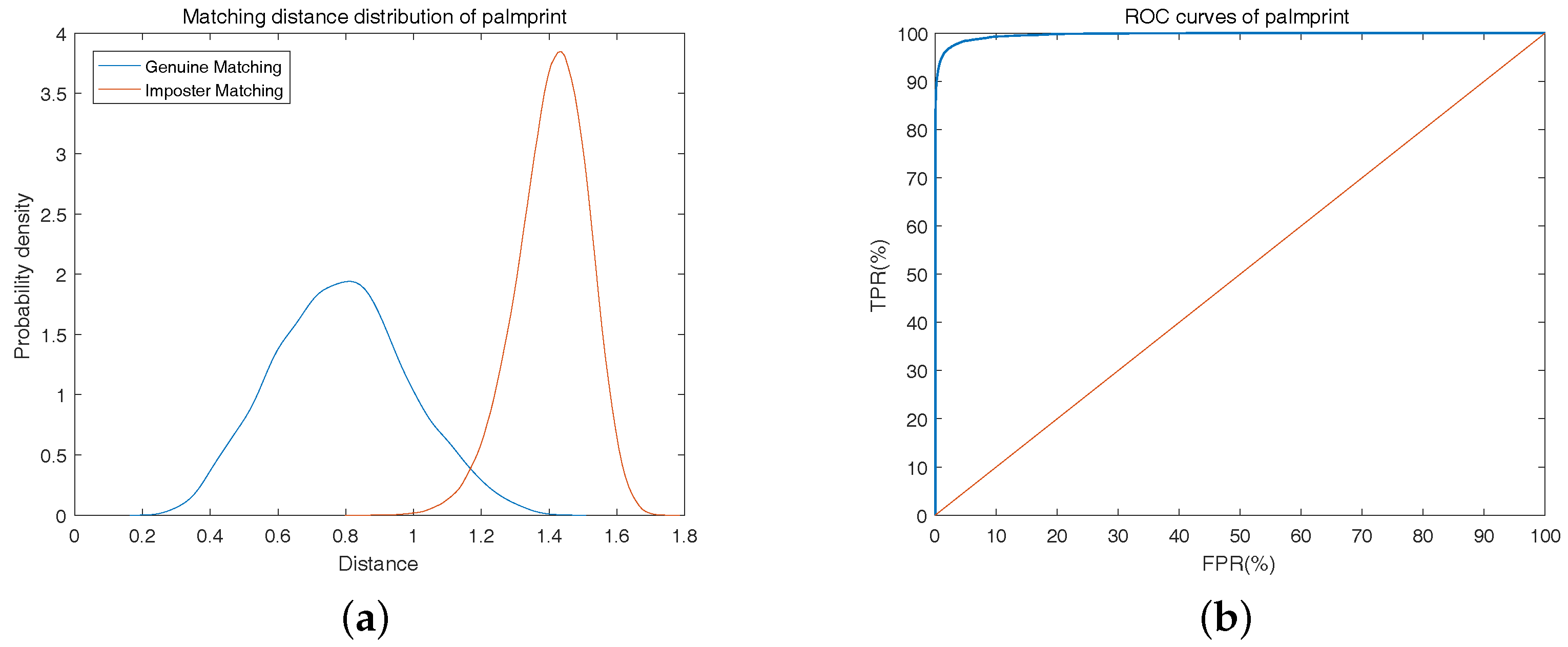

(2) Palmprint verification: Palmprint verification is a procedure of one-to-one matching, answering the question of whether the two palmprint images being compared are from the same palm or not. In this experiment, we used the test subset. There are a total of 2400 palmprint images (120 palms, 20 palmprint images for each palm) in this test subset. For genuine matching, the two palmprint images belong to the same palm and come from different sessions. In the actual implementation of the code, we use the flag ’F’ to represent the data of the first session and the flag ’S’ to represent the data of the second session. In total, there are 12,000 (120 × 10 × 10) genuine matchings in our verification experiment. For impostor matching, the two palmprint images belong to different palms and come from different sessions. There are altogether 1,428,000 (120 × 10 × 10 × (120 − 1)) impostor matchings in our experiment. The distance distribution of genuine and impostor matchings of our method is shown in Figure 8a, while Figure 8b shows the ROC (receiver operating characteristic) curve. In Figure 8b, the horizontal coordinate is the false positive rate (FPR), and the vertical coordinate is the true positive rate (TPR). The equal error rate (EER), the point where the false accept rate (FAR) is equal to the false reject rate (FRR), is utilized to evaluate the performance of verification algorithms. Table 4 shows the comparison of our method with the other competitors. It can be observed that our scheme achieves a lower EER than the methods employed for comparison.
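The matching counts and the EER criterion used in this experiment can be sketched as follows. The counting function mirrors the formulas in the text. The EER estimate is a simple threshold sweep over the observed distances that reports the error at the threshold where FAR and FRR are closest, which is one common approximation and not necessarily the procedure used to produce Table 4. The function names and toy distances are hypothetical.

```python
def count_matchings(num_palms, images_per_session):
    """Cross-session genuine and impostor matching counts."""
    genuine = num_palms * images_per_session * images_per_session
    impostor = genuine * (num_palms - 1)
    return genuine, impostor


def equal_error_rate(genuine_distances, impostor_distances):
    """Approximate EER: sweep thresholds and take the point where the
    false accept rate and false reject rate are closest."""
    thresholds = sorted(set(genuine_distances) | set(impostor_distances))
    best_gap, best_eer = None, None
    for threshold in thresholds:
        # Impostor pairs accepted because their distance is small enough.
        far = sum(d <= threshold for d in impostor_distances) / len(impostor_distances)
        # Genuine pairs rejected because their distance is too large.
        frr = sum(d > threshold for d in genuine_distances) / len(genuine_distances)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2.0
    return best_eer


genuine_count, impostor_count = count_matchings(120, 10)

# Toy distances: one genuine pair overlaps the impostor range.
toy_eer = equal_error_rate([0.1, 0.2, 0.3, 0.6], [0.4, 0.7, 0.8, 0.9])
separable_eer = equal_error_rate([0.1, 0.2], [0.8, 0.9])
```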

5.3. Experiments for Palmvein Recognition

In the experiments on palmvein identification and verification, we used the data we collected in Section 5.1. We partitioned the dataset into two parts: the first part comprised 7200 palmvein images (360 palms, 20 palmvein images for each palm), and the second part comprised 4800 palmvein images (240 palms, 20 palmvein images for each palm). In order to make the neural network model suitable for the palmvein problem, we need to fine-tune the model trained for palmprint recognition. For this purpose, we used the data in the first part to fine-tune the modified Inception_ResNet_v1. Key hyper parameters were set as follows: Batch_Size = 24, Weight_Decay = 5 × 10, = 0. This final model serves as the feature extractor for our subsequent experiments of palmvein identification and verification.

For the problem of palmvein identification, we partitioned the second part of the data into two subsets, the training subset and the test subset. Each subset had 2400 palmvein images (240 palms, 10 palmvein images for each palm). We used the training subset to train an SVM classifier and used the test subset to test the classifier’s performance. Comparisons with the other methods are shown in Table 5. It can be observed that our network correctly classifies all 2400 palmvein images.

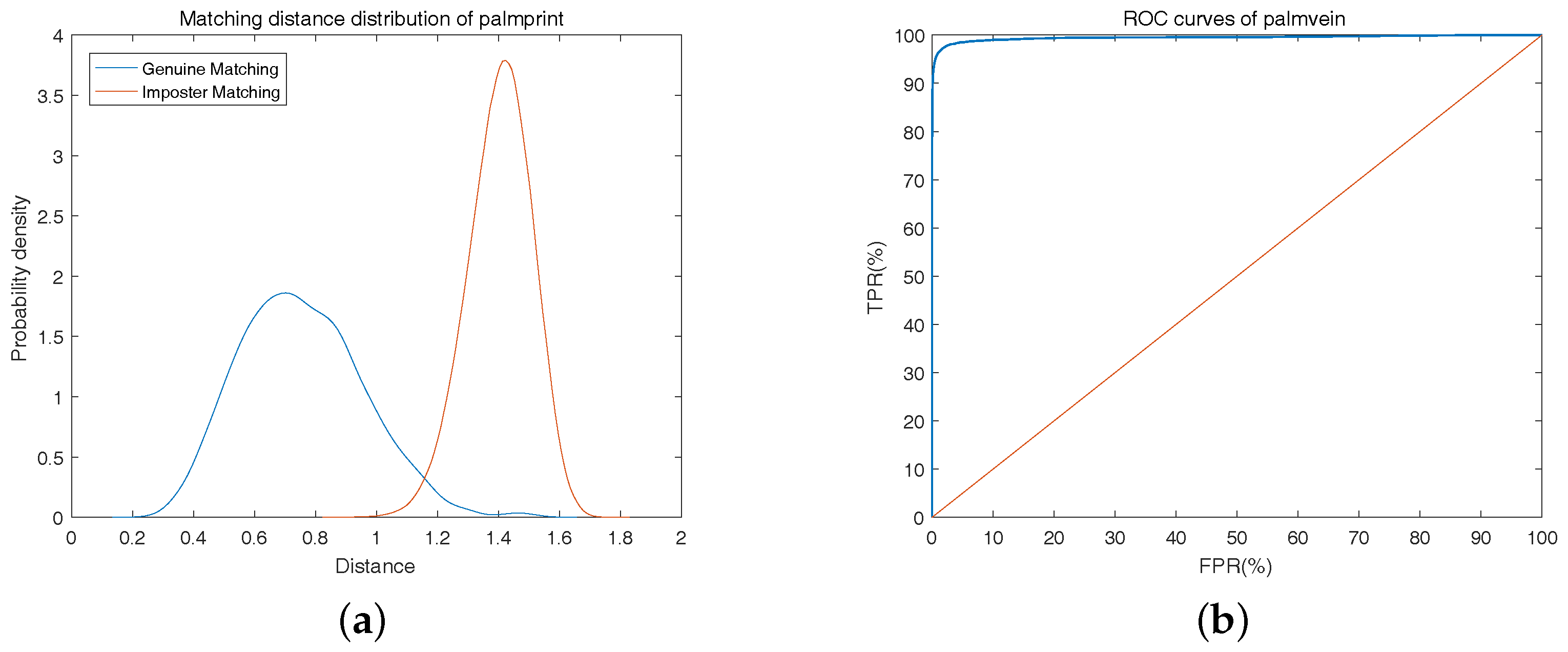

For the problem of verification, we used the palmvein data of the test set (comprising 2400 palmvein images, with 20 palmvein images for each palm). There are altogether 12,000 (120 × 10 × 10) genuine matchings and 1,428,000 (120 × 10 × 10 × (120 − 1)) impostor matchings. The distance distribution of genuine and impostor matchings for our method is illustrated in Figure 9a, while Figure 9b shows the ROC curve. Comparisons with the other competitors are shown in Table 6, from which it can be seen that a lower EER can be obtained by using our scheme.

6. Conclusions

In this paper, we studied the problem of palmprint/palmvein recognition and proposed a DCNN-based scheme, which is the first exploration of DCNNs in the contactless palmprint/palmvein recognition field. At the feature extraction stage, we applied the modified Inception_ResNet_v1 to extract deeper valuable features, which can be further used for identification or verification. For identification, we train an SVM classifier which takes feature vectors extracted by the modified Inception_ResNet_v1 network as data samples. For verification, we regard the Euclidean distance between the feature vectors of the two examined palms as their matching distance. In addition, we have designed and developed a new contactless palmvein acquisition device which is user-friendly and can collect high-quality palmvein images. Using this device, we established the largest publicly available palmvein dataset, which comprises 12,000 palmvein images acquired from 600 different palms. The superiority of our proposed palmprint/palmvein recognition scheme over the other competitors was corroborated by thorough experiments conducted on benchmark datasets. In the future, we will investigate combining palmprint and palmvein features to further improve the recognition accuracy.

Acknowledgments

This work was supported in part by the Natural Science Foundation of China under grant No. 61672380, in part by the Fundamental Research Funds for the Central Universities under Grant No. 2100219068, and in part by the Shanghai Automotive Industry Science and Technology Development Foundation under grant No. 1712.

Author Contributions

Ying Shen and Zaixi Cheng conceived and designed the experiments; Ying Shen performed the experiments; Ying Shen and Dongqing Wang analyzed the data; Zaixi Cheng contributed reagents/materials/analysis tools; Lin Zhang wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lu, C.; Fan, I.; Han, C.; Chang, J.; Fan, K.; Liao, H. Palmprint verification using gradient maps and support vector machines. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Hollywood, CA, USA, 3–6 December 2012; pp. 1–4.

- Maltoni, D.; Maio, D.; Jain, A.K.; Prabhakar, S. Handbook of Fingerprint Recognition; Springer: London, UK, 2009; ISBN 978-1-84882-253-5.

- Ratha, N.; Bolle, R. Automatic Fingerprint Recognition Systems; Springer: New York, NY, USA, 2007; ISBN 978-0-387-95593-3.

- Wechsler, H. Reliable Face Recognition Methods: System Design, Implementation and Evaluation; Springer Publishing Company: New York, NY, USA, 2010; ISBN 978-0-387-22372-8.

- Wright, J.; Yang, A.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 210–227.

- Taigman, Y.; Yang, M.; Ranzato, M.A.; Wolf, L. Deepface: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. Sphereface: Deep hypersphere embedding for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6738–6746.

- Sun, Y.; Wang, X.; Tang, X. Deeply learned face representations are sparse, selective, and robust. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2892–2900.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Arsalan, M.; Hong, H.; Naqvi, R.A.; Lee, M.B.; Kim, M.C.; Kim, D.S.; Kim, C.S.; Park, K.R. Deep learning-based iris segmentation for iris recognition in visible light environment. Symmetry 2017, 9, 263.

- Daugman, J. How iris recognition works. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 21–30.

- Gangwar, A.; Joshi, A. DeepIrisNet: Deep iris representation with applications in iris recognition and cross-sensor iris recognition. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 2301–2305.

- Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris recognition with off-the-shelf CNN features: A deep learning perspective. IEEE Access, in press.

- Zhang, Y.; Liu, H.; Geng, X.; Liu, L. Palmprint recognition based on multi-feature integration. In Proceedings of the IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference, Xi’an, China, 3–5 October 2016; pp. 992–995.

- Jain, A.K.; Feng, J. Latent palmprint matching. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 1032–1047.

- Li, F.; Leung, M.K.; Yu, X. Palmprint identification using Hausdorff distance. In Proceedings of the International Workshop on Biomedical Circuits and Systems, Singapore, 1–3 December 2004.

- Lu, G.; Zhang, D. Wavelet-based feature extraction for palmprint identification. In Proceedings of the Second International Conference on Image and Graphics, Hefei, China, 16–18 August 2002; pp. 780–784.

- Wu, X.; Wang, K.; Zhang, D. Fuzzy directional element energy feature (FDEEF) based palmprint identification. In Proceedings of the International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; pp. 95–98.

- Shu, W.; Zhang, D. Palmprint verification: An implementation of biometric technology. In Proceedings of the Fourteenth International Conference on Pattern Recognition, Brisbane, Australia, 16–20 August 1998; pp. 219–221.

- Wu, X.; Wang, K.; Zhang, D. A novel approach of palm-line extraction. In Proceedings of the Third International Conference on Image and Graphics, Hong Kong, China, 18–20 December 2004; pp. 230–233.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

- Wu, X.; Wang, K.; Zhang, D. Differential feature analysis for palmprint authentication. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Munster, Germany, 2–4 September 2009; pp. 125–132.

- Boles, W.W.; Chu, S. Personal identification using images of the human palm. In Proceedings of the Speech and Image Technologies for Computing and Telecommunications, Brisbane, Australia, 4 December 1997; pp. 295–298.

- Connie, T.; Jin, A.; Ong, M.G.K.; Ling, D. An automated palmprint recognition system. Image Vis. Comput. 2005, 23, 501–515.

- Lu, G.; Zhang, D.; Wang, K. Palmprint recognition using eigenpalms features. Pattern Recognit. Lett. 2003, 24, 1463–1467.

- Wu, X.; Zhang, D.; Wang, K. Fisherpalms based palmprint recognition. Pattern Recognit. Lett. 2003, 24, 2829–2838.

- Jiang, W.; Tao, J.; Wang, L. A novel palmprint recognition algorithm based on PCA & FLD. In Proceedings of the International Conference on Digital Telecommunications, Cote d’Azur, France, 29–31 August 2006; p. 28.

- Wang, Y.; Ruan, Q. Kernel Fisher discriminant analysis for palmprint recognition. In Proceedings of the 18th International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006; pp. 457–460.

- Kong, W.; Zhang, D. Palmprint texture analysis based on low-resolution images for personal authentication. In Proceedings of the 16th International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; pp. 807–810.

- Kong, A.; Zhang, D.; Kamel, M. Palmprint identification using feature-level fusion. Pattern Recognit. 2006, 39, 478–487.

- Chen, G.; Bui, T.D.; Krzyzak, A. Palmprint classification using dual-tree complex wavelets. In Proceedings of the International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006; pp. 2645–2648.

- Chen, J.; Moon, Y.S.; Yeung, H.W. Palmprint authentication using time series. In Proceedings of the Fifth International Conference on Audio- and Video-based Biometric Person Authentication, Hilton Rye Town, NY, USA, 20–22 July 2005; pp. 376–385.

- Kabacinski, R.; Kowalski, M. Vein pattern database and benchmark results. Electron. Lett. 2011, 47, 1127–1128.

- Hao, Y.; Sun, Z.; Tan, T.; Ren, C. Multispectral palm image fusion for accurate contact-free palmprint recognition. In Proceedings of the 15th IEEE International Conference on Image Processing, San Diego, CA, USA, 12–15 October 2008; pp. 281–284.

- Ferrer, M.A.; Morales, A.; Ortega, L. Infrared hand dorsum images for identification. Electron. Lett. 2009, 45, 306–308.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

- Zhang, L.; Li, L.; Yang, A.; Shen, Y.; Yang, M. Towards contactless palmprint recognition: A novel device, a new benchmark, and a collaborative representation based identification approach. Pattern Recognit. 2017, 69, 199–212.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 499–515.

- Liang, Q.; Zhang, L.; Li, H.; Lu, J. Palmprint recognition based on image sets. In Proceedings of the International Conference on Intelligent Computing, Fuzhou, China, 20–23 August 2015; pp. 305–315.

- Li, W.; Zhang, D.; Xu, Z. Palmprint identification by Fourier transform. Int. J. Pattern Recognit. Artif. Intell. 2002, 16, 417–432.

- Pang, Y.; Connie, T.; Jin, A.; Ling, D. Palmprint authentication with Zernike moment invariants. In Proceedings of the 3rd IEEE International Symposium on Signal Processing and Information Technology, Darmstadt, Germany, 17 December 2003; pp. 199–202.

- Wu, X.; Wang, K.; Zhang, D. Palmprint recognition using directional line energy feature. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 26 August 2004; pp. 475–478.

- Li, Y.; Wang, K.; Zhang, D. Palmprint recognition based on translation invariant Zernike moments and modular neural network. In Proceedings of the International Symposium on Neural Networks, Chongqing, China, 30 May–1 June 2005; pp. 177–182.

- Mirmohamadsadeghi, L.; Drygajlo, A. Palm vein recognition with local binary patterns and local derivative patterns. In Proceedings of the 2011 International Joint Conference on Biometrics, Washington, DC, USA, 11–13 October 2011; pp. 1–6.

- Zhang, Y.; Li, Q.; You, J.; Bhattacharya, P. Palm vein extraction and matching for personal authentication. In Proceedings of the 9th International Conference on Advances in Visual Information Systems, Shanghai, China, 28–29 June 2007; pp. 154–164.

- Lee, J. A novel biometric system based on palm vein image. Pattern Recognit. Lett. 2012, 33, 1520–1528.

- Pan, M.; Kang, W. Palm vein recognition based on three local invariant feature extraction algorithms. In Proceedings of the Chinese Conference on Biometric Recognition, Beijing, China, 3–4 December 2011; pp. 116–124.

- Kang, W.; Wu, Q. Contactless palm vein recognition using a mutual foreground-based local binary pattern. IEEE Trans. Inf. Forensics Secur. 2014, 9, 1974–1985.

Figure 1.

(a) is a sample palmprint image while (b) is a sample palmvein image.

Figure 2.

The overview of the structure. The top row is the structure used for training modified Inception_ResNet_v1. The input of our network is the ROI of the palmprint image. A stem (comprising a seven-layer network structure, six convolutions and one pooling operation) is used to extract shallow features. Then, we use three Inception modules and two Reduction modules to deepen the network. Finally, we join the center loss with the softmax loss to make the features learned by the network more representative. The middle row is the flowchart for palmprint identification. We first extract the ROI. An SVM (Support Vector Machine) classifier is applied in the final classification phase due to its efficacy. The third row is the flowchart for palmprint verification. Firstly, we need to extract the ROIs from the two palmprint images. Then, we extract their features using the trained network. Finally, by calculating the matching distance between the two feature vectors, the decision can be made.

Figure 3.

Illustration of ROI extraction. (a) The key points detected on the palmprint; (b) The extracted ROI.

Figure 4.

(a) is the schema for Inception_ResNet_A module of , whose input and output dimensions are both 17 × 17 × 256; (b) is the schema for Inception_ResNet_B module of , whose input and output dimensions are both 8 × 8 × 896; (c) is the schema for Inception_ResNet_C module of , whose input and output dimensions are both 3 × 3 × 1792.

Figure 5.

(a) is the schema for the Reduction_A module, whose input and output dimensions are 17 × 17 × 256 and 8 × 8 × 896, respectively; (b) is the schema for the Reduction_B module, whose input and output dimensions are 8 × 8 × 896 and 3 × 3 × 1792, respectively.

Figure 6.

(a) is the 3D CAD model of our contactless palmvein acquisition device and (b) shows its internal structure.

Figure 7.

The top row shows exemplary images in the dataset of Kabacinski et al. [33]. The middle row shows exemplary images in the dataset of Hao et al. [34]. The third row shows exemplary images in the dataset we collected.

Figure 8.

(a,b) are the matching distance distribution and the ROC curve, respectively, of our proposed method when being applied for palmprint verification.

Figure 9.

(a,b) are the matching distance distribution and the ROC curve, respectively, of our proposed method when being applied for palmvein verification.

Table 1.

Contactless Palmvein Benchmark Datasets.

| Datasets | Number of Palms | Number of Sessions | Number of Images | Resolution | Time Interval of Acquisition |
|---|---|---|---|---|---|
| Kabacinski et al. [33] | 100 | 3 | 1200 | 1280 × 960 | One week |
| Hao et al. [34] | 200 | 2 | 2400 | 768 × 576 | One month |
| Ferrer et al. [35] | 102 | 1 | 1020 | 640 × 480 | One week |
| Our dataset | 600 | 2 | 12,000 | 800 × 600 | Two months |

Table 2.

Configuration of the stem used in the modified Inception_ResNet_v1 network.

| Type of Layer | Number of Filters | Feature Map Size | Kernel Size | Stride | Padding |
|---|---|---|---|---|---|
| Image input layer | - | 160 × 160 × 1 | - | - | - |
| Conv-1 | 32 | 79 × 79 × 32 | 3 × 3 | 2 × 2 | 0 × 0 |
| Conv-2 | 32 | 77 × 77 × 32 | 3 × 3 | 1 × 1 | 0 × 0 |
| Conv-3 | 64 | 77 × 77 × 64 | 3 × 3 | 1 × 1 | 1 × 1 |
| Max-pool | - | 38 × 38 × 64 | 3 × 3 | 2 × 2 | 0 × 0 |
| Conv-4 | 80 | 38 × 38 × 80 | 1 × 1 | 1 × 1 | 0 × 0 |
| Conv-5 | 192 | 36 × 36 × 192 | 3 × 3 | 1 × 1 | 0 × 0 |
| Conv-6 | 256 | 17 × 17 × 256 | 3 × 3 | 2 × 2 | 0 × 0 |
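
The feature map sizes in Table 2 follow from the usual output-size formula for convolution and pooling layers, floor((n + 2p − k) / s) + 1. The sketch below applies it to the stem configuration listed in the table; the layer list is transcribed from the table, while the function and variable names are illustrative assumptions rather than the authors' code.

```python
def output_size(size, kernel, stride, padding):
    """Spatial size after a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


# (kind, number of filters, kernel, stride, padding), in the order of Table 2.
# Pooling keeps the channel count, so its filter entry is None.
STEM_LAYERS = [
    ("conv", 32, 3, 2, 0),
    ("conv", 32, 3, 1, 0),
    ("conv", 64, 3, 1, 1),
    ("pool", None, 3, 2, 0),
    ("conv", 80, 1, 1, 0),
    ("conv", 192, 3, 1, 0),
    ("conv", 256, 3, 2, 0),
]


def stem_shapes(size=160, channels=1):
    """Return the (height, width, channels) of every stem layer output."""
    shapes = []
    for kind, filters, kernel, stride, padding in STEM_LAYERS:
        size = output_size(size, kernel, stride, padding)
        if kind == "conv":
            channels = filters
        shapes.append((size, size, channels))
    return shapes


shapes = stem_shapes()
```

Starting from a 160 × 160 × 1 input, the final entry is 17 × 17 × 256, which matches the input dimension of the Inception_ResNet_A module. Under the assumption that the Reduction modules use unpadded 3 × 3 windows with stride 2, the same formula gives 17 → 8 → 3, in agreement with the dimensions quoted for Figure 5.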

Table 3.

Accuracy and Precision-recall Rate for Palmprint Identification.

| Method | Accuracy | Precision | Recall |
|---|---|---|---|
| Liang et al. [44] | 96.63% | 96.86% | 96.63% |
| Li et al. [45] | 95.48% | 95.83% | 95.48% |
| Zhang et al. [42] | 98.78% | 98.95% | 98.78% |
| Wu et al. [26] | 99.20% | 99.50% | 99.20% |
| Ours | 100.00% | 100.00% | 100.00% |
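
The text does not spell out how precision and recall are averaged over palms. One common convention, assumed here, is macro-averaging: each metric is computed per class and then averaged over classes. Under that assumption, and with the same number of test images per palm, macro recall coincides with accuracy, which would be consistent with the identical Accuracy and Recall columns in Table 3. The function name and the toy labels below are illustrative only.

```python
from collections import Counter


def identification_metrics(true_labels, predicted_labels):
    """Return (accuracy, macro precision, macro recall) for closed-set identification."""
    classes = sorted(set(true_labels))
    actual = Counter(true_labels)          # test images per class
    predicted = Counter(predicted_labels)  # predictions per class
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)

    accuracy = sum(correct.values()) / len(true_labels)
    # A class that is never predicted contributes a precision of 0.
    precision = sum(
        correct[c] / predicted[c] if predicted[c] else 0.0 for c in classes
    ) / len(classes)
    recall = sum(correct[c] / actual[c] for c in classes) / len(classes)
    return accuracy, precision, recall


# Toy example with three palms and two test images each.
true_ids = ["a", "a", "b", "b", "c", "c"]
predicted_ids = ["a", "a", "b", "c", "c", "c"]
accuracy, precision, recall = identification_metrics(true_ids, predicted_ids)
```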

Table 4.

EERs for Palmprint Verification.

| Method | EER |
|---|---|
| Pang et al. [46] | 6.46% |
| Wu et al. [47] | 3.37% |
| Li et al. [48] | 4.50% |
| Ours | 2.74% |

Table 5.

Accuracy and Precision-recall Rate for Palmvein Identification.

| Method | Accuracy | Precision | Recall |
|---|---|---|---|
| Mirmohamadsadeghi et al. [49] | 97.20% | 97.51% | 97.20% |
| Zhang et al. [50] | 98.80% | 98.83% | 98.80% |
| Lee et al. [51] | 99.18% | 99.47% | 99.18% |
| Ours | 100.00% | 100.00% | 100.00% |

Table 6.

EERs for Palmvein Verification.

| Method | EER |
|---|---|
| Pan et al. [52] | 4.00% |
| Kang et al. [53] | 2.36% |
| Ours | 2.30% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).