After flight points are classified as imaging points and non-imaging points (points flown through on the way to imaging points), the generation method is introduced. While the pilot flies the UAV to image the surveillance points, the UAV does not image a surveillance point at every flight point. Because facilities cannot be imaged at every point, flight points are classified into imaging points and points of flight toward imaging points. The UAV's imaging angle is important at points where surveillance points are imaged, but it is not important, and thus not considered, at flight points where surveillance points are not imaged.

4.1. Definition of Imaging Point and Non-Imaging Point Clusters

To determine the flight path on which the UAV will navigate, the normalized non-imaging point set $U'$ is classified and added to the normalized non-imaging point cluster set $C'_m$, which is a collection of normalized non-imaging point clusters defined as shown in Equation (10). The $o$th normalized non-imaging point cluster, which groups a collection of normalized non-imaging points, is denoted $C'_{m,o}$.

The normalized non-imaging point cluster centroid set is defined as shown in Equation (11). Each normalized non-imaging point cluster $C'_{m,o}$ includes a part of the normalized non-imaging point set $U'$ and has one normalized non-imaging point cluster centroid.

The final classification of the normalized non-imaging point set $U'$ is given by the normalized non-imaging point cluster set $C'_w$ and the corresponding normalized non-imaging point cluster centroid set.

The normalized imaging point set $W'$, which is the collection of normalized imaging points, is classified into clusters in the same way as for the normalized non-imaging point cluster set $C'_m$, which is the collection of normalized non-imaging point clusters. The normalized imaging point cluster set $C'_n$, which is the collection of normalized imaging point clusters during the $n$th run, is defined as shown in Equation (12). $C'_n$ includes the normalized imaging point cluster $C'_{n,g}$, which is the $g$th normalized imaging point cluster.

The normalized imaging point cluster centroid set is defined as shown in Equation (13). Each normalized imaging point cluster $C'_{n,g}$ includes some elements of the normalized imaging point set $W'$. The normalized imaging point cluster centroid is defined by Equation (14), using a normalized point and a normalized angle.


The final classification results for the normalized imaging point set $W'$ are the normalized imaging point cluster set $C'_v$ and the corresponding normalized imaging point cluster centroid set.

4.2. Normalized Non-Imaging Point Categorization

Categorization of non-imaging points follows the conventional K-means algorithm. First, K, the number of elements of the normalized non-imaging point cluster set $C'_m$, is defined and the initial normalized non-imaging point cluster centroids are set. K is chosen by considering the total flight distance that results from categorizing the non-imaging points. Second, the non-imaging points are assigned to clusters using the normalized non-imaging point cluster centroids. Third, each normalized non-imaging point cluster centroid is adjusted according to the non-imaging points in its re-allocated cluster. Fourth, the second and third steps are repeated until the normalized non-imaging point cluster centroids no longer change.

Finally, the normalized non-imaging point cluster set $C'_w$ and the normalized non-imaging point cluster centroid set are created from the normalized non-imaging point set $U'$.
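The four steps above can be sketched as a plain K-means loop. This is only an illustrative sketch, not the authors' implementation: the function name `kmeans`, the unweighted Euclidean distance, and the `max_iter` and `tol` parameters are assumptions, and the choice of K and of the initial centroids is left to the caller.

```python
import math

def kmeans(points, centroids, max_iter=100, tol=1e-9):
    """Plain K-means over normalized points given as tuples of floats.

    `centroids` holds the initial centroids; its length is K.
    Returns (clusters, centroids) from the last iteration.
    """
    centroids = [tuple(c) for c in centroids]
    clusters = [[] for _ in centroids]
    for _ in range(max_iter):
        # Step 2: assign every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda k: math.dist(p, centroids[k]))
            clusters[nearest].append(p)
        # Step 3: move each centroid to the mean of its members.
        new_centroids = []
        for old, members in zip(centroids, clusters):
            if members:
                new_centroids.append(tuple(
                    sum(m[d] for m in members) / len(members)
                    for d in range(len(old))))
            else:
                new_centroids.append(old)  # an empty cluster keeps its centroid
        # Step 4: stop once no centroid moves by more than `tol`.
        moved = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if moved <= tol:
            break
    return clusters, centroids
```

For example, `kmeans([(0.0, 0.0), (0.1, 0.0), (0.9, 1.0), (1.0, 1.0)], [(0.0, 0.0), (1.0, 1.0)])` separates the two lower-left points from the two upper-right points.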

In the second step, the normalized non-imaging points in the normalized non-imaging point set $U'$ are assigned to clusters based on the established normalized non-imaging point cluster centroids. To assign a normalized non-imaging point to a normalized non-imaging point cluster, the similarity is calculated as shown in Equation (15). The similarity function returns the sum of the weighted Euclidean distances for the normalized non-imaging point. Using this function, the normalized non-imaging point is added to the normalized non-imaging point cluster with the lowest value, as shown in Equation (16).

where each point is assigned to exactly one normalized non-imaging point cluster, even if it could be assigned to two or more of them.
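The assignment rule of Equations (15) and (16) might look roughly like the sketch below. The exact form of the similarity function is not recoverable here, so the code assumes a weighted sum of per-coordinate distances. The names `similarity`, `assign`, and `weights` are hypothetical. A tie is resolved in favor of the lowest cluster index, so each point lands in exactly one cluster.

```python
def similarity(point, centroid, weights):
    """Assumed form: weighted sum of per-coordinate distances."""
    return sum(w * abs(p - c) for w, p, c in zip(weights, point, centroid))

def assign(points, centroids, weights):
    """Put each point into the cluster whose centroid gives the lowest value."""
    clusters = [[] for _ in centroids]
    for p in points:
        scores = [similarity(p, c, weights) for c in centroids]
        best = scores.index(min(scores))  # on a tie, the first (lowest) index wins
        clusters[best].append(p)
    return clusters
```

Setting a weight to zero removes that coordinate from the comparison, which is one way such weights could be used to emphasize some coordinates over others.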

In the fourth step, the stability of the configured non-imaging point cluster centroids is checked. A state of no change is defined as one in which the distance between each non-imaging point cluster centroid before and after the centroid update is less than the threshold value, as shown in Equation (17). If there is no change in the non-imaging point cluster centroids, the method ends. If there is a change, the method returns to the second step.

4.3. Non-Imaging Point Denormalization

The denormalized result of the normalized non-imaging point cluster centroid set is defined as the non-imaging point cluster centroid set, whose elements are the non-imaging point cluster centroids. The non-imaging point cluster centroids are created as shown in Equation (18), so that the non-imaging point cluster centroid set has the same number of elements as the normalized non-imaging point cluster centroid set.

The $o$th non-imaging point cluster centroid is set by denormalizing each element of the $o$th normalized non-imaging point cluster centroid, as shown in Equation (19).
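As an illustration of the denormalization step, the sketch below assumes that each coordinate was normalized to the range 0 to 1 by min-max scaling. That scheme, the function name `denormalize`, and the `lower` and `upper` bounds are assumptions made for this example, not details confirmed by the equations.

```python
def denormalize(centroid, lower, upper):
    """Map each normalized coordinate in [0, 1] back to its original range.

    `lower` and `upper` hold the per-coordinate bounds assumed to have been
    used when the points were normalized.
    """
    return tuple(low + v * (high - low)
                 for v, low, high in zip(centroid, lower, upper))

def denormalize_all(centroids, lower, upper):
    """Denormalize every centroid; the output has as many elements as the input."""
    return [denormalize(c, lower, upper) for c in centroids]
```

Under these assumptions, a normalized coordinate of 0.5 with bounds 20 and 40 maps back to 30.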

4.4. Normalized Imaging Point Categorization

Non-imaging points do not require accurate positions, whereas surveillance points are explicitly designated. A method is therefore required to generate the imaging points designated by the pilot. For non-imaging points, empty clusters are not generated because a large number of non-imaging points is collected. For imaging points, however, empty clusters can be generated depending on the number of imaging points designated by the pilot. The imaging point categorization process therefore requires a method of setting cluster center points that minimizes the spread of the distance differences.

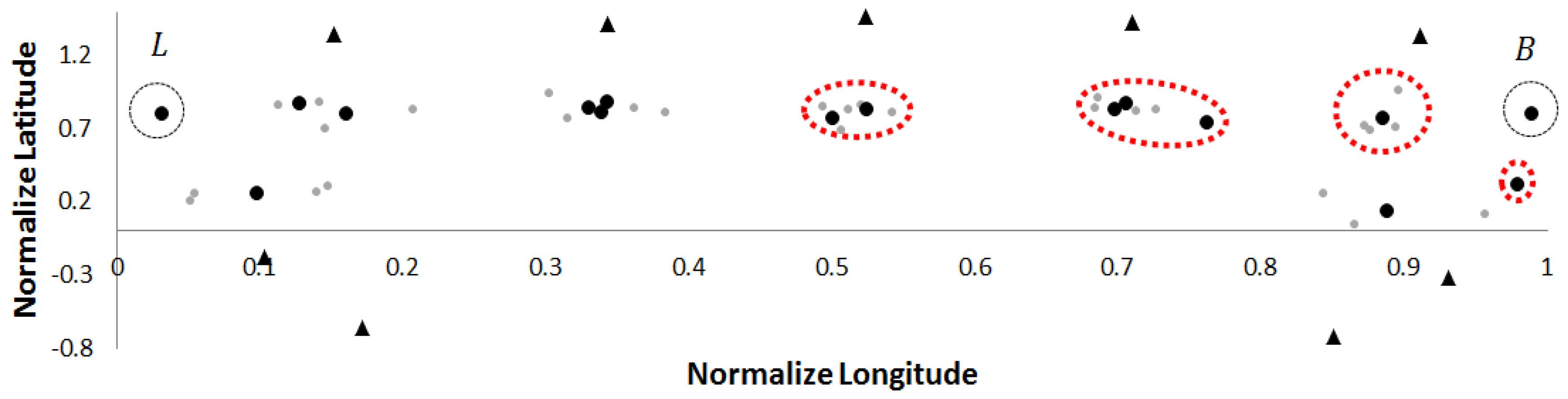

The proposed Bounded K-Means algorithm proceeds as follows. First, K, the number of normalized imaging point clusters in $C'_n$, is determined, and the initial normalized imaging point cluster centroids are set. Second, the normalized imaging points are divided among the clusters using these centroids. Third, each normalized imaging point cluster centroid is readjusted according to the normalized imaging points belonging to its reassigned cluster. Fourth, Steps 2 and 3 are repeated until the normalized imaging point cluster centroids no longer vary.

During reassignment, if the number of imaging points is low and they are widely distributed, empty imaging point clusters can be generated when imaging points lie on the boundary of the imaging point region. Therefore, after the normalized imaging point cluster centroids are readjusted, the clusters are checked for emptiness. If an empty normalized imaging point cluster exists, the members of the other normalized imaging point clusters that are out of the error range of their own centroids are considered as candidates for it. If adding such a member to the empty cluster costs less than the current cost, the empty cluster centroid is set to the candidate with the lowest cost among all members that are out of the error range of their own normalized imaging point cluster centroids. The distance between a normalized imaging point cluster centroid and each of its members is calculated as shown in Equation (20). The error range err is calculated as shown in Equation (21). Normalized imaging point cluster members that are out of the error range of their normalized imaging point cluster centroid are identified. Among these members, the one that gives the lowest cost when it is used as a centroid is set as the empty cluster centroid, as shown in Equation (22). The method then proceeds to Step 2.

where

i and

q are
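The empty-cluster handling described above might be sketched as follows. This is a hedged illustration only. The cost is assumed to be the sum of distances from every point to its nearest centroid. The error range `err` is passed in rather than computed as in Equation (21). The names `total_cost` and `reseed_empty_cluster` are hypothetical.

```python
import math

def total_cost(points, centroids):
    """Assumed cost: sum of distances from each point to its nearest centroid."""
    return sum(min(math.dist(p, c) for c in centroids) for p in points)

def reseed_empty_cluster(points, clusters, centroids, err):
    """Move the first empty cluster's centroid onto the best out-of-range member.

    Returns the centroid list, unchanged if there is no empty cluster or if
    no candidate lowers the cost.
    """
    empty = [k for k, members in enumerate(clusters) if not members]
    if not empty:
        return centroids
    k = empty[0]
    # Candidates: members lying farther than `err` from their own centroid.
    candidates = [p for members, c in zip(clusters, centroids)
                  for p in members if math.dist(p, c) > err]
    best, best_cost = None, total_cost(points, centroids)
    for p in candidates:
        trial = centroids[:k] + [p] + centroids[k + 1:]
        cost = total_cost(points, trial)
        if cost < best_cost:  # accept only if cheaper than the current state
            best, best_cost = p, cost
    if best is None:
        return centroids
    return centroids[:k] + [best] + centroids[k + 1:]
```

After the centroid is replaced, the points would be reassigned, which corresponds to returning to Step 2.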

Next, it is checked whether any two normalized imaging point cluster centroids are within the error range of each other and whether any members are out of the error range of their normalized imaging point cluster centroid. The normalized imaging point cluster centroids and normalized imaging point cluster members that satisfy both conditions are used. If the cost after the change is lower than the current cost, then, as shown in Equations (23) and (24), the $g_1$th and $g_2$th imaging point cluster centroids that are within the error range are combined, and the member out of the error range of the $g$th normalized imaging point cluster centroid that gives the lowest cost is set as a centroid point. After the new clusters are created, the method returns to Step 3. If the two conditions are not both met, it proceeds to Step 2.

where

where

i and

q are
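The two checks that precede the merge could be written as in the sketch below. Only the detection of candidate centroid pairs and out-of-range members is illustrated; the cost comparison of Equations (23) and (24) is not reproduced. The function names and the `err` argument are hypothetical.

```python
import math
from itertools import combinations

def close_centroid_pairs(centroids, err):
    """Index pairs (g1, g2) of centroids lying within `err` of each other."""
    return [(g1, g2)
            for g1, g2 in combinations(range(len(centroids)), 2)
            if math.dist(centroids[g1], centroids[g2]) <= err]

def out_of_range_members(clusters, centroids, err):
    """(cluster index, member) pairs for members farther than `err` from their centroid."""
    return [(g, p)
            for g, (members, c) in enumerate(zip(clusters, centroids))
            for p in members
            if math.dist(p, c) > err]
```

If both functions return non-empty lists, the two conditions are met and a merge can be considered. Otherwise the method continues with Step 2.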

In Step 2, the members of the normalized imaging point set $W'$ are clustered. To cluster a normalized imaging point, a similarity is calculated as shown in Equation (25). The similarity function returns the sum of the weighted Euclidean distances of the normalized state. Each normalized imaging point is added to the cluster with the lowest such value, as shown in Equation (26).

where each normalized imaging point is assigned to exactly one normalized imaging point cluster, even if it could be assigned to two or more of them.
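Because an imaging point carries an angle as well as a position, the similarity of Equation (25) may combine two distance terms. The sketch below assumes a state of the form `(position, angle)`, both already normalized, with one weight per term. The names `imaging_similarity`, `nearest_cluster`, `w_pos`, and `w_ang` are hypothetical, and the split into exactly two terms is an assumption.

```python
import math

def imaging_similarity(state, centroid, w_pos, w_ang):
    """Weighted sum of the position distance and the angle distance."""
    (pos, ang), (c_pos, c_ang) = state, centroid
    return w_pos * math.dist(pos, c_pos) + w_ang * math.dist(ang, c_ang)

def nearest_cluster(state, centroids, w_pos=1.0, w_ang=1.0):
    """Index of the cluster with the lowest similarity value (first one on a tie)."""
    scores = [imaging_similarity(state, c, w_pos, w_ang) for c in centroids]
    return scores.index(min(scores))
```

With a larger `w_ang`, two imaging points at nearly the same location but with different camera angles are more likely to fall into different clusters.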

In Step 4, the stability of the current cluster centroids is checked. If the distance between a cluster centroid before and after the update is less than the threshold value, as shown in Equation (27), that normalized imaging point cluster is defined as being in a state of no change. If there is no change in any imaging point cluster, the method ends. If there is a change, the method returns to Step 2.

Instead of Equations (22) and (24), Equations (28) and (29), which apply the weight, can be used.

where

i and

q are

where

i and

q are

4.5. Normalized Imaging Point Denormalization

This step generates the imaging points that will actually be used. It denormalizes the normalized imaging point cluster centroid set from values between 0 and 1 to the location and angle values that are actually used. The imaging point cluster centroid set, which records the denormalized values of the normalized imaging point cluster centroid set, is generated. As shown in Equation (30), it contains as many imaging point cluster centroids as the normalized imaging point cluster centroid set.

The $g$th imaging point cluster centroid is set by denormalizing the elements of the $g$th normalized imaging point cluster centroid, as shown in Equation (31).
